Abstract

Artificial Intelligence (AI) is rapidly transforming the landscape of critical care, offering opportunities for enhanced diagnostic precision and personalized patient management. However, its integration into ICU clinical practice presents significant challenges related to equity, transparency, and the patient-clinician relationship. To address these concerns, a multidisciplinary team of experts was established to assess the current state and future trajectory of AI in critical care. This consensus identified key challenges and proposed actionable recommendations to guide AI implementation in this high-stakes field. Here we present a call to action for the critical care community to bridge the gap between AI advancements and the need for humanized, patient-centred care. Our goal is to ensure a smooth transition to personalized medicine while (1) maintaining equitable and unbiased decision-making, (2) fostering the development of a collaborative research network across ICUs, emergency departments, and operating rooms to promote data sharing and harmonization, and (3) addressing the educational and regulatory shifts required for responsible AI deployment. AI integration into critical care demands coordinated efforts among clinicians, patients, industry leaders, and regulators to ensure patient safety and maximize societal benefit. The recommendations outlined here provide a foundation for the ethical and effective implementation of AI in critical care medicine.

Keywords: Artificial intelligence, Critical care medicine, Personalized medicine, Ethics, Healthcare innovation

Introduction

Artificial intelligence (AI) is rapidly entering critical care, where it holds the potential to improve diagnostic accuracy and prognostication, streamline intensive care unit (ICU) workflows, and enable personalized care. [1, 2] Without a structured approach to implementation, evaluation, and control, this transformation may be hindered or possibly lead to patient harm and unintended consequences.

Despite the need to support overwhelmed ICUs facing staff shortages, increasing case complexity, and rising costs, most AI tools remain poorly validated and untested in real settings. [3–5]

To address this gap, we issue a call to action for the critical care community: the integration of AI into the ICU must follow a pragmatic, clinically informed, and risk-aware framework. [6–8] As a result of a multidisciplinary consensus process with a panel of intensivists, AI researchers, data scientists and experts, this paper offers concrete recommendations to guide the safe, effective, and meaningful adoption of AI into critical care.

Methods

The consensus presented in this manuscript emerged through expert discussions, rather than formal grading or voting on evidence, in recognition that AI in critical care is a rapidly evolving field where many critical questions remain unanswered. Participants were selected by the consensus chairs (MC, AB, FT, and JLV) based on their recognized contributions to AI in critical care to ensure representation from both clinical end-users and AI developers. Discussions were iterative with deliberate engagement across domains, refining recommendations through critical examination of real-world challenges, current research, and regulatory landscapes.

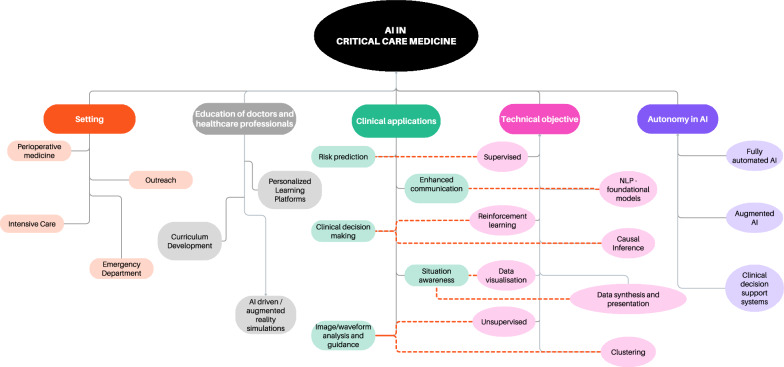

While not purely based on traditional evidence grading, this manuscript reflects a rigorous, expert-driven synthesis of key barriers and opportunities for AI in critical care, aiming to bridge existing knowledge gaps and provide actionable guidance in a rapidly evolving field. To guide physicians in this complex and rapidly evolving arena [9], some of the current taxonomy and classifications are reported in Fig. 1.

Fig. 1.

Taxonomy of AI in critical care

Main barriers and challenges for AI integration in critical care

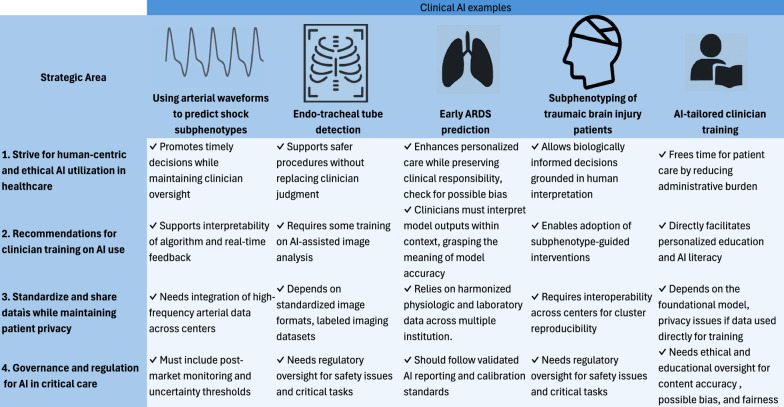

The main barriers to AI implementation in critical care determined by the expert consensus are presented in this section. These unresolved and evolving challenges have prompted us to develop a series of recommendations to physicians and other healthcare workers, patients, and societal stakeholders, emphasizing the principles we believe should guide the advancement of AI in healthcare. Challenges and principles are divided into four main areas: 1) human-centric AI; 2) recommendations for clinician training on AI use; 3) standardization of data models and networks; and 4) AI governance. These are summarized in Fig. 2 and discussed in more detail in the next paragraphs.

Fig. 2.

Recommendations, according to development of standards for networking, data sharing and research, ethical challenges, regulations and societal challenges, and clinical practice

The development and maintenance of AI applications in medicine require enormous computational power, infrastructure, funding and technical expertise. Consequently, AI development is led by major technology companies whose goals may not always align with those of patients or healthcare systems [10, 11]. The rapid diffusion of new AI models contrasts sharply with the evidence-based culture of medicine. This raises concerns about the deployment of insufficiently validated clinical models. [12]

Moreover, many models are developed using datasets that underrepresent vulnerable populations, leading to algorithmic bias. [13] AI models may lack both temporal validity (when applied to new data in a different time) and geographic validity (when applied across different institutions or regions). Variability in temporal or geographical disease patterns including demographics, healthcare infrastructure, and the design of Electronic Health Records (EHR) further complicates generalizability.

Finally, the use of AI raises ethical concerns, including trust in algorithmic recommendations and the risk of weakening the human connection at the core of medical practice, namely the millennia-old relationship between physicians and patients. [14]

Recommendations

Here we report recommendations, divided into four domains. Figure 3 summarizes five representative AI use cases in critical care, ranging from waveform analysis to personalized clinician training, mapped across these four domains.

Fig. 3.

Summary of five representative AI use cases in critical care—ranging from waveform analysis to personalized clinician training—mapped across these 4 domains

Strive for human-centric and ethical AI utilization in healthcare

Alongside its significant potential benefit, the risk of AI misuse should not be underestimated. AI algorithms may be harmful when prematurely deployed without adequate control [9, 15–17]. In addition to the regulatory frameworks that have been established to maintain control (presented in Sect. "Governance and regulation for AI in Critical Care") [18, 19], we advocate for clinicians to be involved in this process and provide guidance.

Develop human-centric AI in healthcare

AI development in medicine and healthcare should maintain a human-centric perspective, promote empathetic care, and increase the time allocated to patient-physician communication and interaction. For example, AI could replace humans in time-consuming or bureaucratic tasks such as documentation and transfers of care [20–22]. It could draft clinical notes, ensuring critical information is accurately captured in health records while reducing administrative burdens [23].

Establish social contract for AI use in healthcare

There is a significant concern that AI may exacerbate societal healthcare disparities [24]. When considering AI’s potential influence on physicians' choices and behaviour, the possibility of introducing or reinforcing biases should be examined rigorously to avoid perpetuating existing health inequities and unfair data-driven associations [24]. It is thus vital to involve patients and societal representatives in discussions regarding the vision of the next healthcare era, its operations, goals, and limits of action [25]. The aim would be to establish a social contract for AI in healthcare that ensures accountability and transparency. Such a contract should define clear roles and responsibilities for all stakeholders: clinicians, patients, developers, regulators, and administrators. This includes clinicians being equipped to critically evaluate AI tools, developers ensuring transparency, safety, and clinical relevance, and regulators enforcing performance, equity, and post-deployment monitoring standards. We advocate for hospitals to establish formal oversight mechanisms, such as dedicated AI committees, to ensure the safe implementation of AI systems. Such structures would help formalize shared accountability and ensure that AI deployment remains aligned with the core values of fairness, safety, and human-centred care.

Prioritize human oversight and ethical governance in clinical AI

Since the Hippocratic oath, patient care has been based on the doctor-patient connection, where clinicians bear the ethical responsibility to maximize patient benefit while minimizing harm. As AI technologies are increasingly integrated into healthcare, clinicians' responsibility must also extend to overseeing their development and application. In the ICU, where treatment decisions balance individual patient preferences and societal considerations, healthcare professionals must lead this transition [26]. As intensivists, we should maintain governance of this process, ensuring ethical principles and scientific rigor guide the development of frameworks to measure fairness, assess bias, and establish acceptable thresholds for AI uncertainty [6–8].

While AI models are rapidly emerging, most are being developed outside the medical community. To better align AI development with clinical ethics, we propose the incorporation of multidisciplinary boards comprising clinicians, patients, ethicists, and technological experts, who should be responsible for systematically reviewing algorithmic behaviour in critical care, assessing the risks of bias, and promoting transparency in decision-making processes. In this context, AI development offers an opportunity to rethink and advance ethical principles in patient care.

Recommendations for clinician training on AI use

Develop and assess the Human-AI interface

Despite some promising results [27, 28], the clinical application of AI remains limited [29–31]. The first step toward integration is to understand how clinicians interact with AI and to design systems that complement, rather than disrupt, clinical reasoning [32]. This translates into the need for specific research on the human-AI interface, where a key area of focus is identifying the most effective cognitive interface between clinicians and AI systems. On the one hand, physicians may place excessive trust in AI model results, possibly overlooking crucial information. For example, in sepsis detection an AI algorithm might miss an atypical presentation or a tropical infectious disease due to limitations in its training data; if clinicians overly trust the algorithm’s negative output, they may delay initiating a necessary antibiotic. On the other hand, the behaviour of clinicians can influence AI responses in unintended ways. To better reflect this interaction, the concept of synergy between human and AI has been proposed in recent years, emphasizing that AI supports rather than replaces human clinicians [33]. This collaboration has been described in two forms: human-AI augmentation (when the human-AI interface enhances clinical performance compared to the human alone) and human-AI synergy (where the combined performance exceeds that of both the human and the AI individually) [34]. To support the introduction of AI in clinical practice in intensive care, we propose starting with the concept of human-AI augmentation, which is more inclusive and better established according to medical literature [34]. A straightforward example of augmentation is the development of interpretable, real-time dashboards that synthesize complex multidimensional data into visual formats, thereby enhancing clinicians’ situational awareness without overwhelming them.

Improve disease characterization with AI

Traditional procedures for classifying patients and labelling diseases and syndromes based on a few simple criteria are the basis of medical education, but they may fail to grasp the complexity of underlying pathology and lead to suboptimal care. In critical care, where patient conditions are complex and rapidly evolving, AI-driven phenotyping plays a crucial role by leveraging vast amounts of genetic, radiological, biomarker, and physiological data. AI-based phenotyping methods can be broadly categorized into two approaches.

One approach involves unsupervised clustering, in which patients are grouped based on shared features or patterns without prior labelling. Seymour et al. demonstrated how machine learning can stratify septic patients into clinically meaningful subgroups using high-dimensional data, which can subsequently inform risk assessment and prognosis [35]. Another promising possibility is the use of supervised or semi-supervised clustering techniques, which incorporate known outcomes or partial labelling to enhance the phenotyping of patient subgroups [36].

The second approach falls under the causal inference framework, where phenotyping is conducted with the specific objective of identifying subgroups that benefit from a particular intervention due to a causal association. This method aims to enhance personalized treatment by identifying how treatment effects vary among groups, ensuring that therapies are targeted toward patients most likely to benefit. For example, machine learning has been used to stratify critically ill patients based on their response to specific therapeutic interventions, potentially improving clinical outcomes [37]. In a large ICU cohort of patients with traumatic brain injury (TBI), unsupervised clustering identified six distinct subgroups, based on combined neurological and metabolic profiles. [38]

These approaches hold significant potential for advancing acute and critical care by ensuring that AI-driven phenotyping is not only descriptive, but also actionable. Before integrating these methodologies into clinical workflows, we need to make sure clinicians can accept the paradigm shift between broad syndromes and specific sub-phenotypes, ultimately supporting the transition toward personalized medicine [35, 39–41].

Ensure AI training for responsible use of AI in healthcare

In addition to clinical practice, undergraduate medical education is also directly influenced by the AI transformation [42], as future healthcare workers need to be equipped to understand and use these technologies. Providing training from the start of medical education means that all clinicians should understand the fundamental concepts, methods, and limitations of data science and AI, which should be included in the core curriculum of medical degrees. This will allow clinicians to use and assess AI critically, identify biases and limitations, and make well-informed decisions, which may ultimately help address the medical profession's identity crisis and open new careers in data analysis and AI research [42].

In addition to undergraduate education, it is essential to train experienced physicians, nurses, and other allied health professionals [43]. The effects of AI on academic education are profound and beyond the scope of the current manuscript. One promising example is the use of AI to support personalized training for clinicians, both in clinical education and in understanding AI-related concepts [44]. Tools such as chatbots, adaptive simulation platforms, and intelligent tutoring systems can adapt content to students’ learning needs in real time, offering a tailored education. This may be applied to both clinical training and training in AI domains.

Accepting uncertainty in medical decision-making

Uncertainty is an intrinsic part of clinical decision-making; clinicians are familiar with it and are trained to navigate it through experience and intuition. However, AI models introduce a new type of uncertainty, which can undermine clinicians' trust, especially when models function as opaque “black boxes” [45–47]. This increases the cognitive distance between model and clinical judgment, as clinicians do not know how to interpret this uncertainty. To bridge this gap, explainable AI (XAI) has emerged, providing tools to make model predictions more interpretable and, ideally, more trustworthy to reduce perceived uncertainty [48].

Yet, we argue that interpretability alone is not enough [48]. To accelerate AI adoption and trust, we advocate that physicians be trained to interpret outputs under uncertainty, using frameworks like plausibility, consistency with known biology, and alignment with consolidated clinical reasoning, rather than expecting full explainability [49].

Standardize and share data while maintaining patient privacy

In this section we present key infrastructures for AI deployment in critical care [50]. Their costs should be seen as an investment in patient outcomes, process efficiency, and reduced operational costs. Retaining data ownership within healthcare institutions, and recognizing patients and providers as stakeholders, allows them to benefit from the value their data creates. Conversely, without safeguards, clinical data risk becoming proprietary products of private companies that are resold to their source institutions rather than serving as a resource for those institutions' own development, for instance through the development and licensing of synthetic datasets [51].

Standardize data to promote reproducible AI models

Standardized data collection is essential for creating generalizable and reproducible AI models and fostering interoperability between different centres and systems. A key challenge in acute and critical care is the variability in data sources, including EHRs, multi-omics data (genomics, transcriptomics, proteomics, and metabolomics), medical imaging (radiology, pathology, and ultrasound), and unstructured free-text data from clinical notes and reports. These diverse data modalities are crucial for developing AI-driven decision-support tools, yet their integration is complex due to differences in structure, format, and quality across healthcare institutions.

For instance, the detection of organ dysfunction in the ICU, hemodynamic monitoring collected by different devices, respiratory parameters from ventilators by different manufacturers, and variations in local policies and regulations all impact EHR data quality, structure, and consistency across different centres and clinical trials.

The Observational Medical Outcomes Partnership (OMOP) Common Data Model (CDM), which embeds standard vocabularies such as LOINC and SNOMED CT, continues to gain popularity as a framework for structuring healthcare data, enabling cross-centre data exchange and model interoperability [52–54]. Similarly, Fast Healthcare Interoperability Resources (FHIR) offers a flexible, standardized information exchange solution, facilitating real-time accessibility of structured data [55].
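As a rough illustration of what such harmonization involves, the sketch below maps site-specific labels and units onto one shared representation. It is not an OMOP or FHIR implementation: the site names, local labels, and mapping table are hypothetical, and the LOINC codes are shown for illustration only and should be verified against the official LOINC release before any real use.

```python
from dataclasses import dataclass

# Hypothetical mapping: (site, local label) -> (code, shared name, target unit, factor).
# The codes are illustrative and must be checked against the official vocabulary.
LOCAL_TO_STANDARD = {
    ("site_a", "HR"): ("8867-4", "heart_rate", "beats/min", 1.0),
    ("site_b", "Pulse"): ("8867-4", "heart_rate", "beats/min", 1.0),
    ("site_a", "Lactate"): ("2524-7", "lactate", "mmol/L", 1.0),
    # Site B is assumed to report lactate in mg/dL (1 mmol/L is about 9.01 mg/dL).
    ("site_b", "LACT_mgdl"): ("2524-7", "lactate", "mmol/L", 1.0 / 9.01),
}


@dataclass(frozen=True)
class Observation:
    code: str
    name: str
    value: float
    unit: str


def harmonize(site: str, label: str, value: float) -> Observation:
    """Convert one local measurement into the shared representation."""
    key = (site, label)
    if key not in LOCAL_TO_STANDARD:
        # Unmapped items are surfaced instead of being silently dropped.
        raise ValueError(f"No mapping for {label!r} at {site!r}")
    code, name, unit, factor = LOCAL_TO_STANDARD[key]
    return Observation(code, name, round(value * factor, 2), unit)


# The same lactate level recorded at two sites converges to one representation.
from_site_a = harmonize("site_a", "Lactate", 2.0)
from_site_b = harmonize("site_b", "LACT_mgdl", 18.02)
```

Raising an error for unmapped labels is a deliberate choice in this sketch: silent omissions are one way inconsistencies between centres can go unnoticed.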

Hospitals, device manufacturers, and EHR companies must contribute to the adoption of recognized standards to make sure interoperability is not a barrier to AI implementation.

Beyond structured data, AI has the potential to enhance data standardization by automatically tagging and labelling data sources, tracking provenance, and harmonizing data formats across institutions. Leveraging AI for these tasks can help mitigate data inconsistencies, thereby improving the reliability and scalability of AI-driven clinical applications.

Prioritize data safety, security, and patient privacy

Data safety, security, and privacy are all needed for the application of AI in critical care. Data safety refers to the protection of data from accidental loss or system failure, while data security concerns defensive strategies against malicious attacks, including hacking, ransomware, and unauthorized data access [56]. In modern hospitals, data safety and security will soon become as essential as wall oxygen in operating rooms [57, 58]. A corrupted or hacked clinical dataset during hospital care could be as catastrophic as losing electricity, medications, or oxygen. Finally, data privacy focuses on safeguarding personal information, ensuring that patient data is stored and accessed in compliance with legal standards [56].

Implementing AI that prioritizes these three pillars will be critical for resilient digital infrastructure in healthcare. A possible option for the medical community is to support open-source models to increase transparency and reduce dependence on proprietary algorithms, and possibly enable better control of safety and privacy issues within distributed systems [59]. However, sustaining open-source innovation requires appropriate incentives, such as public or dedicated research funding, academic recognition, and regulatory support to ensure high-quality development and long-term viability [60]. Without such strategies, the role of open-source models will be reduced, with the risk of ceding a larger part of control of clinical decision-making to commercial algorithms.

Develop rigorous AI research methodology

We believe AI research should be held to the same methodological standards as other areas of medical research. Achieving this will require greater accountability from peer reviewers and scientific journals to ensure rigor, transparency, and clinical relevance.

Furthermore, advancing AI in ICU research requires a transformation in the necessary underlying infrastructure, particularly when considering high-frequency data collection and the integration of complex, multimodal patient information, detailed in the sections below. In this context, the gap in data resolution between highly monitored environments such as ICUs and standard wards becomes apparent. The ICU provides a high level of data granularity due to high-resolution monitoring systems, capable of capturing the rapid changes in a patient's physiological status [61]. Consequently, the integration of this new source of high-volume, rapidly changing physiological data into medical research and clinical practice could give rise to “physiolomics”, a proposed term to describe this domain, that could become as crucial as genomics, proteomics and other “-omics” fields in advancing personalized medicine.

AI will change how clinical research is performed, improving evidence-based medicine and the conduct of randomized clinical trials (RCTs) [62]. Instead of using large, heterogeneous trial populations, AI might help researchers design and enrol tailored patient subgroups for precise RCTs [63, 64]. These precision methods could solve the problem of negative critical care trials related to heterogeneity in the population and significant confounding effects. AI could thus improve RCTs by allowing the enrolment of very specific subgroups of patients with hundreds of inclusion criteria over dozens of centres, a task impossible for humans to perform in real-time practice, thereby improving trial efficiency in enrolling enriched populations [65–67]. In the TBI example cited, conducting an RCT on the six AI-identified endotypes (such as patients with moderate GCS but severe metabolic derangement) would be unfeasible without AI stratification [38]. This underscores AI’s potential to enable precision trial designs in critical care.

There are multiple domains for interaction between AI and RCT, though a comprehensive review is beyond the scope of this paper. These include trial emulation to identify patient populations that may benefit most from an intervention, screening for the most promising drugs for interventions, detecting heterogeneity of treatment effects, and automated screening to improve the efficiency and cost of clinical trials.

Ensuring that AI models are clinically effective, reproducible, and generalizable requires adherence to rigorous methodological standards, particularly in critical care where patient heterogeneity, real-time decision-making, and high-frequency data collection pose unique challenges. Several established reporting and validation frameworks already provide guidance for improving AI research in ICU settings. While these frameworks are not specific to the ICU environment, we believe these should be rapidly disseminated into the critical care community through dedicated initiatives, courses and scientific societies.

For predictive models, the TRIPOD-AI extension of the TRIPOD guidelines focuses on transparent reporting for clinical prediction models with specific emphasis on calibration, internal and external validation, and fairness [68]. The PROBAST-AI framework complements this by offering a structured tool to assess risk of bias and applicability in prediction model studies [69]. CONSORT-AI extends the CONSORT framework to include AI-specific elements such as algorithm transparency and reproducibility for interventional trials with AI [70], while STARD-AI provides a framework for reporting AI-based diagnostic accuracy studies [71]. Together, these guidelines encompass several issues related to transparency, reproducibility, fairness, external validation, and human oversight, principles that must be considered foundational for any trustworthy AI research in healthcare. Despite the availability of these frameworks, many ICU studies involving AI methods still fail to meet these standards, leading to concerns about inadequate external validation and generalizability [68, 72, 73].
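To make the notions of discrimination and calibration on an external cohort more concrete, the following minimal sketch computes three commonly reported quantities: the area under the ROC curve, the Brier score, and the observed-to-expected event ratio (a simple measure of calibration-in-the-large). The cohort is made up for illustration, the function names are our own, and the sketch is no substitute for the reporting items required by the frameworks above.

```python
def auroc(y_true, y_prob):
    """Chance that a random positive case is ranked above a random negative one.

    Ties between a positive and a negative prediction count as 0.5.
    """
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs both outcome classes")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def brier_score(y_true, y_prob):
    """Mean squared difference between predicted risk and observed outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def observed_expected_ratio(y_true, y_prob):
    """Observed events divided by the sum of predicted risks (1.0 is ideal)."""
    return sum(y_true) / sum(y_prob)


# Made-up external validation cohort: observed outcomes and predicted risks.
y_external = [0, 0, 1, 0, 1, 1, 0, 1]
p_external = [0.1, 0.3, 0.8, 0.2, 0.6, 0.9, 0.4, 0.7]

report = {
    "auroc": auroc(y_external, p_external),
    "brier": brier_score(y_external, p_external),
    "o_e_ratio": observed_expected_ratio(y_external, p_external),
}
```

Reporting these quantities separately for the development and external cohorts, and for relevant patient subgroups, is one simple way to expose losses in generalizability or fairness.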

Beyond prediction models, critical care-specific guidelines proposed in recent literature offer targeted recommendations for evaluating AI tools in ICU environments, particularly regarding data heterogeneity, patient safety, and integration with clinical workflows. Moving forward, AI research in critical care must align with these established frameworks and adopt higher methodological standards, such as pre-registered AI trials, prospective validation in diverse ICU populations, and standardized benchmarks for algorithmic performance.

Encourage collaborative AI models

Centralizing data collection from multiple ICUs, or federating them into structured networks, enhances external validity and reliability by enabling a scale of data volume that would be unattainable for individual institutions alone [74]. ICUs are at the forefront of data sharing efforts, offering several publicly available datasets for use by the research community [75]. There are several strategies to build collaborative databases. Networking refers to collaborative research consortia [76] that align protocols and pool clinical research data across institutions. Federated learning, by contrast, involves a decentralized approach where data are stored locally and only models or weights are shared between centres [77]. Finally, centralized approaches, such as the Epic Cosmos initiative, leverage de-identified data collected from EHR and stored on a central server providing access to large patient populations for research and quality improvement purposes across the healthcare system [78]. Federated learning is gaining traction in Europe, where data privacy regulations have a more risk-averse approach to AI development, thus favouring decentralized models [79]. In contrast, centralized learning approaches like Epic Cosmos are more common in the United States, where there is a more risk-tolerant environment which favours large-scale data aggregation.
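The idea that only models or weights leave each centre can be sketched with a sample-size-weighted average of locally trained parameters, in the spirit of federated averaging. This is an assumed aggregation scheme chosen for illustration, since the text does not prescribe one; the ICU names, weights, and sample counts are hypothetical, and local training, secure communication, and privacy safeguards are all omitted.

```python
def federated_average(site_updates):
    """Combine per-site weight vectors, weighting each site by its sample count.

    site_updates: list of (weights, n_samples) tuples. Only model parameters
    and counts are exchanged; patient-level data never appear here.
    """
    if not site_updates:
        raise ValueError("at least one site update is required")
    total = sum(n for _, n in site_updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(site_updates[0][0])
    if any(len(w) != dim for w, _ in site_updates):
        raise ValueError("all sites must share the same model shape")
    return [sum(w[i] * n for w, n in site_updates) / total for i in range(dim)]


# Hypothetical round: two ICUs send locally trained weights and cohort sizes.
updates = [
    ([0.20, -1.00], 100),  # ICU A
    ([0.40, -0.50], 300),  # ICU B
]
global_weights = federated_average(updates)
```

In a full system this step would be repeated over many rounds, with the averaged weights sent back to each centre for further local training.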

In parallel, the use of synthetic data is emerging as a complementary strategy to enable data sharing while preserving patient privacy. Synthetic datasets are artificially generated to reflect the characteristics of real patient data and can be used to train and test models without exposing sensitive information [80]. The availability of large-scale data may also support the creation of digital twins. Digital twins, virtual simulations that mirror an individual’s biological and clinical state and rely on high-volume, high-fidelity datasets, may allow for predictive modelling and virtual testing of interventions before bedside application, improving the safety of interventions.

The ICU community should advocate for the diffusion of further initiatives to extend collaborative AI models at national and international levels.

Governance and regulation for AI in Critical Care

Despite growing regulatory efforts, AI regulation remains one of the greatest hurdles to clinical implementation, particularly in high-stakes environments like critical care, as regulatory governance, surveillance, and evaluation of model performance are not only conceptually difficult, but also require a large operational effort across diverse healthcare settings. The recent European Union AI Act introduced a risk-based regulatory framework, classifying medical AI as high-risk and requiring stringent compliance with transparency, human oversight, and post-market monitoring [18]. While these regulatory efforts provide foundational guidance, critical care AI presents unique challenges requiring specialized oversight.

By integrating regulatory, professional, and institutional oversight, AI governance in critical care can move beyond theoretical discussions toward actionable policies that balance technological innovation with patient safety [73, 81, 82].

Grant collaboration between public and private sector

Given the complexity and significant economic, human, and computational resources needed to develop a large generative AI model, physicians and regulators should promote partnerships among healthcare institutions, technology companies, and governmental bodies to support the research, development, and deployment of AI-enabled care solutions [83]. Beyond regulatory agencies, professional societies and institutional governance structures must assume a more active role. Organizations such as the Society of Critical Care Medicine (SCCM) and the European Society of Intensive Care Medicine (ESICM), and regulatory bodies like the European Medicines Agency (EMA), should establish specific clinical practice guidelines for AI in critical care, including standards for model validation, clinician–AI collaboration, and accountability. Regulatory bodies should operate at both national and supranational levels, with transparent governance involving multidisciplinary representation (including clinicians, data scientists, ethicists, and patient advocates) to ensure decisions are both evidence-based and ethically grounded. To avoid postponing innovation indefinitely, regulation should be adaptive and proportionate, focusing on risk-based oversight and continuous post-deployment monitoring rather than rigid pre-market restrictions. Furthermore, implementing mandatory reporting requirements for AI performance and creating hospital-based AI safety committees could offer a structured, practical framework to safeguard the ongoing reliability and safety of clinical AI applications.

Address AI divide to improve health equality

The adoption of AI may vary significantly across geographic regions, influenced by technological capacities (i.e., disparities in access to software or hardware resources) and by differences in investments and priorities between countries. This “AI divide” can separate those with high access to AI from those with limited or no access, exacerbating social and economic inequalities.

The European Commission has been proposed as an umbrella body to coordinate EU-wide strategies to reduce the AI divide between European countries, implementing coordination and supporting programmes of activities [84]. Specific programmes, such as Marie-Curie training networks, are mentioned in that proposal as a means to strengthen human capital in AI while developing infrastructures and implementing common guidelines and approaches across countries.

A recent document from the United Nations also addresses the digital divide across different economic sectors, recommending education, international cooperation, and technological development for an equitable AI resource and infrastructure allocation [85].

Accordingly, the medical community in each country should lobby at both national and international levels, through scientific societies and the WHO, for international collaborations, for example through the development of specific grants and research initiatives. Intensivists should call for supranational approaches to standardized data collection and for policies governing AI technology and data analysis. Governments, the UN, the WHO, and scientific societies should be the targets of this coordinated effort.

Continuous evaluation of dynamic models and post-marketing surveillance

A major limitation in current regulation is the lack of established pathways for dynamic AI models. AI systems in critical care are inherently dynamic, evolving as they incorporate new real-world data, while most FDA approvals rely on static evaluation. In contrast, the EU AI Act emphasizes continuous risk assessment [18]. This approach should be expanded globally to enable real-time auditing, validation, and governance of AI-driven decision support tools in intensive care units, and it should also extend to post-market surveillance. The EU AI Act mandates ongoing surveillance of high-risk AI systems, a principle that we advocate adopting internationally to mitigate the risks of AI degradation and bias drift in ICU environments. In practice, this requires AI commercial entities to provide post-marketing surveillance plans and to report serious incidents within a predefined time window (15 days or less) [18]. Companies should also maintain this monitoring as their AI systems evolve over time. The implementation of these surveillance systems should include standardized monitoring protocols, incident reporting tools embedded within clinical workflows, participation in performance registries, and regular audits. In the EU, these mechanisms are overseen by national Market Surveillance Authorities (MSAs), supported by EU-wide guidance and upcoming templates to ensure consistent and enforceable oversight of clinical AI systems.

Require adequate regulations for AI deployment in clinical practice

Deploying AI within complex clinical environments such as the ICU, acute wards, or even regular wards is challenging [86].

We underline three aspects of adequate regulation. The first is a rigorous regulatory process for evaluating safety and efficacy before clinical application of AI products. The second is continuous post-market evaluation, which should be mandatory and conducted as it is for other types of medical devices [18].

The third aspect is liability: identifying who should be held accountable if an AI decision, or a human decision based on AI, leads to harm. This relates to the need for adequate insurance policies. We urge regulatory bodies in each country to provide regulations on these issues, which are fundamental for AI diffusion.

We also recommend that both patients and clinicians request that regulatory bodies in each country update current legislation and regulatory pathways, including clear rules for insurance policies, to anticipate and reduce the risk of litigation.

Conclusions

In this paper, we outlined key barriers to the adoption of AI in critical care and proposed actionable recommendations across four domains: 1) ensuring human-centric and ethical AI use, 2) promoting training for clinical application, 3) standardizing data infrastructure while safeguarding security and privacy, and 4) strengthening governance and regulation. These recommendations involve all possible healthcare stakeholders, including physicians and healthcare providers, patients, industry and academic developers, and regulators. Ultimately, the AI revolution is not about technological development, but about how it will affect human relations with patients, their families, and physicians [87].

Physicians may increasingly use AI to support clinical decision-making, yet the core values of medical practice—human connection, empathy, and the patient-physician relationship—must not be violated. We call on the global critical care community to collaborate in shaping this innovative future to ensure that AI integration enhances, rather than erodes, the quality of care and patient well-being.

Acknowledgements

Not applicable

Author contributions

MC, MG, AB, BS and JLV wrote the first draft. All authors contributed to the discussion and to the revision and approval of the final manuscript.

Funding

Author LAC is funded by the National Institutes of Health through DS-I Africa U54 TW012043-01 and Bridge2AI OT2OD032701, the National Science Foundation through ITEST #2148451, and a grant of the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI), funded by the Ministry of Health & Welfare, Republic of Korea (grant number: RS-2024-00403047).

Data availability

No datasets were generated or analysed during the current study.

Declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

All authors consent to this publication.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Deo RC. Machine learning in medicine. Circulation. 2015;132:1920–30.

- 2. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25:44–56.

- 3. Shillan D, Sterne JAC, Champneys A, Gibbison B. Use of machine learning to analyse routinely collected intensive care unit data: A systematic review. Crit Care. 2019;23. doi:10.1186/S13054-019-2564-9.

- 4. Yang Z, Cui X, Song Z. Predicting sepsis onset in ICU using machine learning models: a systematic review and meta-analysis. BMC Infect Dis. 2023;23:1–21.

- 5. Shakibfar S, Nyberg F, Li H, et al. Artificial intelligence-driven prediction of COVID-19-related hospitalization and death: a systematic review. Front Public Health. 2023;11. doi:10.3389/FPUBH.2023.1183725.

- 6. Choudhury A, Asan O. Role of Artificial Intelligence in Patient Safety Outcomes: Systematic Literature Review. JMIR Med Inform. 2020;8:e18599.

- 7. Zhang J, Zhang Z-M. Ethics and governance of trustworthy medical artificial intelligence. BMC Med Inform Decis Mak. 2023;23:7.

- 8. Richardson JP, Smith C, Curtis S, et al. Patient apprehensions about the use of artificial intelligence in healthcare. NPJ Digit Med. 2021;4:140.

- 9. Kwong JCC, Nguyen D-D, Khondker A, et al. When the Model Trains You: Induced Belief Revision and Its Implications on Artificial Intelligence Research and Patient Care — A Case Study on Predicting Obstructive Hydronephrosis in Children. NEJM AI. 2024;1. doi:10.1056/AICS2300004.

- 10. Yu K-H, Healey E, Leong T-Y, Kohane IS, Manrai AK. Medical Artificial Intelligence and Human Values. N Engl J Med. 2024;390:1895–904.

- 11. Hogg HDJ, Al-Zubaidy M, Talks J, et al. Stakeholder Perspectives of Clinical Artificial Intelligence Implementation: Systematic Review of Qualitative Evidence. J Med Internet Res. 2023;25:e39742.

- 12. Kerasidou A, Kerasidou X (Charalampia). AI in Medicine. 2021.

- 13. Omiye JA, Lester JC, Spichak S, Rotemberg V, Daneshjou R. Large language models propagate race-based medicine. npj Digital Medicine. 2023;6:1–4.

- 14. Rony MKK, Parvin MR, Wahiduzzaman M, Debnath M, Bala S Das, Kayesh I. “I Wonder if my Years of Training and Expertise Will be Devalued by Machines”: Concerns About the Replacement of Medical Professionals by Artificial Intelligence. SAGE Open Nurs. 2024;10. doi:10.1177/23779608241245220.

- 15. Perry Wilson F, Martin M, Yamamoto Y, et al. Electronic health record alerts for acute kidney injury: multicenter, randomized clinical trial. BMJ. 2021;372. doi:10.1136/BMJ.M4786.

- 16. Ji Z, Lee N, Frieske R, et al. Survey of Hallucination in Natural Language Generation. ACM Comput Surv. 2023;55. doi:10.1145/3571730.

- 17. Oniani D, Hilsman J, Peng Y, et al. Adopting and expanding ethical principles for generative artificial intelligence from military to healthcare. npj Digital Medicine. 2023;6:1–10.

- 18. EU Artificial Intelligence Act | Up-to-date developments and analyses of the EU AI Act. https://artificialintelligenceact.eu/ (accessed July 6, 2024).

- 19. Artificial Intelligence and Machine Learning in Software as a Medical Device | FDA. https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device (accessed July 6, 2024).

- 20. Tierney AA, Gayre G, Hoberman B, et al. Ambient Artificial Intelligence Scribes to Alleviate the Burden of Clinical Documentation. NEJM Catal. 2024;5. doi:10.1056/CAT.23.0404.

- 21. HIMSS24: How Epic is building out AI, ambient tech in EHRs. https://www.fiercehealthcare.com/ai-and-machine-learning/himss24-how-epic-building-out-ai-ambient-technology-clinicians (accessed July 5, 2024).

- 22. Chen S, Guevara M, Moningi S, et al. The effect of using a large language model to respond to patient messages. Lancet Digit Health. 2024;6:e379–81.

- 23. Lin S. A Clinician’s Guide to Artificial Intelligence (AI): Why and How Primary Care Should Lead the Health Care AI Revolution. J Am Board Fam Med. 2022;35:175.

- 24. Pyrros A, Rodríguez-Fernández JM, Borstelmann SM, et al. Detecting Racial/Ethnic Health Disparities Using Deep Learning From Frontal Chest Radiography. J Am Coll Radiol. 2022;19:184–91.

- 25. Zack T, Lehman E, Suzgun M, et al. Assessing the potential of GPT-4 to perpetuate racial and gender biases in health care: a model evaluation study. Lancet Digit Health. 2024;6:e12–22.

- 26. van de Sande D, van Genderen ME, Huiskens J, Gommers D, van Bommel J. Moving from bytes to bedside: a systematic review on the use of artificial intelligence in the intensive care unit. Intensive Care Med. 2021;47:750–60.

- 27. Shamout F, Zhu T, Clifton DA. Machine Learning for Clinical Outcome Prediction. IEEE Rev Biomed Eng. 2021;14:116–26.

- 28. Levi R, Carli F, Arévalo AR, et al. Artificial intelligence-based prediction of transfusion in the intensive care unit in patients with gastrointestinal bleeding. BMJ Health Care Inform. 2021;28. doi:10.1136/BMJHCI-2020-100245.

- 29. Hah H, Goldin D. Moving toward AI-assisted decision-making: Observation on clinicians’ management of multimedia patient information in synchronous and asynchronous telehealth contexts. Health Informatics J. 2022;28. doi:10.1177/14604582221077049.

- 30. Shen J, Zhang CJP, Jiang B, et al. Artificial intelligence versus clinicians in disease diagnosis: Systematic review. JMIR Med Inform. 2019;7. doi:10.2196/10010.

- 31. Zhang A, Xing L, Zou J, Wu JC. Shifting machine learning for healthcare from development to deployment and from models to data. Nature Biomedical Engineering. 2022;6:1330–45.

- 32. Shickel B, Loftus TJ, Adhikari L, Ozrazgat-Baslanti T, Bihorac A, Rashidi P. DeepSOFA: A Continuous Acuity Score for Critically Ill Patients using Clinically Interpretable Deep Learning. Sci Rep. 2019;9. doi:10.1038/S41598-019-38491-0.

- 33. Crigger E, Reinbold K, Hanson C, Kao A, Blake K, Irons M. Trustworthy Augmented Intelligence in Health Care. J Med Syst. 2022;46:12.

- 34. Vaccaro M, Almaatouq A, Malone T. When combinations of humans and AI are useful: A systematic review and meta-analysis. Nature Human Behaviour. 2024;8:2293–303.

- 35. Seymour CW, Kennedy JN, Wang S, et al. Derivation, Validation, and Potential Treatment Implications of Novel Clinical Phenotypes for Sepsis. JAMA. 2019;321:2003.

- 36. Peikari M, Salama S, Nofech-Mozes S, Martel AL. A Cluster-then-label Semi-supervised Learning Approach for Pathology Image Classification. Scientific Reports. 2018;8:1–13.

- 37. Zhang Z, Chen L, Sun B, et al. Identifying septic shock subgroups to tailor fluid strategies through multi-omics integration. Nat Commun. 2024;15:9028.

- 38. Åkerlund CAI, Holst A, Stocchetti N, et al. Clustering identifies endotypes of traumatic brain injury in an intensive care cohort: a CENTER-TBI study. Crit Care. 2022;26:1–15.

- 39. Mathew D, Giles JR, Baxter AE, et al. Deep immune profiling of COVID-19 patients reveals distinct immunotypes with therapeutic implications. Science. 2020;369. doi:10.1126/science.abc8511.

- 40. Sinha P, Furfaro D, Cummings MJ, et al. Latent class analysis reveals COVID-19-related acute respiratory distress syndrome subgroups with differential responses to corticosteroids. Am J Respir Crit Care Med. 2021;204:1274–85.

- 41. Maslove DM, Tang B, Shankar-Hari M, et al. Redefining critical illness. Nature Medicine. 2022;28:1141–8.

- 42. Wood EA, Ange BL, Miller DD. Are We Ready to Integrate Artificial Intelligence Literacy into Medical School Curriculum: Students and Faculty Survey. J Med Educ Curric Dev. 2021;8:238212052110240.

- 43. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digital Health. 2023;2:e0000198.

- 44. Narayanan S, Ramakrishnan R, Durairaj E, Das A. Artificial Intelligence Revolutionizing the Field of Medical Education. Cureus. 2023;15:e49604.

- 45. Groen AM, Kraan R, Amirkhan SF, Daams JG, Maas M. A systematic review on the use of explainability in deep learning systems for computer aided diagnosis in radiology: Limited use of explainable AI? Eur J Radiol. 2022;157. doi:10.1016/j.ejrad.2022.110592.

- 46. Kempt H, Heilinger JC, Nagel SK. “I’m afraid I can’t let you do that, Doctor”: meaningful disagreements with AI in medical contexts. AI Soc. 2023;38:1407–14.

- 47. Chen JH, Dhaliwal G, Yang D. Decoding Artificial Intelligence to Achieve Diagnostic Excellence: Learning From Experts, Examples, and Experience. JAMA. 2022;328:709–10.

- 48. Loftus TJ, Shickel B, Ruppert MM, et al. Uncertainty-aware deep learning in healthcare: A scoping review. PLOS Digital Health. 2022;1:e0000085.

- 49. Jin W, Li X, Hamarneh G. Why is plausibility surprisingly problematic as an XAI criterion? 2023; published online March 30. https://arxiv.org/abs/2303.17707v3 (accessed Feb 10, 2025).

- 50. Wolff J, Pauling J, Keck A, Baumbach J. The economic impact of artificial intelligence in health care: Systematic review. J Med Internet Res. 2020;22. doi:10.2196/16866.

- 51. Chen J, Chun D, Patel M, Chiang E, James J. The validity of synthetic clinical data: A validation study of a leading synthetic data generator (Synthea) using clinical quality measures. BMC Med Inform Decis Mak. 2019;19. doi:10.1186/S12911-019-0793-0.

- 52. Stang PE, Ryan PB, Racoosin JA, et al. Advancing the science for active surveillance: Rationale and design for the observational medical outcomes partnership. Ann Intern Med. 2010;153:600–6.

- 53. McDonald CJ, Huff SM, Suico JG, et al. LOINC, a universal standard for identifying laboratory observations: A 5-year update. Clin Chem. 2003;49:624–33.

- 54. SNOMED CT. https://www.nlm.nih.gov/healthit/snomedct/index.html (accessed June 14, 2025).

- 55. Resourcelist - FHIR v5.0.0. https://www.hl7.org/fhir/resourcelist.html (accessed June 14, 2025).

- 56. Ewoh P, Vartiainen T. Vulnerability to Cyberattacks and Sociotechnical Solutions for Health Care Systems: Systematic Review. J Med Internet Res. 2024;26:e46904.

- 57. McCoy TH, Perlis RH. Temporal Trends and Characteristics of Reportable Health Data Breaches, 2010–2017. JAMA. 2018;320:1282–4.

- 58. Jiang JX, Ross JS, Bai G. Ransomware Attacks and Data Breaches in US Health Care Systems. JAMA Netw Open. 2025;8. doi:10.1001/JAMANETWORKOPEN.2025.10180.

- 59. Hahn E, Blazes D, Lewis S. Understanding How the ‘Open’ of Open Source Software (OSS) Will Improve Global Health Security. Health Secur. 2016;14:13–8.

- 60. Kobayashi S, Kane TB, Paton C. The Privacy and Security Implications of Open Data in Healthcare. Yearb Med Inform. 2018;27:41–7.

- 61. Ren Y, Loftus TJ, Li Y, et al. Physiologic signatures within six hours of hospitalization identify acute illness phenotypes. PLOS Digital Health. 2022;1:e0000110.

- 62. Angus DC. Randomized Clinical Trials of Artificial Intelligence. JAMA. 2020;323:1043–5.

- 63. Komorowski M, Lemyze M. Informing future intensive care trials with machine learning. Br J Anaesth. 2019;123:14–6.

- 64. Seitz KP, Spicer AB, Casey JD, et al. Individualized Treatment Effects of Bougie versus Stylet for Tracheal Intubation in Critical Illness. Am J Respir Crit Care Med. 2023;207:1602–11.

- 65. PANTHER – Precision medicine Adaptive Network platform Trial in Hypoxemic acutE respiratory failuRe - ERS - European Respiratory Society. https://www.ersnet.org/science-and-research/clinical-research-collaboration-application-programme/panther-precision-medicine-adaptive-network-platform-trial-in-hypoxemic-acute-respiratory-failure/ (accessed June 14, 2025).

- 66. Bhavani SV, Holder A, Miltz D, et al. The Precision Resuscitation With Crystalloids in Sepsis (PRECISE) Trial: A Trial Protocol. JAMA Netw Open. 2024;7:e2434197.

- 67. Wijnberge M, Geerts BF, Hol L, et al. Effect of a Machine Learning-Derived Early Warning System for Intraoperative Hypotension vs Standard Care on Depth and Duration of Intraoperative Hypotension during Elective Noncardiac Surgery: The HYPE Randomized Clinical Trial. JAMA. 2020;323:1052–60.

- 68. Collins GS, Moons KGM, Dhiman P, et al. TRIPOD+AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ. 2024;385:e078378.

- 69. Moons KGM, Damen JAA, Kaul T, et al. PROBAST+AI: an updated quality, risk of bias, and applicability assessment tool for prediction models using regression or artificial intelligence methods. BMJ. 2025;388. doi:10.1136/BMJ-2024-082505.

- 70. Liu X, Cruz Rivera S, Moher D, et al. Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med. 2020;26:1364–74.

- 71. Sounderajah V, Ashrafian H, Golub RM, et al. Developing a reporting guideline for artificial intelligence-centred diagnostic test accuracy studies: the STARD-AI protocol. BMJ Open. 2021;11:e047709.

- 72. Collins GS, Reitsma JB, Altman DG, Moons KGM. Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD). Ann Intern Med. 2015;162:735–6.

- 73. Leisman DE, Harhay MO, Lederer DJ, et al. Development and Reporting of Prediction Models: Guidance for Authors From Editors of Respiratory, Sleep, and Critical Care Journals. Crit Care Med. 2020;48:623–33.

- 74. Sauer CM, Dam TA, Celi LA, et al. Systematic Review and Comparison of Publicly Available ICU Data Sets-A Decision Guide for Clinicians and Data Scientists. Crit Care Med. 2022;50:E581–8.

- 75. Sauer CM, Dam TA, Celi LA, et al. Systematic Review and Comparison of Publicly Available ICU Data Sets—A Decision Guide for Clinicians and Data Scientists. Crit Care Med. 2022;50:e581.

- 76. Benthin C, Pannu S, Khan A, Gong M. The nature and variability of automated practice alerts derived from electronic health records in a U.S. nationwide critical care research network. Ann Am Thorac Soc. 2016;13:1784–8.

- 77. Li R, Romano JD, Chen Y, Moore JH. Centralized and Federated Models for the Analysis of Clinical Data. Annu Rev Biomed Data Sci. 2024;7:179–99.

- 78. Tarabichi Y, Frees A, Honeywell S, et al. The Cosmos Collaborative: A Vendor-Facilitated Electronic Health Record Data Aggregation Platform. ACI open. 2021;5:e36.

- 79. Brauneck A, Schmalhorst L, Majdabadi MMK, et al. Federated Machine Learning, Privacy-Enhancing Technologies, and Data Protection Laws in Medical Research: Scoping Review. J Med Internet Res. 2023;25:e41588.

- 80. Tao F, Qi Q. Make more digital twins. Nature. 2019;573:490–1.

- 81. Warraich HJ, Tazbaz T, Califf RM. FDA Perspective on the Regulation of Artificial Intelligence in Health Care and Biomedicine. JAMA. 2025;333:241–7.

- 82. Nong P, Hamasha R, Singh K, Adler-Milstein J, Platt J. How Academic Medical Centers Govern AI Prediction Tools in the Context of Uncertainty and Evolving Regulation. NEJM AI. 2024;1. doi:10.1056/AIP2300048.

- 83. Reddy S, Rogers W, Makinen VP, et al. Evaluation framework to guide implementation of AI systems into healthcare settings. BMJ Health Care Inform. 2021;28:100444.

- 84. Artificial Intelligence in Healthcare. https://www.europarl.europa.eu/RegData/etudes/STUD/2022/729512/EPRS_STU(2022)729512_EN.pdf (accessed June 12, 2025).

- 85. United Nations. Mind the AI Divide: Shaping a Global Perspective on the Future of Work. https://www.un.org/digital-emerging-technologies/sites/www.un.org.techenvoy/files/MindtheAIDivide.pdf (accessed June 12, 2025).

- 86. Greco M, Caruso PF, Cecconi M. Artificial Intelligence in the Intensive Care Unit. Semin Respir Crit Care Med. 2020. doi:10.1055/s-0040-1719037.

- 87. Leenen JPL, Ardesch V, Kalkman CJ, Schoonhoven L, Patijn GA. Impact of wearable wireless continuous vital sign monitoring in abdominal surgical patients: before–after study. BJS Open. 2024;8. doi:10.1093/BJSOPEN/ZRAD128.