ABSTRACT

Introduction

This study aimed to investigate the attitudes of medical and paramedical students in Mashhad, northeastern Iran, toward artificial intelligence (AI).

Methods

This cross‐sectional study was conducted from April to June 2023. Data were collected with a previously developed, validated questionnaire. Participants were selected by proportional‐to‐size sampling and received an email containing an explanation of the study and a link to a web‐based survey.

Results

A total of 208 students answered the survey (response rate: 98%). We found no significant difference in students' attitudes toward AI by academic field (p = 0.505), professional interest (p = 0.609), or educational level (p = 0.128). Overall, 67.4% of medical and 61.9% of paramedical students were optimistic about the role of AI. However, a considerable proportion of students could not accurately define AI (paramedical students = 56.62% and medical students = 45.26%), reflecting gaps in conceptual familiarity rather than technical knowledge.

Conclusion

This study explored medical and paramedical students' perceived understanding of AI and their conceptual limitations. Identifying the positive and negative attitudes of students, who are the future workforce of the healthcare system, can help planners and policymakers design a sound road map for AI in health.

Keywords: AI, artificial intelligence, attitude, medical student, paramedical students, perspectives

1. Introduction

Artificial intelligence (AI) refers to the development of intelligent systems capable of mimicking human cognitive functions, such as learning, reasoning, and problem‐solving, through the analysis of large datasets [1]. Over the past decades, AI has evolved rapidly and has been recognized as a key component of the fourth industrial revolution [2, 3, 4, 5, 6, 7]. In healthcare, AI has shown promising applications, including improving diagnostic accuracy, optimizing treatment strategies, and enhancing patient outcomes [8, 9, 10].

Among AI‐driven technologies, decision support systems have demonstrated significant utility in clinical practice, particularly in disease prediction, personalized treatment planning, and resource allocation [11, 12]. AI‐powered deep learning models are widely used in radiology, dermatology, and pathology for automated image‐based diagnosis [13, 14, 15, 16]. Machine learning—a subset of AI—enables systems to autonomously analyze vast amounts of medical data, identify complex patterns, and generate predictive models without explicit programming [17]. AI applications are expanding beyond imaging, finding use in cardiovascular signal processing, mental health diagnostics through natural language processing, and predictive modeling for early disease detection [18, 19, 20, 21, 22]. These developments highlight AI's growing role in transforming various medical domains.

Despite these advancements, the successful implementation of AI in healthcare is influenced by multiple factors, including healthcare providers' awareness, acceptance, and preparedness [23, 24, 25, 26]. Several studies have assessed physicians' perceptions of AI. For example, a study on ophthalmologists indicated that while 89% of respondents acknowledged the concept of AI, 75% believed it should be formally integrated into clinical training [27]. Another survey in Australia and New Zealand examined healthcare professionals' attitudes toward AI‐driven robotic solutions, emphasizing that the success of AI applications relies on both professional and public perception [23]. Furthermore, a study by Martinho et al. [28] identified four main perspectives among physicians regarding AI ethics: (1) AI as a beneficial tool that enhances physicians' efficiency, (2) the necessity of strict regulations, (3) trust in private technology companies, and (4) the importance of explainability to ensure clinical acceptance. Additionally, Klumpp et al. [29] highlighted AI's diverse applications in hospital settings, ranging from data management to human‐computer interaction, underscoring the importance of tailored implementation strategies.

While AI literacy is becoming an integral part of medical education in developed countries [30, 31], its integration into medical curricula in low‐ and middle‐income countries (LMICs) remains limited. AI‐driven automation has raised concerns among students regarding job displacement, ethical challenges, and the reliability of AI systems, potentially contributing to professional uncertainty [32]. However, studies assessing healthcare students' perspectives on AI are scarce, particularly in LMICs, where AI integration faces infrastructural, educational, and policy‐related barriers [33]. In many high‐income countries, structured AI training is increasingly incorporated into medical education [34], but in developing countries like Iran, AI education remains largely absent from formal curricula [33, 35]. This gap is exacerbated by limited institutional support, scarce AI research funding, and insufficient digital literacy among healthcare students. Furthermore, the COVID‐19 pandemic has highlighted the potential of AI in streamlining healthcare processes, reducing workload, and minimizing diagnostic errors [36], making AI education more critical than ever.

Understanding students' knowledge, attitudes, and educational needs regarding AI is essential for developing AI‐integrated medical curricula tailored to the specific challenges of LMICs. Most previous studies have focused on high‐income countries, with limited evidence on how students in resource‐limited settings perceive AI and its implications for their future careers [37]. This study aims to explore the perceived understanding and attitudes of medical and paramedical students toward AI at Mashhad University of Medical Sciences (MUMS), Iran. Specifically, we seek to:

1. Evaluate students' AI literacy and perceptions across different medical and paramedical disciplines.

2. Highlight areas of conceptual uncertainty and barriers to AI education in an LMIC setting.

3. Determine preferred AI learning approaches and students' perspectives on curriculum integration.

By addressing these objectives, this study contributes to the growing discourse on AI education in healthcare, providing insights to guide curriculum development in LMICs and ensure the future workforce is adequately prepared for AI‐driven transformations in medicine.

2. Materials and Methods

2.1. Study Design

This cross‐sectional study was conducted from April to June 2023. The study followed the ethical principles of the Declaration of Helsinki. The study was explained to the students, who then gave informed consent before participating. All personal data were handled confidentially, without revealing participants' identities.

2.2. Sample Size

The research population included medical and paramedical students of MUMS, Mashhad, Iran, in all available fields. The sample size for each faculty and field was determined independently by the proportional‐to‐size method. The sample size was calculated using the Cochran formula for finite populations with α = 0.05, p = q = 0.5, a tolerable error of d = 0.1, and the total population size (2191 medical students and 913 paramedical students). Accordingly, a sample of 87 was obtained for paramedical students, and a sample of 96 was obtained for medical students.

The symbols in the formula are defined as follows:

n: the required sample size for the research design.

z: the standard normal value corresponding to 1 − α/2.

p and q: the proportions of the target population with and without the desired trait.

d: the precision (tolerable error).
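As a rough check of the calculation described above, the following Python sketch evaluates the Cochran formula with and without the finite‐population correction. It assumes z = 1.96 for α = 0.05 and the commonly used correction n = n0 / (1 + (n0 − 1) / N); the function names are illustrative and are not taken from the study.

```python
import math


def cochran_n0(z: float, p: float, d: float) -> float:
    """Cochran's sample size for a very large population: z^2 * p * q / d^2."""
    q = 1.0 - p
    return (z ** 2) * p * q / (d ** 2)


def cochran_finite(population: int, z: float = 1.96, p: float = 0.5, d: float = 0.1) -> float:
    """Cochran's sample size with the finite-population correction (assumed form)."""
    n0 = cochran_n0(z, p, d)
    return n0 / (1.0 + (n0 - 1.0) / population)


# Inputs stated in the text: p = q = 0.5, d = 0.1; z = 1.96 is an assumption.
n0 = cochran_n0(1.96, 0.5, 0.1)
n_paramedical = cochran_finite(913)

print(round(n0, 2))               # uncorrected sample size
print(math.ceil(n_paramedical))   # corrected size for N = 913, rounded up
```

Under these assumptions, the uncorrected value is about 96 and the corrected value for the paramedical population (N = 913) rounds up to 87.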

2.3. Eligibility Criteria

All medical and paramedical students who were enrolled at the time of the study were eligible for inclusion. The exclusion criteria were transfer and guest students from other universities, students who were on academic leave at the time of the study, and foreign students.

2.4. Data Collection

In this study, a predeveloped and validated questionnaire designed by Teng et al. [18] was used. The original English version was translated into Persian using the forward and backward translation method based on WHO guidelines [38]. Two independent bilingual translators first translated the tool from English to Persian, then back‐translated it to English, and finalized the version through consensus to ensure conceptual equivalence.

The final questionnaire consisted of 15 items, including 11‐point Likert‐scale questions, multiple‐choice items, rank‐ordering questions, and one open‐ended item. The first item asked participants to provide their own definition of AI. These open‐ended responses were independently reviewed and coded by two researchers from health informatics and medical education. Definitions were categorized into four groups: (1) accurate (mentioning autonomous decision‐making, learning, or adaptability); (2) partially accurate (e.g., referring to machine learning only); (3) inaccurate; and (4) “I don't know”. Discrepancies were resolved by a third expert in clinical education. This categorization framework was adapted from Teng et al. (2022), and the standard AI definition used in the survey—“software that can learn from experience, adapt to new inputs, and make decisions”—served as the coding reference.

For the remaining items, students were instructed to refer to the standardized AI definition provided earlier in the survey. These items assessed students' attitudes and sentiments toward AI, such as their optimism, concerns, ethical awareness, and perspectives on AI's anticipated role in their careers and education. Several of these questions employed 11‐point Likert scales (0 = strongly disagree, 10 = strongly agree), enabling quantitative analysis of self‐reported perceptions. Additionally, students indicated their interest in receiving AI‐related education and their preferred formats for such training (e.g., short workshops, formal coursework, or graduate‐level programs). While these items did not directly assess technical knowledge, they offered insight into students' self‐perceived familiarity with AI and the areas in which they felt a need for further education.

The questionnaire covered six major domains: (1) demographic data (age, gender, educational level, and field of study), (2) perceived awareness of AI, (3) attitudes toward the potential impact of AI, (4) preferences for AI curriculum integration, (5) priorities in AI education design, and (6) time and setting preferences for learning AI. The final survey was developed electronically and distributed via institutional email lists among medical and paramedical students at Mashhad University of Medical Sciences.

2.5. Questionnaire Validation

Following the translation of the questionnaire into Persian, face validity was evaluated by five nurses and five physicians, who provided feedback on content, clarity, readability, and simplicity. Based on their input, the final version of the questionnaire was formulated. Subsequently, content validity was assessed with the Delphi method by a six‐member expert panel consisting of specialists in biostatistics, health information management, medical informatics, one specialist physician, and one nurse. The content validity ratio (CVR) and content validity index (CVI) were computed for this purpose. According to Lawshe, the minimum acceptable CVR (one‐sided test) for a panel of six experts is 0.99 [39]. To calculate the CVI, experts rated the relevance, clarity, simplicity, and transparency of each questionnaire item on a four‐point Likert scale: “completely relevant,” “relevant but requires revision,” “requires significant revision,” and “not relevant.” The CVI was then computed as the ratio of the number of experts who rated an item as “completely relevant” or “relevant but requires revision” to the total number of experts, yielding a value of 0.98 for the developed tool, which is considered acceptable. Finally, the internal reliability of the questionnaire was evaluated with Cronbach's alpha, which was 0.76 for the research instrument.
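The three validity and reliability measures named above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' procedure: the function names, the 1 to 4 coding of the relevance scale, and the sample inputs are all assumptions made for the example.

```python
from statistics import variance
from typing import Sequence


def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR: (n_e - N/2) / (N/2)."""
    half = n_experts / 2
    return (n_essential - half) / half


def item_cvi(ratings: Sequence[int]) -> float:
    """Share of experts rating an item 3 or 4 on a 1-4 relevance scale.

    Assumed coding: 4 = completely relevant, 3 = relevant but requires revision.
    """
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha; each inner sequence holds one respondent's item scores."""
    k = len(responses[0])
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical inputs for illustration only (not the study's data).
print(content_validity_ratio(6, 6))              # all six experts agree
print(item_cvi([4, 4, 3, 4, 2, 4]))              # five of six rate the item 3 or 4
print(cronbach_alpha([[1, 1], [2, 2], [3, 3]]))  # two perfectly consistent items
```

With six experts, all six must agree for the CVR to reach 1.0; agreement by five of six gives about 0.67, which falls below the 0.99 threshold.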

2.6. Statistical Analysis

SPSS version 11 was used for data analysis. Normality was first assessed with the Shapiro‐Wilk test. If the data were not normally distributed and could not be normalized by transformation, nonparametric techniques were used; otherwise, parametric techniques were used. ANOVA was used to compare groups, and the chi‐square test was used to check independence between qualitative variables.

3. Results

A total of 208 students answered the survey (response rate: 98%). As shown in Table 1, most paramedical students (n = 63; 55.8%) were aged 19–22 years, and most medical students (n = 59; 62.1%) were aged 23–26 years. Most paramedical students were female (n = 74; 64.6%), and a slight majority of medical students were male (n = 49; 51.6%). Most paramedical students were at the continuous bachelor's level (n = 84; 74.3%), and most medical students were at the general doctorate level (n = 84; 88.4%). Twenty‐eight medical students (29.5%) were in basic sciences, and 24 paramedical students (21.2%) were in the field of health information technology.

Table 1.

Details about the participants' demographics.

| Variables | Category | Medical students, n (%) | Paramedical students, n (%) |
|---|---|---|---|
| Gender | Female | 46 (48.4) | 74 (64.6) |
| | Male | 49 (51.6) | 40 (35.4) |
| | Total | 95 (100.0) | 113 (100.0) |
| Age (years) | 19–22 | 27 (28.4) | 63 (55.8) |
| | 23–26 | 59 (62.1) | 34 (30.1) |
| | 27–30 | 7 (7.4) | 9 (8.0) |
| | 31–40 | 1 (1.1) | 7 (6.2) |
| | > 40 | 1 (1.1) | 0 |
| Grade | Continuous BSc | 1 (1.1) | 84 (74.3) |
| | Discontinuous BSc | 1 (1.1) | 5 (4.4) |
| | MSc | 9 (9.5) | 17 (15.0) |
| | MD | 84 (88.4) | 0 |
| | Ph.D | 0 | 7 (6.2) |
| Year of entry | 2014 | 2 (2.2) | 0 |
| | 2015 | 2 (2.1) | 3 (2.7) |
| | 2016 | 12 (12.6) | 3 (2.7) |
| | 2017 | 10 (10.5) | 0 |
| | 2018 | 17 (17.9) | 4 (3.5) |
| | 2019 | 22 (23.2) | 15 (13.3) |
| | 2020 | 6 (6.3) | 19 (16.8) |
| | 2021 | 20 (21.1) | 36 (31.9) |
| | 2022 | 4 (4.2) | 33 (29.2) |
| Field of study (medical) | Basic science | 28 (29.5) | |
| | Intern | 19 (20.0) | |
| | Stager 1 | 17 (17.9) | |
| | Stager 2 | 14 (14.7) | |
| | Physiopath 1 | 4 (4.2) | |
| | Physiopath 2 | 13 (13.7) | |
| Field of study (paramedical) | Physiotherapy | | 19 (16.8) |
| | Occupational therapy | | 14 (12.4) |
| | Health information technology | | 24 (21.2) |
| | Social work | | 4 (3.5) |
| | Laboratory sciences | | 10 (8.8) |
| | Radiology | | 13 (11.5) |
| | Optometry | | 16 (14.2) |
| | Speech Therapy | | 13 (11.5) |
| Total | | 95 (100.0) | 113 (100) |

Table 2 presents descriptive data on attitudes toward AI among the participants. In total, 88.4% of medical students and 85.8% of paramedical students gave a score above 5 for supporting the development of AI in their field of study. Likewise, 94.8% of medical students and 90.4% of paramedical students gave a score above 5 to the statement that AI will affect their careers. Most medical (78.9%) and paramedical (82.3%) students agreed that the principles of AI should be taught to healthcare students, and most medical (73.7%) and paramedical (72.5%) students reported being aware of the ethical ramifications of using AI in their line of work.
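The "score above 5" percentages are sums over the upper half of each Likert item. As a small worked example, the Python sketch below recomputes the share for the item on supporting AI development, using the response counts reported in Table 2; the function name is illustrative.

```python
from typing import Dict


def share_above(counts: Dict[int, int], threshold: int = 5) -> float:
    """Percentage of respondents whose score is strictly above the threshold."""
    total = sum(counts.values())
    above = sum(n for score, n in counts.items() if score > threshold)
    return 100.0 * above / total


# Counts per score for "support or oppose the development of AI" (Table 2).
medical = {1: 1, 2: 2, 3: 5, 4: 1, 5: 2, 6: 8, 7: 11, 8: 20, 9: 12, 10: 33}
paramedical = {1: 1, 2: 0, 3: 3, 4: 1, 5: 11, 6: 10, 7: 14, 8: 21, 9: 10, 10: 42}

print(round(share_above(medical), 1))      # 88.4
print(round(share_above(paramedical), 1))  # 85.8
```

The same helper applies to the other Likert items by swapping in their counts.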

Table 2.

Descriptive information regarding AI attitudes among participants.

| Questions | Variables | Medical students | Paramedical students |
|---|---|---|---|
| Which of the following, if you are able to select more than one, best sums you up overall? | | 37 (38.9) | 48 (42.5) |
| | | 12 (12.6) | 34 (30.1) |
| | | 21 (22.1) | 16 (14.2) |
| | | 13 (13.7) | 9 (8) |
| | | 4 (4.2) | 1 (0.9) |
| | | 5 (5.3) | 2 (1.8) |
| How much do you support or oppose the development of AI in your field of study, on a scale of 0–10? 10 is strong support; 5 is neutral; and 0 is firmly opposed. | 1 | 1 (1.1) | 1 (0.9) |
| | 2 | 2 (2.1) | 0 |
| | 3 | 5 (5.3) | 3 (2.7) |
| | 4 | 1 (1.1) | 1 (0.9) |
| | 5 | 2 (2.1) | 11 (9.7) |
| | 6 | 8 (8.4) | 10 (8.8) |
| | 7 | 11 (11.6) | 14 (12.4) |
| | 8 | 20 (21.1) | 21 (18.6) |
| | 9 | 12 (12.6) | 10 (8.8) |
| | 10 | 33 (34.7) | 42 (37.2) |
| AI, in my opinion, will affect my career. | 1 | 0 | 0 |
| | 2 | 0 | 0 |
| | 3 | 1 (1.1) | 1 (0.9) |
| | 4 | 1 (1.1) | 2 (1.8) |
| | 5 | 3 (3.2) | 8 (7.1) |
| | 6 | 3 (3.2) | 3 (2.7) |
| | 7 | 17 (17.9) | 14 (12.4) |
| | 8 | 8 (8.4) | 28 (24.8) |
| | 9 | 9 (9.5) | 15 (13.3) |
| | 10 | 53 (55.8) | 42 (37.2) |
| In my opinion, AI fundamentals should be taught to healthcare students. | 1 | 0 | 0 |
| | 2 | 0 | 0 |
| | 3 | 3 (3.2) | 5 (4.4) |
| | 4 | 4 (4.2) | 5 (4.4) |
| | 5 | 13 (13.7) | 10 (8.8) |
| | 6 | 11 (11.6) | 8 (7.1) |
| | 7 | 14 (14.7) | 12 (10.6) |
| | 8 | 14 (14.7) | 16 (14.2) |
| | 9 | 5 (5.3) | 17 (15) |
| | 10 | 31 (32.6) | 40 (35.4) |
| I am aware of the moral ramifications of using AI in my line of work. | 1 | 1 (1.1) | 0 |
| | 2 | 3 (3.2) | 1 (0.9) |
| | 3 | 2 (2.1) | 2 (1.8) |
| | 4 | 6 (6.3) | 7 (6.2) |
| | 5 | 13 (13.7) | 21 (18.6) |
| | 6 | 8 (8.4) | 10 (8.8) |
| | 7 | 12 (12.6) | 19 (16.8) |
| | 8 | 21 (22.1) | 21 (18.6) |
| | 9 | 7 (7.4) | 11 (9.7) |
| | 10 | 22 (23.2) | 21 (18.6) |
| The use of AI in my field gives me optimism. | 1 | 1 (1.1) | 3 (2.7) |
| | 2 | 3 (3.2) | 2 (1.8) |
| | 3 | 4 (4.2) | 3 (2.7) |
| | 4 | 2 (2.1) | 4 (3.5) |
| | 5 | 7 (7.4) | 12 (10.6) |
| | 6 | 12 (12.6) | 11 (9.7) |
| | 7 | 16 (16.8) | 7 (6.2) |
| | 8 | 14 (14.7) | 21 (18.6) |
| | 9 | 7 (7.4) | 17 (15) |
| | 10 | 29 (30.5) | 33 (29.2) |
| Regarding the part AI will play in my field, I am concerned. | 1 | 9 (9.5) | 8 (7.1) |
| | 2 | 6 (6.3) | 6 (5.3) |
| | 3 | 14 (14.7) | 6 (5.3) |
| | 4 | 5 (5.3) | 11 (9.7) |
| | 5 | 14 (14.7) | 21 (18.6) |
| | 6 | 5 (5.3) | 16 (14.2) |
| | 7 | 6 (6.3) | 8 (7.1) |
| | 8 | 11 (11.6) | 14 (12.4) |
| | 9 | 10 (10.5) | 7 (6.2) |
| | 10 | 15 (15.8) | 16 (14.2) |
| AI is a technology, in my opinion, that has to be handled carefully. | 1 | 1 (1.1) | 0 |
| | 2 | 0 | 0 |
| | 3 | 0 | 0 |
| | 4 | 1 (1.1) | 0 |
| | 5 | 3 (3.2) | 7 (6.2) |
| | 6 | 3 (3.2) | 2 (1.8) |
| | 7 | 13 (13.7) | 9 (8) |
| | 8 | 12 (12.6) | 17 (15) |
| | 9 | 13 (13.7) | 16 (14.2) |
| | 10 | 49 (51.6) | 62 (54.9) |
| Please sum up your thoughts about AI in your field in one word or sentence: | Optimist | 64 (67.4) | 70 (61.9) |
| | Pessimistic | 19 (20) | 24 (21.2) |
| | No comments | 12 (12.6) | 19 (16.8) |
| In a single sentence, define AI. | I do not know | 35 (36.8) | 54 (47.8) |
| | Correct | 25 (26.3) | 19 (16.8) |
| | Partial correct | 27 (28.4) | 30 (26.5) |
| | Incorrect | 8 (8.4) | 10 (8.8) |
| What is the timeline you see for AI to affect your career? | | 19 (20) | 27 (23.9) |
| | | 32 (33.7) | 47 (41.6) |
| | | 37 (38.9) | 23 (20.4) |
| | | 6 (6.3) | 13 (11.5) |
| | | 1 (1.1) | 3 (2.7) |
| Should you include instruction on the fundamentals of AI in your curriculum or should it be extracurricular and done outside of it? | | 52 (54.7) | 69 (61.1) |
| | | 41 (43.2) | 42 (37.2) |
| Which of the following events, aimed at providing an overview of AI fundamentals, would you be interested in attending? (Able to choose more than one) | | 8 (8.4) | 19 (16.8) |
| | | 57 (60) | 58 (51.3) |
| | | 10 (10.5) | 13 (11.5) |
| | | 18 (18.9) | 23 (20.4) |
| | | 2 (2.1) | 0 |

| Questions | Variables | Participants | First priority | Second priority | Third priority | Fourth priority | Fifth priority | Sixth priority | Seventh priority |
|---|---|---|---|---|---|---|---|---|---|
| Which three goals—if your curriculum introduced the fundamentals of AI—would you prioritize? | Determine whether technology is suitable in a certain therapeutic setting. | Medical students | 10 (10.5) | 16 (16.8) | 11 (11.6) | 17 (17.9) | 14 (14.7) | 12 (12.6) | 15 (15.8) |
| | | Paramedical students | 18 (15.9) | 16 (14.2) | 13 (11.5) | 15 (13.3) | 12 (10.6) | 20 (17.7) | 19 (16.8) |
| | Comprehend and evaluate outcomes produced by AI | Medical students | 11 (11.6) | 26 (27.4) | 19 (20) | 14 (14.7) | 8 (8.4) | 7 (7.4) | 10 (10.5) |
| | | Paramedical students | 11 (9.7) | 17 (15) | 19 (16.8) | 22 (19.5) | 20 (17.7) | 11 (9.7) | 13 (11.5) |
| | Possess the ability to explain technology to people in a way that they can comprehend. | Medical students | 5 (5.3) | 9 (9.5) | 12 (12.6) | 17 (17.9) | 24 (25.3) | 13 (13.7) | 15 (15.8) |
| | | Paramedical students | 19 (16.8) | 17 (15) | 15 (13.3) | 15 (13.3) | 19 (16.8) | 14 (12.4) | 14 (12.4) |
| | Determine the moral ramifications of applying AI to medical settings. | Medical students | 14 (14.7) | 12 (12.6) | 16 (16.8) | 8 (8.4) | 14 (14.7) | 20 (21.1) | 11 (11.6) |
| | | Paramedical students | 13 (11.5) | 10 (8.8) | 17 (15) | 16 (14.2) | 13 (11.5) | 22 (19.5) | 22 (19.5) |
| | Recognize the operation of the underlying technological processes. | Medical students | 13 (13.7) | 7 (7.4) | 10 (10.5) | 12 (12.6) | 17 (17.9) | 15 (15.8) | 21 (22.1) |
| | | Paramedical students | 23 (20.4) | 18 (15.9) | 12 (10.6) | 17 (15) | 17 (15) | 8 (7.1) | 18 (15.9) |
| | Acquire knowledge of the jargon to facilitate communication and teamwork with engineers and developers. | Medical students | 10 (10.5) | 9 (9.5) | 15 (15.8) | 13 (13.7) | 19 (20) | 19 (20) | |
| | | Paramedical students | 7 (6.2) | 15 (13.3) | 15 (13.3) | 12 (10.6) | 20 (17.7) | 24 (21.2) | 20 (17.7) |
| | Determine how AI may enhance the quality of healthcare. | Medical students | 32 (33.7) | 15 (15.8) | 18 (18.9) | 12 (12.6) | 5 (5.3) | 9 (9.5) | 4 (4.2) |
| | | Paramedical students | 22 (19.5) | 20 (17.7) | 22 (19.5) | 16 (14.2) | 12 (10.6) | 14 (12.4) | 7 (6.2) |

Furthermore, 82% of medical students and 78.7% of paramedical students stated that using AI in their field gives them optimism. However, 49.5% of medical students and 54.1% of paramedical students stated that they are concerned about the role AI will play in their field. In this regard, most students (medicine: 94.8%; paramedicine: 93.9%) stated that AI is a technology that should be handled carefully.

When asked to sum up their sentiments toward AI, medical and paramedical students were largely optimistic about its role (paramedical students = 61.9%; medical students = 67.4%). However, when students were asked to define AI in one sentence, 56.62% of paramedical students and 45.26% of medical students defined it incorrectly or stated that they did not know.

Students were asked whether AI principles should be taught as part of the curriculum or as an extracurricular activity. The majority (medical students: 54.7%; paramedical students: 61.1%) answered that it should be part of their curriculum or educational program. Additionally, most medical and paramedical students predicted that AI would become part of their field within the next 10 to 20 years.

When students from the various disciplines were asked which three goals were most important when introducing AI principles into their curricula, most medical students consistently ranked the following among the top goals: (1) identify ways in which AI can improve the quality of healthcare; (2) understand and interpret results generated by AI; and (3) identify the ethical implications of using AI in clinical disciplines. In contrast, the priorities of most paramedical students were as follows: (1) understand how basic technological processes work; (2) identify ways in which AI can improve the quality of healthcare; and (3) understand and interpret the results generated by AI.

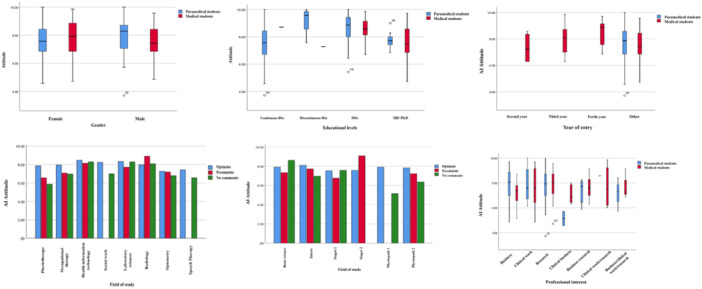

To examine differences in students' attitudes toward AI based on variables such as gender, academic level, year of entry, field of study, and professional interest, a one‐way analysis of variance (ANOVA) was conducted. The results indicated no significant differences in students' AI attitudes across these variables. The effect size (η²) and 95% confidence intervals (CI) for each variable were as follows: gender (F(1, 206) = 1.53, p = 0.217, η² = 0.014, 95% CI [−0.22, 0.09]), academic level (F(3, 204) = 2.10, p = 0.128, η² = 0.018, 95% CI [−0.20, 0.11]), year of entry (F(9, 198) = 0.89, p = 0.473, η² = 0.005, 95% CI [−0.16, 0.12]), field of study (F(5, 202) = 0.82, p = 0.505, η² = 0.007, 95% CI [−0.18, 0.15]), and professional interest (F(6, 201) = 0.64, p = 0.609, η² = 0.003, 95% CI [−0.14, 0.10]). These findings suggest that none of these demographic and academic factors had a notable impact on students’ attitudes toward AI (Figure 1).

Figure 1.

Attitude towards AI classified by gender, educational levels, year of entry, fields of study, and professional interests.
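To make the reported F and η² values easier to interpret, the sketch below computes a one‐way ANOVA from raw group scores in plain Python. The data are hypothetical and the function name is illustrative; the study itself used SPSS, and the p value is omitted here because it requires the F distribution.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def one_way_anova(groups: Sequence[Sequence[float]]) -> Tuple[float, float, int, int]:
    """Return (F, eta squared, df between, df within) for a one-way ANOVA."""
    all_values: List[float] = [x for g in groups for x in g]
    grand_mean = mean(all_values)

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)

    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared, df_between, df_within


# Hypothetical attitude scores for three groups (illustration only).
scores = [[1, 2, 3], [2, 3, 4], [3, 4, 5]]
f_stat, eta_squared, df_b, df_w = one_way_anova(scores)
print(f_stat, eta_squared, df_b, df_w)
```

With k groups and N respondents, the degrees of freedom are k − 1 and N − k, which is how a two‐group comparison of 208 students yields F(1, 206).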

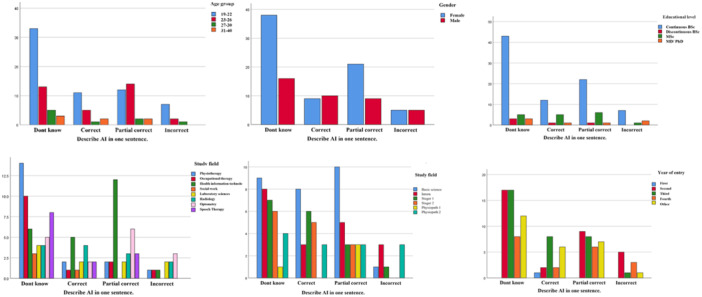

Furthermore, to assess differences in students' knowledge of AI based on age group, academic level, year of entry, gender, and field of study, a Chi‐Square test was performed. The results indicated no significant associations between these variables and students' AI knowledge. The effect size (Cramer's V) and 95% confidence intervals for each variable were as follows: age group (χ²(9, N = 208) = 7.45, p = 0.590, Cramer's V = 0.08, 95% CI [0.03, 0.14]), gender (χ²(3, N = 208) = 4.57, p = 0.206, Cramer's V = 0.10, 95% CI [0.04, 0.15]), academic level (χ²(9, N = 208) = 8.26, p = 0.508, Cramer's V = 0.07, 95% CI [0.02, 0.12]), year of entry (χ²(12, N = 208) = 14.35, p = 0.279, Cramer's V = 0.09, 95% CI [0.03, 0.14]), and field of study (χ²(21, N = 208) = 29.14, p = 0.111, Cramer's V = 0.11, 95% CI [0.05, 0.16]). These findings indicate that students' knowledge of AI did not significantly differ across demographic and academic categories (Figure 2).

Figure 2.

Knowledge of AI classified by age groups, gender, educational levels, year of entry, and fields of study.
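The chi‐square statistic and Cramer's V reported above can be illustrated with a short plain‐Python sketch. The contingency table is hypothetical and the function name is illustrative; the p value is omitted because it requires the chi‐square distribution.

```python
import math
from typing import Sequence, Tuple


def chi_square_cramers_v(table: Sequence[Sequence[int]]) -> Tuple[float, int, float]:
    """Return (chi-square, degrees of freedom, Cramer's V) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected

    n_rows, n_cols = len(table), len(table[0])
    dof = (n_rows - 1) * (n_cols - 1)
    cramers_v = math.sqrt(chi2 / (n * min(n_rows - 1, n_cols - 1)))
    return chi2, dof, cramers_v


# Hypothetical 2 x 2 table (illustration only, not the study's data).
chi2, dof, v = chi_square_cramers_v([[10, 20], [20, 10]])
print(round(chi2, 3), dof, round(v, 3))
```

For an r × c table the degrees of freedom are (r − 1)(c − 1), so a table of two genders by four definition categories has 3 degrees of freedom, consistent with the χ²(3) reported for gender.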

4. Discussion

4.1. Principal Findings

This study found that many medical and paramedical students demonstrated limited conceptual clarity and self‐reported familiarity with AI, particularly in defining core concepts. These findings underscore the urgent need for incorporating structured AI education within medical and paramedical curricula. As AI continues to be integrated into healthcare systems, equipping future professionals with foundational knowledge and awareness is essential. Despite these limitations, most students expressed optimistic views regarding AI's role in their future careers (paramedical students: 61.9%; medical students: 67.4%).

No significant differences were observed in attitudes toward AI across different educational levels (p = 0.128) or among students with varying professional interests (p = 0.609). This suggests a broad acceptance of AI among students from diverse academic backgrounds. Our findings align with previous international studies. For instance, a multicenter study in Germany reported that medical students did not express significant concern about AI replacing radiologists [40]. Similarly, a study conducted in Pakistan found that medical students held favorable attitudes toward AI [5]. In the United Kingdom, a survey of general practitioners indicated that AI could enhance physician efficiency, although ethical concerns were also raised [41]. Likewise, a global survey of pathologists revealed a predominantly positive attitude toward AI as a tool for improving efficiency and quality assurance, whereas a separate study in South Korea suggested that physicians there do not anticipate AI replacing their roles [42].

In our study, 56.62% of paramedical students and 45.26% of medical students were unable to accurately define AI or admitted to lacking knowledge about it. This finding is consistent with an online survey conducted in southern Vietnam, where 92.2% of respondents reported having no specific knowledge of AI. However, 77.9% believed AI would be beneficial for their careers, and 77.2% expected it to play a role in public health monitoring and epidemic prevention [43]. Addressing this knowledge gap, the Ontario Medical Students Association has advocated for the integration of AI education into medical curricula [44]. A lack of understanding regarding AI integration in healthcare and misinterpretation of AI‐generated results could lead to distrust and hesitation in adopting these technologies. Our findings suggest that incorporating AI education into medical training can alleviate concerns and facilitate the acceptance of AI in the healthcare community. Similar findings were reported in a study of medical physicists and oncologists in New Zealand, which demonstrated a positive correlation between prior AI exposure and its acceptance [45]. However, to effectively integrate AI into healthcare systems—especially in LMICs—educational initiatives must be accompanied by structural reforms in data and information infrastructures. Recent reviews in the field of health informatics highlight the fragmentation of registration systems and the lack of national strategies for interoperable digital platforms. These limitations restrict the broader implementation of intelligent technologies, underscoring that improving students' AI literacy must be paralleled by the development of cohesive, technology‐ready ecosystems in healthcare [46].

A systematic review by Mousavi Baigi et al., analyzing 38 studies, found that although students generally held positive attitudes toward AI, most had limited exposure to its practical applications and lacked hands‐on experience [33]. Additionally, a more recent review examining AI literacy among healthcare professionals and students reported that in nearly half of the included studies, participants demonstrated very low levels of self‐reported AI knowledge and confidence in applying AI tools [47]. These findings highlight the need for structured and accessible AI education to improve students' familiarity with core concepts and their readiness for AI integration. Supporting this conclusion, Sun and Medaglia [48] and Ghaddaripouri [49] emphasized that insufficient awareness and understanding are among the key perceived barriers to the successful adoption of AI in healthcare settings.

Similarly, Chen et al. found that while medical students and professionals recognize the growing role of AI in clinical practice, they lack hands‐on experience and practical knowledge. Their study, which assessed AI acceptance among medical professionals worldwide, concluded that although participants had a generally positive attitude toward AI, they remained cautious. The study also recommended that increased AI education could help reduce concerns and resistance to its adoption [50].

Regarding ethical considerations, 73.7% of medical students and 72.5% of paramedical students (scoring above 5) indicated an understanding of AI's ethical implications. AI introduces new ethical challenges, such as increased bias risks and the potential for diagnostic errors, which may result in over‐ or under‐diagnosis with significant clinical consequences [51]. As a result, medical ethics education must evolve in parallel with the anticipated acceleration of AI development in medicine [52]. However, AI ethics education in medical programs remains insufficient. A study involving 487 medical students from Germany, Austria, and Switzerland revealed that most had received little to no formal training on AI ethics [53, 54].

In our study, concerns regarding AI's impact on students' future careers were apparent. Many students predicted that AI would become an integral part of their profession within the next 10–20 years. In this regard, research conducted by Deloitte [55] in collaboration with the Oxford Martin Institute [56] suggested that AI could automate 35% of UK jobs within the same time frame. Other studies have highlighted that occupations involving repetitive and predictable tasks are most susceptible to automation [57, 58]. However, some studies argue that AI could enhance job quality and improve wealth distribution [59]. It is increasingly evident that AI will not entirely replace medical professionals but will serve as an auxiliary tool to enhance patient care [52].

4.2. Strengths and Limitations

This study has several limitations. First, self‐selection bias may have influenced participant recruitment and engagement. While this study did not aim to comprehensively assess technical knowledge of AI, the open‐ended definition item served as a proxy to explore students' conceptual clarity, whereas the remaining items focused on perceptions, attitudes, and educational preferences. Second, the study focused exclusively on the attitudes and knowledge of medical and paramedical students, leaving out the perspectives of practicing clinicians and specialists. Third, the study was conducted at a single institution, Mashhad University of Medical Sciences (MUMS) in Iran. However, considering that Mashhad is Iran's second most populous city and that MUMS is one of the country's leading medical universities, the findings may still be generalizable to other middle‐ and low‐income countries.

One of the study's key strengths is the use of a standardized questionnaire, whose validity had already been established. Additionally, this study compared the perspectives of students from various medical and paramedical disciplines, providing a comprehensive understanding of their views on AI. Unlike many previous studies that primarily focused on high‐income countries, this study offers insights into a middle‐income setting. Future studies could further explore patient perspectives on AI in healthcare. Additionally, qualitative interviews with key stakeholders could be conducted to assess their readiness for integrating AI into clinical and rehabilitative practices.

5. Conclusion

This study assessed students' perceived understanding of AI and revealed areas of conceptual ambiguity based on their ability to define AI and their self‐reported familiarity. Although most students expressed optimism about the integration of AI in their future careers, the findings suggest that many lacked foundational conceptual clarity. Importantly, students consistently reported diverse educational needs regarding AI, with the majority supporting its inclusion in the formal curriculum. To effectively prepare the future healthcare workforce for AI‐driven transformations, targeted curriculum development should be informed by students' attitudes, perceived readiness, and preferences. Recognizing these dimensions can guide policymakers and educators in designing responsible, context‐appropriate AI literacy programs.

Author Contributions

Seyyedeh Fatemeh Mousavi Baigi: conceptualization, writing – original draft, writing – review and editing, methodology, formal analysis, resources. Masoumeh Sarbaz: validation, writing – review and editing, formal analysis. Ali Darroudi: formal analysis, data curation, writing – review and editing. Khalil Kimiafar: writing – review and editing, conceptualization, project administration, supervision.

Ethics Statement

The ethics committee of Mashhad University of Medical Sciences granted approval for this study (approval number: IR.MUMS.FHMPM.REC.1403.242).

Conflicts of Interest

The authors declare no conflicts of interest.

Transparency Statement

The lead author Khalil Kimiafar affirms that this manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Acknowledgments

This study is part of project No. 4030739 conducted by the Student Research Committee, Mashhad University of Medical Sciences, Mashhad, Iran. We extend our sincere gratitude to the “Student Research Committee” and the “Research & Technology Chancellor” of Mashhad University of Medical Sciences for their financial support of this study. We also wish to thank the medical and paramedical students of Mashhad University of Medical Sciences who participated in this study and contributed to the completion of the research. Additionally, the authors acknowledge the assistance of ChatGPT (OpenAI) in providing English translation and improving the linguistic clarity of the manuscript. The final responsibility for the content of the manuscript lies solely with the authors.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

- 1. Kurni M., Mohammed M. S., and Srinivasa K. G., A Beginner's Guide to Introduce Artificial Intelligence in Teaching and Learning (Springer Nature, 2023).

- 2. Ngiam K. Y. and Khor I. W., “Big Data and Machine Learning Algorithms for Health‐Care Delivery,” Lancet Oncology 20, no. 5 (2019): e262–e273, 10.1016/S1470-2045(19)30149-4.

- 3. Pham B. T., Nguyen M. D., Bui K.‐T. T., Prakash I., Chapi K., and Bui D. T., “A Novel Artificial Intelligence Approach Based on Multi‐Layer Perceptron Neural Network and Biogeography‐Based Optimization for Predicting Coefficient of Consolidation of Soil,” Catena 173 (2019): 302–311, 10.1016/j.catena.2018.10.004.

- 4. Rai A., Constantinides P., and Sarker S., “Next Generation Digital Platforms: Toward Human‐AI Hybrids,” MIS Quarterly 43, no. 1 (2019): iii–ix, https://misq.umn.edu/misq/downloads/download/editorial/687/.

- 5. Abid S., Awan B., Ismail T., et al., “Artificial Intelligence: Medical Student S Attitude in District Peshawar Pakistan,” Pakistan Journal of Public Health 9, no. 1 (2019): 19–21, 10.32413/pjph.v9i1.295.

- 6. Waymel Q., Badr S., Demondion X., Cotten A., and Jacques T., “Impact of the Rise of Artificial Intelligence in Radiology: What Do Radiologists Think?,” Diagnostic and Interventional Imaging 100, no. 6 (2019): 327–336, 10.1016/j.diii.2019.03.015.

- 7. Ooi S., Makmur A., Soon Y., et al., “Attitudes Toward Artificial Intelligence in Radiology With Learner Needs Assessment Within Radiology Residency Programmes: A National Multi‐Programme Survey,” Singapore Medical Journal 62, no. 3 (2021): 126–134, 10.11622/smedj.2019141.

- 8. Buch V. H., Ahmed I., and Maruthappu M., “Artificial Intelligence in Medicine: Current Trends and Future Possibilities,” British Journal of General Practice 68, no. 668 (2018): 143–144, 10.3399/bjgp18X695213.

- 9. Mehta N., Pandit A., and Shukla S., “Transforming Healthcare With Big Data Analytics and Artificial Intelligence: A Systematic Mapping Study,” Journal of Biomedical Informatics 100 (2019): 103311, 10.1016/j.jbi.2019.103311.

- 10. Horgan D., Romao M., Morré S. A., and Kalra D., “Artificial Intelligence: Power for Civilisation–and for Better Healthcare,” Public Health Genomics 22, no. 5–6 (2020): 145–161, 10.1159/000504785.

- 11. Sloane E. B. and Silva R. J., “Artificial Intelligence in Medical Devices and Clinical Decision Support Systems,” Clinical Engineering Handbook 1 (2020): 556–568, 10.1016/B978-0-12-813467-2.00084-5.

- 12. Wang F., Casalino L. P., and Khullar D., “Deep Learning in Medicine—Promise, Progress, and Challenges,” JAMA Internal Medicine 179, no. 3 (2019): 293–294, 10.1001/jamainternmed.2018.7117.

- 13. Lee H., Tajmir S., Lee J., et al., “Fully Automated Deep Learning System for Bone Age Assessment,” Journal of Digital Imaging 30 (2017): 427–441, 10.1007/s10278-017-9955-8.

- 14. Oakden‐Rayner L., “Reply to ‘Man Against Machine: Diagnostic Performance of a Deep Learning Convolutional Neural Network for Dermoscopic Melanoma Recognition In Comparison to 58 Dermatologists’ by Haenssle et al,” Annals of Oncology 30, no. 5 (2019): 854, 10.1093/annonc/mdy519.

- 15. Sarazin M., ed., Artificial Intelligence in Health (John Wiley & Sons, 2024).

- 16. Topol E. J., “High‐Performance Medicine: The Convergence of Human and Artificial Intelligence,” Nature Medicine 25, no. 1 (2019): 44–56, 10.1038/s41591-018-0300-7.

- 17. Ravali R. S., Vijayakumar T. M., Santhana Lakshmi K., et al., “A Systematic Review of Artificial Intelligence for Pediatric Physiotherapy Practice: Past, Present, and Future,” Neuroscience Informatics 2, no. 4 (2022): 100045, 10.1016/j.neuri.2022.100045.

- 18. Teng M., Singla R., Yau O., et al., “Health Care Students' Perspectives on Artificial Intelligence: Countrywide Survey in Canada,” JMIR Medical Education 8, no. 1 (2022): e33390, 10.2196/33390.

- 19. Hannun A. Y., Rajpurkar P., Haghpanahi M., et al., “Cardiologist‐Level Arrhythmia Detection and Classification in Ambulatory Electrocardiograms Using a Deep Neural Network,” Nature Medicine 25, no. 1 (2019): 65–69, 10.1038/s41591-018-0268-3.

- 20. Yu K.‐H., Beam A. L., and Kohane I. S., “Artificial Intelligence in Healthcare,” Nature Biomedical Engineering 2, no. 10 (2018): 719–731, 10.1038/s41551-018-0305-z.

- 21. Romero‐Brufau S., Wyatt K. D., Boyum P., Mickelson M., Moore M., and Cognetta‐Rieke C., “A Lesson in Implementation: A Pre‐Post Study of Providers' Experience With Artificial Intelligence‐Based Clinical Decision Support,” International Journal of Medical Informatics 137 (2020): 104072, 10.1016/j.ijmedinf.2019.104072.

- 22. Deep Learning|Nature, accessed on 19 September 2022, https://www.nature.com/articles/nature14539.

- 23. Scheetz J., Rothschild P., McGuinness M., et al., “A Survey of Clinicians on the Use of Artificial Intelligence in Ophthalmology, Dermatology, Radiology and Radiation Oncology,” Scientific Reports 11, no. 1 (2021): 5193, 10.1038/s41598-021-84698-5.

- 24. Abdullah R. and Fakieh B., “Health Care Employees' Perceptions of the Use of Artificial Intelligence Applications: Survey Study,” Journal of Medical Internet Research 22, no. 5 (2020): e17620, 10.2196/17620.

- 25. Castagno S. and Khalifa M., “Perceptions of Artificial Intelligence Among Healthcare Staff: A Qualitative Survey Study,” Frontiers in Artificial Intelligence 3 (2020): 578983, 10.3389/frai.2020.578983.

- 26. Laï M.‐C., Brian M., and Mamzer M.‐F., “Perceptions of Artificial Intelligence in Healthcare: Findings From a Qualitative Survey Study Among Actors in France,” Journal of Translational Medicine 18, no. 1 (2020): 14, 10.1186/s12967-019-02204-y.

- 27. Al‐Khaled T., Valikodath N., Cole E., et al., “Evaluation of Physician Perspectives of Artificial Intelligence in Ophthalmology: A Pilot Study,” Investigative Ophthalmology and Visual Science 61, no. 7 (2020): 2023, https://iovs.arvojournals.org/article.aspx?articleid=2769713.

- 28. Martinho A., Kroesen M., and Chorus C., “A Healthy Debate: Exploring the Views of Medical Doctors on the Ethics of Artificial Intelligence,” Artificial Intelligence in Medicine 121 (2021): 102190, 10.1016/j.artmed.2021.102190.

- 29. Klumpp M., Hintze M., Immonen M., et al., ed., “Artificial Intelligence for Hospital Health Care: Application Cases and Answers to Challenges in European Hospitals,” in Healthcare (Basel), (MDPI, 2021), 10.3390/healthcare9080961.

- 30. Liu Y., Chen P.‐H. C., Krause J., and Peng L., “How to Read Articles That Use Machine Learning: Users' Guides to the Medical Literature,” Journal of the American Medical Association 322, no. 18 (2019): 1806–1816, 10.1001/jama.2019.16489.

- 31. Reznick R. K., Harris K., Horsley T., and Hassani M. S., Task Force Report on Artificial Intelligence and Emerging Digital Technologies. Royal College of Physicians and Surgeons of Canada. 2020, https://www.royalcollege.ca/content/dam/document/membership-and-advocacy/2020-task-force-report-on-ai-and-emerging-digital-technologies-e.pdf.

- 32. Wong L. P. W., “Artificial Intelligence and Job Automation: Challenges for Secondary Students' Career Development and Life Planning,” Merits 4, no. 4 (2024): 370–399, 10.3390/merits4040027.

- 33. Mousavi Baigi S. F., Sarbaz M., Ghaddaripouri K., Ghaddaripouri M., Mousavi A. S., and Kimiafar K., “Attitudes, Knowledge, and Skills Towards Artificial Intelligence Among Healthcare Students: A Systematic Review,” Health Science Reports 6, no. 3 (2023): e1138, 10.1002/hsr2.1138.

- 34. Wahl B., Cossy‐Gantner A., Germann S., and Schwalbe N. R., “Artificial Intelligence (AI) and Global Health: How Can AI Contribute to Health in Resource‐Poor Settings?,” BMJ Global Health 3, no. 4 (2018): e000798, 10.1136/bmjgh-2018-000798.

- 35. Raoofi A., Takian A., Akbari Sari A., Olyaeemanesh A., Haghighi H., and Aarabi M., “COVID‐19 Pandemic and Comparative Health Policy Learning in Iran,” Archives of Iranian Medicine 23, no. 4 (2020): 220–234, 10.34172/aim.2020.02.

- 36. Xiang Y., Zhao L., Liu Z., et al., “Implementation of Artificial Intelligence in Medicine: Status Analysis and Development Suggestions,” Artificial Intelligence in Medicine 102 (2020): 101780, 10.1016/j.artmed.2019.101780.

- 37. Sit C., Srinivasan R., Amlani A., et al., “Attitudes and Perceptions of UK Medical Students Towards Artificial Intelligence and Radiology: A Multicentre Survey,” Insights Into Imaging 11 (2020): 14, 10.1186/s13244-019-0830-7.

- 38. World Health Organisation, 2017. Process of Translation and Adaptation of Instrument, http://www.who.int/substance_abuse/research_tools/translation/en/.

- 39. Lawshe C. H., “A Quantitative Approach to Content Validity,” Personnel Psychology 28, no. 4 (1975): 563–575, 10.1111/j.1744-6570.1975.tb01393.x.

- 40. Pinto dos Santos D., Giese D., Brodehl S., et al., “Medical Students' Attitude Towards Artificial Intelligence: A Multicentre Survey,” European Radiology 29 (2019): 1640–1646.

- 41. Blease C., Kaptchuk T. J., Bernstein M. H., Mandl K. D., Halamka J. D., and DesRoches C. M., “Artificial Intelligence and the Future of Primary Care: Exploratory Qualitative Study of UK General Practitioners' Views,” Journal of Medical Internet Research 21, no. 3 (2019): e12802.

- 42. Oh S., Kim J. H., Choi S.‐W., Lee H. J., Hong J., and Kwon S. H., “Physician Confidence in Artificial Intelligence: An Online Mobile Survey,” Journal of Medical Internet Research 21, no. 3 (2019): e12422.

- 43. Truong N. M., Vo T. Q., Tran H. T. B., Nguyen H. T., and Pham V. N. H., “Healthcare Students' Knowledge, Attitudes, and Perspectives Toward Artificial Intelligence in the Southern Vietnam,” Heliyon 9, no. 12 (2023): e22653, 10.1016/j.heliyon.2023.e22653.

- 44. Ontario Medical Students Association, Training for the Future: Preparing Medical Students for the Impact of Artificial Intelligence, Approved February 23, 2019, accessed March 13, 2021, https://omsa.ca/sites/default/files/policy_or_position_paper/115/position_paper_preparing_medical_students_for_artificial_intelligence_2019_feb.pdf.

- 45. Victor Mugabe K., “Barriers and Facilitators to the Adoption of Artificial Intelligence in Radiation Oncology: A New Zealand Study,” Technical Innovations & Patient Support in Radiation Oncology 18 (2021): 16–21, 10.1016/j.tipsro.2021.03.004.

- 46. Mousavi Baigi S. F., Sarbaz M., Sobhani‐Rad D., Mousavi A. S., and Kimiafar K., “Rehabilitation Registration Systems: Current Recommendations and Challenges,” Frontiers in Health Informatics 11, no. 1 (2022): 124, 10.30699/fhi.v11i1.388.

- 47. Kimiafar K., Sarbaz M., Tabatabaei S. M., et al., “Artificial Intelligence Literacy Among Healthcare Professionals and Students: A Systematic Review,” Frontiers in Health Informatics 12 (2023): 168, 10.30699/fhi.v12i0.524.

- 48. Sun T. Q. and Medaglia R., “Mapping the Challenges of Artificial Intelligence in the Public Sector: Evidence From Public Healthcare,” Government Information Quarterly 36, no. 2 (2019): 368–383, 10.1016/j.giq.2018.09.008.

- 49. Ghaddaripouri K., Mousavi Baigi S. F., Abbaszadeh A., and Mazaheri Habibi M. R., “Attitude, Awareness, and Knowledge of Telemedicine Among Medical Students: A Systematic Review of Cross‐Sectional Studies,” Health Science Reports 6, no. 3 (2023): e1156, 10.1002/hsr2.1156.

- 50. Chen M., Zhang B., Cai Z., et al., “Acceptance of Clinical Artificial Intelligence Among Physicians and Medical Students: A Systematic Review With Cross‐Sectional Survey,” Frontiers in Medicine 9 (2022): 990604, 10.3389/fmed.2022.990604.

- 51. Weidener L. and Fischer M., “Role of Ethics in Developing AI‐Based Applications in Medicine: Insights From Expert Interviews and Discussion of Implications,” JMIR AI 3, no. 1 (2024): e51204, 10.2196/51204.

- 52. Davenport T. and Kalakota R., “The Potential for Artificial Intelligence in Healthcare,” Future Healthcare Journal 6, no. 2 (2019): 94–98, 10.7861/futurehosp.6-2-94.

- 53. Weidener L. and Fischer M., “Artificial Intelligence Teaching as Part of Medical Education: Qualitative Analysis of Expert Interviews,” JMIR Medical Education 9, no. 1 (2023): e46428, 10.2196/46428.

- 54. Srinivasan M., Venugopal A., Venkatesan L., and Kumar R., “Navigating the Pedagogical Landscape: Exploring the Implications of AI and Chatbots in Nursing Education,” JMIR Nursing 7 (2024): e52105, 10.2196/52105.

- 55. Deloitte Insights, State of AI in the Enterprise. Retrieved from www2.deloitte.com/content/dam/insights/us/articles/4780_State‐of‐AI‐in‐theenterprise/AICognitiveSurvey2018_Infographic.pdf. 2018.

- 56. UserTesting, Healthcare Chatbot Apps Are on the Rise but the Overall Customer Experience (CX) Falls Short According to a UserTesting Report (UserTesting, 2019), https://www.usertesting.com/company/newsroom/press-releases/healthcare-chatbot-apps-are-rise-overall-customer-experience-cx.

- 57. Nazareno L. and Schiff D. S., “The Impact of Automation and Artificial Intelligence on Worker Well‐Being,” Technology in Society 67 (2021): 101679, 10.1016/j.techsoc.2021.101679.

- 58. Tambe P., Cappelli P., and Yakubovich V., “Artificial Intelligence in Human Resources Management: Challenges and a Path Forward,” California Management Review 61, no. 4 (2019): 15–42, 10.1177/0008125619867910.

- 59. Li P., Bastone A., Mohamad T. A., and Schiavone F., “How Does Artificial Intelligence Impact Human Resources Performance. Evidence From a Healthcare Institution in the United Arab Emirates,” Journal of Innovation & Knowledge 8, no. 2 (2023): 100340, 10.1016/j.jik.2023.100340.
