Abstract

While ascribing medical errors primarily to systems factors can free clinicians from individual blame, there are elements of medical errors that can and should be attributed to individual factors. These factors are related less commonly to lack of knowledge and skill than to the inability to apply the clinician’s abilities under certain circumstances. In concert with efforts to improve health care systems, refining physicians’ emotional and cognitive capacities might also prevent many errors. In general, physicians have the sensation of making a mistake because of the interference of emotional elements. We propose a so-called rational-emotive model that emphasizes 2 factors in error causation: (1) difficulty in reframing the first hypothesis that comes automatically to the physician’s mind, and (2) premature closure of the clinical act to avoid confronting inconsistencies, low-level decision rules, and emotions. We propose a teaching strategy based on developing the physician’s insight and self-awareness to detect the inappropriate use of low-level decision rules, as well as detecting the factors that limit a physician’s capacity to tolerate the tension of uncertainty and ambiguity. Emotional self-awareness and self-regulation of attention can be consciously cultivated as habits to help physicians function better in clinical situations.

Keywords: Medical errors, decision making/education, professional practice, risk management, medical mistakes, quality of health care, self-awareness, clinical reasoning

INTRODUCTION

The discussion of clinical errors has shifted from being an almost taboo matter to being a major focus of decision theory,1–3 epidemiology,4 health services research,5 and quality assurance policy.6, 7 A systemic perspective on clinical errors proposes that behind each error there is often a chain of circumstances involving multiple actors within the organization as a whole.5, 8 The current tendency is to shift attention from individual guilt to a more institutional perspective. The positive consequence is that physicians can examine their own errors without activating feelings of blame, which usually paralyze the individual’s and the team’s capacity to correct the error and prevent future ones. This systemic perspective, however, should not diminish physicians’ accountability for remaining vigilant and engaging in self-monitoring. Some evidence indicates that errors often result not from a lack of knowledge but from the mindless application of unexamined habits and the interference of unexamined emotions.9, 10

METHODS

Case 1.

A 47-year-old man with abdominal pain and decreased urination was seen in the emergency department of a well-respected hospital. The board-certified and experienced physician taking a cursory history assumed that the decreased urinary flow was due to dehydration, even though he was aware that the patient had recently had bladder surgery for localized carcinoma and just had a Foley catheter removed. The patient was signed out to another physician at the end of the shift. When intravenous hydration did not result in improvement, the new physician increased the rate of the intravenous infusion. Only the next day, when seen by another resident, did the situation seem obvious: a new catheter was inserted with relief of the pain and a yield of 1.5 L of urine. By then, the patient had developed a fever, and the urine appeared to be infected.

Whereas it is clear that some errors may have been made in the hand-offs of care, lack of individual vigilance and inability to think about the concrete situation in a new way contributed to poor care. To a certain degree, each physician’s competence in this situation depended on the ability to avoid routine and to tune clinical abilities to deal with the situation. We use the terms tune or calibrate in the same way one might use them when referring to tuning a musical instrument or calibrating a glucometer.11 Self-awareness is the process whereby the physician self-calibrates to produce the desired effect: an effective communication process, or an accurate physical examination.11, 12 The cognitive and emotional processes that physicians use to increase self-awareness in the moment during everyday medical practice, however, have not been described in depth until recently.

We present this article as a preliminary step to answer the question of whether training in self-awareness can prevent clinical errors.13 To that end, we explore a rational-emotive model of clinical error prevention, building on our own clinical, teaching, and research experience,12, 14–16 and develop some educational strategies that can help to prevent clinical errors by increasing access to the thoughts and feelings that guide clinical actions. We hope to build on the literature that describes the cognitive structure of medical knowledge17–19 to bring attention to the influence of emotions and self-calibration in daily practice. Briefly, the model involves strategies for recognizing situations that increase the risk of errors (which often involve denial, fatigue, or distraction), attending to previously unexamined decision rules that are being applied to the situation, seeking opportunities for engaging in reframing to revise an understanding of the clinical situation, and promoting a habit of self-questioning during clinical work.

The Rational-Emotive Model

Physicians tend to reason tacitly to the extent of consciously perceiving only the tip of the iceberg of their own thinking processes. Beneath the surface there are quasi-automatic mental operations that are often useful mental shortcuts; however, sometimes these same shortcuts can play tricks on reasoning skills. In the case above, decreased urination is often associated with dehydration, but clearly it is not the only explanation. Failure to examine the reasoning process led to perpetuation of an error.

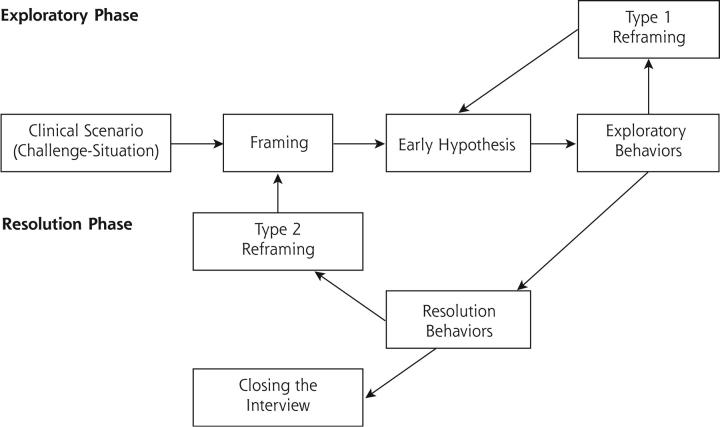

Figure 1 represents the rational-emotive model of the clinical act. This model takes into account the division of the encounter into 2 phases: the exploratory phase and the resolution phase.20 In the exploratory phase, the physician experiences the need to know how to resolve the patient’s problem as quickly as possible to reduce the psychological tension the physician experiences. Observe how the model works based on the following example:

Figure 1.

Rational-emotive model.

Framing consists of responding to the question: “What is it that I am supposed to do in this particular scenario?” After framing, there automatically appears an early hypothesis that the physician tries to verify. When findings do not fit the hypothesis, other hypotheses might be considered (type 1 reframing), sometimes even global reframing (type 2 reframing), such as, for example, “I am not dealing with a shoulder pain, I should consider domestic violence.”

Case 2.

A physician evaluates a child with high fever, headache, and confusion at 4:00 am after an exhausting shift. The physician activates the decision rule: “Headache and fever with confusion, rule out nuchal rigidity.” While looking for the Kernig sign, however, the physician projects an image of what will happen if the sign is positive: a lumbar puncture will have to be performed. At this point, the physician automatically and unconsciously lowers the sensitivity of the Kernig sign; if the sign is not clearly positive, the physician will consider it negative. The same sign that might have been positive at the beginning of the shift mysteriously becomes negative at 4:00 am.

The challenge situation is perceived as the confluence of (1) the demand encountered by the physician (in this case, a child with fever, headache, and confusion); and (2) the environment in which this demand is made (a fatigued physician at 4:00 am in the emergency department). The physician then creates a frame for the situation, ie, an understanding of what he or she must do during the visit. In our case, this frame could well be as follows: “This patient has headache and fever with confusion, so I have to make sure the patient does not have a serious infection.” This frame is automatically associated with early hypotheses: viral infection, meningitis, pneumonia, etc. The exploratory phase includes maneuvers of observation, listening, questioning, and physical examination to support or refute the early hypotheses. The resolution phase includes arriving at an understanding of the diagnosis and therapeutic plan and then closure.

When the data obtained by the physician do not confirm the early hypotheses, or new data require adding to or modifying these early hypotheses, the physician engages in type 1 reframing to arrive at a more accurate understanding of the situation. In this example, type 1 reframing would have occurred had the physician found a high fever with cognitive impairment not explained by a viral infection. Type 2 reframing occurs when it is necessary not only to adjust the hypotheses but to rethink the whole clinical situation. This situation can occur at the initiative of the patient (“I think you’re wrong, Doctor, I’m not depressed”) or as the result of new data (eg, the unexpected finding of lymphadenopathy and splenomegaly during a routine physical examination). Type 2 reframing requires more effort than type 1 reframing, because it disrupts the time allotment and fatigue management that the physician had planned.
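The distinction between the 2 types of reframing can be sketched as a small decision function. This is only an illustrative simplification, not part of the model itself: the names `Reframing` and `classify_reframing` and the two yes/no inputs are assumptions introduced here, and real clinical judgments are rarely binary.

```python
from enum import Enum


class Reframing(Enum):
    """Hypothetical labels for the outcomes described in the text."""
    NONE = "keep the early hypothesis"
    TYPE_1 = "revise hypotheses within the current frame"
    TYPE_2 = "rethink the whole clinical situation"


def classify_reframing(fits_hypothesis: bool, fits_frame: bool) -> Reframing:
    """Map two simplified yes/no judgments onto a reframing type.

    fits_hypothesis: do the findings support the early hypothesis?
    fits_frame: does the physician's frame still describe the encounter?
    """
    if not fits_frame:
        # The frame itself no longer holds, so the whole situation is rethought.
        return Reframing.TYPE_2
    if not fits_hypothesis:
        # The frame holds, but the early hypothesis must be adjusted.
        return Reframing.TYPE_1
    return Reframing.NONE


# High fever with cognitive impairment not explained by a viral infection.
print(classify_reframing(fits_hypothesis=False, fits_frame=True).name)   # TYPE_1
# Unexpected lymphadenopathy and splenomegaly at a routine examination.
print(classify_reframing(fits_hypothesis=False, fits_frame=False).name)  # TYPE_2
```

Checking the frame before the hypothesis reflects the idea that a broken frame makes the status of any single hypothesis secondary.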

The case above also gives us the opportunity to analyze the role of somatic markers and emotional satisfaction as regulators of decision making. Our argument is based on the notion that physicians do not act as rationally as they believe they do, and that emotional satisfaction often comes into play.21 Before making a decision, for example, at some level physicians weigh the satisfaction that each option is likely to produce by imagining the result of an action before doing it. This exercise leads physicians to weigh different options based on the projected image, which might be at odds with a rational analysis of the outcome.22 Damasio’s somatic marker hypothesis23, 24 goes one step further: Before making a decision, physicians evoke images and emotions for each of the options in play; they project themselves into the imagined situation and feel as if they were there. In subsequent similar situations, that as-if feeling, which Damasio calls a somatic marker, comes forward automatically, without the effort of imagining, facilitating decision making.

Cognitive Activation and Errors

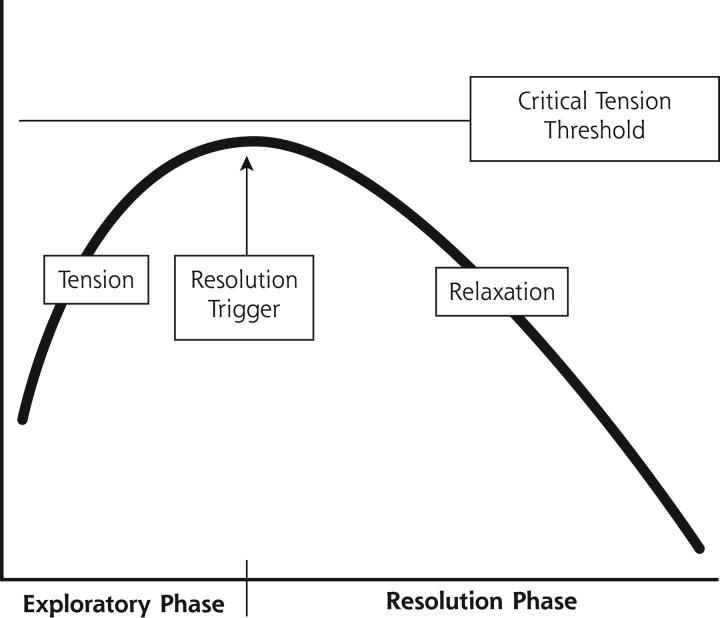

Figure 2 illustrates the concept of critical tension. When physicians face the challenge of making a diagnosis to solve a patient’s problem, they experience a degree of emotional tension. This tension is more extreme when the situation is atypical or ambiguous, or when the patient is perceived as demanding. In addition, all physicians have good and bad days. An important hypothesis of our model is that the days are good partially by virtue of the physician’s ability to tolerate the emotional tension of not knowing. A bad day precipitates the premature closing of the encounter or other strategies to decrease the emotional discomfort. Table 1 lists factors that interfere with professional performance partially as a result of lower tolerance of critical tension.

Figure 2.

Clinical tension.

The resolution trigger consists of saying to oneself: “Stop asking or exploring the patient, I know the diagnosis or what to do.” Sometimes the physician needs more time to achieve a diagnosis, but the tension of not knowing what to do is so great that the physician reaches critical tension, and the resolution trigger is activated. At this point the physician can accept as a good diagnosis an early hypothesis that does not fit well with the case.
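The resolution trigger can be read as a threshold rule. The toy simulation below is a sketch under strong assumptions: tension is treated as a number that grows by a fixed amount with each minute of unresolved uncertainty, and `tolerance` stands for the physician's capacity to bear that tension on a given day. The function name, the parameters, and all numeric values are hypothetical and carry no empirical weight.

```python
def simulate_encounter(tension_per_minute: float,
                       tolerance: float,
                       minutes_needed: int) -> tuple[int, bool]:
    """Return (minute of closure, whether the closure was premature).

    Assumption: the resolution trigger fires as soon as accumulated
    tension reaches the physician's tolerance (the critical tension).
    """
    tension = 0.0
    for minute in range(1, minutes_needed + 1):
        tension += tension_per_minute
        if tension >= tolerance and minute < minutes_needed:
            # Critical tension reached before the work-up is complete.
            return minute, True
    # The physician tolerated the uncertainty for as long as was needed.
    return minutes_needed, False


# Same encounter (12 minutes needed), different tolerance on a bad vs a good day.
print(simulate_encounter(1.0, tolerance=5.0, minutes_needed=12))   # (5, True)
print(simulate_encounter(1.0, tolerance=20.0, minutes_needed=12))  # (12, False)
```

In this reading, a good day and a bad day differ only in `tolerance`; the clinical demand is identical.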

Table 1.

Some Restrictive Factors Interfering With Professional Performance

| Excessive or Lack of Emotional Arousal | Excessive or Lack of Hedonic Tone |
| --- | --- |
| Fatigue | Patient hostility (especially when indirectly expressed) |
| Poor clinical skills | The professional’s feelings of rejection or hostility toward the patient (especially when unrecognized) |
| Transient cognitive problems (for example, sleep disturbances, alcohol consumption, etc) | The clinician has a somatic discomfort |
| Lack of motivation | Creating more work if a certain hypothesis is confirmed |
| Urgency to finish | |
| Overwhelming clinical workload, “excessive workload” | |

Case 3.

Dr. X is tired at the end of his work shift. He attends a 64-year-old patient complaining of chest pain. Diagnosing musculoskeletal chest pain, he prescribes an analgesic. Shortly thereafter a colleague asks his opinion about another patient the colleague is “not sure about.” Dr. X. overcomes his fatigue and carries out a complete interview, physical examination, and electrocardiogram in the presence of his colleague and a junior trainee. Once home, Dr. X asks himself whether the first patient did not also deserve these same tests and why he treated the 2 cases differently.

This case illustrates the concept of the level of cognitive activation. This activation level can depend upon attitude. Fatigue, perceptions of urgency or danger to the patient, or even physicians’ perceptions that they might lose professional prestige, among other factors, can raise or lower levels of cognitive activation.25 In this example, in the solitude of the first visit, the physician could permit himself to be minimally activated and allow himself to be beaten by fatigue. In the second visit, during which the physician had to demonstrate his skill in front of his colleague and was forewarned by the colleague’s doubt (“I’m not sure about this patient”), the physician’s activation level increased. If the physician were able to learn from this situation, he could conclude, “When I’m tired, I might imagine that I am presenting a case to a respected colleague to avoid a premature closure.”

Unlike a computer, a human being self-regulates the amount and type of attention paid to the environment and manages the energy this effort requires.26 The preceding examples show a lack of regulation on the part of both physicians. A human being can function in a spectrum that ranges from very low arousal, almost bordering somnolence, to a state of hyperexcitation, with associated feelings ranging from pleasure to frank discomfort. Apter’s reversal theory27 describes how a change in the intensity of a stimulus can change the quality of an emotion, going from a state of enjoyment to a state of displeasure, or from cooperative emotions to emotions of competition. For example, a patient asking the same question 2 or more times can change a physician’s reaction from interest to irritation.

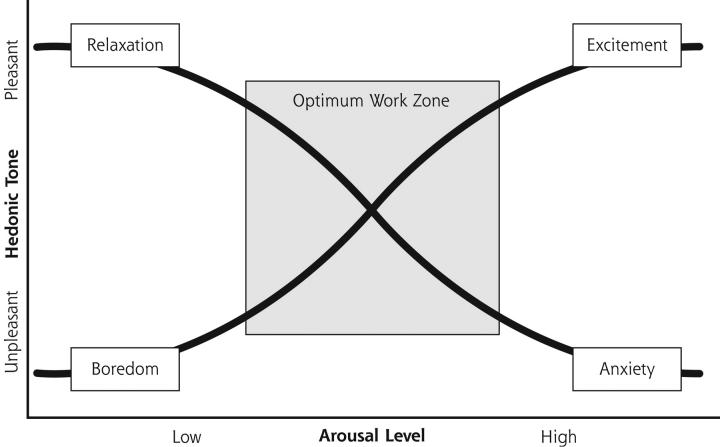

Apter’s theory also predicts that physicians have an ideal cognitive activation zone (Figure 3) to open a library of decision rules in which are stored the memories of similar clinical experiences that mark their presentation at a certain moment. Apter conceptualizes 2 axes: arousal and pleasure. Along the axis of arousal, for instance, the physician can be affected by physical fatigue, boredom, or anxiety. Along the axis of pleasure, for example, if the physician is flooded by emotions of irritation or hostility, the physician will be tempted to shorten the encounter. To close the clinical encounter, however, the physician always needs a cognitive alibi, such as, “this patient exaggerates his or her symptoms,” or “he or she has the same thing I diagnosed last week.”

Figure 3.

Apter’s model of emotional reversal theory.

The optimum work zone avoids extreme values in arousal and hedonic (pleasure) tone. Extreme positions make cognitive processes difficult.
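The idea of an optimum work zone on 2 axes can be sketched as a simple range check. The scaling is an assumption made only for illustration: both arousal and hedonic tone are placed on a scale from -1 (extreme lack) to +1 (extreme excess), and the zone boundary `limit` is an arbitrary value, not one taken from Apter's theory or from the figure.

```python
def in_optimum_zone(arousal: float, hedonic_tone: float, limit: float = 0.5) -> bool:
    """Return True when neither axis takes an extreme value.

    Hypothetical scale: -1.0 (extreme lack) to +1.0 (extreme excess).
    `limit` is an illustrative boundary for the optimum work zone.
    """
    for value in (arousal, hedonic_tone):
        if not -1.0 <= value <= 1.0:
            raise ValueError("values must lie between -1.0 and 1.0")
    return abs(arousal) <= limit and abs(hedonic_tone) <= limit


# A rested, mildly engaged physician.
print(in_optimum_zone(arousal=0.1, hedonic_tone=0.2))   # True
# A fatigued physician at the end of a shift (very low arousal).
print(in_optimum_zone(arousal=-0.8, hedonic_tone=0.0))  # False
# A physician flooded by irritation toward the patient (very low hedonic tone).
print(in_optimum_zone(arousal=0.2, hedonic_tone=-0.9))  # False
```

The check treats excess and lack symmetrically, matching the caption's point that extreme positions in either direction make cognitive processes difficult.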

Low-Level Decisions Rules as a Strategy to Close the Encounter

Case 4.

A 52-year-old woman presented with colicky hypogastric pain and reported a history of nephrolithiasis. The physical examination showed no tenderness anywhere but in the hypogastrium. A quick urinalysis showed no hematuria. The physician diagnosed renal colic. A few hours later the patient returned and was found to have ovarian torsion.

In this case the physician formulated a simple preliminary hypothesis, “She is having a recurrence of her renal colic,” and applied a basic decision rule looking for colicky pain, hematuria, and costovertebral tenderness. Finding only the first item, the physician ignored the disconfirming data because, “If it’s not renal colic, I can’t imagine what it could be, so...it must be!” Perhaps the physician believed that admitting uncertainty would be humiliating in front of colleagues, or perhaps the effort to consider other possibilities would make the physician more irritable or unsure of the diagnosis.

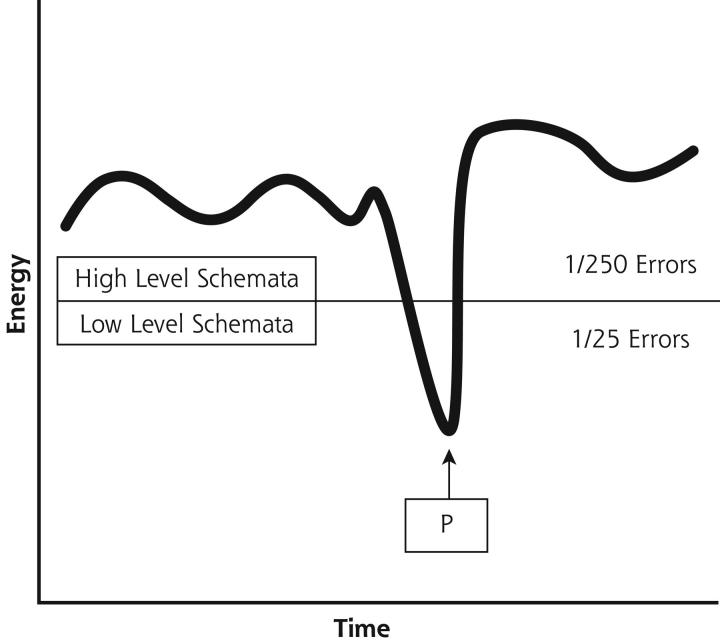

Figure 4 shows a physician who is able to apply high-level decision rules most of the time. Examples would be, “to allow a diagnosis of nephrolithiasis, the pain should be colicky and it should almost always be accompanied by hematuria,” or “when there is also rebound tenderness, I should carry out a rectal or vaginal examination.” These complex, elaborated decision rules might lead to a low rate of error, perhaps 1 in 250. Sometimes, though, for reasons of fatigue, cognitive overload, or fear of loss of self-esteem, the physician might substitute lower level decision rules and not recognize that they are inadequate to the situation.

Figure 4.

Low- or high-level schemata in use.

Observe that at a point in time (P) the physician applies lower level schemata, increasing the rate of errors. When this happens, the physician needs a cognitive alibi, such as “I am very tired,” “this patient is exaggerating,” etc.

In the above example, the physician’s internal dialogue might be articulated as, “In a patient with colicky lumbar pain and a history of renal colic, I can diagnose nephrolithiasis and proceed to give her analgesics.” It is not uncommon for such simple solutions to the diagnosis of renal colic to be acquired during medical school. Should the solution emerge solely as a way to justify a premature closure of the encounter, however, this otherwise seemingly useful shortcut might lead to a higher rate of error, perhaps 1 clinical error in 25 patients (these rates are merely conjectures). Despite being an experienced doctor, the physician was unable to counterbalance some of the factors that are summarized in Table 1. Maybe, at a certain point, the physician sensed that the clinical performance was suboptimal and activated a cognitive alibi, such as “the most common is the most frequent,” “I can assume this risk,” or “this patient exaggerates her symptoms.” This cognitive alibi allows the physician to resolve the situation with less effort; at the same time it puts the physician at risk of making avoidable errors that are out of keeping with the high level of competence shown in other circumstances. This tendency can be counteracted by acquiring and reinforcing the habits of mind and behavior described below. Other cognitive alibis, and more appropriate thoughts to substitute for them, are summarized in Table 3.
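To make the arithmetic behind these conjectured rates concrete, the sketch below computes the expected number of errors when a given share of encounters is handled with low-level decision rules. The rates of 1 in 250 and 1 in 25 are the text's own conjectures; the function name, the patient count of 1,000, and the 10% share are assumptions chosen only for illustration.

```python
from fractions import Fraction

# Conjectural error rates quoted in the text (not measured values).
HIGH_LEVEL_ERROR_RATE = Fraction(1, 250)
LOW_LEVEL_ERROR_RATE = Fraction(1, 25)


def expected_errors(n_patients: int, low_level_share: Fraction) -> Fraction:
    """Expected errors when a share of encounters uses low-level rules.

    low_level_share is a hypothetical parameter between 0 and 1.
    """
    if not 0 <= low_level_share <= 1:
        raise ValueError("low_level_share must be between 0 and 1")
    blended_rate = ((1 - low_level_share) * HIGH_LEVEL_ERROR_RATE
                    + low_level_share * LOW_LEVEL_ERROR_RATE)
    return n_patients * blended_rate


print(float(expected_errors(1000, Fraction(0))))      # 4.0
print(float(expected_errors(1000, Fraction(1, 10))))  # 7.6
print(float(expected_errors(1000, Fraction(1))))      # 40.0
```

Under these assumed figures, slipping into low-level rules in only 1 encounter in 10 would nearly double the expected number of errors, because the conjectured low-level rate is 10 times the high-level rate.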

Table 2.

Low-Level and High-Level Decision Rules

| Low-Level Decision Rules | High-Level Decision Rules |
| --- | --- |
| Learned in basic stages of apprenticeship | Learned from experience |
| Errors experienced not incorporated | Errors experienced incorporated |
| Tacit knowledge not reconsidered | Tacit knowledge conscientiously revisited |

Implications of the Model

The 2 practical implications of the model we propose are that excellent physicians (1) use their capacity for insight to detect when they are at risk for cognitive distortion, premature closure, or use of low-level decision rules; and (2) detect moments when it is necessary to reframe the visit. So, as part of basic clinical training, a physician should assimilate as habits the following cognitive, emotional, and behavioral skills.

First, there are tools to help physicians learn to detect states of low cognitive activation or emotional saturation, which lead to not giving importance to (or even rejecting) the demands or complaints of patients. For example, we invite physicians to detect physical and psychological signs of fatigue (such as deterioration in handwriting, tremor, headache, forgetfulness, irritability, and inattentiveness), which supports better control over the environment and a systems-wide strategy of intelligent time assignment.

Second, physician training can focus on learning to reframe with minimal effort, although doing so might entail a delay in the resolution of the clinical task. Teaching methods should subject the student to a series of simulated situations in which he or she must detect whether the need to reframe an early hypothesis exists. This proposal goes beyond the suggestions of Barrows and Kassirer’s iterative model,28 in that we suggest provoking situations in which the physician must reopen the encounter that had initially been closed and, in the process, analyze the emotional resistance to reframing.

Third, physicians should develop methods to recognize cognitive alibis and substitute more appropriate explanations for their behavior, incorporating new clinical decision rules based on their own and on their colleagues’ errors (Table 3). These decision rules must be formulated simply, clearly, and in relation to concrete clinical situations. To this end, the student should learn to (1) recognize situations in which an error would have been committed, (2) examine why an error would have been committed, and (3) formulate high-level decision rules to correct this error. This process requires curiosity29 and development of tolerance to the ambiguity of “not-knowing” as a task of professional development.

Table 3.

Examples of Low-Level vs High-Level Schemata

| Low-Level Schemata | High-Level Schemata |
| --- | --- |
| I’ve got it! As soon as the patient told me, I knew what he had. | I should look beyond early hypotheses. |
| If the patient is satisfied with the diagnosis of another physician, why should I bother to find out more data? | I should always form my own criteria. |
| When in doubt, choose the simplest or most convenient hypothesis. | When in doubt, assume the worst hypothesis. |
| Complains a lot? He doesn’t have anything! | I must take a fresh look, perhaps by recording what the patient expresses and later reading it back, paying attention to the diagnosis that spontaneously comes to mind. |

The didactic proposal we make incorporates, among others, the following skills. First, the physician should cultivate the use of high-level decision rules. The physician learns to distinguish between decision rules that require little effort for their implementation (low-level) and those that require more energy (high-level decision rules). Through simulations, he or she learns to use the more appropriate decision rules (Table 2) and to identify when cognitive alibis are being used to support a low-level decision rule (Table 3). For instance, a physician can be trained to question a cognitive alibi, such as “If the patient is satisfied with the diagnosis of another physician, why should I bother to find out more data?” and replace it with “I should always form my own impressions.”

Second, the physician should foster the habit of self-questioning. We believe there are 2 ways to learn from errors. The situation-specific strategy consists of learning to regard any risk situation as a red flag. In the example of colicky abdominal pain, future patients with typical pain but without hematuria will receive a more tentative diagnosis of renal colic. A physician can also learn in a more general way, however, by detecting when low-level decision rules are appearing, or when he or she is closing the encounter before the basic information is acquired. Physicians who are able to ask themselves, “Did I get enough verbal or physical data?” and “Am I persisting in my first decision because of tiredness or self-esteem?” are acquiring a general skill, which we call reframing training, that can be applied to both emotions and cognitions.

Habitual use of 2 or 3 questions from Table 4 can become routine quality-control points. The questions should reflect the individual characteristics and needs of each physician. Often, the physician is aware that some type of habit of self-questioning is already in use, in which case, his or her performance must simply be optimized.

Table 4.

Habits of Self-Questioning: Reflective Questions

- How might my previous experience affect my actions with this patient?
- What am I assuming about this patient that might not be true?
- What surprised me about this patient? How did I respond?
- What interfered with my ability to observe, be attentive, or be respectful with this patient?
- How could I be more present with and available to this patient?
- Were there any points at which I wanted to end the visit prematurely?
- If there were relevant data that I ignored, what might they be?
- What would a trusted peer say about the way I managed this situation?
- Were there any points at which I felt judgmental about the patient, in a positive or negative way?

Third, the physician should work on subjectivity, specifically on intuitive clinical impressions. In this sense, we teach physicians to distinguish between intuitive (or analogical) thinking and criterion-based (or categorical) thinking. The general impression of serious illness when a patient is pale and has lost weight is of the first type, while prescribing a radiological study for all patients with unexplained cough lasting more than 1 month is of the second type. The skill of the physician often depends on the skill in switching from one type of thinking to the other, recognizing the limits of both and knowing the extent to which analogical and categorical thinking can complement each other. We have developed a series of techniques to bring the physician closer to this learning process.

CONCLUSION

The rational-emotive model of the clinical act helps physicians become aware of their early hypotheses and how they frame the clinical encounter. It proposes creating habits, especially the habit of reframing. It also invites an application of cognitive and emotional strategies to correct avoidance behavior, which would otherwise lead to precipitous closing of the clinical encounter. We advocate the need to teach future physicians not only the use of hard technologies but also the use of the technology derived from their own emotional and cognitive capacities.

Acknowledgments

We would like to thank Drs. Fred Platt, R. Barker, J. Cebrià, and V. Ortún for their invaluable suggestions.

Conflict of interest: none reported

Funding support: This work was supported by the Fundació Jordi Gol i Gurina.

REFERENCES

- 1. Sackett DL, Haynes RB, Tugwell P. Clinical Epidemiology: A Basic Science for Clinical Medicine. Boston, Mass: Little, Brown & Co; 1985.

- 2. Dowie J, Elstein A, eds. Professional Judgement. A Reader in Clinical Decision Making. Cambridge, UK: Cambridge University Press; 1994.

- 3. Sternberg RJ, ed. Thinking and Problem Solving. New York, NY: Academic Press; 1994.

- 4. Localio AR, Lawthers AG, Brennan TA, et al. Relation between malpractice claims and adverse events due to negligence: results of the Harvard Medical Practice Study III. N Engl J Med. 1991;325:245–251.

- 5. Kohn LT, Corrigan JM, Donaldson MS. Institute of Medicine. Committee on Quality of Health Care in America. To Err is Human. Building a Safer Health System. Washington, DC: National Academy Press; 2000.

- 6. Advisory Commission on Consumer Protection and Quality in the Health Care Industry. Quality first: better health care for all Americans. 1998. Available at: http://www.hcqualitycommission.gov/final/.

- 7. Leape LL, Woods DD, Hatlie MJ, et al. Promoting patient safety and preventing medical error. JAMA. 1998;280:1444–1447.

- 8. Sentinel Event Alert. The Joint Commission on Accreditation of Healthcare Organizations, Oakbrook Terrace, Ill: JCAHO, 1998.

- 9. Ely JW, Levinson W, Elder N, Mainous AG, Vinson DC. Perceived causes of family physicians’ errors. J Fam Pract. 1995;40:337–344.

- 10. Dimsdale JE. Delays and slips in medical diagnosis. Perspect Biol Med. 1984;27:213–220.

- 11. Novack DH, Suchman AL, Clark W, Epstein R, Najberg E, Kaplan C. Calibrating the physician: personal awareness and effective patient care. JAMA. 1997;278:502–509.

- 12. Epstein RM. Mindful practice. JAMA. 1999;282:833–839.

- 13. Reason J. Human Error. Cambridge, UK: Cambridge University Press; 1990.

- 14. Borrell F. Entrevista Clínica. Barcelona: Doyma-Mosby; 1989, 2003.

- 15. Epstein RM. Mindful practice in action (I): technical competence, evidence-based medicine and relationship-centered care. Fam Syst Health. 2003;21:1–9.

- 16. Epstein RM. Mindful practice in action (II): cultivating habits of mind. Fam Syst Health. 2003;21:11–17.

- 17. Bordage G. Elaborated knowledge: a key to successful diagnostic thinking. Acad Med. 1994;69:883–885.

- 18. Bordage G, Zacks R. The structure of medical knowledge in the memories of medical students and general practitioners: categories and prototypes. Med Educ. 1984;18:406–416.

- 19. Friedman MH, Connell KJ, Olthoff AJ, Sinacore JM, Bordage G. Medical student errors in making a diagnosis. Acad Med. 1998;73:S19–S21.

- 20. Byrne PS, Long BEL. Doctors Talking to Patients. Exeter: Royal College of General Practitioners; 1984.

- 21. Newell A, Simon HA. Human Problem Solving. Englewood Cliffs, NJ: Prentice-Hall; 1972.

- 22. Beach LR. Image Theory: Decision-making in Personal and Organizational Contexts. Chichester, UK: Wiley; 1990.

- 23. Damasio AR. Descartes’ Error: Emotion, Reason, and the Human Brain. New York, NY: Avon Hearst; 1995.

- 24. Damasio AR. The Feeling of What Happens: Body and Emotion in the Making of Consciousness. New York, NY: Harcourt Brace; 1999.

- 25. Leahey TH, Harris RJ. Learning and Cognition. Englewood Cliffs, NJ: Prentice Hall Inc; 1997.

- 26. Dreyfus HL, Dreyfus SE. Mind Over Machine. New York, NY: Free Press; 1986.

- 27. Apter MJ. Reversal Theory: Motivation, Emotion and Personality. London: Routledge; 1989.

- 28. Kassirer J, Kopelman RI. Learning Clinical Reasoning. Baltimore, Md: Williams and Wilkins; 1991.

- 29. Fitzgerald F. Curiosity. Minn Med. 1999;82:10–12.