During their years of formal training, medical students and residents are exposed to intensive teaching experiences designed specifically to produce clinicians whose skills reflect current best knowledge and practice. But despite a good deal of lip service from faculty during those years about the importance of “lifelong learning,” these same students and residents receive virtually no meaningful guidance on keeping up-to-date after they finish medical school and residency.

Once in practice, therefore, practitioners are essentially on their own as they face the serious challenge of keeping their knowledge, skills, and performance up-to-date, and up to consistently high standards, in the midst of an increasingly hectic professional life. They discover, moreover, that in contrast to the highly structured environment of medical school and residency—with their tightly controlled standards, defined curricula and abundant faculty, supervisors, and mentors—the opportunities and mechanisms for keeping up-to-date in practice are many and varied, ranging from formal, faculty-driven programs, which are what is generally meant by the term “continuing medical education” (CME), to informal, open-ended, and learner-driven ones.1 The informal variety, which is not usually even thought of as CME, includes a wide spectrum of important professional activities, such as reading journals and textbooks, the use of consultants, practice audit, and participation in continuous quality-improvement activities, in the development and use of practice guidelines, in teaching, and in the experience of practice itself.

The sheer logistical challenge to clinicians of keeping up-to-date is therefore compounded by the varied and confusing array of potentially “educational” activities. It is just plain hard to know what is educational, in either a theoretical or practical sense, which makes it difficult to know where to invest precious educational time, not to mention mental and physical effort, and money. The discussion that follows tries to bring some coherence to this confusing state of affairs and provide some guidance in making choices in this important area of professionalism.

THE TWO-COMPONENT CME SYSTEM

The formal CME system, which operates under standards created and enforced by the Accreditation Council for Continuing Medical Education (ACCME), is characterized by: centralized control; standards that focus on educational process rather than medical outcomes; a time-based system of educational credits; legislative and organizational ties to licensing and credentialling; the use of a passive, knowledge-update educational model; substantial expense in terms of both dollars and time; and major connections to industry.2

Typically, formal CME consists of lecture courses ranging from a single hour to several days, a format Philip Nowlen has described in the following melancholy terms3:

A single instructor lectures and lectures and lectures fairly large groups of . . . people who sit for long hours in an audiovisual twilight, making never-to-be-read notes at rows of narrow tables covered with green baize and appointed with fat binders and sweating pitchers of ice-water.

Such courses are based, ideally, on learners’ needs for clinical knowledge but, in fact, often are driven at least as much by faculty interests and expertise. In return for their attendance, course participants receive so-called category 1 CME credit on an hour-for-hour basis. Category 1 credit, in turn, is defined as “documentable and sponsor-verifiable” educational activity, and is generally produced by organizations whose CME activities are developed in accordance with the ACCME's seven educational “essentials.” Aside from the narrow focus on knowledge in such courses, the preoccupation here is obviously with control and public accountability, rather than with learning or improved clinical effectiveness per se. Indeed, in the words of the American Medical Association's Dr. Dennis Wentz, category 1 credit dominates the U.S. CME system because it produces “. . . what bureaucrats like: evidence of an attendance slip!”2

The informal system is “everything else,” including all the many activities—journal and textbook reading, etc.—noted above. The informal system is characterized by: local, distributed control that rests principally with individual physicians and health care organizations; no consistent or national criteria for educational credit; no formal requirement in connection with licensing or credentialling (at least not yet); an active, learner-centered, learner-driven educational model; lack of definition of costs in time and dollars; and (so far) limited connection with industry.

The open-ended quality of the informal CME system makes it more difficult to characterize, but in at least some instances, participation in informal CME provides so-called category 2 credit, which is awarded for “education verified by the physician-participant.” (Recent changes in the rules have made it possible to receive category 1 credit for journal reading, but only under strictly controlled conditions, as when a journal publishes a print version of a defined education program.) Category 2 credit carries less official, regulatory weight for relicensing and credentialling than category 1 credit, which is somewhat paradoxical because, as noted below, the evidence for the educational effectiveness of the passive, knowledge-update programs typically associated with category 1 credit is less strong than it is for many of the activities associated with category 2 credit. A further (administrative) paradox is that CME credits are defined by one of ACCME's parent organizations, the American Medical Association, rather than by the ACCME. The resultant separation of control of the CME credit system from CME accreditation itself unfortunately adds confusion and inefficiency to an already overburdened and heavily bureaucratic process.

DOES FORMAL CME WORK?

Formal CME in the United States is an enormous undertaking, absorbing hundreds of thousands of faculty and learner hours, and hundreds of millions of dollars, each year. An investment on this scale implies that the value of CME is well established, and that physicians and CME providers alike clearly must also believe this is so. Unfortunately, earlier efforts to study the effectiveness of CME in a serious way—narrowly defined as improvement in patient outcomes, but also improvement in clinical practices, or even increased physician knowledge—did not demonstrate convincingly that CME in the traditional “predisposing” mode, i.e., that focused on communicating or disseminating information, was, in fact, effective at any level.4

With time, however, and with improvements in the methodology for measuring educational effectiveness, it has become clear that formal CME can and does work, even at the strictly clinical level.5 Measurable effectiveness is largely limited, however, to programs that go beyond predisposing activities (i.e., knowledge update), and that include “enabling” strategies (e.g., on-site interventions within practices that support or facilitate changes and improvements in clinical care—for example, patient education activities or so-called academic detailing), “reinforcing” strategies (e.g., reminders and feedback), or, most impressively, multifaceted interventions that combine all three, i.e., predisposing, enabling, and reinforcing strategies.

As to the effectiveness of informal CME, the evidence, although much more limited, seems to indicate a significant impact on clinical practice, at least for activities like small-group, practice-based learning, searching the medical literature for answers to clinical questions, and practice audit.1 These observations are congruent with those of Philip Nowlen, whose detailed, scholarly studies of the effectiveness of continuing education extend across a wide range of disciplines, both inside medicine and outside it.3 In brief, Nowlen has also found that education in the knowledge-update mode (the equivalent of predisposing education) is the least likely to lead to meaningful changes in professional practice. Programs that go beyond knowledge acquisition and come to grips with skills (Nowlen's “competence” mode) have a considerably greater chance of improving professional work. (Examples in medicine would include interactive programs designed to improve interviewing skills, or self-assessment programs that present diagnostic challenges.)

Even professionals who are highly competent do not, however, always deal consistently well with the complex, tangled medical problems or “messes” they face in daily practice. Impediments to high-quality practice often arise because either the complex organizations in which these professionals work fail to operate smoothly or efficiently, or the interaction between competent professionals and good systems fails to work well. Education programs that assess the needs of both the professionals and the systems in which they work, and that deal with malfunctions at this higher, more complex level of organization (Nowlen's “performance” mode), are more difficult to develop, hence harder to find, but represent the most sophisticated, and potentially the most effective, of educational approaches. If performance-mode education sounds familiar, it is because it shares many of the elements of continuous quality improvement, the increasingly powerful approach to managing messes that has evolved outside, and in parallel with, continuing education over the past several decades.6

A BROADER VIEW OF KEEPING UP-TO-DATE

Clinicians who take seriously their obligation to keep up-to-date thus face a paradox. On the one hand, the traditional system of formal CME seems to make little difference to actual clinical practice, although it does appear to satisfy certain administrative requirements for public accountability, as well as certain other social and professional needs described below. On the other hand, most physicians can and do grow, progress, develop, and keep up-to-date to a greater or lesser degree during their practice years. They learn about new diseases; they learn to use new diagnostic and therapeutic approaches; and they learn to use old ones better. How they really do so is still somewhat of a mystery,7 but, taken together, the evidence suggests that this occurs through one or another version of “experiential learning.”2,8,9 The phenomenon of physicians’ continued growth and change over time is, thus, quite distinct from the process of formal CME, and for that reason is sometimes referred to by the broader and more meaningful term “continuing professional development.”

Experiential learning in medicine, as the name implies (and as William Osler pointed out many decades ago), differs fundamentally from classroom learning in that it starts and ends with the experience of patient care. Thus, the experiential learning cycle requires that clinicians, first, continue to involve themselves fully, openly, and without bias in new clinical experiences. But experience alone does not make clinical education. To lead to professional growth, it must be linked to observation and reflection on these experiences: a process of defining the problems and formulating the pertinent questions about what has occurred in the course of practice. This, in turn, makes it possible to search out, explore, and understand the principles and patterns, the concepts and constructs—in brief, the explanatory theories—that underlie clinical problems. Finally, these patterns, principles, and theories are then used to support decisions and help solve the next set of unfamiliar problems. Thus, reflection, exploration, and understanding are crucial, but by themselves also do not make clinical education; to be effective they, in turn, must be linked back to experience and the solving of new problems.

The appropriate model for the teacher in experiential learning, then, is the coach rather than the lecturer9; the appropriate unit of credit is the unit of learning, not the unit of teaching.2

SOME PRACTICAL IDEAS

Given the concerns about the lack of clinical effectiveness of formal CME, it is gratifying to know that at least a few serious efforts are being made to move the state of the art of continuing professional development beyond formal CME, as follows.

Individual Learning Programs

There is now, for example, some experience with learning programs that are tailored to individual learning needs, both in the United States10 and abroad (particularly the United Kingdom and Australia). These involve detailed assessment of learning needs, work with peer-mentors, the design of learning plans, and periodic reassessment of progress. Although these programs seem to work, they are highly labor intensive and require major institutional support to be sustained. David Sackett and his colleagues have given a great deal of thought to the techniques of keeping up-to-date clinically, and have shared their experience with methods for reviewing one's own performance, tracking down evidence, choosing journals to read, surveying the medical literature, critically appraising articles, and the like.11 Then there are a variety of self-assessment instruments such as the American College of Physicians’ Medical Knowledge Self-Assessment Program (MKSAP), specifically designed to help physicians evaluate the state of their own knowledge and teach themselves. (MKSAP is one of a relatively limited number of print programs that provide category 1 credit; two different electronic versions of MKSAP are also now available.) And now, of course, specialty board recertification provides added impetus for all this effort.

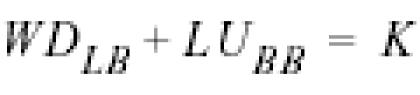

Equally important, however, is the model of continuing professional development now being developed and used in Canada under the auspices of its Royal College of Physicians and Surgeons, the so-called Maintenance of Competence Program, or MOCOMP.2 The program incorporates a creative approach to experiential learning much like the one promulgated years ago by Dr. David Seegal, a member of the clinical faculty at Columbia University's College of Physicians and Surgeons, in his mysterious formula:

This translates into the instruction to “Write it down in the little book, then look it up in the big book, which leads to increase in your knowledge.” The “it,” of course, refers to anything you have seen or heard in the course of working with patients (experience) that you haven’t understood. The “little book” is any place you might keep written notes and reminders (observation and reflection, problem and question formulation) where they won’t get lost or mixed up; nowadays, of course, this might be a palmtop computer. The “big book” might be a text, but now would also include the whole universe of print and electronic information sources (containing the patterns, principles, and theories). In the MOCOMP system, clinicians use an electronic log system to record both their questions and the information they have obtained in response to those questions, along with the impact, if any, on their practices. To receive educational credit, physicians then submit their logs from time to time to the Royal College of Physicians and Surgeons.

Journal Reading

How do other educational activities such as regular reading of journals fit into the picture? Even though category 1 CME credit is not generally available for medical journal reading, medical journals (covered in another section of this supplement) are well established as an important source of continuing education for physicians, at least at the level of knowledge development. Particularly important for using the medical journal literature in today's professional environment are critical appraisal skills for selecting, filtering, and evaluating published information,11 skills that now include an understanding of the nature and role of systematic reviews12 and evidence-based medicine.13

Live CME Courses

Despite the lack of evidence that CME courses, by themselves, produce measurable change in clinical practices, formal courses do have a place in continuing professional development. At the least, they are a relatively efficient way to identify information that will serve as a context and guide for clinical decision making; they very likely also provide opportunities for reflection on practice experiences and identification of constructs and theories, important elements in the experiential learning cycle. In these courses the choice of material and its synthesis by faculty are critical. Unlike medical journal publishing or guideline development, however, such courses lack a process analogous to peer review, so biases—conscious and unconscious, personal and professional, intellectual and financial—are more likely to creep in. In choosing formal CME courses, therefore, it is particularly important to be on the alert for potential sources of bias: e.g., faculty whose points of view are narrowly limited to particular schools of thought, institutions, or geographic areas; substantial industry control of content; inadequate time to ask questions or engage in discussion; and the like.

An example of a specific, highly successful CME course illustrates a number of other subtle but pragmatic issues that are often overlooked but can make a major difference in the usefulness of a course. The “board review” course in emergency medicine presented annually over a number of years at a major U.S. academic medical center is such an example. The course is scheduled reasonably close to the examination, but far enough in advance so that participants have time to absorb the material and fill in the gaps in their knowledge identified during the course. Tuition is substantial, but this can be viewed as reflecting the absence of industry support. In planning each year's course, its directors make extensive use of feedback on the previous year's course, obtained through detailed questionnaires that are handed out and collected at the end of each day's sessions. Then, several weeks before the new course, registrants receive a brief questionnaire asking what topics and issues they are most interested in learning about. The course directors and faculty plan the curriculum with enough flexibility so that they can adjust their presentations somewhat, based on this “just-in-time” educational needs assessment. Most of the faculty are experienced emergency room clinicians from the faculty of the host institution, but a number of outstanding teachers from around the country are included as well, which provides a broader, national perspective.

The course begins with some highly pragmatic sessions on things registrants need and want to know about board examinations, such as the general nature of the board examination, strategies for answering questions, and the like. The bulk of the course then consists of systematic, intense, clinically oriented lectures and discussions on emergency medicine, based on a comprehensive curriculum developed by a national emergency medicine body. Lectures are supplemented with optional (and well-attended) sessions that give participants access to individual, interactive computer-based tutorials and multiple-choice question exercises. After the course, the directors follow up with participants (on a voluntary basis), to learn how they performed on the examinations; the overall performance rates are then used in considering future modifications of the program. In short, the directors of this course care: they provide what participants both want and need; they pay attention to details; they use their experience with each year's course to improve the next year’s; they are organized and systematic in their approach to content; and they use a mixture of educational approaches designed to reinforce one another.

Several other points are worth considering in selecting live CME courses and attending them. First, as knowledge-update courses are by themselves not very useful in improving practice, it also makes sense, at least in principle, to look for programs that are not limited to knowledge transfer but are in one way or another explicitly linked to “enablers” or “reinforcers”; such courses are, unfortunately, still rare. Second, application of the “commitment for change” technique may also help in getting the most out of formal knowledge-update CME courses.2 This technique consists simply of going into each portion of a CME program with the explicit intent of identifying those few items of information that are sufficiently compelling to warrant committing yourself to further action, e.g., to learn more, to discard a previous practice, to adopt a new one. At the end of each teaching session, it is then important to write down those commitments, keeping the list short and as specific as possible. Then, at a point long enough after the program to have had the opportunity to make the appropriate changes, it makes sense to go back over the list to see which changes you actually made, which you did not, and if not, why not.

Finally, formal CME courses contribute to professional life in important ways that are generally not even considered in educational research. That is, a major reason why clinicians attend such courses is to receive reassurance that their current practices still measure up to shared professional standards, i.e., to know that no important new developments have taken place. Much of the value of these courses, therefore, seems to come through reinforcing practice, rather than promoting change. Moreover, live courses give clinicians the opportunity to connect with their peers, share their concerns and problems, their satisfactions and achievements, thus strengthening their links to the profession and their identity as medical professionals. Even at its scientific best, medicine is always a social act; accordingly, these “social” functions of CME courses must be taken seriously.

SUMMING UP

On the face of it, continuing professional development is relatively straightforward, consisting largely of a particular set of attitudes and some simple techniques of learning. Looked at this way, all that is required is the willingness to take on new experiences, a reflective state of mind, the skills and tools for finding, absorbing, and understanding new conceptual frameworks and explanatory principles, and the confidence to leave existing practices for new ones. Of course, as with many things that seem simple, the underlying reality is much more complex. The attitudes are neither easy to develop nor easy to maintain. Many of the techniques are actually very sophisticated and difficult to learn. And as the years go by, the pressures of practice leave less and less time and energy for this dimension of professional life.

In confronting these more complex realities, it may help to remember that all physicians struggle, more or less successfully, with the issue of professional development. One reason learning to do things better is difficult is that it implies the way you’ve done them in the past may not have been so good; and while this is a definite threat to one's self-esteem, facing up to this challenge is a mark of true professionalism. It may also help to keep in mind that continuing professional development does not, and should not, occur in a vacuum: learning, and keeping up-to-date and up to the mark, is intrinsically a collective process that strengthens the ties of individual clinicians to the profession as a whole. And, finally, it may help to remember that medicine is not unique in these struggles. Indeed, the need for continuing self-improvement is the one element that is consistently seen as being central to professionalism across all disciplines.3

REFERENCES

- 1. Davidoff F. CME in the U.S. Postgrad Med J. 1995;72:536–8.

- 2. Davidoff F. Who Has Seen a Blood Sugar? Reflections on Medical Education. Philadelphia, Pa: American College of Physicians; 1996.

- 3. Nowlen PM. A New Approach to Continuing Education in Business and the Professions. New York, NY: Collier Macmillan; 1988.

- 4. Davis DA, Haynes RB, Chambers L, Neufeld VR, McKibbon A, Tugwell P. The impact of CME: a methodological review of continuing medical education literature. Evaluation Health Professions. 1984;7:251–83.

- 5. Davis DA, Thomson MA, Oxman AD, Haynes RB. Changing physician performance. A systematic review of continuing medical education strategies. JAMA. 1995;274:700–5. doi: 10.1001/jama.274.9.700.

- 6. Berwick DM. Harvesting knowledge from improvement. JAMA. 1996;275:877–8.

- 7. Fox RD, Mazmanian PE, Putnam RW. Changing and Learning in the Lives of Physicians. New York, NY: Praeger; 1989.

- 8. Kolb DA. Experiential Learning: Experience as the Source of Learning and Development. Englewood Cliffs, NJ: PTR Prentice Hall; 1984.

- 9. Schön D. Educating the Reflective Practitioner: Toward a New Design for Teaching and Learning in the Professions. San Francisco, Calif: Jossey-Bass; 1988.

- 10. Manning PR, Clintworth WA, Sinopoli LM, Taylor JP, Krochalk PC, Gilman NJ. A method of self-directed learning in continuing medical education with implications for recertification. Ann Intern Med. 1989;107:909–13. doi: 10.7326/0003-4819-107-6-909.

- 11. Sackett DL, Haynes RB, Guyatt GH, Tugwell P. Clinical Epidemiology: A Basic Science for Clinical Medicine. 2nd ed. Boston, Mass: Little, Brown, and Co; 1991.

- 12. Chalmers I. The Cochrane Collaboration: preparing, maintaining, and disseminating systematic reviews of the effects of health care. Ann NY Acad Sci. 1993;703:156–63. doi: 10.1111/j.1749-6632.1993.tb26345.x.

- 13. Rosenberg W, Donald A. Evidence based medicine: an approach to clinical problem-solving. BMJ. 1995;310:1122–6. doi: 10.1136/bmj.310.6987.1122.