Abstract

Objective: To provide a scientific method for the development, validation, and correct use of a survey tool.

Background: Many athletic trainers are becoming involved in research to benefit either their own situations or the larger profession of athletic training. One of the most common methods used to gain this necessary information is a survey, with either a questionnaire or an interview technique. Formal instruction in the development and implementation of surveys is essential to the success of the research. As with other forms of experimental research, it is important to validate and ensure the reliability of the instruments (ie, questionnaires) used for data collection. It is also important to survey an appropriate sample of the population to ensure the appropriateness of applying the findings to the larger population. Lastly, to ensure adequate return of data, specific techniques are suggested to enhance data collection and the ability to apply the findings to the larger population.

Description: A review of the procedures used in the development and validation of a survey instrument is provided. Information on survey-item development is provided, including types of questions, formatting, planning for data analysis, and suggestions to ensure better data acquisition and analysis.

Advantages: By following the suggested procedures in this article, athletic training researchers will be able to better collect and use survey data to enhance the profession of athletic training.

Keywords: evaluation, questionnaire development, validating surveys, research procedures

Survey research using questionnaires or interviews is becoming increasingly visible in athletic training education today. Focused questionnaires and interview methods are 2 of the more common methodologic strategies; the techniques I discuss in this work are specific to questionnaire research. Survey research involves the use of a self-administered questionnaire designed to gather specific data via a self-reporting system.1,2 Self-reporting is not a direct method of observation of a respondent's behavior or actions; however, there is no better method to assess a subject's psychologically based variables (such as perceptions, fears, motivations, attitudes, or opinions2) and specific demographic data. Through the data collected, the investigator attempts to assess the relative incidence, distribution, and interrelations of naturally occurring phenomena, attitudes, or opinions and to establish the incidence and distribution of characteristics or relationships among characteristics.3 In other words, survey research allows an investigator to get a “snapshot” of what is happening at a given time or situation and then allows the investigator to determine how that snapshot influences other behaviors or situations.

For survey research, questionnaires can be more advantageous than interviews. Questionnaires allow for a standardized set of questions, not biased by interviewer participation, to be answered by subjects on their own time. Questionnaires also allow for anonymity, encouraging more honest and candid responses, and often a higher response rate. A response rate from 60% to 80% of a sample is considered excellent.2 Finally, this method of research is appealing to many athletic trainers, because the time needed to conduct the research is more under the control of the investigator. Investigators may use the less busy times in their schedules to develop their instruments and analyze their data, which decreases the demands on them during the busier periods of their jobs. The disadvantages of this type of research are the limitations of a self-reporting system, the potential for misunderstandings or misinterpretations of questions or response choices,2 and the difficulty of gaining adequate access to subjects. However, if attention is paid to ensuring validity and reliability of the instrument, the effect of these disadvantages is lessened.

SURVEY-RESEARCH PLAN OF ACTION

Successful survey research must be purposeful, useful, and applicable. There should be a guiding purpose or reason for collecting data; as a method of data collection, surveys are used to collect all types of data, based upon research questions that need to be answered. Too often, surveys are conducted without specific direction, because “they are the easiest way to do research” or to “find out what others are thinking” about a specific topic. The purpose of a research survey is not to “fish” for answers but to assess if a predetermined issue is indeed the issue and whether it influences the outcomes as hypothesized by the investigator. If done correctly, survey research can be just as fact finding, challenging, and rewarding as experimental-design research. Finally, survey research should be important and useful to not only the individual or group collecting the data but also to a larger group. That is, the results of a specific survey can only be generalized to the population represented by the sample used in the study. To merit consideration for publication and to justify the time that others spend on it, the analysis of the data collected should have a wider impact and applicability. Although broadening the subject pool or geographic distribution may increase the likelihood of greater applicability of results, it cannot be assured.

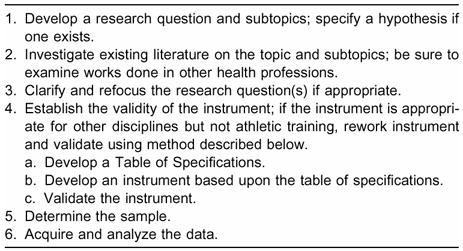

As with all types of research, the development of a survey-research study requires a specific plan of action. This 6-step process begins with the identification of the research question and the applicable subtopics. It involves a sequential process that ensures that the information gathered is useful and useable. Table 1 provides an overview of the plan of action for a survey study. Each area of this plan is discussed in more detail in subsequent sections.

Table 1. Survey-Research Plan of Action

DEVELOPING THE QUESTION

When beginning an investigation, it is important to determine the goal or purpose of conducting a survey. Most individuals begin by determining a specific question to answer, then develop the questions or hypotheses that guide the initial question. For example, if an investigator wishes to examine the characteristics of an effective clinical instructor (CI), the investigator may want to examine psychological characteristics, teaching techniques, and personal attributes found in those CIs who are perceived to be effective. To help determine the underlying principles or variables that should be considered when developing a research question, it is essential to examine the published literature.

It is important to determine how others have addressed this question, their perceptions of the need for the question to be answered, and the outcomes of their investigations. It is also important to look at the literature in fields related to athletic training, such as physical therapy, nursing, and teacher education. These professions are older than the profession of athletic training, and research in these disciplines may lead the athletic training investigator (ATI) to findings or considerations not previously examined in our field. These literature reviews also may provide examples of methods not previously considered and offer the investigator standardized, validated survey tools. After examining the literature, the investigator re-examines the research question and clarifies and refines the topic to be examined.

DEVELOPING THE INSTRUMENT

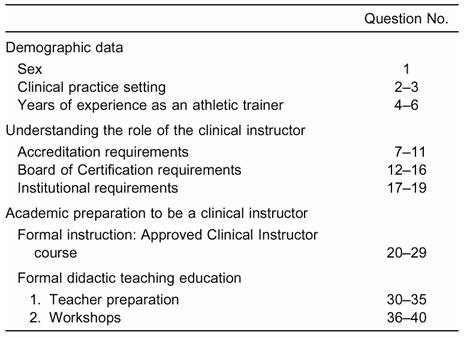

One of the more time-consuming parts of survey research involves the creation and validation of the survey instrument or questionnaire. Many steps are involved in the development of a series of items that address the research question or questions. The most efficient way to develop appropriate items is to create a Table of Specifications (ToS). The ToS delineates the main topics of the survey; these topics should be directly related to the research question. Under each topic area, there may be subconcepts or subtopics that the ATI wishes to investigate more specifically. In essence, the ToS becomes an outline of the content of the survey. Table 2 demonstrates an excerpt from a typical ToS for a research question that examines the effectiveness of CIs; this example is continued through each section of the article.

Table 2. Sample Table of Specifications

The ToS is used as a guide to develop appropriate questions and to determine criterion-related validity and the plan for item analysis. As questions or items are developed, they should be assigned to a topic area in the ToS. Items should fit into one of the categories of the ToS; an item can be reworded to fit more appropriately into a category, or it may be placed aside for use in a future study.
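As a rough illustration of assigning items to the ToS, the sketch below audits a draft item pool against an outline. The topic and subtopic names, the item records, and the `audit_items` helper are all hypothetical; they are not taken from Table 2 and serve only to show the bookkeeping.

```python
# Hypothetical Table of Specifications: topic -> subtopics.
# These names are illustrative only, not the article's Table 2.
TOS = {
    "psychological characteristics": ["confidence", "patience"],
    "teaching techniques": ["questioning", "feedback"],
    "personal attributes": ["communication", "professionalism"],
}

# Draft items, each tagged with the ToS cell it is meant to address.
ITEMS = [
    {"id": 1, "topic": "teaching techniques", "subtopic": "questioning"},
    {"id": 2, "topic": "personal attributes", "subtopic": "communication"},
    {"id": 3, "topic": "clinical facilities", "subtopic": "equipment"},  # not in the ToS
]


def audit_items(tos, items):
    """Return (ids of items that fit no ToS cell, ToS cells with no item yet)."""
    unassigned = [
        it["id"] for it in items
        if it["subtopic"] not in tos.get(it["topic"], [])
    ]
    covered = {(it["topic"], it["subtopic"]) for it in items}
    uncovered = [
        (topic, sub)
        for topic, subs in tos.items()
        for sub in subs
        if (topic, sub) not in covered
    ]
    return unassigned, uncovered


unassigned, uncovered = audit_items(TOS, ITEMS)
print("Reword or set aside:", unassigned)
print("Still need items for:", uncovered)
```

Items flagged as unassigned are candidates for rewording or for a future study; uncovered cells show where more items may be needed.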

Writing questions depends on the kind of information being sought, question structure, and actual choice of words. Most questions involve assessments of attitudes, beliefs, behaviors, or attributes.4 Attitude questions indicate direction of the respondents' feelings: whether they are in favor of or oppose an idea, or if something is good or bad. For example, an attitude question might read, “It is important for all clinical instructors to possess good communication skills.” Belief questions assess what a person thinks is true or false, and may be used to determine knowledge of a specific fact.4 For example, a belief question might read, “To be a clinical instructor in athletic training, I must be an Approved Clinical Instructor as recognized by the National Athletic Trainers' Association and the Commission on Accreditation of Allied Health Education Programs.”

Behavior questions are designed to elicit respondents' beliefs about their behaviors.4 Because there is no direct observation of the respondent, there is no way to ensure that the respondent is truly telling the ATI what he or she really does. Behavior questions tell the ATI what respondents believe they do; this is a weakness of the self-reporting system. One way to cross-check the authenticity of a respondent's belief is to ask someone else to evaluate how the respondent responds to the situation or to use interview techniques. For example, if a CI is asked to evaluate how often he or she elicits questions from an athletic training student, the CI may indicate several times throughout a day. The athletic training student then could be asked how often the CI elicits questions from him or her. This type of questioning may confirm that the respondent's beliefs are consistent with the student's beliefs about the respondent's behavior. Consistency of response is evaluated during the analysis phase of the study.

The fourth type of question that can be used in a survey is an attribute question that provides primarily demographic data.4 Most surveys involve some collection of demographic information, so that the investigator can use those variables later to analyze the data based upon specific demographic considerations. For example, by acquiring information as to sex of a CI and years of clinical experience as a CI, the investigator may be able to determine later if sex or years of clinical experience or both influenced the outcome of CI effectiveness. Investigators should be cautioned to have a designated purpose or need to collect specific demographic information and to collect only that data germane to the research question. Collection of personally sensitive data, such as annual income or political affiliation, should only be included if it is important to the overall purpose of the study.

Survey questions can be written in many ways, and each method requires different considerations for item analysis, or how the data will be used to determine the results of a specific question.5 Close attention should be paid to how the data will be analyzed upon the completion of the study, as different statistical techniques require different formats of data (eg, ordinal, nominal). For example, age can be assessed as an exact number or as part of a range of numbers. The ATI must determine in advance if it is important to analyze the results of the survey based upon a specific age or if age ranges will be sufficient.
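The age example can be sketched in a few lines to show why the decision must be made in advance. The brackets and the `age_to_range` helper below are assumptions chosen for illustration; the point is only that exact values can be grouped later, whereas grouped responses cannot be converted back to exact values.

```python
# Hypothetical age brackets (both bounds inclusive).
AGE_RANGES = [(20, 29), (30, 39), (40, 49), (50, 59)]


def age_to_range(age, ranges=AGE_RANGES):
    """Map an exact age to its bracket label, or None if it falls outside all brackets."""
    for low, high in ranges:
        if low <= age <= high:
            return f"{low}-{high}"
    return None


# Ages collected as exact numbers can be collapsed into ranges afterward.
exact_ages = [24, 31, 38, 45, 52]
binned = [age_to_range(a) for a in exact_ages]
print(binned)

# The reverse is not possible: a respondent who marked only "30-39"
# cannot later be analyzed as 31 or 38.
```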

When writing survey questions, open-ended versus closed-ended questions provide unique challenges for the ATI. Open-ended questions may allow respondents to answer completely, creating personalized answers using their own words and without investigator bias or limits4: for example, “Please describe the attributes of an effective clinical instructor.” This method is very appropriate for qualitative studies but may create more concerns if the analysis is quantitative. Open-ended questions may be difficult to code and analyze because respondents may answer in many different ways.2 The ATI may be unable to interpret the open-ended response without clarification from the respondent.4 One method for using the open-ended question technique is to delimit the response. For example, the ATI might ask the respondents for their opinions on the best method to tape an ankle for a recent injury (2 weeks old) to the calcaneofibular ligament, rather than asking for the best method to tape an ankle.

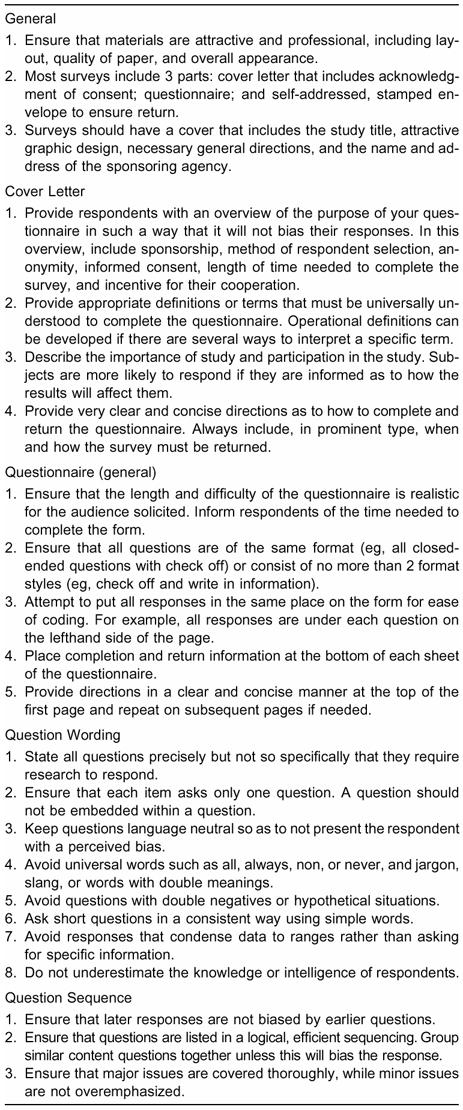

Closed-ended questions are one of the more common types used in athletic training literature. Closed-ended questions require the subject to select a response from a list of predetermined items developed by the investigator. These types of questions are typical of standardized multiple-choice tests; they allow for consistency in response and may be coded more easily. Potential responses may be presented in either random or purposeful order. The disadvantage to this style of question development is that it may limit the expression of respondents' opinions in “their own ways,” thus potentially biasing the data.2,4 The items developed should be exhaustive in nature, providing the respondent with all possible responses, and they should be mutually exclusive. With mutually exclusive items, each choice should clearly represent a unique option.2 An example of a poorly worded item would be, “I feel that I am prepared academically and clinically for my role as a clinical instructor.” This example is asking for feedback on 2 separate concepts: academic and clinical preparation. It would be preferable to separate the concepts into 2 different questions: “I feel that I am prepared academically for my role as a clinical instructor,” and “I feel prepared clinically for my role as a clinical instructor.” Table 3 provides additional considerations for developing both open-ended and closed-ended questions.

The other common data-collection method is the scale. The scale is an ordered system that provides an overall rating representing the intensity felt by a respondent to a particular attitude, value, or characteristic. Scales allow the ATI to distinguish among respondents.2 While several types of scales are used in questionnaires, the 2 most common in athletic training literature are the Likert and Guttman scales. Likert scales are summative scales that are used most often to assess attitudes or values. A series of statements expressing a viewpoint is listed, and the respondents are asked to select a ranked response that reflects the level with which they agree or disagree with the statement.2 Potential responses are presented in rank order. A large number of items or statements, usually 10 to 20 items, is required when using a Likert scale. An equal number of the items should reflect favorable and unfavorable attitudes to truly discriminate the respondents' opinions.2 Responses generally are provided in 5 categories (strongly agree, agree, neutral, disagree, and strongly disagree), but some support exists for the use of an even number of categories to require respondents to take a definitive position (either positive or negative) on the response. In other words, if 3 responses are positive and 3 are negative, respondents are forced to make a directional decision.
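A minimal sketch of summative Likert scoring follows. The numeric codes (5 for strongly agree down to 1 for strongly disagree) and the reverse scoring of unfavorably worded items are common conventions assumed here rather than procedures specified in this article; the item names and the `likert_total` helper are hypothetical.

```python
# Assumed coding for a 5-category response set.
CODES = {
    "strongly agree": 5,
    "agree": 4,
    "neutral": 3,
    "disagree": 2,
    "strongly disagree": 1,
}


def likert_total(responses, unfavorable_items):
    """Sum item scores, reverse-scoring items worded unfavorably (assumed convention)."""
    total = 0
    for item, answer in responses.items():
        score = CODES[answer]
        if item in unfavorable_items:
            score = 6 - score  # 5 <-> 1, 4 <-> 2, 3 stays 3
        total += score
    return total


# Hypothetical respondent; q3 is an unfavorably worded statement.
responses = {
    "q1": "strongly agree",
    "q2": "agree",
    "q3": "strongly disagree",
    "q4": "neutral",
}
total = likert_total(responses, unfavorable_items={"q3"})
print(total)
```

Reverse scoring keeps a high total pointing in one direction when favorable and unfavorable statements are mixed in the same scale.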

Guttman scales are cumulative scales that present a set of statements reflecting increasing intensities of the characteristics being measured. This technique is designed to ensure that only one dimension exists within a set of responses; only one unique combination of responses can achieve a desired score.2 In this cumulative scale, if respondents agree with one item, they also should agree with designated other items. For example, if a respondent believes that an effective CI communicates well with coaches, he or she also would select responses that indicate that the CI has open communication with colleagues and students. Success using Guttman scales relies on having a large number of respondents to assess patterns accurately.2
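The cumulative property can be checked mechanically. In the sketch below, items are assumed to be ordered from the easiest to the hardest to endorse (for instance, communication with students, then colleagues, then coaches; this ordering is hypothetical), with 1 for agreement and 0 for disagreement. The helper names are illustrative.

```python
def is_cumulative(pattern):
    """True if every agreement (1) comes before every disagreement (0)."""
    seen_disagree = False
    for answer in pattern:
        if answer == 0:
            seen_disagree = True
        elif seen_disagree:
            return False  # agreed with a harder item after rejecting an easier one
    return True


def guttman_score(pattern):
    """Number of items endorsed; for a cumulative pattern this identifies the pattern."""
    return sum(pattern)


# Hypothetical item order: students, colleagues, coaches.
patterns = {
    "A": [1, 1, 1],  # agrees with all three
    "B": [1, 1, 0],  # stops before coaches
    "C": [0, 0, 1],  # agrees only with the hardest item: breaks the scale
}
for name, pattern in patterns.items():
    print(name, guttman_score(pattern), is_cumulative(pattern))
```

Respondents B and C show why only one response pattern should produce a given score: a score of 2 should always mean the first two items, never another pair.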

VALIDATING THE INSTRUMENT

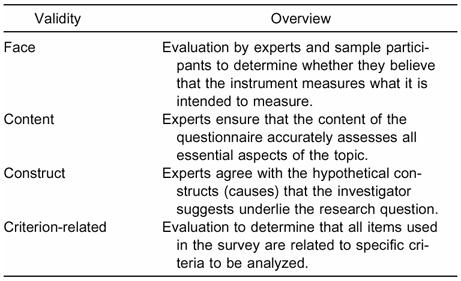

To ensure the accuracy of the data collected and the conclusions derived from the findings, it is essential to validate the survey; a valid test is also reliable.2 Validation of an instrument ensures that the instrument is measuring what it is intended to measure and allows the investigator to make decisions that answer the research question(s) based upon a specific population.1–3,6 When conducting survey research, it is important to determine the validity of the instrument via 4 assessments: face validity, content validity, construct validity, and criterion-related validity (Table 4).

Table 4. Types of Validity

Face Validity

Face validity is the evaluation by both experts and sample participants to determine if those individuals believe that the instrument measures what it is intended to measure.3 Face validation is subjective and the weakest of the assessment methods scientifically; however, it is essential in the development of a valid survey tool. Face validity helps to ensure that the instrument will be acceptable to “those who administer it, those who are tested by it, or those who will use the results.”2,4 One way to determine the face validity of an instrument designed to assess the impressions of a specific group is to gather a small sample of that group and have them review the questionnaire. For example, if the effectiveness of CIs was being assessed, CIs and possibly their students would be solicited for their opinions. Individuals selected for this task may be part of a local group of students or CIs (convenience sampling) or may be randomly solicited from all CIs and athletic training students from across the country, district, or region (stratified random sampling).

This small sample would be asked to complete the questionnaire, noting confusing items or concerns as they proceed through the instrument. Upon their completion of the survey, the ATI would record the amount of time it took to complete the questionnaire for future use as part of the introduction section of the instrument. Then the ATI would solicit information regarding issues and concerns noted while completing the survey. These issues need to be addressed and corrected before the instrument is used to collect data for the actual study. Sample participants also provide feedback as to the quality of the overall intent of the questionnaire and their perceptions of how other similar participants may perceive the questionnaire. An item-by-item discussion of the questions may take place to ensure that all sample participants perceive the questions in a consistent manner.4

This portion of the instrument assessment also assists in determining the reliability of the instrument, and the same sample group may be used for this purpose. The sample could take the survey twice (test-retest) to determine their ability to answer the questionnaire consistently. Alternatively, the group could answer the questionnaire only once, and the results would be split (eg, odd-numbered and even-numbered questions) to compare one half of the questions with the other (split half) to determine if the responses were consistent. If the results are consistent, then the instrument may be considered reliable.
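The split-half comparison can be sketched as a correlation between each respondent's odd-item and even-item totals. The pilot scores below are invented for illustration, and the Spearman-Brown step-up (2r / (1 + r)) is a commonly used adjustment that this article does not discuss; it is included only as an assumption about how a half-length correlation might be projected to the full instrument.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equal-length lists (undefined if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def split_half(rows):
    """rows: one list of numeric item scores per respondent, in questionnaire order."""
    odd = [sum(r[0::2]) for r in rows]   # items 1, 3, 5, ...
    even = [sum(r[1::2]) for r in rows]  # items 2, 4, 6, ...
    r_half = pearson(odd, even)
    r_full = 2 * r_half / (1 + r_half)   # Spearman-Brown step-up (assumed, not from the article)
    return r_half, r_full


# Invented pilot data: 5 respondents, 4 items scored 1 to 5.
pilot = [
    [5, 4, 5, 4],
    [2, 2, 1, 2],
    [4, 4, 3, 4],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
r_half, r_full = split_half(pilot)
print(round(r_half, 2), round(r_full, 2))
```

The same `pearson` helper could be applied to test-retest data by correlating each respondent's total from the first administration with the total from the second.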

If this sample contains “experts” in the content included in the questionnaire, those individuals may assist in the establishment of the content validity (explained below). A similar evaluation process also would be conducted using an instrument-development expert who may or may not be knowledgeable about the content. If this individual is also an expert on the content or construct of the instrument, it would be appropriate to utilize those skills in other validation processes (eg, content validity, construct validity).7

Content Validity

Establishing the content validity of an instrument helps to ensure that the questionnaire accurately assesses all essential aspects of a given topic.1,2,8 Content validity, also referred to as intrinsic validity, relies on at least one “expert” (but does not prohibit the use of a panel), who reviews the instrument and determines if the questions satisfy the content domain.2,4 An example of an expert panel is a group of experienced CIs who personally have been recognized for their work as CIs or whose effectiveness has been validated by their students' successes. After the expert review of content, the questionnaire is revised before it can be used for the actual study. Although subjective in function, this process indicates that the questionnaire “appears” to be serving its intended purpose and can be reflected accurately in the ToS.

Construct Validity

Construct validity is linked very closely to content validity and is thought to be more abstract and basic in the validation of a questionnaire.1 Construct validity should be determined if no criterion or content area is accepted as entirely adequate to define the quality to be measured. Empirically, factor analysis can be used to identify constructs; the factors identified should reflect the constructs. For example, if we are attempting to determine the effectiveness of CIs, we realize that many intangible reasons underlie why individuals are effective in this role. In the development of the questionnaire, the ATI must infer that some imperceptible things contribute to the CIs' demonstrations of effectiveness. To determine construct validity of a questionnaire, the ATI must validate that others agree with the hypothetical constructs (causes) the ATI suggests underlie the CI's effectiveness.1,8 Therefore, as the experts determine content validity, they also are accepting the construct validity that underlies the content.

Criterion-Related Validity

Criterion-related validity is the most objective and practical approach to determining the validity of a questionnaire. In an earlier step in this process, a ToS for the survey is developed. To determine the instrument's criterion-related validity, the ATI must validate that all items in the questionnaire can be related to a specific criterion delineated in the ToS. This validation is important in the determination of the concurrent and predictive validity of the questionnaire results.2 Concurrent validity allows the ATI to interpret that the findings derived from the instrument can be used to accurately replace a more cumbersome process to determine the same outcomes. To determine concurrent validity, the ATI must demonstrate that results from one instrument correlate with the findings generated by an existing instrument. This technique is helpful in the development of new evaluation tools or to replace older tools that are less specific to athletic training.

More appropriate to athletic training research, predictive validity attempts to establish that a measure will be a valid predictor of some future criterion score.2 In athletic training education research, we may wish to determine if the scores on the National Athletic Trainers' Association Board of Certification certification examination or future successes of students can be predicted from specific behaviors demonstrated by the CIs with whom they have worked. Predictive validity is an essential concept in clinical and educational decision making, because it provides a rationale for using that measurement as a predictor for some other outcome.2,3 Predictive validity does not imply causality but does verify an association between outcomes.1

As with all types of research, it is important to ensure the protection of the subjects used in the study. Appropriate institutional review-board procedures should be followed, and approval must be granted by the ATI's institutional review board before human subjects are involved in the instrument-review process. Each institutional review board has its own standards as to when approval is required in this instrument-development process; therefore, it is important for ATIs to check with their board representatives before beginning the instrument-development process. Survey research rarely requires a full board review and generally is considered using the expedited review process. Investigators using this research technique should consult with their individual institutional review boards before initiating any use of human subjects.

DATA ACQUISITION AND ANALYSIS

The sampling techniques used in other types of research are appropriate when conducting survey research. A sample is not representative of a population unless all members of that population have a known chance of being included in the sample.4 Once an appropriate sample has been identified, the validated instrument may be distributed. An adequate return rate (60% to 80%) can be ensured in several ways.2 Consideration of the time commitments and responsibilities of the potential respondents is very important. For example, if the ATI wants to solicit information from certified athletic trainers working football, it may be wise to avoid mailing surveys to them from August through November. Another consideration may be the number of surveys the designated individuals receive over the course of a year. For example, program directors (PDs) may receive 10 to 20 surveys each year assessing their opinions and programmatic data in a variety of areas. If an ATI requires the input of PDs, then the best way to elicit an appropriate response rate is to ask in advance for cooperation and permission to mail the survey. The quality of the survey and importance of the results to the respondents also are determining factors in the response rate.
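As a small illustration of a sample in which every member has a known chance of inclusion, the sketch below draws a simple random sample from a hypothetical sampling frame and then checks a return against the 60% lower bound mentioned above. The frame, the member IDs, the sample size, and the count of returned forms are all invented.

```python
import random


def draw_sample(frame, n, seed=0):
    """Simple random sample: each member has the same known chance, n / len(frame)."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    return rng.sample(frame, n)


def response_rate(returned, mailed):
    """Proportion of mailed questionnaires that came back."""
    return returned / mailed


# Hypothetical sampling frame of 500 member IDs.
frame = [f"AT{i:04d}" for i in range(1, 501)]
sample = draw_sample(frame, 100)
inclusion_probability = 100 / len(frame)

# Hypothetical outcome: 68 of the 100 questionnaires are returned.
rate = response_rate(returned=68, mailed=len(sample))
meets_target = rate >= 0.60
print(inclusion_probability, rate, meets_target)
```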

One way to inform a potential respondent of the importance of the results of a study is to include a short, motivational cover letter. No survey should be sent without a cover letter. The purpose of the cover letter is to introduce the survey and provide the motivation for the respondent to complete the survey. It also provides an opportunity for the ATI to anticipate and counter any questions or reasons why a potential respondent would not complete the survey,4 such as being too busy, not having enough time, or the results not affecting the respondent. Also included in the cover letter is encouragement and confirmation that the respondent is very important to the success of the study and that any personal or individual information gathered is held in confidence; the length of time required to complete the study is also noted.4

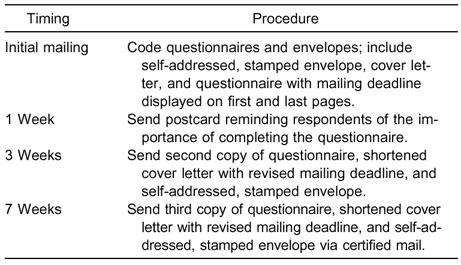

The procedure for soliciting responses to a survey involves 3 basic steps (Table 5). The ATI begins by coding all forms with a tracking number before they are distributed. This coding system helps an ATI to identify individuals who have not responded and to provide those who have not responded with additional reminders and opportunities to respond.4 The coding of the forms may be kept blinded from the ATI with the help of a disinterested colleague who assists in follow-up mailings and reminders. A data-coding system for data analysis also must be determined. A predetermined plan is needed to transfer nominal, ordinal, or interval data into numeric formats for use in statistical programs. This system varies with the type of statistical program to be used for analysis; however, most institutions have statistical experts on staff who can assist with this process.
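The two coding systems described here (tracking numbers on forms and a predetermined plan for converting responses to numbers) can be sketched together. The codebook values, variable names, and tracking numbers below are hypothetical; an actual codebook would follow the requirements of the statistical program in use.

```python
# Hypothetical codebook, fixed before any forms are mailed.
CODEBOOK = {
    "sex": {"female": 1, "male": 2},  # nominal: numbers are labels only
    "q1": {                           # ordinal: numbers preserve rank order
        "strongly disagree": 1,
        "disagree": 2,
        "neutral": 3,
        "agree": 4,
        "strongly agree": 5,
    },
}


def code_form(form):
    """Translate one returned form into numeric codes; numeric answers pass through."""
    coded = {"tracking": form["tracking"]}
    for var, value in form.items():
        if var == "tracking":
            continue
        coded[var] = CODEBOOK[var][value] if var in CODEBOOK else value
    return coded


mailed = {101, 102, 103, 104}  # tracking numbers written on the distributed forms
returned_forms = [
    {"tracking": 101, "sex": "female", "q1": "agree", "years_as_ci": 7},
    {"tracking": 103, "sex": "male", "q1": "strongly agree", "years_as_ci": 12},
]

coded = [code_form(f) for f in returned_forms]
needs_reminder = sorted(mailed - {f["tracking"] for f in returned_forms})
print(coded)
print("Send reminders to:", needs_reminder)
```

If the tracking list is held by a disinterested colleague, only that person would need access to `needs_reminder`; the ATI would work from the coded responses alone.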

Table 5. Procedure for Soliciting Results with a Validated Survey

After the initial mailing, a follow-up reminder postcard may be sent 1 week after the initial mailing to encourage participation.4 The postcard should provide a way for the potential respondents to contact the ATI if they misplaced the questionnaire or have questions regarding the survey, and it should thank those who already responded. Three weeks after the initial mailing, a second copy of the questionnaire, with a shortened cover letter and a revised return mailing date, may be sent again to all of those potential respondents who have not yet returned the survey.4 Seven weeks after the initial mailing, a final mailing, similar to the second mailing, should be sent via certified mail to emphasize the importance of a response.4 The second and third mailings are not always possible due to financial or time constraints, but they aid in ensuring a better return rate.
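The follow-up timeline can be laid out from the initial mailing date. The sketch below simply adds 1, 3, and 7 weeks to a start date; the start date and the `mailing_schedule` helper are hypothetical.

```python
from datetime import date, timedelta


def mailing_schedule(initial):
    """Dates for each contact, counted from the initial mailing."""
    return {
        "initial mailing": initial,
        "reminder postcard": initial + timedelta(weeks=1),
        "second questionnaire": initial + timedelta(weeks=3),
        "final certified mailing": initial + timedelta(weeks=7),
    }


schedule = mailing_schedule(date(2024, 1, 8))  # hypothetical start date
for step, when in schedule.items():
    print(f"{when.isoformat()}  {step}")
```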

Once all the forms are returned, the data must be coded based on the predetermined data-coding system. Some ATIs may choose to use computerized assessment tools, such as Scantron sheets (Scantron Corp, Tustin, CA), as opposed to instruments that must be coded by hand. Although the computerized forms may facilitate data processing for the ATI, they may not be as convenient or as motivational for the subjects. As mentioned previously, to maximize the potential of the data collected, an ATI should consider how the data will be coded for analysis during instrument development. The data-analysis technique used at this point in the study is standardized and is done in the same manner that is used with other research techniques. Specific analysis is not included in this discussion.

In conclusion, survey research is a very worthwhile and important method for gathering information about issues involving athletic training education. The purpose of this article was to provide ATIs with a scientific method for the development and implementation of valid survey instruments. As with all types of research, it is important for the future of the profession to ensure that this type of research is done in the most scientifically appropriate manner possible. The findings from this research can be used to establish policy, validate policies and procedures, and move the education of athletic training professionals into positive future directions.

REFERENCES

1. Payton OD. Research: The Validation of Clinical Practice. 3rd ed. FA Davis; Philadelphia, PA: 1993. pp. 70–75,94–95,224,342.

2. Portney LG, Watkins MP. Foundations of Clinical Research: Applications to Practice. 2nd ed. Prentice Hall Health; Upper Saddle River, NJ: 2000. pp. 79–87,256–258,285–287,293–294,299–304.

3. Kidder LH, Judd CM. Research Methods in Social Relations. 5th ed. Holt, Rinehart, and Winston Inc; Orlando, FL: 1986. pp. 53–55,131.

4. Dillman DA. Mail and Telephone Surveys: The Total Design Method. John Wiley & Sons Inc; New York, NY: 1978. pp. 41,58,79–89,96,123–124,157–158,165–177,183.

5. Worthen BR, Sanders JR. Educational Evaluation: Alternative Approaches and Practical Guidelines. Longman; White Plains, NY: 1987. pp. 306–307.

6. Cook TD, Campbell DT. Quasi-Experimentation: Design & Analysis Issues for Field Settings. Houghton Mifflin Co; Boston, MA: 1979. p. 37.

7. Ferguson GA, Takane Y. Statistical Analysis in Psychology and Education. McGraw-Hill; New York, NY: 1989. pp. 469–470.

8. Cronbach LJ, Meehl PE. Construct validity in psychological tests. Psychol Bull. 1955;52:281–302. doi: 10.1037/h0040957.