Abstract

AIMS: To determine the interobserver and intraobserver reliability of a published classification scheme for corneal topography in normal subjects using the absolute scale.

METHOD: In a prospective observational study, 195 TMS-1 corneal topography maps displayed in the absolute scale were each classified twice, independently, by three classifiers: a cornea fellow, an ophthalmic technician, and an optometrist. From these observations, the interobserver reliability for each category and the intraobserver reliability for each observer were expressed as the median weighted kappa statistic.

RESULTS: For interobserver reliability, the median weighted kappa statistic for each category ranged from 0.72 to 0.97; for intraobserver reliability, the range was 0.79 to 0.98.

CONCLUSION: This classification scheme is extremely robust; even in the hands of less experienced observers with minimal training, it can be relied upon to provide consistent results.

Keywords: cornea; topography; classification; reliability
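As an illustration of the statistic named in the METHOD section, the following is a minimal Python sketch of Cohen's weighted kappa for two sets of ratings, together with a median taken across observer pairs. The abstract does not state which weighting scheme was used, so the linear disagreement weights here are an assumption, and the function names `weighted_kappa` and `median_pairwise_kappa` are hypothetical.

```python
from itertools import combinations
from statistics import median


def weighted_kappa(ratings_a, ratings_b, n_categories):
    """Cohen's weighted kappa for two equal-length lists of integer
    category codes in the range 0..n_categories-1.

    Linear disagreement weights are an assumption; the abstract does
    not say which weights the study used.
    """
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    if n_categories < 2:
        raise ValueError("need at least two categories")

    n = len(ratings_a)
    k = n_categories

    # Observed joint proportions: observed[i][j] is the share of maps
    # rated i by the first rater and j by the second.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(ratings_a, ratings_b):
        observed[a][b] += 1.0 / n

    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def weight(i, j):
        # 0 for exact agreement, 1 for the largest possible disagreement.
        return abs(i - j) / (k - 1)

    observed_dis = sum(weight(i, j) * observed[i][j]
                       for i in range(k) for j in range(k))
    expected_dis = sum(weight(i, j) * row[i] * col[j]
                       for i in range(k) for j in range(k))

    if expected_dis == 0:
        # Both raters put every map in the same single category.
        return 1.0
    return 1.0 - observed_dis / expected_dis


def median_pairwise_kappa(ratings_by_observer, n_categories):
    """Median weighted kappa over all pairs of observers."""
    pairs = combinations(ratings_by_observer.values(), 2)
    return median(weighted_kappa(a, b, n_categories) for a, b in pairs)
```

For intraobserver reliability, the same `weighted_kappa` function could be applied to the first and second classification made by a single observer.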

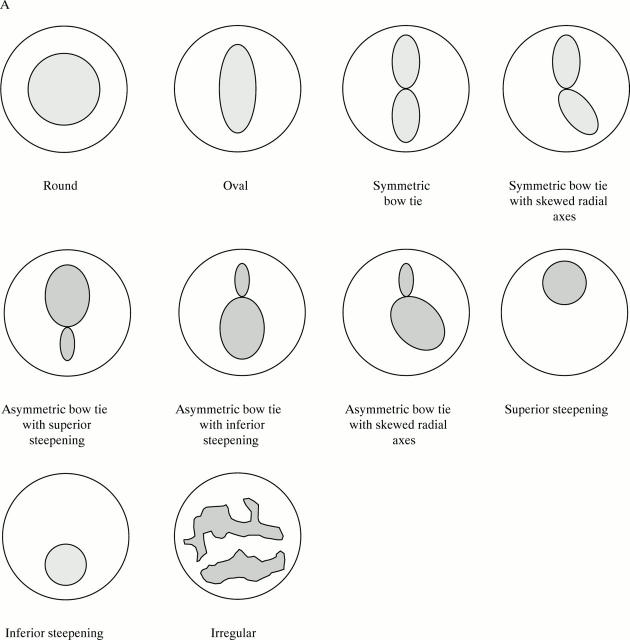

Figure 1.

(A) Diagrammatic representation of the various topographical patterns used by this classification scheme. See text for detailed definitions. (B) A bow tie is considered to have "skewed radial axes" when the smaller of the two angles between the two lobes (θ1) is less than 150 degrees.
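The "skewed radial axes" criterion in (B) can be sketched as a small check. This is an illustration only: it assumes each lobe's axis is supplied as a direction in degrees measured from a common reference, and the function names are hypothetical.

```python
SKEW_THRESHOLD_DEG = 150.0  # threshold stated in the caption


def smaller_angle_between(lobe1_deg, lobe2_deg):
    """Smaller of the two angles (0 to 180 degrees) between two lobe axes."""
    diff = abs(lobe1_deg - lobe2_deg) % 360.0
    return min(diff, 360.0 - diff)


def has_skewed_radial_axes(lobe1_deg, lobe2_deg):
    """True when the smaller angle between the lobes is under 150 degrees."""
    return smaller_angle_between(lobe1_deg, lobe2_deg) < SKEW_THRESHOLD_DEG
```

Under this check, lobes lying on a straight line (180 degrees apart) are not skewed, and a pair separated by exactly 150 degrees is also not skewed because the caption specifies "less than".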

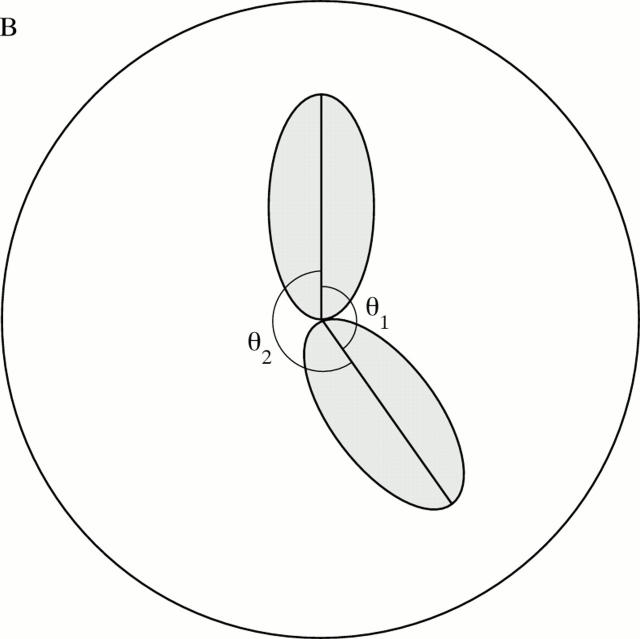

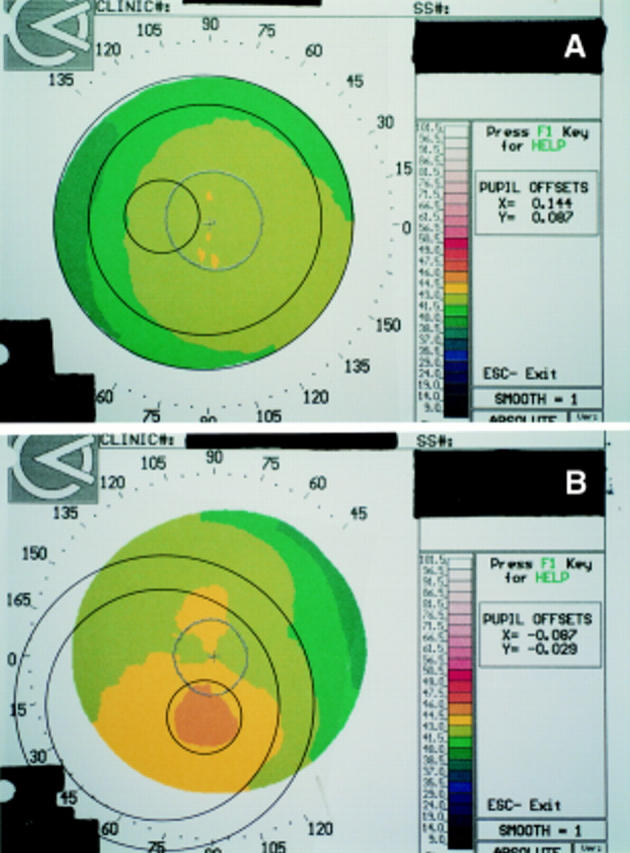

Figure 2.

(A) Transparent overlay placed over a TMS-1 map. The outermost circle occupies the area that a TMS-1 map would occupy when all points on all 25 rings are digitised. The intermediate circle occupies an area equal to two thirds of the entire map; this area is used to determine the classification of a map. The innermost circle occupies an area equal to 10% of the central two thirds area of the entire map. (B) The overlay being used to assess whether a colour occupies 10% of the central two thirds area of the map.
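Because the overlay is defined by areas rather than radii, the circle radii follow from square roots of the area ratios. The sketch below works this out under the assumption that all three regions are concentric circles; the function name and the unit outer radius are hypothetical, not taken from the article.

```python
import math


def overlay_radii(outer_radius):
    """Radii of the intermediate and innermost overlay circles.

    Assumes concentric circles. Area scales with radius squared, so an
    area fraction f corresponds to a radius fraction sqrt(f).
    """
    # Intermediate circle: two thirds of the full map area.
    intermediate = outer_radius * math.sqrt(2.0 / 3.0)
    # Innermost circle: 10% of the intermediate circle's area.
    innermost = intermediate * math.sqrt(0.10)
    return intermediate, innermost


# With the full map scaled to radius 1, the intermediate circle has a
# radius of about 0.816 and the innermost circle about 0.258.
intermediate_r, innermost_r = overlay_radii(1.0)
```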
