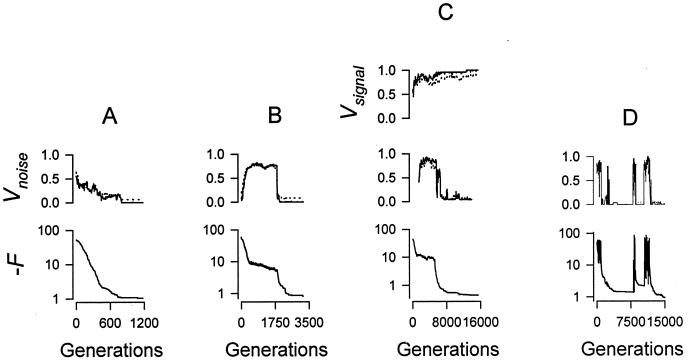

Figure 3.

Evolution histories in the presence of random noise, different in each generation, showing noise vulnerability (see Fig. 2 and text) and fitness; M = 100; R = 2 (A–C) or R = 4 (D). (A) Fitness improves as noise is steadily excluded from parts of the network, leading to a network with a protected subsystem consisting of noise-free output elements (Upper, solid) and the elements that compute the output (Upper, dotted). (B) The more common pattern, in which the restructuring of the network by evolution initially increases the dissemination of noise information, despite the selective advantage that would accrue from its elimination. During this period, fitness slowly improves as a result of adaptations that “filter” the noise, reducing noise-related output variance. This is followed by a phase of rapid, irreversible noise exclusion, which allows further evolution to maximum fitness. (C) The target function was randomly switched between step functions at t = 30 and t = 70, and a bit predicting which step function would be used was intercalated into the even time bins of the noise input, while the odd bins contained only random noise. A network evolved that was able to decode the inputs, creating a protected subsystem from which the noise was excluded (Middle) while the predictive signal was widely distributed (Top). (D) Noise correlated over a number of generations was produced by randomly resetting one randomly chosen bit of the noise array in each generation. In this environment, noise exclusion evolves but can be reversed by maladaptive selection events (see Fig. 4), causing “information catastrophes” during the later stages of evolution.
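
The noise inputs described for panels C and D can be illustrated with a minimal Python sketch. This is not the authors' code: the function names, the 0-based indexing of time bins, and the reading of "resetting" as redrawing a bit uniformly at random (so it may keep its old value) are all assumptions made for illustration.

```python
import random

def fresh_noise(n_bins, rng):
    """Independent random bits, as when noise differs in each generation."""
    return [rng.randint(0, 1) for _ in range(n_bins)]

def correlated_noise_step(noise, rng):
    """Panel D (assumed reading): redraw one randomly chosen bit, so
    successive generations share all but at most one bit."""
    out = list(noise)
    i = rng.randrange(len(out))   # pick one position at random
    out[i] = rng.randint(0, 1)    # redraw it; it may keep its old value
    return out

def interleave_predictor(noise, predictor_bit):
    """Panel C (assumed reading): even bins (0-based here) carry the bit
    that predicts the target function; odd bins keep their random noise."""
    out = list(noise)
    for t in range(0, len(out), 2):
        out[t] = predictor_bit
    return out

if __name__ == "__main__":
    rng = random.Random(0)                    # fixed seed, illustration only
    noise = fresh_noise(10, rng)
    next_noise = correlated_noise_step(noise, rng)
    signal = interleave_predictor(noise, predictor_bit=1)
    print(noise, next_noise, signal, sep="\n")
```

Under these assumptions, the correlated sequence changes by at most one bit per generation, which is what makes the noise in panel D persist across generations rather than being redrawn in full.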