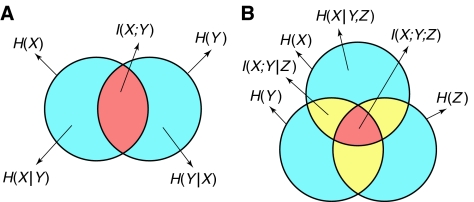

Figure 1.

Venn diagrams indicating the mutual information common to multiple variables. (A) Mutual information common to two variables. Arrows stemming from the perimeter of a circle refer to the area of the whole circle. Arrows stemming from the interior of a region refer to the area of that region. The mutual information I(X;Y) is defined by the intersection of the two sets, whereas the joint entropy H(X,Y) (not shown) is defined by the union of the two sets. (B) Mutual information common to three variables. The mutual information I(X;Y;Z) is defined by the intersection of the three sets. The bivariate synergy of any two of the variables with respect to the third is equal to the negative of the mutual information common to the three variables. Positive synergy therefore corresponds to a negative I(X;Y;Z), which cannot be shown as an area in a Venn diagram.
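The sign relationship described in panel (B) can be checked numerically. The following is a minimal sketch, not taken from the original work. It assumes the inclusion-exclusion form I(X;Y;Z) = H(X) + H(Y) + H(Z) - H(X,Y) - H(X,Z) - H(Y,Z) + H(X,Y,Z), and it assumes the synergy of X and Y with respect to Z is I(X,Y;Z) - I(X;Z) - I(Y;Z). The function names and the two example distributions (`xor_joint`, `copy_joint`) are hypothetical and chosen only for illustration.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(joint, axes):
    """Shannon entropy in bits of the marginal over the given variable indices."""
    marginal = Counter()
    for outcome, p in joint.items():
        marginal[tuple(outcome[a] for a in axes)] += p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)


def mutual_information(joint, a, b):
    """I(A;B) = H(A) + H(B) - H(A,B), where a and b are tuples of indices."""
    return entropy(joint, a) + entropy(joint, b) - entropy(joint, a + b)


def three_way_information(joint):
    """I(X;Y;Z) by inclusion-exclusion over entropies (assumed definition)."""
    def h(*axes):
        return entropy(joint, axes)
    return (h(0) + h(1) + h(2)
            - h(0, 1) - h(0, 2) - h(1, 2)
            + h(0, 1, 2))


def synergy(joint):
    """Synergy of X and Y with respect to Z (assumed definition):
    I(X,Y;Z) - I(X;Z) - I(Y;Z)."""
    return (mutual_information(joint, (0, 1), (2,))
            - mutual_information(joint, (0,), (2,))
            - mutual_information(joint, (1,), (2,)))


# Hypothetical example 1: Z = X XOR Y with X, Y independent fair bits.
xor_joint = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}

# Hypothetical example 2: X = Y = Z, one fair bit copied three times.
copy_joint = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}

for name, joint in (("xor", xor_joint), ("copy", copy_joint)):
    print(name, three_way_information(joint), synergy(joint))
```

Under these assumptions, the XOR distribution gives I(X;Y;Z) of -1 bit and a synergy of +1 bit, so the central region of the diagram would need a negative area. The copied-bit distribution gives +1 bit and -1 bit respectively, which is the redundant case that a Venn diagram can depict.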