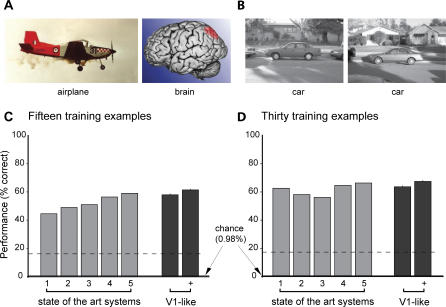

Figure 1. Performance of a Simple V1-Like Model Relative to Current Performance of State-of-the-Art Artificial Object Recognition Systems (Some Biologically Inspired) on an Ostensibly “Natural” Standard Image Database (Caltech101).

(A) Example images from the database and their category labels.

(B) Two example images from the “car” category.

(C) The reported performance of five state-of-the-art computational object recognition systems on this “natural” database is shown in gray (1 = Wang et al. 2006; 2 = Grauman and Darrell 2006; 3 = Mutch and Lowe 2006; 4 = Lazebnik et al. 2006; 5 = Zhang et al. 2006). In this panel, each system was trained with 15 training examples per category. Because chance performance on this 102-category task is below 1%, performance values above ∼40% have been regarded as substantial progress. The performance of the simple V1-like model is shown in black (+ denotes the version with “ad hoc” features; see Methods). Although the V1-like model is extremely simple and lacks any explicit invariance-building mechanism, it performs as well as, or better than, state-of-the-art object recognition systems on this “natural” database (but see Varma and Ray 2007 for a recent hybrid approach that pools the above methods to achieve higher performance).

(D) Same as (C), except that 30 training examples per category were used. The dashed lines indicate performance achieved using an untransformed grayscale pixel-space representation and a linear SVM classifier (15 training examples: 16.1%, SD 0.4; 30 training examples: 17.3%, SD 0.8). Error bars (barely visible) represent the standard deviation of the mean performance of the V1-like model over ten random splits of the images into training and testing sets. The authors of the state-of-the-art approaches do not consistently report this variation, but when they do, it is in the same range (less than 1%). The V1-like model also performed favorably with fewer training examples (see Figure S4).
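The evaluation protocol behind panels (C) and (D) (a fixed number of training images per category, repeated over ten random splits, summarized as a mean and standard deviation) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the function names are hypothetical, the classifier is passed in as an arbitrary callable, the images not drawn for training are all used for testing, and the score is the mean per-category accuracy (a common Caltech101 convention). The caption does not say whether a sample or population standard deviation was used; the sketch uses the sample version. For reference, chance on 102 categories is 1/102 ≈ 0.98%.

```python
import random
import statistics
from collections import defaultdict


def split_per_category(labels, n_train, rng):
    """Hypothetical helper: draw n_train image indices per category for
    training; all remaining indices of that category go to testing."""
    by_cat = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_cat[lab].append(idx)
    train, test = [], []
    for idxs in by_cat.values():
        idxs = idxs[:]  # copy before shuffling so the grouping is untouched
        rng.shuffle(idxs)
        train.extend(idxs[:n_train])
        test.extend(idxs[n_train:])
    return train, test


def mean_per_class_accuracy(true, pred):
    """Average of per-category accuracies (assumed scoring convention)."""
    hits, totals = defaultdict(int), defaultdict(int)
    for t, p in zip(true, pred):
        totals[t] += 1
        hits[t] += int(t == p)
    return sum(hits[c] / totals[c] for c in totals) / len(totals)


def evaluate(labels, train_and_predict, n_train=15, n_splits=10, seed=0):
    """Repeat random train/test splits; return (mean, sample SD) of scores.

    train_and_predict(train_idx, test_idx) is a hypothetical callable that
    fits any classifier on train_idx and returns one label per test index.
    """
    rng = random.Random(seed)
    scores = []
    for _ in range(n_splits):
        train, test = split_per_category(labels, n_train, rng)
        preds = train_and_predict(train, test)
        scores.append(mean_per_class_accuracy([labels[i] for i in test], preds))
    return statistics.mean(scores), statistics.stdev(scores)
```

As a sanity check on a toy label set with three categories, a classifier that always predicts the same category scores exactly 1/3 on every split, i.e. chance, with zero spread; plugging in a real feature extractor (pixels or V1-like features) and a linear SVM would fill the `train_and_predict` slot.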