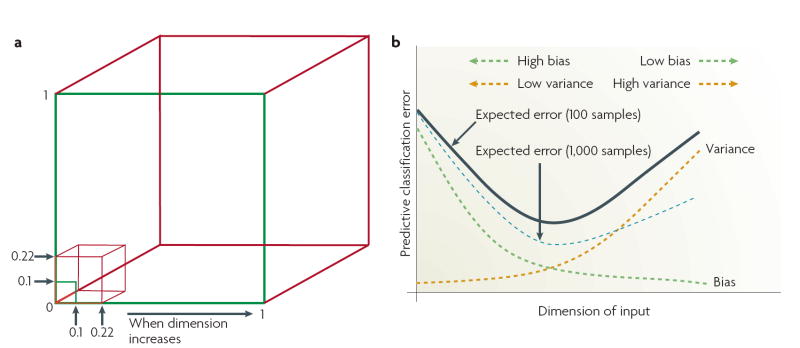

Figure 4. The curse of dimensionality and the bias–variance dilemma.

a | The geometric distributions of data points in low- and high-dimensional spaces differ significantly. For example, using a subcubical neighbourhood in a 3-dimensional data space (red cube) to capture 1% of the data to learn a local model requires coverage of 22% of the range of each dimension ($0.01 \approx 0.22^{3}$), as compared with only 10% coverage in a 2-dimensional data space (green square) ($0.01 = 0.10^{2}$). Likewise, using a hypercubical neighbourhood in a 10-dimensional data space to capture 1% of the data requires coverage of as much as 63% of the range of each dimension ($0.01 \approx 0.63^{10}$). Such neighbourhoods are no longer 'local'<sup>111</sup>. As a result, the sparse sampling in high dimensions creates the empty space phenomenon: most data points are closer to the surface of the sample space than to any other data point<sup>111</sup>. For example, with 500 data points uniformly distributed in a 10-dimensional unit ball centred at the origin, the median distance from the origin to the nearest data point is approximately 0.52 (more than halfway to the boundary). That is, a nearest-neighbour estimate at the origin must be extrapolated or interpolated from neighbouring sample points that are effectively far away from the origin<sup>111</sup>.

b | A practical demonstration is the bias–variance dilemma<sup>36,111,115</sup>. Specifically, the mismatch between a model and data can be decomposed into two components: bias, which represents the approximation error, and variance, which represents the estimation error. Added dimensions can degrade the performance of a model if the number of training samples is small relative to the number of dimensions. For a fixed sample size, as the number of dimensions increases there is a corresponding increase in model complexity (an increase in the number of unknown parameters) and a decrease in the reliability of the parameter estimates. Consequently, in a high-dimensional data space there is a trade-off between decreased predictor bias and increased prediction uncertainty<sup>36,111</sup>.
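
The numbers quoted in panel a can be checked with a short calculation. The sketch below is illustrative only and is not taken from the figure's source. It assumes data uniformly distributed in a unit hypercube (for the edge-length figures) and in a unit ball centred at the origin (for the nearest-neighbour distance). The function names `edge_fraction` and `median_nearest_distance` are hypothetical. The second function uses the closed form $d = (1 - 2^{-1/N})^{1/p}$, which follows from setting the probability that all $N$ points lie farther than $d$ from the origin, $(1 - d^{p})^{N}$, equal to one half.

```python
def edge_fraction(volume_fraction: float, dims: int) -> float:
    """Fraction of each axis a sub-hypercube must span to enclose
    `volume_fraction` of a unit hypercube in `dims` dimensions."""
    return volume_fraction ** (1.0 / dims)


def median_nearest_distance(n_points: int, dims: int) -> float:
    """Median distance from the origin to the closest of `n_points`
    points drawn uniformly from the unit ball in `dims` dimensions.

    Solves (1 - d**dims) ** n_points == 0.5 for d.
    """
    return (1.0 - 0.5 ** (1.0 / n_points)) ** (1.0 / dims)


if __name__ == "__main__":
    # Edge coverage needed to capture 1% of uniformly distributed data.
    for dims in (2, 3, 10):
        print(f"dims={dims}: edge fraction = {edge_fraction(0.01, dims):.2f}")

    # Nearest-neighbour distance for 500 points in 10 dimensions.
    print(f"median nearest distance = {median_nearest_distance(500, 10):.2f}")
```

Under these assumptions the edge fractions come out at about 0.10, 0.22 and 0.63 for 2, 3 and 10 dimensions, and the median nearest-neighbour distance for 500 points in 10 dimensions is about 0.52.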