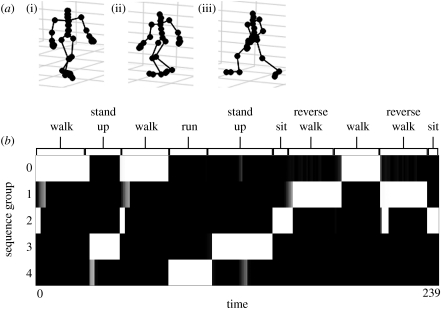

Figure 3.

(a) Three separate motion capture inputs from a human subject. Each input is a set of joint angles for 32 joints. When shown a sequence of such poses, humans easily recognize activities such as running, walking and sitting. From static poses such as (i)–(iii), however, actions are difficult or impossible to recognize, because the same pose can be part of several different actions.

(b) Unsupervised classification of motion capture sequences. The state-splitting algorithm described in this paper was shown a sequence of 239 poses in which the subject repeatedly performed four different actions (‘walk’, ‘run’, ‘sit’ and ‘stand up’). As an extra test, the ‘walk’ sequences were also played backwards as a fifth action (‘reverse walk’), so that exactly the same poses appeared but in a different temporal order. The vertical axis shows the five sequence groups learned without supervision; the horizontal axis shows the time progression of the 239 poses. The labels at the top of the chart indicate which action the subject was performing at each point in time. The learned sequence groups closely match the actions the subject performed, showing that the state-splitting method learned the action sequences without supervision.
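The ‘reverse walk’ test works because any order-free summary of the poses is identical for the forward and reversed sequences. Only the temporal order tells them apart. The sketch below is a toy illustration of that point, not the state-splitting algorithm itself. It assumes each pose has already been discretized to a single symbol (the letters are hypothetical stand-ins, not data from the figure). It then compares a pose histogram, which ignores order, with counts of consecutive pose transitions, which depend on it.

```python
from collections import Counter

# Hypothetical toy "walk" cycle: each letter stands for one discretized pose.
# These symbols are illustrative assumptions, not the motion capture data.
walk = ["A", "B", "C", "D", "A", "B", "C", "D"]
reverse_walk = walk[::-1]  # same poses, opposite temporal order


def pose_histogram(seq):
    """Order-free summary: how often each static pose occurs."""
    return Counter(seq)


def transitions(seq):
    """Order-sensitive summary: counts of consecutive (pose, next_pose) pairs."""
    return Counter(zip(seq, seq[1:]))


# Static poses alone cannot separate the two actions...
print(pose_histogram(walk) == pose_histogram(reverse_walk))  # True
# ...but the order in which poses follow each other can.
print(transitions(walk) == transitions(reverse_walk))        # False
```

A model that only looks at individual poses would assign ‘walk’ and ‘reverse walk’ to the same group. Separating them requires some representation of temporal context, which is what a sequence-learning method has to capture.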