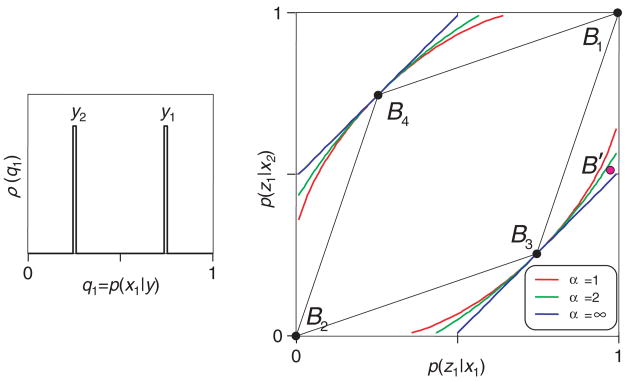

Figure 2.

Testing a neural code in a scenario in which the Shannon index has the advantage. The neural code has two words: y1, for which the a posteriori probabilities of the stimuli x1 and x2 are 0.75 and 0.25, and y2, for which the a posteriori probabilities of the stimuli are 0.25 and 0.75 (inset). The diamond-shaped region in the main graph shows the range of behaviors that the code can support; the graph also shows the bounds provided by several different indices of transmitted information. The Shannon bound (α = 1) is tighter than the Bayes bound (α = ∞). For further details, see the text.
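As a rough numerical illustration of the two indices named in the caption, the sketch below evaluates the code from the inset. It assumes, though the caption does not say so, that the α labels refer to Rényi-type entropies (α = 1 for Shannon, α = ∞ for the min-entropy tied to Bayes-optimal decoding). It also assumes the two words y1 and y2 occur with equal probability. The names `POSTERIORS` and `WORD_PROBS` are illustrative only. The sketch does not reproduce the bounds or the diamond-shaped region in the figure.

```python
import math

# Posterior probabilities p(x | y) from the inset:
# rows are code words (y1, y2), columns are stimuli (x1, x2).
POSTERIORS = [
    [0.75, 0.25],  # y1
    [0.25, 0.75],  # y2
]

# Assumption (not stated in the caption): both words are equally likely.
WORD_PROBS = [0.5, 0.5]


def shannon_entropy(p):
    """Shannon entropy in bits (Renyi order alpha = 1)."""
    return -sum(q * math.log2(q) for q in p if q > 0)


# Shannon equivocation H(X|Y): entropy of the posterior, averaged over words.
equivocation_shannon = sum(w * shannon_entropy(p) for w, p in zip(WORD_PROBS, POSTERIORS))

# Bayes-optimal decoding picks the most probable stimulus for each word.
p_correct = sum(w * max(p) for w, p in zip(WORD_PROBS, POSTERIORS))
bayes_error = 1.0 - p_correct

# Conditional min-entropy (alpha = infinity), in the -log2 of average
# max-posterior form.
equivocation_min = -math.log2(p_correct)

print(f"H(X|Y), Shannon  = {equivocation_shannon:.4f} bits")  # 0.8113
print(f"H(X|Y), min      = {equivocation_min:.4f} bits")      # 0.4150
print(f"Bayes error      = {bayes_error:.2f}")                # 0.25
```

Under the equal-word-probability assumption, both words have the same posterior shape. As a result, every quantity equals its value for a single word. Changing `WORD_PROBS` leaves these particular numbers unchanged for this symmetric code.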