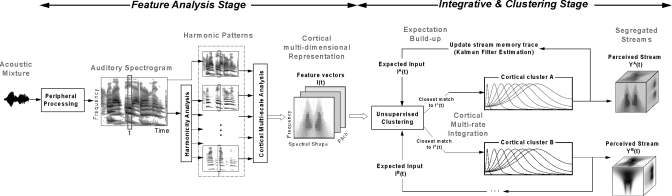

Figure 1.

Schematic of a model for auditory scene analysis. The computational model consists of two stages: a feature analysis stage, which maps the acoustic waveform into a multidimensional cortical representation, and an integrative and clustering stage, which segregates the cortical patterns into corresponding streams. The gray captions within each stage emphasize the principal outputs of the different modules. In the feature analysis stage, each module adds a dimension to the representation, which evolves from a 1D acoustic waveform (time) to a 2D auditory spectrogram (time-frequency) to a 3D harmonicity mapping (time-frequency-pitch frequency) to a 4D multiscale cortical representation (time-frequency-pitch frequency-spectral shape). The integrative and clustering stage begins with a clustering module, which determines the stream that best matches the incoming feature vectors I(t). These vectors are then integrated by multirate cortical dynamics, which recursively update an estimate of the state of streams A and B via a Kalman-filter-based process. The cortical clusters use their current states to predict the expected inputs at time t+1: I_A(t+1) and I_B(t+1). The perceived streams are always available online as they evolve and take their final stable form.
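The predict, match, and update loop of the integrative and clustering stage can be illustrated with a minimal sketch. This is not the model's actual implementation: it assumes a single-rate random-walk Kalman filter with independent dimensions in place of the multirate cortical dynamics, a squared-distance match rule, and toy 2D feature vectors. The names `StreamEstimator`, `assign_and_update`, `process_var`, and `obs_var` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class StreamEstimator:
    """Hypothetical per-stream estimator: random-walk Kalman filter, diagonal covariance."""
    state: List[float]          # current estimate of the stream's feature vector
    var: List[float]            # per-dimension uncertainty of that estimate
    process_var: float = 0.01   # assumed drift of the stream between time steps
    obs_var: float = 0.1        # assumed noise on the incoming feature vectors

    def predict(self) -> List[float]:
        # Under random-walk dynamics the expected input at t+1 is the current state.
        return list(self.state)

    def update(self, obs: List[float]) -> None:
        # Standard scalar Kalman update, applied to each dimension independently.
        for i, z in enumerate(obs):
            prior_var = self.var[i] + self.process_var
            gain = prior_var / (prior_var + self.obs_var)
            self.state[i] += gain * (z - self.state[i])
            self.var[i] = (1.0 - gain) * prior_var


def sq_dist(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def assign_and_update(streams: Dict[str, StreamEstimator], obs: List[float]) -> str:
    # Each stream predicts its expected input; the closest prediction wins.
    predictions = {name: s.predict() for name, s in streams.items()}
    best = min(predictions, key=lambda name: sq_dist(predictions[name], obs))
    # Only the winning stream integrates the new feature vector.
    streams[best].update(obs)
    return best


# Toy example: two streams with well-separated initial states.
streams = {
    "A": StreamEstimator(state=[0.0, 0.0], var=[1.0, 1.0]),
    "B": StreamEstimator(state=[5.0, 5.0], var=[1.0, 1.0]),
}
inputs = [[0.2, -0.1], [4.8, 5.1], [0.1, 0.3], [5.2, 4.9]]
labels = [assign_and_update(streams, x) for x in inputs]
print(labels)
```

Because each stream's state is updated as soon as an input is assigned to it, the current estimate of every stream is available at each time step, which mirrors the online availability of the perceived streams described in the caption.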