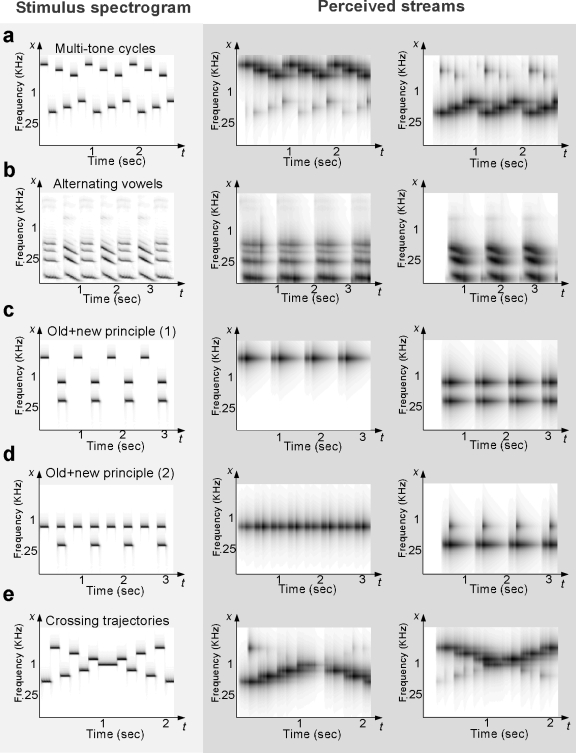

Figure 7.

Model simulations of “classic” auditory scene analysis demonstrations. The left column of panels shows the acoustic stimuli fed to the model. The middle and right columns depict the results of the stream segregation process, defined as the time-frequency marginals obtained by integrating the time-frequency activity across the entire neural population (scale and rate filters) representing each cluster. (a) Multitone cycles: The stimulus alternates between a high sequence of 2500, 2000, and 1600 Hz notes and a low sequence of 350, 430, and 550 Hz notes (Bregman and Ahad, 1990). The frequency separation between the two sequences induces a perceptual split into two streams (middle and right panels). (b) Alternating vowels: Two natural /e/ and /ə/ vowels are presented in an alternating sequence at a rate of roughly 2 Hz. The vowels are produced by a male speaker with an average pitch of 110 Hz. Timbre differences (that is, different spectral shapes) cause the vowel outputs to segregate into separate streams. (c) Old+new principle (1): An alternating sequence of a high A note (1800 Hz) and a BC complex (650 and 300 Hz) is presented to the model. The tone complex BC is strongly bound together by common onset cues, and hence segregates from the A sequence, which activates a separate frequency region. (d) Old+new principle (2): The same design as in simulation (c) is tested again with note A now at 650 Hz. Because tones A and B activate the same frequency channel, they are now grouped into one perceptual stream, separate from stream C (gray panels), following the continuity principle. (e) Crossing trajectories: A rising sequence (from 400 to 1600 Hz in seven equal log-frequency steps) is interleaved with a falling sequence of the same note values in reverse order (Bregman and Ahad, 1990).
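
As an illustration only, the following minimal sketch shows how the note frequencies of the crossing-trajectories stimulus in (e) and the time-frequency marginal described above could be computed. It is not the model's implementation. The function names, the array layout (time, frequency, scale, rate), the reading of "seven equal log-frequency steps" as eight notes, and the choice to start the interleaved sequence with the rising note are all assumptions made for this example. The integration over the neural population is approximated by a plain sum.

```python
import numpy as np


def log_spaced_notes(f_start, f_end, n_steps):
    """Frequencies from f_start to f_end in n_steps equal log-frequency steps.

    Assumption: n_steps steps yield n_steps + 1 notes.
    """
    return np.geomspace(f_start, f_end, n_steps + 1)


def interleave(seq_a, seq_b):
    """Alternate the notes of two equal-length sequences: a0, b0, a1, b1, ..."""
    out = np.empty(len(seq_a) + len(seq_b))
    out[0::2] = seq_a
    out[1::2] = seq_b
    return out


def time_frequency_marginal(activity):
    """Collapse the scale and rate axes of a 4-D activity array.

    Assumption: activity has shape (time, frequency, scale, rate), and the
    integration across filters is approximated by a discrete sum.
    """
    return activity.sum(axis=(2, 3))


# Rising sequence from 400 to 1600 Hz, and the same notes in reverse.
rising = log_spaced_notes(400.0, 1600.0, 7)
falling = rising[::-1]

# Note order of the interleaved stimulus (hypothetical starting note).
crossing = interleave(rising, falling)
```

With a cluster's activity stored in such an array, `time_frequency_marginal(activity)` returns a (time, frequency) image comparable in form to the middle and right panels.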