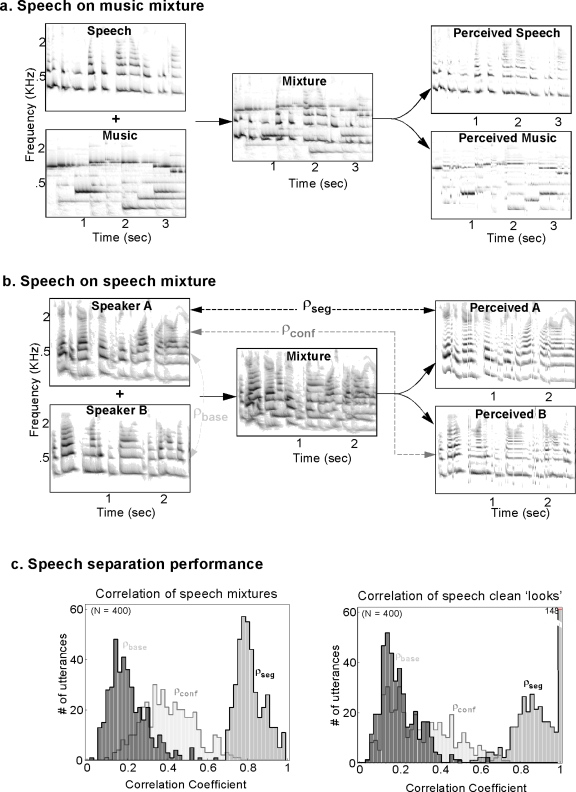

Figure 8.

Model performance with real speech mixtures. (a) Speech-on-music mixtures: Left panels depict spectrograms of a male utterance and a piano melody. The mixture of these two waveforms is shown in the middle. The model segregates the two sources into two output streams that resemble the original clean spectrograms (derived as time-frequency marginals similar to Fig. 7). (b) Speech-on-speech mixtures: Male and female speech are mixed and fed into the model, which segregates them into two streams. To evaluate performance, correlation coefficients (ρ) are computed as indicators of the match between the original and recovered spectrograms: ρseg measures the similarity between the original and streamed speech of the same speaker; ρbase measures the (baseline) similarity between the two original speakers; and ρconf measures the confusion between an original speaker and the other, competing speaker. (c) Speech segregation performance is evaluated by the distributions of the three correlation coefficients. Left panel illustrates that the mean ρseg=0.81 is well above the means ρconf=0.4 and ρbase=0.2, indicating that the segregated streams match the originals reasonably well, but that some interference remains. Right panel illustrates results from the model bypassing the harmonic analysis stage (see text for details). The improved separation between the distributions demonstrates the remarkable effectiveness of the integrative and clustering stage of the model when harmonic interference is completely removed (distribution means ρseg=0.9, ρconf=0.3, and ρbase=0.2).
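
The three correlation measures described in panel (b) can be illustrated with a minimal sketch. The caption does not specify how ρ is computed, so the code below assumes a Pearson correlation taken over all time-frequency bins of two equal-sized spectrograms, assumes that ρconf compares an original speaker with the recovered stream of the other speaker, and assumes that the two per-speaker values are averaged. The function names `spectrogram_correlation` and `segregation_scores` are hypothetical and are not taken from the paper.

```python
import numpy as np


def spectrogram_correlation(a, b):
    """Pearson correlation between two spectrograms of identical shape.

    Assumption: the correlation is taken over all time-frequency bins;
    the caption does not state the exact definition.
    """
    a = np.asarray(a, dtype=float).ravel()
    b = np.asarray(b, dtype=float).ravel()
    if a.shape != b.shape:
        raise ValueError("spectrograms must have the same shape")
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    if denom == 0.0:
        # A constant spectrogram has no defined correlation; return 0 by convention.
        return 0.0
    return float((a * b).sum() / denom)


def segregation_scores(orig_1, orig_2, stream_1, stream_2):
    """Compute rho_seg, rho_conf, and rho_base for one two-speaker mixture.

    Assumption: stream_1 is the stream assigned to speaker 1 and stream_2
    to speaker 2, and per-speaker values are averaged.
    """
    rho_seg = 0.5 * (
        spectrogram_correlation(orig_1, stream_1)
        + spectrogram_correlation(orig_2, stream_2)
    )
    rho_conf = 0.5 * (
        spectrogram_correlation(orig_1, stream_2)
        + spectrogram_correlation(orig_2, stream_1)
    )
    rho_base = spectrogram_correlation(orig_1, orig_2)
    return {"rho_seg": rho_seg, "rho_conf": rho_conf, "rho_base": rho_base}
```

Under these assumptions, a perfect segregation (each stream identical to its original) gives ρseg=1 and ρconf=ρbase, which matches the role of ρbase as the floor against which confusions are judged. Collecting the three values over many mixtures would yield distributions of the kind summarized in panel (c).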