Abstract

Although there is widespread agreement that the hippocampus is critical for explicit episodic memory retrieval, it is controversial whether this region can also support indirect expressions of relational memory when explicit retrieval fails. Here, using functional magnetic resonance imaging (fMRI) with concurrent indirect, eye-movement-based memory measures, we obtained evidence that hippocampal activity predicted expressions of relational memory in subsequent patterns of viewing, even when explicit, conscious retrieval failed. Additionally, activity in the lateral prefrontal cortex and functional connectivity between the hippocampus and prefrontal cortex were greater for correct than for incorrect trials. Together, these results suggest that hippocampal activity can support the expression of relational memory even when explicit retrieval fails, and that recruitment of a broader cortical network may be required to support explicit associative recognition.

Considerable evidence indicates that the hippocampus and adjacent medial temporal lobe (MTL) cortical structures support long-term declarative memory (Cohen & Squire, 1980; Squire, Stark, & Clark, 2004). Several theories implicate these structures specifically in conscious retrieval of past events and experiences (e.g., Moscovitch, 1995; Tulving & Schacter, 1990), with particular import placed on the role of the hippocampus in conscious recollection (Aggleton & Brown, 1999; Yonelinas, 2002). An alternative view points to a critical role for the hippocampus in the encoding and retrieval of memories for arbitrary relationships among items that co-occur in the context of some scene or event (Eichenbaum, Otto, & Cohen, 1994). In general, the relational memory theory is compatible with other accounts of MTL function, as conscious recollection likely depends on the ability to encode, and subsequently retrieve, arbitrary inter-item or item-context relationships (Davachi, 2006; Eichenbaum, Yonelinas, & Ranganath, 2007). However, one area where these theories diverge concerns the role of the hippocampus in the expression of relational memory, even in the absence of awareness. Whereas some theories propose that relationally-bound memory representations, supported by the hippocampus, can be expressed even when explicit reports fail (Eichenbaum, et al., 1994; Eichenbaum, 1999), others emphasize the tight link between hippocampal function and conscious retrieval of past events (Aggleton & Brown, 1999; Moscovitch, 1995; Squire, et al., 2004; Tulving & Schacter, 1990; Yonelinas, 2002).

Findings from recent experiments suggest that relational memory may be evident in patterns of eye movements even when conscious recollection fails. In these experiments, participants study realistic scenes and are subsequently tested with scenes that are repeated exactly as they were studied and scenes that have been systematically manipulated. Participants typically fixate disproportionately on regions of scenes that have been manipulated, suggesting that memory for the original item-location relationships has modulated viewing patterns (e.g., Hayhoe, Bensinger, & Ballard, 1998; Henderson & Hollingworth, 2003; Ryan, Althoff, Whitlow, & Cohen, 2000; Smith, Hopkins, & Squire, 2006). Critically, these eye movement-based relational memory effects have been documented even when participants fail to explicitly detect scene changes (e.g., Hayhoe, et al., 1998; Henderson & Hollingworth, 2003; Ryan, et al., 2000), suggesting that eye movement measures can be used to address questions about hippocampal involvement in relational memory retrieval even when overt behavioral reports are incorrect.

Other paradigms have been used to demonstrate that memory can rapidly influence eye movement behavior (Hannula, Ryan, Tranel, & Cohen, 2007; Holm, Eriksson, & Andersson, 2008; Ryan, Hannula, & Cohen, 2007), and that eye-movement-based memory effects can occur well in advance of explicit recognition (Hannula, et al., 2007; Holm, et al., 2008). For example, in one study (Hannula, et al., 2007), eye movements were monitored during an associative recognition test in which a previously studied scene was presented (“scene cue”), after which three previously studied faces were superimposed on that scene (“test display”). It was hypothesized that the scene cue would elicit expectancies about the face with which it had been paired during the study trials; consistent with this prediction, eye movements were drawn disproportionately to the associated face just 500-750ms after presentation of the test display. The rapid onset of this effect is notable considering that the position of the associated face could not be predicted, and that 500-750ms allows time for at most two or three fixations. Furthermore, disproportionate viewing occurred more than a second before overt recognition, which suggests that the effect of relational memory on eye movement behavior might have preceded conscious identification of the match.

The results described above suggest that eye movements can index relational memory retrieval prior to, and possibly even in the absence of, awareness. Accordingly, in the present experiment, we used fMRI with concurrent eye tracking to test whether activity in the hippocampus and/or other MTL regions would be correlated with eye-movement-based relational memory measures even when explicit recognition failed. Participants studied a series of face-scene pairs, and on each test trial they were presented with a studied scene, followed by a brief delay and then by three studied faces superimposed on that scene (see Figure 1). Critically, one of the faces had been paired with the scene during the study phase (henceforth the “matching face”), whereas the other two had been paired with different scenes. We expected that presentation of the scene cue would prompt retrieval of the associated face, resulting in increased viewing of that face when the test display was presented (Hannula, et al., 2007). The proportion of time spent viewing the matching face was used as an indirect, eye-movement-based measure of relational memory retrieval. We predicted that hippocampal activity following the scene cue would predict subsequent expression of relational memory in eye movement behavior, even when conscious recollection failed.

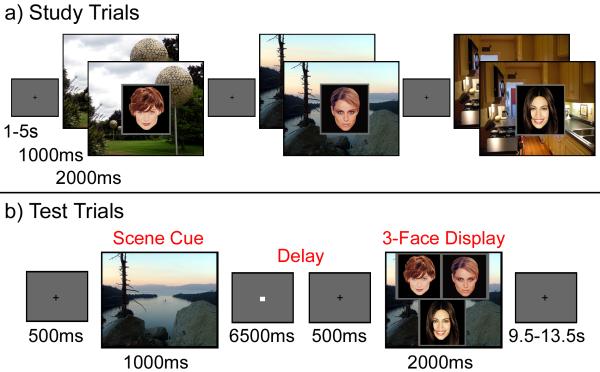

Figure 1. Experimental paradigm.

(a) Illustration of study trial events. (b) Illustration of a single test trial.

RESULTS

Behavioral Performance: Associative Recognition Accuracy

Participants made accurate responses on 62.29% (SD=11.10%) of the trials, made incorrect responses on 25.3% (SD=12.55%) of the trials, and responded “don’t know” on 12.4% (SD=10.23%) of the trials. Response times were faster for correct (2110.17ms; SD=630.80) than for incorrect (2671.73ms; SD=850.71) trials, t(13)=4.35, p<.001.

Memory for Face-Scene Relationships is Evident in Eye-Movement Behavior

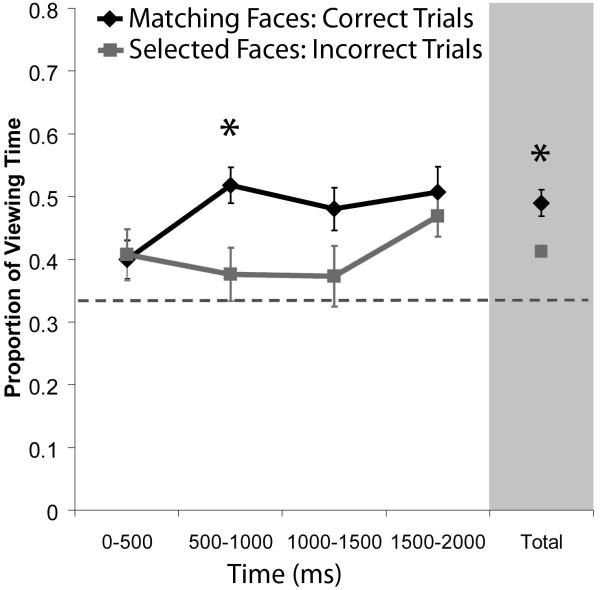

It was predicted that the scene cue would elicit relational memory retrieval, and that this would manifest as rapid, disproportionate viewing of the matching face. Such an effect could not be explained by simple item familiarity, because all three faces in each test display had been seen during the study trials. However, participants might plausibly spend more time fixating whichever face they selected, even when it was selected in error. To account for this possibility, we examined whether participants spent more time viewing correctly identified matching faces than faces selected on incorrect trials. A repeated-measures ANOVA examining viewing time as a function of face type (correctly identified match, incorrectly selected face) and time bin (0-500, 500-1000, 1000-1500, and 1500-2000ms) revealed that more time was spent viewing correctly identified matching faces (M=.48; SD=.08) than selected faces (M=.40; SD=.04), F(1,13)=10.88, p<.01. Consistent with previous results (Hannula, et al., 2007), disproportionate viewing of matching faces emerged 500-1000ms after the 3-face test display was presented (t(13)=3.90, Bonferroni-corrected p<.01; see Figure 2). These results confirm the rapid influence of relational memory on eye movement behavior, over and above any simple effect of response intention or execution.
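The time-bin comparison above can be sketched in Python. This is a minimal illustration, not the authors' analysis code: it assumes gaze samples that are already time-stamped relative to 3-face display onset and flagged as on or off the face of interest, computes the fraction of samples in each 500ms bin that fall on that face, and then runs a paired t-test per bin with a Bonferroni correction across the four bins. The function names (`binned_viewing_proportion`, `bonferroni_paired_tests`) are hypothetical, and defining the proportion over all samples in a bin is an assumption; the study's exact definition is given in its Supplemental Materials.

```python
import numpy as np
from scipy import stats

BIN_EDGES_MS = (0, 500, 1000, 1500, 2000)  # four 500-ms bins after 3-face display onset


def binned_viewing_proportion(sample_times_ms, on_face, edges=BIN_EDGES_MS):
    """Fraction of gaze samples in each time bin that land on the face of interest.

    sample_times_ms: sample timestamps relative to display onset (60 Hz ~ every 16.7 ms).
    on_face: booleans, True where the sample falls inside the face ROI.
    Empty bins yield NaN.
    """
    t = np.asarray(sample_times_ms, dtype=float)
    hit = np.asarray(on_face, dtype=bool)
    props = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (t >= lo) & (t < hi)
        props.append(hit[in_bin].mean() if in_bin.any() else np.nan)
    return np.array(props)


def bonferroni_paired_tests(match, selected):
    """Paired t-test per time bin, Bonferroni-corrected across bins.

    match, selected: arrays of shape (n_participants, n_bins) holding each
    participant's mean viewing proportion for matching faces (correct trials)
    and for selected faces (incorrect trials).
    Returns a list of (t, corrected_p) tuples, one per bin.
    """
    match = np.asarray(match, dtype=float)
    selected = np.asarray(selected, dtype=float)
    n_bins = match.shape[1]
    results = []
    for b in range(n_bins):
        t, p = stats.ttest_rel(match[:, b], selected[:, b])
        # Bonferroni: scale each raw p by the number of bins, capped at 1.
        results.append((float(t), min(float(p) * n_bins, 1.0)))
    return results
```

With four bins, a Bonferroni-corrected p<.01 corresponds to a raw p<.0025 in the bin in question.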

Figure 2. Relational memory rapidly influences eye movement behavior.

Mean proportion of viewing time allocated to the matching face (correct trials) and to selected faces (incorrect trials). Viewing time measures are plotted in successive 500ms time bins starting with the onset of the 3-face test display. More time was spent viewing correctly identified matching faces than faces that were selected on incorrect trials just 500-1000ms after the 3-face display was presented. The proportion of total viewing time allocated to each face collapsed across the entire 2s test trial is also illustrated. Standard error bars are plotted around the means; the dashed line represents chance viewing.

MTL Activity during the Scene Cue Predicts Disproportionate Viewing of Matching Faces

Initial fMRI analyses examined the relationship between MTL activity and eye movement behavior by contrasting trials on which participants spent a disproportionate amount of time viewing the matching face (“DPM” trials) with trials on which they spent a disproportionate amount of time viewing one of the non-matching faces (“DPNM” trials). The criterion for disproportionate viewing in this analysis was that the proportion of time spent viewing one face had to exceed the proportion of time spent viewing the remaining two faces by at least 10 percent (see Supplemental Materials for details). We reasoned that, on DPM trials, participants had successfully retrieved information about the previously studied face-scene relationship that was sufficient to influence subsequent eye movement behavior, whereas this did not occur on DPNM trials (Supplemental Figure 2 illustrates the time-course of these viewing effects). Importantly, response times did not differ significantly between DPM (2296.66ms, SD=693.96) and DPNM (2583.81ms, SD=825.43) trials, t(13)=1.50, p=.16.
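The disproportionate-viewing criterion can be expressed as a small classification function. This sketch assumes one reading of the criterion, namely that the most-viewed face must exceed each of the other two faces by at least 10 percentage points of viewing time; the exact rule is in the Supplemental Materials, and `classify_trial` is a hypothetical name. Trials on which no face meets the criterion are left unclassified (returned as `None`).

```python
from typing import Optional, Sequence


def classify_trial(face_props: Sequence[float], match_index: int,
                   margin: float = 0.10) -> Optional[str]:
    """Label a test trial 'DPM', 'DPNM', or None (no face viewed disproportionately).

    face_props: proportion of viewing time for each of the three faces.
    match_index: which of the three faces is the matching face.
    margin: assumed to mean 10 percentage points over EACH other face.
    """
    if len(face_props) != 3:
        raise ValueError("expected viewing proportions for exactly three faces")
    top = max(range(3), key=lambda i: face_props[i])
    others = [face_props[i] for i in range(3) if i != top]
    # Small tolerance so values such as 0.3 - 0.2 are not rejected by float rounding.
    if all(face_props[top] - p >= margin - 1e-9 for p in others):
        return "DPM" if top == match_index else "DPNM"
    return None
```

Under a different reading (for example, exceeding the mean or the sum of the other two faces), only the comparison inside `all(...)` would change.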

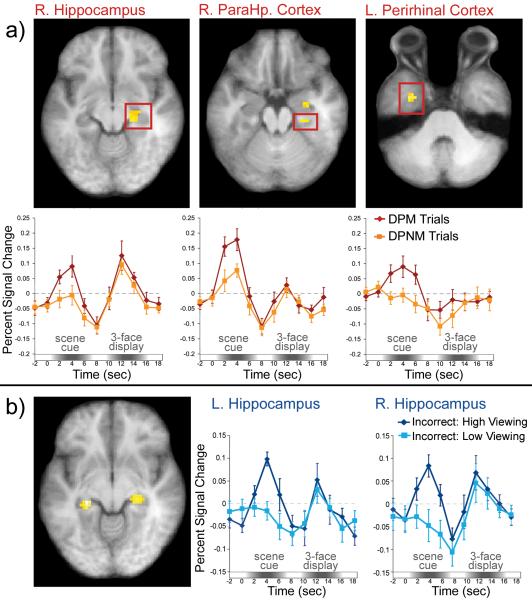

Based on the idea that the hippocampus and adjacent MTL cortical structures are critical for relational memory retrieval, we predicted that activity in these regions during the scene cue would be greater for DPM than for DPNM trials. Consistent with this prediction, BOLD signal was greater for DPM than for DPNM trials in two regions of the right hippocampus (anterior local maximum at x = 30, y = -12, z = -24; t(13)=4.06; posterior local maximum at x = 24, y = -27, z = -9; t(13)=3.94), the right parahippocampal cortex (local maximum at x = 30, y = -27, z = -18; t(13)=3.46), and bilaterally in anterior regions of the parahippocampal gyrus, which likely correspond to the perirhinal cortex (Insausti, Juottonen, Soininen, et al., 1998; left local maximum at x = -33, y = -9, z = -36; t(13)=4.21; right local maximum at x = 33, y = -18, z = -30; t(13)=5.31). Representative trial-averaged time courses are presented in Figure 3a.

Figure 3. Medial temporal lobe (MTL) activity predicts eye movement-based expressions of relational memory, even when explicit recognition has failed.

(a) Examples of MTL regions that showed increased BOLD signal during the scene cue for trials in which participants viewed the matching face disproportionately (DPM trials) vs. trials in which they viewed one of the non-matching faces disproportionately (DPNM trials). Trial-averaged time courses extracted from each ROI illustrate differences in BOLD signal between DPM and DPNM trials during presentation of the scene cue. (b) BOLD signal was greater in both the left and the right hippocampus for incorrect high viewing trials than for incorrect low viewing trials.

Because response accuracy was greater for DPM trials (M=83.30%, SD=3.56%) than for DPNM trials (M=35.20%, SD=3.80%), it could be argued that correlations between MTL activity and eye movements simply reflected explicit relational memory retrieval. Accordingly, we performed follow-up fMRI analyses to test more specifically whether MTL activity might index eye-movement-based relational memory effects even on trials for which overt recognition failed. In these analyses, we focused on trials on which participants failed to identify the matching face. A median split, based on the proportion of total viewing time directed to the matching face, was used to separately bin trials associated with relatively high or low viewing of that face (Supplemental Figure 3 illustrates the time-course of these viewing effects). A mapwise analysis contrasting activity during the scene cue between incorrect high- and incorrect low-viewing trials revealed suprathreshold voxels bilaterally in the hippocampus (left local maximum: x = -24, y = -30, z = 6; t(13)=5.39; right local maximum: x = 27, y = -27, z = -6; t(13)=4.14; see Figure 3b). This result implicates the hippocampus in retrieval of information about previously studied face-scene relationships that is sufficient to influence eye movement behavior even when explicit recognition has failed.
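The median split can be sketched as follows, applied separately to each participant's incorrect trials. Two details are assumptions not stated in the text: trials falling exactly at the median are left out of both bins (one of several reasonable choices), and "don't know" responses are assumed to have been removed before the split. `median_split_incorrect` is a hypothetical name.

```python
import numpy as np


def median_split_incorrect(match_props, correct):
    """Split one participant's incorrect trials by viewing of the matching face.

    match_props: proportion of viewing time on the matching face, one value per trial.
    correct: True where the participant chose the matching face.
    Returns (high, low): indices of incorrect trials above / below the median.
    Trials exactly at the median go into neither bin (an assumption).
    """
    props = np.asarray(match_props, dtype=float)
    is_correct = np.asarray(correct, dtype=bool)
    incorrect_idx = np.flatnonzero(~is_correct)
    if incorrect_idx.size == 0:
        empty = np.array([], dtype=int)
        return empty, empty
    med = np.median(props[incorrect_idx])
    high = incorrect_idx[props[incorrect_idx] > med]
    low = incorrect_idx[props[incorrect_idx] < med]
    return high, low
```

Because the split is computed within each participant, bin sizes can be small when a participant makes few errors, which is the concern addressed by the reliability analyses described in the Methods.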

Perirhinal and Prefrontal Activity during the Scene Cue Predicts Accuracy

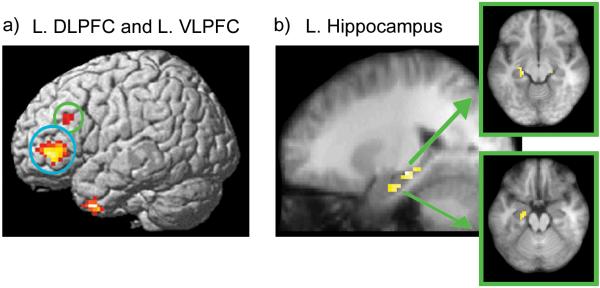

The next fMRI analysis examined MTL activity during the scene cue as a function of accuracy, irrespective of eye movement behavior. Activity during the scene cue was greater for correct than for incorrect trials in a region of left perirhinal cortex, with an activation peak close to the one observed for the disproportionate viewing contrast (local maximum at x = -21, y = 0, z = -36; t(13)=5.65). Surprisingly, there were no suprathreshold activity differences in the hippocampus or the parahippocampal cortex during any part of the test trial. Outside of the MTL, however, several cortical regions (see Supplemental Table 1) showed increased activity during correct, as compared with incorrect, trials, including left dorsolateral prefrontal cortex (DLPFC: local maximum at x = -48, y = 27, z = 30; t(13)=5.36) and left ventrolateral prefrontal cortex (VLPFC: local maximum at x = -48, y = 42, z = 0; t(13)=8.38; see Figure 4a). Results from several studies suggest that these prefrontal regions may implement control processes that support explicit memory attributions (e.g., Dobbins & Han, 2006; Ranganath, Johnson, & D’Esposito, 2000; for a review see Fletcher & Henson, 2001).

Figure 4. Lateral prefrontal activity and functional connectivity with the hippocampus predict accurate relational memory decisions.

(a) Regions that showed greater BOLD signal during the scene cue for correct trials than for incorrect trials are rendered on a template brain. Lateral prefrontal areas identified in this contrast are circled (L. DLPFC in green; L. VLPFC in blue). (b) Representative regions in the left hippocampus that exhibited greater connectivity with the left DLPFC seed region on correct than on incorrect trials during presentation of the 3-face test display.

In order to determine whether PFC activity was also correlated with relational memory as expressed indirectly in eye movement behavior, parameter estimates for DPM and DPNM trials were extracted from each prefrontal ROI. Following presentation of the scene cue, activity in both regions was greater for DPM than for DPNM trials (Left DLPFC: t(13)=2.70, p<.05; Left VLPFC: t(13)=2.34, p<.05); local maxima identified in the direct contrast of DPM vs. DPNM trials are summarized in Supplemental Table 2. As indicated earlier, however, eye movements were strongly associated with behavioral response accuracy, so this result does not necessarily indicate whether activity in these ROIs was predictive of eye movement behavior even when recognition failed. To test this possibility, parameter estimates were extracted from the prefrontal ROIs for incorrect trials on which viewing of the match was high vs. low. Unlike what was observed in the hippocampus, activity in these ROIs did not differentiate between incorrect high and low viewing trials (all t’s≤1.87, all p’s>.05); local maxima identified in the direct contrast of incorrect-high vs. incorrect-low viewing trials are summarized in Supplemental Table 3.

Functional Connectivity between Hippocampus and PFC is Increased during Accurate Associative Recognition

The results described above are consistent with the possibility that the hippocampus supports recovery of relational memory, and that this information may be communicated to prefrontal regions in order to guide overt decisions. If this view is correct, one might expect increased functional connectivity between prefrontal regions and the MTL on correct, as compared to incorrect, trials. To test this prediction, we ran functional connectivity analyses using the prefrontal ROIs identified in the accuracy contrast as seed regions. Trial-specific estimates of activity during each trial phase were averaged across voxels within each seed region, and the resulting series of estimates was correlated with the corresponding trial-specific estimates from every other voxel in the brain, separately for correct and incorrect trials (Rissman, Gazzaley, & D’Esposito, 2004; see Supplemental Materials for details). We then identified MTL voxels that showed greater correlations with the prefrontal seeds on correct than on incorrect trials. There were no statistically reliable changes in connectivity between lateral prefrontal regions and MTL structures during the scene cue or the delay period. During presentation of the 3-face test display, however, functional connectivity between the left DLPFC seed region and several hippocampal regions (left anterior hippocampus: x = -21, y = -18, z = -18; t(13)=4.01; left posterior hippocampus: x = -21, y = -24, z = -9; t(13)=4.78; right anterior hippocampus: x = 24, y = -21, z = -15; t(13)=3.53) was increased on correct, as compared to incorrect, trials (see Figure 4b). Functional connectivity was also increased between the left VLPFC seed and regions in the left hippocampus (x = -21, y = -18, z = -12; t(13)=3.58), left parahippocampal cortex (x = -18, y = -24, z = -21; t(13)=5.90), and left perirhinal cortex (x = -18, y = -6, z = -33; t(13)=4.36) during presentation of the 3-face test display for correct, versus incorrect, trials.
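The beta-series approach cited here (Rissman, Gazzaley, & D’Esposito, 2004) can be sketched for a single participant as follows. The sketch assumes that trial-specific parameter estimates for one trial phase are already available, that the seed series is the mean across seed-ROI voxels, and that correlations are Fisher z-transformed before the correct-minus-incorrect difference is taken; these details, and the function names, are illustrative assumptions rather than the study's documented pipeline. The per-participant difference maps would then, presumably, be tested against zero at the group level.

```python
import numpy as np


def _fisher_z_correlations(seed, voxels):
    """Pearson r between a seed series and each voxel column, Fisher z-transformed."""
    s = (seed - seed.mean()) / seed.std()
    v = (voxels - voxels.mean(axis=0)) / voxels.std(axis=0)
    r = (s[:, None] * v).mean(axis=0)      # mean product of z-scores = Pearson r
    r = np.clip(r, -0.999999, 0.999999)    # keep arctanh finite
    return np.arctanh(r)


def beta_series_connectivity(seed_betas, voxel_betas, is_correct):
    """Correct-minus-incorrect difference in seed-to-voxel connectivity for one participant.

    seed_betas: (n_trials,) trial-wise estimates averaged over seed-ROI voxels.
    voxel_betas: (n_trials, n_voxels) trial-wise estimates for the target voxels.
    is_correct: (n_trials,) booleans marking correct trials.
    """
    seed = np.asarray(seed_betas, dtype=float)
    voxels = np.asarray(voxel_betas, dtype=float)
    mask = np.asarray(is_correct, dtype=bool)
    z_correct = _fisher_z_correlations(seed[mask], voxels[mask])
    z_incorrect = _fisher_z_correlations(seed[~mask], voxels[~mask])
    return z_correct - z_incorrect
```

Correlating across trials, rather than across time points, is what lets the same data yield separate connectivity estimates for correct and incorrect trials.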

DISCUSSION

The aim of the current investigation was to determine whether the hippocampus and adjacent MTL structures support the expression of relational memory in eye movement behavior, even when behavioral responses are incorrect. Such an outcome would be significant because most theories emphasize the role of MTL structures in conscious retrieval of past events (Aggleton & Brown, 1999; Moscovitch, 1995; Squire, et al., 2004; Tulving & Schacter, 1990; Yonelinas, 2002). Results showed that activity in the hippocampus during presentation of the scene cue predicted subsequent viewing of the associated face during the 3-face test display, even when participants failed to explicitly identify the match. In contrast, activity in PFC regions was sensitive to subsequent response accuracy, but did not predict viewing of matching faces on incorrect trials. Finally, functional connectivity between lateral PFC and hippocampus was increased during presentation of the 3-face test display on correct, as compared to incorrect, trials. Together, these results suggest that hippocampal activity may support the expression of relational memory, and that recruitment of a broader network of regions may be required to use this information to guide overt behavior.

Previous evidence taken to support hippocampal contributions to memory without awareness (Chun & Phelps, 1999; Greene, Gross, Elsinger, & Rao, 2007; Ryan, et al., 2000) has been challenged by recent research (Manns & Squire, 2001; Smith, et al., 2006; Preston & Gabrieli, 2008). For example, the failure of amnesic patients to show implicit response facilitation to repeated displays in the contextual cueing task (Chun & Phelps, 1999) has since been attributed to extensive, rather than hippocampally-limited, MTL lesions (Manns & Squire, 2001), and results from a recent fMRI experiment (Preston & Gabrieli, 2008) showed that hippocampal activation during performance of the contextual cueing task was tied to explicit recognition of repeated displays. At first blush, these results may seem to challenge the idea that the hippocampus can support expressions of relational memory without awareness. However, it has been argued that contextual cueing may depend on configural representations supported by extrahippocampal regions such as the perirhinal cortex, rather than the kind of relational memory representations thought to depend on the hippocampus (Preston & Gabrieli, 2008).

The results reported here suggest that the hippocampus can support expressions of relational memory even when behavioral responses are incorrect. These results are especially compelling when considered alongside previous findings that amnesic patients fail to look disproportionately at relational changes in previously studied scenes, whereas college-age participants do so even when they are unaware of the manipulation (Ryan, et al., 2000). Our results also complement previous fMRI research showing increased hippocampal activity during subliminal presentation of face-occupation pairs (e.g., Degonda, Mondadori, Bosshardt, et al., 2005) and during implicit learning (Greene, Gross, Elsinger, & Rao, 2006; Schendan, Searl, Melrose, & Stern, 2003).

Considered together, these results are consistent with the two-stage model of recollection recently proposed by Moscovitch (2008). According to this model, the initial activation of hippocampal representations (“ecphory”) can guide behavior in an obligatory manner, even before the retrieved information is consciously apprehended. Thereafter, the individual may become aware of ecphoric output and consciously use it to guide volitional behavior. The model predicts that hippocampal activity should be correlated with recollection under most circumstances (Eichenbaum, et al., 2007), but also that the hippocampus can support expressions of memory even when the second, conscious stage of processing is disrupted.

Although our results indicate that explicit recollection is not a necessary condition for hippocampal recruitment, they do not contradict the idea that hippocampal activity is typically correlated with recollection. Hippocampal activity was not robustly correlated with overt response accuracy in the current experiment, but there are several possible explanations for this null result (see Supplemental Materials for details). Furthermore, although hippocampal activation has been correlated with recollection in many studies (e.g., Diana, Yonelinas, & Ranganath, in press), null results in this area are not uncommon (see Henson, 2005 for review). In general, further work is needed to examine the relationship among hippocampal activity, eye-movement-based measures of relational memory, and explicit recognition accuracy. A full factorial analysis would be needed to address this question, but because viewing of the match was correlated with accuracy, it was not feasible to examine accuracy effects for trials matched on viewing time in the current investigation. Accordingly, an important question for future research is whether hippocampal activity would be greater for correct trials with high viewing of the match than for incorrect trials with high viewing of the match.

As indicated above, activity in the left lateral PFC during processing of the scene cue, and functional connectivity between this region and the hippocampus during presentation of the 3-face test display, were correlated with accurate associative recognition. Previous research implicates left lateral prefrontal regions in retrieval of source information or contextual recollection that may support accurate responses (see Fletcher & Henson, 2001), and recent work (Dobbins & Han, 2006) suggests that left DLPFC in particular may be important for evaluating recovered content with respect to a particular behavioral goal. The present results suggest that the hippocampus may support retrieval of relationally-bound information, but that regions in the prefrontal cortex may also be recruited to support the use of this information in guiding explicit associative memory decisions (Duarte, Ranganath, & Knight, 2005).

The practical implications of the results reported here are potentially far-reaching because they suggest that eye movements provide a powerful approach to investigating relational memory and hippocampal function. Eye tracking may therefore be a valuable tool in translational research, as it is often difficult to assess relational memory overtly in cognitively impaired clinical populations (who may not be able to perform complex metacognitive judgments) or in monkeys and rodents (who cannot provide subjective reports, so memory retrieval must be inferred). Along similar lines, recent work (Richmond & Nelson, 2009) has demonstrated that this methodological approach benefits memory studies conducted with infants, who cannot yet report the contents of what has been successfully retrieved from memory. Finally, eye tracking could be used to obtain information about past events from participants who are unaware that they possess that information or who are attempting to withhold it. In other words, there may be circumstances in which eye movements provide a more veridical and robust account of past events or experiences than behavioral reports alone.

METHODS

Participants

Participants were 18 right-handed individuals (8 women) from the UC Davis community who were paid in exchange for participation. Four participants were excluded from the reported analyses; one because behavioral performance was at chance and the remaining three because eye position could not be reliably monitored.

Procedure

After informed consent was obtained and instructions were provided, each participant practiced the face-scene association task (see below). Scanning commenced once the experimenter was satisfied that the participant understood the task. The scanning session consisted of 4 study blocks, each followed immediately by a corresponding test block. Eye position was monitored throughout the entire scanning session, and the eye tracker was calibrated using a 3×3 spatial array prior to the initiation of each experimental block (example stimuli are illustrated in Figure 1 and more detailed information about stimuli and counterbalancing can be found in the Supplemental Materials).

Each study block consisted of 54 study trials, in which a unique scene was presented for 1s, after which a single face was superimposed on top of that scene for 2s. To elicit reasonably high levels of accuracy, participants were instructed to judge whether the person depicted looked like he or she belonged in the place depicted in the scene. A variable-duration inter-trial interval (ITI; range 1-5s) separated successive trials, and a white fixation cross was presented centrally during the final 500ms of the ITI to warn participants that the next trial was about to begin (see Figure 1). Participants were told that they should orient their gaze to this fixation cross in preparation for the next trial, but that they could move their eyes freely once the scene was presented. The total duration of each study block (i.e., scanning run) was 336s, including a 12s unfilled interval at the beginning of each block.

Each test trial (18 per block) began with the presentation of a scene that had been viewed in the preceding study block (“scene cue”). The scene remained on the screen for 1s and was followed by a 7s delay. Participants were instructed to use the scene as a cue to retrieve the associated face before the 3-face test display was presented. A white fixation cross, presented in the center of the screen during the final 500ms of the delay period, encouraged participants to orient their gaze toward the center of the screen in anticipation of the 3-face test display, which remained on the screen for 2s. When the test display appeared, participants indicated, via button press, which face (left, right, or bottom) had been paired with that scene during the study block. Participants could also respond “don’t know” if they were unsure about the identity of the match. Speed was emphasized, but not at the expense of accuracy. A variable-duration ITI (range 10-14s) separated successive trials, and a centrally located white fixation cross, presented during the final 500ms of the ITI, warned participants that the next trial was about to begin (see Figure 1). The total duration of each test block (i.e., scanning run) was 408s, including a 12s unfilled interval at the beginning of each block.
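As a consistency check on the block timings, assume that the only time in each run outside the trials themselves is the 12s unfilled lead-in. Under that assumption, the stated run durations imply mean ITIs at the midpoints of the stated ITI ranges and, with the 2000ms repetition time reported under Image Acquisition, runs of 168 and 204 volumes. These are derived values, not parameters reported by the authors:

```latex
\begin{aligned}
\text{Study run: } & 12 + 54\,(1 + 2 + \overline{\mathrm{ITI}}) = 336 \;\Rightarrow\; \overline{\mathrm{ITI}} = \tfrac{336 - 12}{54} - 3 = 3\ \mathrm{s}, \qquad \tfrac{336}{2} = 168\ \text{volumes} \\
\text{Test run: } & 12 + 18\,(1 + 7 + 2 + \overline{\mathrm{ITI}}) = 408 \;\Rightarrow\; \overline{\mathrm{ITI}} = \tfrac{408 - 12}{18} - 10 = 12\ \mathrm{s}, \qquad \tfrac{408}{2} = 204\ \text{volumes}
\end{aligned}
```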

Eye Tracking Acquisition and Analysis

Eye position was monitored during fMRI scanning at a rate of 60 Hz using an MRI-compatible Applied Science Laboratories (ASL) 504 long-range optics eye tracker. Eye-tracking analyses focused on eye movements that occurred during the 2s following 3-face display onset. Fixations made during this period were assigned to particular regions of interest (ROIs) within each 3-face test display (i.e., left face, right face, bottom face, background scene), and the proportion of total viewing time allocated to each ROI was calculated (see Supplemental Materials for details).
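Assigning fixations to ROIs and converting them into proportions of viewing time might look like the sketch below. It assumes that fixations have already been parsed from the 60 Hz samples (one sample roughly every 16.7ms) into (x, y, duration) triples in screen coordinates, that the face ROIs are axis-aligned rectangles, and that fixations falling off the display are excluded from the total. `Rect` and `viewing_proportions` are hypothetical names, and the ROI geometry used when testing such a function would be invented for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in screen pixels (hypothetical ROI geometry)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


def viewing_proportions(
    fixations: Iterable[Tuple[float, float, float]],
    face_rois: Dict[str, Rect],
    display: Rect,
) -> Dict[str, float]:
    """Proportion of total on-display viewing time for each face ROI and the background.

    fixations: (x, y, duration_ms) for each fixation during the 2-s test display.
    """
    totals = {name: 0.0 for name in face_rois}
    totals["background"] = 0.0
    for x, y, duration in fixations:
        if not display.contains(x, y):
            continue  # off-display fixations are excluded from the total
        for name, roi in face_rois.items():
            if roi.contains(x, y):
                totals[name] += duration
                break
        else:
            # Inside the display but on none of the faces: the background scene.
            totals["background"] += duration
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {name: 0.0 for name in totals}
    return {name: t / grand_total for name, t in totals.items()}
```

Whether off-display samples count toward the denominator changes the absolute proportions, so that choice would need to match the study's Supplemental Materials.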

Image Acquisition and Pre-processing

MRI data were acquired with a 3T Siemens Trio scanner located at the UC Davis Imaging Research Center. Functional data were obtained with a gradient echoplanar imaging (EPI) sequence (repetition time, 2000ms; echo time, 25ms; field of view, 220mm; 64 × 64 matrix); each volume consisted of 34 axial slices with a slice thickness of 3.4mm, resulting in a voxel size of 3.4375 × 3.4375 × 3.4mm. Coplanar and high-resolution T1-weighted anatomical images were acquired from each participant, and a simple motor-response task (Aguirre, Zarahn, & D’Esposito, 1997) was performed to estimate subject-specific hemodynamic response functions (HRFs).
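The in-plane voxel size follows from dividing the field of view by the matrix size, which also indicates that the field of view is specified in millimetres. The slab-coverage line additionally assumes no gap between slices, which the text does not state:

```latex
\begin{aligned}
\text{in-plane voxel} &= \frac{\text{FOV}}{\text{matrix}} = \frac{220\ \text{mm}}{64} = 3.4375\ \text{mm} \\
\text{slab coverage} &= 34 \times 3.4\ \text{mm} = 115.6\ \text{mm} \quad (\text{assuming no inter-slice gap})
\end{aligned}
```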

Preprocessing was performed using Statistical Parametric Mapping (SPM5) software. EPI data were slice-timing corrected using sinc interpolation to account for timing differences in acquisition of adjacent slices, realigned using a 6-parameter, rigid-body transformation, spatially normalized to the Montreal Neurological Institute (MNI) EPI template, resliced into 3mm isotropic voxels, and spatially smoothed with an isotropic 8mm full-width at half-maximum Gaussian filter.
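The 8mm FWHM smoothing step corresponds to a Gaussian with standard deviation σ = FWHM / (2√(2 ln 2)) ≈ 3.40mm, or about 1.13 voxels at the 3mm resliced resolution. The sketch below applies that kernel with SciPy's `gaussian_filter`. It illustrates the FWHM-to-σ conversion only and is not SPM5's smoothing routine, which may differ in details such as edge handling and kernel truncation; `smooth_volume` is a hypothetical name.

```python
import numpy as np
from scipy.ndimage import gaussian_filter

# Ratio between a Gaussian's standard deviation and its full width at half maximum.
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def smooth_volume(volume, fwhm_mm=8.0, voxel_mm=3.0):
    """Isotropic Gaussian smoothing of a 3-D volume, kernel specified by FWHM in mm."""
    sigma_vox = fwhm_mm * FWHM_TO_SIGMA / voxel_mm  # about 1.13 voxels for 8 mm / 3 mm
    return gaussian_filter(np.asarray(volume, dtype=float), sigma=sigma_vox)
```

Smoothing a single-voxel impulse with this function spreads its value over neighbouring voxels while preserving the total, since the kernel is normalized to unit sum.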

fMRI Data Analysis

Event-related BOLD responses associated with each component of each test trial (i.e. scene cue, delay, and 3-face test display) were deconvolved using linear regression (Zarahn, Aguirre, & D’Esposito, 1997). Covariates of interest were created by convolving vectors of neural activity for each trial component with subject-specific HRFs derived from responses in the central sulcus for each participant during a visuomotor response task. Data from the visuomotor response task were unavailable or unreliable for four participants. For these individuals, covariates were constructed by convolving neural activity vectors with an average of empirically-derived HRFs from 18 participants.
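The covariate construction described above can be sketched as follows. The study convolved each trial component with subject-specific HRFs estimated from a motor task; because those are not available here, this sketch substitutes an SPM-style canonical double-gamma HRF, an assumption made only for illustration. It builds a boxcar for one trial component (for example, 1s scene cues or 7s delays) at 0.1s resolution, convolves it with the HRF, and samples the result once per 2s TR. `canonical_hrf` and `make_regressor` are hypothetical names.

```python
import numpy as np
from scipy.stats import gamma


def canonical_hrf(dt=0.1, length_s=32.0):
    """SPM-style double-gamma approximation (peak ~5 s, undershoot ~15 s), scaled to unit sum."""
    t = np.arange(0.0, length_s, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def make_regressor(onsets_s, durations_s, n_scans, tr=2.0, dt=0.1):
    """Covariate for one trial component.

    Builds a boxcar 'neural activity' vector at fine resolution dt, convolves it
    with the HRF, and samples the result at the start of each TR.
    """
    n_fine = int(round(n_scans * tr / dt))
    neural = np.zeros(n_fine)
    for onset, dur in zip(onsets_s, durations_s):
        i0 = max(int(round(onset / dt)), 0)
        i1 = min(int(round((onset + dur) / dt)), n_fine)
        neural[i0:i1] = 1.0
    fine = np.convolve(neural, canonical_hrf(dt))[:n_fine]
    step = int(round(tr / dt))
    return fine[::step]
```

Building separate regressors for the scene cue, delay, and 3-face display, one per trial condition, gives the six covariates of interest per classification scheme described in the next paragraph.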

Separate covariates were constructed to model responses for each test trial component (scene cue, delay, 3-face display) as a function of viewing time (i.e. DPM vs. DPNM trials), viewing time for incorrect trials only (i.e., incorrect trials with high viewing of the match vs. incorrect trials with low viewing of the match), and behavioral response accuracy (i.e. correct vs. incorrect identification of the matching face). Each classification scheme resulted in 6 distinct covariates of interest that modeled activity during each task phase either as a function of eye-movement-based memory measures (scene cue - disproportionate match, scene cue - disproportionate non-match, etc.), eye-movement-based memory measures for incorrect trials (scene cue - incorrect high viewing, scene cue - incorrect low viewing, etc.), or response accuracy (scene cue - correct, scene cue - incorrect, etc.). Additional covariates of no interest modeled spikes in the time series, global signal changes that could not be attributed to variables in the design matrix (Desjardins, Kiehl, & Liddle, 2001), scan-specific baseline shifts, and an intercept. Regression analyses were then performed on single-subject data using the general linear model with filters applied to remove frequencies above .25 Hz and below .005 Hz. These analyses yielded a set of parameter estimates for each participant, the magnitude of which can be interpreted as an estimate of the BOLD response amplitude associated with a particular trial component (e.g. responses during the scene cue on DPM trials).
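At its core, obtaining the parameter estimates described above is an ordinary least-squares fit of the design matrix to each voxel's time series, followed by weighted sums (contrasts) of the resulting estimates. The sketch below shows only that core step: it omits the band-pass filtering and the nuisance covariates listed above, and `fit_glm` and `contrast` are hypothetical names rather than SPM functions.

```python
import numpy as np


def fit_glm(Y, X):
    """Ordinary least-squares parameter estimates.

    Y: (n_scans,) or (n_scans, n_voxels) BOLD time series.
    X: (n_scans, n_regressors) design matrix (covariates of interest,
       nuisance covariates, and an intercept column).
    Returns an (n_regressors, n_voxels) array of parameter estimates.
    """
    Y = np.asarray(Y, dtype=float)
    if Y.ndim == 1:
        Y = Y[:, None]
    beta, _, _, _ = np.linalg.lstsq(np.asarray(X, dtype=float), Y, rcond=None)
    return beta


def contrast(beta, weights):
    """Weighted sum of parameter estimates, e.g. [1, -1, 0] for DPM minus DPNM."""
    return np.asarray(weights, dtype=float) @ beta
```

For example, with columns ordered as (scene cue, DPM; scene cue, DPNM; intercept), the weights `[1, -1, 0]` give the per-voxel DPM minus DPNM difference that would be carried forward to the group analysis.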

After single-subject analyses were completed, images for the contrasts of interest were created for each participant. Contrast images were entered into a second-level, one-sample t-test, in which the mean value across participants at every voxel was tested against zero. Significant regions of activation in the MTL were identified using an uncorrected voxel-wise threshold of p<.005 and a minimum cluster size of 8 contiguous voxels. A Monte Carlo procedure (as implemented in the AlphaSim program in the AFNI software package) indicated that this combination of voxel-wise threshold and cluster extent constrained the familywise error rate within the MTL (i.e., hippocampus and parahippocampal, perirhinal, and entorhinal cortices) to p<.05. Because we predicted that relational memory retrieval would be triggered by presentation of the scene cue, analyses reported here focus on this task period.
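The group-level procedure can be illustrated with a short sketch: a voxel-wise one-sample t-test on stacked contrast images, cluster-extent thresholding, and an AlphaSim-style Monte Carlo estimate of the familywise error rate. This is an illustration under stated assumptions, not AFNI's AlphaSim: the function names are hypothetical, the test is one-tailed for positive contrasts (`alternative="greater"`, which requires SciPy 1.6 or later), clusters are defined by face connectivity (the default structuring element of `scipy.ndimage.label`), and the null volumes are Gaussian noise smoothed to an assumed FWHM in voxel units rather than to smoothness estimated from the actual data.

```python
import numpy as np
from scipy import ndimage, stats


def group_t_map(contrast_maps):
    """Voxel-wise one-sample t-test of first-level contrast values against zero.
    contrast_maps: array of shape (n_subjects, x, y, z).
    Returns (t_map, p_map); p is one-tailed for positive effects (an assumption)."""
    res = stats.ttest_1samp(contrast_maps, 0.0, axis=0, alternative="greater")
    return res.statistic, res.pvalue


def cluster_threshold(p_map, p_thresh=0.005, min_voxels=8, mask=None):
    """Boolean map of voxels with p < p_thresh that lie in a face-connected
    cluster of at least min_voxels voxels (optionally restricted to a mask)."""
    supra = p_map < p_thresh
    if mask is not None:
        supra &= mask
    labels, _ = ndimage.label(supra)
    sizes = np.bincount(labels.ravel())[1:]  # voxel count of clusters 1..n
    keep = np.flatnonzero(sizes >= min_voxels) + 1
    return np.isin(labels, keep)


def monte_carlo_fwe(shape, min_voxels, p_thresh=0.005, fwhm_vox=2.0,
                    n_iter=1000, mask=None, seed=0):
    """AlphaSim-style estimate: fraction of smoothed Gaussian noise volumes
    whose largest suprathreshold cluster has at least min_voxels voxels."""
    rng = np.random.default_rng(seed)
    sigma = fwhm_vox / np.sqrt(8 * np.log(2))  # convert FWHM to Gaussian sigma
    z_crit = stats.norm.isf(p_thresh)           # one-tailed voxel-wise cutoff
    hits = 0
    for _ in range(n_iter):
        noise = ndimage.gaussian_filter(rng.standard_normal(shape), sigma)
        noise /= noise.std()                    # re-standardize after smoothing
        supra = noise > z_crit
        if mask is not None:
            supra &= mask
        labels, _ = ndimage.label(supra)
        sizes = np.bincount(labels.ravel())[1:]
        if sizes.size and sizes.max() >= min_voxels:
            hits += 1
    return hits / n_iter
```

In practice one would evaluate `monte_carlo_fwe` for a range of `min_voxels` values and choose the smallest extent for which the estimated rate falls below .05, passing the MTL mask so that the simulation is restricted to the same search volume as the analysis.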

Detailed information about the number of trials per bin for each participant in every contrast is provided in Supplemental Table 4. Because bin sizes for the incorrect-trial viewing time analyses were small for some participants, additional analyses were conducted to examine the reliability of incorrect high vs. low viewing time effects in the fMRI data when these participants were excluded. The results of these analyses were consistent with those reported in the manuscript (see Supplemental Table 5).

Additional analyses were performed to identify regions outside of the MTL for which activity during presentation of the scene cue was correlated with eye-movement behavior and response accuracy. These regions were identified using an uncorrected threshold of p<.001 and a minimum cluster size of 8 contiguous voxels. Coordinates of local maxima from these contrasts during presentation of the scene cue are summarized in Supplemental Tables 1-3.

Supplementary Material

Acknowledgements

This work was supported by National Institute of Mental Health Fellowship F32MH075513 (D.E.H.) and National Institutes of Health Grant MH68721 (C.R.). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health or the National Institutes of Health. Many thanks to Charlie Nuwer and Evan Katsuranis for technical guidance and assistance with data collection and to Neal Cohen for sharing the materials that were used in this experiment.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

REFERENCES

- Aggleton JP, Brown MW. Episodic memory, amnesia, and the hippocampal-anterior thalamic axis. Behav Brain Sci. 1999;22:425–444.

- Aguirre GK, Zarahn E, D’Esposito M. The variability of human BOLD hemodynamic responses. Neuroimage. 1998;8:360–369. doi: 10.1006/nimg.1998.0369.

- Chun MM, Phelps EA. Memory deficits for implicit contextual information in amnesic subjects with hippocampal damage. Nat Neurosci. 1999;2:844–847. doi: 10.1038/12222.

- Cohen NJ, Squire LR. Preserved learning and retention of pattern-analyzing skill in amnesia: dissociation of knowing how and knowing that. Science. 1980;210:207–210. doi: 10.1126/science.7414331.

- Davachi L. Item, context, and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Degonda N, Mondadori CR, Bosshardt S, Schmidt CF, Boesiger P, Nitsch RM, Hock C, Henke K. Implicit associative learning engages the hippocampus and interacts with explicit associative learning. Neuron. 2005;46:505–520. doi: 10.1016/j.neuron.2005.02.030.

- Desjardins AE, Kiehl KA, Liddle PF. Removal of confounding effects of global signal in functional MRI analyses. Neuroimage. 2001;13:751–758. doi: 10.1006/nimg.2000.0719.

- Diana RA, Yonelinas AP, Ranganath C. Medial temporal lobe activity during source retrieval reflects information type, not memory strength. J Cogn Neurosci. In press. doi: 10.1162/jocn.2009.21335.

- Dobbins IG, Han S. Cue- versus probe-dependent prefrontal cortex activity during contextual remembering. J Cogn Neurosci. 2006;18:1439–1452. doi: 10.1162/jocn.2006.18.9.1439.

- Duarte A, Ranganath C, Knight RT. Effects of unilateral prefrontal lesions on familiarity, recollection, and source memory. J Neurosci. 2005;25:8333–8337. doi: 10.1523/JNEUROSCI.1392-05.2005.

- Eichenbaum H. Conscious awareness, memory and the hippocampus. Nat Neurosci. 1999;2:775–776. doi: 10.1038/12137.

- Eichenbaum H, Otto T, Cohen NJ. Two functional components of the hippocampal memory system. Behav Brain Sci. 1994;17:449–517.

- Eichenbaum H, Yonelinas AP, Ranganath C. The medial temporal lobe and recognition memory. Annu Rev Neurosci. 2007;30:123–152. doi: 10.1146/annurev.neuro.30.051606.094328.

- Fletcher PC, Henson RN. Frontal lobes and human memory: insights from functional neuroimaging. Brain. 2001;124:849–881. doi: 10.1093/brain/124.5.849.

- Greene AJ, Gross WL, Elsinger CL, Rao SM. An fMRI analysis of the human hippocampus: inference, context, and awareness. J Cogn Neurosci. 2006;18:1156–1173. doi: 10.1162/jocn.2006.18.7.1156.

- Greene AJ, Gross WL, Elsinger CL, Rao SM. Hippocampal differentiation without recognition: an fMRI analysis of the contextual cueing task. Learn Mem. 2007;14:548–553. doi: 10.1101/lm.609807.

- Hannula DE, Ryan JD, Tranel D, Cohen NJ. Rapid onset relational memory effects are evident in eye movement behavior, but not in hippocampal amnesia. J Cogn Neurosci. 2007;19:1690–1705. doi: 10.1162/jocn.2007.19.10.1690.

- Hayhoe M, Bensinger DG, Ballard DH. Task constraints in visual working memory. Vision Res. 1998;38:125–137. doi: 10.1016/s0042-6989(97)00116-8.

- Henderson JM, Hollingworth A. Eye movements and visual memory: Detecting changes to saccade targets in scenes. Percept Psychophys. 2003;65:58–71. doi: 10.3758/bf03194783.

- Henson RN. A mini-review of fMRI studies of human medial temporal lobe activity associated with recognition memory. Q J Exp Psychol B. 2005;58:340–360. doi: 10.1080/02724990444000113.

- Holm L, Eriksson J, Andersson L. Looking as if you know: Systematic object inspection precedes object recognition. J Vis. 2008;8:14.1–7. doi: 10.1167/8.4.14.

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen PV, Laakso MP, Pitkanen A. MR volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. AJNR Am J Neuroradiol. 1998;19:659–671.

- Manns JR, Squire LR. Perceptual learning, awareness, and the hippocampus. Hippocampus. 2001;11:776–782. doi: 10.1002/hipo.1093.

- Moscovitch M. Recovered consciousness: a hypothesis concerning modularity and episodic memory. J Clin Exp Neuropsychol. 1995;17:276–290. doi: 10.1080/01688639508405123.

- Moscovitch M. The hippocampus as a “stupid” domain-specific module: Implications for theories of recent and remote memory, and of imagination. Can J Exp Psychol. 2008;62:62–79. doi: 10.1037/1196-1961.62.1.62.

- Preston AR, Gabrieli JD. Dissociation between explicit memory and configural memory in the human medial temporal lobe. Cereb Cortex. 2008;18:2192–2207. doi: 10.1093/cercor/bhm245.

- Ranganath C, Johnson MK, D’Esposito M. Left anterior prefrontal activation increases with demands to recall specific perceptual information. J Neurosci. 2000;20:1–5. doi: 10.1523/JNEUROSCI.20-22-j0005.2000.

- Richmond J, Nelson CA. Relational memory during infancy: evidence from eye tracking. Dev Sci. 2009. Advance online publication. doi: 10.1111/j.1467-7687.2009.00795.x.

- Rissman J, Gazzaley A, D’Esposito M. Measuring functional connectivity during distinct stages of a cognitive task. Neuroimage. 2004;23:752–763. doi: 10.1016/j.neuroimage.2004.06.035.

- Ryan JD, Althoff RR, Whitlow S, Cohen NJ. Amnesia is a deficit in relational memory. Psychol Sci. 2000;11:454–461. doi: 10.1111/1467-9280.00288.

- Ryan JD, Hannula DE, Cohen NJ. The obligatory effects of memory on eye movements. Memory. 2007;15:508–525. doi: 10.1080/09658210701391022.

- Schendan HE, Searl MM, Melrose RJ, Stern CE. An fMRI study of the role of the medial temporal lobe in implicit and explicit sequence learning. Neuron. 2003;37:1013–1025. doi: 10.1016/s0896-6273(03)00123-5.

- Smith CN, Hopkins RO, Squire LR. Experience-dependent eye movements, awareness, and hippocampus-dependent memory. J Neurosci. 2006;26:11304–11312. doi: 10.1523/JNEUROSCI.3071-06.2006.

- Squire LR, Stark CE, Clark RE. The medial temporal lobe. Annu Rev Neurosci. 2004;27:279–306. doi: 10.1146/annurev.neuro.27.070203.144130.

- Tulving E, Schacter DL. Priming and human memory systems. Science. 1990;247:301–306. doi: 10.1126/science.2296719.

- Yonelinas AP. The nature of recollection and familiarity: A review of 30 years of research. J Mem Lang. 2002;46:441–517.

- Zarahn E, Aguirre GK, D’Esposito M. A trial-based experimental design for fMRI. Neuroimage. 1997;6:122–138. doi: 10.1006/nimg.1997.0279.
