Abstract

When faced with choices between two sources of reward, animals can rapidly adjust their rates of responding to each so that overall reinforcement increases. Herrnstein's ‘matching law’ provides a simple description of the equilibrium state of this choice allocation process: animals reallocate behavior so that relative rates of responding equal, or match, the relative rates of reinforcement obtained for each response. Herrnstein and colleagues proposed ‘melioration’ as a dynamical process for achieving this equilibrium, but left details of its operation unspecified. Here we examine a way of filling in the details that links the decision-making and operant-conditioning literatures and extends choice-proportion predictions into predictions about inter-response times. Our approach implements melioration in an adaptive version of the drift-diffusion model (DDM), which is widely used in decision-making research to account for response-time distributions. When the drift parameter of the DDM is 0 and its threshold parameters are inversely proportional to reward rates, its choice proportions dynamically track a state of exact matching. A DDM with fixed thresholds and drift that is determined by differences in reward rates can produce similar, but not identical, results. We examine choice probability and inter-response time predictions of these models, separately and in combination, and possible implications for brain organization provided by neural network implementations of them. Results suggest that melioration and matching may derive from synapses that estimate reward rates by a process of leaky integration, and that link together the input and output stages of a two-stage stimulus-response mechanism.

Keywords: drift-diffusion, decision making, melioration, matching, operant conditioning, instrumental conditioning, reward rate, reinforcement learning, neural network

1. Introduction

For much of the twentieth century, psychological research on choice and simple decision making was typically carried out within one of two separate traditions. One is the behaviorist tradition, emerging from the work of Thorndike and Pavlov and exemplified by operant conditioning experiments with animals (e.g., Ferster & Skinner, 1957), including the variable interval (VI) and variable ratio (VR) tasks that we examine in this article. The other tradition, while also focused quantitatively on simple behavior, can be categorized as cognitivist: its emphasis is on internal, physical processes that transduce stimuli into responses, and on behavioral techniques for making inferences about them. This style of research originated in the mid-1800s in the work of Donders, and is exemplified by choice-reaction time experiments with humans (e.g., Posner, 1978), among other approaches.

Today the boundaries between these traditions are less well defined. From one side, mechanistic models of internal processes have achieved growing acceptance from contemporary behaviorists (e.g., Staddon, 2001). From the other side, there is a growing appreciation for the role of reinforcement in human cognition (e.g., Bogacz, Brown, Moehlis, Holmes, & Cohen, 2006; Busemeyer & Townsend, 1993). Here we propose a theoretical step toward tightening the connection between these traditions. This step links models of choice based on the content of a perceptual stimulus (as in simple decision making experiments) with models of choice based on a history of reinforcement (as in operant conditioning experiments). It thereby provides a potential explanation of response time (RT) and inter-response time (IRT) data in operant conditioning, and the development of response biases in simple decision making. As we show, behavioral results in both the conditioning and decision making literatures are consistent with the predictions of the model we propose to link these traditions.

Specifically, we prove that a classic behaviorist model of dynamic choice reallocation — ‘melioration’ (Herrnstein, 1982; Herrnstein & Prelec, 1991; Herrnstein & Vaughan, 1980; Vaughan, 1981) — can be implemented by a classic cognitive model of two-alternative choice-reaction time — the drift-diffusion model (Ratcliff, 1978), hereafter referred to as the DDM — under natural assumptions about the way in which reinforcement affects the parameters of the DDM. Melioration predicts that at equilibrium, behavior satisfies the well-known ‘matching law’ (Herrnstein, 1961). This states that relative choice proportions equal, or match, the relative rates of the reinforcement actually obtained in an experiment:

| (1) |

Here Bi represents the rate at which responses of type i are emitted, and Ri represents the rate of reinforcement, or reward, earned from these responses (we will use the terms ‘reinforcement’ and ‘reward’ interchangeably).

The DDM and variants of it can in turn be implemented in neural networks (Bogacz et al., 2006; Gold & Shadlen, 2001; Smith & Ratcliff, 2004; Usher & McClelland, 2001), and we show that parameter-adaptation by reinforcement can be carried out by simple physical mechanisms — leaky integrators — in such networks. Furthermore, while it is relatively abstract compared to more biophysically detailed alternatives, our simple neural network model gains analytical tractability by formally approximating the DDM, while at the same time maintaining a reasonable, first-order approximation of neural population activity (as in the influential model of Wilson & Cowan, 1972). It therefore provides an additional, formal point of contact between psychological theories, on the one hand, and neuroscientific theories about the physical basis of choice and decision making, on the other.

In what follows, we show how melioration emerges as a consequence of placing an adaptive form of the DDM in a virtual ‘Skinner box’, or operant conditioning chamber, in order to perform a concurrent variable ratio (VR) or variable interval (VI) task. In these tasks, an animal faces two response mechanisms (typically lighted keys or levers). In both tasks, once a reward becomes available, a response is then required to obtain it, but ordinarily no ‘Go’ signal indicates this availability. In a VR task, rewards are made available for responses after a variable number of preceding responses; each response is therefore rewarded with a constant probability, regardless of the inter-response duration. To model a VR task mathematically, time can therefore be discretized into a sequence consisting of the moments at which responses occur. In a VI task, in contrast, rewards become available only after a time interval of varying duration has elapsed since the previous reward-collection, and this availability does not depend on the amount of any responding that may have occurred since that collection. Modeling VI tasks therefore requires a representation of time that is continuous rather than discrete. Finally, ‘concurrent’ tasks involve two or more response mechanisms with independent reward schedules. Each of these may be a VR or VI schedule, or one of a number of other schedule-types; the particular combination used is then identified as, for example, a VR-VR, VI-VI, or VR-VI schedule.

Having shown how an adaptive DDM can implement melioration, we then develop a neural implementation of this model that can be used to make predictions about firing rates and synaptic strengths in a model of brain circuits underlying choice and simple decision making.

In the Discussion, we address the relationship of this model to other neural models of melioration and matching, and we propose a possible mapping of the model onto the brain. We conclude by addressing the prospects for extending the current model to tasks involving more than two concurrent responses.

2. Results

2.1. Choice proportions of the adaptive DDM

Exact melioration and matching occur for one model in a family of adaptive choice models based on the DDM; for the other models in this family, close approximations to matching can be obtained.

The model family that we analyze uses the experience-based or feedback-driven learning approach of the adaptive DDM in Simen, Cohen, and Holmes (2006). This adaptive model was designed to learn to approximate optimal decision-making parameters (specifically, response thresholds) of the DDM in a two-alternative decision making context (discussed in Bogacz et al., 2006). In an operant conditioning context, and with a slight change to its method of threshold adaptation, the expected behavior of this model (Model 1) is equivalent to melioration, which leads to matching at equilibrium (i.e., a state in which choice proportions are essentially unchanging). Model 1 makes choices probabilistically, and as a function of the relative reward rate (Ri/(R1 + R2)) earned for each of the two alternatives (this quantity is sometimes referred to as ‘fractional income’, e.g., Sugrue, Corrado, & Newsome, 2004).

We also include in this family another model (Model 2) that adapts a different DDM parameter (drift). This model is discussed in Bogacz, McClure, Li, Cohen, and Montague (2007). Although it cannot achieve exact matching (Loewenstein & Seung, 2006), this model provides an account of another important function that is widely used in reinforcement learning (Sutton & Barto, 1998) to determine choice probabilities: the ‘softmax’ or logistic function of the difference in reward rates earned from the two alternatives. We address this model because behavioral evidence abounds for both types of choice function, and because the adaptive DDM may be a single mechanism that can account for both.

The rest of the model family consists of parameterized blends of these two extremes. These blended models adapt thresholds and drift simultaneously in response to reward rates (the proportional weighting of each of these parameters defines the model space spanning the range between Model 1 and Model 2).

We begin our discussion of choice proportions with Model 1, which implements melioration and achieves matching exactly via threshold adaptation. We then move on to the alternative that adapts drift, and finally to models that blend the two approaches.

2.1.1. Model 1: the threshold-adaptive, zero-drift diffusion model

In order to determine the effects of parameter adaptation on the behavior of Model 1 (or any other model in the adaptive DDM family), we make use of known, analytical expressions for its expected error proportions and decision times in the context of decision making tasks.

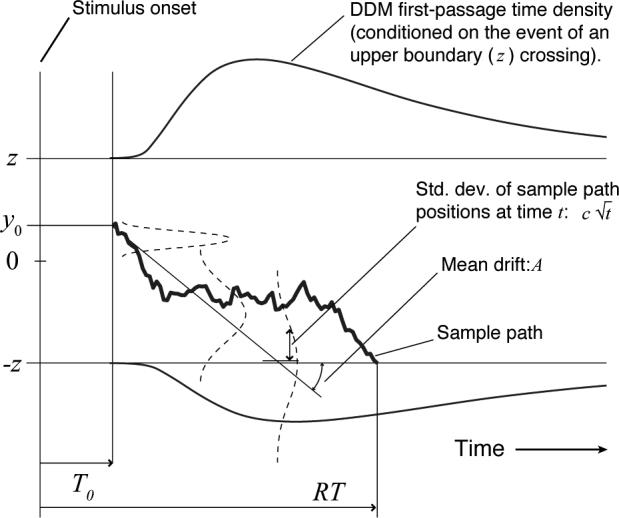

In a decision-making task, the response time of the DDM is determined by the time it takes after the onset of a stimulus for a drift-diffusion process to reach an upper or lower threshold (±z; see Fig. 1). A drift-diffusion process is a random walk with infinitesimally small time steps that can be defined formally (Gardiner, 2004) by the following stochastic differential equation (SDE):

| (2) |

See Fig. 1 for an interpretation of the drift parameter A and the noise parameter c. As the distance between thresholds and starting point increases, response time increases. At the same time, accuracy increases, because it is less likely that random fluctuations will push the diffusion process across the threshold corresponding to the wrong response to the current perceptual stimulus.

Figure 1.

Parameters, first-passage density and sample path for the extended drift-diffusion model (DDM). The leftmost point of the horizontal, time axis is the time at which stimulus onset occurs. In this example, the drift, which models the effect of a perceptual stimulus, is downward with rate A; y0 is the starting point of the diffusion process. The sample path is an individual random walk in continuous time; the distribution of an ensemble of such paths is shown by the dashed Gaussians that expand vertically as time progresses. Response time distributions are equivalent to the distributions of first-passage times shown as ex-Gaussian-shaped curves above the upper and below the lower threshold (T0 is depicted here as elapsing before the random walk begins, but this is only for simplicity — a component of T0 should follow the first-passage to encode motor latency.)

The expected proportion of errors (denoted 〈ER〉) and the expected decision time (denoted 〈DT〉) are described by the following analytic expressions (Busemeyer & Townsend, 1992; cf. Bogacz et al., 2006 and Gardiner, 2004):

| (3) |

| (4) |

Varying the drift (A), thresholds (±z) and starting point (y0) produces adaptive performance.

Model 1 is a variation on the model in Simen et al. (2006). The latter model achieves an approximately reward-maximizing speed-accuracy tradeoff (SAT) in a large class of simple decision making tasks. It does this by setting the absolute value of both thresholds equal to an affine function of the overall reward rate R earned from either response: z = zmax – w · R. Its basic operating principle is that as R increases, thresholds decrease, so that the diffusion process reaches a threshold more quickly (speed increases), but is also more likely to cross the wrong threshold (accuracy decreases).

The current model, Model 1, sets thresholds to be inversely proportional to reward rates. This inverse proportionality produces SAT-adjustment properties similar to those of the affine function used in Simen et al. (2006). Model 1 also generalizes the symmetric threshold-setting algorithm defined in that article to allow for independent adaptation of the two thresholds (we denote their values as θ1, corresponding to the upper threshold, +z, and θ2, corresponding to the lower threshold, −z) based on independent estimates of the reward rate earned for each response (denoted R1 and R2 respectively):

| (5) |

This asymmetric threshold adaptation is equivalent to adapting thresholds ±z symmetrically while simultaneously adapting the starting point y0; however, picking the convention that the starting point is always 0 makes notation more compact. Eqs. 3-4 can then be interpreted by substituting (θ1 + θ2)/2 for z, and (θ2 – θ1)/2 for y0. We refer to Model 1 as the threshold-adaptive DDM.

In order for an analysis of response times and choice probabilities based on the DDM to be exact, however, we cannot allow the threshold to change during the course of a single decision. A threshold that grows during decision making will produce different expected response times and probabilities that are difficult to derive analytically. In order to make exact analytical use of the DDM, the model updates the thresholds according to Eq. 5 only at the moment of each response:

| (6) |

Thereafter, they remain fixed until the next response. Simulations suggest that using Eq. 5 directly without this change-and-hold updating produces very similar results.

2.1.2. Reward rate estimation

In order to use reinforcement history to control its threshold parameters, the model must have a mechanism for estimating the rate of reward earned for each type of response (we will refer to the two response types in a two-alternative task — e.g., a left vs. a right lever press — as response 1 and response 2). The model computes the estimate Ri of reward earned for response i by the ‘leaky integrator’ system defined in Eq. 7:

| (7) |

The time-solution of Eq. 7, Ri(t), is obtained by convolving the impulse-response function of a low-pass, resistor-capacitor (RC) filter (a decaying exponential) with the input reward stream, ri(t) (Oppenheim & Willsky, 1996). If rewards are punctate and intermittent, then they can be represented by a reward stream ri(t) which is a sum of Diracdelta impulse functions (sometimes referred to as ‘stick functions’). Eq. 7 is a continuous-time generalization of the following difference equation, which defines Ri as an exponentially weighted moving average of the input ri(n) (where n indexes time steps of size Δt; n can also be used to index only the times at which responses are emitted):

| (8) |

This is a common approach to reward rate estimation in psychological models (e.g., Killeen, 1994). The extreme rapidity with which animals are able to adapt nearly optimally to changing reinforcement contingencies (e.g., Gallistel, Mark, King, & Latham, 2001), and near-optimal fitted values of τ in Sugrue et al. (2004), suggest that animals must also have a mechanism for optimizing τR or α as well (e.g., the multiple time scales model of Staddon & Higa, 1996).

We take a continuous-time approach (Eq. 7) in order to account for animal abilities in variable interval (VI) tasks (and for simplicity, we leave τR fixed). In these tasks, the reward rate in time (rather than the proportion of rewarded responses) must be known in order to adapt properly.1

2.1.2. Matching by the threshold-adaptive, zero-drift diffusion model

We now describe what happens when the threshold-adaptive diffusion model (Model 1) is applied to a typical task in the instrumental or operant conditioning tradition. One such task is a concurrent VI-VI task, in which there is usually not a signal to discriminate, and no ‘Go’ signal or cue to respond.2

A natural application of the DDM to this design involves setting the drift term to 0: no sensory evidence is available from the environment for which a response will produce reward. Instead, only reinforcement history is available to guide behavior. We refer to the DDM with drift identically 0 as a zero-drift diffusion model.

As we show in Appendix B, the choice probabilities are as follows for a zero-drift diffusion model with starting point equal to 0, and thresholds θ1 and θ2 of possibly differing absolute values:

| (9) |

Substituting ξ/Ri for θi gives the following:

| (10) |

When decision making is iterated repeatedly with a mean response rate b, the rate Bi of behavior i equals Pi · b. Eq. 10 is then equivalent to the matching law (Eq. 1) for two-response tasks.

If we ignore response times and simply examine choice sequences, we note that the adaptive diffusion model is equivalent to a biased coin-flipping procedure, or Bernoulli process, for selecting responses (at least whenever it is in a steady state in which relative response rates are constant for some time period). For that reason, the adaptive diffusion model predicts that the lengths of runs in which only one of the two responses is emitted will be distributed approximately as a geometric random variable — this is a feature of its behavior that is commonly used to distinguish coin-flipping models that account for matching from others that match by some other means. Geometrically distributed run lengths frequently occur in experiments in which a changeover delay (COD) or other penalty is given for switching from one response to the other (e.g., Corrado et al., 2005) whereas run lengths are non-geometric when CODs are absent (e.g., Lau & Glimcher, 2005). Indeed, without such penalties, matching itself is usually violated. This result suggests that, in addition to the account we give here of choice by reinforcement-biased coin-flipping, some theoretical account must eventually be given for an apparently prepotent tendency toward response-alternation that competes with the biasing effects of reinforcement. Such an account, however, is beyond the scope of our current analysis.

2.1.3. Melioration by the threshold-adaptive, zero-drift diffusion model

Melioration itself arises from Model 1 automatically. Loosely speaking, melioration (Herrnstein, 1982; Herrnstein & Prelec, 1991; Herrnstein & Vaughan, 1980; Vaughan, 1981) is any process whereby an increase in obtained reward for one response leads to a greater frequency of that response. (The formal definition of melioration and a proof based on Eq. 10 that Model 1 implements melioration are given in Appendix A.)

Because an increase in reward rate for one response brings its threshold closer to the DDM starting point, the model dynamically reallocates choice proportions so that the more rewarding response is selected with higher probability, and thus relatively more frequently, in such a way that exact matching occurs at equilibrium. Importantly, though, the model's overall response rate must also be known before anything can be said about the absolute frequency of each type of response.

2.1.4. Difficulties faced by Model 1

In fact, without some additional model component for controlling response rates, the zero-drift diffusion model with thresholds set by Eq. 5 and — critically — reward rates estimated by Eq. 7 produces a response-rate that inevitably collapses to 0 at some point. This occurs because at least one and possibly both of the two thresholds moves away from the starting point after every response (because one or both of the reward rate estimates must decrease at every moment). This slows responding, which in turn reduces the rate of reward in a VR or VI task, in a vicious circle that ultimately results in the complete cessation of responding. We discuss why this result is inevitable in section 2.2.

One way to resolve this problem is to enforce a constant rate of responding. This can be achieved by renormalizing both thresholds after each response so that their sum is always equal to a constant K (i.e., divide the current value for θi (call it ) by the sum of current values and multiply by the sum of old values (θ1 + θ2) = K to get the new value ; as a result, ).

Some form of renormalization is therefore promising as a solution to response-rate collapse. However, since inter-response times and response rates are variable features of behavior that we seek to explain by the use of the DDM, we can not assume constant response rates. Instead we use a different renormalization scheme, outlined in section 2.2.7, that is based on a second drift-diffusion process operating in parallel with the choice process. This parallel process effectively times intervals (Simen, 2008) and adaptively enforces a minimum overall response rate (B1 + B2).

It is worth noting that an even simpler solution exists which can account for RT data in many tasks, but which is unable to handle traditional VI schedules. Rather than basing thresholds on reward rates, this solution sets thresholds inversely proportional to the expected reward magnitude for each response, computed by Eq. 8, as for example in Bogacz et al. (2007) and Montague and Berns (2002). In this case, reward rates do not decrease toward 0 (and thresholds do not increase toward infinity) at every moment other than when a reward is received. Instead, a threshold only increases when its corresponding response earns less than what is expected, and complete cessation of responding never occurs except on an extinction schedule — that is, a schedule in which rewards are omitted on every response.

Nevertheless, peculiarities in the RT and IRT predictions of Model 1 (discussed in Section 2.2) and the need to consider VI schedules for generality lead us next to consider a second approach to adaptive behavior by the DDM.

2.1.5. Model 2: the drift-adaptive, fixed-threshold DDM

Adapting drift on the basis of reward rates is another way to achieve choice reallocation, and this approach has antecedents in psychology (Busemeyer & Townsend, 1993) and behavioral neuroscience (Yang et al., 2005). A drift-adaptation approach does not generally achieve exact matching, but it also does not suffer the same sort of response rate collapse as the threshold-adaptive diffusion model.

Bogacz et al. (2007) investigated drift adaptation rather than threshold adaptation in a drift-diffusion model of human performance in an economic game similar to a concurrent VR-VR experiment (Egelman, Person, & Montague, 1998; Montague & Berns, 2002). They noted that when drift is determined by the difference in expected value for each response (i.e., A in Eqs. 3-4 equals γ · (R1 – R2)), and when the input stimulus equally favors both responses, then choice probability is given by a sigmoid function of the difference in expected value (specifically, a logistic function equal to 1 – 〈ER〉, with 〈ER〉 defined by Eq. 3 with y0 = 0). They further note that this choice probability rule is identical to the ‘softmax’ function typically used for probabilistic action selection in reinforcement learning (Sutton & Barto, 1998). For this model, the choice proportion ratio is as follows:

| (11) |

Eq. 11 can approximate the strict matching law as long as R1/(R1 + R2) is not too close to 0 or 1, and the model's trial-by-trial performance is qualitatively similar to melioration as defined by Herrnstein and Vaughan (1980) (cf. Montague & Berns, 2002 and Soltani & Wang, 2006). Corrado et al. (2005) also find evidence for a better fit to monkey behavioral data using a sigmoid function of reward rate differences than was found in fits of a choice function based on the ratio of reward rates (as in Sugrue et al., 2004).

In order to get the close fits that are sometimes observed experimentally (Davison & McCarthy, 1988; Williams, 1988) between data and the predictions of the strict matching law over the total possible range of relative reward rate values (R1/(R1 + R2)) ranging from 0 to 1, a DDM-derived logistic choice function (as in Eq. 3) requires the right balance between expected reward difference (proportional to A), noise (c) and fixed threshold z. For any given data set for which strict matching appears to hold, a parameter set can be found so that a logistic function of reward rate differences fits the data fairly well. However, for a different data set with a different range of reward rates that also accords with strict matching (e.g., a condition in the same experiment that doubles or halves the reward magnitues for both responses), the same threshold and noise values cannot produce a good fit. When choice proportions are plotted as a function of relative reward rates (as in Fig. 3E), the same logistic function will overmatch3 if reward magnitudes for each response are boosted. The sigmoid in that case will become too steep at its inflection point to approximate the identity line predicted by matching. Thus, empirical results in the literature suggest that in order for sigmoid choice functions derived from the DDM to fit data generally, either thresholds or noise must be adapted as well as drift.

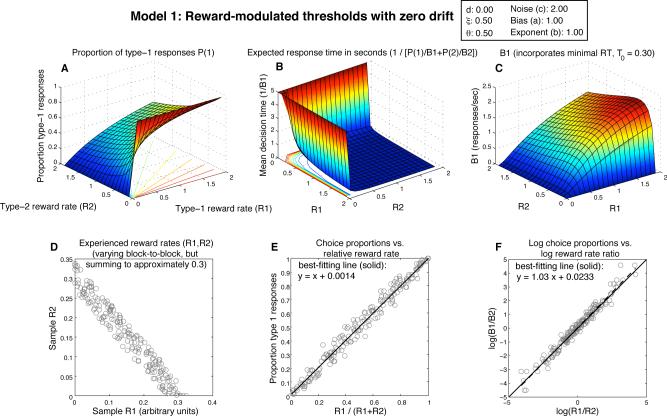

Figure 3.

Expected behavior of Model 1, the zero-drift diffusion model (drift=0) with threshold adaptation (θi = ξ/Ri). A) Surface shows expected proportion of 1-responses as a function jointly of reward rate for 1-responses (R1) and reward rate for 2-responses (R2); radial lines in the R1,R2 plane show contours of constant choice probability. B) Expected response time as a function of R1 and R2; this 3D plot is rotated relative to panels A and C to make the shape of the surface more easily discernible; since its height goes to infinity, it is also truncated to 5 sec. C) Expected 1-response rate (B1) as a function of R1,R2 (notice the collapse to 0 along both the R1 and R2 axes). D) Scatterplot of random R1,R2 pairs, representing blocks of 100 responses in which those reward rates were obtained. E) Scatterplot showing expected choice proportions plotted vs. relative reward rate in those blocks of responses; the best-fitting line (solid) is superimposed, and its equation is displayed. Matching predicts a slope of 1 and intercept of 0 (dashed line). F) Choice ratio B1/B2 plotted vs. the reward ratio R1/R2 on a log-log scale, which is frequently used for highlighting generalized matching behavior. Predicted behavior is plotted as a dashed line; best-fitting line is solid.

We have demonstrated that the threshold-adaptive, zero-drift diffusion model predicts the strict matching law.4 Furthermore, the drift-adaptive, fixed-threshold DDM predicts the sigmoid choice function for which some researchers have found evidence (e.g., Lau & Glimcher, 2005 and Corrado et al., 2005). On the basis of implausible IRT predictions of either model in isolation (discussed in the next section), we will argue that simultaneously adapting both drift and thresholds in response to changing reward rate estimates is the most sensible modeling approach.

We now turn to the other major feature of behavior that the DDM is used to explain in decision making research — response times. These predictions may be used to predict response rate and inter-response times in operant conditioning tasks.

2.2 Decision times and inter-response times

We have shown that the DDM can implement biased coinflipping as a temporally extended stochastic process. As was shown in Bogacz et al. (2006) and Usher and McClelland (2001), a simple stochastic neural network can in turn implement diffusion processes (see section 2.5). Thus, to the extent that neural networks stand as plausible models of brain circuits, the preceding results suggest progress in mapping the abstract coin-flipping stages of several models onto the brain (for example, the models of Corrado et al., 2005; Daw, O'Doherty, Dayan, Seymour, & Dolan, 2006; Lau & Glimcher, 2005; Montague & Berns, 2002).

However, the real strength of the DDM in decision-making research has been its ability to provide a principled account for the full shape of RT distributions in a variety of decision making experiments involving humans and non-human primates (Smith & Ratcliff, 2004). Since we propose to model performance in typical VR and VI conditioning tasks (tasks in which no signal to respond is given) by restarting the DDM from 0 after every response, the same first-passage time distributions of the DDM serve as IRT predictions without any modifications (cf. a similar approach in Blough, 2004). These IRT predictions must be addressed before an adaptive DDM can be considered a plausible behavioral model of operant conditioning data.

2.2.1. Slower responding for less rewarding responses

The adaptive DDM discussed in section 2.1 predicts slower responding when a response is chosen that has recently been less rewarding than the alternative (either because rewards for that response have been small when the response was made, or because that response has been made only infrequently). This is true regardless of whether drift or threshold or both of these are adapted. In the case of threshold adaptation alone (Model 1), the less rewarded response will have a threshold that is on average farther from the starting point than the threshold for the more rewarded response; a zero-drift diffusion process therefore takes more time to reach the more distant threshold. The same holds for a model in which thresholds are equidistant, but drift is nonzero (Model 2); in that case, drift toward one threshold will produce faster responses of that type than the alternative. These qualitative predictions are implied by Eq. 4 when reward-modulated thresholds and/or drift are substituted.

2.2.2. Absolute rates of responding

Beyond predicting relative response rates, the DDM predicts absolute IRTs and absolute rates of responding. In fact, the adaptive DDM with threshold modulation and zero drift (Model 1) predicts a variant of the absolute response-rate phenomenon known as ‘Herrnstein's hyperbola’ when we make the same assumptions as Herrnstein and colleagues. Herrnstein's hyperbola (De Villiers & Herrnstein, 1976) is a hyperbolic function that describes response rate as a function of earned reward rate (R1) in a variety of single-schedule tasks (those in which there is only a single response alternative):

| (12) |

Here Re is the ‘extraneous’ rate of reward earned from all other behaviors besides the behavior of interest (for example, Re may represent the reward the animal obtains from grooming behaviors in an experiment that focuses on lever-pressing rates as B1). The constant k represents the sum of all behaviors in which the subject engages during the experiment (both the experimental response and all other behaviors). This result derives from the matching law (Eq. 1) as long as Re is assumed to be constant (De Villiers & Herrnstein, 1976). In fact, however, the constant k assumption does not appear to be widely accepted by researchers in animal behavior, because of variations that appear to depend on satiety and other experimental factors (Davison & McCarthy, 1988; Williams, 1988). Furthermore, assuming a constant rate of reward Re earned from inherently rewarding behavior extraneous to the task seems implausible. Nevertheless, Eq. 12 can be successfully fit to data from a wide range of experiments, and when we make the same assumptions, the adaptive-threshold, zero-drift diffusion model (Model 1) produces a very similar equation for B1, with one interesting deviation.

In order to model single-schedule performance with the DDM, we assume that the upper threshold, θ1, corresponds to response 1, and that the lower threshold, which we now call θe, corresponds to choosing some other response (e.g., grooming), the total rate of reward for which is Re. The mean decision time of the zero-drift diffusion model is the following (see Eq. 34 in Appendix C):

| (13) |

T0 represents the assumption of an unavoidable sensory-motor latency that must be added to the decision time of Eq. 34 in order to give a response time; T0 may itself be assumed to be a random variable, typically modeled as uniformly distributed and with variance that is small relative to that of decision times (at least in the typical decision making experiment; cf. Ratcliff & Tuerlinckx, 2002).

It will also be useful to note the following relationship between the rate of behavior i and the rate of overall behavior, which is proved in Appendix D:

| (14) |

That is, the expected rate of the ith response is the probability of choosing the ith response, times the expected rate of responses of any kind.

Substituting Eq. 9 and Eq. 13 into Eq. 14 gives the following:

| (15) |

Except for the final term in the denominator, this is identical to Eq. 12. Furthermore, k in DeVilliers and Herrnstein's formula — which is intended to represent the rate of all behavior in total — corresponds in Eq. 15 to the inverse of the residual latency T0, and this is indeed the least upper bound on the rate of behavior that can be produced by the model (holding Re fixed and taking R1 to infinity). Thus the threshold-adaptive DDM (Model 1) provides nearly the same account for approximately hyperbolic single-schedule responding as the matching law (as long as R1 and Re are not too small, and under the problematic assumption of constant Re — we examine the consequences of abandoning this assumption in section 2.2.4).

2.2.3. Choice and IRT predictions combined

Now we are in a position to examine the combined choice proportion and IRT/response-rate predictions of the adaptive DDM, to see how they compare to the choice-proportion predictions of the strict and generalized matching laws, and to the response-rate predictions of the strict matching law in single-schedule tasks. Fig. 3 shows the expected proportion of 1-responses for the threshold-adaptive, zero-drift diffusion model in panel A, the expected decision time for either type of response (without the contribution of the residual latency T0) in panel B, and the expected rate of 1-responses, B1, in panel C. Taking slices through the surface in panel C by holding R2 fixed and letting R1 range from 0 to infinity produces the quasi-hyperbolic functions of R1 defined by Eq. 15. All surfaces are shown as functions defined for pairs of earned reward rates, R1 and R2 (R2 may be interpreted as extraneous reward (Re) in a single-schedule task, or as the reward rate for 2-responses in a concurrent task).

Panel D shows a uniform sampling of reward rate pairs that might be earned in many blocks of an experiment in which the overall rate of reward is kept roughly constant by the experimenter, but in which one response may be made more rewarding than the other (as in Corrado et al., 2005); each scatterplot point represents a single block. Each point in panels E and F shows the proportion of 1-responses in a block of 100 responses from the choice probability function in panel A. The two reward rates ‘experienced’ in each 100-response sample correspond to one of the 200 points in the R1,R2 plane plotted in panel D. Panel E plots 1-response proportion against the relative reward rate for 1-responses; points falling on the diagonal from (0,0) to (1,1) adhere to the strict matching law. Panel F plots the same data, but in terms of behavior ratios vs. reward ratios, on a log-log scale; points falling on any straight line in this plane adhere to the generalized matching law (Baum, 1974). Note that Herrnstein's hyperbola would produce a B1 surface in panel C that would look identical in shape to the surface in panel A — thus, it is the values of B1 corresponding to values of R2 near 0 that disrupt the equivalence of the threshold-adaptive DDM and the predictions of the matching law.

2.2.4. Response-rate collapse in Model 1

The response rates of Model 1 and Model 2 are determined by the decision time of the DDM. If we do not renormalize thresholds, then we can abandon the implausible assumption of constant response times made in section 2.1.4. We can also abandon the implausible assumption of constant Re, made in section 2.2.2, by modeling rewards for extraneous behavior as we would in a two-choice task: whenever the lower threshold θe is crossed, extraneous behavior is performed and Re either increases or decreases.

However, when we do this, Model 1 produces a catastrophic outcome: response rates on both alternatives ultimately diminish to 0. This occurs because as Ri approaches 0, θi approaches infinity (by Eq. 5). Eq. 13 then implies that RT also goes to infinity as long as does not approach 0 (i.e., as long as Rj has some finite maximum). In fact, both R1 and Re have finite maxima, because the residual latency T0 ensures that both B1 and Be are finite (and rewards are contingent upon behavior). Thus θ1 and θe are bounded below at values greater than 0. At the same time, reward rates for either behavior can be arbitrarily close to 0, so that θ1 and θe (and therefore RT) are unbounded above.

In VR-VR tasks, in fact, one response winds up being selected exclusively by Model 1, just as it would be by melioration per se. This leads to a reward rate of zero for that response, and therefore, by the argument just given, complete catatonia. Similar problems occur in VI-VI tasks even when both options have been chosen in the last few trials, because an unrewarded choice threshold accelerates when it increases, but decelerates when it decreases. This follows from our use of a reward-rate estimation process (Eq. 7) that changes continuously in time.

2.2.5. Reward magnitude-independent response rates in Model 2

The drift-adaptive, fixed-threshold model (Model 2) does not have this problem in the regime of exclusive choice; when drift strongly favors one response over the other, the favored response is made so much more rapidly than the less preferred that overall response time is finite (see Fig. 4B). Holding thresholds constant and adapting only drift instead produces the converse problem of much larger response times near a 1:1 ratio of the two response types (which is the ratio expected when drift is near 0). Furthermore, expected response rates are equal for all points on the line R1 = R2, even (0,0). Near the origin, though, the response rate of any plausible model should go to 0 or at least decrease, since no reward is being earned. Functionally, this is a less severe problem than the response-rate collapse produced by Model 1, since Eq. 13 shows that a zero-drift model always has a finite expected response time if thresholds are finite (note that the B1 surface is above 0 everywhere along the R1 axis in Fig. 4C). This response-rate pattern is nevertheless quite implausible, given the widespread finding that response rate increases as reward rate increases. Furthermore, the IRT near R1 ≈ R2 grows arbitrarily large as thresholds grow large (or noise grows small).

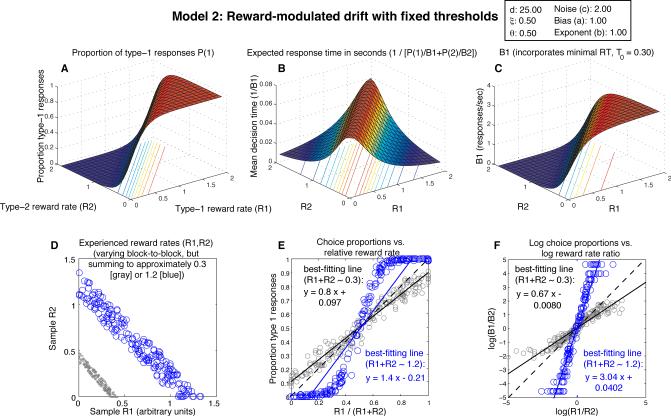

Figure 4.

Expected behavior of Model 2, the fixed-threshold diffusion model with drift adaptation (drift = d · (R1 – R2), with drift coefficient d = 25, and threshold θi = 0.5). Notice the implausibly high response rate at (R1,R2) = (0,0) in panels B and C, a point where no responding should be expected. Furthermore, while a reasonable approximation to strict matching is observed in Panel E for relative reward rates near 0.5 and summed reward rates near 0.3 as in Fig. 3 (gray scatterplots), keeping other parameter values the same while doubling reward rates in panel D (so that the rates obtained for responses 1 and 2 sum to approximately 0.6) produces overmatching (not shown); quadrupling rewards leads to extreme overmatching (blue scatterplots in D, E, and F). In contrast, Model 1's behavior approximates strict matching for all reward rate combinations.

Therefore, in order for Model 2 to achieve reasonable response times near a 1:1 behavioral allocation where drift A is near 0 (i.e., R1 ≈ R2), Eq. 4 may require thresholds to be small, or noise to be large, or both. If noise is large and thresholds are small, however, then the overall response rate is high (and IRT is small) for all combinations of reinforcement history (R1,R2). Also, substituting small thresholds or large noise into Eq. 3 produces a shallow sigmoid that can result in undermatching behavior5 in experiments with approximately a fixed level of total reward (corresponding, for example, to the scatterplot of reward rate pairs in panel D of Figs. 3-5). Thus, for Model 2, either overall response rate is uniformly very high regardless of reinforcement history, or dramatic slowdowns in response rate occur near a 1:1 behavioral allocation.

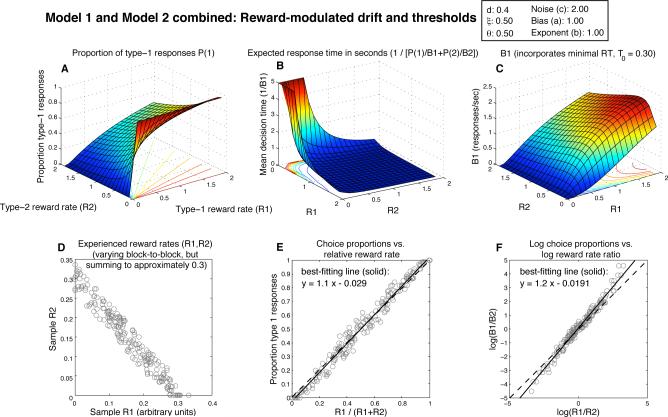

Figure 5.

Expected behavior of a combination of Model 1 and Model 2, the DDM with both threshold and drift adaptation (drift = d · (R1 – R2), θi = ξ/Ri, with d = 0.4, and θi = 0.5). Note the rotation once again of the plot in panel B relative to panel A and C, and its truncation to 5 sec. As with Model 1 alone, the absolute magnitude of obtained reward rates has little effect on the quality of the approximation to strict matching.

2.2.6. Model 1 and Model 2 combined

Combining threshold and drift adaptation can mitigate these IRT problems, just as combining them may be necessary in order to fit choice proportions. As shown in Fig. 5, overall response rate is low and IRT is high near (R1,R2) = (0,0) (panel B), which is consistent with the observation that animals cease responding in extinction (that is, when rewards are no longer given for responses). Also, B1 is greater than 0 when R2 = 0 and R1 > 0 (panel C). This too is closer to what is seen empirically, and to what is predicted by the matching law under the assumption of constant reward for extraneous behavior.

The surfaces in Fig. 5 B and C are somewhat deceptive, though: they are based on expected DT as predicted by Eq. 4, and this equation assumes a fixed threshold and drift. In order for these surfaces to provide useful descriptions of the system's behavior, the point (R1,R2) must change slowly enough that the average response rate over multiple responses converges to nearly its expected value. An argument based on iterated maps then shows that the system will in fact reach an equilibrium somewhere (with the particular equilibrium response rates depending on the reward schedule) so that these figures are still useful. The problem is that this equilibrium can easily occur at (R1,R2) = (0,0) as thresholds increase over multiple trials. Even worse, a reward rate estimate can collapse to nearly 0 (with the value of its corresponding threshold on the next trial exploding to infinity) within the course of a single, long response, and such long responses are bound to occur eventually, even if they are rare.

2.2.7. Threshold renormalization

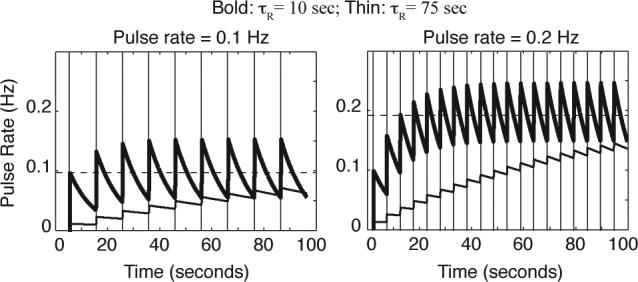

We propose a threshold renormalization process that solves both problems: it prevents single-trial threshold blowups, and, across multiple trials, it breaks the system out of the vicious circle in which lower reward rates lead to lower response rates, which lead in turn to still lower reward rates in VI and VR tasks. This approach uses an adaptive, drift-diffusion-based interval timer, defined by the following SDE, to bound response times:

| (16) |

This diffusion process begins at 0 after every response and has a positive drift that is proportional to the current reward rate being earned from all responses (R = R1 + R2), plus a positive constant ζ that ensures a minimum rate of responding. With a single fixed threshold K > 0, the first-passage-time distribution of this system is the Wald, or inverse Gaussian, distribution, with expected time K/(R+ζ) and variance Kc2/(R + ζ)3 (Luce, 1986).

Whenever an upper-limit IRT duration encoded by the timer has elapsed without a response, the timer triggers a rapid potentiation of both reward rate estimates: both estimates are multiplied by a quantity that grows rapidly on the time scale of an individual response time. This potentiation increases, and thresholds concomitantly decrease, until one response threshold hits the choice diffusion process at its current position. At this point, both the choice drift-diffusion process and the timer start over again and race each other to their respective thresholds. Thus, the choice diffusion process frequently triggers a response before the IRT-limit has elapsed on the next trial. This model component solves the threshold-instability problem, but it is modular and separable; it may be possible by some method currently unknown to us to renormalize thresholds without it.

2.2.8. Model performance in a dynamic VR task

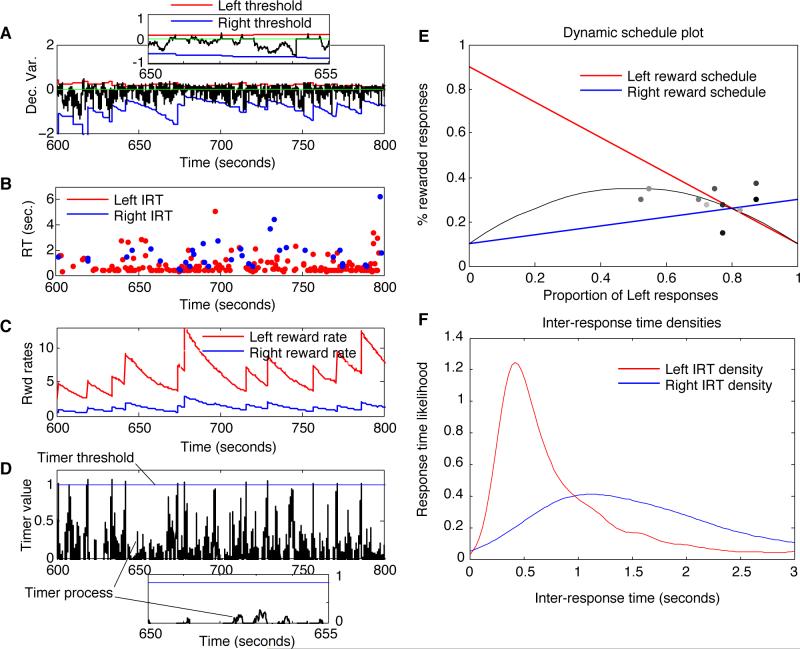

Fig. 6 shows the entire system at work in real time on a dynamic, concurrent VR-VR task. This task is prototypical of economic game tasks performed by humans (e.g. ‘the Harvard Game’, Herrnstein, 1997, and Egelman et al., 1998). It is a classic example of dynamic reinforcement contingencies that depend on a subject's previous response history (e.g., Herrnstein & Prelec, 1991). One parameterization of this task is depicted graphically in panel E. There, the horizontal axis represents the percentage of leftward responses made by a subject in the last n trials (in this case, the typical value of 40), in a task involving left and right button pressing (the percentage of rightward responses is 100 minus this value). The vertical axis represents the expected percentage of responses that are rewarded with a unit of reward (variants of this scheme involve basing either the magnitude of the reward, the delay to reward delivery, or the interval between rewards in a concurrent VI-VI task, on response history). The solid, descending straight line plots the reward percentage for leftward responses as a function of past response history; the ascending, dashed line plots the reward percentage for rightward responses. The curved dashed line represents the expected value obtained at a given allocation of behavior.

Figure 6.

Melioration by an adaptive-threshold, zero-drift diffusion model in a dynamic, VR-VR task (with threshold explosion controlled by a drift-diffusion timer). A) The diffusion process over the course of many responses. The trajectory of the decision variable is in black, causing Left responses whenever it intersects the upper threshold in red, and Right responses when it intersects the bottom threshold in blue. At each response, it resets to 0 and begins to drift and diffuse again. A 5-sec window is magnified in the inset. B) The plot of inter-response times (IRTs) in seconds. At points where the IRTs jump to a large value, the system is in danger of response-rate collapse (which is prevented by the expiration of a drift-diffusion response timer). C) The two reward rate estimates over time; large jumps indicated potentiation occurring because the response timer elapsed. D) The ‘decision’ variable of the DDM timer, in black, and a fixed threshold in blue. Whenever the timer hits threshold, a weight renormalization takes place. This occurs for three out of the six responses occurring in the magnified, twenty-second window shown in the inset. E) The reward schedules for Left responses (red) and Right responses (blue) as a function of response proportions in the preceding 40 trials (expected reward is in grey). Dots show choice proportion and reward proportion on the previous 40 trials, plotted once every 40 trials; light dots represent points near the beginning of the simulation, while dark dots represent points near the end. F) The IRT distribution for Left (red) and Right (blue) responses — Right IRTs were on average much longer than those for the more preferred Left responses.

In this figure, Model 1 was simulated to illustrate the effect of the adaptive interval timer (since Model 1 is the most susceptible to response-rate collapse) and to show how close to the system is able to come to strict matching (since only Model 1 produces exactly the right response proportions to achieve strict matching). Panel A shows the choice drift-diffusion process iterated repeatedly within boundaries defined by upper and lower thresholds, which are in turn defined by the two reward rate estimates in panel C. Individual response times generated by the model are plotted at the time of their occurrence in panel B, and the Gaussian kernel-smoothed empirical densities of response times for left and right responses are shown in panel F; these densities display the pronounced RT/IRT difference between more and less preferred responses that can develop for some parameterizations of the model. Panel E superimposes a scatterplot of a sequence of leftward response proportions (horizontal coordinate) and the corresponding reward earned (vertical coordinate) on consecutive blocks of 40 responses, in the task defined by the dynamic VR-VR schedules given by the straight lines; points occurring closer to the end of the simulation are darker. Thus the system quickly moves to the matching point (the intersection of the two schedule lines, which is the only possible equilibrium point for a strict meliorator), but then moves around it in a noisy fashion. This noisy behavior results from computing response proportions inside a short time window, making it difficult to distinguish Model 1 from Model 2 (Bogacz et al., 2007) and related models (e.g., Montague & Berns, 2002; Sakai & Fukai, 2008; Soltani & Wang, 2006) in this task.

2.3 Neural network implementation

The adaptive drift-diffusion model can be implemented by a simple, stochastic neural network. Here we build on key results from a proof of this correspondence in Bogacz et al. (2006). We use these results to show that drift adaptation is achieved by changing a set of weights linking stimulus-encoding units to response-preparation units. These latter units prepare responses by integrating sensory information and competing with other units preparing alternative responses. We refer to these weights as stimulus-response (SR) weights. We then show that threshold adaptation is achieved by adapting a second set of weights linking response-preparation units to response-trigger units — we refer to these as response-outcome (RO) weights (highlighting the fact that these weights are modulated in response to outcomes of behavior, regardless of the current stimulus).6

2.3.1 Neural network assumptions

The neural DDM implementation of Bogacz et al. (2006) and Usher and McClelland (2001) rests on a simple leaky integrator model of average activity in populations of neurons (cf. Gerstner, 1999; Shadlen & Newsome, 1998; Wong & Wang, 2006). A single quantity, Vi(t), stands for a time-averaged rate of action potential firing by all units in population i (action potentials themselves are not modeled). In the same manner as the reward rate estimator of Eq. 7 (but with a much faster time constant), this time-average is presumed to be computed by the synapses and membrane of receiving neurons acting as leaky integrators applied to input spikes. Reverberation within an interconnected population is then presumed to lead to an effective time constant for the entire population that is much larger than those of its constituent components (Robinson, 1989; Seung, Lee, Reis, & Tank, 2000).

Each unit in the network is defined by the following system of SDEs, which, aside from its stochastic component, is fairly standard in artificial neural network modeling (Hertz, Krogh, & Palmer, 1991):

| (17) |

| (18) |

Eq. 17 states that momentary input to unit i is computed as a weighted sum of momentary outputs (Vj) from other units. This output is corrupted by adding Gaussian white noise (dWij/dt),7 representing the noise in synaptic transmission between units. This internally generated noise may be large or small relative to the environmental noise that is received from the sensory periphery; for our purposes, all that matters is that there are uncorrelated sources of white noise in the system. For simplicity, we weight the noise by a constant coefficient c, rather than potentiating it by the connection strength wij. Weight-dependent potentiation seems at least as plausible as a constant coefficient and would cause the noise amplitude in a receiving unit to depend on the firing rates of units projecting to it — we do not yet know how such state-dependent noise would affect our results.

This converging, noisy input is then low-pass filtered by the unit to reduce the noise — that is, the unit's leaky integration behavior causes it to attenuate, or filter out, high frequencies (Oppenheim & Willsky, 1996). As in Eq. 7, the unit in Eq. 17 computes the continuous-time equivalent of an exponentially weighted average, with smaller τi producing steeper time-discounting; dividing through by τi shows that smaller τi also produces less attenuation of noise. Thus xi(t) represents a time-average of its net input that trades off noise attenuation against the ability to pass high-frequency input signals.

This time-averaged value is then squashed by a logistic sigmoid function (Eq. 18), to capture the notion that firing rates in neurons are bounded below by 0 and above by some maximum firing rate — we will exploit this gradual saturation effect in constructing response triggers. Finally, amplification or attenuation of the outputs of a unit are then implemented by the interconnection strengths wij.

In what follows, we will further assume that some units operate mainly in the approximately linear range of the logistic centered around its inflection point (cf. Cohen, Dunbar, & McClelland, 1990); in those cases, a linearized approximation to the above equations is appropriate. When linearized, Eqs. 17-18 can be combined into a single, linear, SDE commonly known as an Ornstein-Uhlenbeck (OU) process:

| (19) |

(Note that without the negative feedback term – yi, Eq. 19 is a drift-diffusion process.) Here, Ii represents the net input to unit i, and dWi refers to a Wiener process that is the net result of summed noise in the inputs (as long as the noise in these inputs is uncorrelated, this summing produces another Wiener process, but with larger variance). Units of this type were used in the DDM-implementation of Bogacz et al. (2006), but for our purposes, saturating nonlinearities in some units will be critical features of the model.

2.3.2 Implementation of the drift-diffusion model

Stochastic neural network models have been related to random walk and sequential sampling models of decision making (including the DDM) by a number of researchers (e.g., Bogacz et al., 2006; Gold & Shadlen, 2002; Roe, Buse-meyer, & Townsend, 2001; Smith & Ratcliff, 2004; Usher & McClelland, 2001; Wang, 2002).

For a standard two-alternative decision making task — in which each trial has one correct and one error response — one unit acts as an integrator of evidence in favor of one hypothesis about the correct response, and the other as an integrator for the other hypothesis. Mutual inhibition creates competition between the integrators, such that increased activation in one retards the growth or leads to a decrease of activation in the other (thereby forming a ‘neuron-antineuron’ pair). By using activations to represent ‘preference’ rather than ‘evidence’, however, the same model can be applied to operant conditioning tasks.

A network of this type can be defined by a system of two SDEs describing the activity of the two integrators over time. The state of the system can then be plotted as a point in a two-dimensional space called the phase-plane. These SDEs in turn can be reduced to a single SDE (Grossberg, 1988; Seung, 2003) which, when properly parameterized, or balanced (i.e., wlateral in Fig. 7 equals 1), is identical to the DDM (Bogacz et al., 2006). This single SDE describes how the difference in activation between the two accumulators, xd, changes over time. If the input to the first accumulator is I1, and the input to the second is I2, then the SDE is:

| (20) |

This equation describes how the state of the system moves along a line through the phase-plane that Bogacz et al. (2006) refer to as the decision line. The system rapidly approaches this line from the origin, and then drifts and diffuses along it until reaching a point at which one unit is sufficiently active to trigger its corresponding response.8

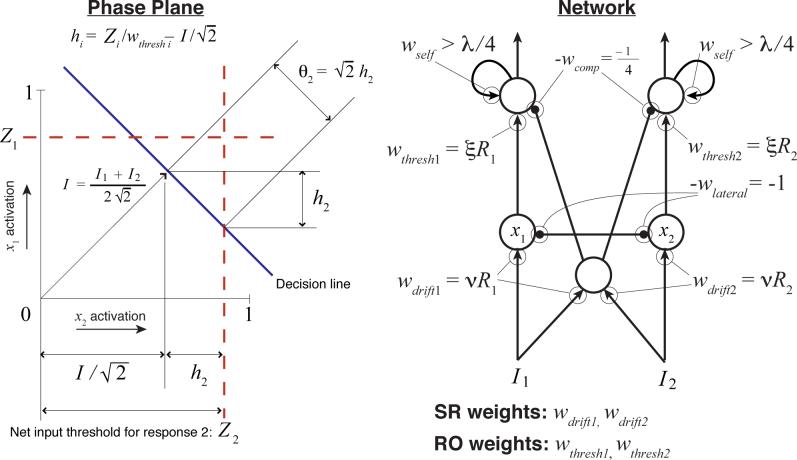

Figure 7.

Threshold modulation for the drift-diffusion model. On the left is a phase-plane for integrator activation. Assuming that the network is balanced and that thep integrators are leaky enough, then reductions of the trigger-unit thresholds (Z1 and Z2) by size Δ are equivalent to reductions of size √2Δ along the decision line. To ensure that thresholds are not reduced below 0 on the decision line (the point where the ray from the origin intersects the decision line), a compensating term must be added to the triggering thresholds. Note that if integrator activity is bounded above by 1, then unlike the DDM itself, an absolute threshold value Zi > 1 implies a total inability to produce response i. The network on the right depicts units governed by Eqs. 17-18 as circles; positive interconnection weights wij are depicted as arrowheads, and negative weights as small, solid circles; labels next to the arrowheads/circles identify the value of each weight. Eq. 19 is a suitable approximation for the leaky integrator units at the bottom of the network (they are assumed to remain in the linear range of their activation functions), but bistability (and therefore nonlinearity) are essential aspects of the threshold-readout units at the top. White noise is added to the output of any unit after weighting by a connection strength. The network depiction shows the weights that are modulated by reward, along with the diffuse inhibition necessary to add the compensating factor to the thresholds; for simplicity, it does not show units that would be necessary to carry out threshold renormalization by occasionally multiplying both RO weights by a large constant.

Bogacz et al. (2006) also showed (effectively) that a small time constant τd in a balanced model leads to tight clustering of the two-dimensional process around the attracting decision line. When tight clustering occurs, we can ignore fluctuations away from the decision line and think of the system as simply drifting and diffusing along it. A simple geometric argument (see Fig. 7) then shows that in the case of small τ, absolute thresholds applied to individual integrator outputs translate into thresholds qi for the drift-diffusion process implemented by xd as follows:

| (21) |

Here, Zi is an absolute firing rate threshold applied to unit i (we will address a possible physical mechanism for threshold ‘readout’ below). Thus, any change to Zi leads to corresponding changes in θi. This fact provides the basis for implementing the threshold adaptation procedure of Model 1, discussed below.

Fig. 8A shows an example of the evolution of a balanced system over time. After stimulus onset in a decision making task — or after reset to the origin following the previous response in a VR or VI task without a ‘Go’ signal — the system state (y1,y2) approaches the attracting decision line. Slower, diffusive behavior occurs along this line, and as long as the thresholds are stationary, the process will ultimately cross one of them with probability 1. Projection of the state (y1,y2) onto the decision line yields the net accumulated evidence x(t), which approximates the DDM as shown in Fig. 8C.

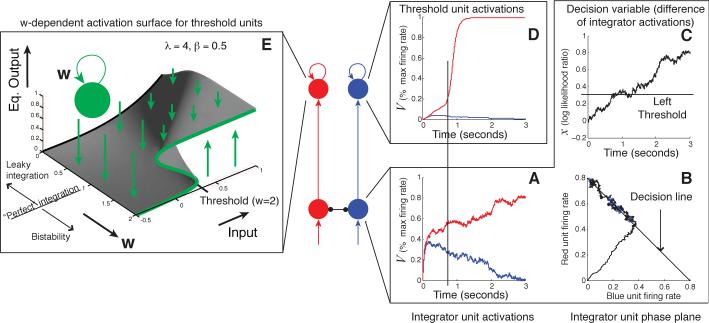

Figure 8.

A two-stage neural network implementing a decision process. The first layer (bottom red and blue units) implements the preference-weighing diffusion process: the two units’ activations are plotted in the box A; these activations are then shown in the phase plane in box B, which depicts the predicted attracting line for the two-dimensional process; box C shows the difference between these activations over time, forming a one-dimensional random walk. The second, bistable layer of units implements response triggers that apply thresholds to this accumulated preference. Self-excitation w creates bistability by transforming the sigmoid activation function from the black curve at the left of the activation surface plot on the left into the green function on the right. The resulting activity is approximately digital, with rates between 0 and 1 occurring only transiently at the time of a threshold crossing.

2.3.3 Drift adjustment by SR weights

As in the stochastic neural network models already mentioned, as well as in (Grossberg, 1971, 1982; Grossberg & Gutowski, 1987), reward-modulated stimulus-response mappings are presumed to be encoded by the input weights labelled ‘SR’ in Fig. 7. For typical VI and VR experimental designs, we have assumed that there is only a single stimulus that is continuously present. Thus, for simplicity, we set the pre-weighted input to each integrator unit to 1 throughout the course of a simulated experiment (inputs can be toggled between 1 and 0 to model cued-response experiments). After each response, we set each SR weight proportional to the current estimate of reward rate for the corresponding response. Inputs to each unit thus equal nRi(tL), where tL is the time of the previous response, so that drift is given by the following:

| (22) |

Again, we have assumed adaptation of Ri(t) in continuous time by Eq. 7, but discrete updating of the weight based on the current Ri value at the time of each response, as in Eq. 6. This approach ensures stationary drift rates during a single response, which is necessary in order for Eqs. 3-4 specifying expected choice proportions and response times to be exact. Nevertheless, these results are still approximately correct even if we continuously adapt the weights, as long as reward rate estimates do not change too rapidly during the preparation of individual responses. In this respect, weights as well as units have the exponential decay property of a capacitor or leaky integrator, which is a feature of a number of synaptic plasticity models (Hertz et al., 1991).

2.3.4 Threshold adjustment by RO weights

A threshold can be implemented by a low-pass filter unit with a sigmoidal activation function if the unit's output is fed back into itself through a sufficiently strong recurrent connection wii (i.e., wii > λ/4 in Eq. 18). As in the model of Wilson and Cowan (1972), such units develop bistability and hysteresis as self-excitation is increased (see Fig. 8E). In contrast, linear units become unstable and blow up to infinity when self-excitation is strong enough; a squashing function, however, traps such an explosive process against a ceiling.

Because of this behavior, strongly self-exciting units can function as threshold-crossing detectors and response-triggers: below a critical level of input, their output is near 0 (i.e., the value of approximately 0.3 labeled ‘Threshold’ on the Input axis in Fig. 8E); above the critical input level, output jumps like an action potential, to nearly the highest possible value. Once activated, a threshold unit then displays hysteresis, remaining at a high output level for some period of time even if inputs decrease.

Response-trigger units therefore implement energy barriers that accumulated evidence or preference must surmount in order to generate a punctate response. This is ideal behavior for a circuit element that makes an all-or-none decision about whether to initiate a sequence of muscle contractions. The activation of such a response-trigger varies continuously, as we should expect from any plausible model of a physical mechanism. Nevertheless, at all times other than when input signals have recently exceeded threshold, response-trigger outputs are far from the levels needed to contract muscles. Furthermore, this hysteresis property can be used to control the emission of ‘packets’ of multiple, rapid responses, rather than the individual responses on which we have so far focused attention: as long as a trigger's output is high, a fast oscillator can be toggled on by the response-trigger output to produce high-rate responding. Such packets of high-rate responding are often observed in conditioning experiments (e.g., Church, Meck, & Gibbon, 1994).

These properties are important for a physical implementation of thresholds. For ease of analysis, though, thresholds can still be modeled with sufficient accuracy to predict behavior as simple step functions. The critical level of input necessary to cause a response-trigger unit to transition between the low- and high-activation states (about 0.3 in Fig. 8E) can be considered to be a simple threshold applied to integrator outputs. This fact makes threshold adaptation easy to understand: from the perspective of an integrator unit (one of the bottom units in the network diagrams of Fig. 7 and Fig. 8), this threshold Z is reduced by m if an amount m of additional excitation is supplied to the threshold unit on top of the excitation provided by the integrator. Similarly, if the excitatory weight connecting an integrator to a threshold unit with threshold Z is multiplied by γ, then the effective threshold for the integrator becomes Z/γ.

Therefore, by multiplying the RO weights by an estimate of reward rate for the corresponding response, Ri, our threshold adaptation algorithm can be directly implemented. The only remaining issue stems from the fact that the effective DDM threshold θi is an affine function of the absolute firing rate threshold Z (Eq. 21). In order to make θi inversely proportional to Ri, we must cancel the additive term by adding (I1 +I2)/4. We can do this simply by inhibiting the response-trigger units by exactly this amount. This need to cancel motivates our use of a collector of diffuse, excitatory input to provide pooled inhibition to both response triggers (see the middle unit in the network of Fig. 7). Bogacz and Gurney (2007) similarly used diffuse, pooled inhibition to generalize a neural implementation of the DDM to an asymptotically optimal statistical test for more than two decision making alternatives; Frank (2006) used pooled inhibition to implement inhibitory control; and Wang (2002) used it simply to implement a biologically realistic form of lateral inhibition between strictly excitatory units.

3. Discussion

We have analyzed an implementation of melioration by an adaptive drift-diffusion model. Adaptation is achieved by estimating the rate of reward earned for a response through a process of leaky integration of reward impulses; reward rate estimates then weight the input signals to a choice process implemented by a competitive neural network with a bistable output layer. Weighting signals by reward-rate amounts to adapting the threshold (Model 1) and drift (Model 2) of the DDM, which is implemented by the neural choice network when the network's lateral, inhibitory weights balance the ‘leakiness’ of the leaky integration in its input layer (Bogacz et al., 2006). Diffusive noise in processing then leads to random behavior that can serve the purpose of exploration.

Our attempt to blend operant conditioning theory and cognitive reaction time theory has historical antecedents in the work of researchers in the behaviorist tradition (Davison & Tustin, 1978; Davison & McCarthy, 1988; Nevin, Jenkins, Whittaker, & Yarensky, 1982) who have linked operant conditioning principles with signal detection theory (SDT; Green & Swets, 1966). Indeed, SDT is ripe for such an interpretation, given its reliance on incentive structures to investigate humans’ low-level signal processing capabilities. Our work is part of a natural extension of that approach into decision making that takes place over time, producing RT/IRT data which is not normally considered in SDT.

In addition to serving as a bridge between melioration and a possible neural implementation, the adaptive DDM makes quantitative predictions about inter-response times as well as choice probabilities in operant conditioning experiments with animal subjects, and economic game experiments with human subjects. Response times and inter-response times are a valuable dependent variable in such tasks that can help to elucidate the mechanisms underlying choice. Indeed, although choice-RT and IRT data in concurrent tasks seem to have received less attention than response proportions in the animal behavior literature, RT and IRT data have occasionally been used to distinguish between alternative models of operant conditioning (e.g., Blough, 2004; Davison, 2004). Data from both of these articles included long-tailed RT/IRT distributions that appear log-normal or ex-Gaussian — approximately the shape predicted by the DDM. Blough (2004) specifically fit RT distributions with a DDM and found better evidence for adaptation of drift as a function of reward rate rather than of threshold. In addition, monkey RT data and neural firing rate data from the motion discrimination, reaction-time experiment of Roitman and Shadlen (2002) have been taken to support both the DDM and neural integrators of the type we have discussed as models of decision making (cf. Gold & Shadlen, 2001).

In a replication of the human economic game experiment of Egelman et al. (1998) and Montague and Berns (2002), Bogacz et al. (2007) found clear evidence of an RT effect as a function of an enforced delay between opportunities to respond. As the delay interval grew, mean RT also grew. For delays of 0 msec, 750 msec and 2000 msec, average inter-choice times (including the enforced delay) were 766 msec, 1761 msec and 3240 msec respectively. This corresponds to average RTs of 766 msec, 1011 msec, and 1240 msec respectively. This is a profound effect on RT by an independent variable — enforced inter-trial delay — that had no obvious effect on relative reward rates or observed choice probabilities, and is therefore completely outside the scope of models of behavioral reallocation such as melioration. Bogacz et al. (2007) gave a compelling account of choice probabilities in this task in terms of a drift-adaptive DDM with reward rates updated only after each response,9 but fits of that model did not take RT data into account and produced parameters for which RTs must be greater than those observed. When we examined the performance of a threshold-adaptive, zero-drift diffusion model with similarly discrete reward-rate updates in this task,10 it achieved fast enough RTs but exhibited a tendency toward exclusive preference for one or the other response that was not observed in the data. Thus, it seems likely that combining threshold and drift adaptation and/or including an adaptive timing mechanism would give a significantly better fit to choice and RT data taken together. In addition, the delay-dependent RT results of Bogacz et al. (2007) appear to call for a version of the adaptive DDM in which internal estimates of reward rate decay during the delay period (as in Eq. 7) and concomitantly, drift decreases and/or thresholds increase.

Both the adaptive-drift and adaptive-threshold versions of the DDM predict that RTs and IRTs must be longer for the less preferred choice in a two-choice task, and this qualitative relationship is seen in both animal and human behavioral data (Blough, 2004; Busemeyer & Townsend, 1993; Petrusic & Jamieson, 1978). We take these and other behavioral results as strong evidence that adaptive random walk models may provide an account of dynamical choice behavior on a trial-by-trial level that is furthermore explicit in the following sense: as an SDE, it specifies the state of the choice process from moment to moment.

This explicit character of diffusion-implemented melioration lends itself naturally to theorizing about the physical mechanisms underlying choice. In addition to efforts in theoretical behaviorism, much recent empirical and theoretical work in neuroeconomics has been devoted to understanding these mechanisms. In particular, a variety of explicitly neural models of choice in response to changing reward contingencies have been proposed (e.g., Grossberg & Gutowski, 1987; Montague & Berns, 2002; Soltani & Wang, 2006). Most share a common structure: input weights leading into a competitive network are modified in response to reward inputs, leading to choice probabilities that are a logistic/softmax or otherwise sigmoidal function of the difference between input weight strengths (and thus of the difference between expected reward values). A key distinction of the neurally implemented threshold-adaptive DDM (Model 1) is that it includes reward-modulated weights at a later, output stage of processing in the network, and can thereby formally achieve exact matching. In its location of reward-modulated synaptic weights, this model is structurally similar to the more abstract neural model in Loewenstein and Seung (2006), whose synaptic strengths are modified by a more general (but probably more slowly changing) process that acts to reduce the covariance of reward and response, and which can also achieve exact matching in tasks not involving continuous time (VI tasks, for example, do not appear to be within the scope of this covariance-reduction rule).

When choice proportions in empirical data do not clearly distinguish between input-stage and output-stage models, the best way to distinguish them (or to identify the relative contributions of input and output weights) may be to fit both choice and RT/IRT data simultaneously.

3.1. Mapping on to neuroanatomy

The model family that we have presented provides a generic template for reward-modulated decision making circuits in the brain. By positing that connection strengths are equivalent to reward-rate estimators, the model represents a theoretical view of synapses at the sensory-motor interface as leaky integrators of reward impulses. An alternative view is one in which population firing rates, rather than synaptic strengths, represent these integrated impulses (e.g., firing rates directly observed in monkey anterior cingulate cortex, Seo & Lee, 2007, and perhaps indirectly in a host of human brain imaging experiments, Rushworth, Walton, Kennerley, & Bannerman, 2004). Under the assumptions of Eq. 17, this alternative view seems more consistent with the affine threshold transformation of Simen et al. (2006) than the multiplicative weight adaptation of Models 1 and 2; however, if firing rate representations can act multiplicatively rather than additively on decision-making circuits, then a synaptic weight and a population firing-rate become functionally equivalent.

In any case, diffuse transmission of reward impulses is central to both approaches. The prominent role of the basal ganglia in reward processing and action initiation therefore suggests that reward-modulated sensory-motor connections may map onto routes through these subcortical structures. Bogacz and Gurney (2007) and Frank (2006) hypothesize that the subthalamic nucleus in the basal ganglia controls responding by supplying diffuse inhibition to all response units, and Lo and Wang (2006) also attribute control functionality to the basal ganglia in a model of saccade thresholds. Given that both the excitatory and inhibitory paths in Fig. 7 must be potentiated by reward, we speculate that the excitatory and inhibitory pathways in our model may map onto cortex and the basal ganglia as follows: SR weights correspond to cortico-cortical connections (consistent with reward-modulated firing rates observed in monkey lateral intraparietal cortex, e.g., Platt & Glimcher, 1999); RO weights correspond to direct-pathway cortico-striatal connections; and compensatory weights correspond to excitation of the indirect pathway through the basal ganglia (Alexander, De-Long, & Strick, 1986). The mapping we propose assumes that the direct pathway through the basal ganglia is parallel and segregated, but that the indirect pathway is not. Alternatively, the subthalamic nucleus may play a diffuse inhibition role here that is analagous to that proposed in Bogacz and Gurney (2007) or Frank (2006).

The multi-stage architecture of this brain circuitry lends itself to multiple-layer models. However, splitting into multiple layers and using integrator-to-threshold weights are also functionally necessary in our model, because threshold behavior (bistability and hysteresis) cannot be obtained from the drift-diffusion process itself — and without nonlinear energy barriers, any buildup of activation in decision units would produce proportional, premature movement in response actuators such as eye or finger muscles. In contrast, other neural models lump both integration and threshold functionality into a single layer of bistable units. For each response, these models implement a nonlinear stochastic process with an initially small drift, followed by a rapid increase in drift after reaching a critical activation level (e.g., Soltani & Wang, 2006; Wong & Wang, 2006). Analytical RT predictions for such models are not currently known, and it may be possible to approximate such systems with idealized two-layer models for which analytically tractable RT predictions exist. However, we were originally motivated to split the system into two layers — rather than to lump the integration and threshold functions into one layer — by the results of Bogacz et al. (2006), who argued for starting-point/threshold modulations without drift modulation in certain decision making tasks in order to maximize reward. See other arguments in favor of splitting over lumping in Schall (2004). Nevertheless, lumping layers together clearly cannot be ruled out, and a single-layer model seems more economical in resources and more parsimonious in parameters than a two-layer model.

In general, the additional layer in our model might earn its keep in at least two ways: