Abstract

This preliminary study examined the effects of hearing loss and aging on the detection of AV asynchrony in hearing-impaired listeners with cochlear implants. Additionally, the relationship between AV asynchrony detection skills and speech perception was assessed. Individuals with normal hearing and cochlear implant recipients were asked to make judgments about the synchrony of AV speech. The cochlear implant recipients also completed three speech perception tests: the CUNY sentence test, the HINT sentences, and the CNC word test. No significant differences were observed in the detection of AV asynchronous speech between the normal-hearing listeners and the cochlear implant recipients. Older adults in both groups displayed wider timing windows over which they identified AV asynchronous speech as being synchronous than did younger adults. For the cochlear implant recipients, no relationship between the size of the temporal asynchrony window and speech perception performance was observed. The findings from this preliminary experiment suggest that aging has a greater effect on the detection of AV asynchronous speech than the use of a cochlear implant. Additionally, the temporal width of the AV asynchrony function was not correlated with speech perception skills for hearing-impaired individuals who use cochlear implants.

Keywords: Cochlear Implants, Audiovisual Asynchrony, Speech Perception

The assessment of speech perception skills in cochlear implant recipients commonly involves a battery of behavioral measures that examine how individuals perceive stimuli through one modality, namely, the information acquired through listening alone. However, in order to fully understand speech perception processes, it is also appropriate to evaluate the impact that visual cues have on word and sentence recognition abilities. In the normal-hearing population, numerous studies have shown that both visual and auditory information play an important role in speech perception. For example, Sumby and Pollack (1954) demonstrated the importance of visual information for speech understanding in the presence of background noise. This study demonstrated that in extremely noisy listening conditions (i.e., −30 dB signal-to-noise ratio), when visual cues are heavily relied on, the difference in intelligibility scores between the auditory-alone condition and the audiovisual condition can range from 40% to 80% for words and sentences, respectively. Conversely, in quiet conditions no differences in performance were noted between the auditory-alone and audiovisual testing conditions. The benefits of visual cues for the identification of sentences in noise were also demonstrated by Middelweerd and Plomp (1987). These studies have addressed the impact that auditory and visual cues can have on speech understanding, both in quiet and in particularly noisy listening conditions. For individuals who use a cochlear implant, and therefore have limited access to auditory information, the use of visual cues can potentially aid with the recognition of speech, especially in noisy or reverberant environments. Understanding the cooperative interaction of auditory and visual cues under adverse listening conditions would be beneficial for the clinical management of individuals who use cochlear implants. Specifically, the results from this study may lead to improved aural rehabilitation programs that focus on the optimization and integration of visual and audio cues.

Research on how individuals who have been profoundly deaf and subsequently receive a cochlear implant integrate auditory and visual cues is ongoing. Specifically, neural-imaging and electrophysiology work has suggested that adults who use cochlear implants may integrate and/or process auditory and visual speech differently than normal-hearing adults. Neuro-imaging data also have shown that the visual cortex of cochlear implant recipients is more active than that of normal-hearing controls when listening to meaningful speech sounds, and further, that this activation increases with implant experience (Giraud et al., 2001a, Giraud et al., 2001b). Additionally, electrophysiological data have suggested that visual cortex activation varies depending on how well cochlear implant recipients perform on speech perception tasks (Doucet et al., 2006). Larger peak amplitudes of the visual evoked potential were recorded for good performers in comparison to poorer performers. Information on how cochlear implant recipients process auditory, visual and audiovisual speech would be beneficial in the continual effort to improve aural rehabilitation programs for these individuals.

Within the normal-hearing population, the examination of multimodal speech has revealed that speech understanding can occur with degraded asynchronous audiovisual (AV) speech (McGrath and Summerfield, 1985, Grant and Greenberg, 2001, Pandey et al., 1986). Specifically, Grant and Greenberg (2001) demonstrated that normal-hearing individuals could successfully recognize degraded audiovisual asynchronous IEEE sentences. The timing of the audio and visual components of the sentences was offset to produce audiovisual sentences that differed in degrees of asynchrony. They found that when the auditory signal led the visual signal by up to approximately 40 ms or the visual signal led the auditory signal by up to 160 to 200 ms, the stimuli could be successfully recognized. McGrath and Summerfield (1985) also demonstrated that, when an F0-modulated pulse train served as the audio component of an audiovisual signal, the perception of AV asynchronous speech was not affected when the audio and visual delays were less than 160 ms. Similar findings were reported by Pandey, Kunov, and Abel (1986), who demonstrated that in the presence of background noise, AV asynchronous sentences could be successfully perceived for asynchrony levels up to approximately 120 ms. More recently, Conrey and Pisoni (2006) demonstrated that young adults could identify isolated AV asynchronous words as being completely synchronous over a temporal window of approximately 150 ms.

However, very little research has been conducted on how individuals with hearing impairment integrate auditory and visual signals. A hearing loss would imply that not all of the auditory frequency bandwidths from an audiovisual speech signal are adequately perceived, thereby potentially preventing the complete integration of auditory and visual stimuli. Grant and Seitz (1998) studied a group of adults with mild sloping to severe hearing losses to determine the importance of synchronous AV speech stimuli for speech understanding. The findings showed that speech recognition for audiovisual sentences was not affected until the audio delay exceeded 200 ms. As suggested by the recent neural-imaging and electrophysiology work, visual input might be processed differently in individuals with severe-to-profound hearing losses, and therefore, the severity of the hearing loss could have an impact on the ability to perceive asynchronous speech. Presumably, individuals with profound hearing losses have had to rely on visual cues to a greater extent than individuals with hearing losses that range from mild to severe.

Grant and Seitz (1998) also reported that the correlation between measures of AV integration for consonants and measures of AV integration for sentences was not significant, contrary to the authors' assumption that AV integration is a measurable skill that individuals use whenever they have access to A and V cues. It was suggested that the two measures require different processing skills, which could have led to the non-significant findings. Specifically, if individuals were not able to process initial segments of the asynchronous AV connected speech, it would be difficult to correctly process later segments. The ability to quickly encode and process the asynchronous speech, therefore, would impact the overall results. Intimately tied to the processing speed of asynchronous AV speech is the ability to store and then later retrieve critical speech cues from working memory. The greater the number of segments presented, as in connected AV asynchronous speech, the more challenging it would be to store and later retrieve presented speech. Both working memory and the ability to process speech information quickly or to process rapidly presented speech are skills that have been shown to decline with increasing age (Salthouse and Babcock, 1991, Gordon-Salant and Fitzgibbons, 2004). Although the age range of hearing-impaired adults examined in the Grant and Seitz (1998) study was 41 to 76 years old (mean 66 years old), the goal of the study was not to determine the impact that increasing age had on audiovisual speech perception. To further understand how the integration of AV speech occurs, therefore, study of this phenomenon with an elderly population of listeners is necessary.

Although AV asynchrony perception has not been specifically examined in the elderly population, the perception of auditory and visual information has been assessed using several behavioral measures. Cienkowski and Carney (2002) measured the McGurk effect in younger and older adults to assess the effects of aging on the ability to integrate auditory and visual information. They found that the percentages of fused responses to an auditory /bi/ paired with a visual /gi/ and to an auditory /pi/ paired with a visual /ki/ were not significantly different in younger and older age groups, suggesting that older adults are just as successful at integrating auditory and visual signals as younger adults. However, because individual differences in auditory and visual performance were not reported, it is unclear whether the younger and older study participants were integrating the auditory and visual cues in similar manners. That is, despite the similar performance in fusion rates for the younger and older adults, the separate contributions that auditory and visual cues provided to the overall performance for the two subgroups of adults were not evaluated.

In a more recent study, Sommers, Tye-Murray, and Spehar (2005) examined the individual contributions that auditory and visual information provide for the integration of AV stimuli in elderly and middle-aged normal-hearing adults. Participants were asked to repeat AV consonants, isolated words and sentences under several different signal-to-noise ratios that would produce similar auditory performance between the two age groups. The older normal-hearing participants demonstrated significantly poorer speechreading skills overall than the younger adults, suggesting that aging may be associated with declines in mechanisms that are responsible for the successful encoding of visual information, independent of hearing status. Analyses revealed that the age differences that were obtained in audiovisual performance reflected age-related differences in speechreading abilities rather than in the ability to integrate or combine auditory and visual stimuli. Poor speechreading skills in an elderly group of adults who used cochlear implants were also observed by Hay-McCutcheon et al. (2005).

In the Sommers, Tye-Murray, and Spehar (2005) study, however, the integration of AV material was assessed using speech perception tests rather than through the examination of the integration of speech that occurs when the auditory and visual components of a signal have been altered (i.e., through timing differences of the auditory and visual components or simultaneous presentation of different audio and visual cues). If age-related differences in audiovisual perception can be accounted for by age-related differences in speechreading abilities, then this same effect should be observed when assessing the integration of AV asynchronous speech. In order to more fully understand the integration of AV speech, therefore, it will be necessary to examine not only the impact that aging might have on the ability to integrate AV speech stimuli but also the effects of cochlear implant use on this ability.

The two main objectives of the present study were to examine AV asynchrony detection in individuals who use cochlear implants and to assess the impact that aging has on these skills. Additionally, a secondary goal was to examine the association between AV asynchrony detection and performance on speech perception tasks within the group of cochlear implant recipients. Groups of middle-aged and elderly normal-hearing adults and cochlear implant recipients were used in this study. The findings reported here are preliminary in nature due to the limited number of study participants.

METHODS

Study Participants

Both normal-hearing listeners and cochlear implant recipients participated in this study. Two different groups of English-speaking cochlear implant recipients were recruited: 13 elderly adults ranging in age from 66 to 81 years old (mean 73 years old), and 12 middle-aged adults ranging in age from 41 to 54 years old (mean 47 years old). These individuals received either a Cochlear Corporation, an Advanced Bionics, or a Med-El cochlear implant between the years of 1995 and 2004 at the Indiana University School of Medicine, Department of Otolaryngology-Head and Neck Surgery. One elderly participant had bilateral implants; the first device was implanted in 1996 and the second in 2004. The study participant demographics are provided in Table 1. All of the adults were native English speakers and none of them reported a history of stroke or head injury. The normal-hearing participants consisted of 12 middle-aged adults ranging in age from 41 to 55 years old (mean 48 years old), and 10 elderly adults ranging in age from 65 to 79 years old (mean 70 years old). All of the normal-hearing participants were recruited locally through posted advertisements and word of mouth. All of the normal-hearing study participants reported that English was their first language, that they did not have prior speechreading training, and that they had no history of stroke or head injury.

Table 1.

Study participant demographics.

| Middle-Aged Subjects | Age at Test | Age Onset of HL | Duration HL | Etiology | Age Implant | Length of CI Use | CI | Strategy |
|---|---|---|---|---|---|---|---|---|
| abf1 | 42 | 4 | 37 | Unknown, progressive | 38 | 4.0 | N24 CI24R (CS) | ACE |
| abi1 | 45 | 28 | 17 | Unknown | 44 | 1.5 | MECombi40+H | CIS |
| abk1 | 41 | 39 | 2 | Autoimmune | 31 | 9.7 | CL Multi | CIS |
| abn1 | 49 | 18 | 31 | Meniere's | 45 | 3.5 | MECombi40+H | CIS |
| abs1 | 54 | 4 | 50 | Unknown, progressive | 53 | 1.4 | CLHiRes90K | HiRes |
| abv1 | 45 | 3 | 42 | Unknown, progressive | 39 | 6.2 | N24 CI24M | ACE |
| abw1 | 42 | 19 | 23 | Ototoxicity | 40 | 2.4 | MECombi40+H | CIS |
| aby1 | 47 | 19 | 28 | Unknown, progressive | 45 | 2.7 | MECombi40+H | CIS |
| acb1 | 53 | 13 | 40 | Unknown, progressive | 50 | 2.8 | N24 CI24R (CS) | ACE |
| acr1 | 48 | 14 | 34 | Unknown, progressive | 39 | 9 | CL Multi | CIS |
| adh1 | 55 | 44 | 11 | Cholesteatoma, progressive | 51 | 4.0 | N24 CI24R (CS) | ACE |
| adi1 | 45 | 2 | 43 | Unknown, progressive | 36 | 8.1 | CL Multi | CIS |
| MEAN (SD) | 47.2 (4.8) | 17.3 (14.0) | 29.8 (14.3) | | 42.6 (6.6) | 4.6 (2.9) | | |
| Elderly Subjects | | | | | | | | |
| abg1 | 72 | 39 | 33 | Hereditary, progressive | 70 | 1.7 | N24 CI24R (CS) | ACE |
| abh1 | 78 | 56 | 22 | Meniere's | 75 | 3.2 | N24 CI24R (CS) | ACE |
| abj1 | 71 | 45 | 26 | Hereditary, progressive | 68 | 3.1 | CL Hi Focus CII | MPS |
| abm1 | 79 | 29 | 50 | Meniere's | 79 | 0.6 | MECombi40+H | CIS |
| abo1 | 66 | 24 | 42 | Unknown, progressive | 65 | 1.0 | MECombi40+H | CIS |
| abp1 | 71 | 11 | 60 | Scarlet fever, progressive | 68 | 2.4 | MECombi40+HS | CIS |
| abr1 | 72 | 52 | 20 | Unknown, progressive | 69 | 3.4 | MECombi40+H | CIS |
| abq1 | 75 | 25 | 50 | Unknown, progressive | 67 | 8.3 | MECombi40 | CIS |
| abz1 | 75 | 30 | 45 | Unknown, progressive | 71 | 4.5 | N24 CI24R (CS) | ACE |
| acc1 | 68 | 63 | 5 | Unknown, progressive | 67 | 1.4 | MECombi40+H | CIS |
| acd1 | 69 | 49 | 20 | Meniere's | 68 | 1.0 | N24 CI24R (CS) | ACE |
| acf1 | 81 | 69 | 12 | Unknown, progressive | 77 | 3.4 | MECombi40+H | CIS |
| adc1-R / adc1-L | 76 | 35 | 41 | Unknown, progressive | 67 / 75 | 9.5 / 0.6 | N22 CI22M (RE) / N24 CI24R (CS) | SPEAK / SPEAK |
| MEAN (SD) | 73.1 (4.6) | 41 (17.6) | 32.1 (17.2) | | 70.1 (4.3) | 3.3 (2.7) | | |

Ages and durations are provided in years. HL = hearing loss; CI = cochlear implant; N24 CI24R (CS) = Cochlear Corporation Nucleus 24 Contour implant; MECombi40+H = Med-El Combi 40+ standard cochlear implant; CL Multi = Advanced Bionics Corporation Clarion Multi-Strategy cochlear implant; CLHiRes90K = Advanced Bionics Corporation Clarion HiResolution 90K; N24 CI24M = Cochlear Corporation Nucleus 24 cochlear implant; CL Hi Focus CII = Advanced Bionics Corporation Clarion HiFocus CII with positioner; MECombi40+HS = Med-El Combi 40+ compressed.

Screening Tests

Pure-tone air-conduction thresholds were obtained for all normal-hearing listeners at octave frequencies from 250 Hz to 4000 Hz using a Grason-Stadler GSI 61 Clinical Audiometer and EAR insert earphones. For purposes of this study, normal hearing was defined as behavioral thresholds of 25 dB HL or better at all test frequencies. Additionally, all individuals included in this study had symmetrical audiometric hearing configurations (i.e., less than 20 dB HL difference between ears at one test frequency). Sound-field audiometric behavioral thresholds were also obtained for the cochlear implant recipients.

Screening for vision was completed prior to testing to ensure that all participants were capable of perceiving and encoding visual speech information. Normal or corrected-to-normal visual acuity of 20/25 or better was indicated for all study participants. Additionally, the Mini-Mental State Examination was administered to all individuals to assess global cognitive function (Folstein et al., 1975). All individuals who participated in this study received a score of 27 or better out of a possible 30 points. The mean score for cognitively intact individuals in the Folstein, Folstein, and McHugh (1975) study was 27.6 with a range of 24 to 30.

Procedures and Stimuli

Speech Tests

Three speech perception measures were administered to the hearing-impaired adults who used cochlear implants. The Consonant-Nucleus-Consonant (CNC) word recognition test (one list of 50 words) (Peterson and Lehiste, 1962), the sentences from the Hearing in Noise Test (HINT) sentence recognition test (two lists of 10 sentences each) (Nilsson et al., 1994), and the City University of New York (CUNY) sentence test (two lists of 12 sentences each) (Boothroyd et al., 1988) were presented to the cochlear implant recipients in an Industrial Acoustics Company (IAC) sound booth. All of the speech tests were administered without additional background noise, via a sound-field speaker positioned at 0° azimuth. The standard procedure for the HINT test (i.e., determining a sentence reception threshold in noise) was not used. Rather, only the sentence lists from this test were used, to assess speech understanding in quiet. The stimuli for both the HINT sentences and CNC word test were presented at 70 dB SPL. The CNC word test was administered first, followed by the HINT sentences and the CUNY sentence test. Additionally, the CUNY sentence test was presented in three modalities in the following order: auditory-only (A), visual-only (V) and audiovisual (AV). All study participants were instructed to repeat the stimuli they heard or saw for these tasks. Guessing was encouraged. For all tests, a percentage correct score was obtained as the dependent measure.

AV Asynchrony Test

The AV asynchrony detection task used in this experiment was the same as that employed by Conrey and Pisoni (2006). A list of ten familiar English words was presented to the listeners using a single talker. The words were chosen from the Hoosier Audiovisual Multitalker Database, which contains digitized movies of isolated monosyllabic words spoken by single talkers (Lachs and Hernandez, 1998). The most intelligible talker of this database, as determined by Lachs (1999), was chosen for stimulus presentation. To prepare synchronous and asynchronous AV stimuli, Final Cut Pro 3 (copyright 2003, Apple Computer, Inc.) was used to manipulate the audio and visual signals. The stimuli were prepared so that the only cue that could be used to make judgments about the synchrony of the signals was the temporal relationship between the audio and visual signals. Specifically, the audio track did not play while the screen was blank, and all of the speech sounds and active articulatory movements remained within the movie.

Previous research on AV synchrony perception has revealed that normal-hearing young adults have a fairly wide range over which they will judge AV signals as being synchronous (Grant et al., 2004, Grant and Greenberg, 2001, McGrath and Summerfield, 1985). AV asynchronous signals have been judged to be synchronous over a range of approximately 200-250 ms (i.e., from the audio signal leading the visual signal by approximately 50 ms to the visual signal leading the audio signal by approximately 200 ms). For purposes of this study, therefore, a wide range of asynchronous stimuli was used in order to ensure that study participants were able to judge the stimuli as being asynchronous or synchronous. The stimuli from Conrey and Pisoni (2006) ranged from the auditory signal leading the visual signal by 300 ms (i.e., A300V) to the visual signal leading the auditory signal by 500 ms (i.e., V500A). Twenty-five asynchrony levels (i.e., differences in presentation timing of the auditory and visual signals) that covered a range of 800 ms from A300V to V500A were used. Successive levels of asynchrony, either audio-leading or visual-leading, differed by 33.33 ms. For each of the ten stimulus words, nine stimuli had auditory leads, one was synchronous, and 15 had visual leads. As a result, a total of 250 trials were presented to the participants in a randomized order. The visual and audio stimuli were presented using an Apple G4 computer and Advent sound field speakers, respectively. The speakers were placed at ± 45° azimuth from the listeners, who were seated approximately 19 inches from both the speakers and a Dell flat screen computer monitor. Both the normal-hearing adults and the cochlear implant recipients were tested using this procedure.
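The stimulus grid described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic (25 levels spaced 33.33 ms apart, crossed with ten words), not the software used in the study; the function names, the `word1` to `word10` placeholders, and the seeded shuffle are assumptions introduced here.

```python
import random

STEP_MS = 100 / 3  # 33.33 ms between adjacent asynchrony levels

def asynchrony_levels():
    """Return the 25 offsets in ms (negative = audio leads, positive = visual leads)."""
    return [k * STEP_MS for k in range(-9, 16)]

def label(offset_ms):
    """Format an offset using the A300V / V500A naming used in the text."""
    magnitude = round(abs(offset_ms))
    if magnitude == 0:
        return "0"
    return f"A{magnitude}V" if offset_ms < 0 else f"V{magnitude}A"

def build_trials(words, seed=None):
    """Cross every word with every asynchrony level and shuffle the trial order."""
    trials = [(word, level) for word in words for level in asynchrony_levels()]
    random.Random(seed).shuffle(trials)
    return trials

# Placeholder word list (hypothetical; the actual ten words are not listed here).
words = [f"word{i}" for i in range(1, 11)]
levels = asynchrony_levels()
trials = build_trials(words, seed=0)
print(label(levels[0]), label(levels[-1]), len(levels), len(trials))
```

Counting the negative, zero, and positive offsets in `levels` gives the nine audio-leading, one synchronous, and 15 visual-leading conditions described in the text.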

Before the session began, the participants were given both written and oral instructions explaining the task and were presented with examples of asynchronous and synchronous AV stimuli. For each trial, the participants were asked to judge whether the AV stimulus was synchronous or asynchronous (“in sync” or “not in sync”). They were instructed to press one button on a response box if they thought the audio and visual stimuli were synchronous and a different button if they thought the stimuli were asynchronous. In order to alert the participants to an upcoming AV token, a fixation mark (“+”) flashed on the computer screen for 200 ms, followed by a blank screen for 300 ms. The audio components of the video presentation were presented at 70 dB SPL for all study participants without additional background noise.

RESULTS

The behavioral audiometric threshold data for the cochlear implant recipients and the normal-hearing individuals are displayed in Tables 2 and 3, respectively. The cochlear implant recipient data presented in Table 2 reveal similar mean behavioral threshold responses for middle-aged and elderly cochlear implant recipients at 250 Hz and 500 Hz. A one-way ANOVA revealed no significant differences between the two groups for these two warble tone behavioral thresholds. Significant differences between the middle-aged and elderly cochlear implant recipients were obtained for the 1000 Hz [F(1,25)=6.16, p=0.02], 2000 Hz [F(1,25)=7.14, p=0.01] and 4000 Hz [F(1,25)=4.56, p=0.04] behavioral thresholds. Poorer thresholds for these frequencies were obtained for the elderly adults compared to the middle-aged adults. Additionally, one-way ANOVAs performed using the normal-hearing behavioral threshold data presented in Table 3 revealed significant differences in thresholds between middle-aged and older adults for the right ear at 1000 Hz [F(1,21)=8.42, p=0.009] and 4000 Hz [F(1,21)=8.79, p=0.008]. Significant left ear differences between the two age groups also were noted at 1000 Hz [F(1,21)=4.48, p=0.04] and 4000 Hz [F(1,21)=4.85, p=0.04]. A significant difference in the left ear pure tone average (PTA: behavioral thresholds averaged at 500 Hz, 1000 Hz and 2000 Hz) was revealed [F(1,21)=5.50, p=0.03], but no significant difference between the two age groups for the right PTA was indicated. Previous research has found that individuals over the age of 60 experience significant hearing loss at frequencies above 4000 Hz (Pearson et al., 1995, Lee et al., 2005). We cannot, therefore, rule out the possibility that the older adults who participated in this study had significant hearing loss at 8000 Hz. A hearing loss at 8000 Hz could have implications for the perception and detection of all of the frequency components of the words that were used in the asynchrony detection task.
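As an illustration of how a one-way ANOVA of this kind is computed, the following Python sketch derives the F statistic from between-group and within-group sums of squares, using the 1000 Hz sound-field thresholds listed in Table 2. It is a minimal sketch rather than the statistical software used in the study, and it assumes that the two implants of participant adc1 are entered as separate observations, which is consistent with the group mean reported in Table 2. The function and variable names are introduced here for illustration.

```python
def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variability of the group means around the grand mean.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variability of individual values around their own group mean.
    ss_within = sum(sum((x - sum(g) / len(g)) ** 2 for x in g) for g in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# 1000 Hz thresholds (dB HL) from Table 2; adc1-R and adc1-L are assumed
# to count as two separate observations in the elderly group.
middle_aged_1000 = [24, 20, 22, 28, 26, 40, 24, 24, 28, 30, 32, 12]
elderly_1000 = [36, 38, 28, 32, 30, 32, 25, 28, 36, 24, 28, 28, 40, 40]

f_stat, df_b, df_w = one_way_anova(middle_aged_1000, elderly_1000)
print(f"F({df_b},{df_w}) = {f_stat:.2f}")
```

Under these assumptions the F value comes out at approximately 6.16, in line with the value reported for 1000 Hz. The degrees of freedom printed by the sketch follow directly from the 26 tabulated values and may differ from the reported ones depending on how observations were counted in the original analysis.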

Table 2.

Sound-field behavioral audiometric thresholds for cochlear implant recipients. Thresholds are listed in dB HL for each test frequency.

| Middle-Aged Subjects | 250 Hz | 500 Hz | 1000 Hz | 2000 Hz | 4000 Hz |
|---|---|---|---|---|---|
| abf1 | 28 | 24 | 24 | 22 | 26 |
| abi1 | 14 | 22 | 20 | 22 | 22 |
| abk1 | 22 | 34 | 22 | 22 | 28 |
| abn1 | 24 | 26 | 28 | 22 | 26 |
| abs1 | 24 | 28 | 26 | 22 | 38 |
| abv1 | 40 | 40 | 40 | 35 | 35 |
| abw1 | 18 | 26 | 24 | 22 | 24 |
| aby1 | 18 | 28 | 24 | 32 | 32 |
| acb1 | 22 | 26 | 28 | 20 | 28 |
| acr1 | 44 | 36 | 30 | 28 | 32 |
| adh1 | 36 | 35 | 32 | 28 | 28 |
| adi1 | 36 | 12 | 12 | 18 | 26 |
| Mean | 27.2 | 28.1 | 25.8 | 24.4 | 28.8 |
| Elderly Subjects | 250 Hz | 500 Hz | 1000 Hz | 2000 Hz | 4000 Hz |
| abg1 | 24 | 32 | 36 | 36 | 32 |
| abh1 | 28 | 36 | 38 | 28 | 36 |
| abj1 | 24 | 24 | 28 | 28 | 30 |
| abm1 | 32 | 32 | 32 | 31 | 36 |
| abo1 | 26 | 24 | 30 | 26 | 28 |
| abp1 | 24 | 26 | 32 | 30 | 38 |
| abr1 | 30 | 35 | 25 | 20 | 20 |
| abq1 | 20 | 22 | 28 | 28 | 28 |
| abz1 | 32 | 38 | 36 | 32 | 36 |
| acc1 | 22 | 22 | 24 | 28 | 34 |
| acd1 | 26 | 32 | 28 | 26 | 30 |
| acf1 | 20 | 30 | 28 | 28 | 34 |
| adc1-R / adc1-L | 42 / 34 | 42 / 36 | 40 / 40 | 34 / 36 | 44 / 40 |
| Mean | 27.4 | 30.8 | 31.8 | 29.6 | 33.3 |

Table 3.

Behavioral audiometric thresholds for normal hearing listeners. Thresholds were obtained using insert earphones and are listed in dB HL.

| Middle-Aged Subjects | Right 250 Hz | Right 500 Hz | Right 1000 Hz | Right 2000 Hz | Right 4000 Hz | PTA-R | Left 250 Hz | Left 500 Hz | Left 1000 Hz | Left 2000 Hz | Left 4000 Hz | PTA-L |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NH1 | 20 | 15 | 5 | 5 | 15 | 8.3 | 15 | 5 | 5 | 0 | 5 | 3.3 |
| NH2 | 10 | 5 | 0 | -5 | 10 | 0 | 15 | 10 | 0 | 5 | 5 | 5 |
| NH5 | 5 | 20 | 10 | 15 | 15 | 15 | 10 | 10 | 5 | 10 | 10 | 8.3 |
| NH9 | 15 | 15 | 20 | 10 | 15 | 15 | 10 | 10 | 10 | 10 | 15 | 10 |
| NH10 | 10 | 10 | 10 | 10 | 0 | 10 | 10 | 10 | 5 | 10 | 5 | 8.3 |
| NH11 | 15 | 20 | 10 | 10 | 15 | 13.3 | 10 | 10 | 5 | 10 | 15 | 8.3 |
| NH14 | 5 | 5 | 0 | 5 | 5 | 3.3 | 5 | 5 | 5 | 5 | 15 | 5 |
| NH15 | 10 | 10 | 10 | 15 | 5 | 11.6 | 10 | 5 | 10 | 15 | 15 | 10 |
| NH17 | 0 | 0 | 10 | 5 | 5 | 5 | 10 | 5 | 5 | 5 | 5 | 5 |
| NH18 | 10 | 10 | 10 | 5 | 5 | 8.3 | 10 | 15 | 10 | 15 | 10 | 13.3 |
| NH19 | 15 | 15 | 10 | 10 | 5 | 11.7 | 15 | 10 | 15 | 5 | 15 | 10 |
| NH20 | 10 | 5 | 10 | 10 | 20 | 8.3 | 15 | 5 | 5 | 15 | 10 | 8.3 |
| Mean | 10.4 | 10.8 | 8.8 | 7.9 | 9.6 | 9.2 | 11.3 | 8.3 | 6.7 | 8.8 | 10.4 | 7.9 |
| Elderly Subjects | | | | | | | | | | | | |
| NH25 | 20 | 25 | 20 | 10 | 25 | 18.3 | 20 | 25 | 25 | 10 | 20 | 20 |
| NH28 | 5 | 10 | 15 | 10 | 25 | 11.7 | 10 | 5 | 5 | 20 | 25 | 10 |
| NH32 | 10 | 5 | 20 | 0 | 15 | 8.3 | 10 | 10 | 5 | 5 | 15 | 6.7 |
| NH33 | 15 | 15 | 15 | 15 | 15 | 15 | 10 | 10 | 10 | 10 | 10 | 10 |
| NH35 | 20 | 5 | 10 | 5 | 10 | 6.7 | 15 | 10 | 10 | 15 | 5 | 11.7 |
| NH36 | 10 | 5 | 15 | 20 | 20 | 13.3 | 0 | 15 | 5 | 10 | 15 | 10 |
| NH42 | 15 | 10 | 20 | 10 | 15 | 13.3 | 20 | 20 | 20 | 5 | 15 | 15 |
| NH47 | 10 | 10 | 15 | 10 | 15 | 11.7 | 5 | 10 | 10 | 15 | 15 | 11.7 |
| NH49 | 5 | 0 | 5 | 5 | 10 | 3.3 | 0 | 0 | 10 | 5 | 15 | 5 |
| NH48 | 20 | 10 | 15 | 25 | 20 | 16.7 | 10 | 10 | 15 | 20 | 15 | 15 |
| Mean | 13 | 9.5 | 15 | 11 | 17 | 11.8 | 10 | 11.5 | 11.5 | 11.5 | 15 | 11.5 |

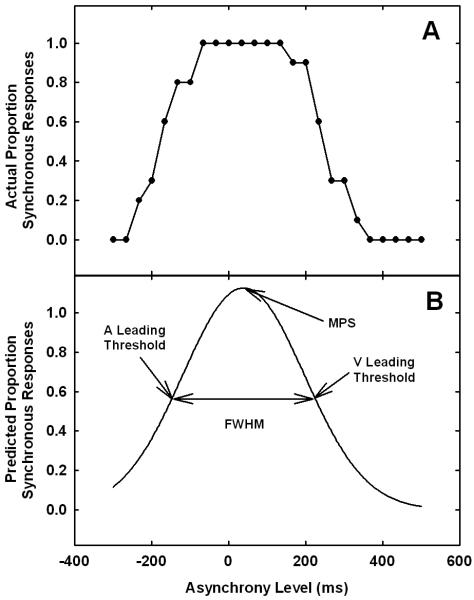

An individual example of an AV asynchrony function for a cochlear implant recipient is displayed in Figure 1 Panel A. In this figure, the mean proportion of synchronous responses is presented as a function of the asynchrony level in milliseconds. On the abscissa, negative asynchrony levels indicate that the auditory signal led the visual signal by a specified time (e.g., A300V), the zero point indicates that both the audio and visual signals were synchronous in time (i.e., 0), and positive asynchrony levels indicate that the visual signal led the audio signal (e.g., V400A). The ordinate axis represents the proportion of synchronous responses that were reported at a specific asynchrony level. Each AV asynchrony level was presented using 10 different words and the listener's task was to judge whether or not the stimulus was out of sync. For this particular example, the trials of A300V, A267V, V367A, V400A, V433A, V467A, and V500A were judged to be asynchronous with 100% accuracy. This individual reported that the audio and visual signals were completely synchronous for the asynchrony levels of A67V, A33V, 0, V33A, V67A, V100A, and V133A. For all other asynchrony levels, the study participant inconsistently reported that the AV stimuli were synchronous.
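The observed function plotted in Panel A is simply the proportion of "in sync" responses at each asynchrony level. A minimal Python sketch of that tally is given below. The response data are made up purely to illustrate the computation and are not taken from any participant; the function name and the data layout (offset in ms paired with a true/false judgment) are assumptions introduced here.

```python
from collections import defaultdict

def proportion_synchronous(responses):
    """Map each asynchrony level (ms) to the proportion of 'in sync' judgments.

    responses: iterable of (offset_ms, judged_synchronous) pairs, one per trial.
    """
    counts = defaultdict(lambda: [0, 0])  # level -> [n_sync, n_trials]
    for offset_ms, judged_synchronous in responses:
        counts[offset_ms][0] += int(judged_synchronous)
        counts[offset_ms][1] += 1
    return {level: n_sync / n for level, (n_sync, n) in sorted(counts.items())}

# Hypothetical responses for three levels, ten trials (one per word) each.
example = (
    [(-300, False)] * 10                      # always judged asynchronous
    + [(0, True)] * 10                        # always judged synchronous
    + [(200, True)] * 6 + [(200, False)] * 4  # inconsistent judgments
)
observed = proportion_synchronous(example)
print(observed)
```

With ten words per level, each proportion is a multiple of 0.1, which is why the individual functions have a stepped appearance.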

Figure 1.

An individual AV asynchrony function. Panel A displays the observed function and Panel B shows the Gaussian curve fitted to the observed function. The proportion of synchronous responses is shown as a function of the asynchrony level. A positive asynchrony level indicates that the visual signal was presented prior to the auditory signal and a negative level indicates that the auditory signal was presented before the visual signal. The A-leading threshold is the asynchrony level for the y value at 50% of the distance from the minimum to the maximum of the auditory leading portion of the curve. The V-leading threshold is the asynchrony level for the y value at 50% of the distance from the maximum to the minimum of the visual leading portion of the curve. The FWHM is the value of the asynchrony width of the half-maxima of the function. Also displayed is the mean point of synchrony (MPS).

In order to quantify the AV asynchrony functions, symmetrical Gaussian curves were fitted to individual asynchrony curves through the use of Sigma Plot 9.01 software and the following equation:

$$y = a \exp\left[-\frac{1}{2}\left(\frac{x - x_0}{b}\right)^{2}\right] \qquad (1)$$

In this equation, y is the observed proportion of synchronous responses for each individual at each asynchrony level, x. The parameter x0, the location of the peak of the fitted curve on the x-axis, represents the mean point of synchrony (MPS). Both a and b are parameters generated by the Sigma Plot software during curve fitting. The Gaussian curve fitted to the individual asynchrony function shown in Panel A is displayed in Panel B of Figure 1. Note that because this is a fitted curve capable of modeling both symmetric and asymmetric data around the 0 point, some of the maximum proportioned responses obtained were actually larger than 1.0 as was shown with the collected data presented in Panel A. The four features that describe this AV asynchrony function are the MPS, the auditory (A) leading threshold, the visual (V) leading threshold and the full-width half maximum (FWHM). The A-leading threshold is the asynchrony level for the y value at 50% of the distance from the minimum to the maximum of the auditory leading portion of the curve (i.e., the left portion of the curve). Similarly, the V-leading threshold is the asynchrony level for the corresponding y value at 50% of the distance from the maximum to the minimum of the visual leading portion (i.e., the right portion) of the Gaussian function. The FWHM is the value of the asynchrony width at the half-maxima (i.e., 50%) of the function. This value was obtained by adding the absolute value of the A-leading threshold (in ms) to the V-leading threshold (in ms). For this individual, the MPS was 39.15 ms, the A-leading threshold was −145.23 ms, the V-leading threshold was 226.65 ms, and the FWHM was 371.88 ms (i.e., 145.23 + 226.65).
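The relationship between the thresholds and the FWHM can be checked with a short Python sketch. It assumes the three-parameter Gaussian form y = a·exp(−0.5·((x − x0)/b)²), which matches the parameters named in the text but is an assumption about the exact fitting function. The analytic half-maximum helper further assumes a zero baseline, so it only approximates thresholds defined from the minimum and maximum of the curve over the tested range. All function names are introduced here for illustration.

```python
import math

def gaussian(x, a, b, x0):
    """Three-parameter Gaussian: peak height a, width parameter b, center x0."""
    return a * math.exp(-0.5 * ((x - x0) / b) ** 2)

def half_max_thresholds(b, x0):
    """Asynchrony levels where the Gaussian falls to half of its peak height."""
    half_width = b * math.sqrt(2 * math.log(2))
    return x0 - half_width, x0 + half_width

def fwhm_from_thresholds(a_lead_ms, v_lead_ms):
    """Width between thresholds; equals |A| + V when the A-leading value is negative."""
    return v_lead_ms - a_lead_ms

# Thresholds reported in the text for the example participant.
fwhm = fwhm_from_thresholds(-145.23, 226.65)
print(round(fwhm, 2))

# Illustrative (made-up) parameters to show the half-maximum property.
lo, hi = half_max_thresholds(b=100.0, x0=40.0)
print(gaussian(lo, 1.0, 100.0, 40.0), gaussian(hi, 1.0, 100.0, 40.0))
```

The first computation reproduces the 371.88 ms worked in the text. For a zero-baseline Gaussian the same width can also be written as 2·sqrt(2·ln 2)·b, which is roughly 2.355·b.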

There were 9 study participants who did not identify asynchronous signals with 100% accuracy when the A stimulus led the V stimulus by 300 ms. In all of these cases, however, the participants identified the asynchrony with greater than 60% accuracy, and the majority of these participants (i.e., 7) identified the asynchronous relationship with greater than 80% accuracy. Similarly, there were 8 participants who did not identify the asynchronous relationship with 100% accuracy when the V signal led the A signal by 500 ms. Of these 8 participants, 6 identified the asynchronous relationship with 90% accuracy and 2 identified it with 80% accuracy. For both ends of the asynchrony spectrum, therefore, it was possible to obtain the point at which 50% of the responses were judged to be asynchronous. Future work, however, should expand the time frame over which the detection of audiovisual asynchrony is examined for cochlear implant recipients.

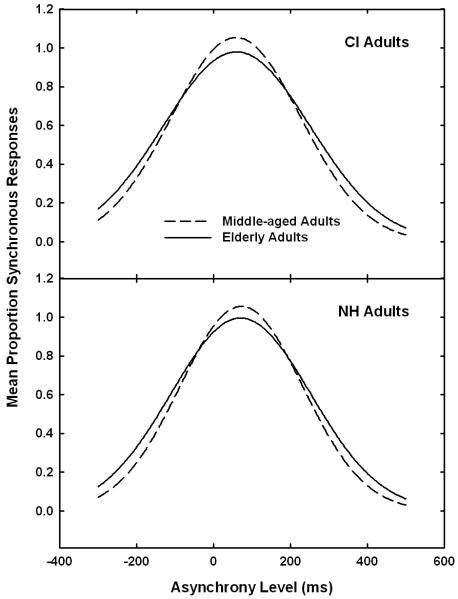

The mean AV asynchrony data for all of the cochlear implant recipients and the normal-hearing adults are shown in Figure 2. For both panels, the mean proportion of synchronous responses is displayed as a function of the asynchrony level. The top panel of the figure shows the data for the cochlear implant recipients and the bottom panel displays the data for the normal-hearing adults. In both panels, the overall results for the middle-aged adults are shown with the dotted line, and the data for the older adults are shown using the solid line. Additionally, the MPS, the A-leading threshold, the V-leading threshold and the FWHM values for the normal-hearing and cochlear implant recipients are presented in Table 4.

Figure 2.

The mean AV asynchrony data for the cochlear implant recipients and normal-hearing adults, generated using a curve fitting procedure described in the text. The mean proportion of the synchronous responses is displayed as a function of the asynchrony level. The top panel shows the data for the cochlear implant recipients and the bottom panel shows the data for the normal-hearing listeners. The solid lines show the elderly adult data and the dotted lines show the middle-aged adult data.

Table 4.

Mean Asynchrony Data.

| | MPS | A-leading Threshold | V-leading Threshold | FWHM |
|---|---|---|---|---|
| Middle-Aged CI | 58.4210 | −135.9933 | 260.8817 | 396.8750 |
| Elderly CI | 59.8846 | −154.1708 | 295.8292 | 450.0000 |
| Middle-Aged NH | 72.3434 | −112.3147 | 262.6853 | 375.0000 |
| Elderly NH | 70.7044 | −134.0327 | 294.0923 | 428.1250 |

Note: Values are in milliseconds; MPS: mean point of synchrony; FWHM: Full Width Half Maximum
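The arithmetic behind Table 4 can be checked with a short sketch. It confirms that each FWHM equals the absolute A-leading threshold plus the V-leading threshold, and it averages the FWHMs by age group. The unweighted averaging assumes equal weight for the cochlear implant and normal-hearing groups, which is an assumption made here for illustration; the variable and function names are hypothetical.

```python
# Table 4 values in ms: (MPS, A-leading threshold, V-leading threshold, FWHM)
table4 = {
    "Middle-Aged CI": (58.4210, -135.9933, 260.8817, 396.8750),
    "Elderly CI": (59.8846, -154.1708, 295.8292, 450.0000),
    "Middle-Aged NH": (72.3434, -112.3147, 262.6853, 375.0000),
    "Elderly NH": (70.7044, -134.0327, 294.0923, 428.1250),
}

def fwhm_from_thresholds(a_leading, v_leading):
    """FWHM as described in the text: |A-leading threshold| + V-leading threshold."""
    return abs(a_leading) + v_leading

# Largest disagreement between the tabulated and recomputed FWHM values.
max_discrepancy = max(
    abs(fwhm_from_thresholds(a_lead, v_lead) - fwhm)
    for _, a_lead, v_lead, fwhm in table4.values()
)

# Unweighted mean FWHM per age group (assumes equal weighting of CI and NH groups).
middle_aged_mean = (table4["Middle-Aged CI"][3] + table4["Middle-Aged NH"][3]) / 2
elderly_mean = (table4["Elderly CI"][3] + table4["Elderly NH"][3]) / 2

print(middle_aged_mean, elderly_mean)  # 385.9375 439.0625
```

These averages agree with the approximate values of 386 ms and 440 ms cited in the Discussion.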

Two-way ANOVA analyses were performed using age and hearing status (i.e., cochlear implant recipients or normal-hearing adults) as independent variables and MPS, A-leading threshold, V-leading threshold, and FWHM as the dependent variables. A significant age effect was obtained for the A-leading threshold [F(1,46)=4.989, p=0.03] and for the FWHM [F(1,46)=4.921, p=0.03]. The power for both of these results, however, was 0.58, which is below the 0.80 standard. For these two outcomes, the middle-aged adults (i.e., cochlear implant recipients and normal-hearing adults) had A-leading thresholds that were closer to the point of AV synchrony (i.e., 0 on the abscissa in Figure 2) and had narrower FWHMs than the elderly adults. No other main effects or interactions were obtained for any of the other analyses.
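As a rough cross-check of the reported significance levels, the sketch below converts an F statistic with 1 numerator degree of freedom into a p value. It relies on the identity that F(1, df) is the square of a Student's t variable with df degrees of freedom, and it integrates the t density numerically with Simpson's rule. This is an illustrative calculation with hypothetical function names, not the statistical software used in the study.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    return math.exp(log_norm) / math.sqrt(df * math.pi) * (1 + x * x / df) ** (-(df + 1) / 2)

def p_value_f_1_df(f_stat, df_error, steps=2000):
    """Upper-tail p value for F(1, df_error), using F(1, df) = t(df) ** 2."""
    t = math.sqrt(f_stat)
    h = t / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df_error)
    central_area = 2 * (h / 3) * total  # P(-t < T < t), by symmetry
    return 1 - central_area

p_a_leading = p_value_f_1_df(4.989, 46)  # age effect on the A-leading threshold
p_fwhm = p_value_f_1_df(4.921, 46)       # age effect on the FWHM
print(round(p_a_leading, 3), round(p_fwhm, 3))
```

Both values fall close to the reported p = 0.03.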

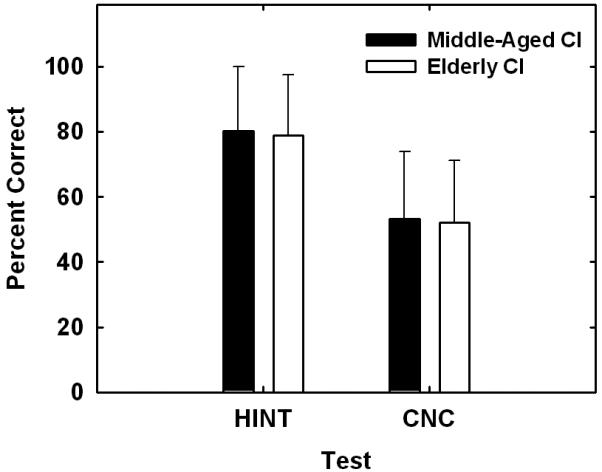

The results obtained from the cochlear implant recipients for the HINT sentences and CNC word test are presented in Figure 3. This figure displays the means and standard deviations for both of the perception tests. The solid bars show the data for the middle-aged participants and the open bars represent the data for the elderly adults. The mean percent correct scores for the HINT sentences and the CNC test were 79.49% and 52.64%, respectively. One-way ANOVA analyses revealed no significant differences in mean correct scores between the middle-aged and elderly cochlear implant recipients for the HINT sentences and CNC perception tests.

Figure 3.

Mean percent correct scores for the HINT sentences and CNC speech perception tests. The solid bars show the data for the middle-aged participants and the white bars show the data for the elderly participants. The error bars display the standard deviation.

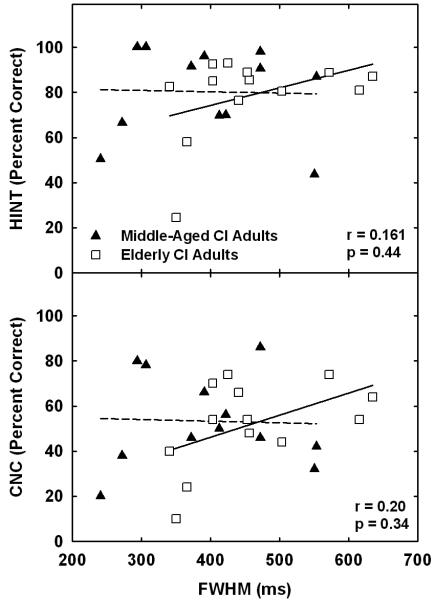

The correlations between the HINT sentences and CNC test scores and the FWHM data are presented in Figure 4. The top panel of Figure 4 shows the correlation between the results from the HINT sentences and the FWHM data, and the bottom panel shows the correlation results for the CNC word test and the FWHM data. The data from the middle-aged cochlear implant recipients are identified using the black triangles and the data for the elderly cochlear implant recipients are shown using the open squares. The dashed and solid lines represent the linear regression results for the middle-aged and elderly adults, respectively. The overall Pearson correlation results for both groups are displayed in the bottom right-hand corner of each panel. The correlation coefficients for the two subgroups are presented in Table 5.

Figure 4.

Correlation results for the HINT sentences and CNC tests with the FWHM data. The top panel shows the results for the HINT data versus the FWHM data and the bottom panel shows the CNC data versus the FWHM data. The solid triangles and white squares show individual data for the middle-aged and elderly cochlear implant recipients, respectively. Linear regression lines for the middle-aged and elderly adults are displayed using the dashed and solid lines, respectively. The Pearson correlation results for the overall distribution of middle-aged and elderly adults, along with the significance levels, are displayed in each panel.

Table 5.

Pearson correlation coefficients and significance values in parentheses (an asterisk denotes significant findings at the 0.05 level)

| FWHM | HINT | CNC | CUNY A | CUNY V | CUNY AV |
|---|---|---|---|---|---|
| Middle-aged CI | −0.03 (0.92) | −0.04 (0.91) | −0.30 (0.33) | −0.22 (0.50) | −0.61 (0.04)* |
| Elderly CI | 0.41 (0.17) | 0.50 (0.09) | 0.53 (0.06) | −0.21 (0.49) | −0.23 (0.44) |

For the data from the HINT sentences and CNC test, the elderly adults displayed increasing FWHMs with better auditory-only speech perception results. As shown in Table 5, however, the Pearson correlation analyses revealed no significant relationship between either the HINT sentences and FWHM data or the CNC and FWHM data (elderly HINT and FWHM: r = 0.406, p = 0.17; elderly CNC and FWHM: r = 0.495, p = 0.09). Conversely, the results from the middle-aged adults tended to show a slightly negative trend. Specifically, there was a very slight tendency for poorer speech perception scores to be associated with wider FWHMs. These findings also were not significant (middle-aged HINT and FWHM: r = −0.031, p = 0.92; middle-aged CNC and FWHM: r = −0.037, p = 0.91).

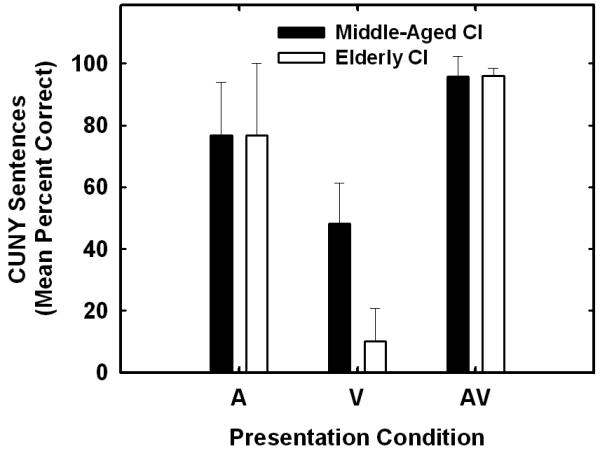

Figure 5 shows the mean results obtained from the CUNY sentence test. The data obtained from each of the three presentation conditions, auditory-alone (A), visual-alone (V) and audiovisual (AV), are displayed. The solid bars show the data for the middle-aged adults with a cochlear implant and the white bars show the data for the elderly adults who used cochlear implants. The error bars represent the standard deviation around the mean. It can be observed in the figure that the AV scores for both the elderly and middle-aged groups are similar and very close to ceiling. The A scores also are similar for both groups and are close to the 80% correct point. No significant differences in performance between the elderly and middle-aged groups were observed for either the A or the AV condition, as determined using one-way ANOVA analyses.

Figure 5.

Mean percent correct scores for the CUNY sentence test. The mean percent correct CUNY scores for each presentation condition (i.e., A, V and AV) are shown. The solid bars show the data for the elderly adults and the white bars show the data for the middle-aged adults. The error bars represent the standard deviation around the mean.

The data for the CUNY V scores shown in Figure 5, however, suggest that the middle-aged cochlear implant recipients were better speechreaders than the older adults. The performance of the elderly individuals on this task was close to floor, while the middle-aged adults were able to complete this task with near 50% accuracy on average. A one-way ANOVA indicated that the difference in V scores between these two groups was highly significant [F(1,24) = 62.36, p<0.0001].

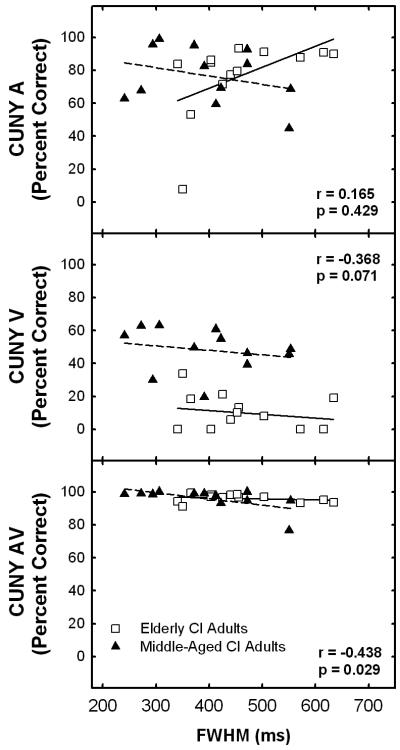

The data presented in Figure 6 show the correlation between the CUNY speech perception data and the AV asynchrony detection results for the hearing-impaired adults who use cochlear implants. In this figure, the CUNY speech perception results are displayed on the ordinate and the FWHM data are presented on the abscissa. The solid triangles represent individual data from the middle-aged cochlear implant recipients, and the white squares represent data from the elderly cochlear implant recipients. Linear regression lines for the middle-aged and elderly adults are indicated by the dashed and solid lines, respectively, and the Pearson correlation results for the collective set of data are presented in each panel.

Figure 6.

Correlation results for the CUNY (percent correct) and FWHM (ms) data. The solid triangles and the white squares represent individual data for the middle-aged and elderly cochlear implant recipients, respectively. The dotted and dashed lines represent the linear regression results for the middle-aged and elderly adults, respectively. The Pearson correlation results and the significance levels are displayed in each individual graph.

Similar to the results obtained with the HINT sentences and the CNC word test, the A results presented in the top panel suggest that the CUNY scores decrease with increasing FWHMs for the middle-aged adults, but the elderly adults show the opposite tendency. These trends were not significant for either group as determined using Pearson correlation analyses, as displayed in Table 5 (middle-aged: r = −0.307, p = 0.33; elderly: r = 0.531, p = 0.06). The overall correlation also was not found to be significant, as shown in the bottom right corner of the top panel. For the V results, shown in the middle panel, there was a tendency for both groups to have poorer speech perception scores associated with wider FWHMs. This finding did not reach significance for the group as a whole (r = −0.368, p = 0.071), nor did it reach significance for either subgroup, as shown in Table 5 (middle-aged: r = −0.215, p = 0.50; elderly: r = −0.209, p = 0.49). A significant correlation (r = −0.438, p = 0.029) was obtained for the AV data, suggesting that wider FWHMs were associated with poorer AV speech perception scores. When examining the correlation results for each subgroup of participants, the middle-aged adults showed a significant correlation between AV speech perception and FWHMs (r = −0.609, p = 0.04), and conversely, the elderly group showed a non-significant result (r = 0.233, p = 0.44) (see Table 5). Due to the observed ceiling effects, however, these findings need to be viewed with some caution.

DISCUSSION

The goals of this initial study were to examine how hearing-impaired listeners with cochlear implants perceive asynchronous AV speech and to explore the effects of aging on the detection of AV asynchrony. A secondary objective of this study was to determine the association between AV asynchrony detection and speech understanding abilities in quiet. The results of this study revealed that both normal-hearing elderly individuals and elderly adults who use cochlear implants have significantly wider FWHMs than their middle-aged counterparts. Specifically, the elderly normal-hearing and cochlear implant participants perceived asynchronous spoken words as being synchronous over wider time windows than the middle-aged adults. The average FWHM for the elderly normal-hearing and cochlear implant participants was approximately 440 ms, compared to an average of 386 ms for the middle-aged normal-hearing individuals and cochlear implant recipients. For the speech perception tasks, no differences were observed for the auditory-alone conditions in the HINT, CNC and CUNY findings between the middle-aged and elderly cochlear implant recipients. Conversely, the results for the visual-only scores on the CUNY sentence test revealed that elderly cochlear implant recipients have significantly lower scores than the middle-aged cochlear implant recipients. These findings are similar to earlier results reported by Battmer, Gupta, Allum-Mecklenburg, and Lenarz (1995) and Hay-McCutcheon et al. (2005). Examination of the correlations between the detection of asynchronous AV speech and auditory-only and visual-only speech understanding abilities revealed that none of the findings from the speech perception tests (i.e., HINT, CNC and CUNY) were correlated with the FWHM data. However, a significant tendency for wider FWHM values to be correlated with poorer audiovisual CUNY results was observed for the middle-aged adults but not for the elderly adults.

AV Asynchrony Detection

The AV asynchrony findings reported here are similar to results previously reported in the literature. Conrey and Pisoni (2006) reported that the FWHM was on average 372 ms for young adults aged 18 to 22 years, which was very similar to the FWHM reported in the present study for the normal-hearing middle-aged adults (i.e., 375 ms). Grant and Greenberg (2001), McGrath and Summerfield (1985), and Pandey, Kunov, and Abel (1986) also reported findings on speech understanding using AV asynchronous material. All three papers reported that words in sentences can be successfully identified when the auditory and visual components are approximately 200-250 milliseconds out of sync. Although the findings from the current study cannot be directly compared to the results of these earlier studies because of procedural differences, it is clear that AV asynchronous speech is perceived as synchronous over a fairly wide window of several hundred milliseconds.

AV Asynchrony Detection and Aging

The findings from this study also suggest that age rather than hearing impairment is more closely linked with the detection of AV asynchronous speech. The data displayed in Figure 2 suggest that compared to normal-hearing and hearing-impaired middle-aged adults, older normal-hearing and hearing-impaired individuals have a significantly wider window over which they identify AV asynchronous speech as being synchronous. This study revealed that individuals who use a cochlear implant do not have more difficulty detecting AV asynchronous speech than individuals with normal hearing. To more fully understand the effects of aging and the use of a cochlear implant on the processing of AV asynchronous speech, further research should focus on both the identification and discrimination of AV asynchronous speech.

Previous studies have also shown that elderly people experience declines in other tasks associated with speech processing, such as gap detection and listening in noise (Stuart and Phillips, 1996, Fitzgibbons and Gordon-Salant, 1996, Gordon-Salant and Fitzgibbons, 2004, Pichora-Fuller and Souza, 2003). These declines in speech perception performance can be partially attributed to changes that occur within the peripheral auditory system (Souza and Turner, 1994, Humes, 1996, Schneider et al., 2000). However, other researchers have argued that differences in performance between younger and older adults on complex tasks such as gap detection and sound duration discrimination cannot be attributed exclusively to peripheral sensory deterioration (Fitzgibbons and Gordon-Salant, 1996, Gordon-Salant and Fitzgibbons, 2004, Pichora-Fuller and Souza, 2003). Most likely, neurophysiologic changes that occur within the central auditory system and other connected brain regions also contribute to the declines in performance observed in complex listening tasks with elderly individuals.

In terms of the auditory periphery, both the middle-aged and older control groups included in this study had hearing within normal limits. However, within the range of normal hearing, the behavioral audiometric threshold data indicated that the older normal-hearing individuals had significantly higher thresholds than the middle-aged normal-hearing individuals at 1000 Hz and 4000 Hz. Additionally, the sound field thresholds for the cochlear implant recipients revealed that the older study participants, compared to their middle-aged counterparts, had significantly higher thresholds at 1000 Hz, 2000 Hz and 4000 Hz. It is possible, therefore, that the differences in AV asynchrony detection could have been a direct consequence of the physiological differences in the peripheral auditory system between middle-aged and older individuals. The mid- and high-frequency information included in the asynchronous speech could have been processed differently at the periphery for the middle-aged adults compared to the older adults. Consequently, the differences in auditory processing could have influenced the detection of AV asynchrony.

Differences in the central auditory systems between middle-aged and older adults also should be considered in any account of the differences in AV asynchrony detection. Specifically, several studies have found that older adults experience difficulty with tasks that require them to divide their attention. Madden, Pierce, and Allen (1996) demonstrated that the reaction time to identify specific target signals from a group of distracting signals was significantly longer in an elderly group of individuals aged 63 to 70 years than in a young group of individuals aged 18 to 29 years. Additionally, Mayr (2001) reported that in a task requiring study participants to switch between different types of decisions between trials, older adults (mean age 71 years, SD=3.3 years) had significantly longer reaction times than younger adults (mean age 33 years, SD=1.4 years). It is possible, therefore, that the attentional demands of the current study (i.e., attending to both the auditory and visual streams and making a decision about their synchrony) placed greater processing demands on the older participants than on the middle-aged participants, and this could have contributed to the observed differences in performance between the two age groups.

AV Asynchrony Detection and Speech Perception in Hearing-Impaired Listeners with Cochlear Implants

Contrary to the findings of Conrey and Pisoni (2006), who examined AV asynchrony perception in young normal-hearing individuals, no significant relationship between the AV asynchrony detection task and the A and V CUNY scores was found for the hearing-impaired listeners with cochlear implants in this study (see Figure 6). In addition, because the CUNY V scores were not found to be strongly correlated with asynchronous AV speech detection for cochlear implant recipients, the differences observed in the detection of asynchronous audio-visual signals cannot be attributed wholly to differences in speechreading abilities between middle-aged and older adults.

A correlation was observed between the AV CUNY results and the FWHM data for the middle-aged cochlear implant recipients, but this finding needs to be interpreted with some caution due to the ceiling effects noted with all of the AV CUNY data. It is possible that if more variance in the CUNY AV scores had been obtained in the cochlear implant population, a more robust relationship between speech perception and AV asynchrony detection would have emerged.

An interesting trend, however, did emerge in the results from the auditory-only speech perception tests and the FWHM data. Specifically, for the elderly cochlear implant population, better performance on the HINT sentences, CNC and CUNY A tests tended to be correlated with wider FWHMs. Conversely, performance on these measures for the middle-aged adults revealed a flat relationship (i.e., no correlation). Because elderly adults demonstrated significantly wider FWHMs than middle-aged adults, further work with larger numbers of participants should explore the perception of AV asynchronous sentences (as opposed to words) and its correlation with the perception of auditory-only sentences in a group of middle-aged and elderly people who use cochlear implants. It is possible that elderly individuals who have good speech recognition skills rely less on visual input, and consequently have poorer speechreading skills than their middle-aged counterparts and are less likely to detect AV asynchrony. Further exploration in this area has the potential to provide insight into the processing of connected discourse rather than the perception of isolated words, which would in turn provide insights into how elderly individuals process speech in less than ideal listening conditions.

CONCLUSIONS

In summary, the findings from this first examination of the audiovisual integration skills of individuals who use cochlear implants suggest that aging has a greater effect on the detection of AV asynchronous speech than a severe-to-profound hearing loss that has been partially corrected through the use of a cochlear implant. Additionally, the temporal width of the AV asynchrony function was not correlated with speech perception skills for hearing-impaired individuals who use cochlear implants. However, when exploring the relationship between AV asynchrony detection and speech perception skills, the results suggest that middle-aged and elderly individuals might process auditory and visual speech cues differently in a range of word and sentence perception tasks.

ACKNOWLEDGMENTS

This research was supported in part by National Institutes of Health (NIDCD) T32 DC00012 and the Psi Iota Xi Philanthropic Organization. The efforts of the hearing-impaired and normal-hearing adults who participated in this study are gratefully acknowledged. We also would like to thank Brianna Conrey and Luis Hernandez for their help with setting up the stimuli used in this project and for all of the other technical support they provided during the course of this study. Comments from two reviewers on an earlier version of this paper are very much appreciated. Portions of these data were presented at the 2005 Conference on Implantable Auditory Prostheses, Pacific Grove, CA.

REFERENCES

- BATTMER RD, GUPTA SP, ALLUM-MECKLENBURG DJ, LENARZ T. Factors influencing cochlear implant perceptual performance in 132 adults. Annals of Otology Rhinology and Laryngology. 1995;166(Suppl):185–187.

- BOOTHROYD A, HNATH-CHISOLM T, HANIN L, KISHON-RABIN L. Voice fundamental frequency as an auditory supplement to the speechreading of sentences. Ear & Hearing. 1988;9:306–312. doi: 10.1097/00003446-198812000-00006.

- CIENKOWSKI KM, CARNEY AE. Auditory-visual speech perception and aging. Ear & Hearing. 2002;23:439–449. doi: 10.1097/00003446-200210000-00006.

- CONREY B, PISONI DB. Auditory-visual speech perception and synchrony detection for speech and nonspeech signals. Journal of the Acoustical Society of America. 2006;119:4065–4073. doi: 10.1121/1.2195091.

- DOUCET ME, BERGERON F, LASSONDE M, FERRON P, LEPORE F. Cross-modal reorganization and speech perception in cochlear implant users. Brain. 2006;129:3376–3383. doi: 10.1093/brain/awl264.

- FITZGIBBONS PJ, GORDON-SALANT S. Auditory temporal processing in elderly listeners. Journal of the American Academy of Audiology. 1996;7:183–189.

- FOLSTEIN MF, FOLSTEIN SE, MCHUGH PR. Mini Mental State: A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- GIRAUD AL, PRICE CJ, GRAHAM JM, TRUY E, FRACKOWIAK RSJ. Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron. 2001a;30:657–663. doi: 10.1016/s0896-6273(01)00318-x.

- GIRAUD AL, TRUY E, FRACKOWIAK R. Imaging plasticity in cochlear implant patients. Audiology and Neuro-Otology. 2001b;6:381–393. doi: 10.1159/000046847.

- GORDON-SALANT S, FITZGIBBONS PJ. Effects of stimulus and noise rate variability on speech perception by younger and older adults. Journal of the Acoustical Society of America. 2004;115:1808–1817. doi: 10.1121/1.1645249.

- GRANT KW, GREENBERG S. Speech intelligibility derived from asynchronous processing of auditory-visual information; International Conference on Auditory Visual Speech Processing; Scheelsminde, Denmark: 2001.

- GRANT KW, GREENBERG S, POEPPEL D, VAN WASSENHOVE V. Effects of spectro-temporal asynchrony in auditory and auditory-visual speech processing. Seminars in Hearing. 2004;3:241–255.

- GRANT KW, SEITZ PF. Measures of auditory-visual integration in nonsense syllables and sentences. Journal of the Acoustical Society of America. 1998;104:2438–2450. doi: 10.1121/1.423751.

- HAY-MCCUTCHEON MJ, PISONI DB, KIRK KI. Speech recognition skills in the elderly cochlear implant population: A preliminary examination; MidWinter Meeting of the Association for Research in Otolaryngology; New Orleans, LA: Feb 19-24, 2005.

- HUMES LE. Speech understanding in the elderly. Journal of the American Academy of Audiology. 1996;7:161–167.

- LACHS L. Use of partial stimulus information in spoken word recognition without auditory stimulation. Indiana University; Bloomington, IN: 1999.

- LACHS L, HERNANDEZ LR. Update: The Hoosier audiovisual multitalker database. Indiana University; Bloomington, IN: 1998.

- LEE FS, MATTHEWS LJ, DUBNO JR, MILLS JH. Longitudinal study of pure-tone thresholds in older persons. Ear & Hearing. 2005;26:1–11. doi: 10.1097/00003446-200502000-00001.

- MADDEN DJ, PIERCE TW, ALLEN PA. Adult age differences in the use of distractor homogeneity during visual search. Psychology and Aging. 1996;11:454–474. doi: 10.1037//0882-7974.11.3.454.

- MAYR U. Age differences in the selection of mental sets: The role of inhibition, stimulus ambiguity, and response-set overlap. Psychology and Aging. 2001;16:96–109. doi: 10.1037/0882-7974.16.1.96.

- MCGRATH M, SUMMERFIELD Q. Intermodal timing relations and audio-visual speech recognition by normal-hearing adults. Journal of the Acoustical Society of America. 1985:678–685. doi: 10.1121/1.392336.

- MIDDELWEERD MJ, PLOMP R. The effect of speechreading on the speech-reception threshold of sentences in noise. Journal of the Acoustical Society of America. 1987;82:2145–2147. doi: 10.1121/1.395659.

- NILSSON M, SOLI SD, SULLIVAN JA. Development of the Hearing in Noise Test for the measurement of speech reception thresholds in quiet and in noise. Journal of the Acoustical Society of America. 1994;95:1085–1099. doi: 10.1121/1.408469.

- PANDEY PC, KUNOV H, ABEL SM. Disruptive effects of auditory signal delay on speech perception with lipreading. The Journal of Auditory Research. 1986;26:27–41.

- PEARSON JD, MORRELL DH, GORDON-SALANT S, BRANT LJ, METTER EJ, KLEIN LL, FOZARD JL. Gender differences in a longitudinal study of age-associated hearing loss. Journal of the Acoustical Society of America. 1995;97:1196–1205. doi: 10.1121/1.412231.

- PETERSON GE, LEHISTE I. Revised CNC lists for auditory tests. Journal of Speech and Hearing Disorders. 1962;27:62–65. doi: 10.1044/jshd.2701.62.

- PICHORA-FULLER MK, SOUZA PE. Effects of aging on auditory processing of speech. International Journal of Audiology. 2003;42:2S11–2S16.

- SALTHOUSE TA, BABCOCK RL. Decomposing adult age differences in working memory. Developmental Psychology. 1991;27:763–776.

- SCHNEIDER BA, DANEMAN M, MURPHY DR, KWONG SEE S. Listening to discourse in distracting settings: The effects of aging. Psychology and Aging. 2000;15:110–125. doi: 10.1037//0882-7974.15.1.110.

- SOMMERS M, TYE-MURRAY N, SPEHAR B. Auditory-visual speech perception and auditory-visual enhancement in normal-hearing younger and older adults. Ear & Hearing. 2005;26:263–275. doi: 10.1097/00003446-200506000-00003.

- SOUZA PE, TURNER CW. Masking of speech in young and elderly listeners with hearing loss. Journal of Speech and Hearing Research. 1994;37:655–661. doi: 10.1044/jshr.3703.655.

- STUART A, PHILLIPS DP. Word recognition in continuous and interrupted broadband noise by young normal-hearing, older normal-hearing, and presbyacusic listeners. Ear & Hearing. 1996;17:478–489. doi: 10.1097/00003446-199612000-00004.

- SUMBY WH, POLLACK I. Visual contributions to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.