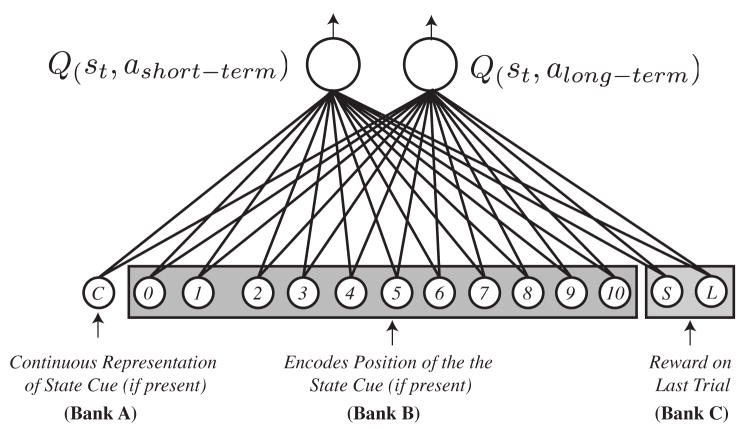

Figure 5.

Architecture of the Q-learning network model. These models use cues, such as experimenter-provided state cues or the reward on the previous trial, to estimate the value of each action. The input is a single vector of length 14 that encodes various aspects of the display (see the main text for details). A set of learned connection weights passes activation from the input units to the output nodes, which in turn estimate the current value of each state-action pair, Q(s, a). Critically, the models must learn through experience how perceptual cues in the task relate to the goal of maximizing reward.
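
As a rough illustration of the architecture described in the caption, the following minimal sketch implements a network in which a length-14 input vector feeds one output node per action through learned weights, and each output is read as an estimate of Q(s, a). Only the input length comes from the caption. The number of actions (`N_ACTIONS`), the absence of a hidden layer, the learning rate `alpha`, the discount `gamma`, and the delta-rule update are assumptions made for illustration and may differ from the model in the main text.

```python
import random

N_INPUTS = 14   # from the caption: a single input vector of length 14
N_ACTIONS = 2   # assumption: the caption does not state the number of actions


def init_weights(n_actions=N_ACTIONS, n_inputs=N_INPUTS, seed=0):
    """Small random weights, one row of input weights per output node."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.01, 0.01) for _ in range(n_inputs)]
            for _ in range(n_actions)]


def q_values(weights, x):
    """Pass activation from the input units to the output nodes.

    Each output is a weighted sum of the inputs, read as Q(s, a).
    """
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) for row in weights]


def update(weights, x, action, reward, next_x=None, alpha=0.1, gamma=0.0):
    """One delta-rule step on the weights of the chosen action (assumed rule).

    The target is the reward plus, if a next input is given, the discounted
    maximum Q-value of the next state. Returns the prediction error.
    """
    target = reward
    if next_x is not None:
        target += gamma * max(q_values(weights, next_x))
    td_error = target - q_values(weights, x)[action]
    row = weights[action]
    for i, x_i in enumerate(x):
        row[i] += alpha * td_error * x_i
    return td_error
```

In this sketch, only the weights feeding the chosen action's output node change on each trial, so the network learns from experience which input cues predict reward for which action.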