Abstract

Reaching toward a cup of coffee while reading the newspaper becomes exceedingly difficult when other objects are nearby. Although much is known about the precision of visual perception in cluttered scenes, relatively little is understood about acting within these environments – the spatial resolution of visuomotor behavior. When the number and density of objects overwhelm visual processing, crowding results, which serves as a bottleneck for object recognition. Despite crowding, featural information of the ensemble persists, thereby supporting texture perception. While texture is beneficial for visual perception, it is relatively uninformative for guiding the metrics of grasping. Therefore, it would be adaptive if the visual and visuomotor systems utilized the clutter differently. Using an orientation task, we measured the effect of crowding on vision and visually guided grasping and found that the density of clutter similarly limited discrimination performance. However, while vision integrates the surround to compute a texture, action discounts this global information. We propose that this dissociation reflects an optimal use of information by each system.

Keywords: crowding, clutter, grasping, kinematics, dual-visual systems, perception-action dissociation

Introduction

Few natural environments outside the laboratory provide instances where objects appear in complete isolation. The visual system must therefore rapidly process competing objects of interest to enable accurate perception. Visual crowding – a degradation of peripheral feature discrimination that varies with viewing eccentricity and density of surrounding objects – sets limits on an observer's ability to perceptually individuate and recognize objects in realistic, cluttered scenes (Bouma, 1970; He et al., 1996; Levi, 2008). The size of the crowded region is used to measure the functional spatial resolution of vision, thereby giving the lower bound on where visual awareness arises (He et al., 1997; Pelli, 2008). Given that one of the primary functions of vision is the guidance of action, and because we act within cluttered scenes every day, it is important to know how visual crowding limits the resolution of visually guided grasping.

When we reach toward an object, the visuomotor system must access metric information about the target, such as its actual size and orientation (Aglioti et al., 1995; Ganel et al., 2008). Information in the background of the visual scene – the clutter or context – is less informative for action, and may therefore be discounted. This contrasts with visual perception: the visual context in which an object is seen is informative and influences perception of the object in many ways. For example, the perceived brightness, color, texture, blurriness, size, distance, number, orientation, shape, motion, and even identity of an object depends on the sort of visual information present in the background (De Valois et al., 1986; Singer and D'Zmura, 1994; Palmer, 1999; Oliva and Torralba, 2007). Relevant to the current study, the perceptual system rapidly extracts the average featural information from a crowd of objects (see Figure 1). Possibly driven by over-integration, this ensemble information may support texture perception, and provide access to summary information about the scene (Parkes et al., 2001; Chong and Treisman, 2003; Alvarez and Oliva, 2008, 2009; Haberman and Whitney, 2009). Because contextual information may be differentially useful for perception and action, we might expect a dissociation between perceptual and visuomotor behavior in cluttered scenes.

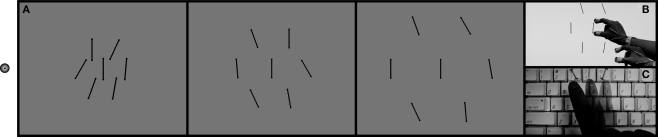

Figure 1.

Demonstration of stimulus and task. While fixating on the dot on the left (A), participants discriminated the central, target bar in each display by reaching and grasping the orientation of the target (B), or by a 3AFC perceptual response (C). The orientation of the target bar in each case above is vertical, yet it appears tilted in the direction of the ensemble, or average distractor orientation. Do we reach toward this ensemble orientation?

The goal of the present study was to address an ecological question: how does crowding impact visually guided behavior, and specifically the spatial resolution of grasping? In doing so, we also tested the hypothesis that the visuomotor system, unlike the perceptual system, is able to discount contextual information when a target is placed within a cluttered scene. Experiment 1 confirmed this hypothesis; although there was a similar level of crowding for visual and visuomotor discriminations, the visuomotor system discounted the information present in the background clutter. Experiment 2 further characterized the spatial resolution of grasping in the upper and lower visual fields, showing that, as in perception, the effect of crowding on grasping was reduced in the lower visual field.

Materials and Methods

Participants

Four observers (three male, one female; mean age 22.3; SD = 3.9) participated in both experiments. All participants were experienced psychophysical observers, and three were naïve to the purpose of the experiment. All had normal or corrected-to-normal vision and were right-hand dominant, as determined by their writing hand. Participants gave informed consent to participate in this study, which was approved by the Institutional Review Board of the University of California, Davis.

Apparatus

Stimuli were presented on a Toshiba Regza LCD monitor with a display resolution of 1,024 × 768 pixels and a refresh rate of 60 Hz. An iMac computer running MATLAB (The MathWorks Inc., Natick, MA, USA) and using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997) controlled stimulus presentation. The monitor was placed 51.5 cm from the observer, which was within comfortable reach of the participants’ right hand. A 1/4″ sheet of Plexiglas covered the screen to protect the monitor during reaching movements. An Optotrak Certus tracking system sampled participants’ hand position in 3-D at a rate of 60 Hz using infrared markers attached to the participants’ thumb and forefinger.

Stimuli

The stimulus consisted of a black central target bar surrounded by an equidistant radial array of distractor bars of identical size and color. Six distractor bars were placed at 0°, 60°, 120°, 180°, 240°, and 300° around the target (see Figure 1). Each bar was 4.4° (4 cm) long, and 0.24° (0.21 cm) wide, with a rounded top 0.35° (0.32 cm) in diameter. The background luminance of the monitor was 125 cd/m2 and the luminance of the bars was 0.22 cd/m2. Observers wore an eye patch over their left eye and maintained fixation on a small LED mounted to the side of the LCD monitor throughout the trial block.
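The paired angular and physical sizes above can be related through the standard visual-angle formula. The following is a minimal sketch, assuming the 51.5 cm viewing distance given in the Apparatus section and a stimulus viewed head-on (an approximation, since the stimuli were shown in the periphery); the function name `visual_angle_deg` is hypothetical.

```python
import math

def visual_angle_deg(size_cm, distance_cm):
    """Visual angle in degrees subtended by an object of size_cm
    viewed head-on from distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))

VIEWING_DISTANCE_CM = 51.5  # viewing distance from the Apparatus section

# Physical sizes (cm) as reported for the bars
for label, size_cm in [("bar length", 4.0), ("bar width", 0.21), ("rounded top", 0.32)]:
    angle = visual_angle_deg(size_cm, VIEWING_DISTANCE_CM)
    print(f"{label}: {angle:.2f} deg")
```

Under these assumptions the computed angles land close to the reported values; small differences in the second decimal place are expected from rounding and from the head-on approximation.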

In Experiment 1, the stimulus appeared along the horizontal meridian either 20°, 30°, or 40° to the right of the observers’ fixation. The density of the array (center-to-center spacing of the distractors and central target) varied from 4.3° (most crowded) to 10.7° (least crowded) in four steps of 1.6°. The orientation of the center target bar was 5°, 0°, or −5° from vertical. The target eccentricity, the density of the array, and the target orientation were randomly determined on each trial. Each flanking bar had a pseudorandom orientation within ±30° of vertical in intervals of 5°, such that the mean, or ensemble, orientation of the six distractor bars ranged from −15° to 15° about vertical in intervals of 5°. The distractor orientations were manipulated independently of the target orientation. Thus, the orientation of the distractors gave no information about the orientation of the target. In each trial block, observers were presented with an equal number of all possible target and mean distractor orientation combinations. Blocks of perceptual report trials and grasping trials were presented in random order. Each participant made 1,890 total judgments (3 eccentricities × 5 densities × 21 trials × 3 trial blocks × 2 types of report – perceptual or grasping).
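The design arithmetic in this paragraph can be checked with a short enumeration. This is only a sketch: reading the 21 trials per cell as the 3 target orientations crossed with the 7 mean distractor orientations is our assumption and is not stated explicitly in the text, and all variable names are hypothetical.

```python
from itertools import product

# Center-to-center spacings: 4.3 deg plus four further steps of 1.6 deg
densities = [round(4.3 + 1.6 * step, 1) for step in range(5)]
eccentricities = [20, 30, 40]                            # deg right of fixation
target_orientations = [-5, 0, 5]                         # deg from vertical
mean_distractor_orientations = list(range(-15, 16, 5))   # -15 ... 15 deg

# Assumption: one trial per target x ensemble orientation combination
combos = list(product(target_orientations, mean_distractor_orientations))
trials_per_cell = len(combos)

n_blocks = 3
n_report_types = 2  # perceptual report or grasping

total_judgments = (len(eccentricities) * len(densities) * trials_per_cell
                   * n_blocks * n_report_types)
print(densities)
print(trials_per_cell, total_judgments)
```

Under that reading, the enumeration reproduces the five spacings and the stated total of 1,890 judgments per participant.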

Procedure

Participants triggered each trial by pressing the spacebar on a keyboard. The stimulus appeared for 500 ms or until the participant removed their hand from the spacebar to make either a perceptual or visuomotor response. In separate interleaved trial blocks, observers discriminated the orientation of a central bar in one of two ways. In grasping trials, observers reached to the target and executed a pincer grasp with their thumb and forefinger as if they were actually grasping the target (Figure 1B). In perceptual trials, observers were instructed to make a standard 3AFC perceptual discrimination of the target bar's tilt by pressing one of three labeled keyboard buttons reflecting the three possible target tilts (Figure 1C). The angle of the participants’ grasp, the visuomotor dependent measure, was recorded as the angle between the forefinger and thumb as the forefinger came within 2.5 cm of the monitor. This distance was used to allow for slight variations in the position of the screen due to flexing of the protective cover. To directly compare continuous visuomotor responses to the perceptual 3AFC trials, the participants’ grasping angles were collapsed into one of three non-overlapping response categories for each trial, as described below. The participants had unlimited time to begin the next trial.

Control trials were presented before and after each experimental session for both perceptual and grasping trials to ensure that the LED markers attached to participants’ fingers did not slip during the session, and importantly, to collapse a continuous visuomotor response into three categories. This allowed grasping and perceptual experimental trials to be directly compared. Each control trial was identical in timing and procedure to experimental trials except that the target bar appeared in isolation (no crowding). Each control trial block consisted of 15 trials (5 trials at each of the 3 target orientations). Binning was accomplished as follows. First, we measured participants’ grasp angle for each of the three possible isolated target bars (left, vertical, and right). We then bisected the vectors of observers’ average grasp angle for the left and vertical targets and likewise for the vertical and rightward tilting targets. Participants’ test grasp on each experimental trial could then be classified into one of three non-overlapping bins: a leftward grasp (if it fell to the left of the bisected vector between control grasps to leftward and vertical targets), a rightward grasp (if it fell to the right of the bisected vector between control grasps to vertical and rightward targets), or a vertical grasp (if it fell between the rightward and leftward bins).
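The binning procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' analysis code: grasp angles are taken in degrees from vertical with negative values meaning leftward, bisecting two control vectors is approximated by averaging their angles, and the control means in the example are made-up numbers.

```python
def bin_boundaries(mean_left, mean_vertical, mean_right):
    """Bisect adjacent mean control grasp angles to obtain the two
    category boundaries (left/vertical and vertical/right)."""
    left_bound = (mean_left + mean_vertical) / 2.0
    right_bound = (mean_vertical + mean_right) / 2.0
    return left_bound, right_bound

def classify_grasp(angle, boundaries):
    """Return -1 (leftward), 0 (vertical), or +1 (rightward) for a grasp angle."""
    left_bound, right_bound = boundaries
    if angle < left_bound:
        return -1
    if angle > right_bound:
        return 1
    return 0

# Hypothetical mean grasp angles from one observer's control trials
boundaries = bin_boundaries(mean_left=-6.0, mean_vertical=0.5, mean_right=7.0)
categories = [classify_grasp(a, boundaries) for a in (-4.0, 1.0, 5.0)]
print(boundaries, categories)
```

Because the boundaries come from each observer's own control grasps, a constant offset in marker placement shifts the bins along with the data, which is one reason to derive them per session.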

In the first experiment, we measured the spatial resolution of visually guided action, and we tested whether the contextual information (the nearby objects that crowd a target object) is treated differently by the perceptual and visuomotor systems. We manipulated both the eccentricity (20°, 30°, and 40°) and density (center-to-center distance of distractor bars to a target) of a cluttered display, which was visible for up to 500 ms. Observers responded to the target's orientation by either perceptually reporting the tilt of the bar, or by reaching for and grasping the same bar on the screen (Figure 1).

Experiment 2 methods

Experiment 2 was identical to Experiment 1, with the following two exceptions. First, stimuli were presented at isoeccentric points in either the upper or lower visual fields, but at the same location on the screen in order to maintain the biomechanical requirements of reaches to stimuli in both visual fields (cf. Danckert and Goodale, 2001). Second, the center of the target was separated 18.1° horizontally, and 19.7° vertically from the fixation point, and the target could be in the upper or lower visual field (equidistant to fixation point). As a result of this setup, the stimulus position in Experiment 2 was predictable, which differed from the unpredictable location along the horizontal meridian of Experiment 1. Blocks of perceptual and visuomotor trials were counterbalanced to reduce order effects. The order of testing in the upper and lower visual fields was also randomized for each observer. Observers made 1,260 total judgments in Experiment 2 (21 trials × 3 trial blocks × 5 distractor densities × 2 visual fields × 2 response types).

Results

Experiment 1

The degree of crowding was measured by correlating participants’ discrimination of the target bar (either perceptual or grasping responses, classified into three categories) with the target bar's actual orientation (one of three possible values). These correlations for perceptual trials and grasping trials were converted into Fisher z scores, averaged, and plotted in Figure 2A.
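The measure described above, a correlation followed by a Fisher z transform, can be sketched in a few lines. The coding of responses as -1, 0, and 1, the use of a Pearson correlation, and the example data are all assumptions made for illustration; the text does not specify them.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def fisher_z(r):
    """Fisher z transform of a correlation coefficient, z = atanh(r)."""
    return math.atanh(r)

# Made-up trials: target orientation (deg) and the binned response category
target_orientation = [-5, 0, 5, -5, 0, 5, -5, 0, 5]
response_category = [-1, 0, 1, 0, 0, 1, -1, 1, 0]

z_score = fisher_z(pearson_r(target_orientation, response_category))
print(round(z_score, 3))
```

Higher z values indicate responses that track the target more closely, that is, less crowding. The transform is undefined for correlations of exactly 1 or -1 (`math.atanh` raises `ValueError`), so perfect performance in a cell would need separate handling.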

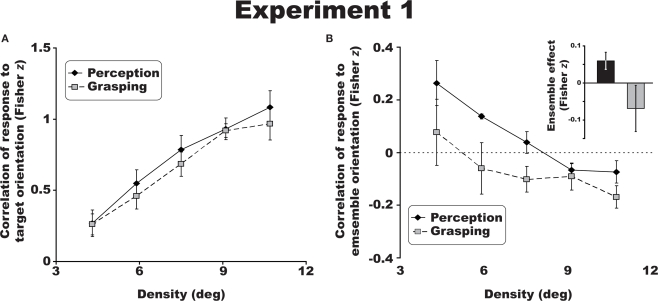

Figure 2.

Group results for Experiment 1. The crowding effect (A) for perceptual (solid line) and visuomotor judgments (dotted line) of target orientation collapsed across the three eccentricities tested. There was a significant decrease in discrimination performance with increasing eccentricity and increased flanker density. Perceptual responses were more attracted to the mean ensemble orientation of the distractor bars (B), while visuomotor judgments revealed a relative repulsion to this ensemble orientation (negative Fisher z scores). Inset: data for perception (black) and visuomotor responses (gray) collapsed across densities. All error bars are between-observer SEM.

A 2 × 3 × 5 repeated measures ANOVA revealed that crowding had a similar effect on perceptual and visuomotor discriminations of orientation across all eccentricities (20°, 30°, and 40°) and densities (4.3°, 5.9°, 7.5°, 9.1°, and 10.7°) tested [Figure 2A; F(1,3) = 2.1, p = 0.244; η2 = 0.410]. Both the perceptual and visuomotor crowding tasks resulted in the classic crowding profile: discrimination performance declined with increasing eccentricity [F(2,6) = 46.4, p < 0.01; η2 = 0.939], and increasing density [F(4,12) = 58.3, p < 0.001; η2 = 0.951]. There was an interaction between eccentricity and distractor density: as the eccentricity of stimulus presentation increased from 20° to 40°, crowding occurred with larger distractor spacing [F(8,24) = 3, p < 0.05; η2 = 0.50]. A 3 (eccentricity) × 3 (target angle) repeated measures ANOVA confirmed that observers were easily able to grasp at isolated targets (see Section “Materials and Methods”) in three different orientations during control trials [F(2,6) = 39.2, p < 0.001, η2 = 0.929]. This suggests that the reduction in accuracy of reaches to a crowded target was not a result of visual acuity limits or simple motor error unrelated to crowding.
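As a consistency check on the effect sizes above, partial eta squared can be recovered from an F ratio and its degrees of freedom. The sketch below assumes that the reported η2 values are partial eta squared, which the text does not state; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio:
    (F * df_effect) / (F * df_effect + df_error)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# (effect, F, df_effect, df_error, reported eta squared) as given in the text
reported = [
    ("eccentricity", 46.4, 2, 6, 0.939),
    ("density", 58.3, 4, 12, 0.951),
    ("eccentricity x density", 3.0, 8, 24, 0.50),
]

for name, f_value, df1, df2, eta_reported in reported:
    eta = partial_eta_squared(f_value, df1, df2)
    print(f"{name}: computed {eta:.3f}, reported {eta_reported}")
```

Under this assumption the computed values agree with the reported ones to within rounding.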

Analyzing the ensemble information generated by the distractors revealed a dissociation between observers’ perceptual and visuomotor responses. Following the same procedure used for measuring the crowding effect above, we correlated each response with the average orientation of the six flanking bars at each density and eccentricity. This calculation gave a measure of how the surrounding distractors contributed to perceptual and visuomotor judgments irrespective of the target orientation. When collapsed across all trials in Experiment 1 (three eccentricities and five densities), perceptual judgments were positively correlated with the average orientation of the distractors – ensemble averaging (Figure 2B), consistent with the study by Parkes et al. (2001). Remarkably, visuomotor orientation judgments revealed a repulsion of the participant's grasp angle away from the average orientation of the flanking array, despite equivalent performance as measured by the crowding effect (see bar graph, inset in Figure 2B). The average effect of the surrounding distractors differed across perceptual and visuomotor trials [F(1,3) = 10.7, p < 0.05; η2 = 0.782].

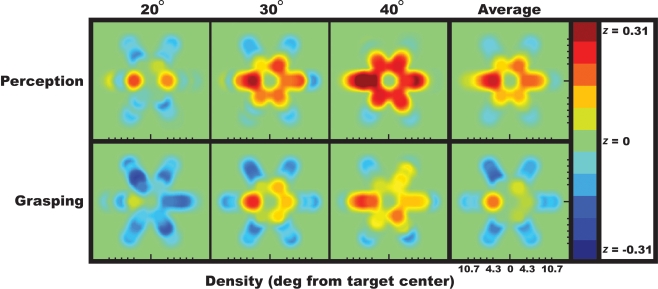

To better visualize the relative contribution that each individual distractor had on observers’ responses, the spatial integration fields for perceptual and visuomotor judgments are shown as heatmaps in Figure 3. These heatmaps demonstrate that, in line with previous research, distractors along the radial axis more strongly influenced perceptual judgments than distractors above or below the target (Wolford and Chambers, 1983; Toet and Levi, 1992; Feng et al., 2007). While perception integrated surrounding objects (more red in Figure 3), participants’ grasp angles were oriented away from this perceptual ensemble (more blue in Figure 3).

Figure 3.

Visualization of the data plotted in Figure 2B across space. For each possible distractor position, this heatmap reveals how the flanker bars capture (red) or repel (blue) the perceived or grasped target bar orientation. Each panel shows the degree to which observers’ perceptual or visuomotor responses correlated with each of the six distractor positions at each eccentricity. While flankers tended to capture or attract the perceived target orientation, they tended to repel the grasped target orientation.

Experiment 2

One of the characteristic spatial asymmetries of visual crowding is that it is stronger in the upper visual field (He et al., 1996). The functional significance of this finding is that we must often make fine spatial discriminations in the lower visual field and having higher spatial resolution would improve these judgments. Because vision is largely for the purpose of guiding action, we might expect that there would be higher effective spatial resolution of action in the lower visual field as well. The goal of Experiment 2 was to test this.

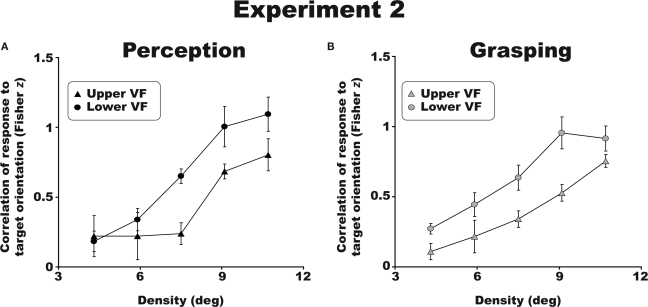

Visual and visuomotor crowding profiles followed the same trends across visual fields. Figure 4 shows significantly stronger crowding in the upper as compared to the lower visual field for perceptual (Figure 4A) and grasping tasks (Figure 4B) [F(1,3) = 11.7, p < 0.05; η2 = 0.796]. The crowding effect in the upper and lower visual fields did not differ between perceptual and grasping trials [F(1,3) = 0.2, p = 0.69; η2 = 0.06]. This pattern of results was identical for all four participants tested. As expected, there was also a main effect of density, with more crowding occurring at higher flanker densities [F(4,12) = 34.5, p < 0.01; η2 = 0.92]. These results reveal an upper versus lower visual field asymmetry in visually guided reaching in crowded scenes, and bridge previous work on perceptual crowding (He et al., 1996) and asymmetries in reaching in non-crowded scenes (Danckert and Goodale, 2001).

Figure 4.

Group results for Experiment 2. Both perceptual (A) and visuomotor (B) discrimination performance was superior (less crowding) in the lower visual field. All error bars are between-observer SEM.

Discussion

The spatial resolution of vision limits our ability to perceive and act on objects in realistic scenes. The experiments here reveal that the spatial resolution of both visually guided reaching and perception is limited to about the same degree. Importantly, however, the manner in which the perceptual and visuomotor systems rely on contextual information in scenes differs; perception depends on integrating the orientation of objects across the scene, while the visuomotor system, conversely, discounts the surrounding context and sometimes even repels away from it. The results suggest that although there are absolute limits to the spatial resolution of information available to perception and action, the visuomotor and perceptual systems weight space differently, and this differential weighting optimizes ensemble perception while leaving action toward individual objects as accurate as possible.

Several studies have shown that our perceptual system rapidly processes the mean, or ensemble, characteristics of a cluttered scene, even when objects are quite complex (Ariely, 2001; Chong and Treisman, 2003; Haberman and Whitney, 2007). This information may support texture perception, or provide summary information about a scene (Parkes et al., 2001). Precise information about a crowded target's orientation, while unavailable to perceptual report, nevertheless contributes to the perception of the average global orientation of an array via a highly precise linear averaging (Parkes et al., 2001). While these ensemble percepts provide a very useful heuristic for perception, it is less clear how the metrics of a reach system would benefit from such averaging. In most situations, we reach toward object boundaries and contours, not toward a global texture. Therefore, the dissociated responses found in the current study may reflect the most advantageous solution for perception and action. When the target cannot be individuated and recognized (due to crowding), integrating distractor information is not beneficial because the ensemble orientation is not informative as to the tilt of the target.

While research on visual crowding is plentiful, the degree to which action is impacted by clutter is relatively understudied. Research using visual search tasks has shown that saccadic eye movements change as a function of crowding (i.e., object spacing and similarity of non-target features). These studies provide the most direct evidence that the density and similarity of non-target features degrade the accuracy and reduce the speed of saccades in cluttered scenes (Vlaskamp et al., 2005; Vlaskamp and Hooge, 2006). These authors concluded that the “visual span” – the size of the field that is searched per saccade – is directly related to the size of the crowded region (Chung et al., 1998; Vlaskamp and Hooge, 2006). Thus, crowding is proposed to be the primary limiting factor for reading (Pelli et al., 2007). Distractor objects have also been found to slow ballistic hand movements to a target and change the biomechanics of grasp size (Howard and Tipper, 1997; Tipper et al., 1997; Mon-Williams et al., 2001). These effects are not crowding, per se, but are proposed to be part of a visuomotor strategy of obstacle avoidance. The current studies isolate crowding specifically, and in so doing reveal the spatial resolution of visually guided action across eccentricity.

Our findings lend credence to both sides of a contentious debate as to whether perception and action can be dissociated in normal observers (Goodale and Milner, 1992). In many studies bearing on this debate, participants’ visuomotor responses are less influenced by contextual illusions of size or orientation than are their perceptual reports. Action tends to correlate more with the actual metric information about the target, and this is thought by some to reflect differential processing mechanisms or frames of reference in the ventral and dorsal visual pathways (Ungerleider and Mishkin, 1982; Goodale and Milner, 1992; Aglioti et al., 1995; Carey, 2001; Ganel et al., 2008; but see Post and Welch, 1996; Franz, 2001; Smeets and Brenner, 2006; Franz and Gegenfurtner, 2008). The current study tested the hypothesis that a visuomotor response, such as grasping, could be dissociated from perceptual report in the crowding paradigm, which is distinct from the abovementioned studies. We found that visually guided grasping did not “break” crowding – visuomotor responses were no closer to the actual orientation of the target than was visual perception – but grasping weighted surround information differently. Thus, the spatial resolution of grasping, which reflects the internal representation of a crowded target available for action, is similar to the resolution of vision.

Several neural mechanisms could potentially explain the observed differences between perceptual and visuomotor responses. There could be differences in the extent of spatial integration or pooling for perception and action; there could also be differences in the cortical magnification and/or delays in timing between perceptual and visuomotor systems that may lead to a dissociation in the use of contextual information. To test the possibility that cortical magnification plays a different role in perceptual and visuomotor processing, a stimulus that offsets cortical magnification by varying in contrast or size could be employed (Rovamo and Virsu, 1979; Makela et al., 2001). Whether cortical magnification differentially affects visual information used for perception and action is an intriguing question, and should be investigated in future studies. Likewise, whether crowding occurs on a different time scale for perception and action is an interesting but open question.

Perceptual and visuomotor systems often work in close association for everyday tasks (Pelz et al., 2001). In day-to-day routines, there is often undesirable clutter filling our visual world. The current study shows that the resolution – the finest grain of detail available to perceptual and visuomotor systems – is similarly limited by the degree of clutter, or crowding. However, we found differences in the use of the surrounding information, which reflects a more optimal use of information by each system. It is beneficial to perceive group information such as texture, while we rarely reach toward that same information. Research on crowding should therefore consider the differential effects that it may have on action. Do the training effects found in perceptual crowding translate to better visuomotor resolution as well (Green and Bavelier, 2003, 2007; Chung, 2007; Huckauf and Nazir, 2007)? If so, perceptual training within cluttered scenes may improve visuomotor performance in individuals with central field visual loss (e.g., Timberlake et al., 2008).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank Jason Fischer for help with data visualization and David Horton for his helpful advice throughout. Work supported by NIH and NSF.

References

- Aglioti S., DeSouza J. F., Goodale M. A. (1995). Size-contrast illusions deceive the eye but not the hand. Curr. Biol. 5, 679–685 10.1016/S0960-9822(95)00133-3

- Alvarez G. A., Oliva A. (2008). The representation of simple ensemble visual features outside the focus of attention. Psychol. Sci. 19, 392–398 10.1111/j.1467-9280.2008.02098.x

- Alvarez G. A., Oliva A. (2009). Spatial ensemble statistics are efficient codes that can be represented with reduced attention. Proc. Natl. Acad. Sci. U.S.A. 106, 7345–7350 10.1073/pnas.0808981106

- Ariely D. (2001). Seeing sets: representation by statistical properties. Psychol. Sci. 12, 157–162 10.1111/1467-9280.00327

- Bouma H. (1970). Interaction effects in parafoveal letter recognition. Nature 226, 177–178 10.1038/226177a0

- Brainard D. H. (1997). The psychophysics toolbox. Spat. Vis. 10, 433–436 10.1163/156856897X00357

- Carey D. P. (2001). Do action systems resist visual illusions? Trends Cogn. Sci. 5(3), 109–113 10.1016/S1364-6613(00)01592-8

- Chong S. C., Treisman A. (2003). Representation of statistical properties. Vis. Res. 43, 393–404 10.1016/S0042-6989(02)00596-5

- Chung S. T. (2007). Learning to identify crowded letters: does it improve reading speed? Vis. Res. 47, 3150–3159 10.1016/j.visres.2007.08.017

- Chung S. T., Mansfield J. S., Legge G. E. (1998). Psychophysics of reading. XVIII. The effect of print size on reading speed in normal peripheral vision. Vis. Res. 38, 2949–2962 10.1016/S0042-6989(98)00072-8

- Danckert J., Goodale M. A. (2001). Superior performance for visually guided pointing in the lower visual field. Exp. Brain Res. 137, 303–308 10.1007/s002210000653

- De Valois R. L., Webster M. A., De Valois K. K., Lingelbach B. (1986). Temporal properties of brightness and color induction. Vis. Res. 26, 887–897 10.1016/0042-6989(86)90147-1

- Feng C., Jiang Y., He S. (2007). Horizontal and vertical asymmetry in visual spatial crowding effects. J. Vis. 7, 13, 1–10

- Franz V. H. (2001). Action does not resist visual illusions. Trends Cogn. Sci. 5, 457–459 10.1016/S1364-6613(00)01772-1

- Franz V. H., Gegenfurtner K. R. (2008). Grasping visual illusions: consistent data and no dissociation. Cogn. Neuropsychol. 25, 920–950 10.1080/02643290701862449

- Ganel T., Tanzer M., Goodale M. A. (2008). A double dissociation between action and perception in the context of visual illusions: opposite effects of real and illusory size. Psychol. Sci. 19, 221–225 10.1111/j.1467-9280.2008.02071.x

- Goodale M. A., Milner A. D. (1992). Separate visual pathways for perception and action. Trends Neurosci. 15(1), 20–25 10.1016/0166-2236(92)90344-8

- Green C. S., Bavelier D. (2003). Action video game modifies visual selective attention. Nature 423, 534–537 10.1038/nature01647

- Green C. S., Bavelier D. (2007). Action-video-game experience alters the spatial resolution of vision. Psychol. Sci. 18, 88–94 10.1111/j.1467-9280.2007.01853.x

- Haberman J., Whitney D. (2007). Rapid extraction of mean emotion and gender from sets of faces. Curr. Biol. 17, R751–R753 10.1016/j.cub.2007.06.039

- Haberman J., Whitney D. (2009). Seeing the mean: Ensemble coding for sets of faces. J. Exp. Psychol. Hum. Percept. Perform. 35, 718–734 10.1037/a0013899

- He S., Cavanagh P., Intriligator J. (1996). Attentional resolution and the locus of visual awareness. Nature 383, 334–337 10.1038/383334a0

- He S., Cavanagh P., Intriligator J. (1997). Attentional resolution. Trends Cogn. Sci. 1, 115–121 10.1016/S1364-6613(97)89058-4

- Howard L. A., Tipper S. P. (1997). Hand deviations away from visual cues: indirect evidence for inhibition. Exp. Brain Res. 113, 144–152 10.1007/BF02454150

- Huckauf A., Nazir T. A. (2007). How odgcrnwi becomes crowding: stimulus-specific learning reduces crowding. J. Vis. 7, 18, 1–12

- Levi D. M. (2008). Crowding – an essential bottleneck for object recognition: a mini-review. Vis. Res. 48, 635–654 10.1016/j.visres.2007.12.009

- Makela P., Nasanen R., Rovamo J., Melmoth D. (2001). Identification of facial images in peripheral vision. Vis. Res. 41, 599–610 10.1016/S0042-6989(00)00259-5

- Mon-Williams M., Tresilian J. R., Coppard V. L., Carson R. G. (2001). The effect of obstacle position on reach-to-grasp movements. Exp. Brain Res. 137, 497–501 10.1007/s002210100684

- Oliva A., Torralba A. (2007). The role of context in object recognition. Trends Cogn. Sci. 11, 520–527 10.1016/j.tics.2007.09.009

- Palmer S. E. (1999). Vision Science: Photons to Phenomenology. Cambridge, MA, MIT Press

- Parkes L., Lund J., Angelucci A., Solomon J. A., Morgan M. (2001). Compulsory averaging of crowded orientation signals in human vision. Nat. Neurosci. 4, 739–744 10.1038/89532

- Pelli D. G. (1997). The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat. Vis. 10, 437–442 10.1163/156856897X00366

- Pelli D. G. (2008). Crowding: a cortical constraint on object recognition. Curr. Opin. Neurobiol. 18, 445–451 10.1016/j.conb.2008.09.008

- Pelli D. G., Tillman K. A., Freeman J., Su M., Berger T. D., Majaj N. J. (2007). Crowding and eccentricity determine reading rate. J. Vis. 7, 20, 1–36

- Pelz J., Hayhoe M., Loeber R. (2001). The coordination of eye, head, and hand movements in a natural task. Exp. Brain Res. 139, 266–277 10.1007/s002210100745

- Post R. B., Welch R. B. (1996). Is there dissociation of perceptual and motor responses to figural illusions? Perception 25, 569–581 10.1068/p250569

- Rovamo J., Virsu V. (1979). An estimation and application of the human cortical magnification factor. Exp. Brain Res. 37, 495–510 10.1007/BF00236819

- Singer B., D'Zmura M. (1994). Color contrast induction. Vis. Res. 34, 3111–3126 10.1016/0042-6989(94)90077-9

- Smeets J. B., Brenner E. (2006). 10 years of illusions. J. Exp. Psychol. Hum. Percept. Perform. 32, 1501–1504 10.1037/0096-1523.32.6.1501

- Timberlake G. T., Grose S. A., Quaney B. M., Maino J. H. (2008). Retinal image location of hand, fingers, and objects during manual tasks. Optom. Vis. Sci. 85, 270–278 10.1097/OPX.0b013e31816928b9

- Tipper S., Howard L., Jackson S. (1997). Selective reaching to grasp: evidence for distractor interference effects. Vis. Cogn. 4, 1–38 10.1080/713756749

- Toet A., Levi D. M. (1992). The two-dimensional shape of spatial interaction zones in the parafovea. Vis. Res. 32, 1349–1357 10.1016/0042-6989(92)90227-A

- Ungerleider L., Mishkin M. (1982). Two cortical visual systems. In Analysis of Visual Behavior, Ingle D. J., Goodale M. A., Mansfield R. J. W., eds (Cambridge, MA, MIT Press), pp. 549–586

- Vlaskamp B. N., Hooge I. T. (2006). Crowding degrades saccadic search performance. Vis. Res. 46, 417–425 10.1016/j.visres.2005.04.006

- Vlaskamp B. N., Over E. A., Hooge I. T. (2005). Saccadic search performance: the effect of element spacing. Exp. Brain Res. 167, 246–259 10.1007/s00221-005-0032-z

- Wolford G., Chambers L. (1983). Lateral masking as a function of spacing. Percept. Psychophys. 33, 129.