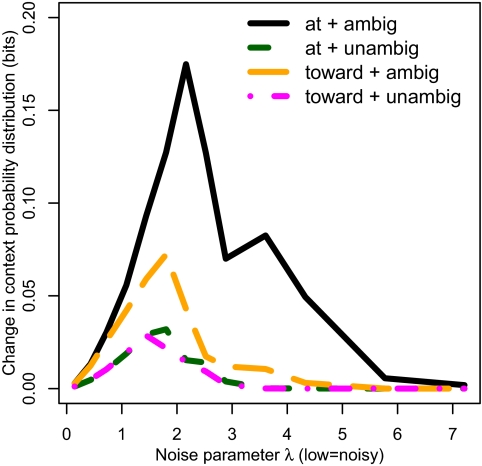

Fig. 1.

Size of the change (Kullback-Leibler divergence) in the expected probability distribution over preceding word sequences upon encountering *tossed*/*thrown* in sentences 2 and 3, under noisy-channel Bayesian inference. The parameter λ, expressing the level of perceptual noise, is a free parameter of the model. Over a wide range of its values, the sentence types that humans were observed to find harder to process are those in which the shift in probability distribution is larger. Variants of the true context receive probability that decreases exponentially in λ times their Levenshtein edit distance from the true context. See SI Appendix for model details.
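The quantity plotted can be illustrated with a minimal sketch. It rests on several assumptions that are not taken from the SI Appendix. Each candidate context `w` is weighted by a prior `P(w)` times `exp(-λ · d(w, I))`, where `d` is the word-level Levenshtein distance to the perceived context `I`. After the next word is seen, that belief is reweighted by `P(word | w)`. The divergence is taken as `KL(after || before)`. The contexts, prior, and next-word probabilities below (`PRIOR`, `TOY_NEXT`, `toy_next_word_prob`) are hypothetical toy values chosen for illustration, not the model's actual estimates.

```python
import math

def levenshtein(a, b):
    """Word-level Levenshtein edit distance between two sequences."""
    prev = list(range(len(b) + 1))
    for i, ai in enumerate(a, start=1):
        cur = [i]
        for j, bj in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ai != bj)))  # substitution
        prev = cur
    return prev[-1]

def normalize(weights):
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}

def belief_before(perceived, prior, lam):
    """Assumed form: P(w | I) proportional to P(w) * exp(-lam * d(w, I))."""
    return normalize({w: p * math.exp(-lam * levenshtein(w, perceived))
                      for w, p in prior.items()})

def kl(p, q):
    """KL(p || q) in nats over a shared set of contexts."""
    return sum(pw * math.log(pw / q[w]) for w, pw in p.items() if pw > 0)

def belief_shift(perceived, prior, next_word_prob, word, lam):
    """Divergence between beliefs about the context after vs. before `word`."""
    before = belief_before(perceived, prior, lam)
    after = normalize({w: pw * next_word_prob(w, word) for w, pw in before.items()})
    return kl(after, before)

# Hypothetical perceived context and candidate variants (toy numbers).
PERCEIVED = ("the", "coach", "smiled", "at", "the", "player")
PRIOR = {
    PERCEIVED: 0.90,
    ("the", "coach", "smiled", "as", "the", "player"): 0.05,
    ("the", "coach", "smiled", "and", "the", "player"): 0.05,
}
# Hypothetical P(next word | context), keyed by the context's fourth word.
TOY_NEXT = {("at", "tossed"): 0.001, ("as", "tossed"): 0.05, ("and", "tossed"): 0.03,
            ("at", "thrown"): 0.001, ("as", "thrown"): 0.001, ("and", "thrown"): 0.001}

def toy_next_word_prob(context, word):
    return TOY_NEXT[(context[3], word)]

if __name__ == "__main__":
    for word in ("tossed", "thrown"):
        for lam in (0.5, 1.0, 2.0):
            shift = belief_shift(PERCEIVED, PRIOR, toy_next_word_prob, word, lam)
            print(word, lam, round(shift, 4))
```

In this toy setup, a word that every candidate context predicts equally well leaves the belief unchanged, so the divergence is zero. A word that favors a nearby variant over the perceived context produces a positive shift, and its size varies with λ.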