Abstract

Pigeon and human subjects were given repeated choices between variable and adjusting delays to token reinforcement that titrated in relation to a subject's recent choice patterns. Indifference curves were generated under two different procedures: immediate exchange, in which a token earned during each trial was exchanged immediately for access to the terminal reinforcer (food for pigeons, video clips for humans), and delayed exchange, in which tokens accumulated and were exchanged after 11 trials. The former was designed as an analogue of procedures typically used with nonhuman subjects, the latter as an analogue to procedures typically used with human participants. Under both procedure types, different variable-delay schedules were manipulated systematically across conditions in ways that altered the reinforcer immediacy of the risky option. Under immediate-exchange conditions, both humans and pigeons consistently preferred the variable delay, and indifference points were generally ordered in relation to relative reinforcer immediacies. Such risk sensitivity was greatly reduced under delayed-exchange conditions. Choice and trial-initiation response latencies varied directly with indifference points, suggesting that local analyses may provide useful ancillary measures of reinforcer value. On the whole, the results indicate that modifying procedural features brings choices of pigeons and humans into better accord, and that human–nonhuman differences on risky choice procedures reported in the literature may be at least partly a product of procedural differences.

Keywords: risky choice, reinforcer delay, adjusting-delay procedures, cross-species comparisons, token reinforcement, key peck, keyboard response, pigeons, adult humans

Research in the area of risky choice is broadly concerned with how the value of a reinforcer is affected by the variance associated with that reinforcer. Risky choice is typically studied by giving subjects choices between fixed and variable outcomes of the same arithmetic mean. Preference for the fixed (certain) outcome has been termed risk aversion, and preference for the variable (uncertain) outcome risk proneness. With choices between fixed and variable delays to reinforcement, nonhumans are strongly risk prone, preferring the variable delay (see Mazur, 2004, for a review). This robust sensitivity to reinforcer delay has been found across a broad range of species (insects, fish, birds, and mammals; Kacelnik & Bateson, 1996), and reinforcer types (e.g., water, food; Bateson & Kacelnik, 1995, 1997; Case, Nichols, & Fantino, 1995; Cicerone, 1976; Davison, 1969, 1972; Fantino, 1967; Frankel & vom Saal, 1976; Gibbon, Church, Fairhurst, & Kacelnik, 1988; Herrnstein, 1964; Hursh & Fantino, 1973; Kendall, 1989; Killeen, 1968; Logan, 1965; Mazur, 1984; Navarick & Fantino, 1975; O'Daly, Case, & Fantino, 2006; Orduna & Bouzas, 2004; Rider, 1983; Sherman & Thomas, 1968; Zabludoff, Wecker, & Caraco, 1988).

Although scant by comparison, the findings of laboratory research with human subjects on similar procedures are mixed and difficult to interpret. Some results show risk aversion (e.g., Kohn, Kohn, & Staddon, 1992), others indifference between the outcomes (e.g., Weiner, 1966). A recent exception to these findings was reported by Locey, Pietras, and Hackenberg (2009). In their study, human participants were given repeated choices between fixed and variable reinforcer delays of the same arithmetic mean with 30-s video clips serving as reinforcers. The videos were preselected by the participants, and played only during reinforcement periods (i.e., the tape stopped at all other times). The variable-delay distributions were bivalued: one long and one short delay, the arithmetic average of which equaled the fixed delay. The fixed delay was systematically varied across conditions: 15 s (1 s and 29 s variable delays), 30 s (1 s and 59 s variable delays) and 60 s (1 s and 119 s variable delays). As in research with nonhumans, but unlike previous research with human participants, 3 of 4 subjects strongly preferred the variable delays to reinforcement.

In a second part of the study, the 3 participants who preferred the variable reinforcer delays were given repeated choices between different variable-delay schedules composed of different distribution types (exponential, bimodal, rectangular and normal). In general, the choice patterns were ordered with respect to distribution type, with stronger preference for the distributions with a higher proportion of short delays. The results were consistent with the nonlinear discounting of delayed reinforcement seen with other animals (e.g., Mazur, 1986a).

The disproportionate weighting of short delays reported by Locey et al. (2009) may have depended on the use of video rather than token (point or money) reinforcers. This is consistent with the results of other studies with human participants showing enhanced sensitivity to reinforcer delay with video reinforcers (Hackenberg & Pietras, 2000; Navarick, 1996). Unlike token reinforcers such as points, whose reinforcing efficacy depends on later exchange for other reinforcers, video reinforcers are “consumed” as they are earned. This may lend greater time urgency to video reinforcers as compared to the more conventional token-type reinforcer, bringing human behavior into greater alignment with that seen in other animals. If correct, this interpretation implies that previous differences in risky choice between human and nonhuman subjects may be more a function of procedural variables than actual species differences.

Additional support for this hypothesis would come from studying nonhuman choices in situations more akin to the token-type reinforcement systems commonly employed in research with humans. For example, in a self-control task, Jackson and Hackenberg (1996) gave pigeons repeated choices between a single token delivered immediately and three tokens delivered after a brief delay. Tokens could be exchanged for food during scheduled exchange periods later each trial. Across conditions, the delay to the exchange period was manipulated. When the exchange period was scheduled immediately following each token delivery, the pigeons preferred the smaller–sooner reinforcer (i.e., impulsivity), a result typically observed with nonhumans. When the exchange period was scheduled after a fixed delay from either choice, however, preference reversed in favor of the larger–later reinforcer (i.e., self-control), a result typically observed with humans. In other words, when the procedures were more typical of nonhuman self-control procedures—differential delays to food—more “animal-like” performance was seen, but when the procedures were more typical of human self-control procedures—with delays to terminal reinforcers held constant—more “human-like” performance was seen. This lends support to the idea that procedural variables, such as the delay to the exchange period, may be responsible for cross-species differences in choice behavior.

The present study sought to bring into greater alignment the procedures used with human and nonhuman subjects in a risky choice context. It involved explicit comparisons across (a) species (humans and pigeons), and (b) procedures (consumable-type and token-type reinforcers). Choices by pigeons (Experiment 1) and humans (Experiment 2) produced tokens exchangeable for consumable-type reinforcers: mixed grain for pigeons and preferred video clips for humans. The procedures were structured in ways that mimicked consumable-type and token-type reinforcement systems. This was accomplished by manipulating the exchange delay, that is, the time between earning a token and exchanging it for the terminal reinforcer. In some conditions (immediate exchange), a token could be exchanged for the reinforcer as soon as it was earned. In other conditions (delayed exchange), groups of 11 tokens had to be earned prior to exchange for reinforcement. Immediate-exchange conditions are more akin to consumable-type reinforcement procedures, permitting immediate consumption of the reinforcer. Delayed-exchange conditions are more akin to token-type reinforcement systems, in which the exchange period is scheduled after a group of tokens has been earned. If choice patterns depend at least in part on these procedural variables, then we would expect choices of both species to be more risk prone (preferring variable outcomes) under immediate-exchange than under delayed-exchange conditions.

Risk sensitivity in both experiments was assessed with an adjusting-delay procedure, in which the delay to one alternative changes systematically in relation to a subject's recent choice patterns while the other (standard) alternative remains constant. Subjects chose between a bivalued (mixed-time) and an adjusting delay to token reinforcement. The main manipulation concerned changes in the economic context or, more specifically, the delay between earning a token and exchanging it for food (Experiment 1) or video (Experiment 2). Under immediate-exchange conditions, exchange periods were scheduled just after each token was earned, permitting immediate exchange and consumption of the reinforcer. Under delayed-exchange conditions, exchange periods were scheduled after 11 tokens had been earned, requiring accumulation of tokens for later exchange and consumption. The goal under both procedures was to establish indifference points (points of subjective equality) between variable and adjusting reinforcer delays.

A second manipulation concerned the delays comprising the variable schedule. These were changed in such a way as to alter the relative reinforcer immediacies while holding constant the mean reinforcer delay. Choice patterns of both species under both procedure types were evaluated in relation to Mazur's (1986b) model:

$$V = \sum_{i=1}^{n} P_i \left( \frac{A}{1 + K D_i} \right) \qquad (1)$$

where the subjective value (V) of a delayed outcome that delivers one of n possible delays on a given trial is a function of the amount of reinforcement (A), the delay to the outcome (D), and sensitivity to changes in delay (K). $P_i$ is the probability that a delay of $D_i$ seconds will occur. This model predicts that the value or strength of a reinforcer decreases as the delay to reinforcement increases, with the strength of a variable delay computed by taking the average value weighted by the probability of occurrence of all programmed delays.
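The calculation described in this paragraph can be illustrated with a minimal sketch. It assumes the hyperbolic form V = Σ P_i · A / (1 + K · D_i) and uses the parameter values reported later in the Results (A = 100, K = 1) as defaults. The function names are hypothetical and are not taken from the original analysis.

```python
from typing import Sequence


def schedule_value(delays: Sequence[float], probs: Sequence[float],
                   A: float = 100.0, K: float = 1.0) -> float:
    """Probability-weighted hyperbolic value of a delay schedule (Equation 1)."""
    if len(delays) != len(probs):
        raise ValueError("delays and probs must have the same length")
    return sum(p * A / (1.0 + K * d) for d, p in zip(delays, probs))


def predicted_indifference_delay(delays: Sequence[float], probs: Sequence[float],
                                 A: float = 100.0, K: float = 1.0) -> float:
    """Single delay D whose value A / (1 + K * D) equals the schedule's value."""
    v = schedule_value(delays, probs, A, K)
    return (A / v - 1.0) / K


if __name__ == "__main__":
    # The four schedules used in the study, each delay occurring with p = 0.5.
    for short, long_ in [(1, 59), (10, 50), (20, 40), (30, 30)]:
        d = predicted_indifference_delay([short, long_], [0.5, 0.5])
        print(f"MT {short} s, {long_} s -> predicted indifference delay {d:.2f} s")
```

With these defaults the predicted indifference delay is below 30 s for every mixed pair and equals 30 s for the FT 30 s schedule, which is the ordering the model implies for risk-prone choice.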

Equation 1 has provided a good description of prior results on adjusting delay procedures, including the risky-choice variant used here (Mazur, 1986b). The Locey et al. (2009) results, described above, were broadly consistent with the delay-based calculation of reinforcer value proposed by Equation 1, in that the model reliably predicted the direction of preference for all subjects. More precise predictions of the model were precluded, however, by the near exclusive preferences generated by the experimental procedures.

Titrating procedures, like those used in the present study, have been shown to produce dynamic and graded measures of preference, permitting a sharper quantitative assessment of temporal discounting. In addition, these procedures have been used effectively in laboratory studies of reinforcer discounting in a range of species (Mazur, 1987, 2007; Rodriguez & Logue, 1988), and are therefore well suited to the present cross-species analysis. Combining these procedures with the manipulations of economic context enables a quantitative comparison of risky choice across procedure type and species.

EXPERIMENT 1

Method

Subjects

Four experimentally naïve male White Carneau pigeons (Columba livia), numbered 75, 995, 967 and 710, served as subjects. All were maintained at approximately 83% of their free-feeding weights. Pigeons were housed individually in a colony room, with continuous access to grit and water outside of the experimental sessions. The colony room was lighted on a 16:8 hr light/dark cycle.

Apparatus

One Lehigh Valley Electronics® operant chamber (31 cm long, 35 cm wide, 37 cm high) with an altered control panel was used. The panel displayed three horizontally-spaced response keys, each 2.5 cm in diameter and mounted 5.5 cm apart, requiring a force of approximately 0.26 N to operate. Each side key could be illuminated to produce green, red, or yellow colors; the center key could be red or white. Twelve evenly-spaced red stimulus lamps served as tokens. The tokens were positioned 2.5 cm above the response keys and arranged horizontally 0.8 cm apart. A 0.1-s tone accompanied each token illumination and darkening. A square opening located 9 cm below the center response key and 10 cm above the floor allowed for access to mixed grain when the food hopper was raised. The hopper area contained a light that was illuminated during food deliveries, and a photocell that allowed for precisely timed access to grain. The chamber also contained a houselight, aligned 5 cm above the center response key. A sound-attenuating box enclosed the chamber, with ventilation fans and white noise generators continuously running to mask extraneous noise during sessions. Experimental contingencies were arranged and data recorded via a computer programmed in MedState Notation Language with Med-PC® software.

Procedure

Training

Before exposure to the experimental contingencies, the pigeons received several weeks of daily sessions on a backward-chaining procedure designed to train token production and exchange. Following magazine and key-peck shaping, pecking the center (red) key in the presence of one illuminated token turned off the token light, produced a 0.1-s tone, and raised the hopper for 2.5 s. Once this exchange response was established, pigeons were required to peck one or the other side key (illuminated either green or yellow with equal probability each cycle) to produce (illuminate) a token. Token production was accompanied by a 0.1-s tone and led immediately to the exchange period, during which the side keys were darkened and the center red key was illuminated. As before, a single peck on the center exchange key turned off the token light, produced a 0.1-s tone and raised the hopper for 2.5 s. When this chain of responses had been established, the next training phase required 11 tokens to accumulate (by making 11 responses on the illuminated token-production keys) before advancing to the exchange period. The side position of the illuminated token-production key continued to occur randomly after each token presentation. Tokens were always produced from left to right and withdrawn from right to left.

Experimental procedure

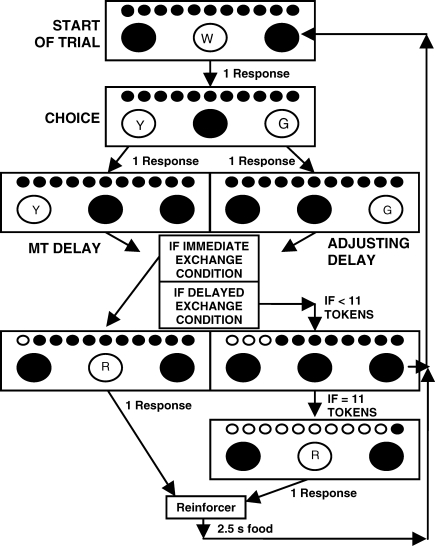

Figure 1 shows a procedural schematic of contingencies on a typical choice trial, in which subjects chose between variable (mixed-time, MT) and adjusting time delays. The first two trials in a session were forced-choice trials designed to provide exposure to both choice alternatives. These trials were the same as free-choice trials except that only one response key was illuminated and effective. Thereafter, when either alternative was selected on two consecutive free-choice trials, a forced-choice of the other, nonchosen, alternative was presented. The remaining trials were free-choice trials, in which both options were available. These trials began with a trial-initiation response, requiring a response on the center white key, after which both side keys were illuminated: one with green light and the other with yellow light. The position of the alternatives was determined randomly each trial.

Fig 1.

Procedural schematic of a free-choice trial in both the immediate and delayed exchange conditions. Blackened keys indicate that they are not illuminated. Tokens are always illuminated red. Reinforcer is 2.5-s access to food. W = white, Y = yellow, G = green, R = red, MT = Mixed Time.
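The forced-choice rule described above can be sketched as a small scheduling function. This is an illustration only: the order of the two initial forced-choice trials and the resetting of the run count after each forced trial are assumptions, and the names `forced_alternative`, `"MT"`, and `"ADJ"` are hypothetical labels.

```python
from typing import List, Optional

OTHER = {"MT": "ADJ", "ADJ": "MT"}


def forced_alternative(trial_number: int,
                       free_choices_since_forced: List[str]) -> Optional[str]:
    """Return the alternative to force on this trial, or None for a free-choice trial.

    trial_number is 1-based within the session. free_choices_since_forced holds
    the free choices made since the most recent forced-choice trial (assumed reset).
    """
    # The first two trials of a session expose the subject to each alternative.
    # The order used here (adjusting first) is an assumption.
    if trial_number == 1:
        return "ADJ"
    if trial_number == 2:
        return "MT"
    # Two consecutive free choices of one alternative force the other one.
    last_two = free_choices_since_forced[-2:]
    if len(last_two) == 2 and last_two[0] == last_two[1]:
        return OTHER[last_two[0]]
    return None
```

Because forced trials depend on the subject's own choices, the number of free-choice trials per session varies under this rule.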

Throughout the experiment, a single response on the green response key initiated the adjusting delay, and a single response on the yellow response key initiated the MT delay. For the duration of the delays, the chosen key color remained illuminated while the houselight and other key alternative were darkened. After the delay had elapsed, one token stimulus light and the houselight were illuminated. During the exchange periods, wherein subjects could “trade in” earned tokens for access to food, both side keys were darkened and the center red key was illuminated. One response on the center key turned off a single token light and produced the 2.5-s access to mixed grain. When all tokens had been exchanged, a new trial began.

Each selection of the MT alternative decreased the adjusting delay on the subsequent trial by 10%. Conversely, each selection of the adjusting alternative increased by 10% the adjusting delay on the subsequent trial. The adjusting delay had a lower limit of 1 s and an upper limit of 120 s, and was unaffected by forced-choice responses.
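The titration rule can be sketched as follows. The sketch assumes that the 10% step is taken as a proportion of the current adjusting delay, which the text does not state explicitly. The function and constant names are hypothetical.

```python
LOWER_LIMIT_S = 1.0    # lower limit of the adjusting delay (s)
UPPER_LIMIT_S = 120.0  # upper limit of the adjusting delay (s)
STEP = 0.10            # proportional change per free choice (assumed multiplicative)


def next_adjusting_delay(current_s: float, choice: str, forced: bool = False) -> float:
    """Adjusting delay for the next trial, given the choice made on this trial."""
    if forced:
        # Forced-choice responses leave the adjusting delay unchanged.
        return current_s
    if choice == "MT":
        updated = current_s * (1.0 - STEP)   # choosing the variable delay shortens it
    elif choice == "ADJ":
        updated = current_s * (1.0 + STEP)   # choosing the adjusting delay lengthens it
    else:
        raise ValueError("choice must be 'MT' or 'ADJ'")
    return min(UPPER_LIMIT_S, max(LOWER_LIMIT_S, updated))
```

Starting from 30 s, one MT choice would give 27 s and one adjusting choice would give 33 s under this assumption.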

There were two independent variables in the experiment: (1) MT distribution, and (2) exchange delay. The MT distribution consisted of four values, all with the same overall mean interfood interval of 30 s: (a) 1 s, 59 s; (b) 10 s, 50 s; (c) 20 s, 40 s; and (d) 30 s, 30 s (or FT 30 s). The exchange delay consisted of two values: immediate and delayed. In immediate conditions, the exchange period commenced immediately after each token was earned. In delayed conditions, the exchange period did not occur until 11 tokens had been earned (from free- and forced-choice trials combined). In these delayed-exchange conditions, all 11 tokens were exchanged for reinforcers in succession, each requiring one exchange response on the center red key per token. Each session consisted of 44 trials, hence, 44 food deliveries. The only difference between immediate and delayed-exchange was in the timing and number of exchange periods: 44 exchange periods with one reinforcer per exchange (immediate exchange) or 4 exchange periods with 11 reinforcers per exchange (delayed exchange).

The MT distribution was varied systematically across experimental conditions, first under immediate exchange conditions, then under delayed exchange conditions. These latter conditions also included interspersed replications of immediate-exchange conditions at each MT value to facilitate comparisons of the immediate and delayed exchange. To provide comparable starting points, each experimental condition began with the adjusting delay set to 30 s (equivalent to the mean interreinforcer interval of the MT schedule). Most conditions were conducted without an intertrial interval (ITI) separating successive choice trials, but one condition per subject also included an ITI. During this condition, the ITI was at least 10 s in length, and if the adjusting delay was chosen on the previous trial and was currently shorter than 30 s, the adjusting delay was subtracted from 30 and added to the 10 s ITI. This requirement held approximately equal the overall rate of reinforcement from both alternatives. Table 1 lists the sequence and number of sessions per condition.
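The ITI rule for the single ITI condition can be written as a short function. This is a sketch under one reading of the text: the adjusting delay used is the value in effect when the ITI is computed. The names are hypothetical.

```python
BASE_ITI_S = 10.0    # minimum intertrial interval (s)
MEAN_DELAY_S = 30.0  # mean delay of the MT schedule (s)


def iti_seconds(previous_choice: str, adjusting_delay_s: float) -> float:
    """Intertrial interval following a trial in the ITI condition."""
    if previous_choice == "ADJ" and adjusting_delay_s < MEAN_DELAY_S:
        # Pad the ITI so that a short adjusting delay does not raise the
        # overall reinforcement rate relative to the MT alternative.
        return BASE_ITI_S + (MEAN_DELAY_S - adjusting_delay_s)
    return BASE_ITI_S
```

For example, an adjusting choice at a 16-s adjusting delay would be followed by a 24-s ITI, so that delay plus ITI totals 40 s, matching the 30-s mean MT delay plus the 10-s base ITI.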

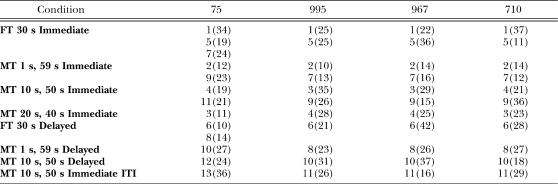

Table 1.

Sequence and number of sessions per condition (in parentheses) for each pigeon in Experiment 1.

| Condition | 75 | 995 | 967 | 710 |
| --- | --- | --- | --- | --- |
| FT 30 s Immediate | 1(34) | 1(25) | 1(22) | 1(37) |
| | 5(19) | 5(25) | 5(36) | 5(11) |
| | 7(24) | | | |
| MT 1 s, 59 s Immediate | 2(12) | 2(10) | 2(14) | 2(14) |
| | 9(23) | 7(13) | 7(16) | 7(12) |
| MT 10 s, 50 s Immediate | 4(19) | 3(35) | 3(29) | 4(21) |
| | 11(21) | 9(26) | 9(15) | 9(36) |
| MT 20 s, 40 s Immediate | 3(11) | 4(28) | 4(25) | 3(23) |
| FT 30 s Delayed | 6(10) | 6(21) | 6(42) | 6(28) |
| | 8(14) | | | |
| MT 1 s, 59 s Delayed | 10(27) | 8(23) | 8(26) | 8(27) |
| MT 10 s, 50 s Delayed | 12(24) | 10(31) | 10(37) | 10(18) |
| MT 10 s, 50 s Immediate ITI | 13(36) | 11(26) | 11(16) | 11(29) |

Sessions were conducted daily, 7 days per week. Conditions remained in effect for a minimum of 10 sessions and until stable indifference points were obtained. To assess stability, free choice trials in a session (typically between 30 and 35, but variable given the nature of the forced-choice trials) were divided in half and median adjusting values were calculated, resulting in two data points per session. Data were judged visually stable with no trend or bounce, and according to the following criteria: (1) the last six sessions (12 data points) contained neither the highest nor lowest half-session median of the condition; and (2) the mean of the last three sessions could not differ from the mean of the previous three sessions, nor could either grouping differ from the overall six-session mean by more than 15% or 1 s (whichever was larger).
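The two quantitative stability criteria can be sketched as a check over the half-session medians of a condition. Several details are assumptions: ties with the condition's extreme values are treated as failures, the 15% tolerance is taken relative to the six-session mean, and the visual judgment of trend and bounce is not modeled. The function name is hypothetical.

```python
from statistics import mean
from typing import Sequence


def is_stable(half_session_medians: Sequence[float], min_sessions: int = 10) -> bool:
    """Apply the two numerical stability criteria to a condition's data.

    half_session_medians holds two values per session, in chronological order.
    """
    points = list(half_session_medians)
    if len(points) < 2 * min_sessions:
        return False  # fewer than the minimum number of sessions
    last12, earlier = points[-12:], points[:-12]
    # Criterion 1: the last six sessions contain neither the highest nor the
    # lowest half-session median of the condition.
    if max(last12) >= max(earlier) or min(last12) <= min(earlier):
        return False
    # Criterion 2: three-session means agree with each other and with the
    # six-session mean within 15% or 1 s, whichever is larger.
    previous3 = mean(last12[:6])
    recent3 = mean(last12[6:])
    overall = mean(last12)
    tolerance = max(0.15 * overall, 1.0)
    return (abs(recent3 - previous3) <= tolerance
            and abs(recent3 - overall) <= tolerance
            and abs(previous3 - overall) <= tolerance)
```

A check of this kind would be run after each session once the 10-session minimum had been met.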

Results

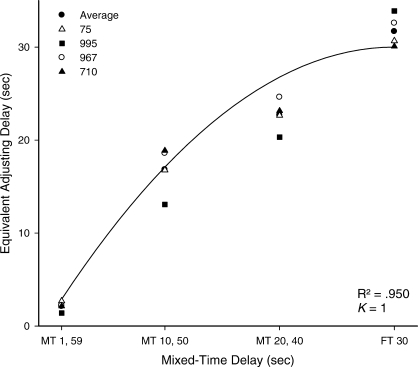

Indifference points between the variable and adjusting delays were determined by averaging the obtained adjusting delays over the last 12 data points (six sessions) per condition. Indifference points resulting from the immediate exchange conditions were evaluated in relation to Equation 1. Figure 2 displays the obtained indifference points for all 4 subjects under immediate-exchange conditions plotted as a function of the MT delay, with the MT schedules arranged along the x-axis according to their smallest included delay. Data from initial and replication conditions were averaged in this plot, providing one data point per subject per condition. The curve displays the predicted delays provided by Equation 1 with A = 100 (an arbitrary value because amount was not manipulated) and parameter K held constant at 1 (a value which has provided a good description of prior pigeon data; Mazur, 1984, 1986b, 1987). This, too, proved appropriate for our results, with Equation 1 accounting for 95.0% of the variance in performance. The equation was particularly appropriate when accounting for data obtained in the MT conditions containing one relatively short delay to reinforcement (i.e., MT 1 s, 59 s and MT 10 s, 50 s).

Fig 2.

Mean indifference points as a function of the mixed-time (MT) or fixed-time (FT) delay condition for all 4 subjects in Experiment 1. The curve displays predicted indifference points from Equation 1 with K = 1. See text for additional details.
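The indifference-point calculation described in the text (half-session medians averaged over the last six sessions) can be sketched as follows. The handling of sessions with an odd number of free-choice trials is an assumption, and the function names are hypothetical.

```python
from statistics import mean, median
from typing import List, Sequence, Tuple


def half_session_medians(adjusting_delays: Sequence[float]) -> Tuple[float, float]:
    """Median adjusting delay in each half of one session's free-choice trials.

    With an odd number of trials, the extra trial goes to the second half
    (an assumption; the text does not specify this).
    """
    if len(adjusting_delays) < 2:
        raise ValueError("need at least two free-choice trials")
    mid = len(adjusting_delays) // 2
    return median(adjusting_delays[:mid]), median(adjusting_delays[mid:])


def indifference_point(sessions: Sequence[Sequence[float]]) -> float:
    """Mean of the last 12 half-session medians (six sessions) of a condition."""
    points: List[float] = []
    for session in sessions:
        points.extend(half_session_medians(session))
    if len(points) < 12:
        raise ValueError("need at least six sessions of data")
    return mean(points[-12:])
```

Each element of `sessions` is the list of adjusting delays in effect on that session's free-choice trials, in chronological order.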

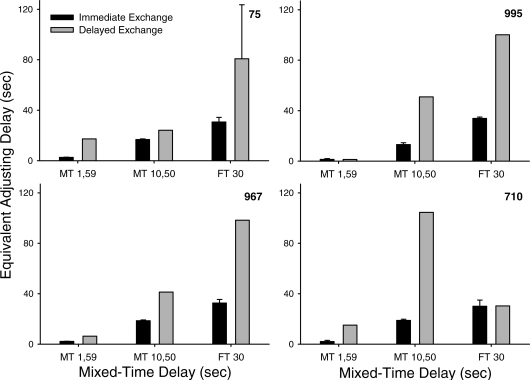

The pooled data of Figure 2 are consistent with the data for individual subjects. The individual-subject indifference points can be seen in Figure 3, along with those from the delayed-exchange conditions. The delayed-exchange conditions produced higher indifference points at each MT value. Similar to performance in the immediate-exchange conditions, indifference points generally increased as a function of the smallest delay in the MT schedule. At the longer delays, indifference points were generally in excess of the mean IRI of 30 s, reflecting increased choices for the adjusting delay.

Fig 3.

Mean indifference points for each subject from immediate-exchange (black bars) and delayed-exchange (grey bars) conditions as a function of the mixed-time (MT) or fixed-time (FT) delay. Error bars represent standard deviations.

Including a 10-s ITI had little effect on choice patterns. Indifference points during the initial and replication MT 10 s, 50 s conditions averaged 16.8 s without an ITI and 16.2 s with an ITI.

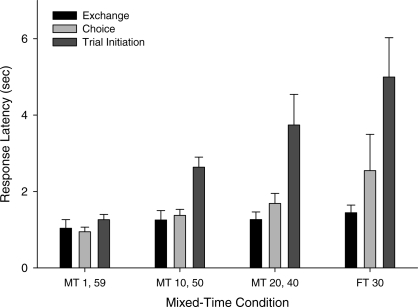

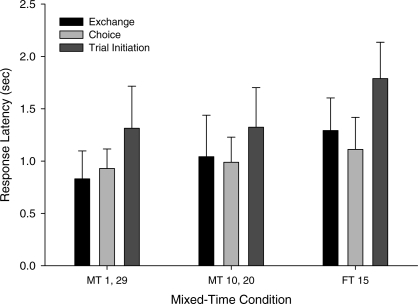

In addition to the choice data, latency measures were also analyzed across exchange-delay and MT-delay conditions. Figure 4 shows mean latencies, averaged across subjects and (adjusting and variable) trial types, under immediate-exchange conditions. The three bars correspond to latencies to respond on the exchange key, the choice key, and the trial-initiation key. Exchange latencies were relatively short and undifferentiated across conditions, but choice and trial-initiation latencies varied systematically as a function of the MT schedule: latencies increased as a direct function of the shortest delay in the MT schedule. These latencies showed some parallel to the overall preferences for the different MT delays, with shorter latencies occurring in conditions with smaller obtained adjusting delays.

Fig 4.

Mean latencies to respond on exchange, choice, and trial initiation keys as a function of the mixed-time (MT) or fixed-time (FT) delay, averaged across subjects, in immediate-exchange conditions. Error bars indicate across-subject standard deviations.

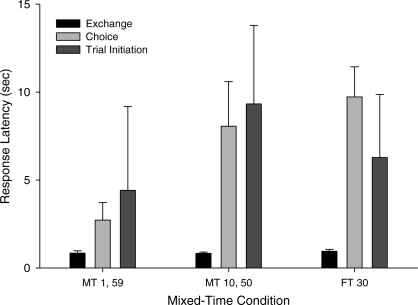

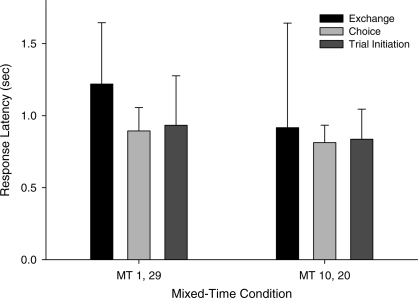

Figure 5 shows comparable latency data under delayed-exchange conditions. Overall, a similar pattern emerged, with shorter choice and trial-initiation latencies in the MT 1 s, 59 s condition than in the conditions with longer reinforcer delays. In comparing across Figures 4 and 5, note the differently scaled axes, showing the generally longer latencies under delayed-exchange than immediate-exchange conditions.

Fig 5.

Mean latencies to respond on exchange, choice, and trial initiation keys as a function of the mixed-time (MT) or fixed-time (FT) delay, averaged across subjects, in delayed-exchange conditions. Error bars indicate across-subject standard deviations.

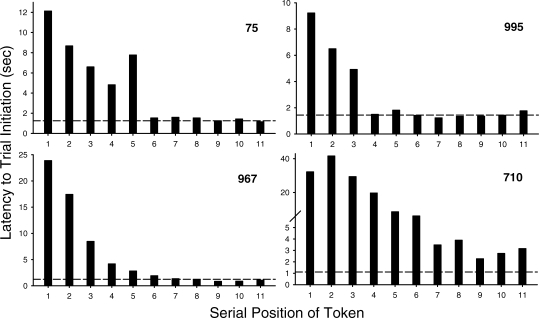

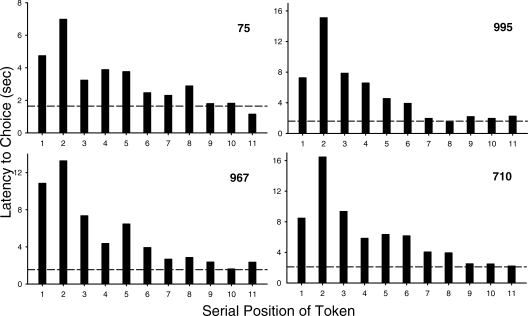

For delayed-exchange conditions, trial-initiation and choice latencies were also analyzed across trials leading to an exchange period. The results are displayed in Figures 6 and 7, respectively, with the dashed lines providing a reference point under immediate-exchange conditions. Trials preceding an exchange period are shown on the abscissa, with proximity to the upcoming exchange period increasing from left to right. For all pigeons, both trial initiation (Figure 6) and choice latencies (Figure 7) were longer in the early than in the late trials preceding exchange, and in many cases were a graded function of trial position.

Fig 6.

Mean latencies to respond on the center trial-initiation key as a function of trial position across successive blocks of trials preceding an exchange period under delayed-exchange conditions. The leftmost point on the abscissa indicates the trial furthest from the upcoming exchange period. The dashed line in each plot indicates the comparable mean latencies under immediate-exchange conditions. Note the differently scaled y-axes.

Fig 7.

Mean latencies to respond on the choice keys as a function of trial position across successive blocks of trials preceding an exchange period under delayed-exchange conditions. The dashed line in each plot indicates the comparable mean latencies under immediate-exchange conditions. Note the differently scaled y-axes.

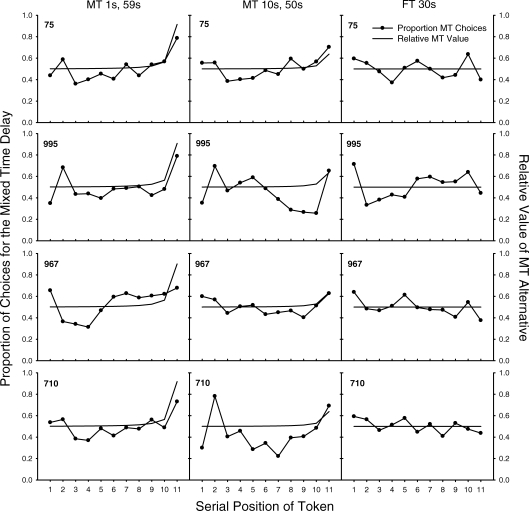

Choice patterns in delayed-exchange conditions were also analyzed in relation to trial position and Equation 1. The closed circles in Figure 8 show the proportion of choices made for the variable (MT) schedule as a function of trial position preceding an exchange period. The leftmost point on the x-axis represents the first token earned (the point furthest from the upcoming exchange), and the rightmost point the trial immediately preceding exchange. The reference lines show the relative value of the MT (variable) alternative across trial positions preceding an exchange period under the MT 1 s, 59 s (leftmost panels), MT 10 s, 50 s (center panels) and FT 30 s delayed-exchange conditions, computed with respect to exchange delays according to Equation 1 (with D being the total average delay to the upcoming exchange period). To provide an example of how relative value was computed, in the MT 1 s, 59 s condition the average delay to exchange from the start of a block of 11 trials is 330 s. At this choice point, the values of the two alternatives are roughly similar. The approximate value of the adjusting delay is 0.120 (computed with D = 330) and the MT delay 0.121 (computed with D = 301 and D = 359, each occurring with p = 0.5). When making a choice to earn the 11th token (the token directly preceding exchange), the values of the alternatives diverge, with the MT having a value of 14.54 and the adjusting delay an approximate value of 1.31. The relative value was then computed as the value of the MT alternative divided by the summed value of both alternatives. For the MT 1 s, 59 s and MT 10 s, 50 s conditions, the relative value is largely equivalent until the trial most proximal to the upcoming exchange period, at which point it shifts strongly in favor of the variable (MT) schedule. This predicted upturn in preference for the variable option in the trial preceding exchange is consistent with the data for 3 of the 4 subjects. 
With the exception of Pigeon 967 in the MT 1 s, 59 s condition, choice proportions were highest on the final trial preceding an exchange period during the MT 1 s, 59 s and MT 10 s, 50 s conditions, indicating strong preference for the variable schedule in close temporal proximity to an exchange. Choices during the FT 30 s schedule were largely undifferentiated throughout the 11 trials preceding exchange, which is consistent with the predictions of Equation 1.
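The relative-value computation across trial positions can be illustrated with a minimal sketch. It follows the worked example in the text: D is the total average delay to the upcoming exchange period, so every trial still to come contributes the 30-s mean delay. Treating the adjusting delay as equal to that 30-s mean is an assumption, as are the function names and the default K. Relative value does not depend on A, and the absolute values produced depend on the parameter values chosen, so the sketch is not intended to reproduce the specific values quoted above.

```python
def hyperbolic_value(delay_s: float, A: float = 100.0, K: float = 1.0) -> float:
    """Value of a single delayed outcome, A / (1 + K * D)."""
    return A / (1.0 + K * delay_s)


def relative_value_mt(trial_position: int, short_s: float, long_s: float,
                      n_tokens: int = 11, mean_delay_s: float = 30.0,
                      K: float = 1.0) -> float:
    """Relative value of the MT alternative at a given trial position.

    trial_position runs from 1 (first token, furthest from exchange)
    to n_tokens (the trial immediately preceding exchange).
    """
    if not 1 <= trial_position <= n_tokens:
        raise ValueError("trial_position must lie between 1 and n_tokens")
    # Average delay contributed by the trials that follow the current one.
    later_s = (n_tokens - trial_position) * mean_delay_s
    v_adj = hyperbolic_value(mean_delay_s + later_s, K=K)
    v_mt = (0.5 * hyperbolic_value(short_s + later_s, K=K)
            + 0.5 * hyperbolic_value(long_s + later_s, K=K))
    # Relative value: MT value divided by the summed value of both alternatives.
    return v_mt / (v_mt + v_adj)


if __name__ == "__main__":
    for position in range(1, 12):
        print(position, round(relative_value_mt(position, 1.0, 59.0), 3))
```

Under these assumptions the relative value stays near 0.5 early in the block and rises toward the trial immediately preceding exchange, whereas for FT 30 s it remains at 0.5 at every position.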

Fig 8.

Closed circles show the proportion of choices for the mixed-time (MT) delay as a function of trial position across a block of trials preceding an exchange period in the MT 1 s, 59 s condition (left panels), the MT 10 s, 50 s condition (center panels), and the FT 30 s condition (right panels). The reference lines show the relative value (V) of the MT alternative across trials, computed according to Equation 1 with K = 1.

Discussion

The current experiment shows that pigeons are risk prone with respect to reinforcer delays, preferring variable delays at values less than the mean reinforcer delay, a finding that is consistent with previous nonhuman research with food reinforcers (e.g., Bateson & Kacelnik, 1995, 1997; Cicerone, 1976; Herrnstein, 1964; Killeen, 1968; Mazur, 1987; Rider, 1983). Additionally, risky-choice patterns were well described by the hyperbolic decay model when choices produced tokens immediately exchangeable for food. According to Equation 1, subjects should under immediate-exchange conditions prefer the variable (MT) schedule because the 50% probability of receiving the short reinforcement delay outweighs the value of the adjusting reinforcer delay, even when accounting for the diminished value of the relatively longer MT delay (Mazur, 2004). More specifically, obtained indifference points should be graded in relation to the short delay in the MT pair, such that lower indifference points are expected when the short delays are smallest. Our results confirm this: the pigeons more frequently chose the variable schedule, and obtained indifference points were ordered with respect to the shortest delay in the MT schedule. Choice patterns were well described by Equation 1 (holding K constant at 1) at both the individual and group level. This, too, is consistent with prior research (Mazur, 1984, 1986b, 1987). The fit was improved only slightly (95.2% vs. 95.0% of the variance accounted for) when K was allowed to vary across subjects (accomplished by reducing the sum of the squared residuals using the Solver function in Microsoft Excel®).

Conditions were also designed to encompass features common to most human risky choice experimentation. When subjects were required to produce 11 tokens prior to exchange for food, choice patterns differed markedly from those seen under immediate-exchange conditions. Substantially higher indifference points were obtained, indicating sometimes extreme risk aversion. Sensitivity to delay was nevertheless demonstrated. First, indifference points were ordered with respect to the shortest delay in the MT distribution. Second, there was marked preference for the variable option on trials most proximal to exchange periods.

Preferences were systematically related to response latencies. In both immediate and delayed exchange conditions, shorter latencies to trial initiation and to choice were associated with risk-prone choice (lower indifference points). Moreover, in delayed-exchange conditions, both trial initiation (Figure 6) and choice latencies (Figure 7) were longer in the early than in the late trials, a result that is broadly consistent with the shift toward more risk-prone choices in trials proximal to the upcoming exchange (Figure 8). Such temporal regularities are consistent with those reported under extended-chained schedules of reinforcement (e.g., Bullock & Hackenberg, 2006; Webbe & Malagodi, 1978), supporting a view of the delayed-exchange procedure as a kind of token reinforcement schedule (see Hackenberg, 2009, for a review).

For none of the 4 subjects did the inclusion of an ITI have a discernible effect upon preferences, in that indifference points stabilized close to those obtained in previous conditions using the same MT schedule. This finding confirms Mazur's (1988, 1989) demonstration that the ITI has only a minimal effect upon choices compared to delays between choice and reinforcement, and will not be discussed further.

EXPERIMENT 2

In Experiment 1, delays to reinforcement were discounted more sharply in the immediate-exchange than in the delayed-exchange conditions. The reduced delay discounting when reinforcement is provided after a fixed period may at least partially account for the mixed results of previous human choice research using points later exchangeable for money at the end of the experimental session. This is the subject of Experiment 2.

Experiment 2 was designed to mimic critical features of Experiment 1 but with humans instead of pigeons and 30-s video clips instead of food. Participants chose between variable and adjusting delays to reinforcement under immediate-exchange and delayed-exchange conditions. As in Experiment 1, the current experiment included forced-choice trials and steady-state conditions that remained in effect until a predetermined stability criterion was met. If procedural variables are indeed the reason for the behavioral disparities across species, then humans' choices, like those of the pigeons in Experiment 1, should favor the variable schedule more strongly in immediate-exchange conditions than in delayed-exchange conditions. Moreover, if the video clips function as effective reinforcers, one might further expect Equation 1 to describe the choice patterns.

Method

Subjects

One male and 4 female undergraduate students between the ages of 18 and 22 participated. They were recruited via advertisements in a local university newspaper and fliers posted around the campus, and were selected for participation based on their daily availability, inexperience with behavior analysis, and high interest in watching television shows. Subjects were paid a flat rate of $3.00 per day, with an additional $3.50 per hour paid contingent upon completion of the study. The average overall earnings were $5.99 per hour (range, $5.90 to $6.08). Subjects were not paid until participation was completed. Prior to the beginning of the study, subjects signed an informed consent form and were told that they would be expected to participate 5 days per week for approximately 2 months.

Apparatus

Sessions were conducted in a closed experimental room measuring 2.7 m wide and 1.8 m long. Subjects were seated in front of a Gateway PC computer on which the experimental contingencies were arranged and data recorded via Microsoft Visual Basic® software. The computer was programmed to display three square response buttons (each 5 cm by 5 cm) that could be operated by positioning the cursor over one and clicking it with the computer mouse. Twelve circles located 3 cm above the response buttons served as tokens and were illuminated red when earned. Token illumination and darkening were each accompanied by a 0.1-s beep. Reinforcement consisted of video clips from preferred television programs, played in Windows Media Player® and displayed on the full screen of the computer during reinforcement periods.

Procedure

Subjects were exposed to two sessions per day, 5 days per week. Upon arrival, each subject was escorted to the experimental room, leaving behind possessions (e.g., watches, cell phones, book bags) in a different room. At the start of each session a subject was asked to select a television show to watch from a computer-displayed list of the following eight programs: Friends, Will and Grace, Family Guy, Wallace and Gromit, Sports Bloopers, Looney Tunes, The Simpsons, and Seinfeld. Many of the program choices were series with multiple episodes that were cycled through in sequence. Therefore, if a subject so chose, episodes of a single program could be viewed in series across successive sessions. A session consisted of a single full-length episode of a program (approximately 22 min, with commercials omitted) divided into 44 equivalent segments approximately 30 s in duration.

Once the program had been selected, the following instructions were displayed on the screen: “Use the mouse to earn access to videos. You will need to use only the mouse for this part of the experiment. When you are ready to begin, click the ‘begin’ button below.” At the end of each two-session visit, subjects rated the two recently viewed videos on a 5-point scale ranging from “Very Good” to “Very Bad”. Subjects also were asked to evaluate the sessions in general by typing an open-ended response into the computer.

The start of each trial was marked by the simultaneous presentation of three square response buttons, centered vertically and horizontally on the screen. Only the center button was activated and illuminated; one mouse click on this trial-initiation button deactivated it and activated the two side choice buttons. A single click on either choice button initiated the scheduled delay and deactivated the nonchosen button. The chosen button remained illuminated throughout the delay. When the delay had elapsed, one token light was illuminated simultaneously with a 1-s beep. In immediate-exchange conditions, the exchange period (signaled by a red center button) occurred after a single token presentation. A single click on the exchange button presented full-screen access to the 30-s video clip. In delayed-exchange conditions, the exchange period was presented when 11 tokens had been earned. Each of the 11 tokens could be exchanged in immediate succession for eleven 30-s video clips by making one exchange response per video clip. The choice screen was presented again after each reinforcer, permitting additional exchange (if tokens remained) or the start of a new trial (if no tokens remained).
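The difference between the two exchange arrangements can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' Visual Basic program: the function name `exchange_trials` is hypothetical, and it assumes one token per completed trial and a 44-trial session (inferred from the 44 video segments per episode).

```python
def exchange_trials(n_trials, tokens_per_exchange):
    """Return the 1-based trial numbers after which an exchange period opens.

    Assumes one token is earned per completed trial and that all
    accumulated tokens are spent during each exchange period.
    """
    if tokens_per_exchange < 1:
        raise ValueError("tokens_per_exchange must be at least 1")
    tokens = 0
    exchanges = []
    for trial in range(1, n_trials + 1):
        tokens += 1  # the delay has elapsed and one token light comes on
        if tokens == tokens_per_exchange:
            exchanges.append(trial)  # exchange period is presented
            tokens = 0               # every token is traded for a video clip
    return exchanges


# Immediate exchange: an exchange period follows every trial.
immediate = exchange_trials(44, 1)
# Delayed exchange: an exchange period follows every 11th trial.
delayed = exchange_trials(44, 11)
print(len(immediate), delayed)
```

Under these assumptions the number of clips per session is the same in both arrangements; only the time between earning a token and viewing its clip differs.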

Prior to the start of the experiment, a delay sensitivity probe was implemented to assess control by delay to video reinforcement. In these sessions, subjects were given repeated choices between a 1-s and a 15-s delay to the onset of a 30-s video clip for at least two sessions of 44 trials before moving to the experiment proper. Of 6 participants tested, one did not consistently prefer the shorter delay to reinforcement and was terminated from participation in the study.

As in Experiment 1, MT distribution and exchange delay were manipulated systematically across conditions. The average IRI was held constant at 15 s, with standard MT delays of (a) 1 s, 29 s; (b) 10 s, 20 s; and (c) 15 s, 15 s (FT 15 s). At the beginning of each condition, the adjusting delay started at the average IRI of 15 s. Each selection of the MT alternative decreased the adjusting delay on the following trial by 10%, while each selection of the adjusting delay increased that delay by 10% on the subsequent trial. The adjusting delay had a lower limit of 1 s and an upper limit of 60 s. Conditions lasted a minimum of four sessions and were deemed stable via visual inspection of trend and bounce. Table 2 shows the sequence and number of conditions per participant. Two participants (247 and 250) voluntarily left the study early and did not complete all of the planned experimental conditions.
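The titration rule described above can be sketched as follows. This is a minimal sketch with hypothetical function names. It assumes the 10% change is applied multiplicatively to the current adjusting delay and that the 1-s and 60-s limits are enforced by clipping; the text does not spell out either detail.

```python
def next_adjusting_delay(current, chose_mt, step=0.10, lower=1.0, upper=60.0):
    """Return the adjusting delay (s) for the following trial.

    Choosing the MT (variable) alternative shortens the adjusting delay by
    `step`; choosing the adjusting alternative lengthens it by `step`.
    The result is clipped to the interval [lower, upper].
    """
    factor = 1.0 - step if chose_mt else 1.0 + step
    return min(upper, max(lower, current * factor))


def titrate(choices, start=15.0):
    """Trace the adjusting delay across a sequence of choices.

    `choices` is an iterable of booleans (True = MT alternative chosen).
    The first element of the result is the starting delay (the mean IRI).
    """
    delays = [start]
    for chose_mt in choices:
        delays.append(next_adjusting_delay(delays[-1], chose_mt))
    return delays


# Example: two MT choices followed by one adjusting-delay choice.
print(titrate([True, True, False]))
```

Because the steps are proportional, a run of choices for one alternative moves the adjusting delay toward a limit in progressively smaller (decreasing) or larger (increasing) absolute steps.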

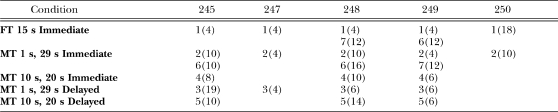

Table 2.

Sequence and number of sessions per condition (in parentheses) for each participant in Experiment 2.

| Condition | 245 | 247 | 248 | 249 | 250 |

| FT 15 s Immediate | 1(4) | 1(4) | 1(4) | 1(4) | 1(18) |

| 7(12) | 6(12) | ||||

| MT 1 s, 29 s Immediate | 2(10) | 2(4) | 2(10) | 2(4) | 2(10) |

| 6(10) | 6(16) | 7(12) | |||

| MT 10 s, 20 s Immediate | 4(8) | 4(10) | 4(6) | ||

| MT 1 s, 29 s Delayed | 3(19) | 3(4) | 3(6) | 3(6) | |

| MT 10 s, 20 s Delayed | 5(10) | 5(14) | 5(6) |

Results

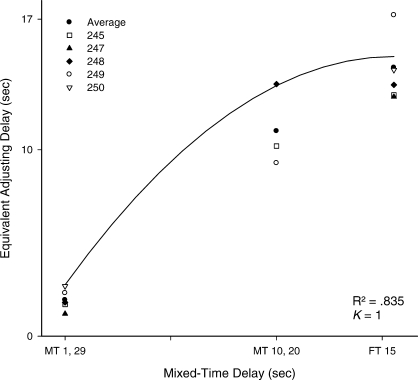

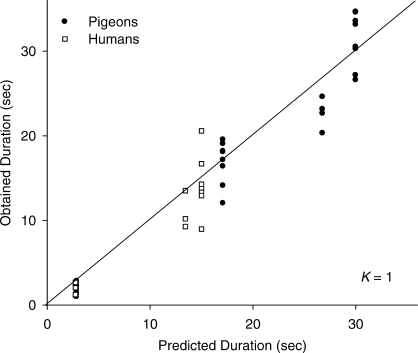

Indifference points were determined by averaging the obtained adjusting delays over the last three stable data points per condition. Figure 9 displays these indifference points for all participants, with the resulting pooled data fitted to Equation 1 with parameter K fixed at 1 (the same value used in Experiment 1). Data from initial and replication conditions were averaged, providing one indifference point per participant per condition. Equation 1 provided a good fit to the pooled data, with 83.5% of the variance accounted for.

Fig 9.

Mean indifference points as a function of the mixed-time (MT) or fixed-time (FT) delay condition for all subjects in Experiment 2. The curve displays predicted indifference points from Equation 1 with K = 1.
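The two computations behind Figure 9 can be sketched in Python. The sketch assumes that Equation 1 is the standard hyperbolic-decay form, with value equal to the probability-weighted sum of A / (1 + K × delay), and that each "data point" is a single summary value of the adjusting delay. All function names are hypothetical.

```python
def hyperbolic_value(delays, probs, K=1.0, A=1.0):
    """Probability-weighted hyperbolic value of a set of possible delays (s)."""
    return sum(p * A / (1.0 + K * d) for d, p in zip(delays, probs))


def predicted_indifference_delay(delays, probs=None, K=1.0, A=1.0):
    """Fixed delay whose hyperbolic value equals that of the mixed schedule."""
    if probs is None:
        # Assume equally likely delays, as in the two-valued MT schedules.
        probs = [1.0 / len(delays)] * len(delays)
    value = hyperbolic_value(delays, probs, K, A)
    return (A / value - 1.0) / K


def indifference_point(data_points, n_last=3):
    """Mean of the last `n_last` adjusting-delay data points of a condition."""
    if len(data_points) < n_last:
        raise ValueError("not enough data points")
    return sum(data_points[-n_last:]) / n_last


# Predicted indifference delays for the three Experiment 2 schedules, K = 1.
for schedule in [(1, 29), (10, 20), (15, 15)]:
    print(schedule, round(predicted_indifference_delay(schedule), 4))
```

With K = 1 the predictions fall below the 15-s mean for both MT schedules and equal 15 s for the FT control, the same ordering as the obtained indifference points.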

Because most of the participants completed conditions at only two different MT delays, individual curves were not plotted. Performances were generally similar across individuals, however. Indifference points were ordered with respect to relative reinforcer immediacy, and there was little overlap in the distribution of points comprising the means. Indifference points ranged from 1.1 s to 2.6 s (M = 1.94 s) under MT 1 s, 29 s, from 9.2 s to 13.5 s (M = 11.0 s) under MT 10 s, 20 s, and from 12.9 s to 17.2 s (M = 14.4 s) under the FT 15-s control condition. The latter result indicates a general lack of bias for either alternative.

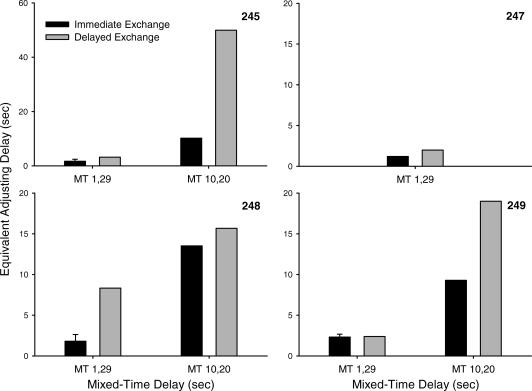

Mean indifference points obtained during the immediate and delayed exchange conditions are compared in Figure 10, with error bars displayed for conditions that were replicated (note the higher y-axis scale for Participant 245). As in Experiment 1, the delayed-exchange conditions resulted in less discounting of reinforcer delay, as evidenced by the higher indifference points relative to those obtained during immediate-exchange conditions. This was particularly evident when the MT values contained relatively long delays to reinforcement (i.e., the MT 10 s, 20 s condition), with mean indifference points of 28.2 s under delayed exchange and 11.0 s under immediate exchange. There was also evidence of delay sensitivity under delayed exchange. In all 3 participants who completed more than one delayed exchange condition, indifference points were higher under conditions with relatively longer short delays (e.g., MT 10 s, 20 s versus MT 1 s, 29 s).

Fig 10.

Mean indifference points for individual subjects from immediate exchange (black bars) and delayed exchange (grey bars) conditions as a function of the mixed-time (MT) or fixed-time (FT) delay, including standard deviations. Note the extended y-axis for Subject 245.

As in Experiment 1, latencies to trial initiation, choice, and token exchange responses were also examined as a function of the different MT delay conditions. Figures 11 and 12 display these latencies during the immediate and delayed exchange conditions, respectively, averaged across subjects. In immediate-exchange conditions (Figure 11), latencies to trial initiation and exchange both increased as a function of the short delay in the MT pair, but choice latencies were largely unaffected by MT schedule. As in Experiment 1, when latencies changed across conditions they were positively related to obtained indifference points, with shorter latencies occurring in conditions in which shorter adjusting delays were obtained. Response latencies in the delayed exchange conditions were largely undifferentiated across conditions, but were somewhat longer for exchange responses.

Fig 11.

Mean latencies to respond on choice, exchange, and trial initiation keys as a function of the mixed-time (MT) or fixed-time (FT) delay, averaged across subjects, in immediate exchange conditions. Error bars indicate across-subject standard deviations.

Fig 12.

Mean latencies to respond on choice, exchange, and trial initiation keys as a function of the mixed-time (MT) or fixed-time (FT) delay, averaged across subjects, in delayed exchange conditions. Error bars indicate across-subject standard deviations.

Discussion

The current experiment brought into closer alignment the choice procedures used with human and nonhuman subjects. First, participants were exposed to experimental contingencies repeatedly over trials and across sessions, rather than the more typical practice of “one-shot” choices. Also, unlike prior research with hypothetical outcomes, participants earned access to actual consequences: video clips of favorite TV programs. By providing immediately consumable reinforcement in a repeated-trials format, it was hypothesized that participants would make their choices based on the programmed delays to reinforcement rather than the overall-session rate of reinforcement. Our results confirm this: During immediate exchange conditions, choices were sensitive to delay, resembling those of nonhuman subjects (including those in Experiment 1). Indifference points conformed well to the predictions of Equation 1, even when employing the same discounting parameter value as used with food-deprived pigeon subjects. The delayed-exchange conditions produced elevated indifference points, indicating greater risk aversion, more like that often seen in laboratory risky-choice procedures with humans.

As in Experiment 1, latencies to trial initiation, choice, and token exchange responses were examined as a function of the different MT delay conditions. A similar pattern was evident under immediate-exchange conditions for the humans in this experiment as for the pigeons in Experiment 1: latencies to trial initiation and exchange increased as obtained adjusting delays for that MT schedule increased.

The use of video reinforcement also extends prior research with humans (e.g., Navarick, 1996, 1998; Hackenberg & Pietras, 2000; Locey et al., 2009) and demonstrates the utility of this reinforcer type for use in laboratory research. Across the range of conditions studied here, video reinforcers shared important functional characteristics with more common consumable reinforcers (e.g., food). Although many questions remain concerning their relative reinforcer efficacy, the present results suggest that video reinforcers can function as an effective tool in laboratory research with humans.

GENERAL DISCUSSION

The present study compared the choice patterns of pigeons and humans in a risky-choice context. A major objective was to bring human and pigeon procedures into closer alignment, facilitating species comparisons. To that end, pigeons and humans were exposed to analogous procedures that included forced-choice trials, steady-state analyses, and consumable-type reinforcement across two different economic contexts: immediate-exchange, most analogous to prior research with nonhumans, and delayed-exchange, most analogous to prior research with humans. Thus, if procedural differences are responsible for prior species differences, then choice patterns of both species should be more risk-prone under immediate-exchange than under delayed-exchange conditions. Moreover, if choices are sensitive to delay, then indifference points should be ordered with respect to reinforcer immediacy. Both of these results were confirmed.

Discounting by reinforcer delay was seen both with pigeons (Experiment 1) and humans (Experiment 2). This was reflected in both (a) the greater number of choices made for the variable (MT) schedule under the immediate-exchange conditions (as indexed by lower indifference points); and (b) the ordering of indifference points with respect to relative reinforcer immediacy. Both of these results are in accord with previous research (e.g., Bateson & Kacelnik, 1995; Gibbon et al., 1988; Mazur, 1987, 2006). The results are also broadly consistent with Equation 1, a model of hyperbolic reinforcer discounting. The model accounted for 95% and 83.5% of the overall variance in the pigeon and human data sets, respectively. For individual pigeon subjects, using K values of 1.0 provided reasonable fits to Equation 1, with the percentages of variance accounted for ranging from 91.2% to 97.3% across subjects. There is ample precedent for allowing K to vary (e.g., Mazur, 1986b, 1987; Myerson & Green, 1995); however, doing so produced only slightly higher percentages, ranging from 91.6% to 97.3% for pigeons and from 83.5% to 84.5% for humans.
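For readers unfamiliar with the fit statistic, the following Python sketch shows one common way to compute the proportion of variance accounted for: 1 minus the ratio of residual to total sums of squares. It assumes the standard hyperbolic form for Equation 1, and the function names are hypothetical. The example uses only the three group-mean indifference points reported for Experiment 2, so it is not expected to reproduce the 83.5% figure, which was based on the pooled individual data.

```python
def predicted_delay(d_short, d_long, K=1.0):
    """Predicted indifference delay (s) for a 50/50 mixed-time schedule,
    assuming hyperbolic value 1 / (1 + K * delay) for each delay."""
    value = 0.5 / (1.0 + K * d_short) + 0.5 / (1.0 + K * d_long)
    return (1.0 / value - 1.0) / K


def variance_accounted_for(observed, predicted):
    """Proportion of variance accounted for: 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


# Group-mean indifference points from Experiment 2 (immediate exchange).
schedules = [(1, 29), (10, 20), (15, 15)]
observed_means = [1.94, 11.0, 14.4]

predictions = [predicted_delay(s, l, K=1.0) for s, l in schedules]
vac = variance_accounted_for(observed_means, predictions)
print(f"Variance accounted for with K = 1: {vac:.3f}")
```

Allowing K to vary would amount to searching for the K that minimizes the residual sum of squares, which is the role the Excel Solver played in the analysis of Experiment 1.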

Although we did not have enough data points to provide informative best-fit K values for individual human data, the pooled K values were higher than those frequently reported for human choices with hypothetical outcomes (Lagorio & Madden, 2005; Myerson & Green, 1995; Petry & Casarella, 1999). Attaining higher K values is likely due to the consumable-type reinforcers used in the current study. This type of reinforcer allowed for the assessment of preferences within a time frame more comparable to that consistently used with nonhumans: actual reinforcers delayed by seconds or minutes rather than reinforcers that are either hypothetical or delayed by days or years.

More direct comparisons across species are provided in Figure 13, which shows obtained indifference points for pigeons and humans in the present study. To facilitate comparisons, indifference points are plotted as a function of the predicted equivalent adjusting delays provided by Equation 1. The data for both species are ordered according to the predictions of the hyperbolic-decay model. On the whole, Equation 1 provided a good description of the data for both pigeons and humans, suggesting similarities in the way delayed reinforcers are discounted for different species, whether those reinforcers are food for pigeons or videos for humans.

Fig 13.

Individual-subject indifference points for pigeon (closed circle) and human (open square) subjects under immediate-exchange conditions as a function of the predicted delay (D) from Equation 1.

Although broadly consistent with Equation 1, the results are also consistent with other discounting models. Both exponential and linear models provided generally comparable fits. Given the generally good fits overall, more precise model testing must await additional research. The present analysis suggests, however, that the methods employed here are well suited to quantitative cross-species comparisons in delay discounting.

In delayed-exchange conditions, both pigeons and humans favored the adjusting alternative (producing higher indifference points) to a far greater degree than when tokens were exchanged immediately. As in the immediate-exchange conditions, indifference points increased with relative reinforcer delay, but the indifference points were uniformly higher, indicating shallower discounting.

These differences between immediate and delayed exchange show that risky choice depends in part on the economic context. Reinforcers were discounted more sharply in contexts permitting immediate consumption than in contexts requiring accumulation of reinforcers for later exchange. This basic result confirms previous research (e.g., Hyten, Madden, & Field, 1994; Jackson & Hackenberg, 1996), but to date, the relevant data are from comparisons across studies. The present study is noteworthy in that it provides direct comparisons of pigeons and humans in both types of economic context. That the economic context produced generally similar results for pigeons and humans attests to the major influence of this variable.

The latency measures revealed some similarities across species, but differed in quantitative detail. For pigeons, trial-initiation and choice latencies varied with relative reinforcer delay under both immediate and delayed exchange, with shorter overall latencies occurring in conditions with lower indifference points. For humans, trial-initiation latencies were sensitive to reinforcer delay under immediate but not delayed exchange, with choice latencies relatively undifferentiated across conditions. In many cases, the latency measures were inversely related to preference, as might be expected from a unitary conception of reinforcer value. The overall pattern of results shows how local measures of behavior can be usefully brought to bear on conceptions of reinforcer value.

In sum, the present results suggest that pigeons' and humans' choices are influenced in similar ways by reinforcer delay. The general comparability of results seen with pigeons and humans may be due to the use of “consumable-type” reinforcement with humans. Other studies employing such consumable-type reinforcers with humans have also reported preferences more in line with the delay sensitivity seen with nonhumans (e.g., Forzano & Logue, 1994; Hackenberg & Pietras, 2000; Locey et al., 2009; Millar & Navarick, 1984). Along with exposure to forced-choice trials and repeated exposure to the contingencies across multiple daily sessions, the use of consumable-type reinforcers brought the procedures into greater alignment with those typically used with other animals. The present results join with a growing body of findings showing that previously reported differences between humans and other animals may be at least partly attributed to procedural features. Only with procedures matched on important functional characteristics can genuine species differences be separated from procedural differences.

Acknowledgments

This research was supported by Grant IBN 0420747 from the National Science Foundation. An earlier version of this paper was submitted by the first author to the Graduate School at the University of Florida in partial fulfillment of the Master of Science Degree. Portions of these data were presented at the 2006 meeting of the Southeastern Association for Behavior Analysis and the 2007 meetings of the Society for the Quantitative Analysis of Behavior and the Association for Behavior Analysis International. The authors thank Anthony DeFulio, Rachelle Yankelevitz, Leonardo Andrade, and Bethany Raiff for their assistance with data collection, and Marc Branch, Jesse Dallery, and David Smith for comments on an earlier version.

REFERENCES

- Bateson M, Kacelnik A. Preferences for fixed and variable food sources: Variability in amount and delay. Journal of the Experimental Analysis of Behavior. 1995;63:313–329. doi: 10.1901/jeab.1995.63-313.

- Bateson M, Kacelnik A. Starlings' preferences for predictable and unpredictable delays to food. Animal Behavior. 1997;53:1129–1142. doi: 10.1006/anbe.1996.0388.

- Bullock C.E, Hackenberg T.D. Second-order schedules of token reinforcement with pigeons: Implications for unit price. Journal of the Experimental Analysis of Behavior. 2006;85:95–106. doi: 10.1901/jeab.2006.116-04.

- Case D.A, Nichols P, Fantino E. Pigeons' preference for variable-interval water reinforcement under widely varied water budgets. Journal of the Experimental Analysis of Behavior. 1995;64:299–311. doi: 10.1901/jeab.1995.64-299.

- Cicerone R.A. Preference for mixed versus constant delay of reinforcement. Journal of the Experimental Analysis of Behavior. 1976;25:257–261. doi: 10.1901/jeab.1976.25-257.

- Davison M.C. Preference for mixed-interval versus fixed-interval schedules. Journal of the Experimental Analysis of Behavior. 1969;12:247–252. doi: 10.1901/jeab.1969.12-247.

- Davison M.C. Preference for mixed-interval versus fixed-interval schedules: Number of component intervals. Journal of the Experimental Analysis of Behavior. 1972;17:169–176. doi: 10.1901/jeab.1972.17-169.

- Fantino E. Preference for mixed- versus fixed-ratio schedules. Journal of the Experimental Analysis of Behavior. 1967;10:35–43. doi: 10.1901/jeab.1967.10-35.

- Forzano L.B, Logue A.W. Self-control in adult humans: Comparison of qualitatively different reinforcers. Learning and Motivation. 1994;25:65–82.

- Frankel P.W, vom Saal W. Preference between fixed-interval and variable-interval schedules of reinforcement: Separate roles of temporal scaling and predictability. Animal Learning and Behavior. 1976;4:71–76.

- Gibbon J, Church R.M, Fairhurst S, Kacelnik A. Scalar expectancy theory and choice between delayed rewards. Psychological Review. 1988;95:102–114. doi: 10.1037/0033-295x.95.1.102.

- Hackenberg T.D. Token reinforcement: A review and analysis. Journal of the Experimental Analysis of Behavior. 2009;91:257–286. doi: 10.1901/jeab.2009.91-257.

- Hackenberg T.D, Pietras C.J. Video access as a reinforcer in a self-control paradigm: A method and some data. Experimental Analysis of Human Behavior Bulletin. 2000;18:1–5.

- Herrnstein R.J. Aperiodicity as a factor in choice. Journal of the Experimental Analysis of Behavior. 1964;7:179–182. doi: 10.1901/jeab.1964.7-179.

- Hursh S.R, Fantino E. Relative delay of reinforcement and choice. Journal of the Experimental Analysis of Behavior. 1973;19:437–450. doi: 10.1901/jeab.1973.19-437.

- Hyten C, Madden G.J, Field D.P. Exchange delays and impulsive choice in adult humans. Journal of the Experimental Analysis of Behavior. 1994;62:225–233. doi: 10.1901/jeab.1994.62-225.

- Jackson K, Hackenberg T.D. Token reinforcement, choice, and self-control in pigeons. Journal of the Experimental Analysis of Behavior. 1996;66:29–49. doi: 10.1901/jeab.1996.66-29.

- Kacelnik A, Bateson M. Risk theories—the effects of variance on foraging decisions. American Zoologist. 1996;36:402–434.

- Kendall S.B. Risk-taking behavior of pigeons in a closed economy. Psychological Record. 1989;39:211–220.

- Killeen P. On the measurement of reinforcement frequency in the study of preference. Journal of the Experimental Analysis of Behavior. 1968;11:263–269. doi: 10.1901/jeab.1968.11-263.

- Kohn A, Kohn W.K, Staddon J.E.R. Preferences for constant duration delays and constant sized rewards in human subjects. Behavioural Processes. 1992;26:125–142. doi: 10.1016/0376-6357(92)90008-2.

- Lagorio C.H, Madden G.J. Delay discounting of real and hypothetical rewards III: Steady-state assessments, forced-choice trials, and all real rewards. Behavioural Processes. 2005;69:173–187. doi: 10.1016/j.beproc.2005.02.003.

- Locey M.L, Pietras C.J, Hackenberg T.D. Human risky choice: Delay sensitivity depends on reinforcer type. Journal of Experimental Psychology: Animal Behavior Processes. 2009;35:15–22. doi: 10.1037/a0012378.

- Logan F.A. Decision making by rats: Uncertain outcome choices. Journal of Comparative and Physiological Psychology. 1965;59:246–251. doi: 10.1037/h0021850.

- Mazur J.E. Tests of an equivalence rule for fixed and variable reinforcer delays. Journal of Experimental Psychology: Animal Behavior Processes. 1984;10:426–436.

- Mazur J.E. Choice between single and multiple delayed reinforcers. Journal of the Experimental Analysis of Behavior. 1986a;46:67–77. doi: 10.1901/jeab.1986.46-67.

- Mazur J.E. Fixed and variable ratios and delays: Further tests of an equivalence rule. Journal of Experimental Psychology: Animal Behavior Processes. 1986b;12:116–124.

- Mazur J.E. An adjusting procedure for studying delayed reinforcement. In: Commons M.L, Mazur J.E, Nevin J.A, Rachlin H, editors. Quantitative analyses of behavior, Vol. 5. Effects of delay and intervening events on reinforcement value. Hillsdale, NJ: Erlbaum; 1987. pp. 55–73.

- Mazur J.E. Estimation of indifference points with an adjusting delay procedure. Journal of the Experimental Analysis of Behavior. 1988;49:37–47. doi: 10.1901/jeab.1988.49-37.

- Mazur J.E. Theories of probabilistic reinforcement. Journal of the Experimental Analysis of Behavior. 1989;51:87–99. doi: 10.1901/jeab.1989.51-87.

- Mazur J.E. Risky choice: Selecting between certain and uncertain outcomes. Behavior Analyst Today. 2004;5:190–203.

- Mazur J.E. Mathematical models and the experimental analysis of behavior. Journal of the Experimental Analysis of Behavior. 2006;85:275–291. doi: 10.1901/jeab.2006.65-05.

- Mazur J.E. Rats' choices between one and two delayed reinforcers. Learning and Behavior. 2007;35:169–176. doi: 10.3758/bf03193052.

- Millar A, Navarick D.J. Self-control and choice in humans: effects of video game playing as a positive reinforcer. Learning and Motivation. 1984;15:203–218.

- Myerson J, Green L. Discounting of delayed rewards: Models of individual choice. Journal of the Experimental Analysis of Behavior. 1995;64:263–276. doi: 10.1901/jeab.1995.64-263.

- O'Daly M, Case D.A, Fantino E. Influence of budget and reinforcement location on risk-sensitive preference. Behavioural Processes. 2006;73:125–135. doi: 10.1016/j.beproc.2006.04.005.

- Orduna V, Bouzas A. Energy budget versus temporal discounting as determinants of preference in risky choice. Behavioural Processes. 2004;67:147–156. doi: 10.1016/j.beproc.2004.03.019.

- Petry N.M, Casarella T. Excessive discounting of delayed rewards in substance abusers with gambling problems. Drug and Alcohol Dependence. 1999;56:25–32. doi: 10.1016/s0376-8716(99)00010-1.

- Rider D. Preference for mixed versus constant delays of reinforcement: Effect of probability of the short, mixed delay. Journal of the Experimental Analysis of Behavior. 1983;39:257–266. doi: 10.1901/jeab.1983.39-257.

- Rodriguez M.L, Logue A.W. Adjusting delay to reinforcement: Comparing choice in pigeons and humans. Journal of Experimental Psychology: Animal Behavior Processes. 1988;14:105–117.

- Sherman J.A, Thomas J.R. Some factors controlling preference between fixed-ratio and variable-ratio schedules of reinforcement. Journal of the Experimental Analysis of Behavior. 1968;11:689–702. doi: 10.1901/jeab.1968.11-689.

- Webbe F.M, Malagodi E.F. Second-order schedules of token reinforcement: Comparisons of performance under fixed-ratio and variable-ratio exchange schedules. Journal of the Experimental Analysis of Behavior. 1978;30:219–224. doi: 10.1901/jeab.1978.30-219.

- Weiner H. Preference and switching under ratio contingencies with humans. Psychological Reports. 1966;18:239–246. doi: 10.2466/pr0.1966.18.1.239.

- Zabludoff S.D, Wecker J, Caraco T. Foraging choice in laboratory rats: Constant vs. variable delay. Behavioural Processes. 1988;16:95–110. doi: 10.1016/0376-6357(88)90021-6.