Abstract

Recent work on event perception suggests that perceptual processing increases when events change. An important question is how such changes influence the way other information is processed, particularly during dual-task performance. In this study, participants monitored a long series of distractor items for an occasional target as they simultaneously encoded unrelated background scenes. The appearance of an occasional target could have two opposite effects on the secondary task: It could draw attention away from the secondary task, or, as a change in the ongoing event, it could improve secondary task performance. Results were consistent with the second possibility. Memory for scenes presented simultaneously with the targets was better than memory for scenes that preceded or followed the targets. This effect was observed when the primary detection task involved visual feature oddball detection, auditory oddball detection, and visual color-shape conjunction detection. It was eliminated when the detection task was omitted and when it required an arbitrary response mapping. The appearance of occasional, task-relevant events seems to trigger a temporal orienting response that facilitates processing of concurrently attended information (the Attentional Boost Effect).

Introduction

Most of the time the external environment is relatively constant. Even when a person is driving, her immediate environment changes little: Her position within the car stays the same, and the car typically moves straight and at a steady speed. However, the external environment sometimes changes in meaningful ways, requiring a reevaluation of the current situation and perhaps a response. For example, a traffic light may change from green to yellow, or a pedestrian may step into the road. Several theories of perception and attention suggest that changes in events may lead to improved perceptual processing (Aston-Jones & Cohen, 2005; Bouret & Sara, 2005; Grossberg, 2005; Zacks, Speer, Swallow, Braver, & Reynolds, 2007). Increased attention to novel events, or to events that mark a change in context, has long been associated with better memory for those events (Fabiani & Donchin, 1995; Hunt, 1995; Newtson & Engquist, 1976; Ranganath & Rainer, 2003; Swallow, Zacks, & Abrams, 2009). An important and as yet unanswered question, however, is how task-relevant changes in events (e.g., the traffic light changing from green to yellow) affect the way other task-relevant information (e.g., the pedestrian on the corner) is processed.

Theories of cognition and perception suggest two opposite predictions about the relationship between the occurrence of a task-relevant change in an event and the way other information is processed at that time. In general, when attention to one task increases, performance on a second task suffers (Pashler, 1994). In one study, Duncan (1980) asked participants to search for two briefly presented targets that appeared either at the same time or at different times. Participants were more likely to miss a target in one location if they detected a simultaneously presented target in the other location. Furthermore, work on the psychological refractory period (PRP) has shown that when two tasks share a limited-capacity processing step (the “central bottleneck”), processing for the second task is delayed until processing for the first task is complete (Pashler, 1994). It is therefore likely that detecting task-relevant changes draws attentional resources away from processing other information or performing other tasks (interference hypothesis).

However, several theories of perception, attention, and learning suggest that perceptual processing temporarily increases in response to goal-relevant changes in the external environment (Aston-Jones & Cohen, 2005; Bouret & Sara, 2005; Grossberg, 2005; Zacks, Speer, Swallow, Braver, & Reynolds, 2007). For example, in the Adaptive Gain Theory of locus coeruleus-norepinephrine (LC-NE) function, Aston-Jones and Cohen (2005) characterize the phasic response of LC neurons as a temporal attentional filter. This theory suggests that once a task-relevant event has been detected, LC neurons fire to increase the sensitivity (or gain) of target neurons, leading to a transient increase in perceptual processing. In addition, a recent theory of event perception suggests that changes in observed activities trigger additional perceptual processing that updates internal representations of the current event (Zacks, Speer, Swallow, Braver, & Reynolds, 2007). These theories suggest that increasing attention in response to task-relevant changes in events may facilitate cognitive processing at the moment of the change (facilitation hypothesis). They further suggest that this facilitation may result from orienting attention to the moment in time that the change occurred, perhaps through the opening of an attentional gate (cf. Olivers & Meeter, 2008). It is unclear, however, whether the facilitation from temporal orienting is restricted to the changed event or whether it also spreads to concurrently presented, secondary tasks.

To examine how task-relevant changes in events influence the way other relevant information is processed, we asked participants to encode a long series of briefly presented images while they simultaneously performed an unrelated, continuous detection task. In this dual-task encoding phase, participants encoded images as they monitored a second stimulus stream (e.g., a square or a letter in the center of the picture) and pressed a key whenever they detected an infrequent target (e.g., a white square among black square distractors, or a red X among other red letters and Xs of other colors). Because distractors appeared on most displays, the appearance of a target constituted a task-relevant change that required additional attention to process. In addition, previous research has shown that identifying a target, but not rejecting a distractor, interferes with the processing of a second target for several hundred milliseconds (the attentional dwell time; Duncan, Ward, & Shapiro, 1994; Moore, Egeth, Berglan, & Luck, 1996; Raymond, Shapiro, & Arnell, 1992; Wolfe, 1998) [footnote 1]. Therefore, attention to the target detection task should be greater when a target appears than when a distractor appears. The effect of this change in attention on encoding was examined by presenting each background image at a fixed serial position relative to the targets. Thus, some images were presented at the same time as the targets, some immediately after the targets, and some immediately before the targets. In a second phase, participants performed a recognition test on the images.

This design has two important features. First, because the targets were not part of the background images, the target detection task was separate from the image-encoding task. Second, because background images were presented at fixed times relative to the targets, we could examine the effects of the targets on memory for images presented with the targets as well as for images presented before or after them. The interference and facilitation hypotheses predict opposite effects of the appearance of targets on later memory for concurrently presented images: These images could be remembered more poorly than images encoded when distractors appeared (interference), or they could be remembered better than images encoded when distractors appeared (facilitation). In addition, increasing attention to the targets could have long-lasting or even retroactive effects on background image processing. The appearance of occasional targets could interfere with the processing of subsequent as well as concurrent background scenes, or it could increase levels of arousal, perhaps facilitating memory for the preceding images (Anderson, Wais, & Gabrieli, 2006).

Overview of the experiments

Several experiments were performed, first, to evaluate how the appearance of targets in one task influences performance on an image-encoding task, second, to evaluate the role of attention in this relationship, and third, to provide boundary conditions for this relationship. Experiment 1 showed that when scenes were presented at the same time that a visual feature-oddball target occurred they were later better remembered than scenes presented before or after the target. Experiment 2 demonstrated that this effect is not modality specific, and that detecting auditory targets can facilitate image encoding. Because increasing attention to the target appears to boost encoding of the concurrently presented background image we refer to this phenomenon as the Attentional Boost Effect. Experiments 3 and 4 examined the “attention” element of the Attentional Boost Effect. The data from Experiment 3 showed that the Attentional Boost Effect depends on attending to the target detection task and cannot be attributed to the perceptual saliency of the odd-featured targets. Experiment 4 extended the Attentional Boost Effect to detection tasks that require identifying color-shape conjunctions, which impose significant attentional demands on the task (Treisman & Gelade, 1980). Finally, Experiment 5 presents an important boundary condition for the Attentional Boost Effect, showing that it is eliminated when the target detection task requires an arbitrary stimulus-response mapping. We suggest that the appearance of occasional targets in a stream of distractors triggers a temporal orienting response to the moment in time that the target appears. The orienting response opens an attentional “gate” that facilitates processing in the concurrent secondary image-encoding task.

Experiment 1

Experiment 1 examined the relationship between target detection and encoding a concurrently presented image. Participants encoded scenes while they performed a simple detection task that was irrelevant to the scene-encoding task. For the detection task, participants pressed the spacebar whenever the fixation point was an infrequent white square (target) rather than a black square (distractor). The experiment was run twice with two separate groups of participants. In both versions the presentation rate was 500 ms per item; however, the scenes lasted the entire 500 ms in one version (Experiment 1a) and 100 ms (followed by a 400-ms blank screen) in the other (Experiment 1b) [footnote 2].

Methods

Participants

Twenty-two people (18–24 years old) participated in these experiments. Twelve participated in Experiment 1a and eight participated in Experiment 1b. Data from one additional participant in each experiment were excluded due to poor performance on the target detection task (responses were made to fewer than 80% of the targets). All participants gave informed consent and received $10 or course credit for their time.

Materials

A set of 330 color pictures of indoor and outdoor scenes was acquired from personal collections and from Aude Oliva’s online database (http://cvcl.mit.edu/database.htm). The pictures were diverse and included images of mountains, forests, beaches, farms, urban streets, skyscrapers, and animals. None of the pictures contained text or were left-right symmetrical. The pictures were randomly assigned to be old scenes, which were shown in the encoding task (n = 130), to be novel scene foils for the recognition task (n = 130), and to be used as filler scenes to separate trials during the encoding task (n = 70). Randomization was performed separately for each participant, removing systematic differences in images shown in different conditions. A separate set of 17 scenes was used for familiarizing participants with the dual-task encoding phase.

The stimuli were presented on a 19” monitor (1024×768 pixels, 75 Hz) driven by a PowerPC Macintosh computer running MATLAB R2008b and the PsychToolBox version 3 (Brainard, 1997; Pelli, 1997). Viewing distance was approximately 40 cm, but head position was not restrained.

Tasks

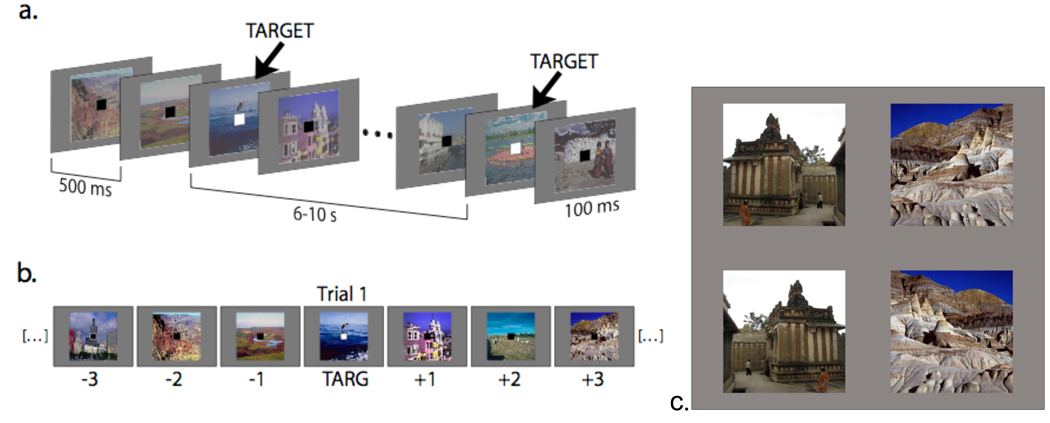

For the dual-task encoding phase, squares and scenes were continuously presented at a rate of 500 ms per image (Figure 1a). One square (1°×1°) and one scene (12.7°×12.7°) appeared simultaneously at the center of the screen (with the square in front). In Experiment 1a, the scene was presented for 500 ms and the square was presented for 100 ms (after which no square appeared over the scene for 400 ms). In Experiment 1b, the scene and square were presented for 100 ms, followed by a 400-ms blank interval. Participants were asked to press the spacebar as quickly as possible whenever they saw a white square (target) and to make no response whenever a black square (distractor) appeared. Participants were instructed to remember all of the scenes for a later recognition test, but were not informed of the exact nature of this test.
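Stimulus sizes above are given in degrees of visual angle. As a rough worked example, the physical size that subtends a given angle at the nominal 40-cm viewing distance is 2·d·tan(θ/2). The sketch below assumes the stimuli were centred on the line of sight and that the 40-cm distance held exactly, which it need not have, since head position was not fixed. The function name is illustrative, and converting to pixels would also require the screen's physical width, which is not reported.

```python
import math

# Approximate viewing distance from the Materials section; head position was not
# fixed, so the values below are nominal.
VIEWING_DISTANCE_CM = 40.0


def visual_angle_to_cm(angle_deg: float, distance_cm: float = VIEWING_DISTANCE_CM) -> float:
    """Physical extent (cm) of a stimulus centred on the line of sight that subtends angle_deg."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


square_cm = visual_angle_to_cm(1.0)   # fixation square, 1 x 1 deg
scene_cm = visual_angle_to_cm(12.7)   # background scene, 12.7 x 12.7 deg
print(f"square ~ {square_cm:.2f} cm, scene ~ {scene_cm:.2f} cm")
```

Under these assumptions the square is roughly 0.7 cm wide and each scene roughly 8.9 cm wide on the screen.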

Figure 1.

Dual-task encoding design. (a) In the first part of the experiment, participants were shown a long sequence of scenes and asked to memorize them for a later memory test. They were also told to monitor a square that was briefly presented in the center of each scene and to press the spacebar when it was white instead of black (Experiments 1a & 1b). (b) Scenes were presented at fixed serial positions relative to the target. (c) An example of the recognition memory test: Participants picked the image that exactly matched what they had seen earlier.

One hundred targets were presented in the dual-task encoding phase of the experiment, which was divided into 10 blocks of 10 targets. The temporal relationship between the presentation of a given scene and the target was held constant by randomly assigning each of the 130 old scenes to one of 13 serial positions around the target (randomization was performed separately for each participant). There were 10 unique scenes per position. The square at fixation was black in all positions except for the seventh position (the target position), which had white squares. Thus, a trial series consisted of six distractor displays, a target display, and six additional distractor displays. The 10 background scenes assigned to the seventh position were always presented with the white-square target.
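The per-participant assignment of old scenes to serial positions can be sketched as follows. This is an illustrative reconstruction, not the software used in the study: the helper names (`assign_positions`, `square_colour`) and the scene identifiers are hypothetical. It only encodes the constraints stated in the text: 130 old scenes, 13 positions, 10 scenes per position, and a white square only at position 7.

```python
import random

N_POSITIONS = 13
SCENES_PER_POSITION = 10
TARGET_POSITION = 7  # 1-based; the only position shown with a white square


def assign_positions(old_scenes, rng):
    """Randomly split the old scenes into 13 serial positions of 10 scenes each."""
    if len(old_scenes) != N_POSITIONS * SCENES_PER_POSITION:
        raise ValueError("expected 130 old scenes")
    shuffled = list(old_scenes)
    rng.shuffle(shuffled)  # a fresh random assignment for each participant
    return {
        pos: shuffled[(pos - 1) * SCENES_PER_POSITION: pos * SCENES_PER_POSITION]
        for pos in range(1, N_POSITIONS + 1)
    }


def square_colour(position):
    """Colour of the fixation square at a given serial position of a trial series."""
    return "white" if position == TARGET_POSITION else "black"


assignment = assign_positions([f"scene_{i:03d}" for i in range(130)], random.Random(1))
series_colours = [square_colour(p) for p in range(1, N_POSITIONS + 1)]
```

Each trial series therefore consists of six black-square displays, one white-square display, and six more black-square displays. Changing the constants (for example, 7 positions with the target at position 4) would describe other designs in the paper.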

To reduce the temporal regularity with which the target (white square) was presented, zero to eight filler scenes (all presented with the black square) separated successive trial series (e.g., appearing between serial position 13 and serial position 1). The number of filler scenes between successive trial series was randomly determined at the beginning of the experiment. Filler scenes were randomly selected to appear in the filler positions until all the filler scenes had been presented. Once the filler scenes had been exhausted, they were again randomly selected with the constraint that no scene was immediately repeated. Including the filler displays, there were approximately 170 continuously presented displays per block, though the exact number varied due to randomization of the number of filler displays.
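The filler procedure can be sketched as drawing filler scenes without replacement, reshuffling once the set is exhausted, and never returning the same scene twice in a row. The text does not say how the no-immediate-repeat constraint was enforced. This sketch assumes a swap at the reshuffle boundary. `FillerSampler` and `filler_gap_lengths` are hypothetical names.

```python
import random


class FillerSampler:
    """Deals filler scenes in random order without replacement, reshuffles once the
    set is exhausted, and never returns the same scene twice in a row (assumed rule)."""

    def __init__(self, fillers, rng):
        self.fillers = list(fillers)
        self.rng = rng
        self.pool = []
        self.last = None

    def draw(self):
        if not self.pool:
            self.pool = self.fillers[:]
            self.rng.shuffle(self.pool)
            # Scenes are popped from the end of the pool; if the first scene of the
            # new pass equals the last scene shown, move it to the other end.
            if len(self.pool) > 1 and self.pool[-1] == self.last:
                self.pool[0], self.pool[-1] = self.pool[-1], self.pool[0]
        self.last = self.pool.pop()
        return self.last


def filler_gap_lengths(n_gaps, rng, max_fillers=8):
    """Number of filler displays (0 to 8 inclusive) between successive trial series."""
    return [rng.randint(0, max_fillers) for _ in range(n_gaps)]


rng = random.Random(7)
sampler = FillerSampler([f"filler_{i:02d}" for i in range(70)], rng)
gaps = filler_gap_lengths(10, rng)
fillers_per_gap = [[sampler.draw() for _ in range(n)] for n in gaps]
```

With 10 trial series of 13 displays and an average of 4 fillers per gap, a block has about 130 + 40 = 170 displays. This matches the approximate count given above.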

To ensure that performance on the memory task was above chance [footnote 3], the scenes were presented 10 times over the 10 blocks of the dual-task encoding phase. Each old scene was presented once per block. Across blocks, although each scene kept its assigned position relative to the target, the order of the scenes was randomized: Each time a scene was presented, it could appear after any of the 10 scenes assigned to the previous serial position and before any of the 10 scenes assigned to the next serial position. Participants were allowed to take a break after each block.
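One way to satisfy these constraints is to reshuffle each position's 10 scenes at the start of every block and let the k-th trial series take the k-th scene of every position. Each old scene then appears exactly once per block at its fixed serial position, and neighbouring scenes vary from block to block. The sketch below assumes this approach, but the text does not specify the algorithm. `block_order` is a hypothetical helper, and filler displays, which would be inserted between trial series, are omitted.

```python
import random


def block_order(assignment, rng):
    """Build one block's trial series from a fixed position -> scenes assignment.

    Each position's scenes are reshuffled, and the k-th trial series takes the
    k-th scene of every position, so every old scene appears exactly once per
    block at its assigned serial position.
    """
    positions = sorted(assignment)
    shuffled = {}
    for pos in positions:
        scenes = list(assignment[pos])
        rng.shuffle(scenes)
        shuffled[pos] = scenes
    n_series = len(shuffled[positions[0]])
    return [[shuffled[pos][k] for pos in positions] for k in range(n_series)]


rng = random.Random(0)
# Toy assignment: 13 positions x 10 scenes (identifiers are illustrative).
assignment = {pos: [f"p{pos:02d}_s{i}" for i in range(10)] for pos in range(1, 14)}
blocks = [block_order(assignment, rng) for _ in range(10)]
```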

Approximately two minutes after completing the dual-task encoding phase, participants performed a four-alternative forced-choice (4AFC) recognition test on the scenes (Figure 1c). Each trial consisted of four alternatives in different quadrants of the screen: an old scene, a novel scene, and mirror-reversed versions of the old and novel scenes (each flipped about its vertical axis). The 4AFC procedure measured scene identity memory (picking either the old image or its mirror-reversed counterpart, but not either of the other two) and scene orientation memory (picking the correct orientation on trials with a correct identity response). The mirror-reversed counterpart of an image was always presented in an adjacent location. The center of each image was 16° from the center of the screen. The old scenes were tested in a random order and were randomly paired with the novel scenes. Participants were instructed to select the exact picture that had been shown to them during the dual-task encoding phase by pressing the key on the number pad (1, 2, 4, or 5) that corresponded to the location of the image they chose (lower left, lower right, upper left, and upper right, respectively). Participants were given unlimited time to respond.
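The layout constraint can be made concrete with a small sketch. Here, "adjacent" is read as sharing an edge, so the two diagonal quadrant pairs are excluded. If the old image and its mirror image occupy one edge-sharing pair, the remaining two quadrants also share an edge and hold the novel pair. `layout_4afc` and `chosen_quadrant` are hypothetical helpers. Only the key-to-quadrant mapping comes from the text.

```python
import random

# Number-pad key for each screen quadrant, as given in the Methods.
KEY_FOR_QUADRANT = {"lower_left": 1, "lower_right": 2, "upper_left": 4, "upper_right": 5}

# Quadrant pairs that share an edge; diagonal pairs are excluded.
ADJACENT_PAIRS = [
    ("lower_left", "lower_right"),
    ("upper_left", "upper_right"),
    ("lower_left", "upper_left"),
    ("lower_right", "upper_right"),
]


def layout_4afc(old_scene, novel_scene, rng):
    """Return {quadrant: (scene, is_mirrored)} with each image next to its mirror image."""
    old_pair = list(rng.choice(ADJACENT_PAIRS))
    novel_pair = [q for q in KEY_FOR_QUADRANT if q not in old_pair]  # also edge-sharing
    rng.shuffle(old_pair)
    rng.shuffle(novel_pair)
    return {
        old_pair[0]: (old_scene, False),
        old_pair[1]: (old_scene, True),
        novel_pair[0]: (novel_scene, False),
        novel_pair[1]: (novel_scene, True),
    }


def chosen_quadrant(key):
    """Map a number-pad key press (1, 2, 4, or 5) back to the quadrant it selects."""
    return {v: k for k, v in KEY_FOR_QUADRANT.items()}[key]


layout = layout_4afc("scene_017", "scene_204", random.Random(2))
response = layout[chosen_quadrant(5)]  # (scene, is_mirrored) shown in the upper right
```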

Results and Discussion

Phase 1: Dual-task encoding

Participants responded to almost all of the white square targets (Experiment 1a: mean accuracy = 98.4%, SD = 1.7, mean response time = 447 ms, SD = 58; Experiment 1b: mean accuracy = 93.9%, SD = 5.2, mean response time = 474 ms, SD = 54) and false alarms were made on few trials (Experiment 1a: mean false alarm rate = 3.67%, SD = 5.69; Experiment 1b: mean false alarm rate = 2.12%, SD = 3.31). However, participants in Experiment 1a detected reliably more targets than did participants in Experiment 1b, F(1, 18) = 8.18, p = .01, perhaps because there were fewer irrelevant visual transients (onsets and offsets of the images). There were no reliable differences in response time, F(1, 18) = 1.12, p = .30.
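The detection measures reported here (hit rate, false-alarm rate, response time) and the exclusion rule from the Participants section (responses to fewer than 80% of targets) can be summarized per participant as follows. The sketch assumes that each spacebar press has already been attributed to a single display. The paper does not describe the response window used for this attribution. It reports the median correct-response time. Experiment 1 reports mean response times, so a mean could be substituted. `Display` and `detection_summary` are hypothetical names.

```python
import statistics
from typing import NamedTuple, Optional


class Display(NamedTuple):
    is_target: bool          # white square (Exp. 1) or target tone (Exp. 2)
    responded: bool          # spacebar press attributed to this display (assumed)
    rt_ms: Optional[float]   # response time if responded, else None


def detection_summary(displays, min_hit_rate=0.80):
    targets = [d for d in displays if d.is_target]
    distractors = [d for d in displays if not d.is_target]
    hit_rts = [d.rt_ms for d in targets if d.responded]
    hit_rate = len(hit_rts) / len(targets)
    false_alarm_rate = sum(d.responded for d in distractors) / len(distractors)
    return {
        "hit_rate": hit_rate,
        "false_alarm_rate": false_alarm_rate,
        "median_rt_ms": statistics.median(hit_rts) if hit_rts else None,
        # Exclusion rule: responses to fewer than 80% of the targets.
        "excluded": hit_rate < min_hit_rate,
    }
```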

Phase 2: Recognition memory

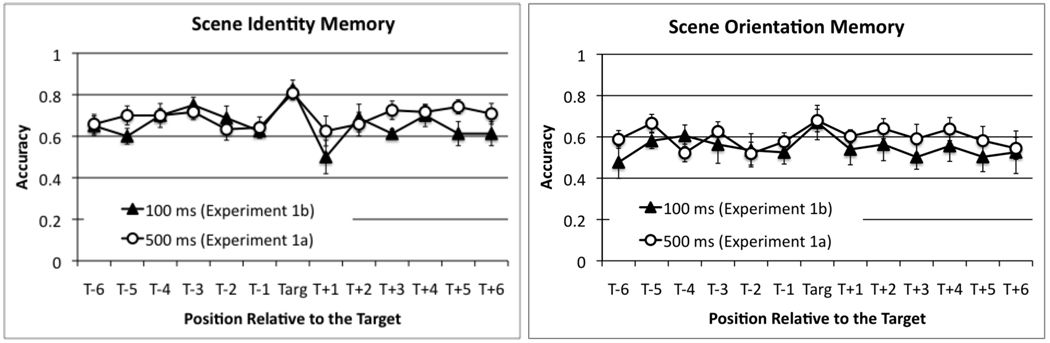

Figure 2 plots scene identity memory accuracy (the proportion of trials where the old image or its mirror-reversed counterpart was selected) and orientation memory accuracy (the proportion of trials where the old image was selected among correct scene identity responses).
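The two measures follow directly from the four possible choices on each 4AFC trial. Identity accuracy is the proportion of trials on which the old image or its mirror image was chosen. Orientation accuracy is the proportion of those identity-correct trials on which the old image itself was chosen. The sketch below computes both per serial position. The choice labels and the function name are hypothetical.

```python
from collections import defaultdict

CHOICES = {"old", "old_mirror", "novel", "novel_mirror"}
IDENTITY_CORRECT = {"old", "old_mirror"}


def recognition_scores(trials):
    """trials: iterable of (serial_position, choice).

    Returns {position: (identity_accuracy, orientation_accuracy)}, where
    orientation accuracy is None if no identity-correct trials exist.
    """
    n = defaultdict(int)
    n_identity = defaultdict(int)
    n_orientation = defaultdict(int)
    for pos, choice in trials:
        if choice not in CHOICES:
            raise ValueError(f"unknown choice: {choice!r}")
        n[pos] += 1
        if choice in IDENTITY_CORRECT:
            n_identity[pos] += 1
            if choice == "old":  # correct orientation among identity-correct trials
                n_orientation[pos] += 1
    return {
        pos: (
            n_identity[pos] / n[pos],
            n_orientation[pos] / n_identity[pos] if n_identity[pos] else None,
        )
        for pos in sorted(n)
    }
```

Because orientation accuracy is conditioned on a correct identity response, chance for it is 50%. This matches the chance comparisons reported below.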

Figure 2.

Proportion of correctly recognized scenes in Experiments 1a (N = 12; each scene lasted 500 ms) and 1b (N = 8; each scene lasted 100 ms and was followed by a 400-ms blank) as a function of the scene’s serial position relative to the target during encoding. Error bars represent ±1 S.E. of the mean.

(1) Scene identity memory

The likelihood that a scene was correctly recognized depended on the scene’s serial position during encoding. An ANOVA on position (1–13) as a within-subject factor and experiment version (1a and 1b) as a between-subject factor revealed a significant main effect of the scene’s serial position during encoding, F(12, 216) = 2.83, p < .001, ηp2 = .14, and no main effect of experiment, F(1, 18) = 1.60, p > .22, or experiment by position interaction, F(12, 216) < 1, ns. Consistent with the facilitation hypothesis, scene identity memory was more accurate for scenes encoded at the target position than at the other positions.
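Because F = (SS_effect/df_effect)/(SS_error/df_error), partial eta squared, ηp2 = SS_effect/(SS_effect + SS_error), can be recovered from a reported F and its degrees of freedom as F·df_effect/(F·df_effect + df_error). This is a standard identity rather than something stated in the paper. The short check below uses it to reproduce effect sizes reported in this section and later ones to within rounding.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


# Position effect in Experiment 1: F(12, 216) = 2.83, reported eta_p^2 = .14
print(round(partial_eta_squared(2.83, 12, 216), 3))
# Position effect in Experiment 2: F(6, 54) = 3.65, reported eta_p^2 = .288
print(round(partial_eta_squared(3.65, 6, 54), 3))
```

Small discrepancies in the third decimal are expected, because the reported F values are themselves rounded.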

To better characterize the effects of detecting occasional targets in one stimulus stream on encoding scenes presented in a second stimulus stream, a second analysis compared recognition accuracy for scenes presented in serial positions 7 (target), 6 (target-minus-one), 8 (target-plus-one), the mean of positions 1–5 (pre-target), and the mean of positions 9–13 (post-target). Because the interaction between experiment and position was not reliable, the data from the two experiments were combined. A Bonferroni-corrected alpha level of .003 was adopted to control for the large number of comparisons. Scene identity memory was better for scenes encoded when targets appeared than for scenes encoded when distractors appeared, smallest t(19) = 3.86, p = .001, d = 1.258. None of the other pairwise comparisons reached significance, all ps > .05, indicating that identity memory for scenes presented with distractors did not vary across encoding positions.
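The five conditions in this analysis are averages over fixed sets of serial positions. A minimal sketch of the binning for one participant follows. It assumes accuracy has already been computed per position (1–13). The bin labels follow the text, and the helper name is hypothetical.

```python
BINS = {
    "pre-target": tuple(range(1, 6)),     # mean of positions 1-5
    "target-minus-one": (6,),
    "target": (7,),
    "target-plus-one": (8,),
    "post-target": tuple(range(9, 14)),   # mean of positions 9-13
}


def bin_positions(acc_by_position):
    """acc_by_position: {serial position (1-13): accuracy} for one participant."""
    return {
        name: sum(acc_by_position[p] for p in positions) / len(positions)
        for name, positions in BINS.items()
    }
```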

Analyses of response times revealed no reliable effects of encoding position or experiment, and encoding position did not interact with experiment, largest F(1, 18) = 2.03, p = .172, for the main effect of experiment. This was true for all experiments, and because the recognition task was unspeeded and recognition accuracy was far from ceiling, response times will not be mentioned further.

(2) Scene Orientation memory

Orientation memory was slightly but significantly better than chance (M = 55.5%, SD = 3.08% in Experiment 1a and M = 60.4%, SD = 6.28% in Experiment 1b), both ps < .001. An ANOVA with position (1–13) and experiment (1a and 1b) as factors indicated that the main effect of position was not significant, F(12, 216) = 1.26, p > .243. There was a marginal trend for better orientation memory in Experiment 1b than in Experiment 1a, F(1, 18) = 3.94, p = .063, ηp2 = .179. However, the interaction between position and experiment was not significant, F(12, 216) < 1, ns. Near-chance performance for orientation memory appears to have produced considerable noise in the data, which may explain the failure to find a reliable effect of serial position. Indeed, post hoc analyses suggested that the relationship between orientation memory and serial position was consistent with that observed for scene identity memory. An ANOVA on five serial positions (pre-target, target-minus-one, target, target-plus-one, and post-target) and experiment (1a and 1b) revealed a reliable main effect of position, F(4, 72) = 2.56, p < .046, ηp2 = .116, but no main effect of experiment, F(1, 18) = 2.73, p > .116, and no interaction, F(4, 72) < 1, ns. Orientation memory for scenes encoded in the target position was significantly better than that for scenes encoded in the pre-target, target-minus-one, and post-target positions, smallest t(19) = 2.11, p < .049, d = 0.67, and was marginally better than that for scenes in the target-plus-one position, t(19) = 1.77, p < .093, d = 0.57. Orientation memory did not significantly vary across the non-target positions, largest t(19) = .524, p > .606. Because orientation memory performance was poor in this and the following experiments, we mention it only briefly in subsequent experiments. Detailed orientation memory data appear in the Appendix.

These data clearly show that scenes presented when targets also appeared were better recognized than scenes presented when distractors appeared. Therefore, although participants must engage in additional processing when targets appear (Duncan, 1980; Duncan et al., 1994), there was no evidence that encoding scenes presented in a second stimulus stream suffered. In addition, the effects of occasional targets on scene encoding were restricted to the concurrently presented scene.

Although several theories suggest that the appearance of occasional targets may facilitate perceptual processing, the precise timing of these effects was not clear a priori. For example, emotionally arousing images facilitate long-term memory for neutral images presented shortly before them, as well as for the arousing images themselves (Anderson et al., 2006). In addition, any advantage for scenes presented with targets could have been delayed or extended over time. However, there was little evidence that attending to targets affected memory for subsequent scenes, and no evidence of retrospective facilitation of scenes preceding the target position. The data from Experiment 1 suggest that the facilitative effects of detecting targets on encoding background images are limited to a relatively brief period of time.

Experiment 2 – Auditory Targets

Because the squares were presented over the scenes in Experiment 1, it is possible that the improvement in recognition memory for images encoded when targets appeared was due to the spread of visual attention from the targets in the center of the image. Furthermore, the images and the targets were both in the visual modality, leaving open the possibility that the effect of targets on image processing is modality specific. In Experiment 2, participants performed an auditory target detection task analogous to the visual target detection task used in Experiment 1. If the memory advantage for images encoded with targets is modality specific or is due to the spread of attention to portions of the image that surround the targets, then it should not be observed with auditory targets. If the memory advantage is instead due to the detection of an occasional task-relevant event, then scenes presented with auditory targets should be better recognized than scenes presented with auditory distractors. Experiment 2 also provided additional insight into the timing and nature of the facilitation effect observed in Experiment 1 by masking the scenes after 200 ms. As in Experiment 1b, this prevented multiple fixations of the scene; however, masking also reduced the amount of time that the images could be perceptually processed.

Methods

Participants

Ten participants (18–21 years old) were recruited for this experiment. All participants gave informed consent and were compensated for their time.

Materials

This experiment used the materials and apparatus from Experiment 1a. Auditory tones were presented over headphones.

Task

For the dual-task encoding phase, participants monitored auditory tones (100 ms in duration, followed by a 400-ms silent interval) and pressed the spacebar whenever they heard a target tone instead of a distractor tone. Low tones (200 Hz) were targets and high tones (400 Hz) were distractors for half the participants; this assignment was reversed for the other half. Scenes were presented for 200 ms and then masked for 300 ms to limit the number of fixations. The mask consisted of a 256×256 pixel region of a novel scene that had been scrambled by dividing it into 20×20 pixel sections and then shuffling them. Finally, each trial series consisted of seven serial positions relative to the target, with targets presented at position 4. There were a total of 100 targets in the dual-task encoding phase, which was divided into 10 blocks of 10 targets. The four-alternative forced-choice recognition test used in Experiment 1 was administered approximately two minutes after the dual-task encoding phase.
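The scrambled mask can be sketched as a tile shuffle over a square image stored as a list of pixel rows, where pixels may be grey levels or RGB tuples. Note that 256 is not a multiple of 20, and the text does not say how the leftover 16-pixel strip was handled. This sketch assumes that only the 12 × 12 grid of full sections (240 × 240 pixels) is kept and the remainder is dropped. `scramble_tiles` is a hypothetical name.

```python
import random

TILE_PX = 20     # section size given in the text
REGION_PX = 256  # mask region size given in the text


def scramble_tiles(image, tile=TILE_PX, rng=None):
    """Shuffle the full tile x tile sections of a square image (list of pixel rows).

    Assumption: only full sections are kept; for a 256-px region this gives a
    12 x 12 grid (240 x 240 px) and drops the leftover 16-px strip.
    """
    rng = rng or random.Random()
    n = len(image) // tile   # full sections per side (12 for a 256-px region)
    size = n * tile          # side length of the scrambled output (240 px)
    tiles = [
        [row[c * tile:(c + 1) * tile] for row in image[r * tile:(r + 1) * tile]]
        for r in range(n)
        for c in range(n)
    ]
    rng.shuffle(tiles)
    out = [[None] * size for _ in range(size)]
    for idx, section in enumerate(tiles):
        r, c = divmod(idx, n)  # destination grid cell for this section
        for dy, section_row in enumerate(section):
            out[r * tile + dy][c * tile:(c + 1) * tile] = section_row
    return out


# Placeholder region; in practice this would be a 256 x 256 crop of a novel scene.
region = [[(x, y, 0) for x in range(REGION_PX)] for y in range(REGION_PX)]
mask = scramble_tiles(region, rng=random.Random(4))
```

Shuffling whole sections preserves local colour and texture statistics while destroying the global layout of the scene.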

Results and Discussion

Phase 1: Dual-task encoding

Performance in the auditory detection task was high, with participants responding to a mean of 98.5% of the target beeps (SD = 1.4%). False alarms were made on a mean of 0.5% of the trials (SD = 0.527%). The mean of the participants’ median response times was 333 ms (SD = 54.4 ms).

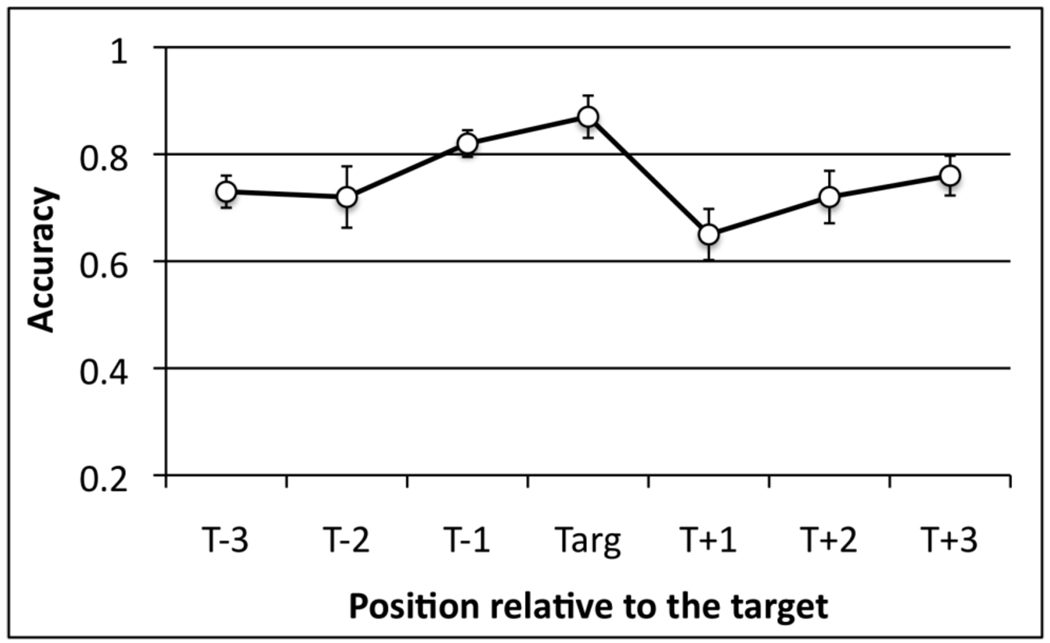

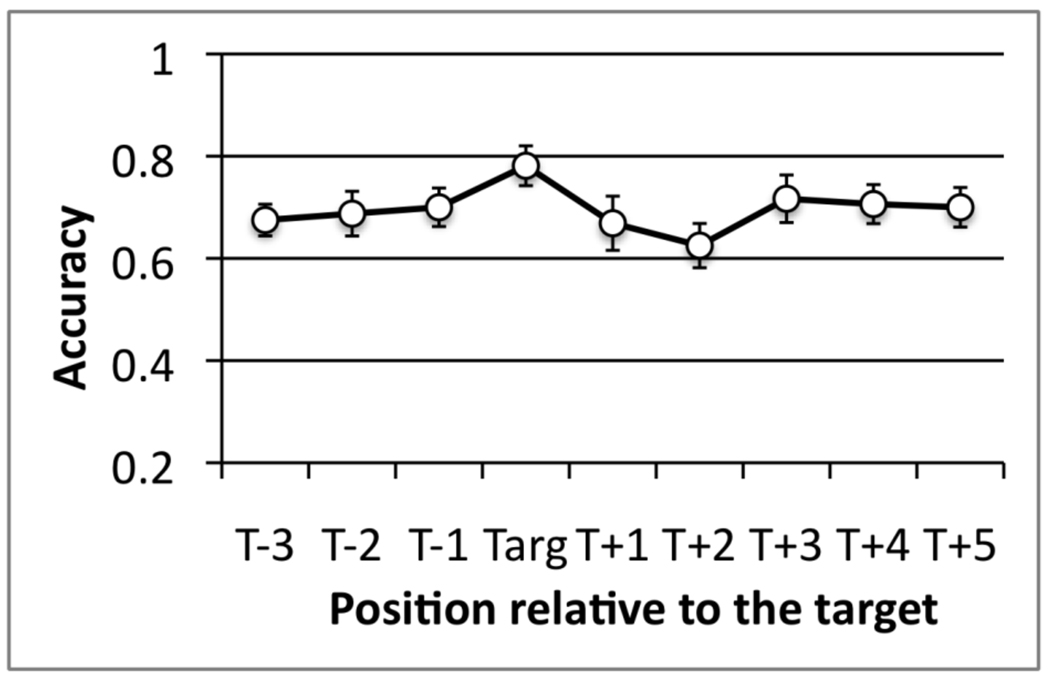

Phase 2: Scene recognition memory (Figure 3)

Figure 3.

Scene identity memory accuracy in Experiment 2 as a function of the scene’s serial position during encoding. Error bars represent ±1 S.E. of the mean.

The serial position of the scene during encoding significantly affected scene identity memory, F(6, 54) = 3.65, p < .004, ηp2 = .288. Because there were no reliable differences in scene identity memory among scenes presented in non-target positions, the target-minus-one and target-plus-one positions were collapsed into the pre- and post-target conditions, respectively, in planned comparisons. Scene identity memory was better for scenes encoded in the target position than for scenes encoded in the pre-target and post-target positions, smallest t(9) = 2.35, p = .043, d = 1.02. As in Experiment 1, identity memory for scenes in pre-target positions was not reliably different from identity memory for scenes in post-target positions, t(9) = 1.68, p = .127.

Scene orientation memory (see Appendix) did not vary across serial positions during encoding, F(6, 54) = 1.20, p > .32. Moreover, overall orientation memory was at chance (mean across positions = 50%, S.E. = 2.6%), and it was not reliably greater than chance for any position, all ps > .25. Because orientation memory performance was this poor, these data are not interpretable and will not be discussed further.

As in the previous experiment, scenes encoded when an occasional auditory target was presented were better discriminated from novel scenes than were scenes encoded when distractors were presented. This advantage was observed for scenes that were presented for 200 ms and then masked, limiting additional perceptual processing. In short, the data replicated Experiment 1: Identity memory was better for scenes presented with targets than for scenes presented with distractors. This suggests that the memory advantage for images presented with targets cannot be simply attributed to the spread of attention and perceptual processing from the visual target to surrounding regions of the scene. It further indicates that increasing attention to auditory targets results in enhanced processing of concurrently presented visual images.

In some ways these data are reminiscent of recent reports of improved visual processing at the moment that a single auditory beep is presented. For example, Van der Burg, Olivers, Bronkhorst, and Theeuwes (2008) asked participants to search for a vertical bar among more than 35 slightly tilted distractor bars. The bars intermittently changed color (e.g., from green to red), and sometimes the target bar changed color at the same time that an auditory beep was presented. Under these conditions search times dramatically decreased, suggesting that the beep produced a pop-out-like effect for the target. Other work has shown that auditory beeps alleviate the attentional blink (Olivers & Van der Burg, 2008) and increase the perceived duration of visual events (Vroomen & de Gelder, 2000). The integration of visual and auditory events may therefore partly explain the memory benefit for scenes encoded with auditory targets. However, it is important to note that auditory beeps were presented with all of the scenes in this experiment; the distinction made here is whether the beep was a target or a distractor. It is not clear whether the auditory-visual integration effects described in previous studies would preferentially occur for target beeps over distractor beeps.

The data from the first two experiments were remarkably consistent: Scenes encoded when an occasional target appears in an unrelated stream are better remembered than those encoded when a distractor appears [footnote 4]. This is observed even though attention to the detection task must increase when targets appear (Duncan, 1980; Duncan et al., 1994). Rather than interfering with scene encoding, increasing attention to targets appears to boost memory for images presented at that time. For the remainder of the paper we will refer to the difference in performance for images presented with targets and images presented with distractors as the Attentional Boost Effect. The mechanisms that underlie this effect are undetermined, but a first step in identifying them is to examine its constraints as well as the conditions under which it is observed. The remainder of the paper focuses on these two issues. The next two experiments examined the “attention” element of the Attentional Boost Effect. The final experiment examined the interaction between attentional boost and attentional competition in dual-task performance.

Experiment 3 – Ignore the Target Detection Task

We have suggested that enhanced memory for scenes presented with targets in the previous experiments resulted from changes in attention associated with the detection of an occasional target. This assumption is based partly on previous studies suggesting that the detection of a target requires more attention than the rejection of a distractor (Duncan, 1980; Duncan et al., 1994; Raymond et al., 1992). Pilot data also supported this claim, showing that simple feature-detection tasks are sufficient to produce a relatively long attentional dwell time (see Footnote 1). Nonetheless, detecting an odd-colored visual target or an odd-pitched auditory target is a relatively simple task. In addition, the odd-featured targets in Experiments 1 and 2 were perceptually salient (e.g., the white square is brighter than the black squares). Therefore, to more directly examine the role of attention in the Attentional Boost Effect, we repeated Experiment 1a but instructed participants to ignore the squares and focus on the scenes. If the boost seen in Experiment 1a was driven by the perceptual saliency of an odd-featured target square, then the effect should still be observed when the white squares are not task-relevant. Alternatively, if the boost was triggered by a change in the attentional demands of the target detection task, then it should be eliminated in Experiment 3.

Methods

Participants

Sixteen participants (18–30 years old) completed this experiment.

Procedure

The experiment was identical to Experiment 1a except that participants were instructed to ignore the squares in the middle of the scene. As in Experiment 1a, participants were instructed to encode the scenes for a later memory task.

Results and Discussion

Because they did not perform the detection task, participants in Experiment 3 did not generate data during the scene-encoding phase. During the recognition phase, scene identity memory (Figure 4) did not vary across encoding positions, F(12, 180) < 1, ns. Critically, there was no evidence that scenes encoded in the “target” position (i.e., the feature-oddball position) were better recognized than scenes encoded in the other positions, all ps > .05. In addition, orientation memory (see Appendix) was at chance (48.8%, S.E. = 1.4%), and it did not reliably vary across positions, F(12, 180) = 1.34, p > .20.

Figure 4.

Scene identity memory as a function of the serial position of the scene during single-task encoding in Experiment 3 and during dual-task encoding in Experiment 1a (replotted from Figure 2 to facilitate comparison). Serial positions are relative to the feature-oddball white square (WS or W). Error bars represent ±1 S.E. of the mean.

To further evaluate the importance of target detection in the Attentional Boost Effect, we directly compared scene identity memory in Experiment 1a and Experiment 3. Because there were no differences among the positions before the target, or among the positions after the target, in Experiment 1a, we pooled data across positions 1–6 (pre-target) and positions 8–13 (post-target). An ANOVA with attentional demand (dual-task in Experiment 1a vs. single-task in Experiment 3) as a between-subjects factor and position (pre-target, target, and post-target) as a within-subject factor revealed a significant main effect of position, F(2, 52) = 11.28, p < .001, ηp2 = .302, and a significant interaction between attentional demand and position, F(2, 52) = 5.75, p < .006, ηp2 = .181. The interaction reflected the presence of an Attentional Boost Effect when the squares were attended, F(2, 22) = 11.58, p < .001, ηp2 = .513, and its absence when the squares were not attended, F(2, 30) = 1.54, p > .23. The main effect of attentional demand was not significant, F(1, 26) = 1.42, p > .25.
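The pooling step described above can be sketched in Python. This is a minimal illustration, not the authors' analysis code: it assumes each participant's scene identity accuracy is stored as a list of 13 proportions ordered by serial position (position 7 being the target position), and the function name `pool_positions` is hypothetical.

```python
from statistics import mean

N_POSITIONS = 13      # serial positions in Experiments 1a and 3
TARGET_POSITION = 7   # 1-indexed position of the (feature-oddball) target

def pool_positions(accuracy_by_position):
    """Collapse per-position accuracy into pre-target, target, and post-target cells.

    accuracy_by_position: sequence of 13 proportions; index 0 is serial position 1.
    """
    if len(accuracy_by_position) != N_POSITIONS:
        raise ValueError(f"expected {N_POSITIONS} serial positions")
    t = TARGET_POSITION - 1  # convert to a 0-based index
    return {
        "pre": mean(accuracy_by_position[:t]),         # positions 1-6
        "target": accuracy_by_position[t],             # position 7
        "post": mean(accuracy_by_position[t + 1:]),    # positions 8-13
    }
```

Applying the function to every participant yields the three within-subject cells (pre-target, target, post-target) that enter the mixed ANOVA with attentional demand as the between-subjects factor.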

If the Attentional Boost Effect were driven by the perceptual saliency of an odd-colored square, then it should have been observed in Experiment 3. Because it was not, the data suggest that the memory boost for scenes in the target position in Experiment 1 was driven by attention to the square task. The data also provide some evidence that detecting targets in the square task in Experiment 1 overcame the effects of dual-task interference. Whereas there was no evidence of dual-task interference for scene memory in the target position, t(26) = 0.45, p > .65, accuracy for non-target positions was marginally higher in the single-task condition (Experiment 3, mean = 76.3%, S.E. = 3%) than in the dual-task condition (Experiment 1a, mean = 68.5%, S.E. = 2.2%), t(26) = 1.99, p < .057.

Experiment 4 – Conjunction Target

To provide additional evidence that the Attentional Boost Effect occurs when the attentional demands of the target detection task briefly increase, Experiment 4 used a color-shape conjunction task. Participants were asked to press the spacebar whenever a red X was presented at the center of the display. The distractors were other red letters whose shapes resembled the X (Y, V, K, and Z) as well as Xs in other colors. To correctly detect the red-X target, participants needed to bind color and shape together, a process that requires focused attention (Treisman & Gelade, 1980). Because the letter X and the color red were repeated in the distractors, the target was not perceptually salient. Nonetheless, orienting attention to the occasional target should still have led to a transient increase of attention at that moment in time. If so, the Attentional Boost Effect should be present when participants search for targets defined by color-shape conjunctions. Alternatively, if the Attentional Boost Effect is restricted to simple oddball detection tasks, it should be eliminated in Experiment 4.

Methods

Participants

There were 16 participants (18–23 years old).

Materials

The same materials used in Experiment 1a were used in this experiment.

Task

For the dual-task encoding phase participants monitored colored letters for a red-X (conjunction search). Whenever a scene was presented, any of four letters (“X”, “Y”, “Z”, and “V”) could appear in any of four colors (red, blue, green and yellow) in a gray box (1.25°×1.25°) over the scene. The letters (.67° tall) were presented for 400 ms with a 100 ms inter-stimulus interval. Participants pressed the space bar whenever a red-X (target) appeared and made no response when any other color-letter combination appeared (distractors). Scenes were presented for 500 ms (0 ms ISI). There were nine serial positions and targets were presented at position 4. There were 100 targets in the dual-task encoding phase.
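The display timing above can be made concrete with a short sketch that builds one nine-position sequence. It rests on two assumptions not stated in the text: that each letter's onset coincides with scene onset (a 400 ms letter plus a 100 ms blank fills each 500 ms scene), and that distractors are drawn uniformly from the 15 letter-color combinations other than the red X. Names such as `make_trial` are illustrative only.

```python
import random
from itertools import product

LETTERS = ["X", "Y", "Z", "V"]
COLORS = ["red", "blue", "green", "yellow"]
TARGET = ("X", "red")
N_POSITIONS = 9
TARGET_POSITION = 4   # 1-indexed
SCENE_MS = 500        # scene duration, 0 ms ISI between scenes
LETTER_MS = 400       # letter duration; a 100 ms blank follows

# Assumption: every letter-color pairing except the red X can serve as a distractor
DISTRACTORS = [combo for combo in product(LETTERS, COLORS) if combo != TARGET]

def make_trial(rng):
    """Return one sequence of letter events locked to consecutive 500 ms scenes."""
    trial = []
    for pos in range(1, N_POSITIONS + 1):
        is_target = pos == TARGET_POSITION
        letter, color = TARGET if is_target else rng.choice(DISTRACTORS)
        onset = (pos - 1) * SCENE_MS  # assumed: letter onset = scene onset
        trial.append({
            "position": pos,
            "letter": letter,
            "color": color,
            "is_target": is_target,
            "letter_on_ms": onset,
            "letter_off_ms": onset + LETTER_MS,
        })
    return trial

trial = make_trial(random.Random(0))
```

Passing a seeded `random.Random` keeps sequences reproducible across participants or sessions.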

In the second phase scene memory was tested using the four-alternative forced choice test described in Experiment 1.

Results and Discussion

Phase 1: Dual-task encoding

Participants responded to a mean of 94.2% of the conjunction targets (SD = 4.4) with a mean response time of 495 ms (SD = 46.2). Responses were made to a distractor on a mean of 3.25% of the trials (SD = 2.79).

Phase 2: Recognition memory

As illustrated in Figure 5, scene identity memory varied across positions and was best for scenes that were encoded when the conjunction targets also appeared. An ANOVA on position (1–9), however, did not reveal a main effect of position, F(8, 120) = 1.57, p = .142, perhaps because this analysis was underpowered (i.e., the omnibus test spreads the target-position effect across all nine positions, including the nontarget positions that a priori would not differ from one another). Importantly, planned comparisons indicated that scene identity memory was greater at the target position than at the pre-target positions, t(15) = 2.32, p < .035, d = 0.728, and the post-target positions, t(15) = 2.23, p < .042, d = 0.64, leading to a significant effect of position in an ANOVA on position with three levels (target, pre-target, and post-target), F(2, 30) = 3.96, p < .03, ηp2 = .209. Furthermore, a follow-up analysis indicated that the magnitude of the position effect did not differ significantly between the conjunction search task and the feature oddball detection task in Experiment 1a: the interaction of experiment and position was not significant, F(2, 44) = 1.01, p > .374; the main effect of position was significant, F(2, 44) = 13.9, p < .001, ηp2 = .388; and the main effect of experiment was not significant, F(2, 44) < 1, ns. These data indicate that the Attentional Boost Effect was present when the targets were defined by the conjunction of two features (d for targets vs. distractors = .706). However, the greater difficulty of the conjunction search task relative to feature oddball detection may have reduced its magnitude, at least numerically (d for targets vs. distractors = 1.27 in Experiment 1a). We will return to this issue in Experiment 5.

Figure 5.

Scene identity memory accuracy in Experiment 4 as a function of the serial position of the scene during encoding. Error bars represent ±1 S.E. of the mean.

Orientation memory (see Appendix) showed a pattern qualitatively similar to that of scene identity memory, although the memory advantage for scenes encoded at the target position did not reach statistical significance, F(2, 30) = 2.28, p > .12.

Experiments 1, 2 and 4 demonstrated that detecting a target in one task enhances later memory for scenes that were presented at the same time as the target. We have proposed that this effect results from a boost of attention at the moment that the target occurs. Several pieces of evidence support this conjecture, suggesting that the Attentional Boost Effect is related to “attention” rather than the perceptual saliency of the target. First, this effect was eliminated when participants ignored the target detection task (Experiment 3). These data indicate that the perceptual saliency of an odd-featured item is not sufficient to facilitate memory for the concurrently presented items. Second, the effect occurred when a color-shape conjunction, rather than a simple feature, defined the targets in the primary detection task (Experiment 4). Again, these data show that targets need not be perceptually salient in order to produce the Attentional Boost Effect. They further demonstrate that memory for scenes that are concurrently presented with targets is enhanced when attention is needed to bind the features of the target into a single object (Treisman & Gelade, 1980). Finally, studies on dual-target detection costs (Duncan, 1980; Duncan et al., 1994; Raymond et al., 1992) and our own pilot data (see Footnote 1) show that attentional demands are greater during target detection than during distractor rejection, even when targets are defined by feature oddballs (see also Wolfe et al., 2003). These data are consistent with the claim that transient increases of attention to the targets lead to better memory for concurrently presented scenes. Increases of attention in one task can facilitate, rather than impair, performance on a second task.

Experiment 5 – Attentional Competition and Attentional Boost

Although the Attentional Boost Effect seems to contradict predictions from dual-task interference theories, it cannot be taken as evidence against attentional competition. Instead, the Attentional Boost Effect may reflect a tradeoff between attentional competition and attentional boost, with the latter dominating under some circumstances. If this is the case, then increasing attentional competition when targets but not distractors appear may eliminate the Attentional Boost Effect. This final experiment illustrates the dynamic tradeoff between attentional competition and attentional boost.

Two versions of the detection task differed in the type of response that was made to the odd-colored target squares. In both tasks, the targets were red squares and green squares and the distractors were black squares. In the simple-detection task, participants pressed the spacebar whenever either a red or a green square appeared. In the arbitrary-mapping task, participants pressed one key for red squares and another key for green squares. No responses were required for the distractor squares in either task. As in Experiment 1, targets in the simple-detection task should produce a transient increase in attention relative to distractors, producing an Attentional Boost Effect. In the arbitrary-mapping task, the detection of a target should also lead to a transient increase in attention. However, additional attentional processes, such as accessing the response mapping in working memory (“red is key ‘r’, green is key ‘g’”) and selecting an arbitrary response, are required for responding to the targets in this task. These demands on attention may offset any attentional boost from orienting attention to the time when the target appeared, eliminating the Attentional Boost Effect in a subsequent memory test.

Methods

Participants

Thirty-two participants (19–33 years old) took part in Experiment 5.

Materials

Fifty additional scenes were added to the same materials used in Experiment 1a, for a total of 380 distinct scenes.

Task

For the dual-task encoding phase, the targets were red squares and green squares among black distractor squares. One group of 16 participants performed a simple-detection task, pressing the spacebar whenever either a red square or green square appeared. The second group of 16 participants performed an arbitrary-mapping task, pressing the ‘r’ key whenever a red square appeared and the ‘g’ key whenever a green square appeared. Scenes were presented for 500 ms. Squares were presented for the first 100 ms that the scene was on the screen, and then erased. Trials consisted of seven encoding positions, and targets occurred at position 4. There were 10 scenes per position per target square color and 100 additional filler scenes. There were 200 targets in the dual-task encoding phase of both tasks.
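The difference between the two response rules can be expressed as a small scoring function. This is a hypothetical sketch: the key names (`"space"`, `"r"`, `"g"`) and the outcome labels, including treating a wrong-key press in the arbitrary-mapping task as an error, are assumptions rather than details reported in the text.

```python
# Hypothetical key maps for the two primary tasks in Experiment 5
SIMPLE_DETECTION = {"red": "space", "green": "space"}   # one key for either target color
ARBITRARY_MAPPING = {"red": "r", "green": "g"}          # a distinct key per target color

def score_response(square_color, key_pressed, key_map):
    """Classify the response to one square.

    square_color: "red" or "green" for targets, "black" for distractors.
    key_pressed: name of the key pressed, or None if no response was made.
    key_map: maps each target color to the key that counts as correct.
    """
    if square_color in key_map:                 # target square
        if key_pressed is None:
            return "miss"
        if key_pressed == key_map[square_color]:
            return "hit"
        return "wrong_key"                       # assumed to count as an error
    # Distractor square: no response is required, so any key press is a false alarm
    return "correct_rejection" if key_pressed is None else "false_alarm"
```

Under the simple-detection map a single key is correct for both target colors, whereas the arbitrary-mapping map requires retrieving which key belongs to which color: the extra response-selection demand the experiment was designed to introduce.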

In the second phase, scene memory was tested using the four-alternative forced-choice (4AFC) test described in Experiment 1.

Results and Discussion

Phase 1: Dual-task encoding

In Experiment 5, participants performing the simple-detection task responded to a mean of 97.9% (SD = 1.65) of targets with a mean response time of 438 ms (SD = 35). Participants in the arbitrary-mapping task accurately responded to reliably fewer targets (M = 92.3%; SD = 4.44) and made slower responses (M = 581 ms; SD = 79.4) than those in the simple-detection task, smallest t(30) = 4.75, p < .001, d = 1.68. In both tasks, participants made false alarms on a small percentage of trials (simple-detection task: M = 1.59%, SD = 2.1; arbitrary-mapping task: M = 1.25%, SD = 1.99).
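As a check on the group comparison above, the accuracy statistics can be approximately recovered from the reported means and SDs. This is a minimal sketch assuming a pooled-variance (Student's) independent-samples t test and Cohen's d computed with the pooled SD; the text does not state which formulas were used, and small discrepancies are expected because the summary statistics are rounded.

```python
from math import sqrt

def pooled_t_and_d(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (pooled variance) and Cohen's d from summary statistics."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    pooled_sd = sqrt(pooled_var)
    se_diff = pooled_sd * sqrt(1 / n1 + 1 / n2)   # standard error of the mean difference
    diff = m1 - m2
    return diff / se_diff, diff / pooled_sd, df

# Target detection accuracy (%): simple-detection vs. arbitrary-mapping group (n = 16 each)
t, d, df = pooled_t_and_d(97.9, 1.65, 16, 92.3, 4.44, 16)
print(f"t({df}) = {t:.2f}, d = {d:.2f}")  # prints: t(30) = 4.73, d = 1.67
```

The recovered values are close to the reported smallest t(30) = 4.75 and d = 1.68; the residual gap is consistent with rounding in the reported means and SDs.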

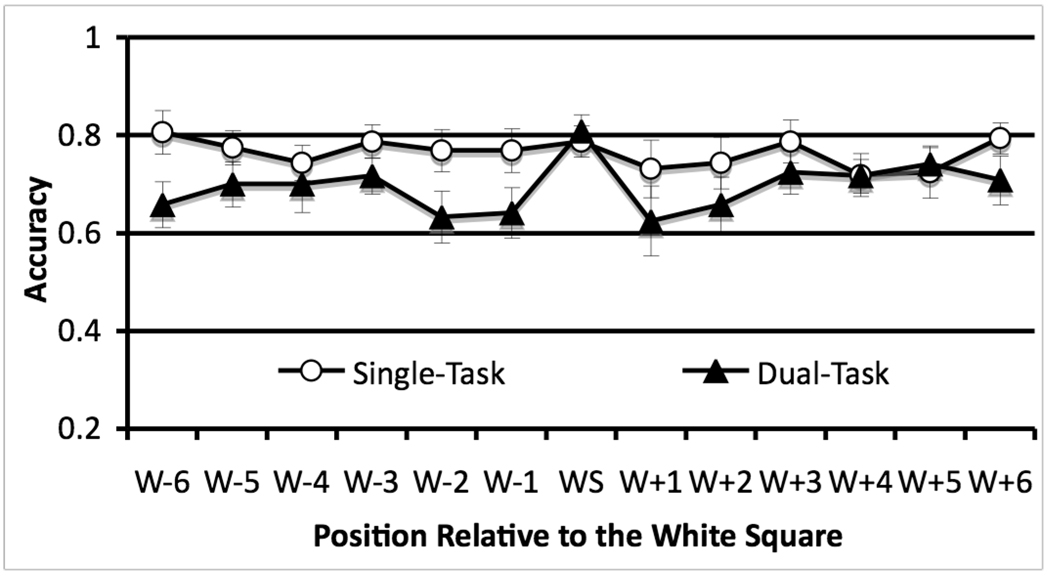

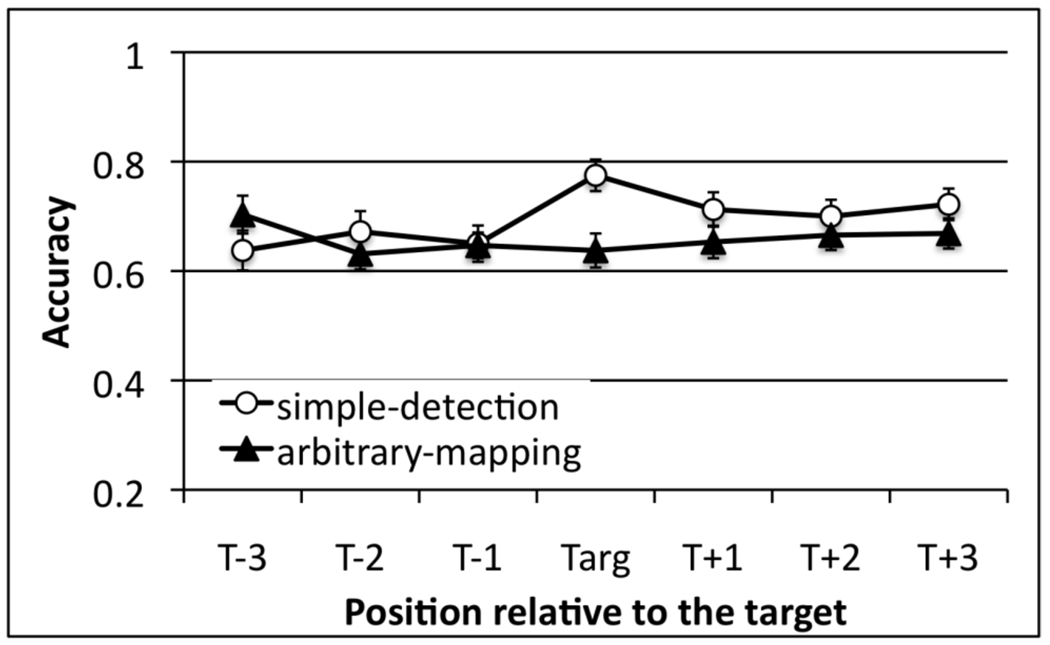

Phase 2: Scene recognition memory

As can be seen in Figure 6, the effect of position on scene identity memory depended on the task that participants performed. An ANOVA with primary task demand (simple-detection vs. arbitrary-mapping) as a between-subjects factor and encoding position (1–7) as a within-subject factor revealed a significant interaction between position and primary task demand, F(6, 180) = 2.74, p < .014, ηp2 = .084; the two main effects were not significant, F(6, 180) = 1.35, p > .23 for position, F(1, 30) = 1.93, p > .18 for task demand. The interaction resulted from the lack of a position effect in the arbitrary-mapping task, F(6, 90) < 1, ns, and the presence of a position effect in the simple-detection task, F(6, 90) = 3.43, p < .004, ηp2 = .186. Follow-up t-tests confirmed that accuracy for scenes in the target position was significantly greater than for those in the pre-target positions, t(15) = 3.23, p < .006, d = 1.03, and post-target positions, t(15) = 2.15, p < .049, d = 0.617, in the simple-detection task but not in the arbitrary-mapping task, largest t(15) = 0.61, p > .50.
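Because partial eta squared is fully determined by an F ratio and its degrees of freedom, the effect sizes reported above can be checked directly. The sketch assumes the standard definition ηp2 = SS_effect / (SS_effect + SS_error); substituting F = (SS_effect / df_effect) / (SS_error / df_error) gives ηp2 = F·df_effect / (F·df_effect + df_error).

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio: (F * df_effect) / (F * df_effect + df_error)."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)

# Values reported for Experiment 5
interaction = partial_eta_squared(2.74, 6, 180)   # position x task demand
simple_task = partial_eta_squared(3.43, 6, 90)    # position effect, simple-detection group
print(round(interaction, 3), round(simple_task, 3))  # prints: 0.084 0.186
```

The same conversion reproduces the Experiment 3 comparison values, for example F(2, 52) = 11.28 gives approximately .302.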

Figure 6.

Scene identity memory accuracy in Experiment 5 as a function of the serial position of the scene during encoding. Error bars represent ±1 S.E. of the mean.

Orientation memory performance (see Appendix) was at chance for both the simple-detection task (mean = 50.5%, S.E. = 1.4%, p > .70) and the arbitrary-mapping task (mean = 51.3%, S.E. = 1.2%, p > .20). An ANOVA indicated that there were no reliable effects of position, primary task demand, or their interaction on orientation memory (all Fs < 1, ns.).

This experiment illustrates that the Attentional Boost Effect likely reflects the combination of two attentional effects: secondary task facilitation as a consequence of increased attention due to target detection, and secondary task interference as a consequence of the increased processing demands associated with target detection. When the additional attentional demands of target detection are relatively low (e.g., in a simple detection task), the two effects sum to a net facilitation. As the additional demands of target detection increase, interference should overcome facilitation, eliminating the Attentional Boost Effect.

The claim that the increased attentional demands of the arbitrary-mapping task cancelled out the attentional boost associated with target detection may appear somewhat inconsistent with the data from Experiment 4, in which a conjunction search task produced the Attentional Boost Effect. However, these tasks differed in two critical ways. First, as is evident in the target detection response times, the arbitrary-mapping task was more difficult than the conjunction search task (mean RT = 581 ms for arbitrary mapping and 495 ms for conjunction search, t(30) = 3.76, p < .001). Greater detection task difficulty in the arbitrary-mapping task than in the conjunction search task likely led to greater competition for attentional resources when the target appeared, canceling the facilitative effects of target detection on scene encoding. Second, the arbitrary-mapping and conjunction-search tasks have different types of attentional demands. Whereas the conjunction-search task used in Experiment 4 demanded greater attention for perceptual recognition, the arbitrary-mapping task in Experiment 5 demanded greater attention for response selection or working memory. Perceptual attention and response selection are somewhat separable in cognitive tasks (Pashler, 1994). Future studies are needed to examine how attentional demands at different stages of processing interact with the Attentional Boost Effect.

General Discussion

Although the capacity limits of attention suggest that reacting to changes in events should interfere with performance on a second task, several theories of perception and attention suggest that changes in events trigger additional perceptual processing. In several experiments we asked participants to encode a long series of images while they monitored a second stimulus stream for occasional targets. Because distractors were usually presented and targets were rare, the appearance of a target constituted a task-relevant change in the ongoing event. Despite the fact that attention to the detection task should increase when targets appear, there was no evidence of impaired encoding at those times. Rather, the data were consistent with the facilitation hypothesis: In all but one case (the arbitrary-mapping task in Experiment 5) images presented with targets were better encoded than images presented with distractors. This advantage was observed across different types of detection tasks (simple oddball detection in visual and auditory streams and feature-conjunction detection). However, it was eliminated when participants ignored the detection task and when responses were arbitrarily mapped to the targets in the detection task. The data suggest a new phenomenon: Increasing attention to targets in one task facilitates or boosts performance in a second concurrent task (the Attentional Boost Effect).

Other related phenomena

The data presented in this paper are the first to demonstrate that detecting a target in one task can facilitate performance in a second image encoding task. However, although there are differences, aspects of the Attentional Boost Effect are similar to other attentional and memory phenomena in the literature.

Perceptual learning

A phenomenon similar to the Attentional Boost Effect has been observed in several studies of perceptual learning. Seitz and Watanabe (2003, 2005) asked participants to report the identities of two briefly presented, infrequent white letters embedded in a stream of black letters. A random-dot motion display with sub-threshold coherence was presented with each letter, but one particular direction of motion was always paired with the target letters. In a later test, participants identified supra-threshold motion more accurately when it moved in the direction that had been paired with the target letters than when it moved in other directions. These data suggest that increasing attention to task-relevant information enhances perceptual learning of task-irrelevant background information. Seitz and Watanabe (2005) attribute this enhancement to a reward-based learning mechanism that globally reinforces perceptual information presented during a rewarding event, even when that information is not attended or explicitly identified. Unlike the Attentional Boost Effect, however, these perceptual learning effects relied on several hours of training, participants performed only one task, the motion stimuli were not relevant to participants’ ongoing task, and the motion direction was not perceptible by the participants (cf. Seitz & Watanabe, 2003). In addition, the perceptual learning effects described by Seitz and Watanabe (2003; 2005) were eliminated when the coherent motion of the random dot displays was above threshold and could be attended (Tsushima, Seitz, & Watanabe, 2008). Thus, there appear to be important differences in the role of attention to the background images in these perceptual learning effects and the Attentional Boost Effect. However, differences in the paradigms that elicit these two effects make it difficult to determine whether they result from similar cognitive processes without further investigation.

Memory isolation effects

In some ways, the Attentional Boost Effect is reminiscent of the isolation effect in memory (Hunt, 1995; Schmidt, 1991). In the standard version of these experiments, participants are given a list of items to memorize for a later test. All but one of the words are from the same category (e.g., one word is “apple” in a list of birds). On a later test the item that is different is recalled at a higher rate than the other items on the list, a phenomenon initially described by von Restorff (Hunt, 1995). Many manipulations produce the isolation effect, including displaying one item in a different font, color, or size than the other items (Schmidt, 1991). Some explanations of the isolation effect suggest that it occurs because items that are distinct from the preceding context attract attention and elaborative processing, and are organized in memory separately from the other items in the list (Fabiani & Donchin, 1995) [footnote 5].

Similarly, it may appear that in the studies reported here images presented with targets were better remembered because they occurred as part of a “distinctive event.” However, there are several problems with equating the Attentional Boost Effect with the isolation effect. First, previous research has shown that the memory isolation effect disappears when the feature that made an item distinctive during encoding is not reproduced during retrieval. For example, the memory isolation effect is not observed when words that were presented in a distinctive font size during encoding (e.g., the words were written in large letters when the standard font size was medium) were presented in the same sized font as the standard words during the recognition test (e.g., all words were presented in a medium sized font; Fabiani & Donchin, 1995). In contrast, the Attentional Boost Effect occurs despite the fact that the distinctive events (the targets) are not presented with the scenes during the test and that participants were only required to discriminate old scenes from new scenes. Second, the data from Experiments 3 and 4 show that perceptual distinctiveness is neither sufficient nor necessary to produce the Attentional Boost Effect. If perceptual distinctiveness were sufficient to facilitate memory for the scenes presented with the targets, then the Attentional Boost Effect should have occurred in the single-task encoding condition tested in Experiment 3. Moreover, if perceptual distinctiveness were necessary to produce the Attentional Boost Effect, then it should not have been observed in Experiment 4, in which the targets were defined by the conjunction of two features that appeared frequently in the stimulus stream (the color red and the letterform X). 
Finally, a simple memory isolation account of the Attentional Boost Effect would predict that scenes presented with the red and green target squares in the arbitrary-mapping task of Experiment 5 should be better remembered than those that were presented with black distractor squares. However, no memory advantage for these scenes was observed.

Although we argue that the Attentional Boost Effect is not the isolation effect, it likely shares some of the processes that produce the isolation effect, particularly increased attention to the unique item at the time of encoding. What is novel about the findings reported here, however, is that they show that increasing attention to the unique item facilitates the encoding of other, concurrent, task-relevant information.

The Attentional Blink

The attentional blink refers to impaired detection of a target presented in a rapid stream of visual stimuli (usually 100 ms/item) when it appears within approximately 200–500 ms of an earlier target (Chun & Potter, 1995; Raymond et al., 1992). The rapid serial visual presentation used in the current paradigm makes it similar to attentional blink paradigms. However, the presentation rate here was much slower, and the two tasks were concurrent rather than sequential. In addition, there was little evidence of impaired memory for scenes presented in post-target positions, differentiating the Attentional Boost Effect from the attentional blink.

Theoretical interpretation

Although the purpose of this study was to describe and constrain the Attentional Boost Effect, several theories of attention and perception permit some speculation about its underlying mechanisms. A recent theory of event perception, Event Segmentation Theory (EST), provides some insight into how changes in events might influence attention and memory over time (Zacks, Speer, Swallow, Braver, & Reynolds, 2007). EST suggests that active memory is regulated by a control mechanism that is similar to the dopamine-based gating mechanisms thought to underlie goal maintenance and cognitive control (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Frank, Loughry, & O'Reilly, 2001; Miller & Cohen, 2001; O'Reilly, Braver, & Cohen, 1999). This gating mechanism may be implemented by subcortical neuromodulatory structures, such as the locus coeruleus (LC), that are involved in alerting and orienting to salient environmental changes (Aston-Jones & Cohen, 2005; Bouret & Sara, 2005; Coull, 1998; Robbins, 1997). The alerting response is a brief increase in attention to the external environment in response to sudden salient environmental changes (Posner & Boies, 1971; Sokolov, Nezlina, Polyanskii, & Evtikhin, 2002). EST suggests that once an event changes, the gating mechanism is triggered and internal representations of the ongoing event are updated. EST therefore predicts that perceptual information should be preferentially encoded when events change. In the Attentional Boost Effect, the appearance of a target in the detection task constitutes a change in the ongoing task and may trigger the opening of an attentional gate, enhancing perceptual processing of information that is concurrently presented with the targets.

The notion that the Attentional Boost Effect results from the opening of an attentional gate is consistent with several accounts of transient attention. According to these theories, target detection is associated with an increase in attention to sensory representations for approximately 150 ms (cf. Bowman & Wyble, 2007; Nakayama & Mackeben, 1989; Olivers & Meeter, 2008; Reeves & Sperling, 1986). For example, in the Boost and Bounce Theory of temporal attention, Olivers and Meeter propose that sensory representations receive excitatory feedback following the detection of a target, but are inhibited following the detection of a distractor (Olivers & Meeter, 2008). In the Simultaneous Type, Serial Token (ST2) model of attention, the appearance of a target is also posited to trigger a transient period of excitatory feedback to salient item representations (Bowman & Wyble, 2007). It is possible that such a gating or filtering mechanism underlies the Attentional Boost Effect: The appearance of a target may trigger a transient increase in excitatory feedback to representations of the concurrently presented images. An important point, however, is that these models were designed to account for attentional effects that occur for the target itself, or for subsequently presented items that appear in the same spatial location as the target. It is therefore not clear whether they can fully account for the data presented here without some modification.

Other data show that the occurrence of unexpected events, infrequent events, and exposure to novel stimuli increase attention and arousal and are associated with the release of norepinephrine (NE) from the LC (Aston-Jones, Rajkowski, Kubiak, & Alexinsky, 1994; Schultz & Dickinson, 2000; Vankov, Hervé-Minvielle, & Sara, 1991) and acetylcholine (ACh) from the nucleus basalis (Acquas, Wilson, & Fibiger, 1996; Ranganath & Rainer, 2003; Schultz & Dickinson, 2000). ACh is released to widespread regions of the cortex, particularly to the hippocampus and other regions of the medial temporal lobe, and is involved in signaling the appearance of novel stimuli (Acquas, Wilson, & Fibiger, 1996; Ranganath & Rainer, 2003; Yamaguchi, Hale, D'Esposito, & Knight, 2004). LC neurons project widely throughout the cortex, and the NE they release may increase the sensitivity of neural networks to input from earlier processing regions (Servan-Schreiber, Printz, & Cohen, 1990). Therefore, NE may act to reinforce perceptual information that is present when unexpected events occur (Aston-Jones & Cohen, 2005; Aston-Jones, Rajkowski, Kubiak, & Alexinsky, 1994; Schultz & Dickinson, 2000; Seitz & Watanabe, 2005). Indeed, Aston-Jones and Cohen (2005) suggest that phasic responses of the LC act as a temporal filter to perceptual input, facilitating neural processing of task-relevant information. At the cognitive level, the release of NE may correspond to the opening of an attentional gate to task-relevant information that is encountered at a particular moment in time (cf. Nieuwenhuis, Gilzenrat, Holmes & Cohen, 2005; Olivers & Meeter, 2008).

With these data and theories in mind, we propose that the Attentional Boost Effect reflects the opening of an attentional gate following the orienting of attention to a particular moment in time. Specifically, we suggest that detecting an occasional target in one task may induce a transient attentional orienting response to the moment in time that the target appeared. This attentional orienting response might then open an attentional gate that facilitates the processing and encoding of both primary and secondary task information into memory. We are currently investigating whether the attentional gate is restricted to secondary information that coincides with the target stimulus in space and in time. However, we note that it is also possible that the Attentional Boost Effect reflects reward processing for information presented with targets (cf. Seitz & Watanabe, 2003), and/or an increase in physiological arousal in response to the appearance of targets [footnote 6]. Additional work is needed to evaluate these possibilities and to pinpoint the precise mechanisms of the Attentional Boost Effect.

In addition, because we used scene memory to measure the Attentional Boost Effect, it is not yet clear whether the effect is due to additional perceptual processing (as we have suggested) or to the enhancement of later mnemonic processes such as consolidation and elaboration. This problem is amplified by the fact that the images were presented several times, rather than once, making it possible that an extended learning process underlies the effect (cf. Seitz & Watanabe, 2005; Seitz & Dinse, 2007). Additional research will need to evaluate whether the Attentional Boost Effect reported here can be attributed, in part, to differences in the way images encoded when targets appear are consolidated and stored in memory. However, in other work we have shown that the Attentional Boost Effect is evident in perceptual discrimination tasks (Swallow, Makovski, & Jiang, submitted). In these experiments, participants were better able to report the gender of a face that coincided with a target or that followed a prime face that coincided with a target rather than a distractor. It is therefore unlikely that the effects reported here result solely from enhanced consolidation and elaborative processing for scenes that are presented at the same time as targets.

Conclusion

It has long been known that contextually novel stimuli capture attention and are better remembered than other stimuli. However, it has previously been unclear how changes in events influence the way other information is processed. In several experiments, we showed that although identifying and responding to task-relevant changes in events requires attention, these changes are associated with increased processing of concurrently presented, unrelated information, a phenomenon we call the Attentional Boost Effect. The Attentional Boost Effect occurs with a variety of target detection tasks and cannot be attributed to the perceptual saliency of the occasional targets. It is eliminated when the target detection task is ignored and when the target detection task requires an arbitrary stimulus-response mapping. Although the exact nature of the Attentional Boost Effect requires further investigation, it may reflect the opening of an attentional gate following a temporal orienting response. These data are novel in showing that changes in one stimulus can facilitate processing of another, task-relevant stimulus. Thus, when a traffic light changes from green to yellow, the Attentional Boost Effect suggests that processing of the pedestrian at the crosswalk is enhanced rather than impaired.

Acknowledgements

This research was funded in part by NIH 071788 and funding from the Institute of Marketing and Research of the University of Minnesota. We thank Andrew Hollingworth, Todd Horowitz, Eric Ruthruff, Tal Makovski, and an anonymous reviewer for comments, and Jennifer Decker, Kathryn Hecht, Shannon Perry, Heather Vandenheuvel, and Leah Watson for help with data collection.

Appendix

Orientation memory in Experiments 2–5.

Experiment 2. Auditory targets

| Position | T−3 | T−2 | T−1 | Target | T+1 | T+2 | T+3 |
|---|---|---|---|---|---|---|---|
| Accuracy | .50 | .57 | .47 | .45 | .59 | .53 | .40 |
| S.E. | .07 | .07 | .03 | .07 | .06 | .05 | .08 |

Experiment 3. Single-task

| Position | T−6 | T−5 | T−4 | T−3 | T−2 | T−1 | Target | T+1 | T+2 | T+3 | T+4 | T+5 | T+6 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Accuracy | .44 | .52 | .48 | .49 | .42 | .55 | .54 | .47 | .41 | .48 | .59 | .46 | .49 |
| S.E. | .04 | .04 | .04 | .04 | .04 | .04 | .05 | .04 | .04 | .06 | .04 | .05 | .05 |

Experiment 4. Feature-conjunction detection

| Position | T−3 | T−2 | T−1 | Target | T+1 | T+2 | T+3 | T+4 | T+5 |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | .52 | .62 | .58 | .66 | .52 | .56 | .59 | .56 | .58 |
| S.E. | .05 | .05 | .04 | .04 | .07 | .06 | .06 | .06 | .04 |

Experiment 5a. Red & green simple-detection

| Position | T−3 | T−2 | T−1 | Target | T+1 | T+2 | T+3 |
|---|---|---|---|---|---|---|---|
| Accuracy | .47 | .50 | .49 | .51 | .51 | .54 | .52 |
| S.E. | .03 | .03 | .03 | .04 | .03 | .03 | .03 |

Experiment 5a. Red & green arbitrary stimulus-response mapping

| Position | T−3 | T−2 | T−1 | Target | T+1 | T+2 | T+3 |
|---|---|---|---|---|---|---|---|
| Accuracy | .55 | .53 | .46 | .54 | .47 | .52 | .53 |
| S.E. | .04 | .04 | .04 | .04 | .03 | .02 | .04 |
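As a reading aid only, the appendix values can be summarized with a short Python sketch (assuming Python 3.9 or later). It copies the accuracy rows from the tables above and computes, for each table, a simple contrast: accuracy at the Target position minus the mean accuracy at T−1 and T+1. This contrast, the dictionary labels, and the function name `target_contrast` are hypothetical, illustrative choices, not the analysis used in the experiments; the standard errors are ignored, so the resulting differences say nothing about statistical reliability.

```python
"""Illustrative summary of the appendix orientation-memory tables.

Assumption: the contrast below (Target minus the mean of T-1 and T+1) is a
hypothetical reading aid, not the analysis reported for these experiments.
"""

# Accuracy values copied from the appendix tables, keyed by serial position.
# ASCII hyphens stand in for the minus signs used in the printed tables.
TABLES = {
    "Experiment 2 (auditory targets)": {
        "T-3": .50, "T-2": .57, "T-1": .47, "Target": .45,
        "T+1": .59, "T+2": .53, "T+3": .40,
    },
    "Experiment 3 (single-task)": {
        "T-6": .44, "T-5": .52, "T-4": .48, "T-3": .49, "T-2": .42,
        "T-1": .55, "Target": .54, "T+1": .47, "T+2": .41, "T+3": .48,
        "T+4": .59, "T+5": .46, "T+6": .49,
    },
    "Experiment 4 (feature-conjunction detection)": {
        "T-3": .52, "T-2": .62, "T-1": .58, "Target": .66,
        "T+1": .52, "T+2": .56, "T+3": .59, "T+4": .56, "T+5": .58,
    },
    "Experiment 5a (simple detection)": {
        "T-3": .47, "T-2": .50, "T-1": .49, "Target": .51,
        "T+1": .51, "T+2": .54, "T+3": .52,
    },
    "Experiment 5a (arbitrary S-R mapping)": {
        "T-3": .55, "T-2": .53, "T-1": .46, "Target": .54,
        "T+1": .47, "T+2": .52, "T+3": .53,
    },
}


def target_contrast(row: dict[str, float]) -> float:
    """Return Target accuracy minus the mean of the T-1 and T+1 accuracies."""
    neighbor_mean = (row["T-1"] + row["T+1"]) / 2
    return row["Target"] - neighbor_mean


if __name__ == "__main__":
    # Print one signed difference per table, rounded to three decimals.
    for name, row in TABLES.items():
        print(f"{name}: {target_contrast(row):+.3f}")
```

For example, the Experiment 4 row gives +0.110 (.66 against a neighbor mean of .55). Comparing only the adjacent positions keeps the sketch simple; a fuller treatment would use all positions and the reported standard errors.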

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

1. In a pilot study, we used the attentional blink procedure (see Duncan et al., 1994; Raymond, Shapiro, & Arnell, 1992) to measure the attentional dwell time for white target items presented among black distractor items. Eight participants viewed a stream of letters presented at a rate of 105 ms/item. Most of the letters were black, but on half of the trials one letter was white. At the end of the stream, participants reported whether a white letter was present or absent (T1 task) and whether the letter X was present or absent (T2 task; T2 followed T1 at Lag 1, 2, 4, or 8). T2 performance was impaired at Lag 2 on T1-present trials (69% at Lag 2 vs. 89% at Lag 8, p < .01), whereas T2 performance was highly accurate on T1-absent trials (89%). These results indicate that detecting a simple feature oddball places demands on attention, a conclusion supported by other visual attention tasks (e.g., Wolfe, Butcher, Lee, & Hyle, 2003).

2. In the second case, the shorter scene durations prevented multiple fixations of the scene. However, some participants complained about this procedure because of the frequent onset and offset of the stimuli. Later experiments were more similar to Experiment 1a.

3. A pilot study showed that presenting participants with only one block of the scene-encoding task led to near-chance memory performance for the scenes.

4. In additional experiments, we found similar results when famous faces, rather than scenes, were used as background stimuli, and when the 4AFC recognition-memory task was replaced with an “old”/“new” recognition task.

5. Because the isolation effect occurs even for items presented early in a list, before any particular context has been established (Hunt, 1995; Hunt & Lamb, 2001), several theorists argue that it arises from the way items are organized in memory or from retrieval-related processing. For example, it has been suggested that the isolation effect is primarily due to a failure of retrieval cues to sufficiently specify the to-be-remembered items (cf. Nairne, 2006; Park, Arndt, & Reder, 2006), that distinctive items are organized into separate and smaller categories in memory than are the standard items (Fabiani & Donchin, 1995), or that it reflects a failure to evaluate the differences between the standard items at encoding (cf. Hunt & Lamb, 2001).

6. Another explanation is that participants may have strategically attended to the scenes that were presented with targets because they surmised that these scenes were more important than the others. However, it is not clear why participants would strategically encode scenes presented with targets in the simple detection and conjunction-search tasks, but not scenes presented with the infrequent white squares in Experiment 3 (which had no apparent purpose) or with the targets in Experiment 5. Without additional detail, the strategy account of the Attentional Boost Effect provides little basis for predicting how the effect would be influenced by different task manipulations, which makes it difficult to falsify.

References

- Acquas E, Wilson C, Fibiger HC. Conditioned and unconditioned stimuli increase cortical and hippocampal acetylcholine release: Effects of novelty, habituation, and fear. Journal of Neuroscience. 1996;16:3089–3096. doi: 10.1523/JNEUROSCI.16-09-03089.1996.

- Anderson AK, Wais PE, Gabrieli JDE. Emotion enhances remembrance of neutral events past. Proceedings of the National Academy of Sciences, USA. 2006;103(5):1599–1604. doi: 10.1073/pnas.0506308103.

- Aston-Jones G, Cohen JD. An integrative theory of locus coeruleus-norepinephrine function: Adaptive gain and optimal performance. Annual Review of Neuroscience. 2005;28:403–450. doi: 10.1146/annurev.neuro.28.061604.135709.

- Aston-Jones G, Rajkowski J, Kubiak P, Alexinsky T. Locus coeruleus neurons in monkey are selectively activated by attended cues in a vigilance task. Journal of Neuroscience. 1994;14(7):4467–4480. doi: 10.1523/JNEUROSCI.14-07-04467.1994.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychological Review. 2001;108(3):624–652. doi: 10.1037/0033-295x.108.3.624.

- Bouret S, Sara SJ. Network reset: a simplified overarching theory of locus coeruleus noradrenaline function. Trends in Neurosciences. 2005;28(11):574–582. doi: 10.1016/j.tins.2005.09.002.

- Bowman H, Wyble B. The simultaneous type, serial token model of temporal attention and working memory. Psychological Review. 2007;114:38–70. doi: 10.1037/0033-295X.114.1.38.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436.

- Chun MM, Potter MC. A two-stage model for multiple target detection in rapid serial visual presentation. Journal of Experimental Psychology: Human Perception & Performance. 1995;21(1):109–127. doi: 10.1037//0096-1523.21.1.109.

- Coull JT. Neural correlates of attention and arousal: Insights from electrophysiology, functional neuroimaging and psychopharmacology. Progress in Neurobiology. 1998;55:343–361. doi: 10.1016/s0301-0082(98)00011-2.

- Duncan J. The locus of interference in the perception of simultaneous stimuli. Psychological Review. 1980;87(3):272–300.

- Duncan J, Ward R, Shapiro K. Direct measurement of attentional dwell time in human vision. Nature. 1994;369(6478):313–315. doi: 10.1038/369313a0.

- Fabiani M, Donchin E. Encoding processes and memory organization: A model of the von Restorff Effect. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21(1):224–240. doi: 10.1037//0278-7393.21.1.224.

- Frank MJ, Loughry B, O'Reilly RC. Interactions between frontal cortex and basal ganglia in working memory: A computational model. Cognitive, Affective, and Behavioral Neuroscience. 2001;1(2):137–160. doi: 10.3758/cabn.1.2.137.

- Grossberg S. Linking attention to learning, expectation, competition, and consciousness. In: Itti L, Rees G, Tsotsos J, editors. Neurobiology of attention. San Diego: Elsevier; 2005. pp. 652–662.

- Hunt RR. The subtlety of distinctiveness: What von Restorff really did. Psychonomic Bulletin & Review. 1995;2(1):105–112. doi: 10.3758/BF03214414.

- Hunt RR, Lamb CA. What causes the isolation effect? Journal of Experimental Psychology: Learning, Memory, and Cognition. 2001;27(6):1339–1366.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annual Review of Neuroscience. 2001;24(1):167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Moore CM, Egeth H, Berglan L, Luck SJ. Are attentional dwell times inconsistent with serial visual search? Psychonomic Bulletin & Review. 1996;3:360–365. doi: 10.3758/BF03210761.

- Nairne JS. Modeling distinctiveness: Implications for general memory theory. In: Hunt RR, Worthen JB, editors. Distinctiveness and Memory. New York: Oxford University Press; 2006. pp. 27–46.

- Newtson D, Engquist G. The perceptual organization of ongoing behavior. Journal of Experimental Social Psychology. 1976;12:436–450.

- Nieuwenhuis S, Gilzenrat MS, Holmes BD, Cohen JD. The role of the locus coeruleus in mediating the attentional blink: A neurocomputational theory. Journal of Experimental Psychology: General. 2005;134:291–307. doi: 10.1037/0096-3445.134.3.291.

- O'Reilly RC, Braver TS, Cohen JD. A biologically based computational model of working memory. In: Miyake A, Shah P, editors. Models of Working Memory: Mechanisms of Active Maintenance and Executive Control. Cambridge: Cambridge University Press; 1999. pp. 375–411.

- Olivers CN. The time course of attention. Current Directions in Psychological Science. 2007;16(1):11–15.

- Olivers CN, Meeter M. A boost and bounce theory of temporal attention. Psychological Review. 2008;115(4):836–863. doi: 10.1037/a0013395.

- Olivers CN, Van der Burg E. Bleeping you out of the blink: Sound saves vision from oblivion. Brain Research. 2008;1242:191–199. doi: 10.1016/j.brainres.2008.01.070.

- Park H, Arndt J, Reder LM. A contextual interference account of distinctiveness effects in recognition. Memory & Cognition. 2006;34:743–751. doi: 10.3758/bf03193422.

- Pashler H. Dual-task interference in simple tasks: Data and theory. Psychological Bulletin. 1994;116(2):220–244. doi: 10.1037/0033-2909.116.2.220.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Posner MI, Boies SJ. Components of attention. Psychological Review. 1971;78(5):391–408.

- Ranganath C, Rainer G. Neural mechanisms for detecting and remembering novel events. Nature Reviews Neuroscience. 2003;4:193–202. doi: 10.1038/nrn1052.

- Raymond JE, Shapiro KL, Arnell KM. Temporary suppression of visual processing in an RSVP task: An attentional blink? Journal of Experimental Psychology: Human Perception & Performance. 1992;18:849–860. doi: 10.1037//0096-1523.18.3.849.

- Reeves A, Sperling G. Attention gating in short-term visual memory. Psychological Review. 1986;93:180–206.

- Robbins TW. Arousal systems and attentional processes. Biological Psychology. 1997;45:57–71. doi: 10.1016/s0301-0511(96)05222-2.

- Schmidt SR. Can we have a distinctive theory of memory? Memory & Cognition. 1991;19:523–542. doi: 10.3758/bf03197149.