Abstract

Event-related potentials were recorded from adults and 4-month-old infants while they watched pictures of faces that varied in emotional expression (happy and fearful) and in gaze direction (direct or averted). Results indicate that the processing of emotional expression is temporally independent of gaze direction processing at early stages, and that the two only become integrated at later latencies. Facial expressions affected the face-sensitive ERP components in both adults (N170) and infants (N290 and P400), while gaze direction and the interaction between facial expression and gaze affected the posterior channels in adults and the frontocentral channels in infants. Specifically, in adults, this interaction reflected a greater responsiveness to fearful expressions with averted gaze (avoidance-oriented emotion), and to happy faces with direct gaze (approach-oriented emotion). In infants, a larger activation to a happy expression at the frontocentral negative component (Nc) was found, and planned comparisons showed that it was due to the direct gaze condition. Taken together, these results support the shared signal hypothesis in adults, but only to a lesser extent in infants, suggesting that experience could play an important role.

Keywords: ERP, facial expressions, gaze, shared signal hypothesis

Facial expressions play an important role in social communication. For example, social context influences facial expressions of emotion, and humans use facial expressions to interpret the intentions and goals of others (Erickson and Schulkin, 2003). An equally important role in social communication is played by changes in the direction of eye gaze, with direct (mutual) gaze providing a basic platform for other kinds of interaction and communication (e.g. Senju and Johnson, 2009). In the natural environment, facial expressions are always present along with eye gaze, and a number of phenomena have been described from research with adults that involve interactions between expression and direction of eye gaze (Adams and Kleck, 2003, 2005). While previous research has described the neurodevelopmental mechanisms of facial expression processing (e.g. Eimer and Holmes, 2002; Batty and Taylor, 2003) and suggested hypotheses about the early functional emergence of emotion-related brain systems (Leppänen and Nelson, 2009), or has described the effects of eye gaze when presented with neutral faces (e.g. Watanabe et al., 2002), very few studies to date have investigated the neurodevelopment of the interaction between gaze and facial expression. In particular, studying infants allows us to ascertain the role of experience in these effects observed in adults. In the present study, our primary aim is to explore the emergence of this interaction by testing adults and infants with the same stimuli and basic paradigm.

The most influential account of the interaction between facial expression and gaze direction comes from a series of behavioural studies with adults presented by Adams and Kleck (2003, 2005). These authors argued that facial expressions and gaze direction both contribute to elicit basic behavioural motivations in the viewer to approach or avoid. They stated that when gaze direction is combined with the intent communicated by a specific expression, it enhances the perception of that emotion (‘shared signal hypothesis’). In particular, they found an interaction between facial expressions and gaze direction that allows the viewer to more rapidly detect joyful and angry expressions (approach-oriented emotions) when accompanied by direct gaze, and fearful and sad expressions (avoidance-oriented emotions) with averted gaze.

While behavioural data are useful for generating hypotheses about the interaction between expressions and gaze, many questions about this issue can only be addressed by measuring the time course of streams of visual processing. For example, are expression and eye gaze initially processed independently before being integrated at a later stage, or are particular combinations of expression and gaze detected rapidly and automatically before further detailed analysis of a perceived face? These questions can be addressed by studying the scalp-recorded event-related potentials (ERP) that result from viewing faces.

One of the main questions investigated in the existing ERP literature concerns the interaction between face encoding and emotion processing. ERP studies with adults showed that faces elicit a larger negative deflection at the occipital–temporal recording sites ∼170 ms after the stimulus onset (Bentin et al., 1996). This face-sensitive component, known as the N170, has been associated with the structural encoding of faces, but it remains controversial whether this component is affected by emotional expression or whether the emotional content of the face is processed at a different stage.

For instance, many studies reported the effects of emotion at shorter latencies than the N170 component (Eimer and Holmes, 2002; Batty and Taylor, 2003, 2006). This early brain response, starting at ∼100 ms, suggests an automatic and global encoding of emotional expressions. However, specific effects to particular expressions have also been observed at longer latencies, supporting the hypothesis of separate neural networks for processing the negative emotional expressions.

In contrast to the earlier studies, other works show that emotional expressions can affect the N170 (Batty and Taylor, 2003; Blau et al., 2007; Leppänen et al., 2007), suggesting simultaneous processing of the structural properties of a face and its emotional content. The larger response to fearful emotional expression is often attributed to a greater allocation of attention, as unconscious mobilisation (Batty and Taylor, 2003), or as an adaptive reaction in order to learn more about the source of the threat (Leppänen et al., 2007).

Although the studies reviewed earlier have helped us to better understand the interaction between basic face encoding and expression, they did not examine the influence that the accompanying gaze direction might have on this processing. To our knowledge, there is only one study that has addressed this issue. Klucharev and Sams (2004) compared a negative emotion (angry) with a positive one (happy) while varying gaze direction. They observed an interaction effect at ∼300 ms at the parietal channel (P8), in which angry expressions with direct gaze elicited a larger response than happy expressions, while happy faces with averted gaze elicited larger ERPs than angry ones. The results led the authors to speculate that it is important to rapidly detect angry expressions directed at an individual. However, they do not explain why happy expressions accompanied by averted gaze would be more important to detect than angry expressions. Further, it is not clear why changes in the amplitude of ERP components would imply faster behavioural responses.

Studying the development of the interaction between expressions and eye gaze can potentially inform us about the underlying mechanisms. For example, some combinations of gaze direction and emotion may be so biologically significant that they do not require prior experience. Alternatively, learning mechanisms may associate certain expressions with particular directions of eye gaze. Behavioural studies of development have revealed that even newborns can discriminate between certain emotional expressions; in fact they prefer to look at happy expressions rather than neutral or fearful ones (Farroni et al., 2007). Newborn responses differ from those of 4- to 7-month olds, as these older infants prefer to look at fearful expressions rather than neutral (Menon et al., manuscript in preparation) or happy faces (de Haan et al., 2004; Leppänen et al., 2007). These changes in behavioural responses during development may be the result of both neural development and the familiarity of the emotional facial expressions.

Several ERP studies have examined infant brain responses to faces. de Haan et al. (2002) compared 6-month-old infants’ responses to human faces with those to nonhuman primate faces, and responses to upright faces with those to inverted faces. They recorded two main components, the N290 and the P400, which were elicited by faces and influenced by stimulus species and inversion, respectively. Halit and colleagues (2003) compared these face-sensitive ERP components in 3- and 12-month-old infants, and the evidence led them to conclude that, in terms of function, the N290 and the P400 become more finely tuned to processing human faces with age. In addition, the negative central component (Nc) is one of the most studied components of the infant ERP. The Nc appears to reflect allocation of attention to novel faces (Nelson, 1994), and to respond to aspects of face and object recognition in 6-month-old infants (de Haan and Nelson, 1997, 1999; Nelson et al., 2000).

These infant ERP components may also reflect processing of the emotional content of the face, as they have been found to be affected by certain emotional expressions. For example, Leppänen and colleagues (2007) found an effect of expression at the P400 and Nc with larger amplitude for fearful expressions compared with happy and neutral expressions. This observation was consistent with previous studies from Nelson and de Haan (1996). More recently Kobiella et al. (2008) found a larger N290 and Nc in response to angry compared with fearful expressions, and a larger P400 in response to fearful compared with angry expressions. These findings support the view that the infant N290 and P400 components are precursors of the adult N170 (de Haan et al., 2003), and that in infants the structural encoding of a face occurs simultaneously with the emotional content. In addition, Kobiella et al. (2008) conclude that infants’ attention is evoked more strongly by angry expressions, possibly because the infant is directly addressed and threatened by this expression. Additionally, Striano and Hoehl (Striano et al., 2006; Hoehl and Striano, 2008) investigated the infants’ brain activity in response to an angry expression accompanied by direct or averted gaze. Infants showed enhanced neural processing of angry expressions when accompanied by direct gaze at the positive slow wave (PSW) at 4 months, and at the Nc component at 7 months, suggesting a greater allocation of attention to socially relevant stimuli. In a similar result to this last study, direct gaze enhances the amplitude of the N290 component in neutral faces in 4-month olds (Farroni et al., 2002), supporting the importance of direct gaze processing from an early age. These ERP findings are in line with the behavioural studies concerning the special status of direct gaze in newborns and early infancy (Farroni et al., 2002, 2003; Rigato et al., 2008). 
However, we note that Striano and Hoehl (Striano et al., 2006; Hoehl and Striano, 2008) failed to find a greater activation in response to direct gaze in happy and fearful expressions.

In the present study, we recorded adult ERPs to neutral, happy and fearful emotional expressions accompanied by direct and averted gaze. In line with the existing literature, we predicted an early global effect of emotional expression (Batty and Taylor, 2003, 2006), a larger N170 in response to fearful faces (Batty and Taylor, 2003; Blau et al., 2007; Leppänen et al., 2007), an independent effect of gaze, and interactions later in processing time between expression and gaze (Klucharev and Sams, 2004). If the shared signal hypothesis tested behaviourally by Adams and Kleck (2003, 2005) reflects underlying brain activity, then we hypothesize larger activation (as reflected in enhanced ERP amplitude) for fearful faces with averted gaze and for happy expressions with direct gaze. On the other hand, if the interaction found in previous ERP studies (Klucharev and Sams, 2004) reflects the importance of the fast detection of hostile expressions or threats, then we expect greater activation only for the expression of fear. Additionally, 4-month-old infants were tested with happy and fearful facial expressions, displaying direct and averted gaze, in a between-subject paradigm, in accordance with previous work with infants (de Haan et al., 2002; Halit et al., 2003; Striano et al., 2006). We chose to study 4-month olds because this is the age at which interactions between the basic effects of facial emotional expression and gaze direction have already been observed (Striano et al., 2006; Menon et al., manuscript in preparation). Furthermore, as previous studies found that younger infants differ from older infants in terms of neural responses (de Haan et al., 2002; Halit et al., 2004), testing 4-month olds might help to better understand the interactions throughout the course of development.
We predicted larger responses to fearful faces on the face-sensitive components (Leppänen et al., 2007; Kobiella et al., 2008), an independent gaze effect (Farroni et al., 2002), and later interactions between expression and gaze, at least for the negative expression (Striano et al., 2006; Hoehl and Striano, 2008).

EXPERIMENT 1: ADULTS

Methods

Participants

Thirteen adults (eight females and five males), aged between 18 and 30 years (mean age 25.2), volunteered for the study. An additional adult was tested, but was excluded from the final sample due to a technical error. All the participants had normal or corrected-to-normal vision and received payment for their participation (£7).

Stimuli

ERPs were recorded while participants viewed colour pictures of six different female face identities. Adults were tested with neutral, fearful and happy expressions, each displaying direct or averted (left and right) gaze. The pictures were selected from the MacBrain Face Stimulus Set. [Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. The expressions in the MacBrain Face Stimulus Set have been shown to be identifiable by both children and adults (Tottenham et al., 2002)]. In order to display different gaze directions, the pictures were modified with Adobe Photoshop 7.0.

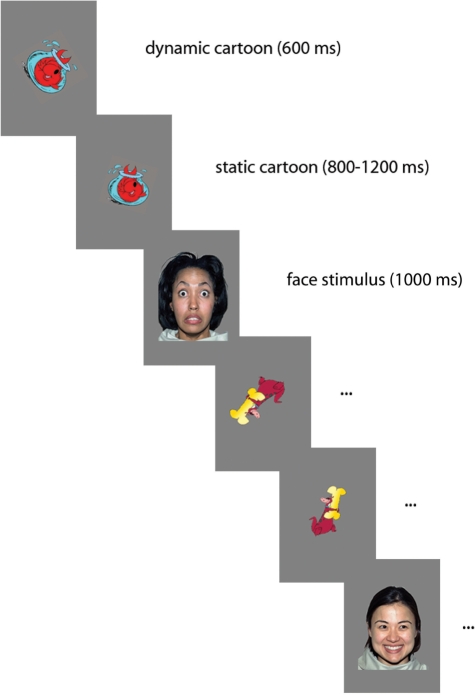

Procedure

Participants sat on a chair 90 cm away from a 40 × 29 cm computer monitor within an acoustically and electrically shielded and dimly lit room. The visual angle of the face stimuli was 14 × 10°. A video camera, mounted below the monitor and centred on the subject’s face, allowed us to record his/her gaze. Each trial started with a colour cartoon displayed in the middle of the screen, first dynamic and then reducing to a smaller static image, which lasted between 1400 and 1800 ms. Following this, a face replaced the cartoon for 1000 ms (Figure 1). To ensure compatibility with the infant paradigm, sounds were occasionally used to maintain participants’ attention. Face stimuli with direct or averted gaze, counterbalanced for right and left gaze direction, were presented in a random order and with equal probability for a total number of 600 trials (200 trials for each facial expression, half with direct and half with averted gaze direction). The average number of trials considered for the analysis was 91.5 for neutral-direct, 92.3 for neutral-averted, 91.9 for fearful-direct, 91.9 for fearful-averted, 91.3 for happy-direct and 90.2 for happy-averted.
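
The relation between viewing distance, stimulus size and visual angle can be sketched as follows. This is an illustrative calculation only: the physical size of the face images is not reported in the text, so the code works backwards from the reported 14 × 10° at 90 cm, and the function names are hypothetical.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_from_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse relation: physical size (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Reported values for the adult set-up: 14 x 10 degrees at 90 cm.
# The implied on-screen size is an inference, not a value stated in the text.
long_side_cm = size_from_angle_cm(14, 90)
short_side_cm = size_from_angle_cm(10, 90)
print(f"implied stimulus size: {long_side_cm:.1f} x {short_side_cm:.1f} cm")
```

Under these assumptions the faces would measure roughly 22 × 16 cm on screen, which fits within the 40 × 29 cm monitor.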

Fig. 1.

Schematic of the stimulus presentation sequence. The cartoon presentation begins as dynamic (600 ms), and then becomes static (ISI 800–1200 ms). The face presentation lasts 1000 ms.

EEG recording and analysis

Brain electrical activity was recorded continuously by using a Geodesic Sensor Net, consisting of 128 silver–silver-chloride electrodes evenly distributed across the scalp (Figure 2). The vertex served as the reference. The electrical potential was amplified with 0.1–100 Hz bandpass, digitized at a 500 Hz sampling rate, and stored on a computer disk for off-line analysis. The data were analysed off-line by using NetStation 4.0.1 analysis software (Electrical Geodesic Inc.). Averaged ERPs were calculated and time-locked to stimulus presentation onset, and baseline corrected to the average amplitude of the 200 ms interval preceding stimulus onset. Continuous EEG data were segmented in epochs from 200 ms before until 800 ms after stimulus onset, and low-pass filtered at 30 Hz using digital elliptical filtering. Segments with eye movements and blinks were detected visually and rejected from further analysis. In accordance with the previous studies (Leppänen et al., 2007), if >10% of the channels (≥13 channels) were rejected, the whole segment was excluded. If fewer than 13 channels were rejected in a segment, the bad channels were replaced. Finally, ERPs were re-referenced to the average potential over the scalp.
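
The segment-exclusion rule and the baseline correction described above can be illustrated with a minimal sketch. The analyses themselves were run in NetStation; the code below is not that software's implementation, the function names are hypothetical, and the example epoch is made-up data used only to show the arithmetic.

```python
def keep_segment(n_bad_channels: int, n_channels: int = 128,
                 max_bad_fraction: float = 0.10) -> bool:
    """Keep a segment unless more than max_bad_fraction of its channels are bad."""
    return n_bad_channels / n_channels <= max_bad_fraction


def baseline_correct(epoch: list[float], sfreq_hz: float = 500.0,
                     baseline_ms: float = 200.0) -> list[float]:
    """Subtract the mean pre-stimulus amplitude from every sample.

    Assumes the epoch starts baseline_ms before stimulus onset.
    """
    n_baseline = int(round(baseline_ms * sfreq_hz / 1000.0))
    baseline = sum(epoch[:n_baseline]) / n_baseline
    return [value - baseline for value in epoch]


# With 128 channels and a 10% limit, 12 bad channels are tolerated and 13 are not.
print(keep_segment(12), keep_segment(13))

# Hypothetical single-channel epoch: -200 to 800 ms at 500 Hz gives 500 samples.
epoch = [2.0] * 100 + [5.0] * 400
corrected = baseline_correct(epoch)
```

With a 500 Hz sampling rate, the 200 ms baseline corresponds to the first 100 samples of each 1000 ms epoch.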

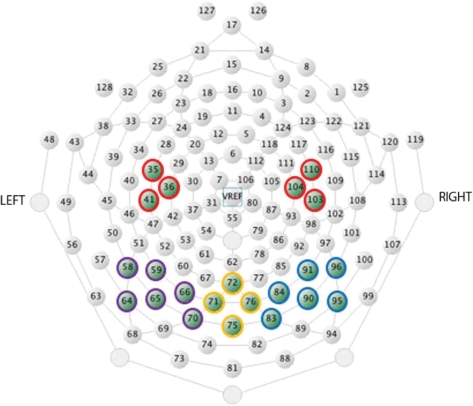

Fig. 2.

Location of electrodes used in statistical analyses in Experiment 1 (adults). In purple, yellow and blue are the channels selected for the left, medial and right occipital areas, respectively.

Statistical analyses of the ERP data focused on emotional expression and gaze direction effects at occipital–temporal and frontal electrode sites. The individual electrodes included in the occipital–temporal sites were: 58, 59, 63, 64, 65, 66, 69, 70, 71 (left), 84, 85, 90, 91, 92, 95, 96, 97, 100 (right), 61, 67, 68, 72, 73, 77, 78, 79 (medial). The electrodes included in the frontal sites were: 25, 21 (left), 124, 119 (right), 5, 6, 11, 12 (medial). At the occipital–temporal area, we observed effects between 70 and 110 ms, 110 and 150 ms, 140 and 210 ms, 190 and 280 ms and we measured later effects between 280 and 800 ms, in three distinct time-windows (280–380, 380–500 and 500–800 ms). At the frontal area, our analyses were restricted to the early and later latencies, as the effects observed between 100 and 300 ms mirrored the effects of the posterior components indicating a common generator.
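
To make the time-windows concrete, the sketch below converts a window in milliseconds to sample indices and extracts a peak amplitude and latency. The text does not state how amplitude and latency were scored within each window, so simple peak-picking is an assumption here, as are the function names and the synthetic epoch.

```python
def ms_to_sample(t_ms: float, sfreq_hz: float = 500.0,
                 epoch_start_ms: float = -200.0) -> int:
    """Index of the sample at t_ms, for an epoch starting at epoch_start_ms."""
    return int(round((t_ms - epoch_start_ms) * sfreq_hz / 1000.0))


def peak_in_window(epoch: list[float], start_ms: float, end_ms: float,
                   negative: bool, sfreq_hz: float = 500.0,
                   epoch_start_ms: float = -200.0) -> tuple[float, float]:
    """Return (peak amplitude, peak latency in ms) inside [start_ms, end_ms]."""
    first = ms_to_sample(start_ms, sfreq_hz, epoch_start_ms)
    last = ms_to_sample(end_ms, sfreq_hz, epoch_start_ms)
    window = epoch[first:last + 1]
    # Negative components (e.g. N170) are scored at the minimum, positive at the maximum.
    extreme = min(window) if negative else max(window)
    index = first + window.index(extreme)
    latency_ms = epoch_start_ms + index * 1000.0 / sfreq_hz
    return extreme, latency_ms


# Synthetic epoch (not real data): flat signal with one negative deflection at 164 ms.
epoch = [0.0] * 500
epoch[ms_to_sample(164)] = -4.0
amplitude, latency = peak_in_window(epoch, 140, 210, negative=True)
```

At 500 Hz each sample spans 2 ms, so the 140–210 ms window covers samples 170 to 205 of an epoch that begins 200 ms before stimulus onset.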

The amplitude and latency scores of the ERP components were analysed by 3 × 2 × 3 repeated-measures analyses of variance (ANOVAs) with facial expression (neutral, happy and fearful), gaze direction (direct and averted) and scalp region (right, left and medial) as within-subject factors. When an ANOVA yielded significant effects, pairwise comparisons were carried out using t-tests. An α level of 0.05 was used for all statistical tests.
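
A pairwise comparison of this kind is a paired t-test across participants. The following minimal sketch shows the computation using only the Python standard library. The amplitude values are hypothetical and not taken from the study, and the function name is an assumption.

```python
import math
from statistics import mean, stdev


def paired_t(condition_a: list[float], condition_b: list[float]) -> tuple[float, int]:
    """Paired t statistic and its degrees of freedom (n - 1)."""
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-participant amplitudes (microvolts) for two conditions, n = 13.
cond_a = [5.1, 4.8, 6.0, 5.5, 4.9, 5.7, 6.2, 5.0, 5.3, 5.9, 4.7, 5.6, 5.2]
cond_b = [4.6, 4.9, 5.4, 5.1, 4.5, 5.2, 5.8, 4.8, 5.0, 5.3, 4.6, 5.1, 4.9]
t_value, df = paired_t(cond_a, cond_b)
print(f"t({df}) = {t_value:.3f}")
```

With 13 participants the test has 12 degrees of freedom, which matches the t12 notation used in the Results.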

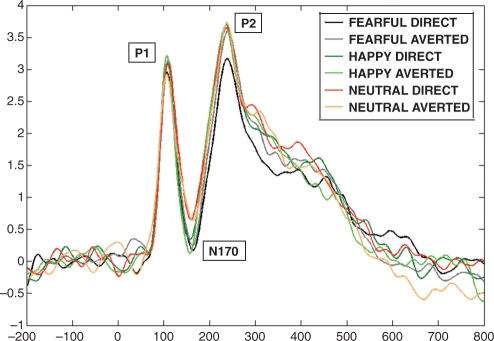

Results

Face stimuli elicited the expected deflections at P1 (121 ms), N170 (164 ms) and P2 (241 ms) at occipitotemporal electrodes. The analyses revealed significant scalp region effects at occipitotemporal locations. The region effect consisted of a larger and more positive amplitude at medial sites than lateral sites between 70 and 110 ms [F(2,24) = 7.177, P = 0.014] and between 280 and 800 ms [F(2,24) = 19.486, P ≤ 0.001]. The amplitude of the N170 was larger at lateral sites [F(2,24) = 10.389, P ≤ 0.001], while the amplitude of the P2 was larger at medial scalp regions [F(2,24) = 29.337, P ≤ 0.001]. On both of these components, the peak latency was shorter at medial than lateral locations [N170: F(2,24) = 25.873, P ≤ 0.001; P2: F(2,24) = 57.940, P ≤ 0.001].

Effects of facial expression were found at both the occipitotemporal and frontal locations. Within the occipitotemporal area, the peak latency of the P1 and of the N170 components was affected by the expression [P1: F(2,24) = 7.484, P = 0.013; N170: F(2,24) = 4.428, P = 0.032]. Both these components peaked earlier to happy than to fearful expressions. The N170 amplitude was influenced by facial expression, but this effect was mediated by an interaction with the scalp region [F(4,48) = 3.482, P = 0.031] that revealed a larger amplitude to fearful expressions than neutral in the right hemisphere only (t12 = 2.681, P = 0.020).

Over frontal channels, the amplitude from 500 ms to the end of the segment was significantly affected by facial expression [F(2,24) = 3.963, P = 0.033], showing larger negative amplitude to happy than to fearful and neutral expressions.

A significant gaze direction effect was found at the occipital location. Specifically, the amplitude of the P2 [F(1,12) = 5.374, P = 0.039] was larger to averted than to direct gaze. This effect was mediated by an interaction with the facial expression [F(2,24) = 5.094, P = 0.043]. Pairwise comparisons, using a t-test, revealed that the P2 component amplitude was larger to fearful faces with averted gaze than direct gaze (t12 = –2.543, P = 0.026), and to happy with direct gaze than to fearful with direct gaze (t12 = –2.374, P = 0.035) (Figure 3).

Fig. 3.

Grand average for all the six conditions on occipital channels in Experiment 1 (adults). The P1 component peaked earlier for happy and fearful than neutral expressions. The N170 peaked earlier to happy than fearful and neutral faces, and it is larger to fearful than neutral expressions. The P2 is more pronounced for averted gaze compared to direct gaze condition; for fearful faces with averted than direct gaze, and for happy with direct gaze compared to fearful with direct gaze.

Discussion

In accord with our predictions, in adults the effects of facial expressions were observed at shorter latency than those to gaze direction. Further, interactions between the processing of emotional expressions and gaze directions appeared at still longer latencies, and this could indicate an initial phase of independent processing of the two aspects of faces before the information is integrated. Nonetheless, the fact that the main effect of gaze occurred at the same latency as its interaction with facial expression means that we cannot conclude definitively that gaze processing is independent from facial expression processing. Finally, the type of interaction we observed was consistent with predictions based on the shared signal hypothesis.

The P1 and the N170 components peaked earlier to the expression of happiness, and the latter component’s amplitude was larger to fearful expressions than neutral in the right hemisphere. These findings are consistent with previous studies with adults and children, which found a shorter latency to positive expressions than to negative ones (Batty and Taylor, 2003, 2006), and a greater amplitude of the N170 to fearful expressions (Batty and Taylor, 2003; Blau et al., 2007; Leppänen et al., 2007).

The gaze effect was observed as a greater amplitude to averted than to direct gaze at the P2 component, an observation partially consistent with Klucharev and Sams (2004) who found a larger deflection from 300 ms to averted than direct gaze, and with Watanabe et al. (2002) who observed a larger amplitude response to averted than direct gaze over temporal areas. In addition, in our study a significant interaction between facial expression and gaze direction was found modulating the amplitude of the P2 component. Results from individual comparisons are consistent with the shared signal hypothesis. In fact, we found enhanced amplitude for fearful expressions accompanied by averted gaze relative to the same emotion with direct gaze, and for happy expressions displaying direct gaze rather than fearful expressions with direct gaze. In contrast, our results are less consistent with Klucharev and Sams (2004) who argued for the importance of rapid detection of a hostile expression directed to an individual. However, in their work angry facial expressions were used, while here participants were presented with fearful faces.

In the next experiment, infants were presented with happy and fearful emotional expressions, accompanied with direct and averted gaze, in order to investigate whether the interaction between expression and gaze processing is evident in infancy in the same way as it is in adulthood.

EXPERIMENT 2: INFANTS

Methods

Participants

Twenty-eight healthy infants, aged between 106 and 134 days (mean age 123.6 days) participated in the study. Informed consent was obtained from the parents. The testing took place only if the infant was awake and in an alert state. Given the short span of attention at this age, we ran the study with each facial expression as a separate experiment. Fourteen infants participated in Experiment 2a (fearful expression condition), and 14 infants participated in Experiment 2b (happy expression condition). A further 12 infants were tested but excluded (seven due to fussiness and five due to technical errors); the final sample consisted of 15 females and 13 males.

Stimuli

As with adults, ERPs were recorded while infants viewed colour pictures of six different female face identities. The pictures were the same as used in Experiment 1. In Experiment 2a, infants were presented with faces exhibiting a fearful expression, while in Experiment 2b, they were presented with a happy expression. In both the experiments, faces displayed direct or averted (left and right) eye gaze.

Procedure

Infants sat on their parent’s lap 60 cm away from a 40 × 29 cm computer monitor within an acoustically and electrically shielded and dimly lit room. The visual angle of the face stimuli was 22 × 15°. The apparatus and procedure were the same as used in Experiment 1.

The stimuli were displayed either until the infant became fussy and inattentive or reached 200 trials (100 trials for each gaze condition, direct and averted). The average number of trials considered for the analysis was 38.8 for fearful-direct, 36.9 for fearful-averted, 31.7 for happy-direct and 34.4 for happy-averted.

EEG recording and analysis

Brain electrical activity was recorded continuously by using a HydroCel Geodesic Sensor Net, consisting of 128 silver–silver-chloride electrodes evenly distributed across the scalp (Figure 4). The vertex served as the reference. The EEG analyses followed the same procedure as in Experiment 1. In accordance with previous studies (de Haan et al., 2002; Halit et al., 2003; Leppänen et al., 2007), if >15% of the channels (≥19 channels) were invalid, the whole segment was excluded. If fewer than 19 channels were invalid in a segment, the bad channels were replaced.

Fig. 4.

Location of electrodes used in statistical analyses in Experiment 2 (infants). In purple, yellow and blue are the channels selected for the left, medial and right occipital areas, respectively. In red are the channels selected for the frontocentral areas.

Statistical analyses of the ERP data examined emotional expression and gaze direction effects at occipital–temporal and frontocentral electrode sites. The individual electrodes that were included in the occipital–temporal sites were: 64, 58, 59, 65, 66, 70 (left), 83, 84, 90, 91, 95, 96 (right), 71, 72, 75, 76 (medial). The electrodes included in the frontocentral sites were: 36, 41, 35 (C3) and 104, 110, 103 (C4). At the occipital area, we observed early effects between 100 and 130 ms, the P1 between 130 and 250 ms, the N290 between 240 and 350 ms, the P400 between 350 and 450 ms and we measured later effects between 450 and 800 ms. The Nc at the frontocentral area was observed within a 300- to 600-ms time-window. As for the adults, our analyses at the frontal area were restricted to the early (100–130 ms) and later latencies (450–800 ms). The electrodes included in the frontal sites were: 24, 20, 19, 18, 23, 22 (left), 124, 118, 4, 10, 3, 9 (right), 11, 12, 16, 5, 6 (medial).

In Experiments 2a and 2b, for each condition (fearful and happy), the amplitude and the latency scores of the ERP components were analysed by a 2 × 3 repeated-measures ANOVA with gaze direction (direct and averted) and scalp region (right, left and medial) as within-subject factors, for the occipital regions. The frontal and frontocentral regions were analysed by a t-test comparing gaze directions. In order to compare the results from both the conditions, a 2 × 2 × 3 ANOVA was performed, with facial expression (fearful or happy) as a between-subjects factor, and gaze direction (direct and averted) and scalp region (right, left and medial) as within-subjects factors. When an ANOVA yielded significant effects, pairwise comparisons were carried out using t-tests. An α-level of 0.05 was used for all statistical tests.
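
As a consistency check on these designs, the degrees of freedom expected for each kind of effect can be derived from the number of factor levels and participants. The sketch below assumes uncorrected (sphericity-assumed) degrees of freedom, and the function names are hypothetical. It reproduces the forms F(2,26), F(1,26) and F(2,52) that appear in the Results.

```python
import math


def within_effect_df(within_levels: list[int], n_subjects: int,
                     n_groups: int = 1,
                     crossed_with_between: bool = False) -> tuple[int, int]:
    """(effect df, error df) for an effect involving within-subject factors.

    within_levels lists the number of levels of each within factor in the effect.
    Set crossed_with_between=True for an interaction with the between factor.
    """
    w = math.prod(k - 1 for k in within_levels)
    df_effect = w * (n_groups - 1) if crossed_with_between else w
    df_error = w * (n_subjects - n_groups)
    return df_effect, df_error


def between_effect_df(n_groups: int, n_subjects: int) -> tuple[int, int]:
    """(effect df, error df) for the main effect of the between-subjects factor."""
    return n_groups - 1, n_subjects - n_groups


# Experiment 2a or 2b alone: 14 infants, scalp region has 3 levels.
print(within_effect_df([3], n_subjects=14))
# Combined analysis: 28 infants in 2 expression groups.
print(between_effect_df(n_groups=2, n_subjects=28))
print(within_effect_df([3], n_subjects=28, n_groups=2))
```

The same functions give (2, 24) for a three-level within factor with the 13 adults of Experiment 1.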

Results

Experiment 2a: Fearful facial expression

Face stimuli elicited the expected deflections at P1 (178 ms), N290 (288 ms) and P400 (425 ms) at occipitotemporal electrodes. At occipitotemporal electrodes, the analyses revealed scalp region effects. Specifically, the P1 [F(2,26) = 6.912, P = 0.008] and the N290 [F(2,26) = 27.75, P < 0.001] components peaked earlier at the medial than lateral sites. There were no significant effects of gaze either on the amplitude or peak latency of the components observed.

Experiment 2b: Happy facial expression

Face stimuli elicited the expected deflections at P1 (175 ms), N290 (286 ms) and P400 (405 ms) at occipitotemporal electrodes. As in the fearful expression condition, analyses revealed a scalp region effect in the occipitotemporal area: P1 [F(2,26) = 13.626, P = 0.001] and N290 [F(2,26) = 3.927] components peaked earlier at the medial than lateral sites.

An interaction between gaze direction and scalp region at P1 peak latency [F(2,26) = 3.542, P ≤ 0.05] was revealed. Pairwise comparisons showed that over the medial channels, this component peaked earlier to averted than direct gaze (t13 = 2.706, P = 0.018).

Further Analysis

Comparison between Experiments 2a and b

In order to compare the results from both the conditions, a 2 × 2 × 3 ANOVA was performed, with facial expression (fearful and happy) as a between-subjects factor, and gaze direction (direct and averted) and scalp region (right, left and medial) as within-subjects factors.

The analyses revealed significant scalp region effects at the occipitotemporal location. Within the time-window chosen to detect earlier effects, the region effect consisted of a larger positive amplitude at the medial electrodes than lateral electrodes [F(2,52) = 6.906, P = 0.004], while the P1 and the N290 components revealed effects of peak latency. In fact, both of them peaked earlier at medial than lateral electrodes [P1: F(2,52) = 19.716, P < 0.001; N290: F(2,52) = 24.769, P < 0.001].

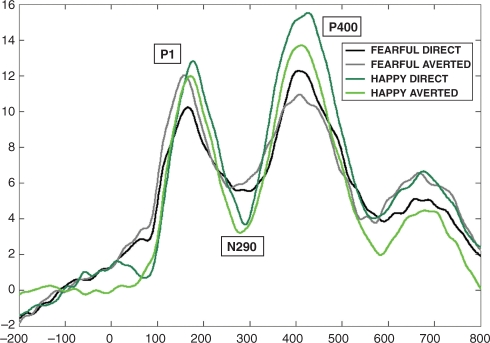

There were effects of facial expression at both the occipitotemporal and frontocentral locations. At the occipitotemporal area, the P400 peaked earlier to happy expressions than to fearful expressions [F(1,26) = 7.785, P = 0.010]. The N290 peak latency was influenced by an interaction between facial expression and scalp region [F(2,52) = 4.834, P = 0.022]. Pairwise comparisons revealed a trend towards a significant difference in the left hemisphere channels (t26 = 1.964, P = 0.060) between fearful and happy expression. Further analyses showed that it was due to the N290 component peaking earlier to the happy expression compared with the fearful, in the direct gaze condition only (t26 = 2.219, P = 0.035) (Figure 5).

Fig. 5.

Grand average for all the four conditions on occipital channels in Experiments 2a and 2b (infants). The P400 component peaked earlier for happy than fearful expressions. (The N290 peaked earlier to happy than fearful faces only on the left channels.)

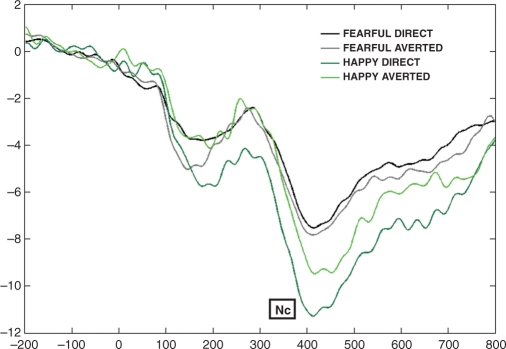

The amplitude of the frontocentral Nc was affected by facial expression [F(1,26) = 4.699, P = 0.040], with a larger deflection for happy than fearful expression. Post hoc analyses revealed a significant effect only for the direct gaze condition (t26 = 2.668, P = 0.013) (Figure 6).

Fig. 6.

Grand average for all the four conditions on C3 (36, 41, 35) and C4 (104, 110, 103) in Experiments 2a and 2b (infants). The Nc amplitude is larger to happy than fearful facial expressions, especially for the direct gaze condition.

In addition, over the frontal channels there was a short latency effect of facial expression on amplitude in the time-window between 100 and 130 ms [F(1,26) = 4.130, P ≤ 0.050], with a larger and more negative deflection to fearful than happy expressions.

Discussion

As predicted, facial expressions modulated the face-sensitive components in infants, but contrary to our predictions we did not find a larger amplitude response to fearful expressions.

At the occipitotemporal area, the latencies of the P400 and the N290 components were affected by the expression being processed, with a shorter latency for the happy than for the fearful expression. This finding is in line with the results from adult research, as these components are thought to be the precursors of the adult N170 (de Haan et al., 2002, 2003). In contrast to our findings, Leppänen and colleagues (2007) found a significant effect on P400 amplitude, but no latency effect. However, this remains consistent with the hypothesis that the P400 is one of the two potential precursors of the N170 (de Haan et al., 2003), as the results from adults showed that the N170 was affected by emotional expression in both latency and amplitude.

The frontocentral Nc was affected by emotional expression, but in a different way from previous studies (Nelson et al., 1996; de Haan et al., 2004; Leppänen et al., 2007); it was larger in amplitude to happy than to fearful expressions. It is notable that the previous ERP studies tested older infants. Therefore, our different results may reflect a developmental shift between 4- and 7-month-olds. To better understand this unpredicted outcome, we explored the effect further by running post hoc comparisons. The amplitude of the Nc was significantly larger to happy than to fearful expressions when both of these stimuli were accompanied by direct gaze. This finding partially accords with our results with adults and the shared signal hypothesis (Adams and Kleck, 2003, 2005). In fact, results from infants may support the hypothesis for approach-oriented expressions (happy), but not for avoidance-oriented expressions (fear). In addition, our results correspond with recent findings with newborns (Farroni et al., 2007; Rigato et al., 2008) that the most preferred face stimulus at birth contains both direct gaze and a happy expression, suggesting that faces that enhance the likelihood of social communication attract newborns’ attention.

CONCLUSIONS

The current study has extended previous findings on adults’ and infants’ neural processing of facial expressions and eye gaze direction. Specifically, we examined the hypotheses that there is (i) differential processing of expressions evident on face-sensitive ERP components, (ii) an independent effect of gaze direction on ERP components and (iii) an interaction between expression and eye gaze later in processing time. With regard to the last of these, we looked for a pattern of interaction consistent with the shared signal hypothesis of Adams and Kleck (2003, 2005), involving an association of happy expressions with direct gaze, and of fearful expressions with averted gaze.

In the current study, we found that infants and adults have an overall similar pattern of response to faces that present differing expressions and eye gaze. Differential expression processing was observed at the components known to reflect face encoding, the N170 in adults and the P400 and the N290 in infants. Further, at the frontal electrodes, we recorded an early, larger-amplitude response to fearful expressions in infants, which may reflect an early enhanced activation to threatening stimuli. In adults, the enhanced ERP amplitude to the expression of fear was observed at longer latencies, possibly reflecting a less automatic response since this effect lasted until the end of the recording segment.

Detecting gaze direction in the context of facial expressions may be important for decoding the meaning of an emotional face and for planning subsequent action to avoid dangerous events or approach pleasant situations. The shared signal hypothesis (Adams and Kleck, 2003, 2005) claims that gaze direction and expression are linked to approach and avoidance reactions. Our ERP results with adults are generally consistent with this hypothesis, at least for the expressions of happiness and fear.

It is notable that in infants, an effect of gaze direction was found only in the happy expression group when analysed separately. This may be because this expression is more familiar to the infants than the fearful one. This greater familiarity may allow easier integration of the expression with gaze direction. In contrast, if the fearful expression is a novel stimulus for them, decoding the expression may draw processing resources away from analysis of gaze. Farroni et al. (2002) found that the presence of direct gaze in neutral faces facilitates the neural processing associated with the earliest steps of face encoding. In the light of the present results, it may be that infants are able to analyse gaze direction together with expressions that they have previously experienced as occurring together. In accord with this view, we observed enhanced processing of happy faces accompanied by direct gaze. Yet, the question of whether infants process gaze direction only when familiar expressions are presented, i.e. neutral or happy, or also with other approach-oriented emotions, remains open. We also note that the gaze effect occurred earlier in infants than in adults. One factor that needs to be considered in this regard is that infants were tested in a between-subject paradigm; therefore individual infants and adults experienced a different number of conditions, and this may have influenced the results obtained. Nevertheless, if the differential response in processing gaze in happy expressions were due to the number of conditions observed, the same effect would also be expected for fearful expressions. Since no effect of gaze was found with this latter expression, a difference in the number of conditions observed is unlikely to explain our results.

In conclusion, our results indicate that expression may be processed independently of gaze direction at early stages, and becomes integrated with it only later in processing time. In adults, this later interaction involves both the emotions investigated here, while in infants it involved only the happy expression. The nature of the neural interaction we observed is consistent with the shared signal hypothesis in adults. In infants, it may be that this effect is confined to the approach-oriented emotions and not the avoidance-oriented emotions. Further research is required to verify these findings with a larger set of facial expressions. Whether experience plays a role, or whether the effect is due to maturation independent of experience, remains an open question. The experiments reported in the current study do not allow us to conclude definitively which factors caused the differences observed between adults and infants. However, the proposal that experience may influence the developmental change is consistent with the change in behavioural responses to facial expressions seen between newborns and older infants.

Conflict of interest

None declared.

Acknowledgments

The authors are grateful to the adults, the infants and their parents, who participated in the study; to Fani Deligianni for help with the software; and to the research assistants of the Babylab for help in testing the infants. This work was supported by the Marie Curie Fellowship [MEST-CT-2005-020725 to S.R.]; the Wellcome Trust [073985/Z/03/Z to T.F.]; and the Medical Research Council [Programme Grant G0701484 to M.H.J.].

REFERENCES

- Adams RB, Jr, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14(6):644–7. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Adams RB, Jr, Kleck RE. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion. 2005;5:3–11. doi: 10.1037/1528-3542.5.1.3.

- Batty M, Taylor MT. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–20. doi: 10.1016/s0926-6410(03)00174-5.

- Batty M, Taylor MT. The development of emotional face processing during childhood. Developmental Science. 2006;9(2):207–20. doi: 10.1111/j.1467-7687.2006.00480.x.

- Bentin S, Allison T, Puce A, Perez E, McCarthy G. Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience. 1996;8:551–65. doi: 10.1162/jocn.1996.8.6.551.

- Blau VC, Maurer U, Tottenham N, McCandliss BD. The face specific N170 component is modulated by emotional facial expression. Behavioral and Brain Functions. 2007;3. doi: 10.1186/1744-9081-3-7.

- de Haan M, Nelson CA. Recognition of the mother’s face by six month-old infants: a neurobehavioral study. Child Development. 1997;68:187–210.

- de Haan M, Nelson CA. Brain activity differentiates face and object processing by 6 month-old infants. Developmental Psychology. 1999;34:1114–21. doi: 10.1037//0012-1649.35.4.1113.

- de Haan M, Pascalis O, Johnson MH. Specialization of neural mechanisms underlying face recognition in human infants. Journal of Cognitive Neuroscience. 2002;14:199–209. doi: 10.1162/089892902317236849.

- de Haan M, Johnson MH, Halit H. Development of face-sensitive event-related potentials during infancy: a review. International Journal of Psychophysiology. 2003;51:45–58. doi: 10.1016/s0167-8760(03)00152-1.

- de Haan M, Belsky J, Reid V, Volein A, Johnson MH. Maternal personality and infants’ neural and visual responsivity to facial expressions of emotion. Journal of Child Psychology and Psychiatry. 2004;45:1209–18. doi: 10.1111/j.1469-7610.2004.00320.x.

- Eimer M, Holmes A. An ERP study on the time course of emotional face processing. Neuroreport. 2002;13:427–31. doi: 10.1097/00001756-200203250-00013.

- Erickson K, Schulkin J. Facial expressions of emotion: a cognitive neuroscience perspective. Brain and Cognition. 2003;52:52–60. doi: 10.1016/s0278-2626(03)00008-3.

- Farroni T, Csibra G, Simion F, Johnson MH. Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences of the United States of America. 2002;99:9602–5. doi: 10.1073/pnas.152159999.

- Farroni T, Mansfield EM, Lai C, Johnson MH. Motion and mutual gaze in directing infants’ spatial attention. Journal of Experimental Child Psychology. 2003;85:199–212. doi: 10.1016/s0022-0965(03)00022-5.

- Farroni T, Menon E, Rigato S, Johnson MH. The perception of facial expressions in newborns. European Journal of Developmental Psychology. 2007;4(1):2–13. doi: 10.1080/17405620601046832.

- Halit H, de Haan M, Johnson MH. Cortical specialisation for face processing: face-sensitive event-related potential components in 3- and 12-month-old infants. Neuroimage. 2004;19:1180–93. doi: 10.1016/s1053-8119(03)00076-4.

- Halit H, Csibra G, Volein A, Johnson MH. Face-sensitive cortical processing in early infancy. Journal of Child Psychology and Psychiatry. 2003;45:1228–34. doi: 10.1111/j.1469-7610.2004.00321.x.

- Hoehl S, Striano T. Neural processing of eye gaze and threat-related emotional facial expressions in infancy. Child Development. 2008;79(6):1752–60. doi: 10.1111/j.1467-8624.2008.01223.x.

- Klucharev V, Sams M. Interaction of gaze direction and facial expressions processing: an ERPs study. Neuroreport. 2004;15(4):621–6. doi: 10.1097/00001756-200403220-00010.

- Kobiella A, Grossman T, Reid VM, Striano T. The discrimination of angry and fearful facial expressions in 7-month-old infants: an event-related potential study. Cognition & Emotion. 2008;22(1):134–46.

- Leppänen JM, Moulson MC, Vogel-Farley VK, Nelson CA. An ERP study of emotional face processing in the adult and infant brain. Child Development. 2007;78(1):232–45. doi: 10.1111/j.1467-8624.2007.00994.x.

- Leppänen JM, Nelson CA. Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience. 2009;10:37–47. doi: 10.1038/nrn2554.

- Nelson CA. Neural correlates of recognition memory in the first postnatal year of life. In: Dawson G, Fischer K, editors. Human Behavior and the Developing Brain. New York: Guilford Press; 1994. pp. 269–313.

- Nelson CA, de Haan M. Neural correlates of infants’ visual responsiveness to facial expressions of emotions. Developmental Psychobiology. 1996;29:577–95. doi: 10.1002/(SICI)1098-2302(199611)29:7<577::AID-DEV3>3.0.CO;2-R.

- Nelson CA, Wewerka S, Thomas KM, Tribby-Walbridge S, deRegnier R, Georgieff M. Neurocognitive sequelae of infants of diabetic mothers. Behavioral Neuroscience. 2000;114(5):950–6.

- Rigato S, Menon E, Farroni T, Johnson MH. The interaction between gaze direction and facial expression in newborns. 2008. Apr, Poster presented at the Annual Conference of the British Psychological Society, Dublin.

- Senju A, Johnson MH. The eye contact effect: Mechanism and development. Trends in Cognitive Sciences. 2009;13:127–34. doi: 10.1016/j.tics.2008.11.009.

- Striano T, Kopp F, Grossman T, Reid VM. Eye contact influences neural processing of emotional expression in 4-month-old infants. Social Cognitive and Affective Neuroscience. 2006;1:87–94. doi: 10.1093/scan/nsl008.

- Tottenham N, Borscheid A, Ellertsen K, Marcus DJ, Nelson CA. Categorization of facial expressions in children and adults: Establishing a larger stimulus set. 2002. Apr, Paper presented at the annual meeting of the Cognitive Neuroscience Society, San Francisco.

- Watanabe S, Miki K, Kakigi R. Gaze direction affects face perception in humans. Neuroscience Letters. 2002;325:163–6. doi: 10.1016/s0304-3940(02)00257-4.