Abstract

The purpose of this study was to assess the effects of schema-broadening instruction (SBI) on second graders’ word-problem-solving skills and their ability to represent the structure of word problems using algebraic equations. Teachers (n = 18) were randomly assigned to conventional word-problem instruction or SBI word-problem instruction, which taught students to represent the structural, defining features of word problems with overarching equations. Intervention lasted 16 weeks. We pretested and posttested 270 students on measures of word-problem skill; analyses that accounted for the nested structure of the data indicated superior word-problem learning for SBI students. Descriptive analyses of students’ word-problem work indicated that SBI helped students represent the structure of word problems with algebraic equations, suggesting that SBI promoted this aspect of students’ emerging algebraic reasoning.

When solving word problems, students are faced with novel problems that require transfer. This can be difficult to effect in the primary grades (Durnin, Perrone, & MacKay, 1997; Foxman, Ruddock, McCallum, & Schagen, 1991, cited in Boaler, 1993; Larkin, 1989). Some psychologists view such transfer in terms of the development of schemas, by which students conceptualize word problems within categories or problem types that share structural, defining features and require similar solution methods (Chi, Feltovich, & Glaser, 1981; Gick & Holyoak, 1983; Mayer, 1992; Quilici & Mayer, 1996). The broader the schema or problem type, the greater the probability students will recognize connections between novel problems and those used for instruction and will understand when to apply the solution methods they have learned.

In a series of studies, we have relied on this conceptualization of transfer to design instruction for helping students build schemas for word-problem types and for broadening those schemas. Prior work (e.g., Fuchs et al., 2003; Fuchs, Fuchs, Finelli, et al., 2004; Fuchs, Fuchs, Prentice, et al., 2004) illustrates the efficacy of this approach, which we refer to as schema-broadening instruction (SBI), for third-grade students on problem types relevant to the third-grade curriculum. More recently, Fuchs, Powell et al. (2009) demonstrated the efficacy of SBI tutoring for a subset of third graders who experience severe mathematics difficulty. With this population, we taught simpler word-problem types, while introducing algebraic equations to represent the defining, structural features of those problem types.

We extend this line of work in the present study, applying SBI to problem types appropriate to the second-grade curriculum. We focused on typically developing second-grade students while relying on a whole-class format to deliver instruction, again incorporating algebraic equations to represent the defining, structural features of problem types. We conducted the present efficacy trial by randomly assigning 18 classrooms to control (i.e., business-as-usual) instruction or SBI. To further extend previous studies, we also analyzed students’ word-problem work to gain insight into SBI’s effects on students’ ability to represent the underlying structure of word problems using algebraic equations. A focus on algebra gains importance as high schools increasingly require students to pass an algebra course or test to graduate and because algebra is often viewed as a route toward competence with higher-level mathematics (National Mathematics Advisory Panel, 2008). For these reasons, introducing algebraic thinking early in the curriculum may represent a productive innovation, as has been argued elsewhere (e.g., National Council of Teachers of Mathematics [NCTM], 1997). Before describing the study, we provide background information on the theoretical basis for hypothesizing that SBI with this form of algebraic thinking may be efficacious, and we summarize previous related research.

Theoretical Basis for SBI

We use the term schema to refer to a generalized description of a word-problem type that requires similar solution methods (Gick & Holyoak, 1983). Schemas help students recognize connections between problems that are taught and problems that are novel, which facilitates transfer. Novel problems differ from taught problems in terms of superficial features, which make a problem novel but do not alter the problem type or the problem-solution methods. The most common superficial feature in school (and the easiest for students to handle) is the cover story. Consider the total problem type, in which quantities are combined to form a total. Let’s say a teacher uses the following total problem for instruction: Shelley has 6 apples. Robin has 3 apples. How many apples do the girls have together? Then the teacher asks students to solve a novel total problem with a new cover story: Francis has 7 cats. Ann has 3 dogs. How many animals do the girls have together? This cover story has a superficial feature that does not alter the problem type or the required solution method. The cover story only minimally taxes students’ ability to recognize the problem as belonging to the total problem type. By contrast, other superficial features increase the challenge in recognizing a novel problem as belonging to a problem type. Consider the following problem: Francis has 7 cats. Ann has 3 dogs. Ann walks her dogs 2 times every day. How many animals do the girls have together? This problem incorporates irrelevant information, which is a superficial feature that creates greater challenge for identifying the problem as belonging to the total problem type (even though, as a superficial feature, it does not alter the problem type or the problem-solution methods). Other more challenging superficial features include (but are not limited to) combining problem types, variations in format, and the use of figures to incorporate relevant information.

Two instructional components are required to support the development of schemas for problem types. The first instructional component must help students understand the defining features of a problem type as well as solution methods for solving a problem within that problem type (e.g., Mawer & Sweller, 1985; Sweller & Cooper, 1985). For this problem-solution instruction, problems vary only in terms of the cover story so that the structural features (which represent the defining features of the schema) are clear. Once mastery of problem-solution steps has been achieved, the purpose of the second instructional component is to broaden the schema for the problem type (Cooper & Sweller, 1987). For broadening schemas, a major instructional strategy is to systematically manipulate superficial features in problems by moving from variations in the cover story to variations in more challenging superficial features while emphasizing the structural features that define the problem type. Thus, transfer distance is gradually increased. Unfortunately, classroom instruction is typically limited to instruction on problem-solution methods, with variations in superficial features limited to cover stories. Little is done to broaden students’ schemas for problem types. SBI addresses both instructional components: building schemas and broadening schemas.

Prior Work Investigating SBI’s Potential

Even with systematic instructional design, schema broadening may be difficult to achieve (e.g., Bransford & Schwartz, 1999; Cooper & Sweller, 1987; Mayer, Quilici, & Moreno, 1999). Working with children in two age groups (10–12 years of age and 8–9 years of age), Chen (1999) demonstrated how varying problem features was more successful at helping older than younger students extract schemas and solve problems, thus raising questions about how to broaden schemas and promote mathematical problem solving among younger students. In addition, as is the case for much of the research on analogical problem solving and schema induction, Chen relied on single-session interventions without explicit instruction to prompt schema construction. As Quilici and Mayer (1996) noted, research is needed to examine whether explicit instruction and structured practice to help students develop schemas, rather than independent study of examples, may strengthen effects.

In a series of studies, Jitendra and colleagues have explored the potential of teacher-directed schema-based instruction as a method for promoting mathematical problem solving at the elementary grades. For example, Jitendra et al. (2007) tested the efficacy of this approach at the third-grade level, focusing on change, group, compare, and two-step problem types. They randomly assigned 88 students to schema-based strategy instruction or to a metacognitive planning condition. Instruction occurred in groups of 15–16 students for 8–9 weeks, 5 days per week, for 25 minutes per session. Six weeks after the end of treatment, significant effects favored the schema-based strategy condition on a word-problem posttest that mirrored the problem types used for instruction and on a state-administered test of mathematics performance.

In our own work, we have also relied on schema theory. Similar to Jitendra, we teach students to understand the underlying mathematical structure of the problem type, recognize problems as belonging to the problem type, and solve the problem type. In contrast to Jitendra, we incorporate an additional instructional component by explicitly teaching students to broaden their schema for problem types. The hope is that the addition of explicit transfer instruction will lead to more flexible and successful problem-solving performance. We refer to this approach as SBI.

In our first randomized control study (Fuchs et al., 2003) we separated the effects of (a) instruction on building the schema (i.e., understanding the underlying mathematical structure of the problem type, recognizing problems as belonging to the problem type, and solving the problem type) from (b) instruction designed to broaden the schema. The word-problem types targeted for instruction were more complex than had been studied to date with third graders, including word problems that involved finding half, step-up functions, two-step problems with pictographs, and shopping lists that required two- and three-step solutions. Third-grade classes were randomly assigned to teacher-designed word-problem instruction, experimenter-designed instruction on building the schema (i.e., understanding, recognizing, and solving the problem types), or experimenter-designed SBI on building and broadening those schemas. With the addition of experimenter-designed SBI, teachers explained how problem features such as format or vocabulary can make problems seem unfamiliar without modifying the problem type or the required solution methods. Teachers discussed examples emphasizing structural features of the problem type despite superficial features such as format or vocabulary differences. Next they provided practice in sorting novel problems in terms of structural features. They also reminded students to search novel problems for familiar structural features that would allow the students to identify the problem type. Results indicated that SBI (which included the schema-broadening activities) strengthened word-problem performance over teacher-designed instruction and over the experimenter-designed instruction on understanding, recognizing, and solving the problem types without the schema-broadening activities. 
Effects occurred on a far-transfer performance assessment that required students to solve multiple taught and untaught problem types within a highly novel and complex context that resembled real-life problem solving. Subsequent work (Fuchs, Fuchs, Finelli et al., 2004) has shown how SBI that addresses six superficial features is more effective than SBI that addresses three superficial features. Effect sizes favoring SBI ranged from 0.89 to 2.14 in a series of studies.

Why Incorporate Algebra to Represent the Underlying Structure of Problem Types?

Word problems that belong to a problem type share a common underlying structure. In the total problem type, for example, quantities are combined to make a larger amount. This underlying structure, an abstraction that generalizes across total problems, is concretely specified in the sample problems that fit the word-problem type. One strategy for promoting the abstract generalization is to explain the structure of the problem type while illustrating it with many examples (as just described). Another potentially effective and complementary strategy for representing and clarifying the abstract structure of a problem type is to rely on an overarching equation by using letters and mathematical symbols to represent the defining features of the problem type: P1 + P2 = T (i.e., part 1 plus part 2 equals the total). Students use the overarching equation as a structure for generating algebraic equations that match the structure of a given problem. The hope is that this overarching equation, which represents the set of relations among the quantities within a problem type, can provide a scaffold by which students systematically analyze information presented in a word problem, thereby highlighting distinctions among different problem types, facilitating recognition of problems as belonging within a word-problem type, and enhancing word-problem performance.
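As a brief illustration, the sample total problems quoted earlier in this article can be written against the overarching equation. The substitutions below are an illustrative sketch rather than a reproduction of the instructional materials, with X marking the unknown total. The "2 times every day" in the second Francis and Ann problem is irrelevant information and therefore does not appear in the equation.

$$
\begin{aligned}
P1 + P2 &= T && \text{(overarching equation for the total problem type)}\\
6 + 3 &= X && \text{(Shelley has 6 apples; Robin has 3 apples)}\\
7 + 3 &= X && \text{(Francis has 7 cats; Ann has 3 dogs)}
\end{aligned}
$$

The cover stories differ, but both problems instantiate the same structure, which is the point of representing the problem type with a single equation.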

The idea of teaching students to translate word problems into algebraic equations is not new (Hawkes, Luby, & Touton, 1929; Paige & Simon, 1966; Stein, Silbert, & Carnine, 1997), but the use of algebraic equations to represent the structural, defining features of problem types offers promise not only for enhancing young students’ word-problem performance but also for promoting their emerging understanding of certain aspects of algebra. In this vein, we use the term algebra in a limited way to mean representing and reasoning about problem situations that contain unknowns (Izsak, 2000) and solving problems using the kinds of mathematical expressions found in algebra (Kiernan, 1992).1 For years, some (e.g., Davis, 1985, 1989; Kaput, 1995) have advocated incorporating algebra throughout the K–12 curriculum to add coherence and depth to school mathematics and to ease the transition to formal algebra. More recently, the Algebra Working Group (NCTM, 1997) further conceptualized an early focus on algebra, prompting the 2000 NCTM standards to encourage teachers to nurture students’ emerging knowledge of algebra beginning in kindergarten. As noted, in the present study we use algebra in a limited way: to represent and solve problems and to reason about relations among operations (e.g., Izsak, 2000; Kiernan, 1992).

Focusing on algebra early may be important given research on children’s algebraic reasoning, which reveals misconceptions about the equal sign (Jacobs, Franke, Carpenter, Levi, & Battey, 2007; McNeil & Alibali, 2005; Saenz-Ludlow & Walgamuth, 1998; Seo & Ginsburg, 2003) and difficulty with standard and nonstandard open equations (Carpenter & Levi, 2000; Knuth, Stephens, McNeil, & Alibali, 2006). Such difficulty, moreover, has been shown to compromise word-problem solution accuracy (Carpenter, Franke, & Levi, 2003; Powell & Fuchs, in press). Studies demonstrate how teacher dialogue (Baroody & Ginsburg, 1983; Blanton & Kaput, 2005; Saenz-Ludlow & Walgamuth, 1998) or explicit instruction about the meaning of the equal sign can promote relational understanding (McNeil & Alibali, 2005) and accuracy in solving nonstandard equations (Powell & Fuchs, in press; Rittle-Johnson & Alibali, 1999).

Other research on early algebra suggests that algebraic reasoning can occur in conjunction with arithmetic reasoning (e.g., Brizuela & Schliemann, 2004; Kaput & Blanton, 2001). For example, Schliemann, Goodrow, and Lara-Roth (2001) conducted a longitudinal study in grades 2–4. With periodic lessons, they promoted shifts from thinking about relations among particular numbers toward thinking about relations among sets of numbers and from computing numerical answers to representing relations among variables. In a similar way, Warren, Cooper, and Lamb (2006) explored the use of function tables with 9-year-olds, focusing on input and output numbers to help children extract the algebraic nature of the arithmetic involved; in four lessons, the functional thinking of 9-year-olds improved.

In the present study we took a different approach in an attempt to promote second graders’ emerging understanding about algebra. We introduced overarching equations to represent the defining features (i.e., the set of relations among the quantities within problem types) of three problem types as a scaffold for highlighting structural distinctions among the problem types, with which students could systematically analyze information presented in word problems. We taught students to use the overarching equations to identify problem types and generate equations representing the relations among the known and unknown information presented in word problems. In this way, we hoped to promote emerging knowledge of algebra about problem situations that contain unknown variables, with students solving problems using mathematical representations related to algebra.

Purpose of the Present Study

To review, previous work has demonstrated the efficacy of SBI in third grade. In the present study, we extended this program of research by focusing on younger students (i.e., second graders), using three problem types derived from the second-grade curriculum. In addition, the instructional methods extended prior SBI studies with typically developing students by incorporating algebraic equations to represent the underlying structure of the three word-problem types. Moreover, to further extend this line of work, we not only assessed effects on word-problem performance but also described the work students generated as they solved those word problems to gain insight into their ability to represent the underlying structure of word problems using algebraic equations.

Method

Participants

In five schools in a southeastern urban school district in the United States, 19 second-grade teachers (all female) were randomly assigned to SBI (n = 10) or control (i.e., business-as-usual; n = 9) word-problem instruction. Soon after random assignment, one SBI teacher was reassigned to a different grade level, leaving nine teachers in each condition. Teachers were comparable as a function of study condition on years teaching but not on race (see Table 1). However, because the differences in race were small and given that we relied on random assignment, we do not consider this problematic.

Table 1.

Demographics and Screening Data by Study Condition (n = 270)

| Variable | SBI % (n) | SBI M (SD) | Control % (n) | Control M (SD) | χ2 | p (χ2) | F | p (F) |
|---|---|---|---|---|---|---|---|---|
| Teacher demographics: | | | | | | | | |
| Male | .0 (0) | | .0 (0) | | | | | |
| Race: | | | | | 10.33 | <.001 | | |
| Caucasian | 77.7 (7) | | 55.5 (5) | | | | | |
| African-American | 22.2 (2) | | 33.3 (3) | | | | | |
| Asian | .0 (0) | | 11.1 (1) | | | | | |
| Years teaching | | | | | | | .14 | .72 |
| Student demographics: | | | | | | | | |
| Male | 49.6 (65) | | 48.2 (67) | | .05 | .82 | | |
| Race: | | | | | 3.89 | .42 | | |
| Caucasian | 29.0 (38) | | 35.3 (49) | | | | | |
| African-American | 61.1 (80) | | 57.6 (80) | | | | | |
| Hispanic | 3.1 (4) | | 4.3 (6) | | | | | |
| Asian | 6.9 (9) | | 2.9 (4) | | | | | |
| Subsidized lunch | 60.3 (79) | | 66.2 (92) | | 1.01 | .32 | | |
| English language learner | 3.1 (4) | | .0 (0) | | 4.31 | .04 | | |
| Teacher ranking: | | | | | .72 | .40 | | |
| Below grade level | 24.4 (32) | | 20.1 (28) | | | | | |
| Student pretest: | | | | | | | | |
| WRAT-Arithmetic | | 5.7 (1.88) | | 5.5 (1.90) | | | .69 | .41 |
| Single-digit story problems | | 8.2 (3.84) | | 8.4 (3.87) | | | .23 | .63 |

Note.—SBI: n = 9 teachers, 131 students; control: n = 9 teachers, 139 students.

Student participants were the 270 children in these classrooms for whom we had obtained parental consent and who were present for both pretesting and posttesting. We began the study with 301 students in the fall and experienced 10% attrition during the school year. Students who moved before the end of the study were comparable to those who completed in terms of demographics and the descriptive data we collected on incoming mathematics performance. In each classroom, 10 to 20 students were represented in the database. See Table 1 for student demographics, descriptive data on the students’ incoming mathematics performance, and teacher ratings of students’ mathematics performance by study condition. We applied chi-square (χ2) analysis to categorical data and analysis of variance (ANOVA) to continuous data. ELL status differed as a function of study condition, although the difference between the conditions was small (3.1% vs. 0%). Incoming computation (Wide Range Achievement Test 3—Arithmetic [WRAT]; Wilkinson, 1993) and word-problem (Single-Digit Story Problems; Jordan & Hanich, 2000) performance were comparable between conditions. These two measures were used for descriptive purposes only. Additionally, teacher ratings of students’ mathematics performance as below grade level or at/above grade level were comparable as a function of condition.
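For readers who wish to check the categorical comparisons, the following minimal sketch computes a Pearson chi-square statistic from a table of counts. It is an illustration, not the authors' analysis code. The function name `pearson_chi_square` is hypothetical, and the use of an uncorrected statistic (no continuity correction) is an assumption, since the article does not state which form was used. The male counts (65 and 67) come from Table 1, and the non-male counts are derived from the group sizes in the table note (131 and 139).

```python
def pearson_chi_square(table):
    """Pearson chi-square statistic (no continuity correction) for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of row and column variables.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    return statistic


# Rows: SBI, control. Columns: male, not male.
# Male counts are from Table 1; the second column is derived (assumption)
# from the group sizes reported in the table note.
sex_by_condition = [[65, 131 - 65], [67, 139 - 67]]
chi2 = pearson_chi_square(sex_by_condition)
print(round(chi2, 2))  # 0.05
```

Under these assumptions the statistic rounds to .05, which agrees with the value reported for student sex in Table 1.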

Word-Problem Instruction

Instruction shared by all conditions

Control and SBI teachers relied primarily on the basal text Houghton Mifflin Math (Greenes et al., 2005) to guide their mathematics instruction. We chose the problem types for SBI from this curriculum to ensure that control students received word-problem instruction relevant to the study.

Commonalities and distinctions between control group instruction and SBI

Based on an analysis of Houghton Mifflin Math (Greenes et al., 2005) and teacher reports, key distinctions between the control and SBI conditions were as follows: First, control group instruction emphasized a metacognitive approach to solving word problems via guiding questions to help students understand, plan, solve, and reflect on the content of word problems; SBI did not employ this general set of metacognitive strategies. Second, in contrast to SBI, there was no attempt in the control condition to broaden the students’ schemas for these problem types in order to address transfer. Third, control group instruction provided more practice in applying problem-solution rules. Fourth, control group instruction provided greater emphasis on computational requirements for problem solution. Fifth, control group instruction focused less on problems with missing information in the first or second position of the addition/subtraction equations that represented the underlying structure of problems.

Important commonalities between the control and SBI conditions were as follows: instruction addressed one problem type at a time, instruction focused on the concepts underlying the problem type, instruction provided students with explicit steps for arriving at solutions to the problems presented in the narrative, and instruction relied on worked examples, guided group practice, and independent work with checking. Another commonality between the control group instruction and SBI was that research assistants delivered a 3-week introduction unit (two lessons per week) to all SBI and control group classrooms. This introduction unit was designed to teach foundational skills and general strategies for solving word problems. It addressed strategies for checking word-problem work, including the reasonableness of answers; counting strategies for adding and subtracting simple number combination problems; strategies for checking computational work; labeling word-problem answers with mathematical symbols, monetary signs, and words; and strategies for deriving information from pictures and graphs. This introduction unit, common across both conditions, did not rely on SBI. The six lessons used worked examples with explicit whole-class instruction, dyadic practice, independent work, and discussion of challenge problems. Classroom teachers were present during lessons and assisted with discipline and answering student questions. Each lesson lasted 45–60 minutes.

SBI

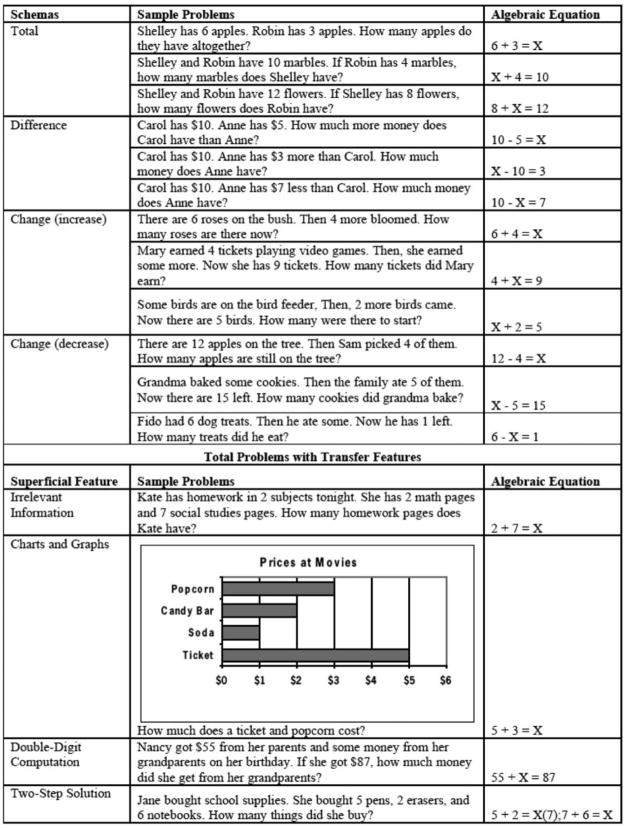

SBI lessons were incorporated within the teachers’ standard mathematics block. SBI did not constitute any teacher’s entire mathematics curriculum, but rather supplemented the teacher’s mathematics program while keeping total mathematics instruction time constant. Research assistants delivered three 4-week SBI units, each of which comprised eight lessons (i.e., 24 lessons across the three 4-week units). In addition, a 1-week review occurred at the start of January. Classroom teachers were present during lessons and assisted with discipline and answering student questions. Each lesson lasted 45–60 minutes. Each 4-week unit addressed one problem type, and subsequent units provided cumulative review of previous units. Three problem types (Riley, Greeno, & Heller, 1983) were addressed: total (or combine, in which small groups are combined into larger groups, reflecting magnitude aggregation), difference (or compare, in which two quantities are compared, reflecting magnitude comparisons), and change (in which an initial quantity increases or decreases over time, reflecting temporal issues). See Appendix Figure A1 for sample problems of each problem type.

Units 2–4 were structured in the same way. Lesson 1 addressed finding X when any of the three positions of a simple addition and subtraction algebraic equation is missing (i.e., a + b = c; x − y = z). We taught students to solve for X within the equations they generated by simply rewriting those equations so that X appeared on the right-hand side of the equations. As noted previously, we were interested in students’ ability to generate algebraic equations to represent the structure of word problems, not in students’ ability to solve for X. Lesson 2 introduced the new problem type for that unit by focusing on the conceptual underpinnings and defining features of the problem type, how the overarching equation represented the defining features of the problem type, and procedural strategies for problem solution for that problem type. Lessons 3 and 4 taught students to recognize four superficial features (that can make problems within the problem type appear novel but do not alter the problem type or the problem-solution methods): irrelevant information, relevant information presented in pictures or graphs, two-digit numbers, and the combining of problem types. Lessons 5 and 6 taught students to solve problems when the missing information was in the first or second position of the overarching equation that represented that problem type’s defining underlying structure. Lessons 7 and 8 integrated and reviewed the unit’s content.
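The rewriting step in Lesson 1 can be illustrated with a generic sketch. The rewrites below are standard arithmetic inverses and are offered as an assumption about what such rewriting might look like; they are not transcribed from the lesson scripts. Uppercase X denotes the unknown, whereas the lowercase letters follow the article's notation for known quantities in a + b = c and x − y = z.

$$
\begin{aligned}
X + b = c \;&\Rightarrow\; c - b = X\\
a + X = c \;&\Rightarrow\; c - a = X\\
X - y = z \;&\Rightarrow\; z + y = X\\
x - X = z \;&\Rightarrow\; x - z = X
\end{aligned}
$$

When the unknown is in the third position (a + b = X or x − y = X), the equation already has X alone on the right-hand side and no rewriting is needed.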

The total problem type was addressed in Unit 2, the difference problem type in Unit 3, and the change problem type in Unit 4. At the beginning of the unit focusing on the total problem type, research assistants also taught students strategies that applied across the three problem types: to use the RUN strategy (i.e., Read the problem, Underline the question, and Name the problem type) and to identify and circle relevant information.

For total problems, the overarching equation was P1 + P2 = T (part 1 plus part 2 equals total). Students were taught to circle the kind of item being combined and important numerical values. Then they labeled numerical values as P1 (for part 1), P2 (for part 2), and T (for the total). Students designated the missing information (P1, P2, or T) with an X and created the algebraic equation representing the mathematical structure of that problem in the form of P1 + P2 = T. They then solved for X, labeled their answer, and checked their work. Difference and change problems followed an analogous procedure; however, the overarching equation representing the problem structure differed. Students were taught to represent the structure of difference problems with the overarching equation B − s = D (bigger quantity minus smaller quantity equals difference). As they read the narrative of a difference problem, they circled the bigger amount (labeled B), the smaller amount (labeled s), and the difference between amounts (labeled D). Students were taught to represent the problem structure of change problems with the overarching equation St +/− C = E (starting amount plus or minus the change amount equals end amount). As they read the narrative of a change problem, they circled the starting amount (labeled St), the change amount (labeled C), the end amount (labeled E), and specified whether that amount increased (labeled with a + next to the C for addition) or decreased (labeled with a − next to the C for subtraction; see App. Fig. A1 for examples). Using these procedures, we helped SBI students develop schemas for the three problem types in terms of whether quantities are combined, compared, or changed. Then we taught SBI students to categorize problems as total, difference, or change, and to represent the structure of each problem type with an overarching equation.
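The procedure described above (label the quantities, mark the missing one with X, write the equation in the form of the overarching equation, and solve) can be sketched in code. This is an illustrative sketch only and was not part of the intervention. The names `TEMPLATES`, `build_equation`, and `solve_for_x` are hypothetical, and splitting change problems into separate "increase" and "decrease" keys is an assumption made for convenience.

```python
# Overarching equations from the text, stored as (first, operator, second, result).
# Change problems use + when the amount increases and - when it decreases.
TEMPLATES = {
    "total": ("P1", "+", "P2", "T"),
    "difference": ("B", "-", "s", "D"),
    "change_increase": ("St", "+", "C", "E"),
    "change_decrease": ("St", "-", "C", "E"),
}


def _check_one_unknown(values):
    """Require exactly one unknown position, marked with None."""
    if sum(v is None for v in values) != 1:
        raise ValueError("exactly one position must be unknown (None)")


def build_equation(problem_type, first, second, result):
    """Write the problem in the form of its overarching equation, with X for the unknown."""
    _check_one_unknown((first, second, result))
    operator = TEMPLATES[problem_type][1]
    a, b, c = ("X" if v is None else str(v) for v in (first, second, result))
    return f"{a} {operator} {b} = {c}"


def solve_for_x(problem_type, first, second, result):
    """Return the value of the unknown position."""
    _check_one_unknown((first, second, result))
    adding = TEMPLATES[problem_type][1] == "+"
    if result is None:
        return first + second if adding else first - second
    if first is None:
        return result - second if adding else result + second
    # The second position is the unknown.
    return result - first if adding else first - result


# Shelley has 6 apples; Robin has 3 apples; the total is unknown.
print(build_equation("total", 6, 3, None))   # 6 + 3 = X
print(solve_for_x("total", 6, 3, None))      # 9
```

The same two functions cover unknowns in the first or second position, for example `build_equation("difference", None, 3, 4)` yields `X - 3 = 4`.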

In addition, as part of SBI, we explicitly taught students to anticipate superficial features that make problems appear novel even though those problems belong to the problem types they were learning. For each problem type, we addressed the following superficial features: irrelevant information, deriving relevant information from pictures and graphs, two-digit computation, and combining problem types (requiring two-step solutions). Identifying irrelevant information taught students to recognize and ignore extraneous numerical information not needed to solve the problem. Deriving information from pictures and graphs taught students to look for relevant information beyond the word-problem narratives. Incorporating two-digit numbers taught students to apply analogous solution methods when problems involved larger quantities. Combining problem types taught students to look for multiple problem types within a single word-problem narrative; the multiple problems could represent the same or different problem types (e.g., two total problems; one total problem combined with a difference problem). See Appendix Figure A1 for examples of total problems that were “disguised” by each superficial feature.

SBI activities

Each SBI lesson comprised four activities. The first was whole-class instruction, during which research assistants reviewed concepts taught during the preceding lesson and introduced new material. Research assistants relied on instructional posters, visuals, manipulatives, and role playing; they also modeled problem-solving strategies and provided guided practice while eliciting student participation. Whole-class instruction lasted 20–25 minutes. During each lesson research assistants guided students through three to four word problems with decreasing support.

The second activity was partner work, during which students worked in dyads to complete four word problems. Two problems were similar to problems taught in that lesson, and two problems reviewed previously learned concepts. Dyads comprised a stronger and a weaker student. The stronger student often took the lead in reading the word problems; however, students worked together to solve the problems. Partner work lasted 10–15 minutes, after which students checked their work against an answer key and corrected mistakes.

The third activity was individual practice. Each student independently completed 10 computation problems (five single-digit and five double-digit) and one word problem. The research assistant scored each worksheet, which was worth a maximum of 20 points. After receiving their scores, students colored the number of points they earned on a bar chart, with each bar representing one session. Students could thereby monitor their progress. Individual practice lasted 10–15 minutes.

The final activity was a challenge problem, in which students applied what they had learned in SBI to more complicated problems. Students completed the challenge problem independently and then shared solution methods with the class. Research assistants led the discussion, with the challenge problem activity lasting 5 minutes.

Delivery

Three research assistants taught Unit 1, the introductory unit that all 18 classrooms received. Two research assistants taught Units 2–4 (one taught four SBI classes and the other taught five SBI classes), which constituted the SBI program. All sessions were scripted to ensure consistency of information; however, scripts were studied, not read, in order to preserve teaching authenticity. Research assistants met biweekly to discuss upcoming lessons and to problem solve about implementation issues. At the end of the study, teachers reported the number of minutes per week they spent on math (including time on this project). Means for the control and SBI conditions, respectively, were 268.03 (SD = 52.67) and 279.12 (SD = 49.83), not a statistically significant difference.

Treatment fidelity

Every session was audiotaped, and 20% of the recordings were sampled to represent research assistant, lesson type, and unit comparably. Research assistants listened to sessions independently, noting essential components of each lesson using a fidelity checklist that had been prepared at the beginning of the study. Intercoder agreement was 96.9%. In Unit 1 (the introductory unit), the percentage of essential points addressed averaged 98.84 (SD = 2.26) for conventional classrooms and 98.54 (SD = 3.54) for SBI classrooms. For SBI instruction (Units 2–4), the percentage of points addressed averaged 99.35 (SD = 1.31).

Measures Used to Describe the Sample at Pretreatment

We used two measures to describe the sample at pretreatment (see the Participants section for further details). The WRAT (Wilkinson, 1993) assesses calculation skill: students have 10 minutes to write answers to calculation problems of increasing difficulty. Median reliability is .94 for 5 to 12 years of age. Single-digit story problems (Jordan & Hanich, 2000; adapted from Carpenter & Moser, 1984; Riley et al., 1983) comprises 14 word problems involving sums or minuends of nine or less. Each problem reflects a total, difference, change, or equality relationship. Credit is earned for correct mathematical answers. Alpha on this sample was .92.

Measures Used to Assess Treatment Effects

Word problems

We used two measures to assess word-problem performance: Second-Grade Vanderbilt Story Problems (VSP; Fuchs & Seethaler, 2008) and the Iowa Test of Basic Skills Level 8—Problem Solving and Data Interpretation (ITBS; Hoover, Hieronymous, Dunbar, & Frisbie, 1993). None of the problems from either measure had been used for instruction. In Table 2 we list problem features by measure and item. As shown, the measures differed in two major dimensions. First, VSP included items with missing information in all three positions of equations, whereas ITBS excluded items with missing information in the first or second position. This difference made some VSP problems more challenging than ITBS. Second, VSP only included word problems representing total, difference, and change problem types, whereas ITBS also included word problems representing multiplication, division, and fraction concepts. Thus, some ITBS problems were more challenging than VSP problems. Another key difference between the measures was the response format, with VSP requiring constructed responses and ITBS requiring multiple-choice responses.

Table 2.

Descriptions of Problems on VSP and ITBS

| Measure | Problem | Description | Accuracy |
|---|---|---|---|
| VSP | 1 | Two-step: total X3; difference X2 | 43/88 (48.9%) |
| | 2 | One-step: change X3 | 79/89 (88.8%) |
| | 3 | Two-step: total X3; change X1 | 58/87 (66.7%) |
| | 4 | One-step: difference X3 | 42/88 (47.7%) |
| | 5 | One-step: total X1 | 42/87 (48.3%) |
| | 6 | One-step: change X2 | 71/87 (81.6%) |
| | 7 | One-step: change X1 | 44/85 (51.8%) |
| | 8 | One-step: total X3 | 82/86 (95.3%) |
| | 9 | One-step: difference X1 | 76/84 (90.5%) |
| | 10 | One-step: total X2, graph, irrelevant information in graph | 38/80 (47.5%) |
| | 11 | One-step: change X2, picture, irrelevant information in picture | 32/83 (38.6%) |
| | 12 | One-step: difference X1, graph, irrelevant information in picture and narrative | 43/74 (58.1%) |
| | 13 | One-step: change X3, graph, irrelevant information in graph | 66/80 (82.5%) |
| | 14 | One-step: total X1, graph, irrelevant information in graph | 44/81 (54.3%) |
| | 15 | One-step: difference X2, graph, irrelevant information in graph and narrative | 16/78 (20.5%) |
| | 16 | Two-step: total X3, change X1, graph, irrelevant information in graph | 20/75 (26.7%) |
| | 17 | One-step: total X3, graph, irrelevant information in graph | 60/77 (77.9%) |
| | 18 | One-step: difference X3, graph, irrelevant information in graph and narrative | 33/75 (44.0%) |
| ITBS | 1 | One-step: total X3 | |
| | 2 | One-step: change X3 | |
| | 3 | One-step: change X3 | |
| | 4 | Two-step: total X3, total X3 | |
| | 5 | Two-step: total X3, change X3 | |
| | 6 | One-step: repeated addition or multiplication | |
| | 7 | One-step: division | |
| | 8 | Two-step: half, total X3 | |
| | 9 | One-step: repeated addition or multiplication | |
| | 10 | One-step: change X3, irrelevant information in narrative | |
| | 11 | Two-step: total X3, total X3 | |
| | 12 | One-step: total X3, irrelevant information in narrative | |
| | 13 | Two-step: total X3, change X3 | |
| | 14 | One-step: division X3 | |
| | 15 | Two-step: total X3, difference X3 | |
| | 16 | One-step: division | |
| | 17 | One-step: difference X3 | |
| | 18 | Two-step: total X3, change X3 | |
| | 19 | One-step: repeated addition or multiplication | |
| | 20 | Graph reading | |
| | 21 | One-step: total X3, graph, irrelevant information in graph | |
| | 22 | Two-step: total X3, repeated halving, graph, irrelevant information in graph | |
| | 23 | Graph reading | |
| | 24 | One-step: total X3, graph, irrelevant information in graph | |
| | 25 | One-step: difference X3, graph, irrelevant information in graph | |
| | 26 | Graph reading | |
| | 27 | Graph reading | |
| | 28 | Graph reading | |
| | 29 | One-step: difference X3, graph, irrelevant information in graph | |
| | 30 | One-step: difference X3, graph, irrelevant information in graph | |

Note.—VSP is Second-Grade Vanderbilt Story Problems (Fuchs & Seethaler, 2008); ITBS is the Iowa Test of Basic Skills Level 8—Problem Solving and Data Interpretation (ITBS: Hoover, Hieronymous, Dunbar, & Frisbie, 1993). Accuracy is the number of students who used an algebraic equation correctly to represent the structure of the problem/number of students who represented the word problem using an algebraic equation; accuracy was coded only for the VSP.

VSP comprises 18 word problems that sample total, difference, and change problem types comparably, with and without the four superficial features and with missing information occurring in any of the three positions of the overarching equation representing the structure of the problem types. Credit is earned for correct math and labels in answers. Our primary analysis was based on the total score (although we provide exploratory results by problem type, by position of missing information, and by number of math steps required to solve the problem). Alpha on this sample was .93.

Level 8 of ITBS includes 30 word problems organized in three sections. In the first section, students answer eight problems that the tester reads aloud; students do not see the written problems. Each problem is read twice (for this section, problems are not reread upon student request) and students have 30 seconds to respond. For the eight problems in the second section, which are structured similarly to those in the first section, students see the written version as the tester reads each problem aloud. Students have 30 seconds to solve each problem. In the third section, which includes 14 problems that require students to find relevant information located in graphs and pictures, the tester also reads problems aloud while students see the written version. Alpha on this sample was .86.

Solving simple equations

With find X (Fuchs & Seethaler, 2008), students solve algebraic equations (e.g., a + b = c; x − y = z) that vary the position of X across all three positions. The tester demonstrates how to find X with a sample problem. Students are provided between 5 and 10 minutes to complete the eight test items. Alpha on this sample was .92.
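The item format described above can be illustrated with a minimal sketch. The function name `find_x`, its parameters, and the sample numbers are hypothetical; they are not items from the measure, and the sketch only shows what it means for X to occupy the first, second, or third position of an addition or subtraction equation.

```python
from typing import Optional


def find_x(first: Optional[int], second: Optional[int],
           result: Optional[int], op: str = "+") -> int:
    """Solve `first op second = result` when exactly one slot is None (the X)."""
    if op not in ("+", "-"):
        raise ValueError("op must be '+' or '-'")
    if [first, second, result].count(None) != 1:
        raise ValueError("exactly one position must be unknown")
    if result is None:
        # X in the third position: compute the equation as written.
        return first + second if op == "+" else first - second
    if first is None:
        # X in the first position: undo the operation applied to X.
        return result - second if op == "+" else result + second
    # X in the second position: for subtraction, X is what was taken away.
    return result - first if op == "+" else first - result


# Hypothetical examples, one per position of X.
print(find_x(None, 4, 9))        # X + 4 = 9
print(find_x(3, None, 9))        # 3 + X = 9
print(find_x(3, 4, None))        # 3 + 4 = X
print(find_x(9, None, 5, "-"))   # 9 - X = 5
```

Note that the second-position subtraction case (for example, 9 − X = 5) is the only one in which the unknown is recovered by subtracting the result from the first number, not by inverting the operation applied to X.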

Representing word problems with algebraic equations

To gain insight into students’ emerging knowledge of algebra, in terms of their ability to represent and reason about problem situations that contain unknowns (Izsak, 2000) and solve problems using the kinds of mathematical expressions found in algebra (Kiernan, 1992), we coded each VSP item in terms of whether students represented word problems using algebraic equations. If this was the case, we coded whether the algebraic equation correctly represented the structure of the problem and whether students included “X =” in their answers.

Data Collection

Students were pretested on single-digit story problems and WRAT, pretested and posttested on the VSP and find X, and posttested on the ITBS. At pretesting and posttesting, measures were administered in three sessions. Research assistants, who were unfamiliar with the students they tested, followed an administration script that prompted them to read each problem aloud and allowed students adequate response time. They reread items upon student request. Students were instructed not to work ahead. Prior to testing, all SBI posters and teaching materials were removed from classrooms and, during testing, research assistants in no way prompted students to rely on SBI strategies or reminded students about SBI. Pretest data were collected in October in a whole-class format (except for small-group administrations for make-ups). SBI began the second week of October and ran for 16 weeks through the final week of February. Posttesting occurred during the first 2 weeks of March using the same format as pretesting. Two research assistants scored all protocols independently. Discrepancies in scoring and in data entry were resolved through a review of the raw data, with 100% final accuracy.

Results

Table 3 displays descriptive statistics for (a) pretest, posttest, and adjusted posttest scores by study condition on VSP and find X and (b) posttest scores by study condition on ITBS. In Table 4 we display intraclass correlations at pretreatment and posttreatment, which show that the effect for classroom clustering explained between .1% and 11.7% of the variance at pretreatment (all significant except find X) and between 13.2% and 22.1% of the variance at posttreatment (all significant). In light of these intraclass correlations and because random assignment occurred at the classroom level, data were analyzed using a two-level hierarchical linear model to account for classroom- and student-level effects. Table 4 shows results for pretreatment analyses on study condition comparability and results for the effects of study condition on word-problem outcomes. To quantify the magnitude of the difference between study conditions, we calculated Hedges’s g to provide an estimate of the size of the effect while controlling for covariates and the hierarchical structure of the model.
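For readers less familiar with the notation in Table 4, the posttest model can be sketched as a conventional random-intercept specification. This sketch is inferred from the coefficient labels in Table 4 (B0, B1, γ00, γ01, γ10, U0); the residual terms, the fixed pretest slope, and the variance-ratio form of the intraclass correlation are standard conventions assumed here and are not quoted from the analysis itself.

```latex
\begin{align*}
\text{Level 1 (student $i$ in classroom $j$):}\quad
  & Y_{ij} = B_{0j} + B_{1j}\,\mathrm{Pretest}_{ij} + r_{ij} \\
\text{Level 2 (classroom $j$):}\quad
  & B_{0j} = \gamma_{00} + \gamma_{01}\,\mathrm{Condition}_{j} + u_{0j} \\
  & B_{1j} = \gamma_{10} \\
\text{Intraclass correlation:}\quad
  & \mathrm{ICC} = \frac{\tau_{00}}{\tau_{00} + \sigma^{2}},
  \qquad \tau_{00} = \operatorname{Var}(u_{0j}),\quad \sigma^{2} = \operatorname{Var}(r_{ij})
\end{align*}
```

Under this reading, Condition is coded 0 for control and 1 for SBI (as stated in the note to Table 4), so γ01 is the covariate-adjusted difference between conditions. For pretest outcomes and for ITBS, which had no pretest, the pretest term would be omitted.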

Table 3.

Pretest Performance, Posttest Performance, and Adjusted Posttest Performance by Study Condition

| Variable | SBI, M (SD) | Control, M (SD) |
|---|---|---|
| VSP across problems: | | |
| Pretest | 10.76 (2.83) | 9.99 (2.94) |
| Posttest | 21.73 (4.31) | 17.18 (4.28) |
| Adjusted posttest | 21.78 (3.33) | 17.17 (3.44) |
| Find X: | | |
| Pretest | 1.54 (.77) | 1.83 (.77) |
| Posttest | 5.29 (1.25) | 2.68 (1.25) |
| Adjusted posttest | 5.34 (.62) | 2.67 (.74) |
| ITBS: | | |
| Pretest | NA | NA |
| Posttest | 16.14 (3.26) | 16.93 (2.54) |
| Adjusted posttest | NA | NA |
| VSP total problems: | | |
| Pretest | 2.66 (.70) | 2.59 (.75) |
| Posttest | 5.35 (1.27) | 4.23 (1.01) |
| Adjusted posttest | 5.39 (.88) | 4.24 (.77) |
| VSP difference problems: | | |
| Pretest | 2.32 (.66) | 2.14 (.61) |
| Posttest | 4.75 (1.06) | 3.85 (.99) |
| Adjusted posttest | 4.77 (.68) | 3.85 (.73) |
| VSP change problems: | | |
| Pretest | 3.45 (1.00) | 3.18 (.99) |
| Posttest | 6.23 (1.22) | 5.23 (1.44) |
| Adjusted posttest | 6.23 (.94) | 5.24 (1.12) |
| X1 problems: | | |
| Pretest | 2.10 (.64) | 1.84 (.59) |
| Posttest | 4.25 (.92) | 3.33 (.86) |
| Adjusted posttest | 4.26 (.61) | 3.33 (.65) |
| X2 problems: | | |
| Pretest | 2.39 (.85) | 2.15 (.82) |
| Posttest | 5.02 (1.27) | 4.21 (1.40) |
| Adjusted posttest | 5.04 (.93) | 4.21 (1.09) |
| X3 problems: | | |
| Pretest | 3.67 (.81) | 3.64 (.75) |
| Posttest | 7.16 (1.39) | 5.57 (1.27) |
| Adjusted posttest | 7.19 (1.05) | 5.59 (.97) |
| One-step problems: | | |
| Pretest | 8.16 (2.05) | 7.64 (2.12) |
| Posttest | 16.43 (3.53) | 13.11 (3.37) |
| Adjusted posttest | 16.47 (2.69) | 13.11 (2.76) |
| Two-step problems: | | |
| Pretest | 2.60 (.92) | 2.36 (.87) |
| Posttest | 5.31 (.81) | 4.07 (1.01) |
| Adjusted posttest | 5.32 (.57) | 4.06 (.59) |

Note.—SBI: n = 9 teachers, 131 students; control: n = 9 teachers, 139 students. VSP is the Grade 2 Vanderbilt Story Problems Test; ITBS is the Iowa Test of Basic Skills; X1, X2, and X3 are, respectively, VSP problems with missing information in the first, second, and third positions of algebraic equations; one-step is one-step VSP problems; two-step is two-step VSP problems.

Table 4.

Hierarchical Linear Model for Variables Predicting Pretest and Posttest Scores and Intraclass Correlations (n = 270)

| Variable | B (Pre) | B (Post) | SE B (Pre) | SE B (Post) | p-Value (Pre) | p-Value (Post) | ICC (Pre) | ICC (Post) | p-Value (U0) (Pre) | p-Value (U0) (Post) |
|---|---|---|---|---|---|---|---|---|---|---|
| VSP: across problems | | | | | | | .101 | .213 | <.001 | <.001 |
| Intercept, B0: Intercept, γ00 | 10.08 | 10.07 | .93 | 1.16 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .82 | 4.07 | 1.32 | 1.42 | .546 | .012 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .71 | NA | .06 | NA | <.001 | | | | |
| ITBS | | | | | | | NA | .153 | NA | <.001 |
| Intercept, B0: Intercept, γ00 | NA | 17.02 | NA | .88 | NA | <.001 | | | | |
| Intercept, B0: Condition, γ01 | NA | −.80 | NA | 1.25 | NA | .532 | | | | |
| Find X | | | | | | | .001 | .215 | .414 | <.001 |
| Intercept, B0: Intercept, γ00 | 1.83 | 2.16 | .24 | .38 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | −.20 | 2.69 | .35 | .52 | .566 | <.001 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .33 | NA | .06 | NA | <.001 | | | | |
| Exploratory analyses: | | | | | | | | | | |
| VSP: Total problems | | | | | | | .065 | .192 | .004 | <.001 |
| Intercept, B0: Intercept, γ00 | 2.61 | 2.86 | .23 | .33 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .09 | 1.11 | .33 | .40 | .792 | .015 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .53 | NA | .07 | NA | <.001 | | | | |
| VSP: Difference problems | | | | | | | .042 | .136 | .029 | <.001 |
| Intercept, B0: Intercept, γ00 | 2.15 | 2.58 | .20 | .31 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .21 | .81 | .29 | .39 | .487 | .053 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .60 | NA | .07 | NA | <.001 | | | | |
| VSP: Change problems | | | | | | | .117 | .196 | <.001 | <.001 |
| Intercept, B0: Intercept, γ00 | 3.21 | 3.39 | .32 | .37 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .29 | .83 | .45 | .45 | .532 | .083 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .58 | NA | .06 | NA | <.001 | | | | |
| VSP: X1 | | | | | | | .054 | .174 | .010 | <.001 |
| Intercept, B0: Intercept, γ00 | 1.87 | 2.49 | .19 | .27 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .29 | .82 | .27 | .35 | .301 | .031 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .45 | NA | .06 | NA | <.001 | | | | |
| VSP: X2 | | | | | | | .106 | .197 | <.001 | <.001 |
| Intercept, B0: Intercept, γ00 | 2.17 | 2.96 | .27 | .35 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .25 | .70 | .39 | .46 | .524 | .147 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .58 | NA | .07 | NA | <.001 | | | | |
| VSP: X3 | | | | | | | .059 | .207 | .007 | <.001 |
| Intercept, B0: Intercept, γ00 | 3.66 | 3.09 | .25 | .40 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .04 | 1.58 | .36 | .47 | .907 | .004 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .68 | NA | .06 | NA | <.001 | | | | |
| VSP: one-step problems | | | | | | | .082 | .221 | <.001 | <.001 |
| Intercept, B0: Intercept, γ00 | 7.70 | 7.99 | .67 | .96 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .58 | 3.01 | .96 | 1.18 | .555 | .022 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .67 | NA | .06 | NA | <.001 | | | | |
| VSP: two-step problems | | | | | | | .101 | .132 | <.001 | <.001 |
| Intercept, B0: Intercept, γ00 | 2.39 | 2.96 | .29 | .29 | <.001 | <.001 | | | | |
| Intercept, B0: Condition, γ01 | .25 | 1.15 | .41 | .35 | .557 | .005 | | | | |
| Pretest, B1: Intercept, γ10 | NA | .47 | NA | .06 | NA | <.001 | | | | |

Note.—Pretest model comparison tests: VSP across problems: χ2(1, n = 270) = 0.38, p > .500; find X: χ2(1, n = 270) = 0.34, p > .500; VSP total problems: χ2(1, n = 270) = 0.07, p > .500; VSP difference problems: χ2(1, n = 270) = 0.50, p = .500; VSP change problems: χ2(1, n = 270) = 0.40, p > .500; VSP X1 problems: χ2(1, n = 270) = 1.10, p > .295; VSP X2 problems: χ2(1, n = 270) = 0.42, p > .500; VSP X3 problems: χ2(1, n = 270) = 0.01, p > .500; VSP one-step problems: χ2(1, n = 270) = 0.36, p = .500; VSP two-step problems: χ2(1, n = 270) = 0.36, p > .500. Posttest model comparison tests (pretest only covariate models compared to full, i.e., pretest plus condition models): VSP across problems: χ2(1, n = 270) = 6.75, p < .009; ITBS (unconditional model compared to full model since ITBS was not given at pretest): χ2(1, n = 270) = 0.40, p > .500; find X: χ2(1, n = 270) = 16.07, p < .001; VSP total problems: χ2(1, n = 270) = 6.22, p = .012; VSP difference problems: χ2(1, n = 270) = 3.84, p = .047; VSP change problems: χ2(1, n = 270) = 3.14, p = .073; VSP X1 problems: χ2(1, n = 270) = 4.83, p = .026; VSP X2 problems: χ2(1, n = 270) = 2.17, p = .137; VSP X3 problems: χ2(1, n = 270) = 8.74, p = .003; VSP one-step problems: χ2(1, n = 270) = 5.48, p < .018; VSP two-step problems: χ2(1, n = 270) = 8.40, p = .004. 0 = control (n = 139 students; 9 classrooms), 1 = treatment (n = 131 students; 9 classrooms). VSP is the Grade 2 Vanderbilt Story Problems Test; ITBS is the Iowa Test of Basic Skills; X1, X2, and X3 are, respectively, VSP problems with missing information in the first, second, and third positions of algebraic equations; one-step is one-step VSP problems; two-step is two-step VSP problems.

Pretreatment Comparability

At pretest there were no significant differences between groups on the find X measure (g = −.07), as was the case for the VSP overall score (g = .12) regardless of how we considered VSP performance (for total problems, g = .05; difference problems, g = .11; change problems, g = .13; problems with missing information in the first position of the algebra equation, g = .17; problems with missing information in the second position, g = .12; problems with missing information in the third position, g = .02; one-step problems, g = .11; two-step problems, g = .11).

Student Learning as a Function of Study Condition: Word Problems

Table 4 shows the results of HLM analyses on word-problem measures at posttest with the pretest scores used as a student-level covariate for VSP. In terms of word-problem skill, as indexed on the overall VSP score, SBI students performed reliably better than control students (g = .46). On ITBS, however, the effect for study condition was not significant.

We also conducted exploratory analyses on the VSP performance for the various problem types and problem formats sampled on the measure. We deem these analyses exploratory in light of the risk of committing a Type I error with eight problem types/formats to consider. In these exploratory analyses, the pattern of effects was similar to the overall VSP score across most of the ways in which we considered VSP performance. On total problems the effect was significant with g = .46 in favor of the SBI condition, and on difference and change problems the effect approached significance (g = .34 and .31, respectively, favoring the SBI condition). On problems with missing information in the first position of the algebra equation, the effect was significant (g = .42 in favor of the SBI condition), as was the case for problems with missing information in the third position (g = .56). However, for problems with missing information in the second position, the effect of study condition was not significant (p = .147; g = .26 in favor of the SBI condition). For one-step problems, the effect of study condition was significant (g = .44 in favor of SBI), as was the case for two-step problems (g = .48).

Solving Simple Equations and Representing Word Problems with Algebraic Equations

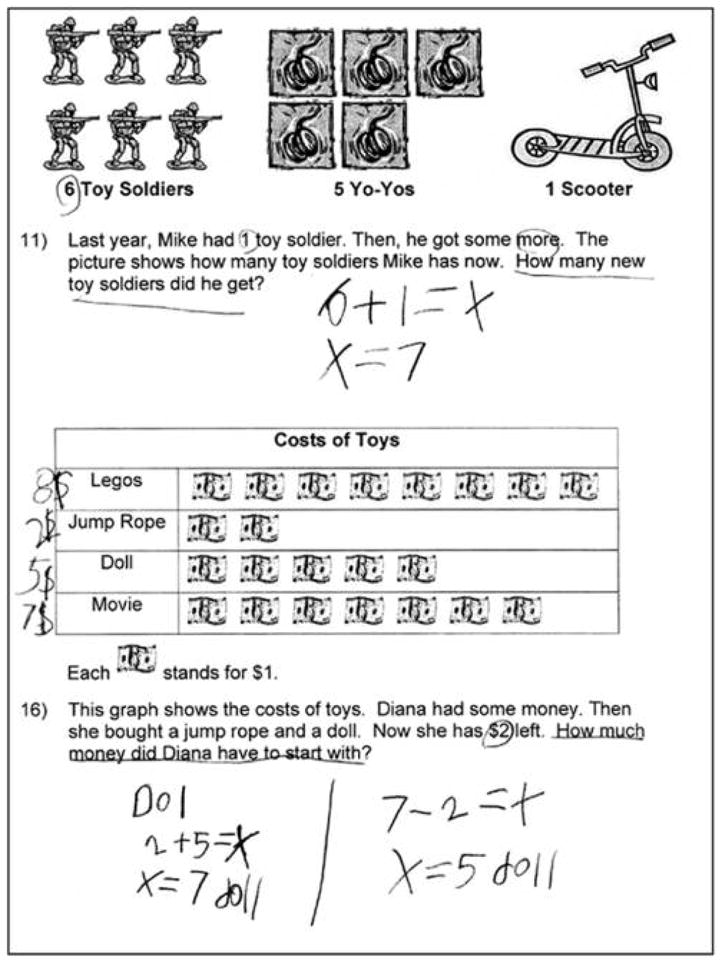

In terms of solving simple equations, SBI students reliably outperformed controls on find X (g = .87). With respect to representing word problems with algebraic equations, Figures 1 and 2 provide samples that illustrate correct and incorrect (respectively) ways in which students incorporated algebra into their problem solving. We coded each VSP item in three ways: (a) whether students represented the word problem using an algebraic equation; (b) if so, whether the algebraic equation correctly represented the structure of the problem; and (c) whether students included “X =” in their answer. There was no instance of any control student representing a word problem using an algebraic equation or including “X =” in an answer. So we present results descriptively, only for SBI students.

Fig. 1.

Examples of students’ VSP work: correct representations.

Fig. 2.

Examples of students’ VSP work: incorrect representations.

In terms of whether SBI students represented the structure of word problems using algebraic equations, 76 students (58.0%) represented the word problem using an algebraic equation most of the time (i.e., on 15–18 problems), 26 students (19.8%) represented the word problem using an algebraic equation some of the time (i.e., on 1–14 problems), and 29 students (22.1%) never represented the word problem using an algebraic equation. On average, SBI students represented the word problem using an algebraic equation for 11.2 of the 18 items (SD = 7.7). In Table 2 we show the number of SBI students who represented the word problem using an algebraic equation for each VSP item, which ranged from 74 (56.5%) to 89 (67.9%).

In terms of the accuracy of those equations, Table 2 shows (for each problem) the number of students who correctly represented the word problem using an algebraic equation divided by the number of students who represented the word problem using an algebraic equation. Accuracy was highest (95.3%) for a simple total problem (with missing information in the last position of the equation and with no graph or irrelevant information) and lowest (20.5%) for a complicated problem: a one-step difference problem with missing information in the second position (i.e., a more difficult problem type with the most difficult position for missing information) with irrelevant information in the narrative as well as in a graph. Accuracy varied considerably for one-step problems, but it did tend to be lower for problems with graphically or pictorially presented relevant and irrelevant information than for problems that restricted presentation of information to problem narratives.
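The accuracy figures summarized above can be recomputed directly from the VSP rows of Table 2. The following is a minimal sketch: the counts are transcribed from Table 2 and the SBI sample size from the note to Table 3, while the variable and function names are hypothetical and chosen only for illustration.

```python
# VSP rows of Table 2: item -> (correct equations, students who wrote an equation).
VSP_EQUATIONS = {
    1: (43, 88), 2: (79, 89), 3: (58, 87), 4: (42, 88), 5: (42, 87),
    6: (71, 87), 7: (44, 85), 8: (82, 86), 9: (76, 84), 10: (38, 80),
    11: (32, 83), 12: (43, 74), 13: (66, 80), 14: (44, 81), 15: (16, 78),
    16: (20, 75), 17: (60, 77), 18: (33, 75),
}
N_SBI = 131  # number of SBI students, from the note to Table 3


def pct(numerator: int, denominator: int) -> float:
    """Return a percentage rounded to one decimal place."""
    return round(100.0 * numerator / denominator, 1)


# Accuracy per item: correct equations among students who wrote an equation.
accuracy = {item: pct(c, n) for item, (c, n) in VSP_EQUATIONS.items()}
best_item = max(accuracy, key=accuracy.get)
worst_item = min(accuracy, key=accuracy.get)

# Range of students who wrote any equation, as a share of all SBI students.
equation_users = [n for _, n in VSP_EQUATIONS.values()]
fewest, most = min(equation_users), max(equation_users)

print(f"highest accuracy: item {best_item} ({accuracy[best_item]}%)")
print(f"lowest accuracy: item {worst_item} ({accuracy[worst_item]}%)")
print(f"equation users per item: {fewest} ({pct(fewest, N_SBI)}%) "
      f"to {most} ({pct(most, N_SBI)}%)")
```

Run against the Table 2 counts, the sketch identifies item 8 (one-step total, X3) as the most accurately represented and item 15 (one-step difference, X2, with irrelevant information in graph and narrative) as the least, in line with the description in the text.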

In terms of the extent to which students solved problems using the kinds of mathematical expressions found in algebra, we coded the use of “X =” in students’ answers. Of the 131 SBI students, 71 students (54.2%) used “X =” in their answers most of the time (i.e., on 15–18 problems), 26 students (19.8%) used it in their answers some of the time (i.e., on 1–14 problems), and 34 students (26.0%) never used it in their answers. SBI students used “X =” in their answers for an average of 10.8 (SD = 7.8) problems.

Discussion

Prior work demonstrates that SBI, when conducted in a whole-class format but without algebraic equations to represent problem types, enhances third graders’ word-problem performance (e.g., Fuchs et al., 2003). The potential of SBI to incorporate algebraic equations to represent the structural, defining features of problem types has also been suggested to improve word-problem performance, but in the context of one-to-one tutoring for third-grade students with mathematics difficulties (e.g., Fuchs et al., 2009). The purpose of the present study was to extend this program of research by focusing on younger, typically developing students while incorporating algebraic equations to represent the structural, defining features of problem types. We were interested in assessing effects for a younger population not only with respect to their word-problem performance, but also in terms of their ability to represent the structure of word problems using algebraic equations. Toward this end and to extend this line of research further, we described the work students generated while solving word problems.

With respect to word-problem performance, results corroborate prior work suggesting the efficacy of SBI. This time, effects were demonstrated with typically developing second graders who were taught to use algebraic equations to represent the structural, defining features of problem types. On the VSP measure, SBI effected superior outcomes compared to the business-as-usual control group, with an effect size approaching one-half a standard deviation. Moreover, when we conducted exploratory analyses on the various VSP problem types and features, results generally favored the SBI condition, providing insight into which dimensions of the word problems created a greater challenge for students.

Specifically, the exploratory analyses suggest that SBI effects were consistent and clear for one-step problems (g = .44) as well as more difficult problems that combined problem types and therefore required two-step solutions (g = .48). However, results were clearer for total problems (g = .46), the first problem type addressed in SBI, than for difference and change problems, in which effects only approached statistical significance and effect sizes hovered around one-third of a standard deviation. This pattern departs from prior work (e.g., Cummins, Kintsch, Reusser, & Weimer, 1988; Riley & Greeno, 1988; Verschaffel, De Corte, & Pauwels, 1992) demonstrating that difference problems are the most difficult of the three word-problem types. Finding that effects on change problems were comparable to effects on difference problems and less than the effects on total problems suggests that the order in which problem types are introduced during instruction may affect students’ ease in learning, thereby disturbing the natural order of difficulty. Because students learned change problems last, they experienced the fewest opportunities for review and for integrating this problem type with the others, perhaps explaining why effects for the change problem type may be less strong than for total problems and only comparable to difference problems, both of which were introduced earlier. With a longer run of the SBI program and with more time for reviewing and integrating the change problem type, effects for change problems may have been more in line with earlier, descriptive studies.

With respect to the position of the missing information, our exploratory analyses suggest that SBI effects were strongest when the missing information occurred in the third slot of the algebraic equation, followed by the first slot (with respective effect sizes of .56 and .42). For problems with missing information in the second position, the effect size was relatively small (.26). This pattern is inconsistent with previous work. For example, Schatschneider, Fuchs, Fuchs, and Compton (2006) used item-response theory to show that problems with missing information in the third position are easier to solve than problems with missing information in the second or first position, but missing information in the first (not the second) position presents the greatest challenge. This pattern has been corroborated by Garcia, Jiménez, and Hess (2006) as well as others (e.g., Riley et al., 1983). It is possible that the pattern of findings in these exploratory analyses diverges from earlier, descriptive work due to the inclusion of intervention in the present study. Specifically, because we encouraged students to represent the structure of problems with algebraic equations, students had to solve for unknown variables. Considering the range of problems that the students faced in the present study, solving for the unknown was hardest when it occurred after a minus sign (in the second slot). The observed level of inaccuracy for problems with missing information in the second position may be due to student errors in solving certain types of algebraic equations rather than their ability to represent the structure of problem narratives. This explanation finds credibility in our analysis of work samples. Within one-step problems, the accuracy of students’ algebraic equations for representing the underlying structure of problems was not lower when the unknown variable occurred in the second position, and therefore students’ lack of ability to represent these problem structures does not explain the low accuracy of their word-problem solutions. This suggests the need for greater instructional emphasis on solving for unknowns within this kind of subtraction equation within SBI when algebraic reasoning is used to represent the structure of problem types.

Given the supportive findings favoring SBI on the VSP measure, it is important to note that parallel effects failed to accrue on ITBS. This was the case even though neither measure included any problem that had been used for instruction and, in that way, both measures represented transfer for both conditions. At least three major differences between the measures may explain the diverging pattern of findings. The first key difference is that VSP includes items with missing information in all three positions within the equations, whereas ITBS restricts problems to those with missing information in the third position (i.e., the easiest). In this way, VSP created greater opportunity than ITBS for SBI students to demonstrate their learning. In fact, flexibility in handling word problems with missing information in more than the conventional third position (which was registered on the VSP) is an important goal because (a) missing information in the first two positions poses analytical difficulties, (b) higher-level mathematics as well as real-life problem solving require such analytical flexibility, and (c) real-life problem solving demands the analytical frameworks represented in the ability to solve for missing information within all three positions.

Another major distinction that may have contributed to the realization of effects on the VSP but not the ITBS concerns the variety of problem types sampled on the tests. VSP restricts items to total, difference, and change problem types, those central to the second-grade curriculum. By contrast, ITBS also samples word problems representative of higher-level mathematics, including multiplication, division, and fraction concepts. Inclusion of these items rendered the ITBS less sensitive to the effects of SBI, because the multiplication, division, and fraction items did not provide SBI students with the opportunity to apply the strategies they had learned for representing problem types. A third key difference between the measures, response format, may have also contributed to differential findings. The ITBS requires multiple-choice answers, whereas the VSP involves open-ended responses. The multiple-choice format may have suppressed student reliance on the SBI strategies they had learned, as suggested by the limited amount of work generated on the ITBS test protocols.

These insights create the basis for several implications. The first concerns the need for teachers to redirect instruction toward word problems with missing information in all three positions. This is critical to foster the analytical flexibility required in higher-level mathematics as well as real-life problem solving. The second concerns the need to effect transfer from second-grade problem types to problems that engage the concepts of multiplication, division, and fractions. These concepts are embedded within the number concept and procedural strands of the second-grade curriculum, providing natural opportunities for teachers to extend the SBI strategies and analytical frameworks from total, difference, and change problems to more challenging word-problem types that incorporate those concepts. Finally, to help students transfer their classroom learning to high-stakes tests on which they are required to demonstrate that learning, it makes sense for teachers to show students how they might apply their strategies and skills when testing formats are novel (and even when the space provided to show work is limited).

With respect to the effects of SBI on students’ ability to represent word problems’ structure using algebraic equations, we were interested in two components. First, we considered students’ use of algebra to represent and reason about problem situations that contain unknowns (Izsak, 2000) and, second, their use of the kinds of mathematical expressions found in algebra (Kiernan, 1992). We therefore coded VSP items in three ways: whether students represented word problems using algebraic equations; if so, whether algebraic equations correctly represented problem structures; and whether students included “X =” in their answers. Because there was no instance of any control student representing a word problem using an algebraic equation or including “X =” in an answer, we considered results descriptively only for SBI students.

In terms of whether students used algebra to represent and reason about problem situations that contain unknowns (Izsak, 2000), more than half of SBI students incorporated algebraic equations within their word-problem work most of the time; another 20% did so some of the time. Across the 18 VSP problems, the accuracy of those equations in representing problem structures averaged 59.4%. Students’ strong, although far from universal, reliance on algebra to represent and reason about word problems is especially notable given that posttesting in no way prompted students to rely on SBI strategies. Not surprisingly, accuracy was highest (95.3%) for a simple total problem (with missing information in the last position of the equation and with no graph/picture and no irrelevant information). Also not surprisingly, accuracy was lowest (20.5%) for a complicated one-step difference problem with missing information in the second position (i.e., a more difficult problem type with the most difficult position for missing information) with irrelevant information in the narrative as well as in a graph. Accuracy varied considerably for one-step problems and tended to be lower for problems with graphically or pictorially presented relevant and irrelevant information than for problems that restricted the presentation of relevant information to problem narratives. With respect to the students’ solving of problems using the kinds of mathematical expressions found in algebra (Kiernan, 1992), we coded students’ use of “X =” in the answers. Three-quarters of SBI students used “X =” in their answers most or some of the time.

Students’ applications and misapplications of the strategies we taught them for representing the structure of word problems with algebraic equations are illustrated in Figures 1 and 2. As reflected in the correct examples, children constructed a conceptual model of the problem, identified the problem as belonging within a problem type, represented the known and unknown information within the problem narrative using the structure of the overarching equation that represents the structural features of that problem type, and based their solution plans on that model (Jonassen, 2003). In Problem 1 (see Fig. 1), the student’s first equation represents the total problem to combine the number of Tommy’s red checkers with the number of Tommy’s black checkers to find the total number (P1 + P2 = T), which then becomes the smaller (s) quantity in the difference equation comparing Tommy’s and Jacob’s amounts (B − s = D). The equation in Problem 5 represents the total problem type: Tanya and Callie’s 7 ribbons are the total amount; one part (Tanya’s number of ribbons) is known, and the student solves for the second part that contributes to the total, with missing information in the second position of the equation. Problem 7 shows a student representing a change problem in which the student solved for the starting amount of pennies, to which 4 pennies were added creating a new amount of 6 pennies. Each equation provides a conceptual match for the defining features of the problem type the narrative exemplifies.
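
The equations described in this paragraph can be set out in one place. The total and difference forms follow the notation given in the text. The change form (St, C, and E for starting amount, change amount, and ending amount) is an assumed notation used here only for illustration, as is the assumption that the Problem 7 equation was written with X in the first position. The block assumes the amsmath package.

```latex
\begin{align*}
\text{Total:} \quad & P1 + P2 = T \\
\text{Difference:} \quad & B - s = D \\
\text{Change (assumed notation):} \quad & St + C = E \\[4pt]
\text{Problem 7:} \quad & X + 4 = 6 \;\Rightarrow\; X = 6 - 4 = 2
\end{align*}
```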

Research shows that even high school students have difficulty mapping symbolic equations onto word problems in this way (Koedinger & MacLaren, 1997; Stacey & MacGregor, 1999) and instead tend to rely on an arithmetic approach. Such difficulty is illustrated in the incorrect examples shown in Figure 2, in which students relied on a procedural approach to generate solutions, directly translating story values into solvable algorithms. In Problem 11 (see Fig. 2), the student simply took the two known quantities from the problem narrative and supporting graph and used the word more to decide on the addition operation to combine those quantities, although the problem represents the change problem type in which the change value is the missing information. In the last example (Problem 16), a special education student set up the first equation correctly, perhaps relying on the structure of the total equation. Unfortunately, the student, perhaps relying simply on the key word left to select the subtraction operation, then failed to represent the structure of the second-step change problem correctly, which requires addition because the missing information occurs in the first position of the change equation with a decreasing change value. A direct-translation approach, as illustrated in these incorrect examples, reflects a lack of conceptual understanding not only of the problem narrative but also of the problem type. It has sometimes been characterized as a “compulsion to calculate” (Stacey & MacGregor, 1999) in which students grab numerical values presented in the problem and search for key words to select an algorithm (Sherrill, 1983). Such a compulsion to calculate deters students from identifying appropriate knowns and unknowns and from engaging in forward operations to formulate a conceptual equation (Kiernan, 1992).
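
A short derivation may clarify why the second step of Problem 16 calls for addition even though the narrative contains the word left. The symbols C and E (change amount and ending amount) are assumed notation for illustration; the actual values in Problem 16 are not reproduced here. The block assumes the amsmath package.

```latex
\begin{align*}
X - C &= E && \text{(change equation; decreasing change, starting amount unknown)} \\
X &= E + C && \text{(add } C \text{ to both sides)}
\end{align*}
```

A student who selects subtraction from the key word alone computes with the two known values directly and never forms the first line, so the need to add does not become visible.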

As indicated by the nearly 60% of students who correctly represented the underlying structure of problems using algebraic equations, SBI with algebra moved many, although not all, students in the direction of a conceptual, algebraic approach to reason about word problems. In this way, findings suggest that SBI strengthens students’ algebraic reasoning, at least in the limited sense of representing and reasoning about problem situations that contain unknowns. More generally, together with prior work (e.g., Baroody & Ginsburg, 1983; Blanton & Kaput, 2005; McNeil & Alibali, 2005; Powell & Fuchs, in press; Rittle-Johnson & Alibali, 1999; Saenz-Ludlow & Walgamuth, 1998; Schliemann et al., 2001; Warren et al., 2006), results demonstrate that algebraic thinking can be enhanced, at least in this limited sense, among relatively young, second-grade students, many of whom are from backgrounds of poverty, as reflected in the fact that 60% of participants received subsidized lunch. The hope is that promoting such thinking within the context of relatively simple word problems grounds symbolic forms in students’ preexisting verbal comprehension and strategic competence and provides a foundation for more abstract word equation problems and more challenging symbolic equations (Koedinger & Nathan, 2004). This is important given that algebra is a gateway to high school graduation and higher-level mathematics. Findings suggest that introducing algebra early in the curriculum may help lay such a foundation, and that SBI with algebra may represent one strategy for promoting this goal.

At the same time, in considering findings, readers should note four important study limitations. First, we used letters to stand for variables in the overarching equations representing the relations among the structural features of each problem type. We taught students that these letters represent known amounts (if given in the problem) and unknown amounts (if missing from the problem). To highlight the fact that these letters stand for structural features of the word-problem types, we used uppercase letters, as did Schliemann et al. (2001), even though conventional algebra relies on lowercase letters. In a similar way, students used the uppercase X to represent the unknown variable when they generated algebraic equations to represent an actual word problem. Using uppercase letters may limit transfer to conventional algebra, and future work should investigate this possibility. A second limitation is that the present study design does not permit us to isolate the effects of using algebra to represent the underlying structure of problem types. Clearly, a study is warranted to contrast the effects of SBI with and without algebraic equations. Third, we had inadequate resources to conduct observations of the word-problem instruction. Consequently, our descriptions of the approach to business-as-usual word-problem instruction are derived from the basal curriculum on which teachers relied as well as teacher reports. Future work should conduct classroom observations to provide a deeper understanding of conventional word-problem instruction to which SBI effects are compared. Fourth, as suggested on the VSP, although SBI helps students transfer their problem-solving skills to novel problems that fall within targeted schemas, SBI did not increase students’ capacity to spontaneously develop schemas for problem types that have not been addressed instructionally, as revealed on the ITBS. A need exists to develop instructional strategies by which students build the capacity to develop schemas for problem types that are not targeted within instruction.

Acknowledgments

This research was supported in part by grant 1 RO1 HD059179 and core grant HD15052 from the National Institute of Child Health and Human Development to Vanderbilt University. Statements do not reflect the position or policy of these agencies and no official endorsement by them should be inferred.

Appendix A

Fig. A1. Problem type examples

Footnotes

Algebra also involves the study of pattern generalization, mathematical modeling and symbolization, functional relations, graph comprehension, and covariation (e.g., Koedinger & Nathan, 2004).

References

- Baroody AJ, Ginsburg HP. The effects of instruction on children’s understanding of the “equals” sign. Elementary School Journal. 1983;84:199–212.

- Blanton ML, Kaput JJ. Characterizing a classroom practice that promotes emerging knowledge of algebra. Journal for Research in Mathematics Education. 2005;36:412–446.

- Boaler J. Encouraging the transfer of “school” mathematics to the “real world” through the integration of process and content, context, and culture. Educational Studies in Mathematics. 1993;25:341–373.

- Bransford JD, Schwartz DL. Rethinking transfer: A simple proposal with multiple implications. In: Iran-Nejad A, Pearson PD, editors. Review of research in education. Washington, DC: AERA; 1999. pp. 61–100.

- Brizuela B, Schliemann A. Ten-year-old students solving linear equations. For the Learning of Mathematics. 2004;24:33–40.

- Carpenter TP, Franke ML, Levi L. Thinking mathematically: Integrating arithmetic and algebra in elementary school. Portsmouth, NH: Heinemann; 2003.

- Carpenter TP, Levi L. Developing conceptions of emerging knowledge of algebra in the primary grades (Report No. 00-2) Madison: University of Wisconsin–Madison, National Center for Improving Student Learning and Achievement in Mathematics and Science; 2000.

- Carpenter TP, Moser JM. The acquisition of addition and subtraction concepts in grades one through three. Journal for Research in Mathematics Education. 1984;15:179–202.

- Chen Z. Schema induction in children’s analogical problem solving. Journal of Educational Psychology. 1999;91:703–715.

- Chi MTH, Feltovich PJ, Glaser R. Categorization and representation of physics problems by experts and novices. Cognitive Science. 1981;5:121–152.

- Cooper G, Sweller J. Effects of schema acquisition and rule automation on mathematical problem solving transfer. Journal of Educational Psychology. 1987;79:347–362.

- Cummins DD, Kintsch W, Reusser K, Weimer R. The role of understanding in solving word problems. Cognitive Psychology. 1988;20:405–438.

- Davis R. ICME-5 report: Algebraic thinking in the early grades. Journal of Mathematical Behavior. 1985;4:195–208.

- Davis R. Theoretical considerations: Research studies in how humans think about algebra. In: Wagner S, Kiernan C, editors. Research issues in the learning and teaching of algebra. Vol. 4. Reston, VA: NCTM/Erlbaum; 1989. pp. 266–274.

- Durnin JH, Perrone AE, MacKay L. Teaching problem solving in elementary school mathematics. Journal of Structural Learning and Intelligent Systems. 1997;13:53–69.