Abstract

Non-sensory (cognitive) inputs can play a powerful role in monitoring one’s self-motion. Previously, we showed that access to spatial memory dramatically increases response precision in an angular self-motion updating task [1]. Here, we examined whether spatial memory also enhances a particular type of self-motion updating – angular path integration. “Angular path integration” refers to the ability to maintain an estimate of self-location after a rotational displacement by integrating internally-generated (idiothetic) self-motion signals over time. We hypothesized that remembered spatial frameworks derived from vision and spatial language should facilitate angular path integration by decreasing the uncertainty of self-location estimates. To test this, we implemented a whole-body rotation paradigm with passive, non-visual body rotations (ranging from 40° to 140°) administered about the yaw axis. Prior to the rotations, visual previews (Experiment 1) and verbal descriptions (Experiment 2) of the surrounding environment were given to participants. Perceived angular displacement was assessed by open-loop pointing to the origin (0°). We found that within-subject response precision significantly increased when participants were provided a spatial context prior to whole-body rotations. The present study goes beyond our previous findings by first establishing that memory of the environment enhances the processing of idiothetic self-motion signals. Moreover, we show that knowledge of one’s immediate environment, whether gained from direct visual perception or from indirect experience (i.e., spatial language), facilitates the integration of incoming self-motion signals.

Keywords: Spatial memory, path integration, vestibular navigation, manual pointing

1. Introduction

The vestibular system plays a fundamental role in a variety of motor and perceptual functions (e.g., the stabilization of gaze and posture, and the perception of verticality). One particularly crucial function is the perception of whole-body motion. Vision can be used to maintain an estimate of one’s current position and orientation when it is available, but the ability to remain oriented without vision dramatically increases the flexibility of an organism’s navigation skills. This flexibility confers important advantages for survival – for example, by allowing an individual to remain oriented at night or when moving from strong sunlight into deep shadows. When there are no external sources of information for determining one’s current position and orientation, one must rely upon internally-generated (idiothetic) self-motion signals to remain oriented, such as proprioceptive cues coming from the muscles and acceleration signals arising from the vestibular apparatus; angular displacement is derived through the temporal integration of velocity signals and the double integration of acceleration signals generated in the semicircular canals of the inner ear [17]. This method of updating orientation is known as path integration or dead reckoning [10,30,35]. A common paradigm used to assess the role of the vestibular system in self-motion perception involves passively rotating participants’ head and body and then asking them to estimate the magnitude of the rotation [12,18–21,23,34,45,52,53]. Using this approach, it has been shown that humans can estimate changes in body orientation based solely on vestibular signals. Human and animal studies have identified several distinct areas of the parietal and temporal cortex involved in the higher-level processing of vestibular signals [5,8,9,11,14,24]. 
Recently, Seemungal and colleagues [41] have identified the right posterior parietal cortex (PPC) as one of the main cortical vestibular structures that presumably mediate whole-body motion perception. Neurophysiological studies in animals have identified a variety of vestibular-driven cells, which are thought to be the neural correlates of (angular) path integration: place cells, head direction (HD) cells, whole-body motion cells, and more recently, grid cells (for reviews see [7,36,47]). Thus, our focus here on vestibular signals is relevant for understanding the relation between human vestibular processing and extensive physiological work in rodents investigating path integration and vestibular processing [7,42,46].

To date, most research on angular path integration has primarily focused on the sensory inputs available during self-motion.1 However, cognitive factors such as idiosyncratic orientation strategies [44], internal spatial representations [18,37,38], expectations [12], working memory load and attention [52,53] have all been shown to influence perceptual indications of self-motion. One type of cognitive factor, which is of special interest here, is spatial memory. Although participants remain blindfolded and generate their responses in the dark in the majority of studies on vestibular function, it is clear that they still have access to an internal representation of the external environment. Little is known about how these stored spatial frameworks might impact angular path integration.

In our past work, we argued that remembering the external environment when maneuvering without vision should improve the precision of one’s current estimate of position and orientation [1]. As one moves away from a known location when traveling without vision, any systematic errors in the self-motion signals will tend to accumulate over time, yielding an increasingly inaccurate estimate of one’s position and orientation. More importantly for the present discussion, variable errors (or noise) in the self-motion signals will also tend to accumulate, yielding an increasingly imprecise estimate of one’s position and orientation. If one has access to an internal representation of the surrounding environment while moving without vision, however, this provides a structured spatial framework that could potentially facilitate integration of incoming sensory self-motion information into one’s updated position estimate. Response precision is thought to be informative about the precision of the underlying spatial representations updated during body motions [33]; when responding, participants are in effect sampling from a distribution of possible positions. Any uncertainty in the position estimate will be reflected in the variance of the distribution of behavioral responses. If remembering an environment while navigating without vision yields a more precise self-location estimate, this should be manifested as increased within-subject response precision in tasks requiring participants to indicate their current position or orientation.

We previously reported that spatial memory does indeed enhance response precision in a nonvisual spatial updating task [1]. Blindfolded participants underwent passive, whole-body rotations about an earth-vertical axis, and then manipulated a pointer to indicate the magnitude of the rotation. Prior to each body rotation, participants were either allowed full vision of the testing environment before donning the blindfold (Preview condition), or remained blindfolded (No-Preview condition). We found that response precision in trials preceded by a visual preview was dramatically enhanced [1]. No constraints were placed upon head or eye movements during the body rotations in this study – this allowed multiple sources of information (e.g., vestibular and muscular signals) to operate synergistically, as they do during everyday navigation. This method does not completely isolate path integration as the source of self-motion updating, however. In fact, to some extent, rotation magnitude could be estimated without sensing and integrating self-motion at all: one could keep one’s gaze and/or head direction fixed towards the origin during the body rotation, and then estimate the magnitude of the rotation based on the final gaze or head direction. Although path integration likely plays a role in this task, there is no way to determine the extent to which “integration-free” strategies might also be a factor. This being the case, spatial memory might affect the precision of only the integration-free processes without impacting path integration directly. Isolating path integration is crucial for linking our results with those of physiological, neuropsychological, and neuroimaging studies investigating the neural substrates of angular path integration (e.g. [7,41,42,46, 47]). This will also open the door for development of more comprehensive computational models of human path integration (e.g. [6,26]). 
In Experiment 1, we aimed to verify that spatial memory indeed improves the precision of path integration per se. Experiment 1 manipulated the presence or absence of visual previews, as in Arthur et al. [1], but with head and eye movements restricted during the body rotation.

Experiment 2 investigated more specifically what aspects of a remembered environment play a role in eliciting the enhanced path integration. Using a methodology similar to that of Experiment 1, Experiment 2 contrasted a No-Preview condition with a more controlled “Preview” condition, in which the “preview” was an imagined environment defined purely by spatial language. This allowed us not only to evaluate the level of remembered spatial detail required to enhance the precision of angular path integration, but also to begin an investigation into what kind of remembered spatial configurations benefit path integration.

2. General methods

2.1. Participants

Participants were undergraduate students at The George Washington University (Washington, DC). All participants gave written consent for their participation prior to testing. The study was approved by the George Washington University ethics committee and was performed in accordance with the ethical standards of the 1964 Declaration of Helsinki. All participants were naïve in regard to the purpose of the experiment.

2.2. Materials and apparatus

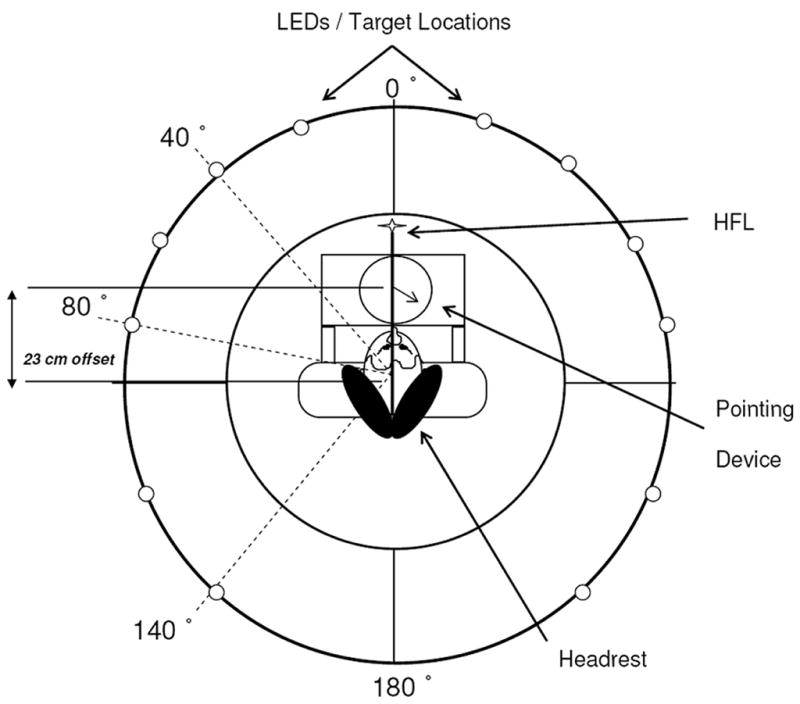

The experiments were conducted in a completely darkened laboratory, approximately 6 m square. Participants were seated in a motorized chair surrounded by a round table (0.80 m high and 1.52 m in diameter, with a circular hole 0.80 m in diameter in the center; see Fig. 1). The table was encircled by a heavy black polyester curtain (2.55 m high and 3.0 m in diameter). A vertically and horizontally adjustable cushioned headrest was mounted onto the back of the chair and served as a head restraint. A single light-emitting diode (LED) was presented at eye level and at a distance of approximately 0.55 m from the participant’s head, and remained fixed relative to the participant’s head during body rotations. This head-fixed light (HFL) served to minimize the vestibulo-ocular reflex (VOR) and other variations in ocular behavior between participants. Participants donned black-frosted goggles containing a circular hole in front of each eye (approximately 2 cm in diameter). The goggles permitted vision of the HFL but prevented vision of any light inadvertently leaking in from the surrounding environment. We did not measure eye movements to assess compliance with the experimental instructions; we had no a priori reason to suspect that participants would not comply with the fixation instructions. A wireless pointing device was mounted on the arms of the chair immediately in front of participants, at waist level in the horizontal plane. The rotation axis of the pointer was offset from the chair axis by approximately 23 cm; at this offset, the near edge of the pointing device was within a few centimeters of the abdomen for most participants. The pointer itself was a thin rod 16 cm long centered on a polar scale graduated to the nearest degree. The experimenter controlled the acceleration, peak velocity, and total angular displacement of the motorized chair and collected pointer readings with a nearby computer.
The chair was mounted to a high-precision rotary stage, the characteristics of which have been described in a previous paper [1]. All rotations entailed an accelerating portion of 45°/sec², up to a peak velocity of 45°/sec, followed by a decelerating portion at 45°/sec² down to a full stop. For rotation magnitudes above 40°, the velocity remained constant briefly at 45°/sec before the decelerating portion. These parameters ensured that all rotations were well above sensory threshold, with a clearly perceptible beginning and end. Graphical depictions of the velocity profiles generated using this apparatus may be found elsewhere [1]. In both experiments, the rotation magnitudes were proportional to the rotation durations. Thus, participants could use the strategy of moving the pointer more for longer-duration rotations. Because the same velocity profiles were used in the Preview and No-Preview conditions, however, this would not explain any differences between these conditions that we might observe.
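
The relation between rotation magnitude and duration can be illustrated with a short sketch. It assumes an idealized profile with constant acceleration, an optional constant-velocity plateau, and constant deceleration; the function name `rotation_profile` is hypothetical, and the profiles actually produced by the apparatus are those depicted in [1], which may differ from this idealization (for example, for the shortest rotations).

```python
import math

ACCEL = 45.0     # deg/s^2, acceleration and deceleration magnitude (from the text)
PEAK_VEL = 45.0  # deg/s, nominal peak velocity (from the text)


def rotation_profile(magnitude_deg, accel=ACCEL, peak_vel=PEAK_VEL):
    """Return (duration_s, peak_velocity_reached) for one rotation.

    Assumption: idealized constant-acceleration ramps; not the measured profile.
    """
    # Total angle covered by the ramp up to and the ramp down from peak velocity.
    ramp_angle = peak_vel ** 2 / accel
    if magnitude_deg >= ramp_angle:
        plateau_time = (magnitude_deg - ramp_angle) / peak_vel
        return 2 * peak_vel / accel + plateau_time, peak_vel
    # Shorter rotations: triangular profile that stops short of the nominal peak.
    reached = math.sqrt(magnitude_deg * accel)
    return 2 * reached / accel, reached


for magnitude in (40, 80, 140):
    duration, peak = rotation_profile(magnitude)
    print(f"{magnitude:>3} deg: {duration:.2f} s, peak {peak:.1f} deg/s")
```

Under these assumptions, duration grows monotonically with magnitude, which is what would allow a duration-based response strategy in either viewing condition.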

Fig. 1.

Schematic overhead view of the rotation table and pointing device used in Experiments 1 and 2. Access to the participant’s chair was gained via a removable panel in the table top. The table was surrounded by a heavy black polyester curtain, 3 m in diameter (not shown). Dotted lines show rotation magnitudes. The filled circles show the six LEDs used as target locations. A pointing device was mounted on the chair in front of the participant. The center of the pointing device was offset 23 cm in a horizontal plane from the center of the chair.

Four pieces of colored construction paper (21.59 × 27.94 cm) were posted at eye-height in varying orientations on the inside of the curtain. These served as external reference cues from the immediate environment during visual previews. In addition, the circular structure of the curtain was modified by lining the inside of the chamber with chairs. This created a wall-like or rectilinear appearance, which also potentially provided contextual cues during visual previews of the environment; a sheet of paper was posted on each “wall” and was not visible in the dark. This geometric layout could be stored as a remembered spatial framework, which could be used as a cue in maintaining orientation during rotations and when generating responses [25].

2.3. Procedure

During testing, participants were seated in the chair and kept their feet on the chair platform during all body rotations. This eliminated somatosensory signals related to movement of the legs, thereby restricting sensory information about the body rotation more tightly to vestibular signals. We minimized idiosyncrasies in ocular behavior by instructing participants to fixate on the HFL during the body rotation and to continue to do so until after the pointing response was completed. Head movements were also restricted during the body rotation by the cushioned support mounted onto the back of the chair. The main functions of the HFL and headrest were to discourage participants from using “integration-free” strategies, such as using eye and/or head signals to update their heading.

In both Experiments 1 and 2, the perceived body rotation magnitude was measured via exocentric pointing, as in our previous study [1]. Participants were informed of the offset of the pointer’s axis relative to the chair’s axis and were instructed to point so that an imaginary line would extend from the pointer and connect directly to the origin location. To demonstrate the meaning of this instruction, they were shown that for a body rotation of 90°, simply setting the pointer to 90° was inaccurate because the pointer would not point directly to the location of the origin, due to the offset of the pointer from the chair center. Participants were asked to consider the origin to be a specific location on the circular perimeter of the table, rather than a general direction with no definite distance. In both experiments, participants manipulated the pointer using their right hand. This eliminated potential biases related to the differing mechanical constraints between hands. To discourage inadvertent use of the left hand, participants held a Styrofoam ball in their left hand throughout the entire block of trials. Although these instructions required five left-handed participants in Experiment 2 to point with their non-dominant hand, previous analyses have shown that when left-handers point with their non-dominant hand, their pointing errors are virtually identical to those of right-handers [39]. After every pointing response, the direction of the pointer was recorded to the nearest degree and the pointer was returned to point toward the participant’s abdomen (180°). To minimize directional auditory cues, participants wore tight-fitting hearing protectors (overall noise reduction rating: −20 dB). All stimulus rotations were counterclockwise (CCW) and ranged from 25° to 145°.
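
The effect of the pointer offset on the geometrically correct response can be sketched as follows. This is an illustration only, not necessarily the calculation used in the study: it assumes that the origin lies on the table perimeter (radius 0.76 m, half the stated 1.52 m diameter), that the pointer axis sits 0.23 m straight ahead of the chair axis, and that pointer angles are measured from straight ahead toward the side on which the origin lies. The name `correct_pointer_angle` is hypothetical.

```python
import math

POINTER_OFFSET_M = 0.23  # pointer axis ~23 cm in front of the chair axis (from the text)
TABLE_RADIUS_M = 0.76    # assumption: origin on the perimeter of the 1.52 m table


def correct_pointer_angle(rotation_deg, offset=POINTER_OFFSET_M, radius=TABLE_RADIUS_M):
    """Pointer setting in degrees (0 = straight ahead) that aims at the origin
    after a body rotation of `rotation_deg`, under the assumptions above."""
    theta = math.radians(rotation_deg)
    # Body-centred frame: y is straight ahead, x is toward the side of the origin.
    origin_x = radius * math.sin(theta)
    origin_y = radius * math.cos(theta)
    # Direction from the pointer axis at (0, offset) to the origin.
    return math.degrees(math.atan2(origin_x, origin_y - offset))


for rotation in (40, 80, 90, 140):
    print(rotation, round(correct_pointer_angle(rotation), 1))
```

With these assumed dimensions, a 90° body rotation calls for a pointer setting of roughly 107° rather than 90°, which is the kind of discrepancy the demonstration to participants was meant to convey.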

3. Experiment 1: Visual previews and angular path integration

Experiment 1 used a method similar to that of our previous work [1], but restricted head and eye movements so as to investigate the effects of spatial memory on the precision of self-motion updating specifically using path integration.

3.1. Method

3.1.1. Participants

Twenty participants (14 women and 6 men, average age = 19.4, range 18 to 23 years) volunteered to participate in exchange for class credit. All were right-handed.

3.1.2. Design

This experiment employed a within-subject, randomized block design. There were two blocked within-group conditions: Preview and No-Preview. Participants were individually tested in two sessions separated by an average of 8 days. Block order was fully counterbalanced. Counterclockwise rotations of 40°, 80° and 140° were administered 8 times apiece in random order in both sessions. To broaden the range of rotations, several filler trials involving counterclockwise body rotations of 55°, 70°, 100°, and 115°, measured twice apiece, were randomly interspersed with the experimental trials. Four additional counterclockwise filler rotations of either 25° or 145° were randomly interspersed into each session; this was intended to discourage participants from assuming that they would be exposed to the same set of rotation magnitudes in each session.
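
The composition of one session can be sketched as below. The repetition counts come from the description above; how the four extra fillers were split between 25° and 145° is not stated, so the sketch assumes each is drawn independently at random. The function `build_session` and its tuple layout are hypothetical.

```python
import random

EXPERIMENTAL = {40: 8, 80: 8, 140: 8}      # magnitude (deg) -> repetitions, from the text
FILLERS = {55: 2, 70: 2, 100: 2, 115: 2}   # filler magnitudes, from the text
EXTRA_FILLER_CHOICES = (25, 145)           # values of the four additional fillers
N_EXTRA_FILLERS = 4


def build_session(seed=None):
    """Return a randomly ordered list of (magnitude_deg, is_filler) trials."""
    rng = random.Random(seed)
    trials = [(mag, False) for mag, n in EXPERIMENTAL.items() for _ in range(n)]
    trials += [(mag, True) for mag, n in FILLERS.items() for _ in range(n)]
    # Assumption: each extra filler is independently 25 or 145 deg.
    trials += [(rng.choice(EXTRA_FILLER_CHOICES), True) for _ in range(N_EXTRA_FILLERS)]
    rng.shuffle(trials)
    return trials


session = build_session(seed=1)
print(len(session), "trials in the session")
```

On this reading, each session contains 24 experimental trials and 12 filler trials, 36 in total.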

3.1.3. Procedure

In general, the procedure for Experiment 1 closely matched the procedure used in our previous study [1].

3.1.3.1. Preview Trials

At the beginning of each Preview trial, participants viewed the surrounding environment for several seconds. The overhead lights were then extinguished, and participants donned the goggles and fixated the HFL. They then underwent a passive body rotation with only the HFL visible. After a 5-sec delay to allow the semicircular canal fluids to settle, participants were cued (via extinguishing the HFL) to give their pointing response (still without vision and without receiving error feedback). Participants were then rotated back to the starting orientation, using the same velocity profile as during the stimulus phase, to begin the next trial.

3.1.3.2. No-Preview Trials

The procedures in No-Preview trials were nearly identical to those in Preview trials, except that participants were not allowed to view the environment prior to each body rotation. Participants were allowed to view the environment at the beginning of the experiment, but were disoriented with respect to the environment prior to testing (using a series of slow, clockwise and counterclockwise whole-body rotations; see [1]), and remained in the dark throughout the entire block of No-Preview trials.

3.2. Results

Responses more than four standard deviations from the mean of a participant’s other 7 responses for a particular condition were omitted prior to analysis. This resulted in the removal of approximately 2.7% of the data. The remaining data were analyzed in terms of two types of error: (1) Variable error, as measured by the within-subject standard deviations across eight measurements per condition. This provides a measure of response consistency, with high error indicating low consistency and vice versa. (2) Constant error (or “bias”), calculated as the response value minus the physically correct pointing response. This provides a measure of the overall tendency to overshoot or undershoot the physically accurate value. Variable error was the main focus since it was used to test the primary predictions surrounding within-subject response precision.
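
The outlier criterion and the two error measures can be sketched as follows. This is a minimal illustration, assuming that the sample standard deviation (n − 1 denominator) is used and that the criterion is applied in a single pass to each response in turn; the function names and the example responses are hypothetical, not data from the study.

```python
from statistics import mean, stdev

OUTLIER_SD = 4.0  # rejection criterion from the text


def drop_outliers(responses, criterion=OUTLIER_SD):
    """Drop any response more than `criterion` SDs from the mean of the others."""
    responses = list(responses)
    kept = []
    for i, value in enumerate(responses):
        others = responses[:i] + responses[i + 1:]
        centre, spread = mean(others), stdev(others)
        if abs(value - centre) <= criterion * spread:
            kept.append(value)
    return kept


def variable_error(responses):
    """Within-subject standard deviation of the responses."""
    return stdev(responses)


def constant_error(responses, correct):
    """Mean signed error (response minus correct); negative means undershooting."""
    return mean([r - correct for r in responses])


# Hypothetical pointer readings (deg) for one participant in one condition.
readings = [100, 102, 98, 101, 99, 103, 97, 200]
kept = drop_outliers(readings)
print(kept, variable_error(kept), constant_error(kept, correct=110))
```

Comparing each response against the mean and spread of the remaining responses, rather than the full set, keeps a single extreme value from inflating the standard deviation that is used to judge it.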

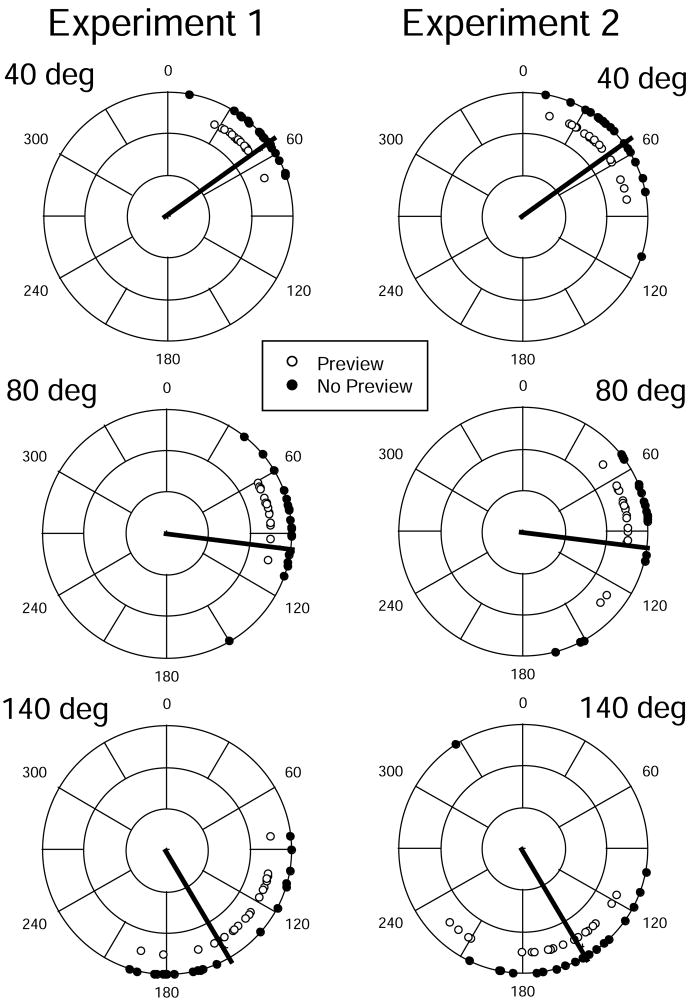

Fig. 2.

Polar plots of mean responses in each condition as a function of body rotation magnitude (40°, 80°, and 140°) in Experiments 1 and 2. Each data point is the mean of one participant’s responses over eight repetitions. Heavy solid lines denote the objectively correct response values in each condition.

Repeated measures analyses of variance (ANOVA) were conducted on both the variable errors and the constant errors, with Viewing Context (Preview/No-Preview) and Rotation Magnitude (40°, 80° and 140°) being within-group variables and block order being a between-groups variable. Participants’ mean responses in each condition are shown in Fig. 2 (left-hand panel). The mean variable error for each condition is plotted in Fig. 3; mean constant errors are plotted in Fig. 4.

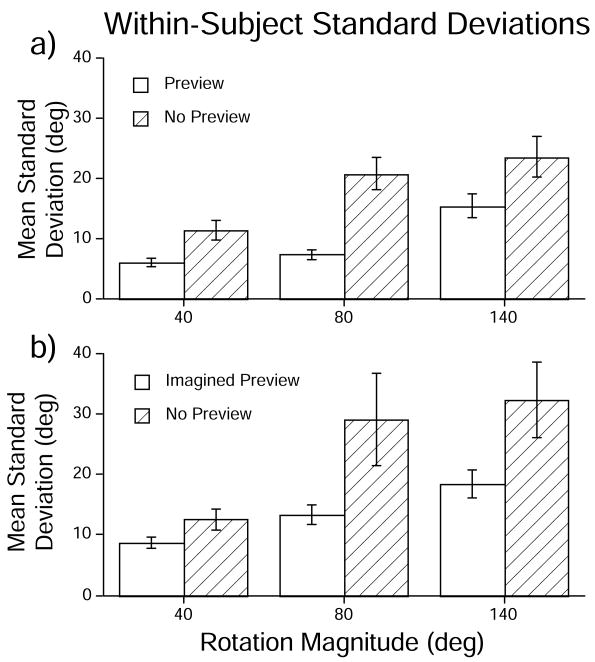

Fig. 3.

Mean within-subject standard deviations in each condition, calculated across participants, in (A) Experiment 1 and (B) Experiment 2. Error bars denote +/− one standard error of the mean.

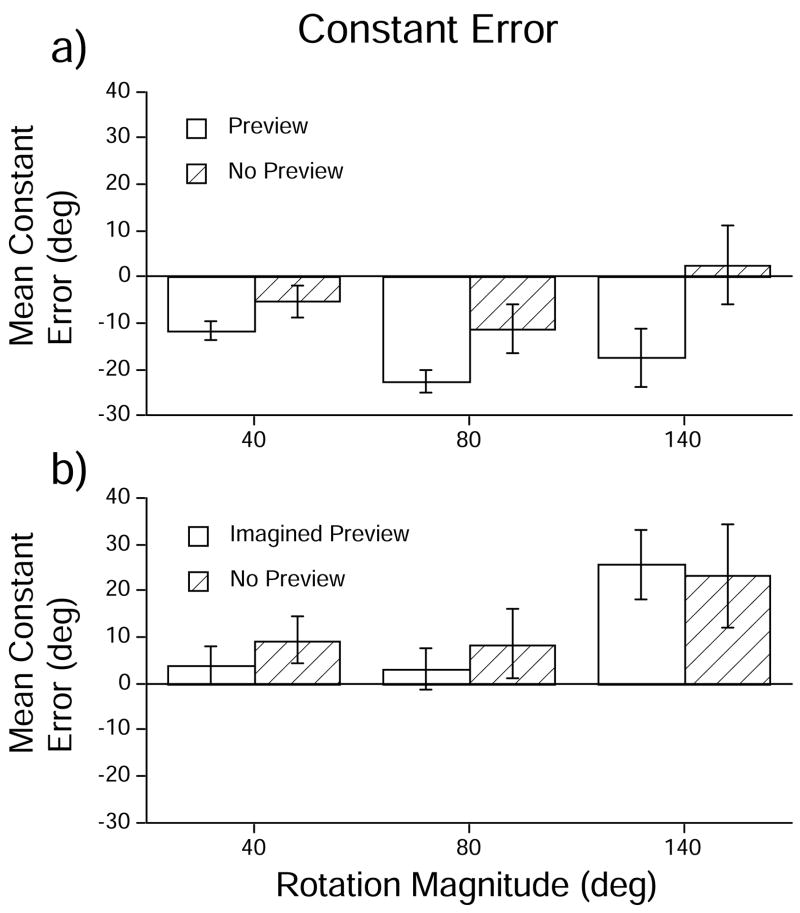

Fig. 4.

Mean constant (signed) error in each condition, calculated across participants, in (A) Experiment 1 and (B) Experiment 2. Error bars denote +/− one standard error of the mean.

3.2.1. Variable error

There was a main effect of Viewing Context (F [1, 18] = 21.45; MSE = 2396.11; p < 0.001). There was a main effect of Rotation Magnitude (F [2, 36] = 19.64; MSE = 1150.06; p < 0.001). Pairwise planned contrasts (alpha = 0.05) showed significant differences between all pairs of rotation magnitudes. There were no other main effects or interactions (all p’s > 0.05). Table 1 gives the mean coefficients of variation (the ratio of standard deviation to the mean response).

Table 1.

Mean coefficients of variation in Experiments 1 and 2 as a function of rotation magnitude

| Condition, experiment | 40° | 80° | 140° |
|---|---|---|---|
| PV, first | 14.86 (0.02) | 9.83 (0.01) | 12.12 (0.02) |
| PV, second | 23.99 (0.04) | 15.72 (0.01) | 11.11 (0.01) |
| NPV, first | 22.95 (0.03) | 23.44 (0.02) | 15.61 (0.02) |
| NPV, second | 25.16 (0.03) | 28.02 (0.04) | 20.03 (0.03) |

Notes: PV = Preview condition; NPV = No-Preview condition; Coefficients of Variation are expressed as %; Values in parentheses denote the between-subjects standard error of the mean.

Experiment 1 N = 20.

Experiment 2 N = 19.

3.2.2. Constant error

There was a main effect of Viewing Context (F [1, 18] = 18.22; MSE = 4732.47; p < 0.001), such that participants tended to undershoot more in Preview trials than in No-Preview trials (averaging −17.21° and −4.65°, respectively). There were no main effects of Block Order (F [1, 18] = 0.72; p = 0.407) or Rotation Magnitude (F [2, 36] = 2.81; p = 0.074). There was a significant Viewing Context × Block Order interaction (F [1, 18] = 17.22; MSE = 4471.52; p < 0.001); in general, when participants received the Preview condition first, the mean constant errors for the No-Preview trials were similar to those of the Preview trials (averaging −14.04° and −13.68°, respectively), whereas when participants received the No-Preview block first, the mean constant errors differed significantly between Preview and No-Preview trials (averaging −20.38° and 4.39°, respectively). There was also a Viewing Context × Rotation Magnitude × Block Order interaction (F [2, 36] = 6.98; MSE = 1429.06; p = 0.003). Although we have no ready explanation for this complex interaction, the relevant means are shown in Table 2. There were no other significant interactions (all p’s > 0.05).

Table 2.

Mean constant errors in Experiment 1 as a function of rotation magnitude and block order

| Condition, block order | 40° | 80° | 140° |
|---|---|---|---|
| PV, first | −10.07 (3.43) | −20.66 (3.99) | −11.38 (9.74) |
| PV, second | −13.49 (2.35) | −24.36 (3.15) | −23.29 (8.00) |
| NPV, first | −9.56 (5.21) | −14.035 (9.21) | −17.46 (13.31) |
| NPV, second | −1.11 (4.08) | −8.35 (5.57) | 22.63 (5.75) |

Notes: PV = Preview condition; NPV = No-Preview condition.

Values in parentheses denote the between-subjects standard error of the mean (N = 20).

3.3. Discussion

Standard deviations were 93% higher in the No-Preview condition than in the Preview condition (averaging 18.55° vs. 9.61°, respectively). This confirms that spatial memory gained from visual previews improves the precision of angular path integration.
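
For reference, the 93% figure follows directly from the two mean standard deviations reported here. The symbols $\bar{s}_{\mathrm{NPV}}$ and $\bar{s}_{\mathrm{PV}}$ are notation introduced only for this worked step and denote the mean within-subject standard deviations in the No-Preview and Preview conditions:

$$
\frac{\bar{s}_{\mathrm{NPV}} - \bar{s}_{\mathrm{PV}}}{\bar{s}_{\mathrm{PV}}} \times 100\%
= \frac{18.55^\circ - 9.61^\circ}{9.61^\circ} \times 100\%
\approx 93\%
$$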

Participants were allowed to see the testing chamber before testing; this raises the possibility that they may have been influenced to some extent by spatial memory even during No-Preview trials. It is virtually impossible to prevent participants from retrieving a memory of some environment – even if they did not remember the current testing environment, they might still call to mind some other environment. This being the case, we did not attempt to restrict vision of the testing chamber. Importantly, however, any memory of the immediate environment that participants might have called to mind during the rotations was presumably degraded as a result of the disorientation procedure and the long delay without visual input [51].

In the No-Preview condition, participants were disoriented prior to testing, whereas in the Preview condition, no disorientation was imposed. This means that disorientation itself may have been a factor in the differences we observed between the two conditions. In Experiment 2, we returned to the role of spatial memory in angular path integration, this time disorienting participants prior to both Preview and No-Preview conditions.

4. Experiment 2: Visual imagery and angular path integration

Participants in the Preview conditions of Experiment 1 saw a well-lit environment rich in visual detail. We did not attempt to control what environmental features were encoded during the preview, and thus we know little about what aspects of the previewed environment play a role in facilitating performance during subsequent non-visual path integration. A relatively high level of visual detail during the previews may be crucial for maximizing the benefits for path integration shown in Experiment 1; presumably, such previews would enhance the detail of the remembered spatial framework available during path integration, and this in turn could play a critical role in maintaining a precise estimate of one’s orientation during whole-body rotations without vision. Alternatively, the benefit of spatial memory for path integration may be sufficiently robust that remembering a relatively small number of environmental features is all that is required. Another open question concerns the importance of the specific configuration of environmental features that are encoded during the preview. For example, factors such as environmental geometry, alignment of remembered objects with major body axes (forward, back, left, or right), or even the regularity of the array of remembered objects more generally, may play important roles.

Experiment 2 was designed to begin an investigation into these issues by using a much more restricted Preview condition than in Experiment 1. Here, the “previews” did not involve vision at all, but instead were provided by spatial language – that is, participants were asked to imagine 4 locations aligned with the front, back, left and right body axes during their whole-body rotations. Providing participants with imagined previews based on spatial language dramatically reduces the level of remembered spatial detail available during the body rotations, thereby allowing us to test whether a high level of spatial detail during the preview is required to enhance subsequent path integration. We can also use spatial language to investigate whether a relatively small number of remembered objects aligned with certain major body axes is sufficient to elicit the path integration enhancements seen in Experiment 1. In principle, there is no reason to expect spatial memory encoded on the basis of visual previews to be the only effective source for enhancing path integration performance. A rich body of evidence suggests that nonvisual experiences can be as powerful as visual experiences in constructing spatial representations [13,43,48,49,55]. Most significantly, recent studies have demonstrated that internal representations resulting from spatial language are functionally equivalent to those generated by direct perception, and are updated in a similar way during body movements [4,27–29,31]. These findings support the functional equivalence hypothesis, which is the idea that spatial representations generated by direct perceptual input (vision, audition, touch) and indirect sources (e.g., spatial language) are functionally equivalent in terms of their ability to support spatially directed behaviors and computation of spatial judgments (i.e., egocentric and allocentric distance, direction, and location estimates) [3,4,29,31,54]. 
Thus, there are excellent reasons to expect that spatial memory based on spatial language will be useful for studying the enhanced path integration precision we have observed in the whole-body rotation paradigm. Experiment 2 tests this prediction as well.

4.1. Method

4.1.1. Participants

Nineteen participants (12 women and 7 men, average age = 19.5, range 18 to 22 years) were recruited to participate in exchange for class credit. All but five were right-handed.

4.1.2. Design and apparatus

This experiment employed the same within-subject, randomized block design as Experiment 1. There were two blocked within-group conditions: Imagined Preview and No-Preview. Participants were individually tested in two sessions, lasting no more than 60 minutes apiece. Participants first participated in a block of Imagined Preview trials or a block of No-Preview trials; on average, participants returned eight days later to complete the other block. Half of the participants took part in the Imagined Preview trials before the No-Preview trials, with the other half receiving the reverse order. This study used the same possible rotation magnitudes and filler trials as in Experiment 1, and the same pointing device, turntable, and table shown in Fig. 1.

4.1.3. Procedure

4.1.3.1. Imagined preview trials

As in Experiment 1, participants saw the environment at the beginning of the session but were disoriented prior to testing, using the same method as in the No-Preview trials of Experiment 1, and were not allowed to view the environment thereafter. This additional manipulation effectively rules out the possibility that disorientation prior to testing was the source of the large differences in response precision between the No-Preview and Preview conditions. After disorientation, participants took part in a learning phase, followed by the experimental trials. Body rotations were conducted with only the HFL visible.

4.1.3.1.1. Learning phase

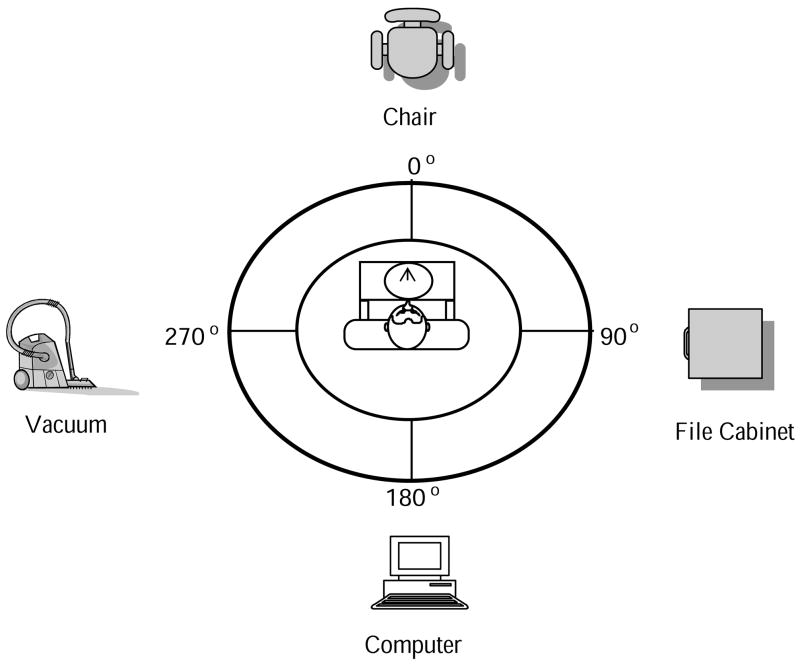

The experimenter described the positions of four objects in the environment by specifying their egocentric direction and distance (e.g., “chair, 12 o’clock, 5 feet”). Four familiar objects were selected: a chair, vacuum cleaner, computer and file cabinet. Participants were told to “get a clear image in mind of where each of the imagined objects is located” [31]; this was intended to encourage participants to encode the egocentric relations into a spatial image instead of merely creating a verbally based representation as the spatial descriptions were converted into meaning. Object locations were distributed in four directions corresponding to the intrinsic axes of the body, with no two objects occupying the same horizontal direction (see Fig. 5).2 After describing the locations of the four objects, the experimenter probed participants’ directional knowledge of object locations by presenting them with the names of the targets in random order. After hearing the target’s name, participants were asked to aim the pointer at the remembered object location. Learning trials continued until the absolute pointing errors were less than 10°; on average, participants pointed to objects three times apiece. This ensured that participants encoded the layout described by spatial language. After the learning criterion was met, participants took part in the experimental trials.
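
The learning criterion can be sketched in code. This is an illustration under several assumptions: clock-face directions are converted to degrees clockwise from straight ahead, the pointer offset is ignored, and the criterion is applied to every object. Only the chair (12 o’clock) and computer (6 o’clock) directions are given in the text and the Fig. 5 caption; the directions assigned to the vacuum cleaner and file cabinet, the pointing responses, and all function names are hypothetical.

```python
LEARNING_CRITERION_DEG = 10.0  # absolute pointing error threshold from the text


def clock_to_azimuth(hour):
    """Convert a clock-face direction (1-12) to degrees clockwise from straight ahead."""
    return (hour % 12) * 30.0


def absolute_pointing_error(response_deg, target_deg):
    """Smallest absolute angular difference between response and target (deg)."""
    diff = (response_deg - target_deg + 180.0) % 360.0 - 180.0
    return abs(diff)


def criterion_met(responses, targets, criterion=LEARNING_CRITERION_DEG):
    """True when the pointing error for every object is below the criterion."""
    return all(
        absolute_pointing_error(responses[name], targets[name]) < criterion
        for name in targets
    )


targets = {
    "chair": clock_to_azimuth(12),
    "computer": clock_to_azimuth(6),
    "vacuum cleaner": clock_to_azimuth(3),  # hypothetical assignment
    "file cabinet": clock_to_azimuth(9),    # hypothetical assignment
}
# Hypothetical pointing responses (deg) from one learning trial.
responses = {"chair": 355.0, "computer": 184.0, "vacuum cleaner": 96.0, "file cabinet": 262.0}
print(criterion_met(responses, targets))
```

Wrapping the difference into the range from −180° to 180° keeps a response of 355° to a target at 0° from being scored as a 355° error.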

Fig. 5.

Schematic drawing of the overhead view of the spatial layout used in the imagined preview condition in Experiment 2. The targets consisted of four familiar objects (a chair, vacuum, computer, and file cabinet) surrounding the rotation table. The targets were described using spatial language (e.g., “computer, 6 o’clock, 5 feet”). Participants sat in a chair fixed in the center of the rotation table, which was situated in the middle of the room during the learning and testing phases.
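The clock-face descriptions and the 10° learning criterion described for the learning phase can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function names, the clockwise-positive sign convention, and the example pointing responses are hypothetical and not taken from the study. Only the chair (12 o'clock) and computer (6 o'clock) directions come from the text.

```python
def clock_to_azimuth(hour):
    """Convert a clock-face direction to egocentric azimuth in degrees.

    Assumed convention: 12 o'clock = 0 deg (straight ahead), increasing
    clockwise, so 3 o'clock = 90, 6 o'clock = 180, 9 o'clock = 270.
    """
    return (hour % 12) * 30.0


def signed_pointing_error(response_deg, target_deg):
    """Smallest signed angular difference (response minus target), in (-180, 180]."""
    diff = (response_deg - target_deg) % 360.0
    return diff - 360.0 if diff > 180.0 else diff


def meets_learning_criterion(responses, targets, threshold_deg=10.0):
    """True when every absolute pointing error is below the threshold."""
    return all(
        abs(signed_pointing_error(responses[name], targets[name])) < threshold_deg
        for name in targets
    )


# Directions given in the text; the pointing responses are made up for illustration.
targets = {"chair": clock_to_azimuth(12), "computer": clock_to_azimuth(6)}
responses = {"chair": 356.0, "computer": 187.5}
print(meets_learning_criterion(responses, targets))
```

Wrapping the difference into (−180°, 180°] matters for targets near straight ahead, where a response of 356° to a target at 0° is a 4° error rather than a 356° one.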

4.1.3.1.2. Experimental phase

The experimental trials were identical to those used in the Preview condition in Experiment 1, except here, participants were not allowed to view the environment prior to each body rotation. Instead, prior to each body rotation, participants were informed that they were back at the origin (0°) and instructed to get reoriented to their starting orientation in the imagined layout. They were asked to “get a clear image in mind of where each of the imagined objects is located” [31]. No error feedback was given.

4.1.3.2. No-Preview Trials

The experimental procedures in the No-Preview trials were identical to those used in the No-Preview trials in Experiment 1.

4.1.4. Analysis

As in Experiment 1, responses more than four standard deviations from the mean of a participant’s other 7 responses for a particular condition were omitted prior to analysis. This resulted in the removal of approximately 3.0% of the data. Constant and variable errors for the remaining data were calculated and ANOVAs were conducted as in Experiment 1. Participants’ mean responses in each condition are shown in Fig. 2 (right-hand panel).
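The exclusion rule and the two error measures described above can be sketched in Python as follows. This is a minimal illustration, not the authors' analysis code: the function names and the example responses are hypothetical, and the use of the sample standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics


def exclude_outliers(responses, n_sd=4.0):
    """Drop any response lying more than n_sd standard deviations from the
    mean of the *other* responses in the same condition (leave-one-out)."""
    kept = []
    for i, value in enumerate(responses):
        others = responses[:i] + responses[i + 1:]
        centre = statistics.mean(others)
        spread = statistics.stdev(others)  # sample SD; an assumption
        if abs(value - centre) <= n_sd * spread:
            kept.append(value)
    return kept


def constant_error(responses, target_deg):
    """Mean signed error (response minus target), in degrees."""
    return statistics.mean(r - target_deg for r in responses)


def variable_error(responses):
    """Within-subject standard deviation of the responses, in degrees."""
    return statistics.stdev(responses)


# Hypothetical pointing responses (deg) from one participant, 80 deg rotation.
trial_responses = [78.0, 82.0, 80.0, 85.0, 79.0, 81.0, 83.0, 200.0]
kept = exclude_outliers(trial_responses)
print(kept, constant_error(kept, 80.0), variable_error(kept))
```

Comparing each response with the mean and spread of the remaining responses keeps a single extreme value from inflating the very standard deviation used to judge it.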

4.2. Results

4.2.1. Variable error

The mean variable error for each condition is plotted in Fig. 3. An ANOVA showed an effect of Context (F [1, 17] = 7.31; MSE = 3530.81; p = 0.015), with standard deviations being approximately 82% higher in No-Preview than in Imagined Preview conditions (averaging 24.67° and 13.52°, respectively). There was a main effect of Rotation Magnitude (F [2, 34] = 12.34; MSE = 2219.92; p < 0.001), with standard deviations tending to increase with rotation angle. Table 1 gives the mean coefficients of variation. Pairwise planned contrasts (alpha = 0.05) showed significant differences between all pairs of rotation magnitudes except the 80° and 140° rotations. There was no main effect of Block Order (F [1, 17] = 0.003; p = 0.956). There were no statistically significant interactions (Context × Block Order: F [1, 17] = 0.001; Rotation Magnitude × Block Order: F [2, 34] = 1.14; Context × Rotation Magnitude: F [2, 34] = 1.90; Context × Rotation Magnitude × Block Order: F [2, 34] = 1.85; all p’s > 0.05).
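The size of the Context effect can be expressed relative to either condition, and the two percentages differ. The short Python calculation below uses only the two mean standard deviations reported above; the variable names are illustrative.

```python
sd_no_preview = 24.67        # mean SD (deg), No-Preview, as reported above
sd_imagined_preview = 13.52  # mean SD (deg), Imagined Preview, as reported above

# Increase relative to the Imagined Preview baseline.
pct_higher = (sd_no_preview / sd_imagined_preview - 1.0) * 100.0

# Reduction relative to the No-Preview baseline.
pct_lower = (1.0 - sd_imagined_preview / sd_no_preview) * 100.0

print(f"No-Preview SDs are {pct_higher:.0f}% higher; "
      f"Imagined Preview SDs are {pct_lower:.0f}% lower.")
```

The 82% figure therefore describes how much larger the No-Preview standard deviations are; stated as a reduction from the No-Preview baseline, the same difference is about 45%.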

4.2.2. Constant error

The mean signed errors are plotted in Fig. 4. An ANOVA on the constant (signed) errors showed no effect of Context (F [1, 17] = 0.23; p = 0.638). The overall mean constant errors in Imagined Preview and No-Preview trials were 10.83° and 13.69°, respectively. There was an effect of Rotation Magnitude (F [2, 34] = 6.57; MSE = 4181.20; p = 0.014). Pairwise planned contrasts (alpha = 0.05) showed significant differences between all pairs of rotation magnitudes except the 40° and 80° rotations: the mean errors for 40°, 80° and 140° rotation trials were 6.56°, 5.81° and 24.40°, respectively. There was no main effect of block order (F [1,17] = 0.32; p = 0.578). There were no statistically significant interactions (Context × Block Order: F [1, 17] = 1.65; Rotation Magnitude × Block Order: F [2, 34] = 0.14; Context × Rotation Magnitude: F [2, 34] = 0.78; Context × Rotation Magnitude × Block Order: F [2, 34] = 1.95; all p’s > 0.05).

4.3. Discussion

We predicted that spatial representations constructed from spatial language would function in a similar way to visually-derived representations, acting to enhance angular path integration by decreasing uncertainty in participants’ self-location estimates. Specifically, we expected that the standard deviations in the Imagined Preview condition would be significantly lower than those in the No-Preview trials. Results showed that the standard deviations were indeed substantially lower in the Imagined Preview trials than in the No-Preview trials (13.52° versus 24.67° on average; that is, No-Preview standard deviations were approximately 82% higher).

Interestingly, participants in the Imagined Preview condition tended to overshoot at the 140° body rotation magnitude (averaging 24.4°; see Fig. 4). This overshooting contrasts with the general pattern of undershooting found in the Preview conditions of Experiment 1 (averaging −17.21°) and of our previous work [1]. The overshooting may be due to the fact that the imagined layout of targets was now acquired through spatial language. Unlike in the visual preview condition of Experiment 1, participants here were cued to pay attention to targets in the rear hemispace; in the learning phase of the Imagined Preview condition, participants were required to encode and memorize an object located at 180°. Therefore, when integrating self-motion signals into this internal framework, participants may have used the object located at 180° rather than 90° as an “anchor” or reference point. The presence of a rearward object in spatial memory may have caused estimates of displacement to migrate towards 180°, possibly explaining the tendency to overshoot at the 140° body rotation.

The increase in within-subject response precision in the Imagined Preview condition (particularly for the larger body rotations) supports the hypothesis that access to a remembered spatial framework, and not information gained from the visual modality per se, enhances angular path integration. The fact that the results from Experiment 1 were replicated using spatial language is consistent with Loomis’ functional equivalence hypothesis [29]. These findings suggest that stored spatial representations gained from spatial language are functionally equivalent to those gained from direct visual previews in an angular path integration task. Interestingly, these results held true even though the “previews” (imagined preview in this case) were extremely impoverished compared to the visual previews of the well-lit laboratory in Experiment 1. Imagining objects located in directions aligned with salient body axes is sufficient to elicit the benefit for angular path integration. Work by Israël et al. [18] (discussed in more detail below in Section 5) suggests that even viewing a point source of light in the dark prior to undergoing a body rotation may be sufficient to elicit similar effects. Exploring the boundary conditions for such effects (e.g., level of visual detail required, the extent of effect under a wider range of rotation magnitudes, etc.) remains an interesting question for future research.

5. General discussion

This study examined the influence of stored spatial representations constructed from visual previews and spatial language on angular path integration. In both cases, response precision in indicating the magnitude of whole-body rotations was significantly enhanced when participants were provided with “previews” (whether visual or imagined) before the rotations. These results held true when head and eye movements were restricted during the body rotations, thereby requiring the use of path integration for self-motion updating during the rotation. Building on our previous work [1], the benefit of stored spatial representations for path integration was manifested primarily in terms of increased response precision. Previews had relatively little effect on accuracy. Our interpretation is that spatial memory helps decrease the variance in the underlying distribution of possible orientations when an individual updates on the basis of idiothetic signals. Subjectively, this might be experienced as an increased certainty of one’s heading when remembering a surrounding environment. Our results suggest that this increased certainty plays a relatively small role in decreasing systematic errors in self-motion sensing or response calibration.

These results add to the growing literature suggesting that sensory self-motion signals must not be considered the sole inputs to path integration; cognitive factors also play a prominent role [12,18,37,38,44,52,53]. Our Experiment 1 is consistent with the results of Israël et al. [18], who examined a similar task in which participants underwent passive, whole-body rotations in the dark and then attempted to reproduce the magnitude of the rotation by using a joystick to turn their chair back to the initial heading. The researchers did not explicitly report within-subject response variability, but their results suggest that the variability likely decreased when participants viewed a point of light straight ahead in the dark before undergoing the body rotation, relative to conditions in which there was no prior stimulus. Our work confirms and quantifies this effect using a different response mode, and expands the focus to include imagined “previews”.

Angular path integration performance in the current paradigm is based on several component subprocesses. At a minimum, participants must (1) sense the body rotation (largely on the basis of vestibular signals), (2) integrate these self-motion signals over time to yield an estimate of the magnitude of the rotation relative to the initial heading, and (3) manually aim the pointer in a way that reflects the updated location of a reference object positioned straight ahead prior to the body rotation. In our paradigm, the sensory signals available for sensing the rotation were closely matched between the preview and no-preview conditions in both experiments. Thus, the increased response precision is unlikely to stem from this subprocess. In terms of the possible contribution of biases associated with the pointing device, previous work has shown that biases do occur when participants use pointing devices similar to the one used here, even when no self-motion updating is involved [1,2,39]. However, the same pointing device was used in both the preview and no-preview conditions, and the presence or absence of previews was manipulated within-subjects, so there should be no differences in terms of how the pointer is used between conditions.
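Subprocess (2) above, the temporal integration of self-motion signals, can be illustrated with a toy Python simulation. This is a sketch under loudly stated assumptions and not a model proposed in this paper: the raised-cosine velocity profile, the 4-s rotation duration, the Gaussian noise levels, and all function names are hypothetical choices made only for illustration.

```python
import math
import random
import statistics


def velocity_profile(total_deg, duration_s, dt):
    """Raised-cosine angular velocity samples (deg/s) whose integral is total_deg."""
    n = int(round(duration_s / dt))
    peak = 2.0 * total_deg / duration_s
    return [0.5 * peak * (1.0 - math.cos(2.0 * math.pi * (i + 0.5) / n))
            for i in range(n)]


def integrate_rotation(velocities, dt, noise_sd=0.0, rng=None):
    """Sum (velocity + sensory noise) * dt to estimate angular displacement."""
    rng = rng or random.Random()
    heading = 0.0
    for v in velocities:
        heading += (v + rng.gauss(0.0, noise_sd)) * dt
    return heading


def simulate(total_deg, noise_sd, n_trials=200, seed=0):
    """Return (mean, SD) of the displacement estimate over repeated noisy trials."""
    rng = random.Random(seed)
    dt = 0.01  # assumed sampling step (s)
    profile = velocity_profile(total_deg, duration_s=4.0, dt=dt)
    estimates = [integrate_rotation(profile, dt, noise_sd, rng)
                 for _ in range(n_trials)]
    return statistics.mean(estimates), statistics.stdev(estimates)


for noise in (25.0, 50.0):  # hypothetical noise levels (deg/s)
    mean_est, sd_est = simulate(140.0, noise)
    print(f"noise_sd={noise}: mean={mean_est:.1f} deg, SD={sd_est:.1f} deg")
```

In this toy setting, zero-mean noise in the integration stage widens the spread of the displacement estimates while leaving their mean near the true rotation, which parallels the distinction between variable and constant error. The sketch does not attempt to model how spatial memory might reduce that noise.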

Ruling out factors (1) and (3) in the analysis above thus implicates integration of self-motion signals (2) as the likely subprocess of path integration that is impacted by stored spatial representations. Our interpretation is that stored spatial representations can act as a framework that provides a spatial context against which incoming vestibular signals are evaluated and integrated [40]. This spatial framework enhances the precision of the estimated body heading during the rotation. To some extent, spatial memory may contribute to this enhanced precision by helping to specify the origin of rotation more precisely. Experiment 2 shows that the source modality of the stored spatial representation is not restricted to vision, and may in fact be entirely imaginary – e.g., constructed purely on the basis of experimental instructions using spatial language. This finding raises the intriguing possibility that training on the use of spatial memory and other cognitive strategies, such as mental imagery, may improve navigational skills in poor navigators. In fact, there is a growing body of evidence from research in sports psychology and cognitive neuroscience that visual imagery can facilitate performance on a wide variety of motor tasks (e.g., dart throwing, nonvisual walking, etc.) [32,50]. Our experiments here used a relatively restricted range of body rotation magnitudes and directions; an interesting topic for future research is to investigate the generality of these findings in more real-world circumstances, including larger rotation magnitudes and both CW and CCW directions. The experiments we described here also suggest that there are substantial individual differences in determining body rotation magnitude in these tasks: the No-Preview conditions of Experiments 1 and 2 were methodologically identical, and yet a comparison between Figs. 4a and 4b shows that mean constant errors in the No-Preview conditions tended to differ between experiments. These differences are illustrated by Fig. 2, which shows that in some cases individual subjects performed quite differently from the rest of the cohort. Future study of individual differences in this paradigm would be informative.

In sum, these findings emphasize the importance of the interaction between “higher-level” (cognitive) factors and “lower-level” (e.g., vestibular) information during nonvisual navigation. Vestibular-cognitive interactions are not well understood, yet interest in them is growing owing to recent demonstrations of vestibular projections to the hippocampus, as well as to other vestibularly-driven cortical areas (see [15] for a review). Taking the results of Experiments 1 and 2 together with our previous work [1], spatial memory clearly exerts a robust effect on the precision of angular path integration. Studies on self-motion updating often rotate participants over and over, without providing any visual experience between trials (e.g., [18,21,22,45,52]). Our work suggests that this methodology is not optimal for eliciting peak performance. In particular, when no previews are provided, the precision with which participants update angular displacements can be underestimated significantly.

Acknowledgments

This work was supported in part by National Institutes of Health (NIH) Grant R01 NS052137 to JP. The authors thank Rachael Hockfield, Charlotte Nordby, and Carly Kontra for their help in conducting these experiments.

Footnotes

1. In our usage, “sensory inputs” are those which are largely driven by incoming signals from receptor activity. By “cognitive inputs” we mean inputs which are derived from stored information, such as working memory or long-term memory representations. In this view, when there is no real-time visual input, sensory processing of visual information ceases, but other types of spatial representations may continue to exist and allow additional “cognitive processing”. We feel that these remembered spatial representations may be generated from and based largely on recent sensory inputs (i.e., visual previews), but under our definition they are no longer considered “sensory”.

2. The objects selected were visible in the real environment before participants entered the curtained circular enclosure, but they were not visible within the enclosure and their locations in the real environment did not correspond to the verbally-described locations used in the experiment. The distribution of objects in all four directions in the horizontal plane of the imagined environment is based on the Spatial Framework Theory [13]. According to this theory, during navigation people establish spatial mental representations of the objects in the surrounding environment out of extensions of three major axes of the body: head-foot, front-back, and left-right. We chose target directions that correspond with the major horizontal body-based axes because we felt they would be easily maintained in working memory with little memory load and with little decay in the precision of the internal representation over time [16].

References

- 1. Arthur JC, Philbeck JW, Chichka D. Spatial memory enhances the precision of angular self-motion updating. Experimental Brain Research. 2007;183:557–568. doi: 10.1007/s00221-007-1075-0.

- 2. Arthur JC, Philbeck JW, Sargent J, Dopkins S. Mis-perception of exocentric directions in auditory space. Acta Psychologica. 2008;129:72–82. doi: 10.1016/j.actpsy.2008.04.008.

- 3. Avraamides MN. Spatial updating of environments described in text. Cognitive Psychology. 2003;47:402–431. doi: 10.1016/s0010-0285(03)00098-7.

- 4. Avraamides MN, Klatzky RL, Loomis JM, Golledge RG. Use of cognitive versus perceptual heading during imagined locomotion depends on the response mode. Psychological Science. 2004;15:403–408. doi: 10.1111/j.0956-7976.2004.00692.x.

- 5. Brandt T, Dieterich M. The vestibular cortex: Its locations, functions, and disorders. Annals of the New York Academy of Sciences. 1999;871:293–312. doi: 10.1111/j.1749-6632.1999.tb09193.x.

- 6. Burak Y, Fiete IR. Accurate path integration in continuous attractor network models of grid cells. PLoS Computational Biology. 2009;5:e1000291. doi: 10.1371/journal.pcbi.1000291.

- 7. Calton JL, Taube JS. Where am I and how will I get there from here? A role for posterior parietal cortex in the integration of spatial information and route planning. Neurobiology of Learning and Memory. 2009;91:186–196. doi: 10.1016/j.nlm.2008.09.015.

- 8. Cullen KE, Roy JE. Signal processing in the vestibular system during active versus passive head movements. Journal of Neurophysiology. 2004;91:1919–1933. doi: 10.1152/jn.00988.2003.

- 9. Dieterich M, Bense S, Lutz S, Drzezga A, Stephan T, Bartenstein P, Brandt T. Dominance for vestibular cortical function in the non-dominant hemisphere. Cerebral Cortex. 2003;13:994–1007. doi: 10.1093/cercor/13.9.994.

- 10. Etienne AS, Maurer R, Séguinot V. Path integration in mammals and its interaction with visual landmarks. Journal of Experimental Biology. 1996;199:201–209. doi: 10.1242/jeb.199.1.201.

- 11. Fasold O, von Brevern M, Kuhberg M, Ploner CJ, Villringer A, Lempert T, Wenzel R. Human vestibular cortex as identified with caloric stimulation in functional magnetic resonance imaging. Neuroimage. 2002;17:1384–1393. doi: 10.1006/nimg.2002.1241.

- 12. Féry YA, Magnac R, Israël I. Commanding the direction of passive whole-body rotations facilitates egocentric spatial updating. Cognition. 2004;91:B1–B10. doi: 10.1016/j.cognition.2003.05.001.

- 13. Franklin N, Tversky B. Searching imagined environments. Journal of Experimental Psychology: General. 1990;119:63–76.

- 14. Guldin WO, Akbarian S, Grüsser OJ. Cortico-cortical connections and cytoarchitectonics of the primate vestibular cortex: a study in squirrel monkeys (Saimiri sciureus). Journal of Comparative Neurology. 1992;326:375–401. doi: 10.1002/cne.903260306.

- 15. Hanes DA, McCollum G. Cognitive-vestibular interactions: a review of patient difficulties and possible mechanisms. Journal of Vestibular Research. 2006;16:75–91.

- 16. Haun DB, Allen GL, Wedell DH. Bias in spatial memory: a categorical endorsement. Acta Psychologica. 2005;118:149–170. doi: 10.1016/j.actpsy.2004.10.011.

- 17. Highstein SM. How does the vestibular part of the inner ear work? In: Baloh RW, Halmagyi GM, editors. Disorders of the vestibular system. Oxford: Oxford University Press; 1996. pp. 3–11.

- 18. Israël I, Bronstein AM, Kanayama R, Faldon M, Gresty MA. Visual and vestibular factors influencing vestibular “navigation”. Experimental Brain Research. 1996;112:411–419. doi: 10.1007/BF00227947.

- 19. Israël I, Fetter M, Koenig E. Vestibular perception of passive whole-body rotation about horizontal and vertical axes in humans: goal-directed vestibulo-ocular reflex and vestibular memory-contingent saccades. Experimental Brain Research. 1993;96:335–346. doi: 10.1007/BF00227113.

- 20. Israël I, Lecoq C, Capelli A, Golomer E. Vestibular memory-contingent whole-body return: Brave exocentered dancers. Annals of the New York Academy of Sciences. 2005;1039:306–313. doi: 10.1196/annals.1325.029.

- 21. Israël I, Rivaud S, Gaymard B, Berthoz A, Pierrot-Deseilligny C. Cortical control of vestibular-guided saccades in man. Brain. 1995;118:1169–1183. doi: 10.1093/brain/118.5.1169.

- 22. Ivanenko Y, Grasso R. Integration of somatosensory and vestibular inputs in perceiving the direction of passive whole-body motion. Cognitive Brain Research. 1997;5:323–327. doi: 10.1016/s0926-6410(97)00011-6.

- 23. Ivanenko Y, Grasso R, Israël I, Berthoz A. Spatial orientation in humans: Perception of angular whole-body displacements in two-dimensional trajectories. Experimental Brain Research. 1997;117:419–427. doi: 10.1007/s002210050236.

- 24. Kahane P, Hoffmann D, Minotti L, Berthoz A. Reappraisal of the human vestibular cortex by cortical electrical stimulation study. Annals of Neurology. 2003;54:615–624. doi: 10.1002/ana.10726.

- 25. Kelly JW, McNamara TP, Bodenheimer B, Carr TH, Rieser JJ. The shape of human navigation: how environmental geometry is used in maintenance of spatial orientation. Cognition. 2008;109:281–286. doi: 10.1016/j.cognition.2008.09.001.

- 26. Klatzky RL, Beall AC, Loomis JM, Golledge RG, Philbeck JW. Human navigation ability: Tests of the encoding error model of path integration. Spatial Cognition and Computation. 1999;1:31–65.

- 27. Klatzky RL, Lippa Y, Loomis JM, Golledge RG. Learning directions of objects specified by vision, spatial audition, or auditory spatial language. Learning and Memory. 2002;9:364–367. doi: 10.1101/lm.51702.

- 28. Klatzky RL, Lippa Y, Loomis JM, Golledge RG. Encoding, learning and spatial updating of multiple object locations specified by 3-D sound, spatial language, and vision. Experimental Brain Research. 2003;149:48–61. doi: 10.1007/s00221-002-1334-z.

- 29. Loomis JM, Klatzky RL, Avraamides M, Lippa Y, Golledge RG. Functional equivalence of spatial images produced by perception and spatial language. In: Mast F, Jäncke L, editors. Spatial Processing in Navigation, Imagery, and Perception. Springer; New York: 2007. pp. 29–48.

- 30. Loomis JM, Klatzky RL, Golledge RG, Philbeck JW. Human navigation by path integration. In: Golledge RG, editor. Wayfinding Behavior: Cognitive Mapping and Other Spatial Processes. Johns Hopkins University Press; Baltimore, MD: 1999. pp. 125–152.

- 31. Loomis JM, Lippa Y, Klatzky RL, Golledge RG. Spatial updating of locations specified by 3-D sound and spatial language. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:335–345. doi: 10.1037//0278-7393.28.2.335.

- 32. Lutz RS. Covert muscle excitation is outflow from the central generation of motor imagery. Behavioural Brain Research. 2003;140:149–163. doi: 10.1016/s0166-4328(02)00313-3.

- 33. Mergner T, Nasios G, Maurer C, Becker W. Visual object localisation in space: Interaction of retinal, eye position, vestibular and neck proprioceptive information. Experimental Brain Research. 2001;141:33–51. doi: 10.1007/s002210100826.

- 34. Metcalfe T, Gresty M. Self-controlled reorienting movements in response to rotational displacements in normal subjects and patients with labyrinthine disease. Annals of the New York Academy of Sciences. 1992;656:695–698. doi: 10.1111/j.1749-6632.1992.tb25246.x.

- 35. Mittelstaedt ML, Mittelstaedt H. Homing by path integration in a mammal. Naturwissenschaften. 1980;67:566–567.

- 36. Moser EI, Kropff E, Moser MB. Place cells, grid cells, and the brain’s spatial representation system. Annual Review of Neuroscience. 2008;31:69–89. doi: 10.1146/annurev.neuro.31.061307.090723.

- 37. Okada T, Grunfeld E, Shallo-Hoffmann J, Bronstein AM. Vestibular perception of angular velocity in normal subjects and in patients with congenital nystagmus. Brain. 1999;122:1293–1303. doi: 10.1093/brain/122.7.1293.

- 38. Philbeck JW, O’Leary S. Remembered landmarks enhance the precision of path integration. Psicologica. 2005;26:7–24.

- 39. Philbeck JW, Sargent J, Arthur JC, Dopkins S. Large manual pointing errors, but accurate verbal reports, for indications of target azimuth. Perception. 2008;37:511–534. doi: 10.1068/p5839.

- 40. Rieser JJ. Dynamic spatial orientation and the coupling of representation and action. In: Golledge RG, editor. Wayfinding Behavior: Cognitive Mapping and Other Spatial Processes. Johns Hopkins University Press; Baltimore, MD: 1999. pp. 168–190.

- 41. Seemungal BM, Rizzo V, Gresty MA, Rothwell JC, Bronstein AM. Posterior parietal rTMS disrupts human path integration during a vestibular navigation task. Neuroscience Letters. 2008;437:88–92. doi: 10.1016/j.neulet.2008.03.067.

- 42. Sharp PE, Tinkelman A, Cho J. Angular velocity and head direction signals recorded from the dorsal tegmental nucleus of Gudden in the rat: Implications for path integration in the head direction cell circuit. Behavioral Neuroscience. 2001;115:571–588.

- 43. Shelton AL, McNamara TP. Visual memories from non-visual experiences. Psychological Science. 2001;12:343–347. doi: 10.1111/1467-9280.00363.

- 44. Siegler I. Idiosyncratic orientation strategies influence self-controlled whole-body rotations in the dark. Cognitive Brain Research. 2000;9:205–207. doi: 10.1016/s0926-6410(00)00007-0.

- 45. Siegler I, Viaud-Delmon I, Israël I, Berthoz A. Self-motion perception during a sequence of whole-body rotations in darkness. Experimental Brain Research. 2000;134:66–73. doi: 10.1007/s002210000415.

- 46. Stackman RW, Golob EJ, Bassett JP, Taube JS. Passive transport disrupts directional path integration by rat head directional cells. Journal of Neurophysiology. 2003;90:2862–2874. doi: 10.1152/jn.00346.2003.

- 47. Taube JS, Goodridge JP, Golob EJ, Dudchenko PA, Stackman RW. Processing the head direction cell signal: A review and commentary. Brain Research Bulletin. 1996;40:477–486. doi: 10.1016/0361-9230(96)00145-1.

- 48. Taylor HA, Tversky B. Descriptions and depictions of environments. Memory and Cognition. 1992;20:483–496. doi: 10.3758/bf03199581.

- 49. Taylor HA, Tversky B. Spatial mental models derived from survey and route descriptions. Journal of Memory and Language. 1992;31:261–282.

- 50. Vieilledent S, Kosslyn SM, Berthoz A, Giraudo MD. Does mental simulation of following a path improve navigation performance without vision? Cognitive Brain Research. 2003;16:238–249. doi: 10.1016/s0926-6410(02)00279-3.

- 51. Wang RF, Spelke ES. Updating egocentric representations in human navigation. Cognition. 2000;77:215–250. doi: 10.1016/s0010-0277(00)00105-0.

- 52. Yardley L, Gardner M, Lavie N, Gresty M. Attentional demands of perception of passive self-motion in darkness. Neuropsychologia. 1999;37:1293–1301. doi: 10.1016/s0028-3932(99)00024-x.

- 53. Yardley L, Papo D, Bronstein A, Gresty M, Gardner M, Lavie N. Attentional demands of continuously monitoring orientation using vestibular information. Neuropsychologia. 2002;40:373–383. doi: 10.1016/s0028-3932(01)00113-0.

- 54. Zwaan RA. The immersed experiencer: toward an embodied theory of language comprehension. In: Ross BH, editor. The Psychology of Learning and Motivation. Vol. 44. Academic Press; New York: 2004. pp. 35–58.

- 55. Zwaan RA, Radvansky GA. Situation models in language comprehension and memory. Psychological Bulletin. 1998;123:162–185. doi: 10.1037/0033-2909.123.2.162.