Abstract

Perception of the human body appears to involve predictive simulations that project forward to track unfolding body-motion events. Here we use representational momentum (RM) to investigate whether implicit knowledge of a learned arbitrary system of body movement such as sign language influences this prediction process, and how this compares to implicit knowledge of biomechanics. Experiment 1 showed greater RM for sign language stimuli in the correct direction of the sign than in the reverse direction, but unexpectedly this held true for non-signers as well as signers. Experiment 2 supported two biomechanical explanations for this result (an effect of downward movement, and an effect of the direction in which the movement had actually been performed by the model), and Experiments 3 and 4 found no residual enhancement of RM in signers when these factors were controlled. In fact, surprisingly, the opposite was found: signers showed reduced RM for signs. Experiment 5 verified the effect of biomechanical knowledge by testing arm movements that are easy to perform in one direction but awkward in the reverse direction, and found greater RM for the easy direction. We conclude that while perceptual prediction is shaped by implicit knowledge of biomechanics (the awkwardness effect), it is surprisingly insensitive to expectations derived from learned movement patterns. Results are discussed in terms of recent findings on the mirror system.

Human actions are perceived differently from other stimuli. In particular, implicit knowledge derived from the observer's own body representation is engaged when watching or listening to the actions of others (for reviews see Rizzolatti & Sinigaglia, 2008; Schütz-Bosbach & Prinz, 2007; Shmuelof & Zohary, 2007; Wilson & Knoblich, 2005; recent results include Aglioti, Cesari, Romani & Urgesi, 2008; Saunier, Papaxanthis, Vargas & Pozzo, 2008). Current theories postulate that the motor programs activated in this way contribute to a simulation, or forward model, which runs forward in time from a given perceptual input, tracking the probable course of the unfolding action in parallel to the external event (Knoblich & Flach, 2001; Prinz, 2006; Wilson, 2006; Wilson & Knoblich, 2005).

These forward models are not limited to the case of human action. Beginning with the discovery of representational momentum (Freyd & Finke, 1984; see Hubbard, 2005, for review) and the flash-lag effect (Nijhawan, 1994), and continuing on to more recent neuropsychological studies (e.g. Guo et al., 2007; Mulliken, Musallam & Andersen, 2008; Rao et al., 2004; Senior, Ward, & David, 2002), it has become clear that perception of a variety of predictable types of motion involves mental simulation that anticipates the incoming signal, rather than lagging behind it (see Nijhawan, 2008, for review). Such mental simulation has substantial advantages: expectations generated by the forward model can provide top-down input to ongoing perception, resulting in a more robust percept; and motor control for interacting with the world can be planned in an anticipatory fashion, allowing rapid, accurate interception of moving targets despite signal transmission delays within the nervous system.

What makes the case of human action different is the contribution of the observer's own body-representation to the simulation. For non-human actions, movement regularities based on simple physical principles, such as momentum, oscillation, collision, friction, and gravity, are used to generate predictions (see Hubbard, 2005, for review). In contrast, the prediction of human movement can tap into internal models of the body, including hierarchical limb structure, the dynamics of muscles, limitations on joint angles, and the forces involved in movement control (e.g. Desmurget & Grafton, 2000; Ito, 2008; Kawato, 1999; Wolpert & Flanagan, 2001).

Consideration of this possibility also raises a further question: whether movement patterns that are highly familiar, but learned and in some sense arbitrary, can generate perceptual expectations that result in representational momentum. Categories of movement that might qualify include performance skills such as dance, martial arts, and gymnastics; and the linguistic movements involved in signed languages such as American Sign Language (ASL). In this paper we investigate whether long-term daily experience with ASL can influence RM for body motions. Two previous lines of research suggest that it might.

One line of research concerns the effect of sign language expertise on perception of the human body. Deaf native signers of ASL, in contrast to non-signers, show categorical perception of the handshapes of ASL (Baker, Idsardi, Golinkoff & Petitto, 2005; Emmorey, McCullough & Brentari, 2003); are better at detecting subtle changes in facial configuration (Bettger, Emmorey, McCullough & Bellugi, 1997; McCullough & Emmorey, 1997); and are more likely to perceive paths that conform to real signs in apparent motion displays showing human arms (Wilson, 2001). In addition, brain imaging studies have found different patterns of activation in signers versus non-signers when perceiving both linguistic and non-linguistic hand movements and facial expressions (Corina et al., 2007; McCullough, Emmorey & Sereno, 2005).

The second line of research concerns object-specific effects on RM, though not involving the human body. There is evidence that an object's identity can influence the strength of the RM effect in particular directions. Objects such as arrows whose shapes have an inherent perceptual directionality (cf. Palmer, 1980) show stronger RM in the direction that they point (Freyd & Pantzer, 1995; Nagai & Yagi, 2001). Inanimate objects reliably show stronger RM downward than upward, presumably reflecting a perceptual expectation based on gravity (e.g. Nagai, Kazai & Yagi, 2002), but a rocketship, which typically self-propels upward, does not show this bias. In fact, the rocketship shows stronger RM than normally stationary objects, possibly in various directions (up, down, rightward), or possibly upward only (Reed & Vinson, 1996; Vinson & Reed, 2002; but see Halpern & Kelly, 1993, and Nagai & Yagi, 2001, for an absence of a self-propelled effect).

In Experiment 1, we bring together these two lines of research (effects of ASL experience on perception; object-identity effects on RM) to ask whether a fluent signer's perceptual expectations for the arm and hand movements of ASL can affect the strength of RM for signs. In many cases, handshape and arm position uniquely identify a sign and thereby determine the direction in which the arm must move to produce it. Thus, fluent signers might be expected to show modulation of RM for stimuli based on these signs.

Experiment 1

Method

Participants

Two groups were tested. The nonsigners were twenty University of California Santa Cruz undergraduates who received course credit. All nonsigners reported that they had normal hearing and did not know any ASL or other sign language. The signers were ten deaf students from Gallaudet University in Washington D.C. who received monetary compensation. All signers used ASL as their primary language and were exposed to ASL from birth by their deaf parents (N=5) or before the age of five years (N=5) by hearing signing parents and/or pre-school teachers.

Stimuli

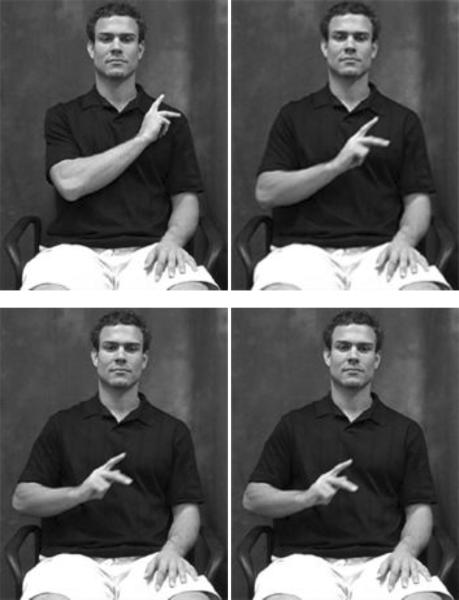

A native ASL signer (not one of the subjects) was filmed producing the sign KING (see Figure 1). Five frames were chosen from the video to be used as inducing stimuli, which we will refer to as frames a, b, c, d, and e. The frames were chosen so that the distance moved by the hand between frames was as nearly equal as possible. In the sign condition, frames a, b, and c were shown, thus progressing in the direction that the sign KING actually moves. In the reversed-sign condition, frames e, d, and c were shown. The arm moving in this direction results in a “nonsense sign” that is phonologically allowable in ASL but is not a meaningful sign. Frame c was always the last inducing stimulus, also called the memory stimulus.

Figure 1.

Video frames of the sign KING from Experiment 1 (originals in color). The first three frames are inducing stimuli a, b, and c. The fourth frame is the probe stimulus c+2.

In addition, five probe stimuli were used, only one of which was shown on any given trial. These consisted of frame c, and the four frames immediately surrounding frame c from the video – that is, frames c+1, c+2, c−1, and c−2. (Frame rate was 30 frames/sec, so that the probes differed from the memory stimulus by −67 ms, −33 ms, 0 ms, 33 ms, and 67 ms of movement as originally performed by the model.)
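The millisecond values in the preceding paragraph follow from the 30 frames/sec frame rate: a probe k frames from the memory stimulus corresponds to k × 1000/30 ms of the model's original movement. A minimal Python sketch of that arithmetic (the names are illustrative, not from the study):

```python
FRAME_RATE_HZ = 30  # video frame rate reported for the Experiment 1 stimuli

def probe_offset_ms(frame_offset, frame_rate_hz=FRAME_RATE_HZ):
    """Movement time separating probe frame c+k from memory frame c, in ms."""
    return frame_offset * 1000 / frame_rate_hz

# Probes c-2, c-1, c, c+1, c+2, rounded to the nearest millisecond.
offsets = {k: round(probe_offset_ms(k)) for k in (-2, -1, 0, 1, 2)}
print(offsets)  # {-2: -67, -1: -33, 0: 0, 1: 33, 2: 67}
```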

As a control condition, a directional movement that should be equally familiar to signers and non-signers was used. This consisted of a hand reaching for a mug. Inducing stimuli and probe stimuli were chosen in the same manner as described above.

Procedure

Each trial began with a fixation cross presented for 500 ms, followed by a blank interval of 250 ms. Next, four stimuli were presented for 250 ms each, with a 250 ms ISI. In the sign condition the stimuli were frames a, b, c, and one of the five probe stimuli (see Figure 1). In the reversed-sign condition the stimuli were frames e, d, c, and one of the five probe stimuli. Subjects were instructed to indicate by a keypress whether the final stimulus (the probe) was the same as or different from the immediately previous stimulus (the memory stimulus).
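As a rough illustration of the trial structure described above, the following Python sketch lays out event onsets and offsets in milliseconds. It assumes that the 250 ms ISI separates each pair of successive stimuli and that timing is otherwise exact; the response window after the probe is not specified in the text, so the sketch stops at probe offset. All names are hypothetical.

```python
FIXATION_MS = 500   # fixation cross
BLANK_MS = 250      # blank interval after fixation
STIMULUS_MS = 250   # duration of each inducing stimulus and of the probe
ISI_MS = 250        # blank interval between successive stimuli

def trial_timeline(stimuli):
    """Return (label, onset_ms, offset_ms) for each displayed event in one trial."""
    events = [("fixation", 0, FIXATION_MS)]
    t = FIXATION_MS + BLANK_MS  # first stimulus follows the post-fixation blank
    for i, label in enumerate(stimuli):
        if i > 0:
            t += ISI_MS  # ISI before every stimulus except the first
        events.append((label, t, t + STIMULUS_MS))
        t += STIMULUS_MS
    return events

# A sign-condition trial: inducing frames a, b, c followed by probe c+2.
for event in trial_timeline(["a", "b", "c", "probe c+2"]):
    print(event)
# last line printed: ('probe c+2', 2250, 2500)
```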

The sign and reversed-sign trials were pseudo-randomly mixed, as were trials with each of the five probes. The “KING” and “mug” conditions were blocked with order counterbalanced across subjects. Each block began with 10 practice trials, followed by 200 experimental trials (20 of each of the 10 possible stimulus × probe combinations).
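A block of this design can be sketched as a fully crossed list of direction × probe cells, each repeated 20 times, then shuffled. The exact pseudo-randomization constraints used in the study are not described, so this hypothetical sketch uses an unconstrained shuffle with an optional seed for reproducibility.

```python
import itertools
import random
from collections import Counter

DIRECTIONS = ("sign", "reversed")
PROBE_OFFSETS = (-2, -1, 0, 1, 2)  # probe frames c-2 ... c+2
REPS_PER_CELL = 20

def make_block(seed=None):
    """Return 200 shuffled trials: 20 of each direction x probe combination."""
    rng = random.Random(seed)
    trials = [
        (direction, probe)
        for direction, probe in itertools.product(DIRECTIONS, PROBE_OFFSETS)
        for _ in range(REPS_PER_CELL)
    ]
    rng.shuffle(trials)  # plain shuffle; no run-length or ordering constraints
    return trials

block = make_block(seed=1)
print(len(block), set(Counter(block).values()))  # 200 {20}
```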

Results and Discussion

For each condition, a weighted mean was calculated by multiplying the number of “same” responses for each probe position by an integer value assigned to that probe position, then summing across all probe positions and dividing by five (Freyd & Jones, 1994; Munger et al., 2006). Probe positions were assigned a positive integer when shifted forward relative to the direction of the inducing motion, and a negative integer when shifted backward. Thus, for the sign condition, frames c+1 and c+2 received values of 1 and 2, and frames c−1 and c−2 received values of −1 and −2; for the reversed-sign condition the signs were inverted, so that frames c+1 and c+2 received values of −1 and −2, and frames c−1 and c−2 received values of 1 and 2. Frame c always received a value of 0. The weighted mean is positive when more “same” responses occur with forward-shifted probes than with backward-shifted probes, and negative when the opposite is true.
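The weighted-mean computation can be sketched in Python as follows. This is a minimal illustration assuming per-condition counts of “same” responses keyed by probe frame offset (−2 to 2); the function names and the example counts are hypothetical, not data from the experiments.

```python
PROBE_OFFSETS = (-2, -1, 0, 1, 2)  # video-frame offsets of probes c-2 ... c+2

def probe_weight(frame_offset, direction):
    """Signed integer for a probe: positive when shifted forward along the inducing motion."""
    if direction == "sign":        # inducing frames a, b, c run toward c+1, c+2
        return frame_offset
    if direction == "reversed":    # inducing frames e, d, c run toward c-1, c-2
        return -frame_offset
    raise ValueError(f"unknown direction: {direction!r}")

def weighted_mean(same_counts, direction):
    """same_counts maps each probe frame offset to its number of 'same' responses."""
    total = sum(same_counts[k] * probe_weight(k, direction) for k in PROBE_OFFSETS)
    return total / len(PROBE_OFFSETS)  # divide by the five probe positions

# Hypothetical counts (out of 20 trials per probe), skewed toward c+1 and c+2.
counts = {-2: 2, -1: 8, 0: 15, 1: 12, 2: 6}
print(weighted_mean(counts, "sign"))      # 2.4
print(weighted_mean(counts, "reversed"))  # -2.4
```

The same counts yield opposite signs in the two conditions because "forward" refers to the direction of the inducing motion, not to the video frame order.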

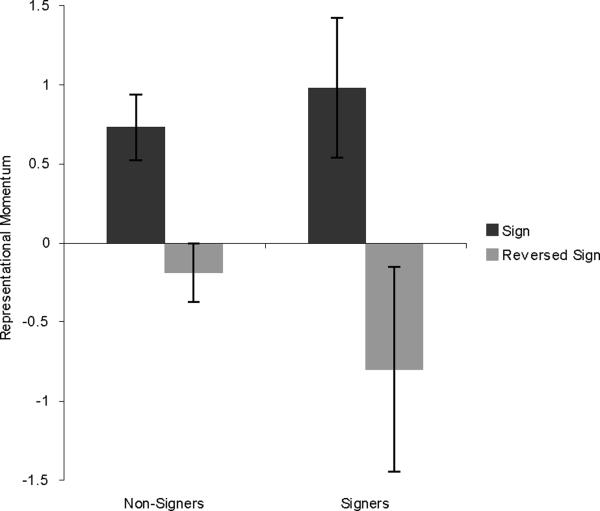

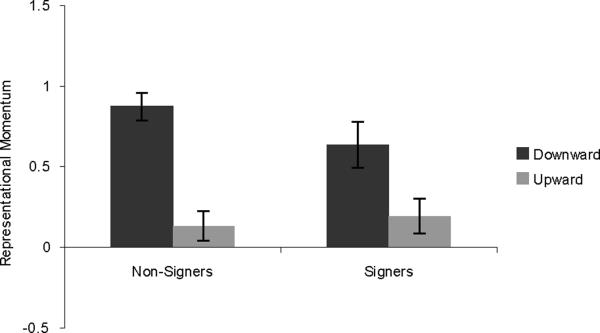

Results for the KING stimuli are shown in Figure 2. A 2×2 mixed-design ANOVA was run, with group (signers vs. non-signers) and direction (sign vs. reversed-sign) as factors. There was a main effect of direction, with the sign stimuli showing greater RM than the reversed-sign stimuli (F(1,28) = 11.318, p = .002). Surprisingly, there was no interaction between group and direction (F(1,28) = 1.148, p = .293), and the non-signers alone showed a significant effect of direction (t(19) = 3.149, p = .005). The effect of direction for signers was marginally significant (t(9) = 1.802, p = .052, one-tailed).

Figure 2.

Results from Experiment 1, showing RM for the ASL sign KING. Error bars represent standard error of the mean.

Results for the “mug” stimuli showed equally strong RM in both directions (F (1,28) < 1), indicating that this was a poor choice for a directional stimulus. In hindsight this is unsurprising, since a hand may be seen setting down a mug as often as picking one up. Therefore results from this condition are not discussed any further.

The results from the KING stimuli clearly show that something other than knowledge of ASL is contributing to stronger RM in the sign direction. In Experiment 2 we test two characteristics that may be contributing to this finding.

Experiment 2

Two features of the stimuli used in Experiment 1 may be responsible for the surprising finding of stronger RM in the sign direction than in the reversed direction among non-signers. First, the sign that was chosen moves downward. A general downward bias has been observed in RM for inanimate objects (e.g. Nagai, Kazai & Yagi, 2002), presumably due to an expectation of gravity. However, as noted above, objects that are self-propelled do not usually show this bias (Reed & Vinson, 1996; Vinson & Reed, 2002). Thus, it is likely that the general downward bias would not apply to human arm movements. It is possible, though, that a downward bias for arm movements may occur for a different reason: downward arm motions are easier, and the observer's implicit knowledge of this biomechanical fact may contribute to a more robust forward model.

Second, the sign direction was also the direction in which the stimuli had actually been performed by the signer during filming. It is possible that cues from joint angles, forearm rotation, or muscle tension indicated the direction of movement; in other words, the posture of an arm moving down may not look exactly the same as the posture of an arm moving up. This may include not only the posture shown in each frame considered individually, but also the sequence of postures across the frames. Consider, for example, the first three frames shown in Figure 1. If one imagines this as an upward movement sequence, from the third frame to the second to the first, it may be that the angle of the hand, or the implied motion of the shoulder joint, does not conform to how this movement would be performed most naturally. If subjects' perceptual systems are sensitive to these cues, then the cues may affect the forward model, boosting RM when going in the same direction as the inducing stimuli, and damping RM when going in the opposite direction.

To test these possibilities we chose the ASL sign HIGH, which moves upward, but filmed it being produced both upward (the actual sign) and downward (a phonologically legal nonsense sign). In addition, stimuli from both of these filmings were shown as-filmed and reversed. Only non-signers were tested in this experiment, as the purpose was only to examine the effects of biomechanical cues.

Method

Thirty-two University of California at Santa Cruz undergraduate nonsigners participated for course credit. A native signer of ASL was filmed producing the ASL sign HIGH, and also producing the sign with the direction of movement reversed. Each of these videos was used to select stimuli in the same manner as in Experiment 1. The procedure was the same as in Experiment 1, with the filmed-upward and filmed-downward conditions blocked and counterbalanced, while the as-filmed and reversed conditions were mixed. Participants were not told the meaning of the sign.

Results and Discussion

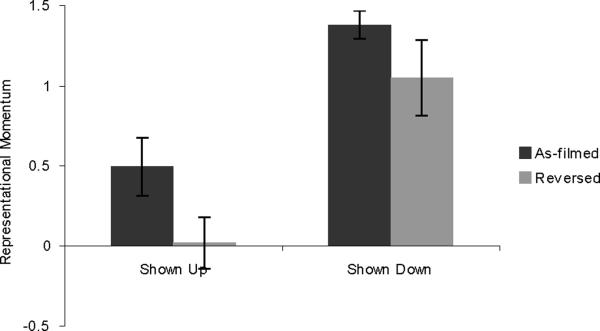

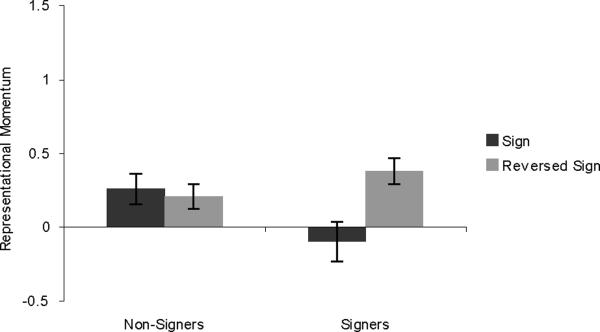

Results are shown in Figure 3. A 2×2 repeated-measures ANOVA found a main effect of shown-up vs. shown-down (F(1,31) = 33.448, p < .001), with participants showing greater RM for movement in the downward direction. There was also a main effect of as-filmed vs. reversed (F(1,31) = 12.970, p = .001), with participants showing greater RM for stimuli shown as originally filmed. There was no interaction (F(1,31) < 1).

Figure 3.

Results from Experiment 2, showing RM for the ASL sign HIGH, in non-signers only. Error bars represent standard error of the mean.

These results support our hypothesis that the non-signers from Experiment 1 had implicit knowledge about the shown movement, unrelated to the movement's status in ASL, and that this knowledge affected their RM. Observers were sensitive to the direction in which a movement had originally been filmed, and also showed stronger RM for downward arm movements despite the fact that human arms are self-propelled. Both of these factors reflect perceptual knowledge that is arguably biomechanical in nature. In Experiment 3, we controlled for these factors and again tested signers in order to ask whether knowledge of sign language affects RM.

Experiment 3

In this experiment, we used stimuli both from signs that move downward and signs that move upward. This allowed us to vary the direction relative to the ASL sign (sign vs. reversed-sign) independent of the absolute direction of the inducing motion (up vs. down). In other words, by using equal numbers of upward and downward signs, the effect of the downward bias should average out, allowing us to evaluate the effect of direction relative to the ASL sign.
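The logic of balancing upward and downward signs can be checked with a toy additive model in which RM receives a fixed bonus whenever the displayed motion is downward, plus an optional bonus for the sign's lexical direction. The model and its values are assumptions for illustration only; they are not estimates from this study.

```python
from fractions import Fraction

# Hypothetical additive model of RM; the values are illustrative, not data.
BASE = Fraction(1)
DOWN_BIAS = Fraction(1, 2)  # extra RM whenever the displayed motion is downward

def predicted_rm(displayed_down, matches_sign, sign_effect):
    return BASE + DOWN_BIAS * int(displayed_down) + sign_effect * int(matches_sign)

def sign_vs_reversed(sign_moves_down, sign_effect=Fraction(0)):
    """Mean RM in the sign direction minus mean RM in the reversed direction.

    sign_moves_down: one boolean per sign, True if the real sign moves downward.
    Reversing a sign flips its displayed vertical direction.
    """
    n = len(sign_moves_down)
    sign_mean = sum(predicted_rm(d, True, sign_effect) for d in sign_moves_down) / n
    rev_mean = sum(predicted_rm(not d, False, sign_effect) for d in sign_moves_down) / n
    return sign_mean - rev_mean

# Experiment 1 design: one downward sign (KING); the bias leaks into the contrast.
print(sign_vs_reversed([True]))                      # 1/2
# Experiment 3 design: two upward and two downward signs; the bias cancels.
print(sign_vs_reversed([False, False, True, True]))  # 0
```

With only a downward sign, the sign-vs-reversed contrast equals the sign effect plus the downward bias; with a balanced set it recovers the sign effect alone.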

In addition, we made changes to the filming procedure to reduce the biomechanical cues for direction of motion. Thus, this experiment controls for both biomechanical factors in order to test the effect of correct sign direction.

Method

Nonsigners were 26 University of California Santa Cruz undergraduates who received course credit. Signers were 21 deaf individuals who received monetary compensation. All signers used ASL as their primary and preferred language and were exposed to ASL from birth (N=11), before the age of seven years (N=5), or during adolescence (N = 5).

A native signer was filmed producing two signs that move upward (MORNING, RICH), and two signs that move downward (ESTABLISH, PHYSICAL). Signs were filmed only in the correct direction. Unlike Experiment 1, the signs were produced extremely slowly. Although the movement was smooth and continuous, the production more resembled passing through a series of arm positions than it resembled a naturally performed movement trajectory with the dynamics of acceleration and deceleration. This can be expected to reduce or eliminate the biomechanical movement cues that affected RM in the first two experiments. As will be described in the Results, this expectation was verified by demonstrating no effect of filmed direction in non-signers. An additional advantage of this technique is that it created more frames in the video from which to choose equidistant inducing stimuli as precisely as possible.
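The text does not state how the distance moved by the hand was measured. Assuming per-frame (x, y) hand coordinates were available (for example, from manual marking of each frame), a hypothetical sketch of choosing frames at approximately equal path-length spacing might look like this:

```python
import bisect
import math

def cumulative_path_length(positions):
    """Cumulative distance travelled by the hand up to each video frame."""
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(positions, positions[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    return cum

def pick_equidistant_frames(positions, n_frames=5):
    """Indices of n_frames frames whose path-length spacing is as equal as possible."""
    cum = cumulative_path_length(positions)
    chosen = []
    for i in range(n_frames):
        target = cum[-1] * i / (n_frames - 1)
        j = bisect.bisect_left(cum, target)
        # The nearest frame is either just before or at the insertion point.
        neighbours = [k for k in (j - 1, j) if 0 <= k < len(cum)]
        chosen.append(min(neighbours, key=lambda k: abs(cum[k] - target)))
    return chosen

# Hypothetical hand x-positions (pixels) from a movement that speeds up, then slows down.
xs = [0, 0.5, 1, 2, 4, 6, 7, 7.5, 8]
print(pick_equidistant_frames([(x, 0) for x in xs]))  # [0, 3, 4, 5, 8]
```

Filming very slowly yields more candidate frames, so the chosen frames can land closer to the equally spaced targets.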

Inducing stimuli and probe stimuli were chosen in the same manner as in Experiments 1 and 2, resulting in sign and reversed-sign stimulus sequences for all four signs. Conditions were mixed, with trials for all four signs, all five probe positions, and sign and reversed-sign stimuli presented in pseudo-random order. Sixteen practice trials were followed by 200 experimental trials.

Results and Discussion

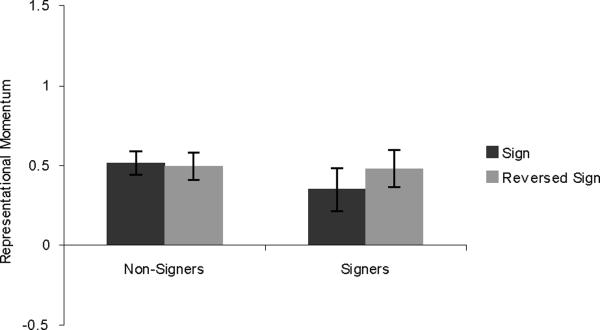

Results are shown in Figure 4. A 2×2 mixed-design ANOVA found no main effect of sign vs. reversed-sign (F(1,45) < 1). Nonsigners showed no effect of this variable (t(25) = .237, p = .815), verifying that we successfully eliminated the biomechanical effects of filming. This places us in a position to consider whether the signers show stronger RM for the sign direction. In fact, the signers do not show any such effect. The difference in the means is opposite to the predicted direction, and is not significant (t(20) = −1.212, p = .239). Thus, there was no residual effect of expectation based on sign language expertise.

Figure 4.

Results from Experiment 3, showing RM for signs in the correct direction and reversed signs. Error bars represent standard error of the mean.

In addition, because upward vs. downward inducing movement was orthogonal to sign vs. reversed-sign direction, it is possible for us to check for a partial replication of Experiment 2. A second 2×2 mixed-design ANOVA was run, with direction of inducing movement and group as variables (see Figure 5). In accord with the results of Experiment 2, downward movements showed stronger RM than upward movements (F(1,45) = 54.737, p < .001). T-tests verified that the effect held for both the nonsigners (t(25) = −6.456, p < .001) and the signers (t(20) = −4.133, p = .001).

Figure 5.

Results from Experiment 3, showing RM for displayed upward movements and displayed downward movements, regardless of the sign's correct direction. Error bars represent standard error of the mean.

This difference between upward and downward movement also serves as a useful benchmark for the size of RM difference that this experimental design can detect, which helps in evaluating the null effect found for sign vs. reversed sign. A power analysis showed that power to detect an effect of the magnitude found for downward vs. upward movement was .96. Thus, any effect of sign-based expectation that was missed for lack of power would have to be quite weak compared to the downward effect.
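The power analysis software and procedure are not specified. As a rough, hypothetical illustration of how power depends on effect size and sample size, the sketch below uses the normal approximation for a two-sided within-subject (paired) test; it slightly overstates the power of an exact t-test and ignores the between-group term of the mixed design.

```python
from statistics import NormalDist

def approx_power_two_sided(d, n, alpha=0.05):
    """Normal approximation to the power of a two-sided paired (within-subject) test.

    d: standardized effect (mean difference / SD of the differences), assumed known.
    n: number of participants contributing a difference score.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = d * n ** 0.5
    # Probability of rejecting in either tail when the true effect is d.
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)

# Hypothetical effect sizes for a sample of 47, the combined N of Experiment 3.
for d in (0.2, 0.5, 0.8):
    print(d, round(approx_power_two_sided(d, 47), 2))
```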

In summary, when the effect of filmed direction is eliminated by filming very slow movements, and when the effect of a downward RM bias is controlled by using equal numbers of signs that move up and signs that move down, we find no residual effect of stronger RM in the signward direction for signers.

Experiment 4

An alternative way to control for the downward bias is to use signs that move horizontally. Unfortunately, signs that obligatorily move only left or only right are rare in ASL. (Instead, signs with horizontal movement usually move back and forth, use two hands moving in opposite directions, or are considered correct moving in either direction.) However, there are signs in which the dominant hand moves obligatorily in one direction relative to another body part, such as towards or away from the non-dominant hand or the torso. We use these signs in Experiment 4 to further verify the null effect of expected sign direction. This experiment not only eliminates the need to average over upward and downward signs, but also expands the range of signs tested to a total of 9, boosting our confidence in the finding.

Method

Nonsigners were 17 University of California Santa Cruz undergraduates who received course credit. Signers were 17 deaf individuals who received monetary compensation. All signers used ASL as their primary and preferred language and were exposed to ASL from birth (N=11), before the age of seven years (N=5), or during adolescence (N = 1).

A native signer was filmed producing four signs (MIND-BLANK, LIE, RUDE, SKIP), in the same manner as Experiment 3. (A fifth sign, AGAINST, was pilot-tested, but was eliminated because non-signers showed greater RM in the signward direction, suggesting that performance cues were not successfully eliminated.) In all other respects the method followed that of Experiment 3.

Results and Discussion

Results are shown in Figure 6. A 2×2 mixed-design ANOVA found a main effect of direction, with lower RM for signs than for reversed signs (F(1,32) = 6.46, p = .016), the opposite of the predicted effect. Inspection of the means shows that this pattern held only for the signers. The interaction was significant (F(1,32) = 5.43, p = .026), and t-tests showed that there was a significant effect in the reverse of the predicted direction for the signers (t(16) = −2.93, p = .01) but not for the non-signers (t(16) = −0.19, p = .85).

Figure 6.

Results from Experiment 4, showing RM for horizontal signs and reversed signs. Error bars represent standard error of the mean.

This startling finding not only confirms our conclusion that there is no RM advantage for lexical movements that are expected by experienced signers, but even suggests that there may be a resistance to perceiving RM in such cases. Note that Experiment 3 shows a trend in the same direction (see Figure 4), although it did not reach significance. We consider these findings further in the General Discussion.

Experiment 5

In this experiment, we return to the issue of biomechanical factors. Previous results have shown that biomechanical knowledge affects perception (Daems & Verfaillie, 1999; Jacobs, Pinto & Shiffrar, 2004; Kourtzi & Shiffrar, 1999; Shiffrar & Freyd, 1990, 1993; Stevens, Fonlupt, Shiffrar & Decety, 2000; Verfaillie & Daems, 2002). However, such effects have generally been demonstrated by contrasting biomechanically possible with impossible movements, rather than by examining the subtler effects of biomechanical ease found in Experiment 2; moreover, such effects have never been shown to modulate RM.

The two biomechanical effects reported in Experiment 2 were discovered post hoc. In the next experiment we verify the impact of biomechanical knowledge on RM by demonstrating an awkwardness effect on the strength of RM.

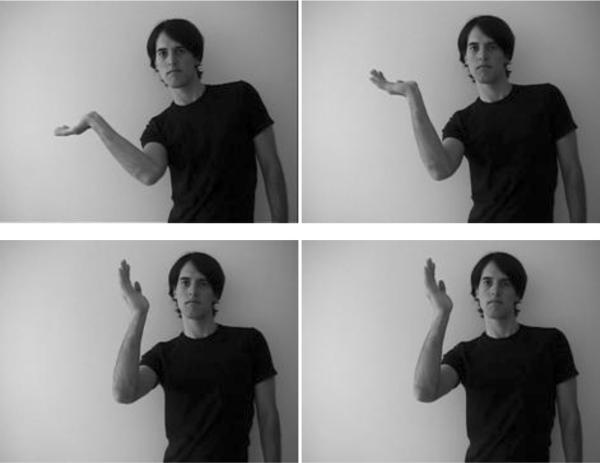

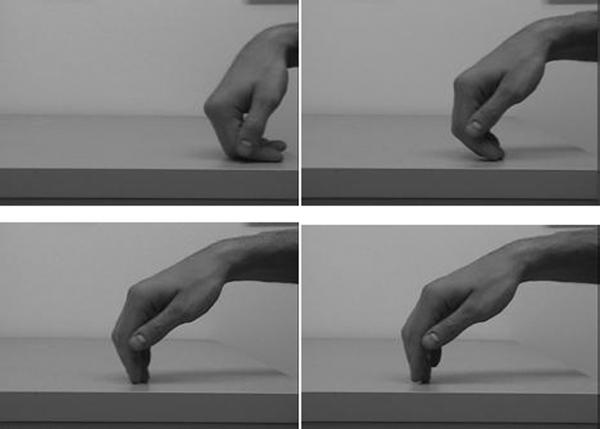

Two movements were constructed which are easy to perform in one direction, but awkward to perform in the reverse direction. One involves rotating the arm out of, or into, an awkward position. The other involves brushing the back of the hand along a surface in such a way that the reversed movement would tend to “jam” or “stub” the fingers against the surface. Stimuli from the two movements are shown in Figures 7 and 8.

Figure 7.

Video frames of the arm-twist movement from Experiment 5 (originals in color). The first three frames are inducing stimuli a, b, and c. The fourth frame is the probe stimulus c+2. This example shows the “easy” direction.

Figure 8.

Video frames of the hand-brush movement from Experiment 5 (originals in color). The first three frames are inducing stimuli a, b, and c. The fourth frame is the probe stimulus c+2. This example shows the “easy” direction.

Method

Subjects were 40 undergraduates from the University of California Santa Cruz who received course credit for participation. An arm-twist and a hand-brush movement were each filmed being performed very slowly, as described in Experiment 3, and inducing and probe stimuli were chosen as in the previous experiments. All trial types were pseudo-randomly mixed. There were 16 practice trials, followed by 200 experimental trials. In all other respects the methods followed those of the previous experiments.

Results and Discussion

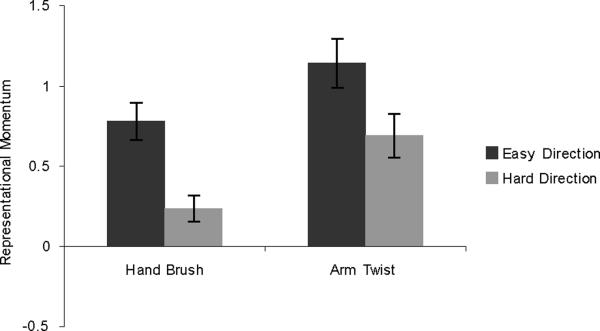

Results are shown in Figure 9. T-tests confirmed that there was greater RM in the easy direction than in the hard direction, both for the arm-twist stimuli (t(39) = 3.834, p < .001) and for the hand-brush stimuli (t(39) = 2.532, p = .015).

Figure 9.

Results from Experiment 5. Error bars represent standard error of the mean.
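The easy-versus-hard comparisons above are paired t-tests on per-participant RM scores. A minimal sketch of the paired t statistic, assuming one weighted-mean score per participant per condition (the scores are hypothetical); obtaining a p-value would additionally require the t distribution's survival function, for example from SciPy, which this stdlib-only sketch omits:

```python
import math
from statistics import mean, stdev

def paired_t(condition_a, condition_b):
    """Paired-samples t statistic and degrees of freedom for per-participant scores."""
    if len(condition_a) != len(condition_b):
        raise ValueError("conditions must have one score per participant")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    n = len(diffs)
    # Mean difference divided by its standard error (sample SD / sqrt(n)).
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

# Hypothetical weighted-mean RM scores for four participants.
easy = [1.0, 2.0, 3.0, 4.0]
hard = [0.5, 1.0, 2.5, 2.0]
t, df = paired_t(easy, hard)
print(f"t({df}) = {t:.3f}")  # t(3) = 2.828
```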

As with downward arm movements, and movements shown in the same direction as filmed, biomechanically easy movements yield stronger RM than biomechanically difficult movements. Thus we have clear evidence that the observer's implicit knowledge of the way the human body moves affects RM. More broadly, we can infer that such biomechanically easy movements yield a more robust perceptual prediction.

General Discussion

These experiments show that RM, an effect of predictive forward projection, is sensitive to biomechanical knowledge, a finding we call the awkwardness effect. The present finding bears on theories of the function of the mirror system, which is involved in producing actions but also in perceiving others' actions (see Rizzolatti & Sinigaglia, 2008, for review), and which has been shown to respond to actions that are predicted but not actually perceived (Umiltà et al., 2001). Although numerous functions for the mirror system have been proposed, one likely function is that the perceptual activation of motor resources feeds back into perception, generating predictions of how the perceived event will most plausibly unfold (Blaesi & Wilson, 2010; Casile & Giese, 2006; Schütz-Bosbach & Prinz, 2007; Wilson & Knoblich, 2005). Thus, according to this argument, the observer's own body representation can act as a forward model for enhancing the perception of others' bodies. A role of biomechanical knowledge in shaping perceptual predictions, such as we found here with the awkwardness effect, is exactly what one would expect based on this account.

In contrast, however, the present findings also show that RM is not enhanced by expectation of the direction of a sign, an expectation derived from years of use of ASL. In fact, the reverse was found: deaf signers actually showed a reduction in RM for lexically specified movement.

This remarkable result is in accord with very recent findings in the brain imaging literature. Emmorey, Xu, Gannon, Goldin-Meadow, and Braun (2010) found that deaf signers did not engage the mirror system (inferior frontal gyrus, ventral premotor and inferior parietal cortices) when passively viewing ASL signs or pantomimes, unlike hearing non-signers. Emmorey et al. argue that a lifetime of sign language experience leads to automatic and efficient sign recognition, which reduces neural firing within the mirror system. The consequence of this may be exactly the finding that we observed here: a reduction in the predictive simulation of the event, so that the movement is perceived more veridically and is not projected forward beyond its actual termination. On this account, biomechanical knowledge is used by perceivers precisely because human body movements are usually underdetermined. Biomechanics can help to constrain the possibilities and ready the perceiver for what is likely coming next. In contrast, when a sign movement is entirely determined (within the normal range of phonetic variation) by its lexical identity, identification and categorization happen rapidly and accurately, without the need to engage predictive brain systems.

The findings of Emmorey et al., and the light they shed on the present results, may also help to explain the contrast between the present findings and earlier results showing enhanced and altered perceptual processing of signs in expert signers. As mentioned earlier, Wilson (2001) found that, in an apparent motion paradigm, signers tended to perceptually “fill in” different paths of motion compared to non-signers. That experiment presented signs in which the dominant hand either slid back and forth along a body surface (such as the palm of the hand), or made an arced path between two points of contact with a body surface. Since only the endpoints of the movements were shown in the apparent motion display, stimuli taken from either group of signs were compatible with either a straight movement or an arced movement. Nevertheless, signers perceived an arced path more frequently than did non-signers, with the arced-path-sign stimuli but not with the straight-path-sign stimuli. In that case, sign knowledge shaped how signers filled in a path between two observed positions, rather than how far they projected a movement forward beyond its observed endpoint. On this account, predictive simulations differ from other filling-in effects, which become stronger with greater expertise (e.g. over-regularization errors in reading).

In conclusion, although perceptual prediction is shaped by implicit knowledge of biomechanics (the awkwardness effect), it is insensitive to expectations based on over-learned movement patterns. We hypothesize that automatic recognition of manual signs obviates the need to engage predictive neurocognitive systems.

Acknowledgments

This research was supported by National Institutes of Health Grant R01 HD13249 to K.E. and San Diego State University. We thank Stephen McCullough for help with stimuli development; Jennie Pyers, Matt Pocci, Lucinda Batch, and Franco Korpics for help recruiting and running Deaf participants; and Christian Aeshliman, Sondra Bitonti, Martyna Citkowicz, Stefan Mangold, and Danielle Odom for help running hearing participants. Finally, we are grateful to all of the Deaf and hearing participants who made this research possible.


References

- Aglioti SM, Cesari P, Romani M, Cosimo U. Action anticipation and motor resonance in elite basketball players. Nature Neuroscience. 2008;11:1109–1116. doi: 10.1038/nn.2182. [DOI] [PubMed] [Google Scholar]

- Baker SA, Idsardi WJ, Golinkoff RM, Petitto L-A. The perception of handshapes in American Sign Language. Memory & Cognition. 2005;33:887–904. doi: 10.3758/bf03193083.

- Bettger J, Emmorey K, McCullough S, Bellugi U. Enhanced facial discrimination: Effects of experience with American Sign Language. Journal of Deaf Studies and Deaf Education. 1997;2:223–233. doi: 10.1093/oxfordjournals.deafed.a014328.

- Blaesi S, Wilson M. The mirror reflects both ways: Action influences perception of others. Brain and Cognition. 2010;72:306–309. doi: 10.1016/j.bandc.2009.10.001.

- Casile A, Giese MA. Critical features for the recognition of biological motion. Journal of Vision. 2005;5:348–360. doi: 10.1167/5.4.6.

- Corina D, Chiu Y-S, Knapp H, Greenwald R, San Jose-Robertson L, Braun A. Neural correlates of human action observation in hearing and deaf subjects. Brain Research. 2007;1152:111–129. doi: 10.1016/j.brainres.2007.03.054.

- Daems A, Verfaillie K. Viewpoint-dependent priming effects in the perception of human actions and body postures. Visual Cognition. 1999;6:665–693.

- Desmurget M, Grafton S. Forward modeling allows feedback control for fast reaching movements. Trends in Cognitive Sciences. 2000;4:423–431. doi: 10.1016/s1364-6613(00)01537-0.

- Emmorey K, McCullough S, Brentari D. Categorical perception in American Sign Language. Language and Cognitive Processes. 2003;18(1):21–45.

- Freyd JJ. Dynamic mental representations. Psychological Review. 1987;94:427–438.

- Freyd JJ. Five hunches about perceptual processes and dynamic representations. In: Meyer DE, Kornblum S, editors. Attention and performance 14: Synergies in experimental psychology, artificial intelligence, and cognitive neuroscience. MIT Press; Cambridge, MA: 1993. pp. 99–119.

- Freyd JJ, Finke RA. Representational momentum. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1984;10:126–132.

- Freyd JJ, Jones KT. Representational momentum for a spiral path. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1994;20:968–976. doi: 10.1037//0278-7393.20.4.968.

- Freyd JJ, Pantzer TM. Static patterns moving in the mind. In: Smith SM, Ward TB, Finke RA, editors. The creative cognition approach. MIT Press; Cambridge, MA: 1995. pp. 181–204.

- Guo K, Robertson RG, Pulgarin M, Nevado A, Panzeri S, Thiele A, Young MP. Spatio-temporal prediction and inference by V1 neurons. European Journal of Neuroscience. 2007;26:1045–1054. doi: 10.1111/j.1460-9568.2007.05712.x.

- Halpern AR, Kelly MH. Memory biases in left versus right implied motion. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1993;19:471–484. doi: 10.1037//0278-7393.19.2.471.

- Hubbard TL. Representational momentum and related displacements in spatial memory: A review of findings. Psychonomic Bulletin & Review. 2005;12:822–851. doi: 10.3758/bf03196775.

- Ito M. Control of mental activities by internal models in the cerebellum. Nature Reviews Neuroscience. 2008;9:304–313. doi: 10.1038/nrn2332.

- Jacobs A, Pinto J, Shiffrar M. Experience, context, and the visual perception of human movement. Journal of Experimental Psychology: Human Perception and Performance. 2004;30:822–835. doi: 10.1037/0096-1523.30.5.822.

- Kawato M. Internal models for motor control and trajectory planning. Current Opinion in Neurobiology. 1999;9:718–727. doi: 10.1016/s0959-4388(99)00028-8.

- Knoblich G, Flach R. Predicting the effects of actions: Interactions of perception and action. Psychological Science. 2001;12:467–472. doi: 10.1111/1467-9280.00387.

- Kourtzi Z, Shiffrar M. Dynamic representations of human body movement. Perception. 1999;28:49–62. doi: 10.1068/p2870.

- McCullough S, Emmorey K. Face processing by deaf ASL signers: Evidence for expertise in distinguishing local features. Journal of Deaf Studies and Deaf Education. 1997;2:212–222. doi: 10.1093/oxfordjournals.deafed.a014327.

- McCullough S, Emmorey K, Sereno M. Neural organization for recognition of grammatical and emotional facial expressions in deaf ASL signers and hearing nonsigners. Cognitive Brain Research. 2005;22:193–203. doi: 10.1016/j.cogbrainres.2004.08.012.

- Mulliken GH, Musallam S, Andersen RA. Forward estimation of movement state in posterior parietal cortex. Proceedings of the National Academy of Sciences. 2008;105:8170–8177. doi: 10.1073/pnas.0802602105.

- Munger MP, Dellinger MC, Lloyd TG, Johnson-Reid K, Tonelli NJ, Wolf K, Scott JM. Representational momentum in scenes: Learning spatial layout. Memory & Cognition. 2006;34:1557–1568. doi: 10.3758/bf03195919.

- Nagai M, Kazai K, Yagi A. Larger forward memory displacement in the direction of gravity. Visual Cognition. 2002;9:28–40.

- Nagai M, Yagi A. The pointedness effect on representational momentum. Memory & Cognition. 2001;29:91–99. doi: 10.3758/bf03195744.

- Nijhawan R. Motion extrapolation in catching. Nature. 1994;370:256–257. doi: 10.1038/370256b0.

- Nijhawan R. Visual prediction: Psychophysics and neurophysiology of compensation for time delays. Behavioral and Brain Sciences. 2008;31:179–198. doi: 10.1017/S0140525X08003804.

- Palmer SE. What makes triangles point: Local and global effects in configurations of ambiguous triangles. Cognitive Psychology. 1980;12:285–305.

- Prinz W. What re-enactment earns us. Cortex. 2006;42:515–517. doi: 10.1016/s0010-9452(08)70389-7.

- Rao H, Han S, Jiang Y, Xue Y, Gu H, Cui Y, Gao D. Engagement of the prefrontal cortex in representational momentum: An fMRI study. NeuroImage. 2004;23:98–103. doi: 10.1016/j.neuroimage.2004.05.016.

- Reed CL, Vinson NG. Conceptual effects on representational momentum. Journal of Experimental Psychology: Human Perception and Performance. 1996;22:839–850. doi: 10.1037//0096-1523.22.4.839.

- Rizzolatti G, Sinigaglia C. Mirrors in the brain: How our minds share actions and emotions. Oxford University Press; New York: 2008.

- Saunier G, Papaxanthis C, Vargas CD, Pozzo T. Experimental Brain Research. 2008;185:399–409. doi: 10.1007/s00221-007-1162-2.

- Schütz-Bosbach S, Prinz W. Perceptual resonance: Action-induced modulation of perception. Trends in Cognitive Sciences. 2007;11:349–355. doi: 10.1016/j.tics.2007.06.005.

- Senior C, Ward J, David AS. Representational momentum and the brain: An investigation into the functional necessity of V5/MT. Visual Cognition. 2002;9:81–92.

- Shiffrar M, Freyd JJ. Apparent motion of the human body. Psychological Science. 1990;1:257–264.

- Shiffrar M, Freyd JJ. Timing and apparent motion path choice with human body photographs. Psychological Science. 1993;4:379–384.

- Shmuelof L, Zohary E. Watching others' actions: Mirror representations in the parietal cortex. Neuroscientist. 2007;13:667–672. doi: 10.1177/1073858407302457.

- Stevens JA, Fonlupt P, Shiffrar M, Decety J. New aspects of motion perception: Selective neural encoding of apparent human movements. NeuroReport. 2000;11:109–115. doi: 10.1097/00001756-200001170-00022.

- Thornton IM, Hayes AE. Anticipating action in complex scenes. Visual Cognition. 2004;11:341–370.

- Umilta MA, Kohler E, Gallese V, Fogassi L, Fadiga L, Keysers C, Rizzolatti G. I know what you are doing: A neurophysiological study. Neuron. 2001;31:155–165. doi: 10.1016/s0896-6273(01)00337-3.

- Verfaillie K, Daems A. Representing and anticipating human actions in vision. Visual Cognition. 2002;9:217–232.

- Vinson NG, Reed CL. Sources of object-specific effects in representational momentum. Visual Cognition. 2002;9:41–65.

- Wilson M. Covert imitation: How the body acts as a prediction device. In: Knoblich G, Thornton IM, Grosjean M, Shiffrar M, editors. Human body perception from the inside out: Advances in visual cognition. Oxford University Press; New York, NY: 2006. pp. 211–228.

- Wilson M, Knoblich G. The case for motor involvement in perceiving conspecifics. Psychological Bulletin. 2005;131:460–473. doi: 10.1037/0033-2909.131.3.460.

- Wilson M. The impact of sign language expertise on visual perception. In: Clark MD, Marschark M, editors. Context, cognition, and deafness. Gallaudet University Press; Washington, DC: 2001. pp. 38–48.

- Wolpert DM, Flanagan JR. Motor prediction. Current Biology. 2001;11:729–732. doi: 10.1016/s0960-9822(01)00432-8.