Abstract

This narrative review provides an overview on the topic of bias as part of Plastic and Reconstructive Surgery's series of articles on evidence-based medicine. Bias can occur in the planning, data collection, analysis, and publication phases of research. Understanding research bias allows readers to critically and independently review the scientific literature and avoid treatments which are suboptimal or potentially harmful. A thorough understanding of bias and how it affects study results is essential for the practice of evidence-based medicine.

The British Medical Journal recently called evidence-based medicine (EBM) one of the fifteen most important milestones since the journal's inception 1. The concept of EBM was created in the early 1980's as clinical practice became more data-driven and literature based 1, 2. EBM is now an essential part of medical school curriculum 3. For plastic surgeons, the ability to practice EBM is limited. Too frequently, published research in plastic surgery demonstrates poor methodologic quality, although a gradual trend toward higher level study designs has been noted over the past ten years 4, 5. In order for EBM to be an effective tool, plastic surgeons must critically interpret study results and must also evaluate the rigor of study design and identify study biases. As the leadership of Plastic and Reconstructive Surgery seeks to provide higher quality science to enhance patient safety and outcomes, a discussion of the topic of bias is essential for the journal's readers. In this paper, we will define bias and identify potential sources of bias which occur during study design, study implementation, and during data analysis and publication. We will also make recommendations on avoiding bias before, during, and after a clinical trial.

I. Definition and scope of bias

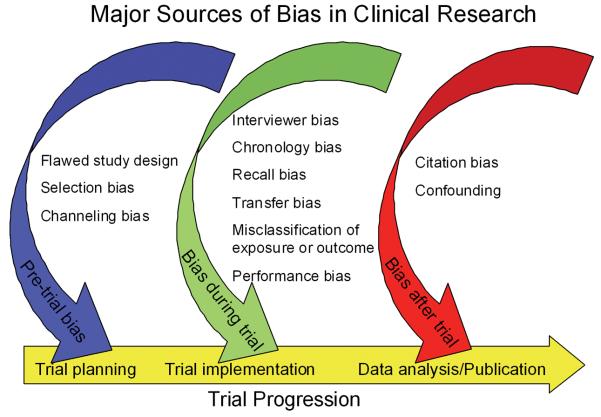

Bias is defined as any tendency which prevents unprejudiced consideration of a question 6. In research, bias occurs when “systematic error [is] introduced into sampling or testing by selecting or encouraging one outcome or answer over others” 7. Bias can occur at any phase of research, including study design or data collection, as well as in the process of data analysis and publication (Figure 1). Bias is not a dichotomous variable. Interpretation of bias cannot be limited to a simple inquisition: is bias present or not? Instead, reviewers of the literature must consider the degree to which bias was prevented by proper study design and implementation. As some degree of bias is nearly always present in a published study, readers must also consider how bias might influence a study's conclusions 8. Table 1 provides a summary of different types of bias, when they occur, and how they might be avoided.

Figure 1.

Major Sources of Bias in Clinical Research

Table 1.

Tips to avoid different types of bias during a trial.

| Type of Bias | How to Avoid |
|---|---|
| Pre-trial bias | |
| Flawed study design | • Clearly define risk and outcome, preferably with objective or validated methods. Standardize and blind data collection. |
| Selection bias | • Select patients using rigorous criteria to avoid confounding results. Patients should originate from the same general population. Well designed, prospective studies help to avoid selection bias as outcome is unknown at time of enrollment. |
| Channeling bias | • Assign patients to study cohorts using rigorous criteria. |
| Bias during trial | |
| Interviewer bias | • Standardize interviewer's interaction with patient. Blind interviewer to exposure status. |
| Chronology bias | • Prospective studies can eliminate chronology bias. Avoid using historic controls (confounding by secular trends). |
| Recall bias | • Use objective data sources whenever possible. When using subjective data sources, corroborate with medical record. Conduct prospective studies because outcome is unknown at time of patient enrollment. |
| Transfer bias | • Carefully design plan for lost-to-followup patients prior to the study. |
| Exposure Misclassification | • Clearly define exposure prior to study. Avoid using proxies of exposure. |
| Outcome Misclassification | • Use objective diagnostic studies or validated measures as primary outcome. |
| Performance bias | • Consider cluster stratification to minimize variability in surgical technique. |
| Bias after trial | |
| Citation bias | • Register trial with an accepted clinical trials registry. Check registries for similar unpublished or in-progress trials prior to publication. |
| Confounding | • Known confounders can be controlled with study design (case control design or randomization) or during data analysis (regression). Unknown confounders can only be controlled with randomization. |

Chance and confounding can be quantified and/or eliminated through proper study design and data analysis. However, only the most rigorously conducted trials can completely exclude bias as an alternate explanation for an association. Unlike random error, which results from sampling variability and which decreases as sample size increases, bias is independent of both sample size and statistical significance. Bias can cause estimates of association to be either larger or smaller than the true association. In extreme cases, bias can cause a perceived association which is directly opposite of the true association. For example, prior to 1998, multiple observational studies demonstrated that hormone replacement therapy (HRT) decreased risk of heart disease among post-menopausal women 8, 9. However, more recent studies, rigorously designed to minimize bias, have found the opposite effect (i.e., an increased risk of heart disease with HRT) 10, 11.
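The distinction between random error and bias can be illustrated with a small simulation. The sketch below is not drawn from any cited study; the true mean, noise level, and size of the systematic error are all hypothetical values chosen for illustration. It suggests that the error attributable to sampling variability shrinks as sample size grows, while a systematic measurement error persists unchanged.

```python
import random
import statistics


def simulate_mean(n, true_mean=10.0, noise_sd=5.0, systematic_error=0.0, seed=0):
    """Return the sample mean of n noisy measurements.

    systematic_error is a hypothetical constant offset added to every
    measurement; it stands in for a biased measurement process.
    """
    rng = random.Random(seed)
    values = [rng.gauss(true_mean, noise_sd) + systematic_error for _ in range(n)]
    return statistics.fmean(values)


TRUE_MEAN = 10.0
for n in (100, 10_000, 200_000):
    unbiased = simulate_mean(n, TRUE_MEAN)
    biased = simulate_mean(n, TRUE_MEAN, systematic_error=2.0)
    print(
        f"n={n:>7}  error without bias={unbiased - TRUE_MEAN:+.3f}  "
        f"error with bias={biased - TRUE_MEAN:+.3f}"
    )
```

In this sketch the first error column approaches zero as n increases, whereas the second column stays near the assumed offset of 2.0 regardless of n.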

II. Pre-trial bias

Sources of pre-trial bias include errors in study design and in patient recruitment. These errors can cause fatal flaws in the data which cannot be compensated for during data analysis. In this section, we will discuss the importance of clearly defining both risk and outcome, the necessity of standardized protocols for data collection, and the concepts of selection and channeling bias.

Bias during study design

Risk and outcome should be clearly defined prior to study implementation. Subjective measures, such as the Baker grade of capsular contracture, can have high inter-rater variability and the arbitrary cutoffs may make distinguishing between groups difficult 12. This can inflate the observed variance seen with statistical analysis, making a statistically significant result less likely. Objective, validated risk stratification models such as those published by Caprini 13 and Davison 14 for venous thromboembolism, or standardized outcomes measures such as the Breast-Q 15 should have lower inter-rater variability and are more appropriate for use. When risk or exposure is retrospectively identified via medical chart review, it is prudent to cross-reference data sources for confirmation. For example, a chart reviewer should confirm a patient-reported history of sacral pressure ulcer closure with physical exam findings and by review of an operative report; this will decrease discrepancies when compared to using a single data source.
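One way readers might quantify inter-rater variability is Cohen's kappa, which compares observed agreement between two raters with the agreement expected by chance. The text above does not prescribe this statistic; it is offered here only as an illustrative sketch, and the Baker grades assigned by the two raters are entirely hypothetical.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters scoring the same subjects."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("both raters must score the same non-empty set of subjects")
    n = len(ratings_a)
    # Observed agreement: proportion of subjects given identical grades.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over grades.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[g] * counts_b[g] for g in counts_a) / (n * n)
    return (observed - expected) / (1.0 - expected)


# Hypothetical Baker grades (1-4) assigned to ten breasts by two examiners.
rater_1 = [1, 2, 2, 3, 3, 4, 1, 2, 3, 4]
rater_2 = [1, 2, 3, 3, 2, 4, 1, 2, 4, 4]
kappa = cohens_kappa(rater_1, rater_2)
print(f"Cohen's kappa = {kappa:.2f}")
```

A kappa near 1 indicates agreement well beyond chance, while values near 0 indicate agreement no better than chance.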

Data collection methods may include questionnaires, structured interviews, physical exam, laboratory or imaging data, or medical chart review. Standardized protocols for data collection, including training of study personnel, can minimize inter-observer variability when multiple individuals are gathering and entering data. Blinding of study personnel to the patient's exposure and outcome status, or if not possible, having different examiners measure the outcome than those who evaluated the exposure, can also decrease bias. Due to the presence of scars, patients and those directly examining them cannot be blinded to whether or not an operation was received. For comparisons of functional or aesthetic outcomes in surgical procedures, an independent examiner can be blinded to the type of surgery performed. For example, a hand surgery study comparing lag screw versus plate and screw fixation of metacarpal fractures could standardize the surgical approach (and thus the surgical scar) and have functional outcomes assessed by a blinded examiner who had not viewed the operative notes or x-rays. Blinded examiners can also review imaging and confirm diagnoses without examining patients 16, 17.

Selection bias

Selection bias may occur during identification of the study population. The ideal study population is clearly defined, accessible, reliable, and at increased risk to develop the outcome of interest. When a study population is identified, selection bias occurs when the criteria used to recruit and enroll patients into separate study cohorts are inherently different. This can be a particular problem with case-control and retrospective cohort studies where exposure and outcome have already occurred at the time individuals are selected for study inclusion 18. Prospective studies (particularly randomized, controlled trials) where the outcome is unknown at time of enrollment are less prone to selection bias.

Channeling bias

Channeling bias occurs when patient prognostic factors or degree of illness dictates the study cohort into which patients are placed. This bias is more likely in non-randomized trials when patient assignment to groups is performed by medical personnel. Channeling bias is commonly seen in pharmaceutical trials comparing old and new drugs to one another 19. In surgical studies, channeling bias can occur if one intervention carries a greater inherent risk 20. For example, hand surgeons managing fractures may be more aggressive with operative intervention in young, healthy individuals with low perioperative risk. Similarly, surgeons might tolerate imperfect reduction in the elderly, a group at higher risk for perioperative complications and with decreased need for perfect hand function. Thus, a selection bias exists for operative intervention in young patients. Now imagine a retrospective study of operative versus non-operative management of hand fractures. In this study, young patients would be channeled into the operative study cohort and the elderly would be channeled into the nonoperative study cohort.
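The hand fracture scenario can be made concrete with a small worked calculation. The sketch below uses hypothetical patient counts and outcome probabilities, and deliberately assumes that surgery has no true effect: the probability of a good outcome depends only on age group. Because young patients are channeled toward surgery, the crude comparison nonetheless appears to favor the operative cohort.

```python
# Hypothetical cohort: (age group, treatment) -> (number of patients, P(good outcome)).
# By construction, surgery has NO true effect: outcome depends on age group only.
cohort = {
    ("young", "operative"): (800, 0.90),
    ("young", "nonoperative"): (200, 0.90),
    ("elderly", "operative"): (200, 0.60),
    ("elderly", "nonoperative"): (800, 0.60),
}


def crude_good_outcome_rate(treatment):
    """Expected proportion of good outcomes in one treatment arm, ignoring age."""
    patients = sum(n for (_, t), (n, _) in cohort.items() if t == treatment)
    good = sum(n * p for (_, t), (n, p) in cohort.items() if t == treatment)
    return good / patients


operative = crude_good_outcome_rate("operative")
nonoperative = crude_good_outcome_rate("nonoperative")
print(f"operative cohort:    {operative:.2f}")
print(f"nonoperative cohort: {nonoperative:.2f}")
print(f"apparent benefit of surgery: {operative - nonoperative:+.2f}")
```

Within each age group the two treatments perform identically in this sketch, so the apparent benefit arises entirely from how patients were channeled into cohorts.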

III. Bias during the clinical trial

Information bias is a blanket classification of error in which bias occurs in the measurement of an exposure or outcome. Thus, the information obtained and recorded from patients in different study groups is unequal in some way 18. Many subtypes of information bias can occur, including interviewer bias, chronology bias, recall bias, patient loss to follow-up, bias from misclassification of patients, and performance bias.

Interviewer bias

Interviewer bias refers to a systematic difference between how information is solicited, recorded, or interpreted 18, 21. Interviewer bias is more likely when disease status is known to the interviewer. An example of this would be a patient with Buerger's disease enrolled in a case-control study which attempts to retrospectively identify risk factors. If the interviewer is aware that the patient has Buerger's disease, he/she may probe for risk factors, such as smoking, more extensively (“Are you sure you've never smoked? Never? Not even once?”) than in control patients. Interviewer bias can be minimized or eliminated if the interviewer is blinded to the outcome of interest or if the outcome of interest has not yet occurred, as in a prospective trial.

Chronology bias

Chronology bias occurs when historic controls are used as a comparison group for patients undergoing an intervention. Secular trends within the medical system could affect how disease is diagnosed, how treatments are administered, or how preferred outcome measures are obtained 20. Each of these differences could act as a source of inequality between the historic controls and intervention groups. For example, many microsurgeons currently use preoperative imaging to guide perforator flap dissection. Imaging has been shown to significantly reduce operative time 40. A retrospective study of flap dissection time might conclude that dissection time decreases as surgeon experience improves. More likely, the use of preoperative imaging caused a notable reduction in dissection time. Thus, chronology bias is present. Chronology bias can be minimized by conducting prospective cohort or randomized controlled trials, or by using historic controls from only the very recent past.

Recall bias

Recall bias refers to the phenomenon in which the outcomes of treatment (good or bad) may color subjects' recollections of events prior to or during the treatment process. One common example is the perceived association between autism and the MMR vaccine. This vaccine is given to children during a prominent period of language and social development. As a result, parents of children with autism are more likely to recall immunization administration during this developmental regression, and a causal relationship may be perceived 22. Recall bias is most likely when exposure and disease status are both known at time of study, and can also be problematic when patient interviews (or subjective assessments) are used as a primary data source. When patient-report data are used, some investigators recommend that the trial design masks the intent of questions in structured interviews or surveys and/or uses only validated scales for data acquisition 23.

Transfer bias

In almost all clinical studies, subjects are lost to follow-up. In these instances, investigators must consider whether these patients are fundamentally different than those retained in the study. Researchers must also consider how to treat patients lost to follow-up in their analysis. Well designed trials usually have protocols in place to attempt telephone or mail contact for patients who miss clinic appointments. Transfer bias can occur when study cohorts have unequal losses to follow-up. This is particularly relevant in surgical trials when study cohorts are expected to require different follow-up regimens. Consider a study evaluating outcomes in inferior pedicle Wise pattern versus vertical scar breast reductions. Because the Wise pattern patients often have fewer contour problems in the immediate postoperative period, they may be less likely to return for long-term follow-up. By contrast, patient concerns over resolving skin redundancies in the vertical reduction group may make these individuals more likely to return for postoperative evaluations by their surgeons. Some authors suggest that patient loss to follow-up can be minimized by offering convenient office hours, personalized patient contact via phone or email, and physician visits to the patient's home 20, 24.

Bias from misclassification of exposure or outcome

Misclassification of exposure can occur if the exposure itself is poorly defined or if proxies of exposure are utilized. For example, this might occur in a study evaluating efficacy of becaplermin (Regranex, Systagenix Wound Management) versus saline dressings for management of diabetic foot ulcers. Significantly different results might be obtained if the becaplermin cohort of patients included those prescribed the medication, rather than patients directly observed to be applying the medication. Similarly, misclassification of outcome can occur if non-objective measures are used. For example, clinical signs and symptoms are notoriously unreliable indicators of venous thromboembolism. Patients are accurately diagnosed by physical exam less than 50% of the time 25. Thus, using Homans' sign (calf pain elicited by extreme dorsiflexion) or pleuritic chest pain as study measures for deep venous thrombosis or pulmonary embolus would be inappropriate. Venous thromboembolism is appropriately diagnosed using objective tests with high sensitivity and specificity, such as duplex ultrasound or spiral CT scan 26-28.
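The consequence of outcome misclassification can be sketched numerically. The example below assumes hypothetical true risks of venous thromboembolism in two study groups and a hypothetical bedside sign with 50% sensitivity and 90% specificity, applied equally to both groups. None of these figures come from the cited studies. Under these assumptions, the observed risk ratio is pulled toward 1, understating the true association.

```python
def observed_risk(true_risk, sensitivity, specificity):
    """Proportion classified as having the outcome when the measure is imperfect."""
    true_positives = sensitivity * true_risk
    false_positives = (1.0 - specificity) * (1.0 - true_risk)
    return true_positives + false_positives


# Hypothetical true risks in the exposed and unexposed groups.
true_risk_exposed, true_risk_unexposed = 0.10, 0.05
# Hypothetical accuracy of a clinical sign, identical in both groups.
sensitivity, specificity = 0.50, 0.90

true_rr = true_risk_exposed / true_risk_unexposed
observed_rr = (
    observed_risk(true_risk_exposed, sensitivity, specificity)
    / observed_risk(true_risk_unexposed, sensitivity, specificity)
)
print(f"true risk ratio:     {true_rr:.2f}")
print(f"observed risk ratio: {observed_rr:.2f}")
```

With a highly sensitive and specific test (values close to 1.0 for both parameters), the observed risk ratio in this sketch approaches the true one.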

Performance bias

In surgical trials, performance bias may complicate efforts to establish a cause-effect relationship between procedures and outcomes. As plastic surgeons, we are all aware that surgery is rarely standardized and that technical variability occurs between surgeons and among a single surgeon's cases. Variations by surgeon commonly occur in surgical plan, flow of operation, and technical maneuvers used to achieve the desired result. The surgeon's experience may have a significant effect on the outcome. To minimize or avoid performance bias, investigators can consider cluster stratification of patients, in which all patients having an operation by one surgeon or at one hospital are placed into the same study group, as opposed to placing individual patients into groups. This will minimize performance variability within groups and decrease performance bias. Cluster stratification of patients may allow surgeons to perform only the surgery with which they are most comfortable or experienced, providing a more valid assessment of the procedures being evaluated. If the operation in question has a steep learning curve, cluster stratification may make generalization of study results to the everyday plastic surgeon difficult.
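Allocation by cluster can be sketched in a few lines. The example below assumes that whole clusters (here, surgeons) are allocated at random to one of two procedures and that every patient inherits the arm of his or her surgeon. The surgeon labels, patient identifiers, arm names, and the use of random allocation are all assumptions made for illustration, not a description of any particular trial.

```python
import random
from collections import Counter


def assign_clusters(surgeons, arms=("procedure A", "procedure B"), seed=0):
    """Randomly allocate whole clusters (surgeons) to arms, alternating after a shuffle."""
    rng = random.Random(seed)
    shuffled = list(surgeons)
    rng.shuffle(shuffled)
    return {surgeon: arms[i % len(arms)] for i, surgeon in enumerate(shuffled)}


# Hypothetical patients, each tagged with the surgeon who will operate.
patients = [
    ("patient-01", "surgeon-1"), ("patient-02", "surgeon-1"),
    ("patient-03", "surgeon-2"), ("patient-04", "surgeon-2"),
    ("patient-05", "surgeon-3"), ("patient-06", "surgeon-3"),
    ("patient-07", "surgeon-4"), ("patient-08", "surgeon-4"),
]

# Sort the surgeon labels so the allocation is reproducible for a given seed.
surgeon_arm = assign_clusters(sorted({surgeon for _, surgeon in patients}))
# Each patient inherits the arm of the operating surgeon.
patient_arm = {patient: surgeon_arm[surgeon] for patient, surgeon in patients}

for patient, surgeon in patients:
    print(patient, surgeon, patient_arm[patient])
```

Because allocation happens at the surgeon level, each surgeon performs only one procedure in this sketch, which is the property the text describes.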

IV. Bias after a trial

Bias after a trial's conclusion can occur during data analysis or publication. In this section, we will discuss citation bias, evaluate the role of confounding in data analysis, and provide a brief discussion of internal and external validity.

Citation bias

Citation bias refers to the fact that researchers and trial sponsors may be unwilling to publish unfavorable results, believing that such findings may negatively reflect on their personal abilities or on the efficacy of their product. Thus, positive results are more likely to be submitted for publication than negative results. Additionally, existing inequalities in the medical literature may sway clinicians' opinions of the expected trial results before or during a trial. In recognition of citation bias, the International Committee of Medical Journal Editors (ICMJE) released a consensus statement in 2004 29 which required all randomized controlled trials to be pre-registered with an approved clinical trials registry. In 2007, a second consensus statement 30 required that all prospective trials not deemed purely observational be registered with a central clinical trials registry prior to patient enrollment. ICMJE member journals will not publish studies which are not registered in advance with one of five accepted registries. Despite these measures, citation bias has not been completely eliminated. While centralized documentation provides medical researchers with information about unpublished trials, investigators may be left to only speculate as to the results of these studies.

Confounding

Confounding occurs when an observed association is due to three factors: the exposure, the outcome of interest, and a third factor which is independently associated with both the outcome of interest and the exposure 18. Examples of confounders include observed associations between coffee drinking and heart attack (confounded by smoking) and the association between income and health status (confounded by access to care). Pre-trial study design is the preferred method to control for confounding. Prior to the study, matching patients for demographics (such as age or gender) and risk factors (such as body mass index or smoking) can create similar cohorts among identified confounders. However, the effect of unmeasured or unknown confounders may only be controlled by true randomization in a study with a large sample size. After a study's conclusion, identified confounders can be controlled by analyzing for an association between exposure and outcome only in cohorts similar for the identified confounding factor. For example, in a study comparing outcomes for various breast reconstruction options, the results might be confounded by the timing of the reconstruction (i.e., immediate versus delayed procedures). In other words, procedure type and timing may both have significant and independent effects on breast reconstruction outcomes. One approach to this confounding would be to compare outcomes by procedure type separately for immediate and delayed reconstruction patients. This maneuver is commonly termed a “stratified” analysis. Stratified analyses are limited if multiple confounders are present or if sample size is small. Multi-variable regression analysis can also be used to control for identified confounders during data analysis. The role of unidentified confounders cannot be controlled using statistical analysis.
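A stratified analysis of the breast reconstruction example might look like the following sketch. The patient counts are hypothetical, and the Mantel-Haenszel pooled odds ratio is used here as one common way to combine strata; the text above does not specify a particular method. In this constructed data set, the crude odds ratio suggests that one procedure carries more complications, yet within each timing stratum the two procedures perform identically.

```python
# Each stratum is a 2x2 table: (a, b, c, d) where
#   a = procedure A with complication,    b = procedure A without complication,
#   c = procedure B with complication,    d = procedure B without complication.
# All counts are hypothetical.
strata = {
    "immediate": (40, 160, 10, 40),
    "delayed": (5, 45, 20, 180),
}


def odds_ratio(a, b, c, d):
    """Odds ratio for a single 2x2 table."""
    return (a * d) / (b * c)


def crude_odds_ratio(tables):
    """Odds ratio after collapsing all strata into one table (ignores the confounder)."""
    a, b, c, d = (sum(column) for column in zip(*tables))
    return odds_ratio(a, b, c, d)


def mantel_haenszel_odds_ratio(tables):
    """Mantel-Haenszel pooled odds ratio across strata."""
    numerator = sum(a * d / (a + b + c + d) for a, b, c, d in tables)
    denominator = sum(b * c / (a + b + c + d) for a, b, c, d in tables)
    return numerator / denominator


crude = crude_odds_ratio(strata.values())
pooled = mantel_haenszel_odds_ratio(strata.values())
for name, table in strata.items():
    print(f"{name:>9} stratum odds ratio: {odds_ratio(*table):.2f}")
print(f"crude odds ratio:           {crude:.2f}")
print(f"Mantel-Haenszel odds ratio: {pooled:.2f}")
```

The gap between the crude and pooled estimates in this sketch reflects confounding by timing: procedure A is performed mostly in the immediate setting, where complications are assumed to be more frequent for both procedures.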

Internal vs. External Validity

Internal validity refers to the reliability or accuracy of the study results. A study's internal validity reflects the author's and reviewer's confidence that study design, implementation, and data analysis have minimized or eliminated bias and that the findings are representative of the true association between exposure and outcome. When evaluating studies, careful review of study methodology for sources of bias discussed above enables the reader to evaluate internal validity. Studies with high internal validity are often explanatory trials, those designed to test efficacy of a specific intervention under idealized conditions in a highly selected population. However, high internal validity often comes at the expense of ability to be generalized. For example, although supra-microsurgery techniques, defined as anastomosis of vessels less than 0.5 mm to 0.8 mm in diameter, have been shown to be technically possible in high volume microsurgery centers 31-33 (high internal validity), it is unlikely that the majority of plastic surgeons could perform this operation with an acceptable rate of flap loss.

External validity of research design deals with the degree to which findings are able to be generalized to other groups or populations. In contrast with explanatory trials, pragmatic trials are designed to assess the benefits of interventions under real clinical conditions. These studies usually include study populations generated using minimal exclusion criteria, making them very similar to the general population. While pragmatic trials have high external validity, loose inclusion criteria may compromise the study's internal validity. When reviewing scientific literature, readers should assess whether the research methods preclude generalization of the study's findings to other patient populations. In making this decision, readers must consider differences between the source population (population from which the study population originated) and the study population (those included in the study). Additionally, it is important to distinguish limited ability to be generalized due to a selective patient population from true bias 8.

When designing trials, achieving balance between internal and external validity is difficult. An ideal trial design would randomize patients and blind those collecting and analyzing data (high internal validity), while keeping exclusion criteria to a minimum, thus making study and source populations closely related and allowing generalization of results (high external validity) 34. For those evaluating the literature, objective models exist to quantify both external and internal validity. Conceptual models to assess a study's ability to be generalized have been developed 35. Additionally, qualitative checklists can be used to assess the external validity of clinical trials. These can be utilized by investigators to improve study design and also by those reading published studies 36.

The importance of internal validity is reflected in the existing concept of “levels of evidence” 5, where more rigorously designed trials produce higher levels of evidence. Such high-level studies can be evaluated using the Jadad scoring system, an established, rigorous means of assessing the methodological quality and internal validity of clinical trials 37. Even so-called “gold-standard” RCTs can be undermined by poor study design. Like all studies, RCTs must be rigorously evaluated. Descriptions of study methods should include details on the randomization process, method(s) of blinding, treatment of incomplete outcome data, and funding source(s), as well as data on statistically insignificant outcomes 38. Authors who provide incomplete trial information can create additional bias after a trial ends, because readers are then unable to evaluate the trial's internal and external validity 20. The CONSORT statement 39 provides a concise 22-point checklist for authors reporting the results of RCTs. Manuscripts that conform to the CONSORT checklist will provide adequate information for readers to understand the study's methodology. As a result, readers can make independent judgments on the trial's internal and external validity.

Conclusion

Bias can occur in the planning, data collection, analysis, and publication phases of research. Understanding research bias allows readers to critically and independently review the scientific literature and avoid treatments which are suboptimal or potentially harmful. A thorough understanding of bias and how it affects study results is essential for the practice of evidence-based medicine.

Acknowledgments

Dr. Pannucci receives salary support from the NIH T32 grant program (T32 GM-08616).

Footnotes

Meeting disclosure:

This work has not been previously presented.

None of the authors has a financial interest in any of the products, devices, or drugs mentioned in this manuscript.


References

- 1. Godlee F. Milestones on the long road to knowledge. BMJ. 2007;334(Suppl 1):s2–3. doi: 10.1136/bmj.39062.570856.94.

- 2. Howes N, Chagla L, Thorpe M, et al. Surgical practice is evidence based. Br. J. Surg. 1997;84:1220–1223.

- 3. Sackett DL, Rosenberg WM, Gray JA, et al. Evidence based medicine: What it is and what it isn't. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.

- 4. Loiselle F, Mahabir RC, Harrop AR. Levels of evidence in plastic surgery research over 20 years. Plast. Reconstr. Surg. 2008;121:207e–11e. doi: 10.1097/01.prs.0000304600.23129.d3.

- 5. Chang EY, Pannucci CJ, Wilkins EG. Quality of clinical studies in aesthetic surgery journals: A 10-year review. Aesthet. Surg. J. 2009;29:144–7; discussion 147–9. doi: 10.1016/j.asj.2008.12.007.

- 6. Dictionary.com; http://dictionary.reference.com/browse/bias.

- 7. Merriam-Webster.com; http://www.merriam-webster.com/dictionary/bias.

- 8. Gerhard T. Bias: Considerations for research practice. Am. J. Health. Syst. Pharm. 2008;65:2159–2168. doi: 10.2146/ajhp070369.

- 9. Stampfer MJ, Colditz GA. Estrogen replacement therapy and coronary heart disease: A quantitative assessment of the epidemiologic evidence. Prev. Med. 1991;20:47–63. doi: 10.1016/0091-7435(91)90006-p.

- 10. Rossouw JE, Anderson GL, Prentice RL, et al. Risks and benefits of estrogen plus progestin in healthy postmenopausal women: Principal results from the Women's Health Initiative randomized controlled trial. JAMA. 2002;288:321–333. doi: 10.1001/jama.288.3.321.

- 11. Hulley S, Grady D, Bush T, et al. Randomized trial of estrogen plus progestin for secondary prevention of coronary heart disease in postmenopausal women. Heart and Estrogen/progestin Replacement Study (HERS) Research Group. JAMA. 1998;280:605–613. doi: 10.1001/jama.280.7.605.

- 12. Burkhardt BR, Eades E. The effect of biocell texturing and povidone-iodine irrigation on capsular contracture around saline-inflatable breast implants. Plast. Reconstr. Surg. 1995;96:1317–1325. doi: 10.1097/00006534-199511000-00013.

- 13. Caprini JA. Thrombosis risk assessment as a guide to quality patient care. Dis. Mon. 2005;51:70–78. doi: 10.1016/j.disamonth.2005.02.003.

- 14. Davison SP, Venturi ML, Attinger CE, et al. Prevention of venous thromboembolism in the plastic surgery patient. Plast. Reconstr. Surg. 2004;114:43E–51E. doi: 10.1097/01.prs.0000131276.48992.ee.

- 15. Pusic AL, Klassen AF, Scott AM, et al. Development of a new patient-reported outcome measure for breast surgery: The BREAST-Q. Plast. Reconstr. Surg. 2009;124:345–353. doi: 10.1097/PRS.0b013e3181aee807.

- 16. Barnett HJ, Taylor DW, Eliasziw M, et al. Benefit of carotid endarterectomy in patients with symptomatic moderate or severe stenosis. North American Symptomatic Carotid Endarterectomy Trial Collaborators. N. Engl. J. Med. 1998;339:1415–1425. doi: 10.1056/NEJM199811123392002.

- 17. Ferguson GG, Eliasziw M, Barr HW, et al. The North American Symptomatic Carotid Endarterectomy Trial: Surgical results in 1415 patients. Stroke. 1999;30:1751–1758. doi: 10.1161/01.str.30.9.1751.

- 18. Hennekens CH, Buring JE. Epidemiology in Medicine. Little, Brown, and Company; Boston: 1987.

- 19. Lobo FS, Wagner S, Gross CR, et al. Addressing the issue of channeling bias in observational studies with propensity scores analysis. Res. Social Adm. Pharm. 2006;2:143–151. doi: 10.1016/j.sapharm.2005.12.001.

- 20. Paradis C. Bias in surgical research. Ann. Surg. 2008;248:180–188. doi: 10.1097/SLA.0b013e318176bf4b.

- 21. Davis RE, Couper MP, Janz NK, et al. Interviewer effects in public health surveys. Health Educ. Res. 2009. doi: 10.1093/her/cyp046.

- 22. Andrews N, Miller E, Taylor B, et al. Recall bias, MMR, and autism. Arch. Dis. Child. 2002;87:493–494. doi: 10.1136/adc.87.6.493.

- 23. McDowell I, Newell C. Measuring Health. Oxford University Press; Oxford: 1996.

- 24. Ultee J, van Neck JW, Jaquet JB, et al. Difficulties in conducting a prospective outcome study. Hand Clin. 2003;19:457–462. doi: 10.1016/s0749-0712(03)00023-4.

- 25. Anderson FA Jr, Wheeler HB, Goldberg RJ, et al. A population-based perspective of the hospital incidence and case-fatality rates of deep vein thrombosis and pulmonary embolism. The Worcester DVT Study. Arch. Intern. Med. 1991;151:933–938.

- 26. Gaitini D. Current approaches and controversial issues in the diagnosis of deep vein thrombosis via duplex doppler ultrasound. J. Clin. Ultrasound. 2006;34:289–297. doi: 10.1002/jcu.20236.

- 27. Winer-Muram HT, Rydberg J, Johnson MS, et al. Suspected acute pulmonary embolism: Evaluation with multi-detector row CT versus digital subtraction pulmonary arteriography. Radiology. 2004;233:806–815. doi: 10.1148/radiol.2333031744.

- 28. Schoepf UJ. Diagnosing pulmonary embolism: Time to rewrite the textbooks. Int. J. Cardiovasc. Imaging. 2005;21:155–163. doi: 10.1007/s10554-004-5345-7.

- 29. DeAngelis CD, Drazen JM, Frizelle FA, et al. Clinical trial registration: A statement from the International Committee of Medical Journal Editors. JAMA. 2004;292:1363–1364. doi: 10.1001/jama.292.11.1363.

- 30. Laine C, Horton R, DeAngelis CD, et al. Clinical trial registration--looking back and moving ahead. N. Engl. J. Med. 2007;356:2734–2736. doi: 10.1056/NEJMe078110.

- 31. Chang CC, Wong CH, Wei FC. Free-style free flap. Injury. 2008;39(Suppl 3):S57–61. doi: 10.1016/j.injury.2008.05.020.

- 32. Wei FC, Mardini S. Free-style free flaps. Plast. Reconstr. Surg. 2004;114:910–916. doi: 10.1097/01.prs.0000133171.65075.81.

- 33. Koshima I, Inagawa K, Urushibara K, et al. Paraumbilical perforator flap without deep inferior epigastric vessels. Plast. Reconstr. Surg. 1998;102:1052–1057. doi: 10.1097/00006534-199809040-00020.

- 34. Godwin M, Ruhland L, Casson I, et al. Pragmatic controlled clinical trials in primary care: The struggle between external and internal validity. BMC Med. Res. Methodol. 2003;3:28. doi: 10.1186/1471-2288-3-28.

- 35. Bonell C, Oakley A, Hargreaves J, et al. Assessment of generalisability in trials of health interventions: Suggested framework and systematic review. BMJ. 2006;333:346–349. doi: 10.1136/bmj.333.7563.346.

- 36. Bornhoft G, Maxion-Bergemann S, Wolf U, et al. Checklist for the qualitative evaluation of clinical studies with particular focus on external validity and model validity. BMC Med. Res. Methodol. 2006;6:56. doi: 10.1186/1471-2288-6-56.

- 37. Jadad AR, Moore RA, Carroll D, et al. Assessing the quality of reports of randomized clinical trials: Is blinding necessary? Control. Clin. Trials. 1996;17:1–12. doi: 10.1016/0197-2456(95)00134-4.

- 38. Gurusamy KS, Gluud C, Nikolova D, et al. Assessment of risk of bias in randomized clinical trials in surgery. Br. J. Surg. 2009;96:342–349. doi: 10.1002/bjs.6558.

- 39. Moher D, Schulz KF, Altman D. The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. JAMA. 2001;285:1987–1991. doi: 10.1001/jama.285.15.1987.

- 40. Masia J, Kosutic D, Clavero JA, et al. Preoperative computed tomographic angiogram for evaluation of deep inferior epigastric artery perforator flap breast reconstruction. J. Reconstr. Microsurg. doi: 10.1055/s-0029-1223854. Epub ahead of print.