Abstract

The maxim “no pain, no gain” summarizes scenarios in which an action leading to reward also entails a cost. Although we know a substantial amount about how the brain represents pain and reward separately, we know little about how they are integrated during goal-directed behavior. Two theoretical models might account for the integration of reward and pain. An additive model specifies that the disutility of costs is summed linearly with the utility of benefits, whereas an interactive model suggests that cost and benefit utilities interact so that the sensitivity to benefits is attenuated as costs become increasingly aversive. Using a novel task that required integration of physical pain and monetary reward, we examined the mechanism underlying cost–benefit integration in humans. We provide evidence in support of an interactive model in behavioral choice. Using functional neuroimaging, we identify a neural signature for this interaction such that, when the consequences of actions embody a mixture of reward and pain, there is an attenuation of a predictive reward signal in both ventral anterior cingulate cortex and ventral striatum. We conclude that these regions subserve integration of action costs and benefits in humans, a finding that suggests a cross-species similarity in neural substrates that implement this function and illuminates mechanisms that underlie altered decision making under aversive conditions.

Introduction

Goal-directed behavior engenders conflict when we trade the prospect of an appetitive gain against an equal prospect of an aversive cost. For example, mountain climbers will report that the agony of climbing a mountain is endured to sample the ecstasy of the mountain top. Despite a wealth of data regarding the separate representation of reward (Schultz, 2006; Montague and King-Casas, 2007) and punishment (Seymour et al., 2007), how they are integrated during goal-directed behavior is relatively unexplored (Phillips et al., 2007; Walton et al., 2007). Here, we designed a study in which participants were offered choices that incorporated simultaneous rewarding and punishing consequences, namely monetary gain and physical pain, and in which action selection required an online integration of the prospects of pain and reward.

It is well established that value is highly context dependent (Seymour and McClure, 2008). Here, we addressed a particular form of value integration associated with two fundamentally different sorts of outcomes, a primary visceral cost (pain) and an abstract rewarding benefit (monetary reward). We investigated this by comparing how well an additive and an interactive model account for the integration of pain and reward. According to an influential additive model, attitudes toward mixed outcomes are conceptualized as the net difference between the positive and negative affect that they arouse (Green and Goldfried, 1965). Likewise, the overall utility of a choice is computed as the difference between its benefits and its costs (Prelec and Loewenstein, 1998). Findings that positive and negative affect co-occur in emotionally charged situations (Miller, 1959; Berridge and Grill, 1984; Larsen et al., 2001, 2004; Schimmack, 2001) point to separate brain representations of positive and negative value (Cacioppo and Berntson, 1994). Their integration in guiding choice may therefore be interactive, so that the sensitivity, or slope, of choice behavior as a function of reward changes with the level of pain. It is this pain-induced change in sensitivity to reward that we sought to characterize in terms of behavior and neurophysiological substrate. Our experimental design enabled us to test the prediction that the values associated with painful costs and monetary benefits in a choice situation would interact, such that pain would attenuate the neural representation of reward.

We predicted that cost–benefit integration would engage brain systems implicated in value learning, such as dopaminergic target structures. These include the ventral striatum, implicated in reinforcement learning in both appetitive (Berns et al., 2001; O'Doherty et al., 2003, 2004; Tobler et al., 2007) and aversive (Jensen et al., 2003; Iordanova et al., 2006; Hoebel et al., 2007) processing; medial prefrontal cortical regions, including the orbitofrontal cortex (OFC), implicated in representing both positive and negative outcomes (O'Doherty, 2007); and the anterior cingulate cortex (ACC), implicated in optimal decision making and in appetitive and aversive choice (Walton et al., 2007). Indeed, previous work in rats has shown that the nucleus accumbens (NA) and the ACC, which are interconnected (Brog et al., 1993), are pivotal when animals integrate costs and benefits (Caine and Koob, 1994; Aberman and Salamone, 1999; Salamone and Correa, 2002; Walton et al., 2003; Schweimer et al., 2005).

Materials and Methods

Participants

Twenty-one adult participants took part in the experiment. All participants received information describing the study before arrival. On arrival, participants were screened for neurological and psychiatric history and magnetic resonance imaging (MRI) compatibility and signed a consent form. One participant was excluded from the analysis because he chose the DS+pain fewer than five times, which prevented us from analyzing his MRI data, and two others were excluded because their choice behavior identified them as statistical outliers (more than three times the interquartile range above the upper quartile or below the lower quartile). The remaining 18 participants (12 females) had a mean ± SD age of 24.62 ± 4.44 years. The study was approved by the University College London Research Ethics Committee.
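The outlier rule above (more than three interquartile ranges beyond the quartiles) can be sketched as follows. This is a minimal illustration, assuming a single per-participant choice summary score; the text does not specify which summary was used, and the quartile interpolation (NumPy's default linear method) is also an assumption. The function name `extreme_outliers` is hypothetical.

```python
import numpy as np

def extreme_outliers(scores, k=3.0):
    """Return a boolean mask marking values more than k x IQR beyond the quartiles.

    k = 3 follows the criterion in the text; the quartile method is an assumption."""
    scores = np.asarray(scores, dtype=float)
    q1, q3 = np.percentile(scores, [25, 75])  # NumPy default: linear interpolation
    iqr = q3 - q1
    return (scores > q3 + k * iqr) | (scores < q1 - k * iqr)

# Hypothetical per-participant summary scores (not data from this study)
example = [1, 2, 3, 4, 5, 6, 7, 8, 100]
print(extreme_outliers(example))  # only the last value is flagged
```

With these numbers, the quartiles are 3 and 7, so the fences lie at −9 and 19 and only the value 100 exceeds them.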

Materials

Nine luminance-matched color pictures (114 × 154 pixels) of neutral male faces from The Karolinska Directed Emotional Faces (Lundqvist et al., 1998) were used as discriminative stimuli (DSs). Of these, four faces were chosen for each participant on the basis of individual likeability ratings (see Procedure). Two faces were used as the “pain pair” and two as the “touch pair.”

Apparatus

Electric skin stimulation.

Two Digitimer boxes (model DS7) were placed inside a radiofrequency (RF)-shielded box. Power was supplied by a battery and a mains inverter, which were also placed inside the box. The mains inverter was encased in another RF-shielded box, with RF filters on the battery input leads and mains output leads. Input to the Digitimer boxes was controlled by a computer in the scanner control room. To trigger each Digitimer box, the experimental computer program delivered a signal to a single data pin of a parallel port. The transistor–transistor logic (TTL) signal from the parallel port was converted to an optical signal and carried by fiber-optic cable from the scanner control room, through a wave guide, to the scanner room, and through another wave guide into the RF-shielded box. There, the signal was converted back to TTL and connected to the trigger input of the Digitimer box. The Digitimer box output was fed through notch filters in the RF-shielded box, tuned to the scanner center frequency and designed so that a component failure could not cause a short circuit to ground. Wires, with safety resistors to prevent current being picked up from the scanner RF coil, were connected to the filters and wound around ferrites for additional filtering. The wires were attached to in-house-built circular electrodes with a radius of 6 mm.

Skin conductance recording.

Skin conductance was recorded on the fourth and fifth digits of the nondominant hand using 2 × 3 cm dry stainless steel electrodes and an AT64 Autogenic Systems device. The output of the coupler was converted into an optical pulse frequency. This pulse signal was transmitted using fiber optics, digitally converted outside the scanner room (Micro1401; Cambridge Electronic Design), and recorded (Spike2; Cambridge Electronic Design).

Procedure

DS selection.

Participants first rated nine pictures of male faces on a 9-point “first impression likability” Likert scale. The faces used as DSs comprised those each participant rated as medium in likeability (their third, fourth, fifth, and sixth most likeable faces), randomly allocated to the four conditions crossing probability (high, low) and stimulation type (pain, touch). At the end of the experiment, participants rated the nine pictures again on the same scale and then were presented with all face pairs for a forced-choice likability task.
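The DS selection rule can be sketched as below: rank the nine faces by rating, keep the third to sixth most likeable, and allocate them at random to the four probability × stimulation conditions. This is an illustrative sketch only; the tie-breaking rule (alphabetical by face identifier) is an assumption, since the text does not say how tied ratings were handled, and `assign_faces` and `CONDITIONS` are hypothetical names.

```python
import random

# The four conditions: outcome probability x stimulation type
CONDITIONS = [("high", "pain"), ("low", "pain"), ("high", "touch"), ("low", "touch")]

def assign_faces(ratings, seed=None):
    """Map each condition to one of the 3rd-6th most likeable faces, at random.

    ratings: dict of face_id -> 1-9 likeability rating. Ties are broken by
    face_id, which is an assumption; the text does not describe tie handling."""
    ranked = sorted(ratings, key=lambda face: (-ratings[face], face))
    medium = ranked[2:6]                 # 3rd, 4th, 5th and 6th most likeable
    random.Random(seed).shuffle(medium)  # random allocation to conditions
    return dict(zip(CONDITIONS, medium))

# Hypothetical ratings for the nine faces (not data from this study)
example = {"A": 7, "B": 3, "C": 5, "D": 9, "E": 6, "F": 2, "G": 4, "H": 8, "I": 5}
print(assign_faces(example, seed=0))
```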

Pain scaling.

Two electrodes, each connected to a Digitimer box, were attached to the back of each participant's left hand. Low (5 μV) stimulation was applied to the first electrode and increased or decreased until participants reported a just-discernible sensation that was not uncomfortable. They were told that this sensation would be called “touch” and corresponded to 1 on a 1–100 sensation scale, with 100 representing the worst possible pain. Participants were then told that the level of stimulation on the second electrode would be increased gradually and were reminded that they were free to withdraw from the study at any point. They were asked to rate their sensation every time a stimulation was administered and to notify the experimenter when they started feeling pain. Once participants reported pain, stimulation was increased further to the level they considered “the strongest pain that they could tolerate without distress.” Participants were told that this level of stimulation (which was at least 50 μV higher than the touch stimulation in all participants) would be called “pain.” The touch stimulation was then applied to the first electrode again to verify that it was still felt; if it was not, because of habituation, the level of stimulation was increased until it again corresponded to 1 on the scale.

Paradigm overview.

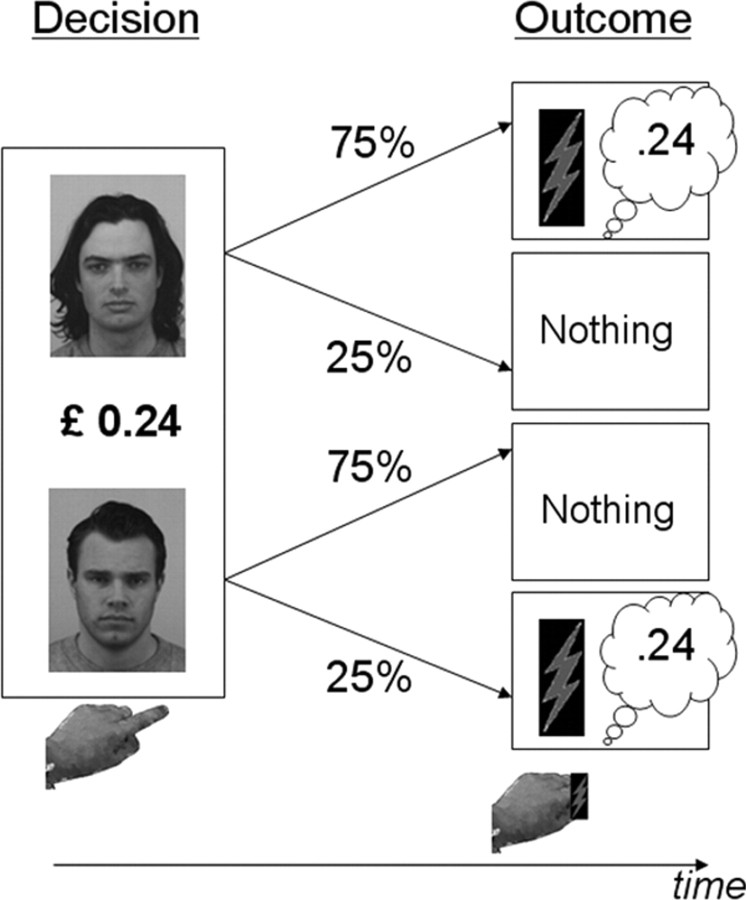

On pain trials, participants chose between two faces, DS+pain and DS−pain, associated with a high or a low probability, respectively, of a painful stimulation to the hand (Fig. 1). On touch trials, two control faces, DS+touch and DS−touch, were associated with a high or low probability of a mild tactile stimulation. The outcome probability and stimulation type associated with each face were learned in a pre-experimental conditioning session and were therefore known in advance. In the experimental sessions, the amount of money that participants could gain or lose was announced visually at the onset of each trial, but participants knew that it would be added to or subtracted from their balance only if a stimulation was actually delivered. Thus, on any trial in which participants received a stimulation, be it pain or touch, they also gained or lost money, so every choice carried both a monetary and a stimulation (pain or touch) outcome. The offered monetary amounts varied randomly around a mean of zero and were independent of the type of stimulation. Note that these outcome components could be congruent (pain and monetary loss) or incongruent (pain and monetary gain).

Figure 1.

Paradigm. In each trial, two faces and a monetary amount were displayed. One face (DS+) was associated with a 75% probability of an outcome (and a 25% probability of nothing), and the other (DS−) with a 25% probability of an outcome (and a 75% probability of nothing). Outcomes always included both the displayed monetary amount and a skin stimulation (touch or pain). Participants were free to choose either face. To maximize gain and minimize pain, participants should choose the DS− when the monetary amount was zero or negative. Critically, a positive monetary amount introduced a conflict between the goals of gain maximization and pain minimization. Choice consequences were revealed at the offset of the decision screen, with outcome delivery determined by the probability associated with the chosen face.

Experimental sessions.

We scanned participants using functional MRI (fMRI) over four experimental sessions, each lasting ∼8 min. In each session, participants completed 48 pain trials and 48 touch trials. In one-third of the trials of each type, gain was parametrically varied; in one-third, loss was parametrically varied; and the remaining trials had a zero amount. The order of pain and touch trials was pseudorandomized, with the constraint that no more than four trials of the same type could appear consecutively, and was fixed for all sessions. The allocation of monetary values to trials varied between sessions. Each trial began with a fixation cross displayed for 180 ms, followed by either the pain (DS+pain and DS−pain) or the touch (DS+touch and DS−touch) face pair (decision time point). The two faces were presented in random positions 90 pixels to the left and right of the fixation cross. The DS+ faces were associated with a 75% conditioned outcome probability and the DS− faces with a 25% conditioned outcome probability. The pairing of face identity with the probability and type of stimulation remained constant throughout the experiment. A monetary amount, displayed centrally, varied randomly around a mean of zero, ranging from 10 to 55 pence (sessions 1 and 3) or 12 to 57 pence (sessions 2 and 4) in steps of 3 pence in either the gain or the loss domain. Participants had 2 s to choose the right or left face using an MRI-compatible response box, and, once they responded, the non-chosen face disappeared. Two seconds after the decision time point, participants learned whether they would receive an outcome on this trial (outcome time point). If an outcome was not delivered, the screen turned black and the intertrial interval commenced, lasting for a jittered duration of 990–3060 ms (mean of 2025 ms).
If an outcome was delivered, this was always signaled by the fixation cross changing to an asterisk, which blinked at an increasing rate for 1581 ms, followed by a visual warning signal (a pictogram of a flash) displayed for 450 ms, followed by the stimulation (pain or touch). As described above, all outcomes comprised a combination of a monetary amount and stimulation, in accordance with the information available to participants at the decision time point. Outcomes were delivered strictly according to the outcome probability associated with the chosen face. After stimulation delivery, the intertrial interval commenced. If participants failed to respond within the 2 s decision window (this happened in 1.9% of trials), the same sequence occurred, but both faces disappeared from the screen and the outcome was delivered with a 50% probability.
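The session structure described above can be sketched in code. Note that each magnitude range (10 to 55 or 12 to 57 pence in 3 pence steps) contains exactly 16 values, one-third of 48 trials, so one reading is that each magnitude appears once as a gain and once as a loss per trial type; that reading, the use of rejection sampling to satisfy the run-length constraint, and all function names here are assumptions for illustration, not the authors' randomization code.

```python
import random

def max_run_length(seq):
    """Length of the longest run of identical consecutive items."""
    longest = current = 1
    for prev, nxt in zip(seq, seq[1:]):
        current = current + 1 if nxt == prev else 1
        longest = max(longest, current)
    return longest

def make_trial_order(n_per_type=48, max_run=4, seed=None, max_tries=100_000):
    """Shuffle pain/touch labels until no type repeats more than max_run times in a row."""
    rng = random.Random(seed)
    order = ["pain"] * n_per_type + ["touch"] * n_per_type
    for _ in range(max_tries):
        rng.shuffle(order)
        if max_run_length(order) <= max_run:
            return order
    raise RuntimeError("no order satisfying the run-length constraint was found")

def assign_offers(order, magnitudes, seed=None):
    """Give each trial type equal numbers of gain, loss and zero offers (in pence)."""
    rng = random.Random(seed)
    offers = {}
    for kind in ("pain", "touch"):
        amounts = list(magnitudes) + [-m for m in magnitudes] + [0] * len(magnitudes)
        if len(amounts) != order.count(kind):
            raise ValueError("offers do not match the number of trials of this type")
        rng.shuffle(amounts)
        offers[kind] = iter(amounts)
    return [(kind, next(offers[kind])) for kind in order]

ODD_SESSIONS = range(10, 56, 3)   # 10, 13, ..., 55 pence: 16 magnitudes
EVEN_SESSIONS = range(12, 58, 3)  # 12, 15, ..., 57 pence: 16 magnitudes

order = make_trial_order(seed=1)  # one order, reused across sessions
session1 = assign_offers(order, ODD_SESSIONS, seed=101)
session2 = assign_offers(order, EVEN_SESSIONS, seed=102)
print(session1[:6])
```

Rejection sampling is the simplest way to enforce a run-length cap exactly; for 96 trials a valid order typically turns up within a few dozen shuffles.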

Instructions to participants.

For ease of learning, the task was described in the form of a game, but participants knew they were not playing online and that their “opponents” were not real people (see instructions to participants, available at www.jneurosci.org as supplemental material). This may have been important for preventing participants from using social strategies. All participants were initially endowed with £20 and told that their goal was to maximize earnings and minimize physical pain. They were asked to imagine that they were interacting with four other players. Participants were instructed that each round would begin with the display of the faces of two players. Participants “moved” by selecting one of the two players with whom to interact. The chosen player then either “moved” or “passed.” Participants were told that the chosen player's move always had two components: changing the amount in the participant's account and delivering stimulation to their hand. Participants were also told that the four players were paired such that each player always appeared with the same partner and that one pair could deliver touch and the other pain. They were also told that each player would move with a frequency that was fixed for that player but varied between players. Participants were instructed to move in every round, responding as fast as they could once they had made their decision, and were informed that “their reaction time will be used to gauge their attention to the task.” Participants were told that, if they failed to respond within 2 s, the computer would play their turn and choose a player at random; thus, in this situation, they would have no control over the outcome. The experimenter then tested participants' knowledge of the rules and emphasized that participants were free to play the game any way they liked. The pre-experimental conditioning session was introduced as a practice session.

Pre-experimental conditioning session.

After the DS selection and pain scaling and before the experimental sessions, participants took part in a conditioning session. This session was identical to the experimental sessions except that the monetary outcome was fixed at zero, allowing participants to learn how to avoid pain and to acquire an association between the faces and the likely stimulation outcome. There were 88 trials in this session, half with the pain pair and half with the touch pair. Participants were told that the most efficient learning strategy would be to choose the player who was “least likely to move.” If a participant failed to choose DS−pain and DS−touch on at least 75% of trials, a second conditioning session was administered and data from the first session were discarded.

Analysis

Reinforcement learning model of behavior.

We assessed how pain and reward are integrated using a reinforcement learning model (Sutton and Barto, 1998), a variant of temporal difference models that have provided a compelling account of a wealth of psychological, electrophysiological, and neuroimaging data (Schultz et al., 1997; Dayan and Balleine, 2002; O'Doherty et al., 2003; Montague et al., 2004; Seymour et al., 2004; Schultz, 2006), but in this instance applied to a situation in which all the probabilities are stable and well learned. According to this model (see details in supplemental Methods, available at www.jneurosci.org as supplemental material), each of the two faces in a trial (DS+ and DS−) is associated with an expected value, referred to as Q value, computed as the product of the outcome probability and the utility of the outcome. Because here each face is associated with two outcomes, reward (money) and stimulation (either pain or touch), we computed two Q values for each face, a money Q value and a pain Q value. We assumed that, because we used small monetary amounts, the utility of money would be proportional to the numerical monetary offer (Rabin and Thaler, 2001). Furthermore, we assumed that, after a pre-experimental conditioning training session, participants have learned the probabilities of getting an outcome for each DS, as well as the value, or “disutility,” of the pain, which they knew did not vary across trials [although we separately considered a “noisy pain” model, in which the value of pain is stochastic (supplemental Methods and Discussion, available at www.jneurosci.org as supplemental material)]. We determined the disutility of pain for each participant through model fitting. In addition to the pain and the money Q values, each face is also associated with a third Q value, which represents their interaction as the product of the presence of pain and the monetary offer. 
To characterize each choice, we subtracted each of the three Q values associated with the DS− from the corresponding Q value associated with the DS+, yielding the three key quantities associated with choice, called difference Q values. We used logistic regression to predict participants' choices from a weighted sum of the difference Q values, so that the relative contributions of the main effect of pain and of its interaction with money were estimated directly by the regression.
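A minimal sketch of this model comparison is given below, on simulated choices. Assumptions, none of which come from the text: money utility equals the offer in pence; the pain column is the pain indicator, so its fitted weight absorbs the participant's pain disutility; touch has zero disutility; the regression includes an intercept; and the fit uses Newton's method. The additive model uses the money and pain difference Q values, the interactive model adds their product, and the models are compared by the Bayesian information criterion (BIC) as in Fig. 2c. All names and generative weights are hypothetical.

```python
import numpy as np

DELTA_P = 0.75 - 0.25  # outcome probability of DS+ minus that of DS-

def difference_q_values(offer, is_pain):
    """Money, pain, and pain x money difference Q values (DS+ minus DS-), one row per trial.

    Sketch assumptions: money utility equals the offer (pence); the pain column is the
    pain indicator, so its fitted weight absorbs the pain disutility; touch costs nothing."""
    offer = np.asarray(offer, dtype=float)
    is_pain = np.asarray(is_pain, dtype=float)
    return np.column_stack([DELTA_P * offer,
                            DELTA_P * is_pain,
                            DELTA_P * is_pain * offer])

def fit_logistic(X, y, n_iter=100, tol=1e-10):
    """Maximum-likelihood logistic regression with an intercept, fitted by Newton's method."""
    X = np.column_stack([np.ones(len(y)), X])
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ w))
        grad = X.T @ (y - p)                          # gradient of the log-likelihood
        hess = X.T @ (X * (p * (1.0 - p))[:, None])   # negative Hessian
        step = np.linalg.solve(hess, grad)
        w += step
        if np.max(np.abs(step)) < tol:
            break
    p = np.clip(1.0 / (1.0 + np.exp(-X @ w)), 1e-12, 1 - 1e-12)
    log_lik = float(np.sum(y * np.log(p) + (1 - y) * np.log(1 - p)))
    return w, log_lik

def bic(log_lik, n_params, n_obs):
    """Bayesian information criterion; lower values indicate the preferred model."""
    return n_params * np.log(n_obs) - 2.0 * log_lik

# Hypothetical simulated participant (not data from this study)
rng = np.random.default_rng(0)
n = 384                                        # 4 sessions x 96 trials
is_pain = np.tile([1, 0], n // 2)
offer = rng.integers(-57, 58, size=n)          # offers in pence
true_w = np.array([-0.3, 0.12, -1.0, -0.06])   # intercept, money, pain, pain x money
logits = np.column_stack([np.ones(n), difference_q_values(offer, is_pain)]) @ true_w
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-logits))).astype(float)

dq = difference_q_values(offer, is_pain)
w_add, ll_add = fit_logistic(dq[:, :2], y)     # additive: money + pain
w_int, ll_int = fit_logistic(dq, y)            # interactive: adds pain x money
print("BIC additive:", bic(ll_add, 3, n), "BIC interactive:", bic(ll_int, 4, n))
```

In this parameterization, a negative pain × money weight corresponds to the shallower choice slope under pain, and a pain weight alone shifts the sigmoid without changing its slope.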

Image acquisition.

We used a 1.5T Siemens SONATA system to acquire both T1-weighted anatomical images and T2*-weighted transverse echoplanar images (64 × 64 matrix; 3 × 3 mm pixels; echo time, 90 ms) with BOLD contrast. The echoplanar imaging (EPI) sequence was optimized to maximize signal in inferior brain regions (Weiskopf et al., 2006). Each EPI volume comprised 48 axial slices, each 2 mm thick and acquired every 3 mm (1 mm gap), positioned to cover the whole brain, with an effective repetition time of 4.32 s per volume. The first five volumes were discarded to allow for T1 equilibration effects. Pulse and breathing signals were recorded to correct for cardiac and respiratory effects.

Imaging analysis.

The data were analyzed using Statistical Parametric Mapping (SPM8; Wellcome Department of Cognitive Neurology, University College London, London, UK; http://www.fil.ion.ucl.ac.uk/spm). All volumes were realigned to the first volume to correct for interscan movement and unwarped to remove unwanted movement-related variance without removing variance attributable to the task (Andersson et al., 2001). The mean of the motion-corrected images was then coregistered to the individual's structural MRI using a 12-parameter affine transformation. This image was then spatially normalized to standard MNI space (Montreal Neurological Institute reference brain in Talairach space, Talairach and Tournoux, 1998) using the “unified segmentation” algorithm available within SPM (Ashburner and Friston, 2005), and the resulting deformation field was applied to the functional imaging data. A mask of individual gray matter was also generated at this point and was used during the final estimation step. All normalized images were then smoothed with an isotropic 8 mm full-width half-maximum Gaussian kernel to account for differences between participants and to allow valid statistical inference according to Gaussian random field theory (Friston et al., 1995a,b). The time series in each voxel were high-pass filtered at Hz to remove low-frequency confounds and were scaled to a grand mean of 100 over voxels and scans within each session.

Single-subject analysis.

We used a model-based analysis of the fMRI data with onset regressors at three trial time points: decision (when the cue is presented), outcome revelation (when a future outcome becomes certain, at cue offset), and outcome delivery. We modeled the decision time point using the stimulation and reward Q values of the chosen face, and the outcome revelation time point using stimulation and reward outcome prediction errors (PEs), defined as the differences between predicted Q values and revealed outcomes. Note that we use the term PE to denote both Q values and outcome PEs, because both represent signed changes from initial predictions, assuming that the prediction at trial onset is zero and that the prediction after choice is equivalent to the Q value of the chosen option. To clarify, if a participant chooses the DS+, the Q value of that choice would be high relative to the initial prediction of zero and larger than the Q value of a DS− choice. After choice, the best prediction is equivalent to the Q value. For example, on a trial offering 40 pence, outcome receipt after a DS+ choice would generate a positive PE, because receiving 40 pence is more rewarding than a 75% chance to obtain 40 pence, which has a Q value of 30 pence. Outcome omission would similarly generate a negative PE. When an outcome is actually delivered, the outcome PE is therefore lower after a DS+ choice than after a DS− choice. Note that, although decision and revelation were temporally close, their parametric modulators were statistically dissociated through the experimental design, which ensured stochasticity in outcome. Had participants always received an outcome, Q values and outcome PEs would be perfectly negatively correlated; instead, the outcome PEs depended on both the actual outcomes and the Q value.
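The worked example above (a 40 pence offer) can be reproduced with a few lines. This sketch covers a single outcome dimension (money); a stimulation PE would be computed the same way from the utility of the stimulation. The function names are hypothetical.

```python
def q_value(p_outcome, amount):
    """Expected value of the chosen face: outcome probability x outcome magnitude."""
    return p_outcome * amount

def decision_pe(p_outcome, amount):
    """Decision-time 'PE': change from a prediction of zero at trial onset to the chosen Q value."""
    return q_value(p_outcome, amount) - 0.0

def outcome_pe(p_outcome, amount, delivered):
    """Outcome-revelation PE: revealed outcome minus the Q value of the chosen face."""
    revealed = amount if delivered else 0.0
    return revealed - q_value(p_outcome, amount)

# A 40 pence offer, after choosing DS+ (p = 0.75) or DS- (p = 0.25)
for label, p in (("DS+", 0.75), ("DS-", 0.25)):
    print(label, decision_pe(p, 40), outcome_pe(p, 40, True), outcome_pe(p, 40, False))
# DS+ 30.0 10.0 -30.0
# DS- 10.0 30.0 -10.0
```

As the text notes, receipt yields a smaller positive PE after a DS+ choice (10 pence) than after a DS− choice (30 pence).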

Another potential signal of interest is the experienced utility, or response to actual obtained reward. For completeness, we examined an alternative version of the fMRI model that included experienced utility as a parametric modulator at the outcome delivery time point. To avoid collinearity, this alternative model did not include modulation of the outcome revelation time point by outcome PEs.

We analyzed pain and touch trials separately, resulting in eight regressors in total, corresponding to the cells of a 2 (trial type: pain and touch) × 2 (time point: decision and outcome revelation) × 2 (outcome: stimulation and reward) design. These stimulus functions were convolved with the canonical hemodynamic response function and entered as regressors in within-subject linear convolution models in SPM in the usual way. This provided contrast maps of sensitivity to stimulation and reward value.

Because of the free-choice nature of our paradigm, larger stimulation PEs (PEs for pain and touch) were associated with larger reward PEs. This stemmed from the natural preference of our participants to choose the DS+ more frequently when the monetary offers were high. To prevent ambiguity in the interpretation of brain signals expressing reward PEs, the crucial signal for the winning interactive model of behavior, we first partialed out from this signal any effects of stimulation PEs. For both decision and outcome revelation time points, we entered stimulation regressors before the reward regressors, so that the default serial orthogonalization of parametric modulators assigned variance shared between these regressors to the stimulation regressors. This means that any activation associated with the reward PE was not contaminated by stimulation PE. However, this step of the fMRI model design rendered ambiguous the interpretation of activation associated with the stimulation PEs, so we could not analyze stimulation PEs, which carry the signal crucial for the additive model. Therefore, our fMRI analysis speaks solely to the more successful interactive model. Physiological activity (10 regressors coding cardiac phase, six regressors coding respiratory phase, and one regressor coding change in respiratory volume per unit time; calculated using the Physio toolbox in SPM) was modeled as a covariate of no interest.
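The effect of entering stimulation PEs before reward PEs can be illustrated with a small Gram–Schmidt sketch: each modulator is mean-centred and stripped of its projection onto every earlier modulator, so variance shared between the two is credited to the first. This mirrors the idea of SPM's serial orthogonalization, but it is not SPM's code (SPM's own routine differs in detail and operates within its design-matrix construction), and the modulator values here are simulated.

```python
import numpy as np

def serial_orthogonalize(columns):
    """Mean-centre each modulator and orthogonalize it against all earlier ones, in order."""
    out = []
    for col in columns:
        v = np.asarray(col, dtype=float)
        v = v - v.mean()
        for prev in out:
            denom = prev @ prev
            if denom > 0:
                v = v - (prev @ v) / denom * prev   # remove the projection onto prev
        out.append(v)
    return np.column_stack(out)

# Hypothetical, correlated modulators: stimulation PE entered first, reward PE second
rng = np.random.default_rng(0)
stim_pe = rng.normal(size=200)
reward_pe = 0.6 * stim_pe + rng.normal(size=200)
X = serial_orthogonalize([stim_pe, reward_pe])
print(np.corrcoef(stim_pe, reward_pe)[0, 1], X[:, 0] @ X[:, 1])  # correlated in, orthogonal out
```

The first column is left unchanged apart from centring, which is why, in the design described above, effects attributed to the reward regressor cannot reflect variance it shares with the stimulation regressor.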

Group analysis.

The main effect of pain, relative to touch, was analyzed with a t test comparing all pain and touch trials (collapsing across the three time points in each trial: decision, outcome revelation, and outcome delivery). We determined where individual differences modulated the representation of pain in the brain by examining how the main effect of pain was modulated by individual reward sensitivity scores (the slope of each participant's behavioral choice sensitivity function under pain; see Results). This analysis was restricted to regions activated by the main effect of pain across the entire group. Our main analysis focused on regions that covaried positively with reward PEs. We were interested in signals that behaved similarly at the decision and outcome time points, namely, where activation covaried positively both with Q values and with outcome PEs. The parametric regressors corresponding to reward PEs were analyzed with a 2 (trial type: pain and touch) × 2 (time point: decision and outcome revelation) full factorial model with a pooled error variance assumption to increase sensitivity, with factors specified as “dependent” and variance as “unequal.” Areas sensitive to the main effect of reward PE were defined as those that covaried positively with all four regressors. To determine which of these activations was attenuated by pain, we contrasted reward PEs in touch trials versus pain trials, using the same factorial model. This analysis was restricted to regions activated by the main effect of reward. All analyses used a spatial extent threshold of 8 contiguous voxels. Main effects were tested in whole-brain analyses thresholded at p < 0.001 at the voxel level and at a cluster-level false discovery rate of 0.05 (Chumbley and Friston, 2009). We used p < 0.05 (uncorrected) to define restricted functional volumes as masks for follow-up analyses. All follow-up analyses of these restricted volumes were thresholded at p < 0.001 (uncorrected).
Note that findings from these analyses are therefore exploratory in nature and should be replicated in additional studies.

Psychophysiological interactions.

We selected a seed region in the right anterior insula [coordinates (32, 18, −10)], which in our study expressed the main effect of pain more strongly in participants whose sensitivity to reward changed as a function of pain (see Results). For each participant, we extracted the time series from a sphere of 6 mm radius around this voxel as the first eigenvariate (principal component). The psychophysiological interaction (PPI) (Friston et al., 1997) regressor was calculated as the product of the mean-corrected activity in the seed region and a vector coding for the main effect of reward. By including the physiological activation in the seed region as well as the psychological effect of reward in the design matrix for the PPI analysis, we ensured that our analysis of effective connectivity was specific to insula influences that covaried with reward, over and above any effects of reward or reward-independent insula influences. Thus, this PPI analysis could reveal areas in which activation for reward PE is attenuated when insula activity increases. Subsequently, we asked whether PPI differed as a function of individual differences in the influence of pain on choice. Specifically, we were interested in regions in which PPI was more negative (namely, where pain-related activation in the insula attenuated activation more strongly) the more participants expressed the interactive effect of pain on reward in their behavioral choice. We entered the PPI maps of sensitivity into a one-sample t test, with a covariate representing each participant's reward sensitivity score (the slope of each participant's behavioral choice sensitivity function under pain; see Results). We restricted our analysis to regions that expressed the main effect of reward (the same mask as the one used for the interaction of pain and reward above).
We report regions that covary positively with this individual difference, namely regions in which the PPI is more negative in individuals who had shallower reward sensitivity slopes.
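A minimal sketch of the seed extraction and PPI regressor construction is shown below, assuming the voxels of the 6 mm sphere have already been collected into a time × voxel array and that `psych` is a hypothetical vector coding the main effect of reward. It forms the interaction as a product of BOLD-level signals, as in the original formulation; SPM's PPI tools instead deconvolve the seed signal and form the product at the neural level, so this is a simplification rather than the analysis used here. Function names and data are illustrative.

```python
import numpy as np

def first_eigenvariate(Y):
    """First principal-component time series of a (time x voxel) array.

    Its scale is arbitrary here; its sign is set to agree with the mean voxel time series."""
    Yc = Y - Y.mean(axis=0)
    U, S, _ = np.linalg.svd(Yc, full_matrices=False)
    u = U[:, 0] * S[0]
    if u @ Yc.mean(axis=1) < 0:
        u = -u
    return u

def ppi_design(seed_ts, psych):
    """Design columns: PPI term, seed (physiological) term, psychological term, constant."""
    seed_c = seed_ts - seed_ts.mean()   # mean-corrected seed activity
    ppi = seed_c * psych                # interaction formed on the BOLD signal (simplification)
    return np.column_stack([ppi, seed_c, psych, np.ones(len(psych))])

# Hypothetical data: 200 scans x 30 voxels sharing one signal, plus a +/-1 reward vector
rng = np.random.default_rng(0)
signal = np.sin(np.linspace(0, 20, 200))
Y = np.outer(signal, rng.uniform(0.5, 1.5, 30)) + 0.05 * rng.normal(size=(200, 30))
psych = np.where(rng.random(200) < 0.5, 1.0, -1.0)
X = ppi_design(first_eigenvariate(Y), psych)
print(X.shape)  # (200, 4)
```

Keeping the seed and psychological columns alongside the product is what makes a fitted PPI weight reflect a change in coupling rather than either main effect.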

Skin conductance analysis.

Skin conductance response (SCR) data were analyzed using a general linear convolution model (Bach et al., 2009) as implemented in SCRalyze (version b0.4; http://scralyze.sourceforge.net). The signal was converted back to a waveform with 100 Hz time resolution, bandpass filtered with a first-order Butterworth filter with low-pass and high-pass cutoff frequencies of 5 Hz and 0.0159 Hz, respectively (the latter corresponding to a time constant of 10 s), and downsampled to a 10 Hz sampling rate. The time series was then z-transformed to account for between-subjects variance in SCR amplitude, which can be attributable to peripheral and nonspecific factors such as skin properties. For each condition of interest, a stick function encoding event onsets was convolved with the canonical skin conductance response function, and parameter estimates were extracted for each participant.
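The preprocessing chain above (band-pass filtering, downsampling, z-transform) can be sketched with NumPy alone. Assumptions: the band-pass is approximated by a cascade of first-order Butterworth high-pass and low-pass sections designed with the bilinear transform, applied in a single causal pass; the signal is decimated by keeping every tenth sample; and the first sample is subtracted to reduce the high-pass start-up transient. SCRalyze's own filter design may differ, and the convolution with the canonical SCR function is not shown. The function names are hypothetical.

```python
import math
import numpy as np

def first_order_filter(x, b0, b1, a1):
    """Apply y[n] = b0*x[n] + b1*x[n-1] - a1*y[n-1] in a single causal pass."""
    y = np.empty_like(x)
    prev_x = prev_y = 0.0
    for i, xi in enumerate(x):
        prev_y = b0 * xi + b1 * prev_x - a1 * prev_y
        prev_x = xi
        y[i] = prev_y
    return y

def butter1_lowpass(fc, fs):
    """First-order Butterworth low-pass coefficients via the bilinear transform."""
    k = math.tan(math.pi * fc / fs)
    return k / (k + 1), k / (k + 1), (k - 1) / (k + 1)

def butter1_highpass(fc, fs):
    """First-order Butterworth high-pass coefficients via the bilinear transform."""
    k = math.tan(math.pi * fc / fs)
    return 1 / (k + 1), -1 / (k + 1), (k - 1) / (k + 1)

def preprocess_scr(raw, fs=100.0, hp_cutoff=0.0159, lp_cutoff=5.0, fs_out=10.0):
    """High-pass then low-pass filter, downsample, and z-score an SCR trace."""
    x = np.asarray(raw, dtype=float)
    x = x - x[0]                                   # reduce the high-pass start-up transient
    x = first_order_filter(x, *butter1_highpass(hp_cutoff, fs))
    x = first_order_filter(x, *butter1_lowpass(lp_cutoff, fs))
    x = x[::int(round(fs / fs_out))]               # 100 Hz -> 10 Hz by decimation
    return (x - x.mean()) / x.std()

# The 0.0159 Hz cutoff corresponds to a time constant 1 / (2*pi*f) of about 10 s
print(1 / (2 * math.pi * 0.0159))

# Hypothetical 60 s trace at 100 Hz: drift plus two responses (not data from this study)
t = np.arange(6000) / 100.0
raw = 2.0 + 0.01 * t + 0.3 * np.exp(-((t - 20) ** 2) / 4) + 0.2 * np.exp(-((t - 45) ** 2) / 4)
scr = preprocess_scr(raw)
print(scr.shape)  # (600,)
```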

Results

Behavioral results

Acquisition of the instrumental contingencies

During the pre-experimental conditioning session, participants showed robust instrumental learning, choosing the DS− more often than the DS+ for both pain (t(17) = 24.00, p < 0.001) and touch (t(17) = 23.80, p < 0.001). Because we had suggested that always choosing the DS− during this session would help learning, participants chose DS−pain and DS−touch equally often (82% of the time, t < 1).

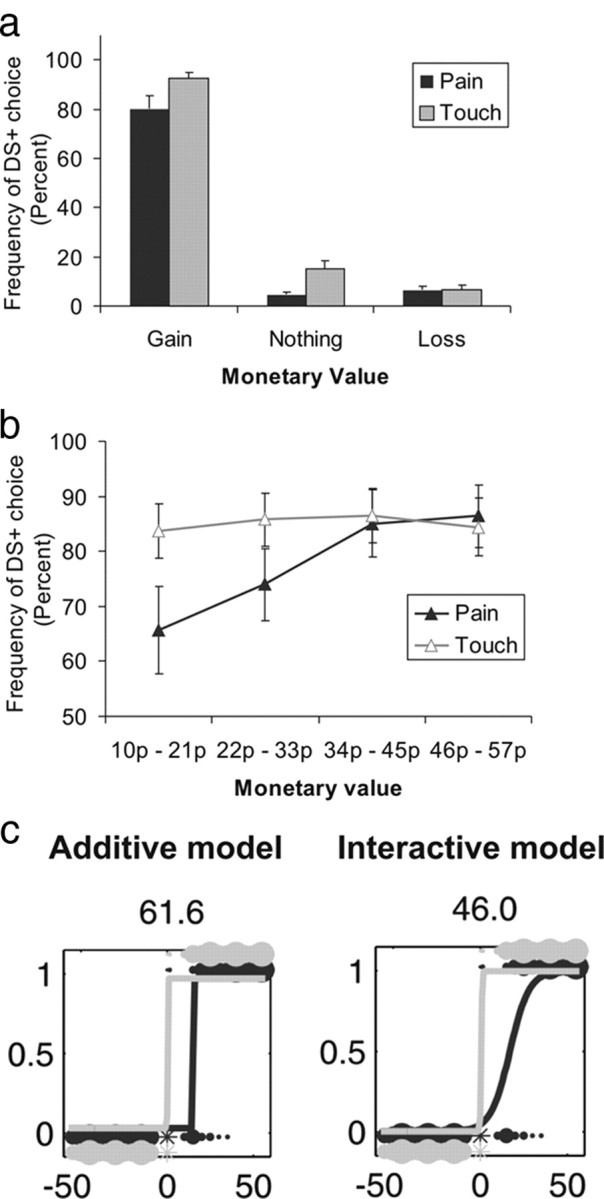

Manipulation check

To verify that both the pain and the reward manipulations had a significant influence on participants' choice, we binned pain and touch trials according to the offered monetary value (gain, zero, and loss) (Fig. 2a) and observed that both pain (F(1,17) = 7.48, p < 0.05, partial η2 = 0.31) and monetary value (F(2,34) = 299.89, p < 0.001, partial η2 = 0.95) influenced participants' choice of DS+. The interaction effect was also significant (F(2,34) = 4.56, p < 0.05, partial η2 = 0.21). Because our prediction was limited to an effect of pain on gain trials, we followed up on the significant interaction between money and pain by focusing on gain trials, dividing them into four equal bins (Fig. 2b). We found a main effect of monetary value (F(3,51) = 11.68, p < 0.001, partial η2 = 0.41) that interacted with pain (F(3,51) = 8.22, p < 0.001, partial η2 = 0.33), reflecting a lower frequency of DS+ choice under pain only for low monetary offers (bin 1, t(17) = 2.44, p < 0.05; bin 2, t(17) = 2.95, p < 0.01; bins 3 and 4, t < 1). Although this confirmed our prediction that both money and pain factor into participants' decisions on gain trials, the effect of pain was confined to trials with lower monetary offers, which limited the extent to which it could be expressed in our study. Similarly, at debriefing, participants reported more conflict in pain–gain trials than in touch–gain trials (t(17) = 4.83, p < 0.001), but the average level of conflict reported in pain trials was not high (pain–gain, mean of 4.11; touch–gain, mean of 1.55; on a 1–9 scale, with 1 representing no conflict and 9 representing substantial conflict). Together, these observations suggest that the positive value of money was high relative to the negative value of pain, resulting in only a moderate level of conflict. Ethical considerations precluded administering stronger pain, but we surmise that, had we used lower monetary offers, the effect of pain on choice might have been stronger.
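The follow-up analysis of gain trials can be sketched as follows. The text does not say whether the four bins were equal in width or in trial count; this sketch assumes equal trial counts after sorting gain trials by offer size, and the function name `gain_bin_frequencies` and the 0/1 coding of DS+ choice are illustrative assumptions, not the authors' analysis code.

```python
def gain_bin_frequencies(offers, chose_ds_plus, n_bins=4):
    """Proportion of DS+ choices in each of n_bins equal-count bins of gain trials.

    offers: monetary offer on each trial (positive = gain).
    chose_ds_plus: 1 (or True) if DS+ was chosen on that trial, else 0.
    """
    # Keep gain trials only; sort by offer (stable, so tied offers keep trial order).
    gain = sorted(
        ((offer, int(choice)) for offer, choice in zip(offers, chose_ds_plus) if offer > 0),
        key=lambda trial: trial[0],
    )
    if len(gain) < n_bins:
        raise ValueError("need at least one gain trial per bin")
    size, extra = divmod(len(gain), n_bins)
    freqs, start = [], 0
    for b in range(n_bins):
        # Any remainder trials are assigned to the lowest-offer bins.
        stop = start + size + (1 if b < extra else 0)
        chunk = [choice for _, choice in gain[start:stop]]
        freqs.append(sum(chunk) / len(chunk))
        start = stop
    return freqs


# Hypothetical pain-trial data: offers and whether DS+ was chosen.
offers = [5, -3, 1, 8, 0, 2, 7, 3, 6, 4]
choices = [1, 0, 0, 1, 0, 0, 1, 0, 1, 1]
print(gain_bin_frequencies(offers, choices))  # [0.0, 0.5, 1.0, 1.0]
```

Zero and negative offers are dropped before binning, so the same function can be applied separately to pain and touch trials and the per-bin frequencies compared.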

Figure 2.

Behavioral analysis. a, Frequency of choosing the DS+ in pain (black) and touch (gray) trials as a function of binned monetary offers: gains (positive offers), losses (negative offers), and zero offers. Error bars represent SE. b, Frequency of DS+ choice in pain (black) and touch (gray) trials as a function of binned positive monetary offers. Error bars represent SE. c, Empirical and modeled choice behavior for a single participant. Circles represent empirical data as a function of the monetary amount offered on each pain (black) and touch (gray) trial. Circle size represents choice frequency, with choice of DS+ and DS− corresponding to 1 and 0 on the y-axis, respectively. Line graphs represent the modeled choice for pain and touch in the additive (left) and interactive (right) models. The additive model predicts choice from the sum of pain and reward Q values and is nested within the interactive model, which additionally predicts choice from the interaction (product) of these two Q values. The additive effect of pain can be seen in the rightward translation of the sigmoid function and the interactive effect of pain in the change of slope. The number at the top of each panel is the Bayesian information criterion of each model for this participant.

Additional evidence of value learning in relation to the faces was provided by participants' choice latencies and face likeability ratings. During the pre-experimental conditioning phase, participants were faster to choose DS−pain than DS−touch (t(17) = 2.60, p < 0.05). Over the course of the experiment, participants learned to like the pain face pair less than the touch face pair, regardless of the probability with which each face in the pair predicted an outcome, as evident in choice likeability scores, derived from the number of times a face was selected as more likeable across all possible pairs in a postexperimental forced-choice task (F(1,19) = 5.41, p < 0.05, partial η2 = 0.25). Relative to the touch face pair, there was a trend for the likeability ratings of the pain face pair to be lower after the experiment than before it (F(1,16) = 3.24, p < 0.09, partial η2 = 0.17).
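The choice likeability score described above is a simple win count over a round-robin of forced choices. The sketch below assumes each postexperimental trial is recorded as a `(face_a, face_b, chosen)` tuple; the face labels and the simulated preference ranking in the usage lines are hypothetical, chosen only to show how pair-level scores follow from face-level counts.

```python
from itertools import combinations


def likeability_scores(records):
    """Count how often each face was chosen as more likeable.

    records: iterable of (face_a, face_b, chosen) forced-choice trials.
    Every face that was offered gets a score, even if it was never chosen.
    """
    scores = {}
    for face_a, face_b, chosen in records:
        if chosen not in (face_a, face_b):
            raise ValueError(f"{chosen!r} was not offered in pair ({face_a!r}, {face_b!r})")
        scores.setdefault(face_a, 0)
        scores.setdefault(face_b, 0)
        scores[chosen] += 1
    return scores


# Hypothetical face labels and a simulated, fully consistent preference ranking.
faces = ["pain_DS+", "pain_DS-", "touch_DS+", "touch_DS-"]
rank = {"pain_DS+": 0, "pain_DS-": 1, "touch_DS+": 2, "touch_DS-": 3}
records = [(a, b, max(a, b, key=rank.get)) for a, b in combinations(faces, 2)]

scores = likeability_scores(records)
pain_pair = scores["pain_DS+"] + scores["pain_DS-"]
touch_pair = scores["touch_DS+"] + scores["touch_DS-"]
print(pain_pair, touch_pair)  # 1 5
```

Summing the two faces of a pair yields a pair-level score that ignores which face predicted the outcome more often, matching the pair-level comparison reported above.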

Choice behavior

To characterize how money and pain are integrated within individuals, we implemented additive and interactive reinforcement learning models for each participant (see details in supplemental Methods, available at www.jneurosci.org as supplemental material). We used standard model comparison methods to quantify the importance of the interaction term, comparing the additive model, which included difference Q values for money and pain, with the interactive model, which also included a difference Q value for their interaction. To quantify the importance of pain to decision making, we also implemented a basic model that included money Q values alone. Thus, the basic model was nested within the additive model, which was nested within the interactive model.
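To make the nesting concrete, here is a minimal sketch of a two-option logistic (softmax) choice rule over difference Q values. It assumes a linear decision value with an optional product term; the weight names, the bias term, and the sign convention (a negative pain difference when DS+ is more likely to deliver pain) are illustrative assumptions, and the authors' exact parameterization and learning rule are given in their supplemental Methods, not here.

```python
import math


def logistic(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def p_choose_ds_plus(dq_money, dq_pain, w_money, w_pain=0.0, w_inter=0.0, bias=0.0):
    """P(choose DS+) from difference Q values (DS+ minus DS-).

    w_pain = w_inter = 0 -> basic model (money only)
    w_inter = 0          -> additive model
    otherwise            -> interactive model (adds the money x pain product)
    """
    v = bias + w_money * dq_money + w_pain * dq_pain + w_inter * dq_money * dq_pain
    return logistic(v)


def money_slope(dq_pain, w_money, w_inter=0.0):
    """Weight on the money difference at a given pain difference."""
    return w_money + w_inter * dq_pain


def indifference_point(dq_pain, w_money, w_pain=0.0, w_inter=0.0, bias=0.0):
    """Money difference at which P(DS+) = 0.5 (assumes a nonzero slope)."""
    return -(bias + w_pain * dq_pain) / money_slope(dq_pain, w_money, w_inter)


# Hypothetical weights; dq_pain = -0.5 means DS+ carries more expected pain.
print(indifference_point(-0.5, 1.0, 2.0))       # additive model: 1.0
print(indifference_point(-0.5, 1.0, 2.0, 1.0))  # interactive model: 2.0
print(money_slope(-0.5, 1.0, 1.0))              # shallower slope under pain: 0.5
```

Under these assumptions the pain weight alone translates the choice curve to the right, whereas the interaction weight changes its slope with respect to money (and, through the slope, also where it crosses 0.5).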

The additive model [Bayesian information criterion (BIC), 3851] provided a significantly better fit than the basic model (BIC of 4128) according to a conventional BIC penalization of its extra complexity, demonstrating the influence of pain on participants' decisions. However, the interactive model (BIC of 3841) provided a significantly better fit than the additive model, both according to a conventional BIC penalization and in a likelihood ratio test (χ2(18) = 118, p < 0.001). The noisy pain model (BIC of 3878) (supplemental Methods, available at www.jneurosci.org as supplemental material) fitted less well than either the additive or the interactive model, in line with our assumption that, during practice, participants had learned what the disutility of the pain stimulation was for them (but see Discussion). The empirical data and the predictions of the additive and the interactive models for a single participant are depicted in Figure 2c (supplemental Figs. S1 and S2 depict the empirical data and the model prediction for all participants, available at www.jneurosci.org as supplemental material). Note that, in both models, the additive effect of pain is represented in the translation of the sigmoid to the right, signifying that, in pain trials, a higher monetary value is required to induce participants to choose the DS+. The interactive model represents the interaction effect as a change in the slope, with a shallower slope in pain trials relative to touch trials signifying that sensitivity to monetary reward is lower.
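The model comparison rests on two standard quantities. The sketch below computes BIC as k ln(n) - 2 ln(L) and the likelihood ratio statistic 2(ln L_full - ln L_restricted) for nested models; to stay dependency-free it evaluates the chi-square tail with the closed form that holds only for even degrees of freedom (SciPy's `scipy.stats.chi2.sf` covers the general case). Reading the 18 degrees of freedom as one extra interaction weight per participant is our interpretation of the reported statistic, not something the text states, and the function names are hypothetical.

```python
import math


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion: k * ln(n) - 2 * ln(L). Lower is better."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


def chi2_sf_even_df(x, df):
    """P(X >= x) for a chi-square variable with an even number of degrees of freedom.

    Uses the closed form exp(-x/2) * sum_{i < df/2} (x/2)**i / i!.
    """
    if df <= 0 or df % 2:
        raise ValueError("this closed form needs a positive, even df")
    if x <= 0:
        return 1.0
    half = x / 2.0
    term = total = 1.0
    for i in range(1, df // 2):
        term *= half / i  # builds (x/2)**i / i! incrementally
        total += term
    return math.exp(-half) * total


def likelihood_ratio_test(loglik_restricted, loglik_full, extra_params):
    """LR statistic and p-value for nested models (full model adds extra_params)."""
    stat = 2.0 * (loglik_full - loglik_restricted)
    return stat, chi2_sf_even_df(stat, extra_params)


# The reported statistic: chi-square(18) = 118.
print(chi2_sf_even_df(118, 18) < 0.001)  # True
```

The likelihood ratio test is only valid because the additive model is nested within the interactive one; BIC, by contrast, can also rank non-nested alternatives such as the noisy pain model.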

We quantified the slopes in the interactive model (supplemental Fig. S3, available at www.jneurosci.org as supplemental material). In the case of touch, the empirical slope was 0.96, consistent with a sharp change in behavior around monetary offers of zero, namely, the rational shift from choice of DS−touch when the monetary offer was negative to DS+touch when the monetary offer was positive. In pain trials, 11 of 18 participants had reduced slopes, indicating that their sensitivity to reward was attenuated under pain. Pain still had a significant influence on choice in the remaining seven subjects; however, this was only reflected in an additive shift of the choice function under pain, which was significant even in an analysis limited to this group alone (t(6) = −4.9, p = 0.003). To summarize, the interactive model provided a better account for behavior than the additive model, although the degree to which pain interacted with reward sensitivity varied across participants. Below we exploit the individual variability to shed more light on the way pain modulates reward sensitivity.

SCR results

Both pain and money influenced SCRs, providing additional evidence for the success of these manipulations. We analyzed SCRs at the time of outcome delivery with a 2 (money: gain vs loss/zero monetary offers) × 2 (stimulation: pain vs touch) repeated measures ANOVA. Pain stimulation generated higher SCRs than touch stimulation (F(1,17) = 12.57, p < 0.01, partial η2 = 0.42), an effect that interacted with money (F(1,17) = 13.47, p < 0.01, partial η2 = 0.44). Money did not significantly influence SCRs for touch (p > 0.10), and SCRs were higher for pain than for touch regardless of the monetary offer (gain, t(17) = 3.96, p = 0.001; loss/zero, t(17) = 2.65, p = 0.02); however, SCRs for pain were higher in gain trials than in trials with zero or negative monetary offers (t(17) = 4.03, p = 0.001).
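Two checks on these statistics can be sketched in a few lines. Partial η2 follows from an F statistic as F·df1/(F·df1 + df2), which gives about 0.425 and 0.442 for the two effects above; and in a 2 × 2 within-subject design the interaction F(1, n − 1) equals the square of a paired t statistic on each participant's difference of differences. The cell labels (`"gain"`, and `"other"` for loss/zero offers) and the per-participant dictionary layout are assumptions for illustration.

```python
import math
from statistics import mean, stdev


def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


def interaction_t(cells):
    """Paired t for a 2 x 2 within-subject interaction.

    cells: one dict per participant mapping (stimulation, money) -> mean SCR,
    with stimulation in {"pain", "touch"} and money in {"gain", "other"}.
    The interaction F(1, n - 1) equals the square of the returned t.
    """
    contrasts = [
        (c["pain", "gain"] - c["touch", "gain"]) - (c["pain", "other"] - c["touch", "other"])
        for c in cells
    ]
    if len(contrasts) < 2:
        raise ValueError("need at least two participants")
    return mean(contrasts) / (stdev(contrasts) / math.sqrt(len(contrasts)))


print(round(partial_eta_squared(12.57, 1, 17), 3))  # 0.425
print(round(partial_eta_squared(13.47, 1, 17), 3))  # 0.442
```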

Strikingly, the slope of each participant's behavioral choice sensitivity function under pain correlated negatively with the main effect of pain on SCRs (r = −0.49, p < 0.05, collapsed across all monetary offers), showing that participants whose choices were more influenced by the interactive effect of pain had higher SCRs in pain trials relative to touch trials. Unlike the slope, the additive shift did not correlate significantly with SCRs. The significant correlation between the interactive effect of pain on behavioral choice and the physiological response to pain suggests that these measurements may tap into a global individual characteristic. To summarize, pain and reward influenced behavioral choice and SCRs. The interactive effect of pain and reward, which helped account for choice behavior, correlated with SCRs across individuals.

fMRI results

Having established behaviorally a significant pain-related difference in sensitivity to monetary gain, we proceeded to extract the physiological correlates of this interaction from the fMRI data. To do this, we assessed the sensitivity of physiological responses to reward by creating parametric stimulus functions encoding the reward PEs associated with the chosen DS on each trial. We use the term PE to denote both the Q value at the time of decision and the PE at the time of outcome revelation, because both represent changes from initial predictions. We coded pain and touch trials separately and also coded reward PEs separately for these two trial types, so that we could implicitly model the interaction between pain and reward. To identify brain regions responsible for encoding pain, reward, and their interaction, we performed three tests to determine (1) the main effect of sensitivity to pain relative to touch, (2) the main effect of sensitivity to reward (averaged over pain and touch trials), and (3) the interaction between pain and reward, namely, the difference in sensitivity to reward between touch and pain trials. Because our hypothesis was that pain would modulate a PE signal rather than Q values or outcome PEs separately, all of our analyses examine decision and outcome time points together (collapsing across the time point factor). Descriptively, however, we plot the parameter estimates to show that the signal we obtained was similar across time points.
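The three tests can be expressed as contrast weights over a first-level design. The sketch assumes a hypothetical layout with one onset regressor and one reward-PE parametric regressor per stimulation type at each of the two time points (eight columns), and it averages weights over time points to mirror the collapsed analysis; the column names are invented for illustration and do not come from the authors' SPM model.

```python
from itertools import product

TIME_POINTS = ("decision", "outcome")
KINDS = ("onset", "rewardPE")
STIMS = ("pain", "touch")

# Hypothetical first-level column order: every kind x stimulation at each time point.
COLUMNS = [f"{kind}_{stim}_{t}" for t, kind, stim in product(TIME_POINTS, KINDS, STIMS)]


def contrast(weights):
    """Expand {(kind, stim): weight} into a full vector, averaged over time points."""
    n_t = len(TIME_POINTS)
    return [weights.get((kind, stim), 0.0) / n_t for _, kind, stim in product(TIME_POINTS, KINDS, STIMS)]


CONTRASTS = {
    # (1) main effect of pain relative to touch
    "pain > touch": contrast({("onset", "pain"): 1.0, ("onset", "touch"): -1.0}),
    # (2) main effect of reward PE, averaged over pain and touch trials
    "reward PE": contrast({("rewardPE", "pain"): 0.5, ("rewardPE", "touch"): 0.5}),
    # (3) interaction: reward sensitivity under touch minus under pain
    "touch PE > pain PE": contrast({("rewardPE", "touch"): 1.0, ("rewardPE", "pain"): -1.0}),
}


def contrast_estimate(weights, betas):
    """Weighted sum of parameter estimates, with betas keyed by column name."""
    return sum(w * betas[name] for w, name in zip(weights, COLUMNS))


for name, weights in CONTRASTS.items():
    print(name, weights)
```

A positive estimate for the interaction contrast corresponds to the pattern reported below: a reward-PE correlation that is weaker on pain trials than on touch trials.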

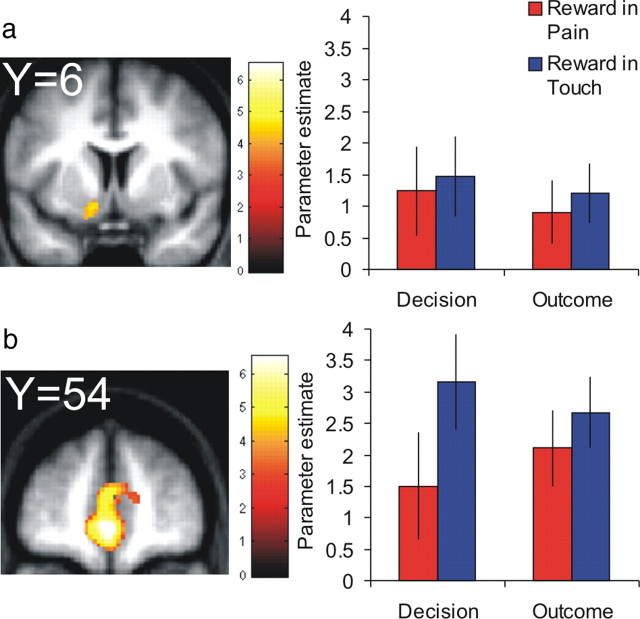

As anticipated, the main effect of pain activated regions corresponding to a putative pain matrix (Leknes and Tracey, 2008), with clusters in the primary and secondary somatosensory cortex (supplemental table and Fig. S6, available at www.jneurosci.org as supplemental material). Interestingly, Figure 5 shows that the difference between pain and touch activation in the right anterior insula [(32, 18, −10), T = 4.50; (36, 20, −8), T = 4.34; 27 voxels], a region thought to encode the subjective value of pain (Craig, 2003), was larger in participants who expressed the interactive effect of pain more strongly in their behavior. Figure 3 shows that reward PEs were expressed in a ventromedial prefrontal region extending into OFC and ACC, and left ventral striatum, as well as right hippocampus, bilateral insula, and posterior cingulate cortex (supplemental table, available at www.jneurosci.org as supplemental material). Parameter estimate plots show that the activation in OFC and ventral striatum for reward PEs was similar across trial types and time points.

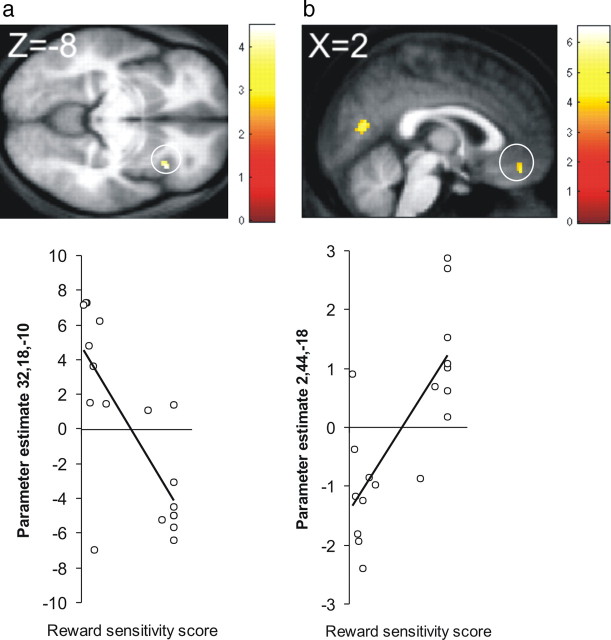

Figure 5.

Analysis of individual differences. Expression of the interactive effect of pain was defined as attenuated sensitivity to reward under pain. Reward sensitivity scores, which corresponded to the slopes of individuals' behavioral choice functions, were lower in individuals who expressed the interactive effect of pain more robustly. a, The right anterior insula was activated more strongly by pain, relative to touch, in individuals with lower reward sensitivity scores. b, The psychophysiological interaction between the right anterior insula and the expression of reward in the OFC was modulated by reward sensitivity scores. The positive-signed reward signal in the OFC was attenuated by the insula in individuals with lower reward sensitivity scores. Scatter plots depict the β parameter estimates for each of these regions as a function of reward sensitivity.

Figure 3.

Representation of reward. Reward prediction errors activated ventral striatum (a) and ventromedial prefrontal cortex extending to anterior cingulate cortex (b). Group-level β parameter estimate plots to the right of each activation map show that these regions expressed reward prediction errors positively across trial type (pain and touch) and time point (decision and outcome). Error bars represent SE.

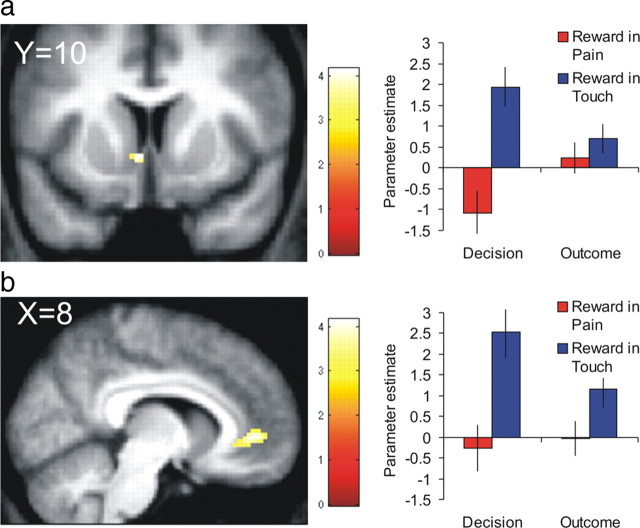

The interaction between pain and reward that we observed in participants' behavior corresponds to an attenuation of the reward PE signal as a function of pain. To determine which regions expressed this interaction, we contrasted reward PEs in touch versus pain trials. This analysis revealed effects in the ventral striatum corresponding to the NA and in subgenual ventral ACC (Fig. 4) (supplemental table, available at www.jneurosci.org as supplemental material). Parameter estimate plots show that signal in these regions was positively correlated with reward PEs in touch trials, at both decision and outcome revelation time points. Critically, pain stimulation attenuated this correlation between the blood oxygenation level-dependent (BOLD) signal and reward PEs. The interaction between time point and stimulation did not reach significance in any brain region.

Figure 4.

Interactive effects of pain. Pain attenuated the positive correlation between the BOLD signal and reward prediction errors in nucleus accumbens (a) and anterior cingulate cortex (b). Error bars represent SE.

The modulation of PE by pain appeared more robust at the point of decision than at outcome revelation. Therefore, we performed a follow-up analysis using the same ANOVA design but focusing on the decision time point alone. This analysis revealed pain-dependent attenuation of PEs at decision, corresponding to Q values, in the same regions in which we had observed pain-dependent attenuation of PEs across both decision and outcome time points. As in the previous analysis, this follow-up analysis was restricted to regions that were activated by the main effect of reward. Thus, pain significantly attenuated a positive correlation between Q values and BOLD signal in NA and ACC (supplemental Fig. S7, available at www.jneurosci.org as supplemental material), as well as in the left supplementary motor area [(−8, 8, 50), T = 3.56]. In a parallel follow-up analysis using the same ANOVA design but focusing on outcome PEs, we did not find significant pain-dependent modulation in any brain region. To examine whether pain modulated experienced utility, we examined the same contrast in an alternative model that included experienced utility instead of outcome PEs. Pain attenuated the experienced utility signal in the left parahippocampal gyrus [(−34, −24, −22), T = 3.63].

A final question concerned the variability we observed across participants in the expression of an interaction between pain and reward in choice behavior. We hypothesized that such differences stemmed from variability in the subjective value participants assigned to pain, which then influenced their choice between the DS+ and DS−. A simple analysis of covariance, resembling that conducted for the main effect of pain, did not reveal any significant modulation of the pain × reward interaction effect by individual reward sensitivity scores, so we turned to a more sensitive, trial-by-trial psychophysiological interaction (PPI) approach. To capture how differential sensitivity to pain might modulate sensitivity to reward, we extracted the main effect of pain in the right anterior insula and computed the interaction between physiological pain activation in this region and the psychological effect of reward PEs. Regions whose activity covaries with this PPI signal are those in which the insula modulates sensitivity to reward on a trial-by-trial basis.
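A PPI regressor is, at its core, the product of a seed region's time series and a psychological variable (Friston et al., 1997). The sketch below forms that product from mean-centred inputs; it is a simplification that omits the deconvolution to the neural level that SPM performs before forming the interaction, and the function names are hypothetical. In a real model, the seed signal and the psychological regressor (here, reward PEs) would enter the GLM alongside the product term.

```python
from statistics import fmean


def center(values):
    """Subtract the mean, reducing correlation between the product and main-effect regressors."""
    m = fmean(values)
    return [v - m for v in values]


def ppi_regressor(seed_signal, psych_regressor):
    """Simplified PPI term: centred seed time series x centred psychological regressor.

    Omits the hemodynamic deconvolution used in SPM's PPI routine.
    """
    if len(seed_signal) != len(psych_regressor):
        raise ValueError("time series must have the same length")
    return [s * p for s, p in zip(center(seed_signal), center(psych_regressor))]


# Toy example: a 4-scan seed signal and a psychological regressor (e.g., reward PEs).
print(ppi_regressor([1.0, 2.0, 3.0, 4.0], [0.0, 1.0, 0.0, 1.0]))  # [0.75, -0.25, -0.25, 0.75]
```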

We predicted that brain regions that represent the value of the chosen option would covary with this signal and that this covariance would be modulated by reward sensitivity scores. As described above, we used the slope of the behavioral choice sensitivity function under pain as an index of this individual difference. Figure 5 shows that reward sensitivity scores modulated the PPI in the OFC [(2, 44, −18), T = 4.60, k = 22], a region that represented reward PEs across the entire sample (supplemental table, available at www.jneurosci.org as supplemental material). Parameter estimate plots show that participants whose behavioral choice slopes were shallower exhibited a negative PPI, indicating that the insula attenuated an overall positive correlation with reward in that region.

Discussion

Jeremy Bentham, in An Introduction to the Principles of Morals and Legislation (1780), proposed that “nature has placed mankind under the governance of two sovereign masters, pain and pleasure. It is for them alone to point out what we ought to do, as well as to determine what we shall do.” Although we know a substantial amount about how the brain represents pleasure and pain separately, we know little about how they are integrated so “as to determine what we shall do.” Our behavioral findings point to an interaction between pain and reward, with pain decreasing sensitivity to reward. In support of a best-fitting interactive model of choice behavior, we found that evoked responses in two brain areas, ventral striatum and ACC, showed a significant attenuation in sensitivity to monetary reward as a function of higher levels of anticipated pain, an effect that was also significant in an analysis of the Q values alone at the decision time point. This finding ties together two brain systems implicated in value learning, cortical and subcortical, and demonstrates their involvement in the integration of pain and gain.

Note that the ventral striatum showed two completely orthogonal effects: the activity of this region increased with anticipated and experienced excess monetary gain (a main effect), consistent with a wealth of data showing that these quantities are positively coded in striatum (O'Doherty et al., 2003; Tobler et al., 2007) and OFC (O'Doherty, 2007). Strikingly, the increase of striatal activation as a function of reward was substantially attenuated under high levels of anticipated pain (an interaction) in a manner that paralleled the observed effect of pain on behavior.

The finding that the signal for reward PEs was attenuated by pain in NA and ACC is striking because these two interconnected structures (Brog et al., 1993) are thought to be pivotal when animals integrate costs and benefits. For example, using a cost–benefit T-maze paradigm, it has been shown that dopamine depletion or infusion of a dopamine antagonist into NA suppresses an animal's propensity to respond for reward, biasing animals to choose the low-effort, low-reward option over the high-effort, high-reward option (Salamone and Correa, 2002). Similarly, after ACC damage, animals are disinclined to exert effort to obtain the higher reward and opt instead for the low-effort, low-reward option (Walton et al., 2003). The behavioral change in this task is selectively attributable to altered cost–benefit analysis because, when both high and low rewards required high effort, ACC-lesioned animals chose the option that led to high reward. It would therefore appear that both NA and components of ACC contribute to cost–benefit analysis and, given our findings, that this contribution extends to non-energetic costs, namely, the integration of pain and reward. This result ties in with a recently published report (Croxson et al., 2009) that the right ventral striatum (in a region within an 8 mm sphere of the NA activation we report here) and the dorsal ACC are activated by the “net value” of motor effort and monetary reward. The more dorsal ACC location in the study by Croxson et al. could be related to their use of a different, and more motoric, aversive cost.

The degree to which choices were influenced by expected pain cost varied across participants. Our behavioral model captured this variability in the rightward shift of the choice function under pain (additive effect of pain) and the attenuation of the slope of this function (interactive effect of pain). Our fMRI analysis focused on the interactive effect of pain, and, accordingly, our analysis of individual differences focused on variability in the slope of the choice function, a signal that directly reflected the attenuated sensitivity to monetary reward under pain. The slope correlated with physiological markers of pain, both SCRs and a signal in the right anterior insula, a region thought to encode the subjective value of pain (Craig, 2003). The group-wide attenuation of the representation of reward under pain in the ACC and ventral striatum was not significantly modulated by individual differences in slope. Strikingly, however, the representation of reward in the OFC was modulated by the pain-related signal from the insula, and this effective connectivity was stronger the more participants were willing to forgo reward to avoid pain. This finding suggests an underlying neural substrate, in terms of connection strength between insula and OFC, for participants' choice variability.

The interaction term in our behavioral model fits the excess stochasticity of participants' behavior on trials with both pain and moderate amounts of reward. A possible alternative source of randomness is that participants sample on each trial the disutility of the pain from a probability distribution. We therefore also fit a noisy pain model, using gamma distributions to characterize this uncertainty. This model fitted somewhat less well than the interactive model (despite having the same number of parameters). More importantly, it also seems a priori unlikely that participants would exhibit persistent and gross variability in the disutility of an outcome that they had experienced 40 times in the conditioning session that preceded the experiment proper.
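The noisy pain alternative can be illustrated by averaging a logistic choice rule over trial-by-trial draws of pain disutility. This Monte Carlo sketch assumes a gamma(shape, scale) disutility scaled by the difference in pain probability between DS+ and DS−; the parameter names and this particular way of combining terms are assumptions for illustration, not the authors' fitted model.

```python
import math
import random


def logistic(v):
    """Numerically stable logistic function."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    z = math.exp(v)
    return z / (1.0 + z)


def p_ds_plus_noisy_pain(dq_money, dp_pain, w_money, shape, scale, n_samples=10_000, rng=None):
    """Monte Carlo P(choose DS+) when pain disutility is redrawn on every trial.

    dq_money: money difference (DS+ minus DS-).
    dp_pain: difference in pain probability (DS+ minus DS-); 0 on touch trials.
    shape, scale: parameters of the gamma distribution of pain disutility.
    """
    if rng is None:
        rng = random.Random()
    total = 0.0
    for _ in range(n_samples):
        disutility = rng.gammavariate(shape, scale)  # always positive
        total += logistic(w_money * dq_money - disutility * dp_pain)
    return total / n_samples


print(p_ds_plus_noisy_pain(2.0, 0.5, 1.0, shape=2.0, scale=1.0, rng=random.Random(0)))
```

Because every gamma draw is positive, a pain difference that favors DS− lowers the decision value on every sample, so the estimate stays below the corresponding pain-free probability whatever the random seed.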

Our model focused on changes in sensitivity to PEs, but we note that outcome PEs were correlated with obtained reward, or “experienced utility.” This means that, although we can conclude that pain attenuated sensitivity to PEs at decision, the present experimental design and fMRI model do not allow us to tease apart whether pain attenuated sensitivity to outcome PEs or to experienced utility. We also could not examine the additive effects of pain in the brain and focused instead on the interaction highlighted in the winning interactive model of behavioral choice. Our behavioral and fMRI data thus provide compelling evidence for a modulation of reward by pain, but whether reward also changes the value of pain remains a challenge for future research, as do the limitations noted above.

Our task used faces as DSs and was administered within a game framework to make it easier for participants to attribute valence to the stimuli and understand how their goals related to the task. This social context seemed more ecologically valid than a context using abstract stimuli and rules, but it is still only one of myriad contexts in which the processes we aimed to study take place. To establish generality, future studies should replicate the reported findings in a nonsocial situation to ensure that they do not depend on the particular framework we used here.

A feature of our task is that the aversive values of the choices were well learned and therefore potentially available via a model-free, or cached, mechanism associated with pavlovian and/or instrumental controllers. In contrast, the appetitive values differed on every trial, possibly invoking a distinct model-based, or goal-directed, control mechanism (Dickinson and Balleine, 1994; Daw et al., 2005). Because our task did not incorporate the devaluation test that is crucial for establishing goal directedness (Dickinson and Balleine, 1994; Valentin et al., 2007), we cannot conclusively attribute effects to interactions between model-based and model-free control systems. We also did not include any manipulation that would discriminate pavlovian from instrumental effects. Nevertheless, our observations of additive and multiplicative integration of pain and gain may speak to how these two systems interact, with aversive model-free predictions attenuating model-based reward PEs in NA, ventral ACC, and OFC (Phillips et al., 2007). The attenuation of instrumental reward-based choice and of reward representation in the NA as a function of pain prediction in our task thus suggests a possible mechanism for the effect of conditioned aversive pavlovian cues on appetitive conditioned instrumental responses in transfer paradigms (Cardinal and Everitt, 2004), an effect that is attenuated after NA lesions in animals (Parkinson et al., 1999).

In our task, appetitive and aversive values were represented in distinct regions and converged in the striatum, ACC, and OFC. Our findings suggest a sophisticated interaction between appetitive and aversive predictions in the control of goal-directed behavior, providing a new perspective on predicting behavior and brain activity in the context of mixed outcomes. Moreover, our findings may imply that, when aversive predictions are invoked (e.g., under threat), when suffering from physical pain, or when predictions for the future are chronically aversive, as is typical in clinical depression, reward processing will be altered in a way that would influence decision making.

Footnotes

This work was supported by a Wellcome Trust Programme Grant (R.J.D.) and the Gatsby Charitable Foundation (P.D.). We thank Karl Friston for intellectual guidance and insightful comments on this manuscript and Y-Lan Boureau for discussions of interactions between dopamine and serotonin and reward and punishment.

References

- Aberman JE, Salamone JD. Nucleus accumbens dopamine depletions make rats more sensitive to high ratio requirements but do not impair primary food reinforcement. Neuroscience. 1999;92:545–552. doi: 10.1016/s0306-4522(99)00004-4.

- Andersson JL, Hutton C, Ashburner J, Turner R, Friston K. Modeling geometric deformations in EPI time series. Neuroimage. 2001;13:903–919. doi: 10.1006/nimg.2001.0746.

- Ashburner J, Friston KJ. Unified segmentation. Neuroimage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018.

- Bach DR, Flandin G, Friston KJ, Dolan RJ. Time-series analysis for rapid event-related skin conductance responses. J Neurosci Methods. 2009;184:224–234. doi: 10.1016/j.jneumeth.2009.08.005.

- Berns GS, McClure SM, Pagnoni G, Montague PR. Predictability modulates human brain response to reward. J Neurosci. 2001;21:2793–2798. doi: 10.1523/JNEUROSCI.21-08-02793.2001.

- Berridge KC, Grill HJ. Isohedonic tastes support a two-dimensional hypothesis of palatability. Appetite. 1984;5:221–231. doi: 10.1016/s0195-6663(84)80017-3.

- Brog JS, Salyapongse A, Deutch AY, Zahm DS. The patterns of afferent innervation of the core and shell in the “accumbens” part of the rat ventral striatum: immunohistochemical detection of retrogradely transported fluoro-gold. J Comp Neurol. 1993;338:255–278. doi: 10.1002/cne.903380209.

- Cacioppo JT, Berntson GG. Relationship between attitudes and evaluative space: a critical review, with emphasis on the separability of positive and negative substrates. Psychol Bull. 1994;115:401–423.

- Caine SB, Koob GF. Effects of dopamine D-1 and D-2 antagonists on cocaine self-administration under different schedules of reinforcement in the rat. J Pharmacol Exp Ther. 1994;270:209–218.

- Cardinal RN, Everitt BJ. Neural and psychological mechanisms underlying appetitive learning: links to drug addiction. Curr Opin Neurobiol. 2004;14:156–162. doi: 10.1016/j.conb.2004.03.004.

- Chumbley JR, Friston KJ. False discovery rate revisited: FDR and topological inference using Gaussian random fields. Neuroimage. 2009;44:62–70. doi: 10.1016/j.neuroimage.2008.05.021.

- Craig AD. Interoception: the sense of the physiological condition of the body. Curr Opin Neurobiol. 2003;13:500–505. doi: 10.1016/s0959-4388(03)00090-4.

- Croxson PL, Walton ME, O'Reilly JX, Behrens TE, Rushworth MF. Effort-based cost-benefit valuation and the human brain. J Neurosci. 2009;29:4531–4541. doi: 10.1523/JNEUROSCI.4515-08.2009.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- Dickinson A, Balleine B. Motivational control of goal-directed action. Anim Learn Behav. 1994;22:1–18.

- Friston KJ, Ashburner J, Frith CD, Poline JB, Heather JD, Frackowiak RSJ. Spatial registration and normalization of images. Hum Brain Mapp. 1995a;2:165–189.

- Friston KJ, Holmes AP, Worsley KJ, Poline JB, Frith CD, Frackowiak RSJ. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995b;2:189–210.

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. Neuroimage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291.

- Green RF, Goldfried MR. On the bipolarity of semantic space. Psychol Monogr. 1965;79.

- Hoebel BG, Avena NM, Rada P. Accumbens dopamine-acetylcholine balance in approach and avoidance. Curr Opin Pharmacol. 2007;7:617–627. doi: 10.1016/j.coph.2007.10.014.

- Iordanova MD, Westbrook RF, Killcross AS. Dopamine activity in the nucleus accumbens modulates blocking in fear conditioning. Eur J Neurosci. 2006;24:3265–3270. doi: 10.1111/j.1460-9568.2006.05195.x.

- Jensen J, McIntosh AR, Crawley AP, Mikulis DJ, Remington G, Kapur S. Direct activation of the ventral striatum in anticipation of aversive stimuli. Neuron. 2003;40:1251–1257. doi: 10.1016/s0896-6273(03)00724-4.

- Larsen JT, McGraw AP, Cacioppo JT. Can people feel happy and sad at the same time? J Pers Soc Psychol. 2001;81:684–696.

- Larsen JT, McGraw AP, Mellers BA, Cacioppo JT. The agony of victory and thrill of defeat: mixed emotional reactions to disappointing wins and relieving losses. Psychol Sci. 2004;15:325–330. doi: 10.1111/j.0956-7976.2004.00677.x.

- Leknes S, Tracey I. A common neurobiology for pain and pleasure. Nat Rev Neurosci. 2008;9:314–320. doi: 10.1038/nrn2333.

- Lundqvist D, Flykt A, Öhman A. Karolinska directed emotional faces. Stockholm: Karolinska Institute; 1998.

- Miller NE. Liberalization of basic S-R concepts: extensions to conflict behavior, motivation and social learning. In: Koch S, editor. Psychology: a study of a science. New York: McGraw-Hill; 1959.

- Montague PR, Hyman SE, Cohen JD. Computational roles for dopamine in behavioural control. Nature. 2004;431:760–767. doi: 10.1038/nature03015.

- Montague PR, King-Casas B. Efficient statistics, common currencies and the problem of reward-harvesting. Trends Cogn Sci. 2007;11:514–519. doi: 10.1016/j.tics.2007.10.002.

- O'Doherty JP. Lights, camembert, action! The role of human orbitofrontal cortex in encoding stimuli, rewards, and choices. Ann N Y Acad Sci. 2007;1121:254–272. doi: 10.1196/annals.1401.036.

- O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Parkinson JA, Robbins TW, Everitt BJ. Selective excitotoxic lesions of the nucleus accumbens core and shell differentially affect aversive Pavlovian conditioning to discrete and contextual cues. Psychobiology. 1999;27:256–266.

- Phillips PE, Walton ME, Jhou TC. Calculating utility: preclinical evidence for cost-benefit analysis by mesolimbic dopamine. Psychopharmacology. 2007;191:483–495. doi: 10.1007/s00213-006-0626-6.

- Prelec D, Loewenstein G. The red and the black: mental accounting of savings and debt. Marketing Science. 1998;17:4–28.

- Salamone JD, Correa M. Motivational views of reinforcement: implications for understanding the behavioral functions of nucleus accumbens dopamine. Behav Brain Res. 2002;137:3–25. doi: 10.1016/s0166-4328(02)00282-6.

- Schimmack U. Pleasure, displeasure, and mixed feelings: are semantic opposites mutually exclusive? Cogn Emot. 2001;15:81–97.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Schweimer J, Saft S, Hauber W. Involvement of catecholamine neurotransmission in the rat anterior cingulate in effort-related decision making. Behav Neurosci. 2005;119:1687–1692. doi: 10.1037/0735-7044.119.6.1687.

- Seymour B, McClure SM. Anchors, scales and the relative coding of value in the brain. Curr Opin Neurobiol. 2008;18:173–178. doi: 10.1016/j.conb.2008.07.010.

- Seymour B, O'Doherty JP, Dayan P, Koltzenburg M, Jones AK, Dolan RJ, Friston KJ, Frackowiak RS. Temporal difference models describe higher-order learning in humans. Nature. 2004;429:664–667. doi: 10.1038/nature02581.

- Seymour B, Singer T, Dolan R. The neurobiology of punishment. Nat Rev Neurosci. 2007;8:300–311. doi: 10.1038/nrn2119.

- Sutton RS, Barto AG. Reinforcement learning: an introduction. Cambridge, MA: MIT; 1998.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Stuttgart, Germany: Thieme; 1988.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Valentin VV, Dickinson A, O'Doherty JP. Determining the neural substrates of goal-directed learning in the human brain. J Neurosci. 2007;27:4019–4026. doi: 10.1523/JNEUROSCI.0564-07.2007.

- Walton ME, Bannerman DM, Alterescu K, Rushworth MFS. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23:6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- Walton ME, Rudebeck PH, Bannerman DM, Rushworth MFS. Calculating the cost of acting in frontal cortex. Ann N Y Acad Sci. 2007;1104:340–356. doi: 10.1196/annals.1390.009.

- Weiskopf N, Hutton C, Josephs O, Deichmann R. Optimal EPI parameters for reduction of susceptibility-induced BOLD sensitivity losses: a whole-brain analysis at 3 T and 1.5 T. Neuroimage. 2006;33:493–504. doi: 10.1016/j.neuroimage.2006.07.029.