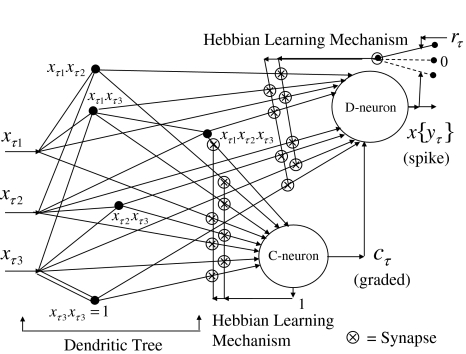

Fig. 3.

The structural diagram of the PU (processing unit) in Example 3 and Example 4. The dendritic tree is the orthogonal expansion of the input feature subvector xτ. The tree nodes are NXORs. A Hebbian learning mechanism for the D-neuron can perform supervised or unsupervised learning, depending on whether the label rτ is provided from outside the PU or is the output x{yτ} of the D-neuron. A “pseudo-Hebbian” learning mechanism for the C-neuron performs only unsupervised learning and always uses 1 in doing so. While the D-neuron outputs spike trains, the C-neuron generates graded signals to modulate the D-neuron.