Abstract

This paper reviews recent aspects of my research. It focuses, first, on the idea that during the perception of objects and people, action-based representations are automatically activated and, second, that such action representations can feed back and influence the perception of people and objects. For example, when one is merely viewing an object such as a coffee cup, the action it affords, such as a reach to grasp, is activated even though there is no intention to act on the object. Similarly, when one is observing a person's behaviour, their actions are automatically simulated, and such action simulation can influence our perception of the person and the object with which they interacted. The experiments to be described investigate the role of attention in such vision-to-action processes, the effects of such processes on emotion, and the role of a perceiver's body state in their interpretation of visual stimuli.

Keywords: Action simulation, Action affordance, Attention, Eye-gaze, Emotion

There is an increasing appreciation for the intimate link between vision and action: that vision's primary role is to serve action and that action states of the body influence our understanding of the visual world. As Gibson (1979) noted, sensory systems specifically evolved to serve action, to allow an animal to interact with its environment. From running across an uneven surface to escape a predator, to climbing a tree to grasp an apple, vision provides information about the actions objects afford. Hence a major role of vision is to extract information that determines how the body can interact with the object: A small round-shaped apple affords grasping by the hand, a chair affords sitting, and steps afford foot responses to climb.

There is now substantial evidence that this conversion from vision into action is automatic, taking place even when a person has no intentions to act on a viewed object (see Tipper, 2004, for a review). For example, Tucker and Ellis (1998) showed that merely viewing an object that affords an action appears to activate that action. Thus, seeing a coffee cup activates the motor responses to grasp the cup, even though at no time is it necessary to actually grasp the cup. Such results support the notion that we represent our world primarily as opportunities for action (see Bub & Masson, in press, for boundary conditions).

This automatic conversion from vision to action takes place not just when looking at inanimate objects that can be acted upon, but also when viewing other people's actions. The discovery of mirror neurones (di Pellegrino, Fadiga, Fogassi, Gallese, & Rizzolatti, 1992) promoted this notion. Cells in ventral premotor cortex area F5 respond when a monkey grasps an object in a particular manner. Importantly, however, the same cell will respond when the monkey sees another individual produce the same action. Thus the same neural system encodes the animal's own actions and responds when it observes another's action. It has been proposed that these mapping processes from other to self provide one means of understanding other people, by representing their actions as actions we could perform ourselves.

There is now extensive evidence that in humans this automatic simulation of another person's actions is a widespread phenomenon (e.g., Blakemore & Decety, 2001). For example, when seeing another person make a gaze shift to a particular location, our own attention systems and eye-movements make the same response, orienting our attention to the same location or object of interest (e.g., Friesen & Kingstone, 1998). Similarly, when we view a person smiling or frowning, we simulate the emotion expressed. That is, the face muscles associated with the emotion, such as the zygomaticus major cheek muscle when smiling and the corrugator supercilii brow muscle when frowning or angry, become automatically active in the person observing these emotions in someone else (e.g., Cannon, Hayes, & Tipper, 2009; Dimberg & Thunberg, 1998; Dimberg, Thunberg, & Elmehed, 2000). Finally, more extensive body actions such as grasping an object with the hands, or kicking an object such as a ball, also appear to be automatically simulated when they are viewed (e.g., Bach & Tipper, 2007; Brass, Bekkering, Wohlschlager, & Prinz, 2000; Hommel, Musseler, Aschersleben, & Prinz, 2001).

It is believed that these simulation processes may be a foundation of empathy, because they allow a viewer to experience another person's bodily states as if they were their own. The perception of another's pain is probably one of the clearest examples of how empathy may be achieved via simulations. For example, we all have experienced the emotional distress when we observe another person's injury, such as a child's finger trapped in a closing door. When experiencing pain, areas of the anterior cingulate cortex (ACC) and anterior insula appear to encode the emotional aspects of the pain, whereas somatosensory areas seem to encode its physical aspects. Interestingly, when one is observing someone else's body receive painful stimulation such as a pin-prick (e.g., Morrison, Lloyd, di Pellegrino, & Roberts, 2004), or knows that a loved one is receiving pain (e.g., Singer et al., 2004), the same areas of ACC and insula are active. However, we (Morrison, Tipper, Adams, & Bach, 2010) have recently provided some evidence that a highly specific region of somatosensory area SII is also activated when a hand is seen to grasp a noxious object, such as a broken glass or lit match (see Figure 1). Hence empathy for others’ pain appears to rely on simulations of the emotional and physical aspects of the stimulus experience.

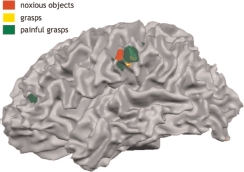

Figure 1.

Partly inflated cortical surface showing selective activation in left SII when viewing a hand approaching and grasping or withdrawing from a noxious pain-inducing object. Red is activation encoding noxious objects independent of whether they are grasped or not; yellow is the area encoding whether the object is grasped or not independent of the kind of object; and of most importance, green is a region activated when observing the specific action of grasping a noxious/painful object. Note also the activity in midcingulate cortex (MCC) in this last condition of grasping a noxious object, an area previously implicated in coding affective components of pain observation.

In the examples above there is a direct relationship between the visual stimulus and an action. Thus viewing the coffee cup affords grasping, viewing the gaze shift evokes a shift of attention and saccade, viewing kicking of a ball activates similar actions in the observer's motor system, and viewing painful injury activates emotion and somatosensory areas. However, it seems that the activation of motor systems when processing visual stimuli is even more extensive than was first thought. We have recently provided examples where the action system of a person can be activated even when a visual stimulus has no obvious action properties: that is, when viewing a face or a nonaction word.

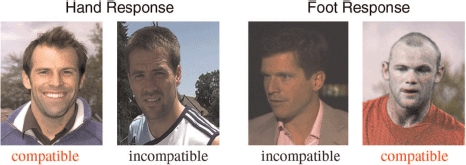

In one task we required participants to identify pairs of faces with either a foot key-press or a finger key-press. The faces clearly have no properties that could bias response of the hand versus the foot; likewise the use of motor responses with the hand or foot would not normally influence our ability to identify a face. However, when the faces to be identified were famous athletes whose special skill was associated with the foot, such as soccer player Wayne Rooney, or with the hand, such as tennis player Tim Henman, then motor compatibility effects were observed (see Figure 2). Thus even identifying a face can automatically activate motor-based representations associated with unrelated parts of the body (Bach & Tipper, 2006).

Figure 2.

These are some examples of the face stimuli presented in Bach and Tipper (2006). For this particular participant a finger key-press was required to identify the tennis player Greg Rusedski (response compatible) and the soccer player Michael Owen (response incompatible), while a foot response was necessary to identify the tennis player Tim Henman (response incompatible) and the soccer player Wayne Rooney (response compatible). The images of Rusedski, Owen, Henman and Rooney were courtesy of Les Miller, Michael Kjaer, Andrew Haywood and Gordon Flood respectively.

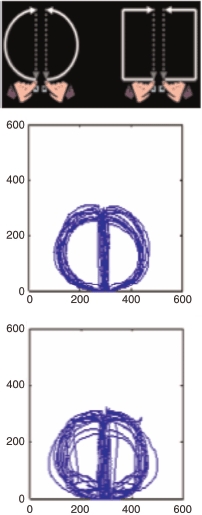

We found similar results when viewing words with no obvious action properties. For example, in one condition the task might require participants to lift their fingers from response keys and make a square movement with both hands to report whether the word denoted an object that was found in the house (e.g., television), or a round/circular-shaped movement to report whether the object would be found outside the house (e.g., moon). The top panel of Figure 3 shows the instruction screen used to guide participants in this somewhat unusual motor response. Although the shape of the object was irrelevant to the indoor/outdoor task, it nevertheless appeared to evoke action-based representations that influenced motor responses. Thus, while making a round shape action to report that an object is found outside the house, the reaction time (RT) to start the action and the movement time (MT) to complete the action were fast, and the trajectory describing the round shape was accurate (see the middle panel of Figure 3), when the object's shape was round, such as the word “moon”. In contrast, RT and MT were slow, and hand path was disrupted when the object possessed a square shape, such as the word “billboard” (bottom panel of Figure 3). Hence, even though these words represent objects that do not evoke an action, semantic decisions nevertheless can interact with motor systems in some circumstances.

Figure 3.

The top panel shows the action instructions for participants. This participant was required to make a round-shaped action when the word described an outside object, and a square-shaped action when the word described an object typically found in a house. The lower panels depict examples of path trajectories. In these examples participants made a round action to report an object was found outside the house. The middle panel shows compatible trials where the object (e.g., moon) has a round shape, and a round action is produced. The bottom panel shows incompatible trials where the object has a square shape (e.g., billboard) while the round hand action is produced. Notice that in the compatible conditions the circle trajectories are more accurate (and reaction time, RT, to start and movement time, MT, to complete the action are fast), whereas in the incompatible conditions the shape of the object intrudes into the shape of the motor response to be produced (and RT and MT are slow). To view a colour version of this figure, please see the online issue of the Journal.

The research reviewed above indicates that embodiment processes, where our own body states are involved in representing the world around us, are widespread. In the following I discuss some of the research we have undertaken to further understand the processes that convert vision to action states: first, how these processes are guided by attention; second, how another person's eye-gaze is represented; third, how action simulations contribute to our emotional attitude toward objects and people we interact with; and fourth, how we use action simulations to understand other people. The core idea is that vision can evoke action automatically in many situations and that these evoked action states can in turn feed back and influence perceptual processes. For example, perceiving a person produce an action can activate similar action states in the observer, which facilitates or inhibits the observer's own subsequent action; subsequently, this fluency of the observer's own action can then influence how the observed person or object is represented.

How attention influences the conversion of perception into action

One of the issues that we have been involved in has been to better specify the role of attention in the processes that convert vision into action. As noted, it seems the conversion from vision to action is automatic, in the sense that this process takes place even when an individual has no intention of acting on the viewed stimulus. However, when we interact with complex environments in an effort to achieve specific goals, it is critical that our actions are directed towards the correct object at the appropriate moment in time. Therefore selection processes have evolved to enable sequential goal-directed behaviour. Allowing highly efficient vision-to-action processes to run to completion without selection processes would result in chaotic behaviour, where the strongest stimulus of the moment would capture and direct our actions. For example, when attempting to reach for my coffee cup, an irrelevant cup closer and ipsilateral to my hand could easily capture my action (Tipper, Lortie, & Baylis, 1992). One way in which attention could guide these processes would be by either enhancing or suppressing processing of the particular stimulus features that afford action.

As an initial investigation of the role of attention, we adapted the procedure of Tucker and Ellis (1998). As a reminder, Tucker and Ellis showed that even though the action evoked by the object was irrelevant to the task, this appeared to be encoded and influenced the speed of response. Thus if an object was oriented such that the right hand would be normally used to grasp it, right-hand key-press responses were facilitated.

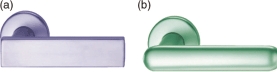

In our task, a door handle was presented at the centre of the computer screen (see Figure 4 for examples). Objects such as these automatically evoke action responses. For example, when these stimuli are viewed a right-hand grasp would be evoked, and when handles oriented in the opposite direction are viewed a left-hand response would be evoked. Previous studies have demonstrated that such response compatibility effects are produced when participants decide whether the stimulus is normally oriented or inverted, or whether it is a kitchen or garage object, for example. Note that during these decision processes object shape must be analysed during the process of object recognition, and an object's shape is of course relevant to grasping actions. Therefore we wanted to see whether automatic vision-to-action processes also take place when attention is oriented towards a stimulus dimension that is unrelated to action (Tipper, Paul, & Hayes, 2006).

Figure 4.

Examples of the door handles used in Tipper et al. (2006). These handles evoke right-hand grasps; on other trials the handles were mirror reversed, evoking left-hand grasps. Exactly the same stimuli were presented to all participants: In one group they made left and right key-presses to report whether the shape was square (Panel A) or rounded (Panel B); another group made the same responses to report whether the handle was blue (Panel A) or green (Panel B). To view a colour version of this figure, please see the online issue of the Journal.

In our study we manipulated the object property that participants had to report. In one condition they had to report whether the handle had a square (e.g., Figure 4, Panel A) or rounded (e.g., Figure 4, Panel B) shape with left and right key-press responses. Because shape has a direct relationship with action, in that it determines the form of the hand as it grasps an object, clear stimulus-response compatibility effects were expected, and these were observed. In a second condition, while looking at the same displays participants were required to report the colour of the door handles. Colour of course is unrelated to action, in that whether a handle is blue (Panel A) or green (Panel B) will not affect the reach-to-grasp processes. And indeed, in this condition of colour identification the action affordance stimulus-response compatibility effects were significantly reduced.
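The compatibility measure discussed above can be sketched in a few lines. This is an illustration only, not the analysis code used in the study: the trial records, the field names (`handle_side`, `response_side`, `rt_ms`) and all reaction times are made up, and the effect is assumed to be scored as mean RT on incompatible trials minus mean RT on compatible trials.

```python
from statistics import mean


def compatibility_effect(trials):
    """Mean RT on incompatible trials minus mean RT on compatible trials (ms).

    A trial counts as compatible when the hand afforded by the handle
    matches the hand used for the key-press.
    """
    compatible = [t["rt_ms"] for t in trials
                  if t["handle_side"] == t["response_side"]]
    incompatible = [t["rt_ms"] for t in trials
                    if t["handle_side"] != t["response_side"]]
    return mean(incompatible) - mean(compatible)


# Hypothetical trials for illustration; these are NOT data from the study.
shape_task = [
    {"handle_side": "right", "response_side": "right", "rt_ms": 480},
    {"handle_side": "right", "response_side": "left", "rt_ms": 520},
    {"handle_side": "left", "response_side": "left", "rt_ms": 490},
    {"handle_side": "left", "response_side": "right", "rt_ms": 530},
]
colour_task = [
    {"handle_side": "right", "response_side": "right", "rt_ms": 500},
    {"handle_side": "right", "response_side": "left", "rt_ms": 505},
    {"handle_side": "left", "response_side": "left", "rt_ms": 495},
    {"handle_side": "left", "response_side": "right", "rt_ms": 510},
]

shape_effect = compatibility_effect(shape_task)
colour_effect = compatibility_effect(colour_task)
print(f"shape task: {shape_effect} ms, colour task: {colour_effect} ms")
```

With these invented values the effect is larger in the shape task than in the colour task, which is the qualitative pattern described in the text; the magnitudes themselves carry no meaning.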

Therefore such a result shows that even though vision-to-action processes are automatic, this is not what might be viewed as a strong form of automaticity. They depend on action-affording stimulus features being in the focus of attention. This suggests that one way in which attention can control ongoing behaviour is by either enhancing or suppressing the action-affording stimulus features. This was further confirmed in a study where the actions of another person were observed. Bach, Peatfield, and Tipper (2007) required participants to report the colour of a stimulus presented on different body parts of a static image of a viewed person. The task was to press a finger key to identify the patch of one colour (e.g., red) and to press a foot key to identify the other colour (e.g., blue). These colour patches were superimposed on different body parts of a person undertaking one of two actions: either kicking a soccer ball, or typing on a keyboard. The main manipulation was whether the colour was presented on a body site related to the action, such as the hand in the typing scene (e.g., right panel of Figure 5) or foot in the kicking scene, or on a body site unrelated to action such as the head (e.g., left panel of Figure 5).

Figure 5.

Examples of the static action images employed by Bach et al. (2007). The left panel shows a kicking action where the target (red colour patch in this example) is presented on the non-action-related body site of the head. On other trials the blue or red colour target would be presented on the foot. The right panel shows the typing scene where the target colour (in this example blue) was presented on the hand. In other trials the target could be presented on the head in this display. To view a colour version of this figure, please see the online issue of the Journal.

When a participant's attention was oriented to the body parts related to action, compatibility effects were produced. Thus if the colour was on the foot kicking the ball, foot colour identification responses were facilitated while hand responses were impaired, whereas if the colour was presented on the hand in the typing scene, finger responses were facilitated while foot responses were impaired. In contrast, if spatial attention was oriented towards the head, then no action compatibility effects were detected, even when the person was still seen performing the same typing or kicking actions. Therefore, again, attention does appear to guide the action simulation processes by either enhancing or suppressing processing of the action-relevant stimulus features.

It is noteworthy that in this study attending to and reporting colour did not prevent the conversion from vision into action, whereas in the study discussed above where inanimate door handles were viewed, attention to colour did disrupt the effect. We propose that the simulation processes triggered when viewing another person's actions are robust effects and not easily disrupted even when attending to colour, as long as spatial attention is oriented towards the action-relevant body site. Indeed, we also observed action priming when the observed person was passively standing or sitting producing no action, as long as attention was oriented to a body site such as the foot or hand. It is clear that orienting attention to locations on the body has potent and wide-ranging effects, as also reflected in the facilitation of the processing of tactile stimulation on observed body sites (e.g., Tipper et al., 1998, 2001).

In the studies described so far overt motor responses are required: for example, pressing keys with the fingers when processing door handles, which evoke hand responses, or finger and foot responses when viewing scenes evoking similar hand (typing) or foot (kicking) actions. However, it is possible that requiring overt actions with body parts related to viewed action (e.g., hand and/or foot) could feed back onto the perceptual processes encoding visual objects, selectively facilitating the processing of visual stimuli related to the action to be produced (e.g., Bub & Masson, in press; Humphreys & Riddoch, 2001). For example, by requiring participants to make responses by moving their foot, they become more sensitive to visual stimuli associated with this body part. Therefore, rather than measure behavioural stimulus-response compatibility effects, we took a more direct measure of action processing. That is, we measured the mu rhythm over the motor cortex from electroencephalography (EEG) recordings in a task where no motor response was ever required (Schuch, Bayliss, Klein, & Tipper, 2010).

The mu rhythm is activity in the alpha frequency band (8–13 Hz) over sensorimotor cortex. It is most pronounced when a person is at rest and becomes suppressed during movement production, presumably reflecting desynchronization of firing of neuron assemblies in the motor system. Importantly, mu also becomes suppressed when a person is merely observing the movement of another person, indicating motor system activation during action observation (e.g., Gastaut & Bert, 1954; Kilner, Marchant, & Frith, 2006; Muthukumaraswamy, Johnson, & McNair, 2004).
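To make the notion of mu suppression concrete, the following sketch computes power in the 8–13 Hz band for a baseline segment and an observation segment and expresses suppression as a log ratio. It is a minimal illustration under stated assumptions, not the analysis pipeline of any study cited here: the log-ratio index is one common convention and is assumed rather than taken from the source, the signals are synthetic 10-Hz sine waves standing in for EEG, and the function names (`band_power`, `mu_suppression_index`, `synthetic_mu`) are hypothetical.

```python
import cmath
import math


def band_power(signal, sample_rate_hz, low_hz, high_hz):
    """Mean spectral power of `signal` within [low_hz, high_hz] (naive DFT)."""
    n = len(signal)
    powers = []
    for k in range(1, n // 2):
        freq = k * sample_rate_hz / n
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(signal))
            powers.append(abs(coeff) ** 2 / n)
    return sum(powers) / len(powers)


def mu_suppression_index(baseline, observation, sample_rate_hz):
    """Log ratio of mu-band (8-13 Hz) power: observation relative to baseline.

    Negative values indicate suppression relative to baseline
    (assumed convention, see lead-in).
    """
    return math.log(band_power(observation, sample_rate_hz, 8, 13)
                    / band_power(baseline, sample_rate_hz, 8, 13))


def synthetic_mu(amplitude, sample_rate_hz=128, seconds=1, freq_hz=10):
    """Synthetic stand-in for an EEG segment: a pure sine at `freq_hz`."""
    n = sample_rate_hz * seconds
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate_hz)
            for i in range(n)]


rest = synthetic_mu(amplitude=2.0)      # strong mu rhythm at rest
observe = synthetic_mu(amplitude=1.0)   # weaker mu rhythm during observation
index = mu_suppression_index(rest, observe, sample_rate_hz=128)
print(f"mu suppression index: {index:.3f}")
```

Halving the amplitude of the oscillation quarters its power, so the index for these synthetic signals is negative. Real EEG analysis would additionally involve artefact rejection, windowing and averaging over trials, none of which is shown here.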

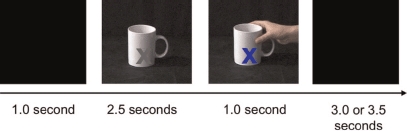

In our experiment, participants were required to observe videos of a hand grasping a coffee cup. Their task was simply to observe the video and monitor the number of occasions that a rare event happened. Thus during the EEG recordings no motor response was required. Figure 6 shows the sequence of events in typical trials. There were two tasks: In the attend–action condition participants had to attend to the form of the action. Most trials would be of one kind, such as a power grip at the top of the cup, whereas a small number of trials would be the occasional event of grasping the cup's handle. At the end of a block of trials the number of these rare handle grasps was reported. In the attend–colour condition, exactly the same videos were viewed, but participants now monitored the colour change of the X superimposed on the cup. For most trials the colour changed to blue, and participants were required to report at the end of the block the number of rare green colour changes (of course all these conditions were fully counterbalanced across participants).

Figure 6.

This figure depicts a typical trial in the mu experiment. There was a baseline display for 1 s, followed by the presentation of a cup; 2.5 s later the grasp started where the hand approached the cup from the right and grasped it either at the top or by the handle. At the point of grasp the grey X superimposed on the cup changed colour to either blue or green. Participants monitored either the colour change or the grasp action. To view a colour version of this figure, please see the online issue of the Journal.

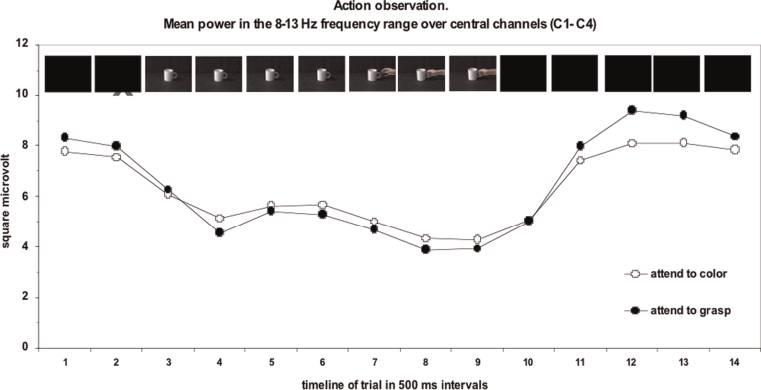

Figure 7 shows the mu rhythm data. As can be seen, compared to the baseline time period 1, the mu rhythm is suppressed when participants were merely observing an object and action (from time periods 3 to 9). The suppression is observed not only when participants are directly attending to the action but also when attending to the task-irrelevant property of colour change on the X. Therefore this is further support for the notion that observing another person's actions automatically activates the action system of the observer (this is especially the case when action movement is observed, as compared to observing static images of actions: see Bach & Tipper, 2007, for contrasts between static and moving stimuli). However, it should also be noted that although the effect is small, there is indeed greater mu suppression when attention is focused on the action than when it is focused on the colour.

Figure 7.

This figure shows the mu activity during action observation. At the top of the figure the sequence of visual stimuli is shown, and the mu suppression can clearly be seen as the action-evoking object becomes visible. After stimulus offset (time point 10) there is a rebound into the mu rhythm, and this is greater when participants were previously attending to action.

Unlike most studies of mu rhythms, we also examined EEG data after the trial was completed. Although it might be assumed that little of value can be detected during the rest period between trials when no stimuli are presented, the model of Houghton and Tipper (1994) makes specific predictions that can reveal the effects of attention after stimulus offset. Because the motor system appears to be automatically activated when one is viewing objects and other people's actions, there must be inhibitory control processes that prevent such actions from emerging as random overt behaviours driven by the visual environment. The cost of failed inhibition can be seen after lesions of the frontal lobe, where actions are continually captured by objects and other people's actions in the visible scene (e.g., utilization behaviour: Lhermitte, 1983; Luria, 1980). For example, even though able to verbalize that no actions should be produced, such individuals would automatically pick up a pen on the table in front of them and start to write.

We proposed that when attention is oriented towards a viewed action, the internal representations are more active, and hence focusing attention on action is more likely to result in overt behaviour. Therefore to prevent such overt responses greater levels of inhibition will be necessary when attending to action than when attending to colour. Houghton and Tipper (1994) describe a reactive inhibition model where the level of inhibition feeding back on to the activated representations is proportional to the activation levels of the representations. Hence greater activation when attending to action automatically results in greater levels of inhibitory feedback.

An important aspect of this model is that because the level of inhibition is proportional to the level of excitation, during stimulus processing the network is balanced. Hence while viewing a stimulus, there might not be a large difference between attending to action and attending to colour, as observed in our data. However, somewhat counterintuitively, the model actually predicts that after stimulus offset at the end of the trial, the differences between attention conditions can be more clearly revealed. This is because upon stimulus offset the excitatory inputs are terminated, revealing a transitory inhibitory state that will reflect the level of inhibition in the network. Therefore this predicts that the inhibitory rebound after stimulus offset will be larger after attending to action than after attending to colour. Examination of Figure 7 reveals just such a pattern of inhibitory rebound. Note that at the end of the trial (e.g., time period 12), the system rebounds back into the mu rhythm, but this rebound is larger after attending to action than after attending to colour. Such a result provides a glimpse of the inhibitory processes involved in controlling behaviour during action simulation and also confirms that attention does play a role in such action simulation processes. Other studies examining simulation of emotional expression via recording electromyographic (EMG) responses from face muscles associated with smiling (zygomaticus) and frowning (corrugator) confirmed that action is simulated even when ignored and that inhibition plays a role in the selection processes (Cannon et al., 2009).
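The logic of this prediction can be illustrated with a toy simulation. The sketch below is not the Houghton and Tipper (1994) model itself; it is a deliberately simplified two-variable system built on assumptions of my own choosing: excitation tracks the external input quickly, inhibition tracks a fixed proportion (`gain`) of the excitation slowly, and attention to action is represented simply as a stronger input. All parameter values and names are hypothetical.

```python
def simulate_trial(input_strength, stim_steps=50, rest_steps=30,
                   gain=0.8, excite_rate=0.5, inhibit_rate=0.1):
    """Return net activity (activation minus inhibition) at each time step.

    Inhibition is reactive: it is driven towards gain * activation, so
    stronger activation recruits proportionally stronger inhibition.
    """
    activation, inhibition = 0.0, 0.0
    net = []
    for step in range(stim_steps + rest_steps):
        # External input is present only while the stimulus is on.
        external = input_strength if step < stim_steps else 0.0
        # Excitation follows the input quickly.
        activation += excite_rate * (external - activation)
        # Inhibition follows the activation slowly, so it outlasts it.
        inhibition += inhibit_rate * (gain * activation - inhibition)
        net.append(activation - inhibition)
    return net


STIM_STEPS = 50
# Assumption: attending to action corresponds to a stronger input.
attend_action = simulate_trial(input_strength=1.0, stim_steps=STIM_STEPS)
attend_colour = simulate_trial(input_strength=0.6, stim_steps=STIM_STEPS)

# Difference between conditions while the stimulus is still present.
during_gap = attend_action[STIM_STEPS - 1] - attend_colour[STIM_STEPS - 1]

# Size of the inhibitory rebound (most negative net activity) after offset.
rebound_action = -min(attend_action[STIM_STEPS:])
rebound_colour = -min(attend_colour[STIM_STEPS:])
rebound_gap = rebound_action - rebound_colour

print(f"difference during stimulus: {during_gap:.3f}")
print(f"difference in rebound after offset: {rebound_gap:.3f}")
```

In this toy system the two conditions differ only modestly while the stimulus is present, because excitation and inhibition largely cancel. Once the input is removed the slower-decaying inhibition is briefly unopposed, and the difference between conditions becomes larger, which is the qualitative pattern the model predicts.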

Finally, a further converging approach attempted to investigate inhibitory control processes during action simulation. Although it is clear that basic actions, such as reach to grasp or eye-movements (see below), are simulated when we observe another person, there are also important selection mechanisms that determine which actions are produced by another person. As noted above, inhibition is critical for controlling our actions for successful achievement of goals. We examined whether there was any evidence for the internal representation/simulation of another person's inhibitory action selection processes (Schuch & Tipper, 2007).

A stop-signal task (e.g., Logan & Cowan, 1984) was employed because the inhibition of action is very salient to a viewer. In a typical procedure a visual stimulus is presented, and participants depress a response key as fast as possible. However, on some trials a stop-signal is presented, requiring participants to stop the action. The stop-signal could be a tone, or perhaps a change of colour or size, as in our studies. In such studies there are carry-over effects from one trial to another. That is, after an action is successfully stopped, response on the next go trial is slowed, and the ability to successfully stop an action is improved. This is assumed to reflect carry-over effects of prior inhibition (e.g., Rieger & Gauggel, 1999) as in tasks such as negative priming (e.g., Tipper, 1985).

In our study two people sat next to each other and participated in a stop-signal task. Stimuli were presented on a computer screen, and participants responded to alternate trials by reaching from a start location and depressing a response key common to both of them. In this task we provided evidence for the simulation of another person's inhibitory control processes. That is, after observing the other person successfully inhibit their action, the participant was more likely to fail to respond within the deadline for response, as would be expected if the observed inhibition were simulated.

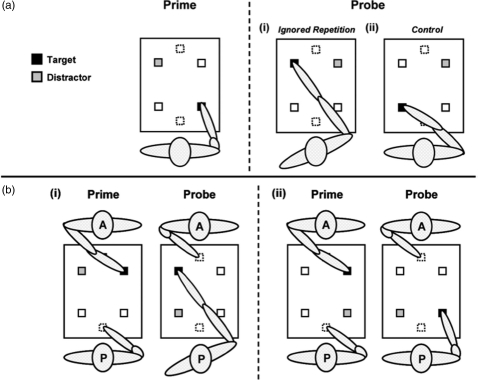

A further study revealed that not only do we simulate the inhibitory processes undertaken by another person, but we can do so by taking their viewpoint (Frischen, Loach, & Tipper, 2009). In a selective reaching task participants were required to reach towards target objects while ignoring distractors. Inhibition associated with a competing distractor can be revealed on the next trial where response to a target, which was a previously inhibited distractor, is impaired: This is negative priming (see Figure 8, Panel a). The inhibition associated with a distractor, as revealed via negative priming effects, is greater when it is closer to the participant's responding hand (see Figure 9, Panel a). This egocentric hand-centred effect emerges because objects closer to the hand win the race for the control of action and hence require greater levels of inhibition, as discussed above in the reactive inhibition model.

Figure 8.

Selective reaching task: Panel (a) represents the single person condition, illustrating the relationship between prime and subsequent probe responses in the ignored repetition condition (i) and the control condition (ii). Negative priming is revealed in longer reaction times in ignored repetition than in control trials. Panel (b) shows examples of ignored repetition trials in the dual person condition. The participant (P) observes the agent (A) perform the prime reach and then executes the probe reach. In (i), the salience of the prime distractor in the far-left location is high in terms of the agent's (allocentric) frame of reference, but low in terms of the participant's own (egocentric) frame of reference. In (ii), prime distractor salience (near-right location) is low in terms of the agent's frame of reference, but high in terms of the participant's egocentric frame of reference.

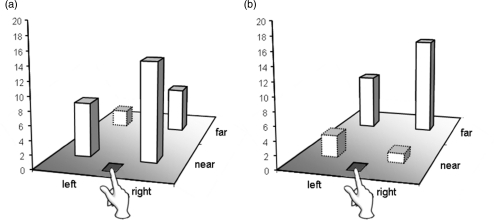

Figure 9.

Reaction time data representing the amount of negative priming (ignored repetition minus control; in ms) at each stimulus location in (a) the single person task and (b) the dual person task. Stimulus locations (near/right/far/left) correspond to the participant's frame of reference whose hand is positioned at the start point at the front of the display.
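The negative priming score defined in the caption above (ignored repetition minus control) can be computed per stimulus location as follows. The reaction times and the dictionary layout are hypothetical and serve only to show the arithmetic.

```python
from statistics import mean


def negative_priming(ignored_repetition_rts, control_rts):
    """Negative priming in ms: mean ignored-repetition RT minus mean control RT."""
    return mean(ignored_repetition_rts) - mean(control_rts)


# Hypothetical RTs (ms) for two locations; NOT data from the study.
rts_by_location = {
    "near": {"ignored_repetition": [540, 560], "control": [500, 510]},
    "far": {"ignored_repetition": [515, 525], "control": [505, 515]},
}

priming_by_location = {
    location: negative_priming(cond["ignored_repetition"], cond["control"])
    for location, cond in rts_by_location.items()
}
print(priming_by_location)
```

A positive score indicates slower responses to a target that was previously an ignored distractor. The invented values here mimic the single person pattern described in the text, with more negative priming for the location nearer the responding hand.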

We were interested to know whether the inhibition required to select a target from a distractor was simulated when observing someone else make selective reaches. But more important, what frame of reference is simulated? Consider Figure 8, Panel b. The agent reaches in the prime display while being observed by the participant, and then the participant reaches in the subsequent probe display. If the simulated inhibition is represented in an egocentric frame based on the observing participant's hand, then negative priming would be larger in situations such as Panel ii, where the observed distractor is close to the participant's hand, than in the situation depicted in Panel i. That is, the negative priming when observing another person undertake a reach will be the same as when the participant undertakes the reach. In contrast, we hypothesized that simulation processes are more sophisticated. Participants are computing the task undertaken by the other person, where greater inhibition will be evoked when a distractor is closer to the observed agent's hand. That is, greater negative priming will be evoked in situations depicted in Panel i, where the distractor is close to the observed agent's hand, than in Panel ii. Figure 9, Panel b clearly supports the latter hypothesis, where negative priming is greater for a distractor far from the viewing participant's hand than when near, contrasting with situations where the participant produces both prime and probe responses (Figure 9, Panel a).

It is also noteworthy that we have recently observed a further boundary condition for such effects. When observing another person reach around an irrelevant distracting object, this primes the participant's own action only if the observed action is in the peripersonal/action space of the participant. That is, priming effects are only produced if the observed distractor is a potential distractor for the participant. If the observed action is outside the peripersonal/action space of the observing participant, no effects are produced (Griffiths & Tipper, 2009). Thus, when a participant observes the selective reaching actions of another person, two frames of reference influence action simulation. First, the observed person's selective reaching action is encoded from their action-centred perspective; but second, this only takes place if the action is within the peripersonal action space of the observer: a subtle interaction between the observed agent's and the participant's frames of reference.

In the studies reviewed above, attention influences the automatic conversion from vision to action, with inhibitory control processes being of particular importance. However, there is evidence for the opposite relationship where action can feed back and influence attention processes. In a further study, to search for this opposite data pattern, where action affordances facilitate attentional processes, we examined 2 patients with right parietal lesions resulting in visual extinction (di Pellegrino, Rafal, & Tipper, 2005).

Patients with this disorder may detect a stimulus in either the left or the right visual field as long as it is presented alone. However, when two stimuli are presented, one to the left and one to the right, the patient typically fails to report the one in the field contralateral to the lesion, in our case in the left visual field after right parietal lesions. Thus extinction entails an abnormal bias in visual attention in favour of ipsilesional right-side stimuli, with a failure to direct attention to the contralateral left side of space. The question tested was whether, if observing a handle, for example, automatically primes the motor programmes for reaching and grasping it within a perceiver, such processes could also trigger orienting of attention to the site of the action (cf. Eimer, Forster, Van Velzen, & Prabhu, 2005).

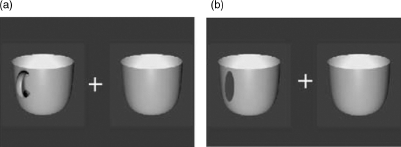

Cup stimuli were presented, and the patients simply had to report their presence. As expected, cups in the ipsilesional (right) visual field were always reported, whereas those in the contralesional (left) field were rarely reported during bilateral presentation. However, this result did not hold in one particular condition, and this is shown in Figure 10, Panel A. In this situation the cup in the contralesional (left) field evoked a reach-to-grasp response from the left hand due to the location of its handle to the left side of the cup. This activation of a motor response in the left visual field appears to have the effect of orienting attention to that side of space, aiding detection of the contralesional stimulus. Note that no such effects were found in control conditions where handles were replaced with patches equated for position, size, and mean luminance (see Figure 10, Panel B).

Figure 10.

Patients with visual extinction were required to report whether objects in single and dual object displays appeared on the “left”, “right”, or “both” sides. Typically detection of the cup on the left was poor when there was also a cup on the right. This was true except for the condition shown in Panel A, where left cup detection was significantly improved. In contrast, detection of the left cup remained poor in the stimulus control condition shown in Panel B.

Previous research has shown that explicit activation of motor-based representations can facilitate orienting of attention to the contralateral field. For example, asking patients to move or prepare to move the fingers of their left hand (e.g., Robertson & North, 1992) or asking them to search for an object that evokes a particular action (e.g., Humphreys & Riddoch, 2001) improves detection of stimuli presented towards the impaired region of space. However, our results showed that the effects of action on attention can be implicit and automatic, in that patients simply reported the presence of visual objects with a verbal response with no overt hand responses, and action-related information such as the location of a handle was irrelevant to their task. Nevertheless, even in these circumstances the action evoked by the handle was automatically encoded and shifted attention despite extensive damage to the right parietal lobule. That action-related information can be extracted by the visual system, even though it is unavailable for conscious report, implies that right inferior parietal cortex does not play a necessary role in mediating the automatic computation of vision to action.

In sum, there are two key properties of the vision-to-action processing system. First, it seems clear that when one is viewing an object that affords an action, or another person undertaking an action, the motor system of the observer is activated. Thus, the action of grasping a coffee cup is evoked by one simply viewing a coffee cup, and actions such as kicking a ball or typing are triggered when we see another person produce those actions. Furthermore, such evoked actions appear to attract attention and can facilitate detection of objects to the neglected side of space after parietal lesions. Such action simulation processes take place even though the participant has no intentions of producing similar actions. Second, even though the vision-to-action processes are automatic, attention nevertheless can play a role in these processes in some circumstances, increasing or decreasing activation states. In particular, attentional control processes are critical for enabling only appropriate behaviours to be produced, and we provide some evidence for the role of inhibition in preventing the overt modelling of viewed actions. Furthermore, observation of another individual controlling behaviour by inhibiting actions results in the simulation of such inhibition processes in the observer.

Eye-gaze and attention

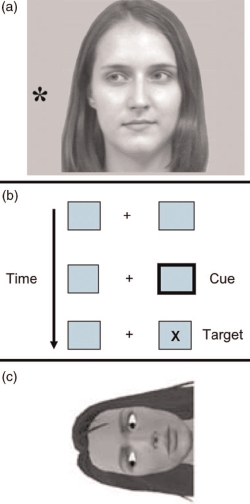

In the discussion above it was argued that one mechanism for understanding another person's actions and emotions was by simulating these actions. For example, activation of the same motor representations of another person might enable an intimate understanding of them by sharing their experiences. However, when interacting with other people it is important not only to be able to understand what they are currently doing and feeling, but also to be able to encode their current focus of attention, which might predict future actions. Hence, as with the simulation of body actions and emotions, there is also evidence for the simulation of another person's gaze direction. Thus, consider Figure 11, Panel A: When we observe a shift in gaze, our attention orients to the same location; in this case we rapidly orient to the left. Hence, when required to detect or identify a target, participants are faster when the target is presented to the left than to the right in this situation. This attention shift is a fast and automatic process in that even when instructed that future targets are more likely to be presented to the side of space opposite to the direction of gaze, attention nevertheless initially follows the gaze direction (e.g., Driver et al., 1999).

Figure 11.

Panel A shows an example of a leftward gaze cue. In such studies gaze would also be oriented to the right on 50% of the trials, and there was no relationship between the direction of gaze and the position of the asterisk target to be localized/detected. Panel B demonstrates peripheral/exogenous cueing. The task requires participants to detect the target X as fast as possible while ignoring the brief flicker of the box, which is the peripheral cue. The cue automatically orients attention, facilitating target processing at the attended location. However, after 300 ms this facilitation effect reverts to inhibition, where target detection is impaired at the cued location. Panel C represents an example of a face display employed to investigate head-centred gaze cueing. Typically targets are detected faster in the left than in the right side of the display in this situation. In this example, the face is oriented 90° anticlockwise from the upright; in other displays the face was oriented 90° clockwise from the upright, and the eyes gazed up and down equally often. To view a colour version of this figure, please see the online issue of the Journal.

Following the direction of another person's gaze is an example of joint attention, which has been found to emerge as early as 3 months (e.g., Hood, Willen, & Driver, 1998) and which is seen as an important step towards establishing social interactions (e.g., Moore & Dunham, 1995). The orientation of attention based on observed gaze direction is thought to reflect the activation of neural systems such as the superior temporal sulcus (STS) dedicated to decoding social stimuli (e.g., Perrett et al., 1985, for evidence from monkeys; Hoffman & Haxby, 2000, for humans), and the STS interacts with areas involved in orienting of attention such as the intraparietal sulcus (IPS).

There has now been a substantial amount of research investigating attention orienting evoked by perceived gaze shifts (see Frischen, Bayliss, & Tipper, 2007a, for review). Here I want to briefly comment on some studies that have further examined the attention processes activated when a gaze shift is observed. Although gaze cueing has social properties, which are reflected in, for example, interactions between gaze and face emotion and the effects of individual differences (e.g., Fox, Mathews, Calder, & Yiend, 2007, and see discussions below), in other ways gaze cueing of attention is similar to other forms of attention cueing. For example, as noted, a gaze cue triggers a fast and automatic orienting of attention to the gazed-at location. This is similar to the effects of peripheral sudden onset cues. That is, when a cue is flashed in the periphery, targets presented at the cued location are detected faster than those at the opposite side of space, and these facilitation effects can be detected within 100 ms of cue onset (see Figure 11, Panel B).

However, at longer cue–target intervals there is a dramatic difference between gaze and sudden onset cues. For the former gaze cues, the facilitative effects decline, such that by 1,000 ms there is no evidence for any cueing effect. In sharp contrast, at cue–target intervals longer than 300 ms peripheral cues produce an inhibition effect that lasts for at least 3 s and perhaps much longer (e.g., Tipper, Grison, & Kessler, 2003). This is known as inhibition of return (IOR) and is assumed to be a mechanism that prevents attention continuously orienting to a previously attended location, to facilitate search of the environment, as when foraging for food, for example (Posner & Cohen, 1984).

Because gaze cueing effects can no longer be detected by around 1,000 ms after the gaze shift, it was assumed that gaze cues do not evoke inhibition of return. However, we proposed that because of the social relevance of gaze shifts, there might be a delay in the onset of inhibition. That is, in contrast to the meaningless sudden onset peripheral cues often used to orient attention, human gaze shifts usually carry meaning. For example, during a social interaction, if a person suddenly breaks eye contact and looks to the periphery, it is reasonable to hypothesize that some interesting/important information has captured their attention, or, more importantly, that some event of interest is about to happen. In such a situation it may not be wise to rapidly inhibit information from that location after a brief attention shift to it.

We therefore tested gaze cueing effects at much longer intervals than previously investigated. That is, we varied the interval between cue and target from 200 to 1,200 to 2,400 ms. At the shorter intervals we replicated the usual finding of facilitation of target processing at the gazed-at location at 200 ms, but no cueing effects at 1,200 ms. But of most importance we detected IOR for the first time when the cue–target interval was 2,400 ms (Frischen & Tipper, 2004). In general it appears that gaze and peripheral cues can produce similar patterns of excitation followed by inhibition. However, in the case of gaze cues the period of initial excitation is prolonged, and there is a delay in the emergence of inhibition (see also Frischen, Smilek, Eastwood, & Tipper, 2007b, for further boundary conditions).
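The logic of this measure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the cueing effect is taken as mean reaction time at the uncued location minus mean reaction time at the gazed-at location, the function names and the 5-ms threshold are hypothetical stand-ins for a proper statistical test, and the reaction times are invented for the example rather than taken from Frischen and Tipper (2004).

```python
from statistics import mean


def cueing_effect(rts_cued, rts_uncued):
    """Mean RT at the uncued location minus mean RT at the gazed-at location (ms)."""
    return mean(rts_uncued) - mean(rts_cued)


def classify(effect_ms, threshold_ms=5.0):
    """Label an effect; the threshold is an arbitrary stand-in for a significance test."""
    if effect_ms > threshold_ms:
        return "facilitation"
    if effect_ms < -threshold_ms:
        return "inhibition (IOR)"
    return "no reliable effect"


# Hypothetical reaction times (ms), invented purely for illustration.
trials = {
    200: {"cued": [310, 322, 305], "uncued": [330, 338, 329]},
    1200: {"cued": [318, 325, 321], "uncued": [320, 323, 322]},
    2400: {"cued": [335, 341, 338], "uncued": [322, 326, 324]},
}

# One label per cue-target interval.
summary = {
    soa: classify(cueing_effect(d["cued"], d["uncued"]))
    for soa, d in trials.items()
}

for soa, label in summary.items():
    print(f"{soa} ms: {label}")
```

A positive difference indicates faster responses at the gazed-at location (facilitation), whereas a negative difference indicates slower responses there, which is the signature of inhibition of return.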

Further studies examined whether social gaze cues have other properties in common with other forms of attention orienting. Previous work has shown that attention can be object based, where processes of excitation and inhibition are not just directed to spatial location, but can be associated with objects (e.g., Tipper, Brehaut, & Driver, 1990; Tipper, Driver, & Weaver, 1991). For example, Tipper, Weaver, Jerreat, and Burak (1994) showed that IOR could be associated both with a cued location and with a moving object. That is, people are slower to process an object they have recently attended to, even if it has moved to a new location. Therefore we examined whether gaze cueing could also result in multiple frames of reference, where attention could be shifted in spatial- and head-centred frames.

Consider the face display shown in Figure 11, Panel C. When asked the somewhat odd question, “Is this person looking to the right or left?”, many people tend to report “left” in this forced-choice situation. This suggests that when encoding a face that is not in its normal orientation there is an automatic orientation to the normal upright view, and in this situation this face would indeed be looking left. That is, there is a form of momentum where it is assumed the face will not remain in the unusual orientation, but will shortly return to the normal upright position. Eye-gaze cueing studies have confirmed this anecdotal observation. Thus in this condition target detection is indeed slightly faster to the left than to the right side of the display. This supports the notion that gaze cueing is head centred (Bayliss, di Pellegrino, & Tipper, 2004). Of course, we also measured location-based gaze cueing in this situation and showed that target detection was also faster in the lower looked-at than the upper ignored location (Bayliss & Tipper, 2006a).

An issue that has to be considered concerns the relationship between experimental effects we interpret as due to attention processes and the influence of memory on these processes (e.g., Tipper, 2001). One proposal has been that attention states, such as inhibition, can be encoded into memory and retrieved at a later point in time (e.g., Kessler & Tipper, 2004; Tipper et al., 2003). This notion of retrieval of prior processes of attention has now been extended to gaze cueing. When another person makes a gaze shift, we have argued that this evokes similar processes in the observer, such that their attention moves to the same location. Frischen and Tipper (2006) demonstrated that such attention states can be retrieved from memory in some circumstances. That is, they showed that after viewing an image of a famous person making a gaze shift to the left or right, when that face was encountered 3 minutes later staring straight ahead, attention shifted to the location they were looking at 3 minutes earlier. Although limited to somewhat specific circumstances (see Frischen & Tipper, 2006, for details), it appears not only that we simulate another person's attentional states, but these are encoded in memory and can be retrieved when the person is next encountered, possibly influencing how we perceive the person, as discussed below.

As noted, gaze direction is an important social cue, as it is key to understanding the intentions of other people. That encoding another person's gaze shifts is important for social interactions is supported by the finding that the development of joint attention from gaze cues at 20 months can predict language development and theory of mind abilities at 44 months (Charman et al., 2001). We have shown that the properties of the gaze cueing attention system are similar to those of other attention systems, where IOR, multiple frames of reference, and retrieval from memory have been demonstrated. In particular, that another person's motor states are simulated, and such simulations can be retrieved from memory, has implications for emotion and person perception as discussed below.

Action simulation evokes emotion

It is clear that observation of another person's actions such as reaching and grasping (di Pellegrino et al., 1992) and gaze shifts (e.g., Grosbras, Laird, & Paus, 2005) evokes similar processes in the viewer. The same neural systems appear to be involved in producing particular actions and in perceiving those actions. That our body states can be involved in interpreting the world, especially in understanding other people, reflects embodied or grounded accounts of social cognition (e.g., Barsalou, 2003; Glenberg, 1997). Such a theory makes specific predictions about emotional responses and interpretations of complex social stimuli, emerging from the bodily states of a person. This is especially the case for emotional responses, as the following examples make clear (see Niedenthal, 2007, for review). Stepper and Strack (1993) required participants to maintain either an upright posture with the shoulders held back, or a slumped posture. When given the good news that they had succeeded in an earlier test, those in the slumped posture felt less proud and reported more negative feelings, such as that it was not their achievement, but rather that the test was easy. Similarly, when participants were required to hold a pen between the teeth, producing activation of the muscles associated with smiling (zygomaticus), they reported that jokes were funnier than when holding the pen between the lips, which inhibits smiling (Strack, Martin, & Stepper, 1988). Hence, as argued above, the state of one's body can influence the interpretation of one's own behaviour and objects in the environment.

We have investigated the role of body states, especially those determined by simulation of other people's actions, in a number of ways. It has been established that emotion can affect attention. That is, the emotional property of a stimulus can influence attention orienting. Thus a negative target stimulus in an array of distractors is detected faster than a neutral or positive stimulus, presumably reflecting fast preattentive encoding of emotion by the amygdala (e.g., Fenske & Eastwood, 2003; Fox et al., 2000). However, we have been investigating the opposite relationship where the attention processes of a person, which are activated when observing another individual, can influence how they feel about objects or other people.

For example, gaze direction tends to reflect emotion. That is, gaze tends to settle on objects that we decide we like (e.g., Shimojo, Simion, Shimojo, & Scheier, 2003). Thus we wondered whether, as well as observed gaze shifts triggering shifts of attention in the viewer, there was also simulation of the emotional responses they might trigger, and that these emotions may leave a trace in memory. Therefore, we tested whether the gaze shifts of other people, even though irrelevant to ongoing behaviour, might be internalized and produce emotional reactions in the viewer, and that these emotions might influence assessment of objects at a later point in time. In one study faces were presented to the centre of the display, and the eyes shifted to look left or right. The participant's task was to identify a single everyday target object (e.g., kettle) presented to the left or right of the central face. However, unbeknown to the participant we manipulated the relationship between the direction of gaze and object to be classified. Participants classified two examples of each type of object, such as a green kettle and a red kettle, and throughout the experiment gaze was always oriented to one of the kettles (e.g., red) and on other trials always away from the other kettle (e.g., green).

Typical cueing effects were observed, confirming no explicit awareness of gaze–object contingencies. However, in a later test where we presented the pairs of objects and asked which was preferred, there was a tendency to select the object that had been looked at by other individuals throughout the study (Bayliss, Paul, Cannon, & Tipper, 2006). A further study showed that this preference effect interacted with the emotion of the face viewing the object. If the face was smiling, then objects repeatedly looked at were preferred over those looked away from. In contrast, if the face expressed disgust, then the object looked at was not preferred relative to the one where gaze consistently looked away from it (Bayliss, Frischen, Fenske, & Tipper, 2007). Critically, these effects on liking are not produced when an arrow predictably orients attention towards certain objects. Therefore, it appears as if the effect emerges from a simulation of the preferences of the person we are viewing, rather than just an effect of attention on speed of processing.

Further work extended the idea that simulation of other people’s actions can influence the emotional response to objects in the environment. As noted above, for survival in complex and dangerous environments, information able to guide action has to be extracted rapidly from the visual array. Actions that are a few milliseconds too slow, or a few millimetres in error, could result in death when fleeing a predator, for example. Given that conversion from vision to action is fundamental to survival, the fluency of such processes might evoke emotional responses, possibly mediated by dopamine networks. Therefore we investigated whether the simulation of others’ actions might involve the fluency/ease of the action undertaken by another person, and the simulation of such actions might also evoke an emotional response in the observer that is similar to when an action is produced fluently (Hayes, Paul, Beuger, & Tipper, 2008).

In a first study we wanted to demonstrate that fluent/easy actions do indeed result in greater liking of the object acted upon, as embodied or grounded accounts of cognitive processes would predict. The task simply required participants to pick up an everyday consumer product, such as a jar of coffee, and move it to a different location. Two conditions were examined. In the first (see Figure 12, Panel B on the right), the reach was difficult in the sense that the object had to be moved around and placed behind an obstacle. The obstacle was a thin glass vase containing water, so a cautious reach was required. In the second condition (see Figure 12, Panel A on the left), the reach was easier, as no obstacle had to be avoided. Once the object was placed on the table, participants rated it for liking on a 9-point scale.

Figure 12.

Frames from video displays employed to study simulation of emotion emerging from action fluency. Panel A shows an easy reach where no obstacle has to be avoided. Panel B shows a difficult reach where the object has to be moved around and placed behind a fragile object. After viewing such displays participants tended to prefer objects after easy reaches were observed (Panel A), but only if the face gazing towards the action was visible. To view a colour version of this figure, please see the online issue of the Journal.

The fluency of this very simple motor task did influence how people felt about the object they had interacted with. Those objects in the more difficult obstacle avoidance condition were generally liked less than those in the easier no obstacle condition. Therefore fluency of action can have effects on emotion, where motor fluency is associated with the object acted upon, and participants like the object more. The critical question was, of course, what happened when such actions were merely observed, and participants made no motor responses throughout the experiment? In this new task video clips of the reaches shown in Figure 12 were presented, and participants simply sat passively observing the clip and gave liking ratings after the object was placed back on to the table. Therefore any effects on the liking of an object must be due to simulation of observed actions. We found that just as when actually moving objects around, people prefer objects after a simple action has been performed on them. Therefore this suggests that not only is action simulated when it is observed, but also the emotional effects of fluent action are simulated.

Finally we also considered the critical importance of gaze direction in these studies. As noted above, observing another person merely looking towards an object can influence how a participant feels about the object (e.g., Bayliss et al., 2006). In the current studies we also found that the action fluency manipulation only influenced a person's liking rating of the viewed object if the person's face in the video clip was visible. There were two effects: First, when the person was not seen to be looking towards the grasped objects, overall liking of objects was significantly less than when gaze towards the object was visible, confirming previous results. Second, when gaze was not visible, the contrast between fluent and nonfluent actions was no longer detected. Such findings are in line with the single unit recording studies of Jellema, Baker, Wicker, and Perrett (2000). They found a population of cells in STS that responded when a particular reach-to-grasp action was viewed, but only when the individual producing the action was seen to look towards the action. Note also that in our conditions of visible or occluded eye-gaze the reach-to-grasp actions are identical, and hence preference effects for fluent and nonfluent actions are not caused only by some basic visual properties of the action; the actor's attention and intention are also critical.

We also investigated whether the viewpoint of an action could influence emotional responses. Therefore we presented images similar to those just described, where an object was grasped and moved. In this case, however, we compared first-person egocentric views (Figure 13) with third-person allocentric views (Figure 12). Previous work has shown that when actions are viewed from an egocentric first-person perspective subsequent imitation is faster and more accurate, indicating that the visual properties of the movements can be transformed into one's own action more fluently (Jackson, Meltzoff, & Decety, 2006). But even more interestingly, there is evidence that viewing actions from a first-person perspective activates different brain areas, especially those involved in the perception of bodily states and those involved in emotional processing. That is, in contrasting first- versus third-person views of action, David et al. (2006) showed greater activation in midline structures such as the medial prefrontal cortex (MPFC) and orbitofrontal cortex, areas thought to be core components for the sense of self, as well as in cingulate cortex and the posterior insula, which are associated with monitoring states of the body. They also observed greater activity when viewing first-person action in limbic structures such as the amygdala, areas associated with emotional encoding of stimuli.

Figure 13.

A frame from a video showing reaches from an egocentric perspective. We found that objects were liked more when participants viewed actions from this egocentric perspective than from the allocentric third-person view described in Figure 12. To view a colour version of this figure, please see the online issue of the Journal.

Therefore we predicted that when viewing actions from a first-person perspective there would be more activation of emotion centres in the brain, greater somatosensory/proprioceptive “feelings” of doing the action, and a greater sense of fluency. We hypothesized that such processes would result in more ease during such action observation, and perhaps such positive affect would be associated with the manipulated object. Just such effects were indeed observed. People consistently reported that when viewing actions, they preferred objects that were manipulated from a first-person egocentric perspective more than those manipulated from a third-person allocentric viewpoint. This further supports the idea that body states activated by merely observing a visual object being manipulated can feed back on to the object viewed, influencing how a person feels about the object.

It is noteworthy that our measures of emotion in the studies described above were explicit. That is, participants were required to think about how they felt about objects or people and attempt to provide a number from a scale that reflects their emotional feelings. This of course is a somewhat artificial situation, as we do not respond in such a controlled and conscious manner when we typically interact with our everyday environment. Rather, many of our emotional reactions are not carefully scrutinized, but guide our behaviour towards and away from objects and situations in an implicit manner.

Therefore we reexamined situations with different visuomotor fluency, but now used implicit/nonconscious measures of liking. Thus we employed electromyography (EMG) to record from the zygomaticus and corrugator face muscles (Cannon, Hayes, & Tipper, in press). As noted before, these muscles reflect our emotions, with greater activity in the zygomaticus cheek muscle associated with smiling when we are viewing someone else smiling, or when perceptual processing is more fluent (e.g., Winkielman & Cacioppo, 2001). Thus recording of facial muscles enables us to detect a person's subtle emotional reactions. We found similar effects during vision-to-action processing depending on the fluency/ease of the action. That is, when actions were a little faster and more accurate the zygomaticus muscle became more active, reflecting the participant's positive emotional reaction to such action fluency. This was an implicit measure of emotion, as at no time during the recording of the EMGs were participants ever asked to consider their feelings towards objects that they viewed.

In sum, these studies show that positive affect can be evoked when one is interacting with objects, or when merely observing another person interact with an object, and this can be detected with explicit conscious reports and with implicit nonconscious EMG measures. The emotional response emerging from a body state, in this case action fluency, may be the mechanism by which long-term preferences emerge. Thus, the emotion evoked by action fluency informs the actor about the environment and reinforces fluent action and the selection of objects that aid fluent action. Finally, the emotion effects of viewing action only emerge when the actor is seen to look at the object, supporting the notion that shared attention between the actor and observer is a necessary requirement for actions to be simulated and for empathic states between people to be achieved.

Action simulation and person perception

In the studies just described, simulation of another person's actions, such as their direction of gaze/attention, or the fluency of their actions, influences how we feel about objects they attend and act on. However, we also wanted to investigate whether such simulation processes could also influence how people interpret other people. That is, could personal traits such as trustworthiness, or interests/skills, be influenced via action observation and the resulting internal simulations?

To examine trust we again manipulated probability of gaze cueing. However, unlike the studies above, which manipulated whether particular objects were always looked at or always ignored, we now manipulated whether a particular person always looked towards or always looked away from targets in the visual field (Bayliss & Tipper, 2006b). Even though gaze direction is highly predictive of another person's point of interest, and hence extremely valuable during social interactions, gaze direction can also be used to deceive (e.g., Emery, 2000). For example, an individual wishing to hide from another person something of value, such as food, would tend to look away from the point of interest.

In our experiment there were three sets of faces. In the 50/50 group, each face gazed towards or away from to-be-identified target objects equally often. This is the standard procedure employed in gaze cueing studies. In a second group, each face always looked towards targets over 12 presentations; this was the predictive group. And in the third group of faces, the individuals never looked towards targets, always looking in the opposite direction across 12 exposures, and this was the nonpredictive group.
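The three-group design can be made concrete with a short sketch. This is an illustrative reconstruction only, not the procedure code used in the study: the function name, face labels, left/right target positions, and the use of 12 exposures for the 50/50 group are all assumptions (the text gives 12 exposures only for the predictive and nonpredictive groups).

```python
import random

# Condition labels follow the text; everything else here is hypothetical.
CONDITIONS = ("predictive", "50/50", "nonpredictive")


def build_gaze_schedule(face_ids, n_exposures=12, seed=0):
    """Assign faces to the three gaze conditions and return a shuffled trial list."""
    rng = random.Random(seed)
    trials = []
    for index, face in enumerate(face_ids):
        condition = CONDITIONS[index % len(CONDITIONS)]
        if condition == "predictive":
            # This face looks towards the target on every exposure.
            looks_at_target = [True] * n_exposures
        elif condition == "nonpredictive":
            # This face looks away from the target on every exposure.
            looks_at_target = [False] * n_exposures
        else:
            # 50/50: half of the exposures towards, half away (assumed count).
            half = n_exposures // 2
            looks_at_target = [True] * half + [False] * (n_exposures - half)
        for valid in looks_at_target:
            target_side = rng.choice(["left", "right"])
            other_side = "right" if target_side == "left" else "left"
            trials.append({
                "face": face,
                "condition": condition,
                "target_side": target_side,
                "gaze_side": target_side if valid else other_side,
            })
    rng.shuffle(trials)  # intermix faces and conditions across the session
    return trials


schedule = build_gaze_schedule(["face_a", "face_b", "face_c"])
```

With three hypothetical faces, `schedule` holds 36 trials, one face per condition. Fixing the random seed keeps the schedule reproducible across runs.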

Surprisingly, in terms of cueing, whether faces always looked towards or always looked away from targets made absolutely no difference, as the cueing effects were the same as those typically found with 50/50 faces. Furthermore, not only were attention shifts unaffected by gaze probability, but participants were also unaware that the faces differed when questioned in a debriefing session. Hence it would appear that we are insensitive to whether another person was reliable and always looked towards targets and hence aided our behaviour, or whether a person always looked away from targets, orienting our attention in the wrong direction. However, when we presented pairs of faces, such that one had always looked to targets, and the other never had, and then asked who was more trustworthy, participants tended to select the face that had looked at targets.

Interestingly, in a follow-on study we did not initially replicate this effect, but then discovered that the detection of a person's trustworthiness only seems to take place when they are smiling, rather than expressing a negative or neutral emotion (Bayliss, Griffiths, & Tipper, 2009). It may be the case that in the context of friendly smiling faces, breaking of social norms when looking away from objects is a more salient cue to deception. Certainly a person attempting to deceive another is more likely to smile to encourage trust (consider the second-hand car salesman); hence sensitivity to cues to trust would need to be high, relative to an explicitly hostile encounter when viewing an angry person. Furthermore, this latter result would tend to rule out more general associative effects, where, for example, arrows of a particular colour that always pointed to targets would be “trusted” more. That an individual's emotion can alter the cueing/trust effect supports the role of gaze perception in sophisticated and complex social interactions.

Finally we wanted to further test predictions that emerge from embodied accounts of perception and action and extend the results from trustworthiness to other personal traits. That is, could the fluency of the visual–motor processing states of a person influence how they interpret another person's personal attributes/interests? In other words, can one's own body state be misattributed to that of another person? A version of the task described in Figure 5 was used. However, in this situation no colour patches were presented, and the actions were shown as short video clips rather than static images. Participants were required to identify the two individuals “George” and “John” with either a foot or a finger response. In the first part of the experiment we replicated motor compatibility effects. Thus, when participants were identifying George with a foot response, responses were faster and more accurate when a video of George kicking a ball was viewed than of him typing on a keyboard. Of course the opposite result was found for John: When participants were identifying him with a finger key-press, responses were faster and more accurate when he was viewed typing than when kicking a ball.
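As a rough illustration of how such a motor compatibility effect is typically quantified, the sketch below subtracts the mean response time on compatible trials (response effector matches the observed action) from the mean on incompatible trials. The field names and the response times are placeholders invented for the example. They are not data or analysis code from the study.

```python
from statistics import mean


def compatibility_effect(trials):
    """Mean RT on incompatible trials minus mean RT on compatible trials (ms)."""
    compatible = [t["rt_ms"] for t in trials if t["response"] == t["observed"]]
    incompatible = [t["rt_ms"] for t in trials if t["response"] != t["observed"]]
    return mean(incompatible) - mean(compatible)


# Placeholder values for illustration only; NOT results from the experiment.
# "observed" codes the effector of the viewed action (kicking = foot, typing = finger).
example_trials = [
    {"response": "foot", "observed": "foot", "rt_ms": 500},
    {"response": "foot", "observed": "foot", "rt_ms": 520},
    {"response": "foot", "observed": "finger", "rt_ms": 540},
    {"response": "foot", "observed": "finger", "rt_ms": 560},
]
effect_ms = compatibility_effect(example_trials)
```

A positive `effect_ms` means responses were faster when the observed action matched the responding effector, which is the direction of the effect described above.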

However, the next stage of the study was more important. Faces of George and John were presented, and participants were required to rate how sporty or academic they felt each person was. We discovered that the participant's motor fluency while identifying George and John in the first part of the experiment determined what kind of people they subsequently felt George and John were. That is, if George was more fluently identified with a foot response while he was kicking a ball and more slowly with greater errors when seen typing, George was considered to be a sporty rather than academic person. For John the opposite personal-trait assignments were produced (Bach & Tipper, 2007).

Not only were these personal-trait effects observed with explicit decisions when participants were rating George and John on sporty/academic scales, but they were also detected with implicit measures in a priming study where participants were never asked to explicitly rate George and John. Further studies by Tipper and Bach (2008) demonstrated that the effects were not determined by association of motor responses with personal traits, such as foot responses associated with sport (kicking, running, jumping, etc.) and finger responses more associated with academic pursuits (typing, writing, etc.). And finally, the effect required observation of the person actually producing the actions of kicking or typing in a video clip, as static images of single action frames did not produce the personal-trait effects.

Therefore these experiments show that the effects of observing another person's behaviours can go in two directions. Initially there is simulation of the motor processes that another person is undertaking. When we see them kick the ball, our own motor responses for such an action become active, facilitating foot responses. However, the motor states activated in the perceiver are in turn used to interpret the actions of the person viewed: If our action is more fluid and efficient, we perceive the other person to be better at what they are currently doing. Evidence that our own body states influence how we perceive the world supports embodiment accounts of cognitive processes (e.g., Barsalou, 2003).

CONCLUSION

This paper has reviewed a range of studies that my colleagues and I have undertaken over recent years. The central focus has been to investigate the processes that convert visual information into action-based representations—in particular the situations where people encounter objects associated with action: that is, objects a person typically acts on, such as grasping and drinking from a cup, and the actions that other people produce. Understanding how these stimuli are processed is crucial for basic core interactions with our inanimate world, but is also key to our understanding of other people and hence our human ability to thrive within social groups.

A key focus of the research programme has been to investigate the role of attention in these processes. It appears that conversion from vision to action is automatic in the sense that it takes place even when a person has no intention to produce an action, but nevertheless attention plays an important role. That is, first, when one attends to an action, stronger action-based representations are activated, and, second, inhibitory processes play an important role in controlling overt behaviour while viewing action-evoking objects or other people's actions. Clearly the most obvious place where attention is key to action understanding is in terms of gaze shifts. Actually observing someone else's behaviour, such as a sudden look to the left, automatically triggers a shift of attention to the same location in the viewer. Our studies show that these gaze attention systems have similar properties to other attention systems, such as evoking IOR, but interestingly they also have unique properties such as evoking emotional reactions to objects looked towards, or emotional responses (e.g., trust) to the person seen to make the gaze shift. These and other results support the notion of embodied representations mediating perception. Thus, body states are activated when viewing visual stimuli, and these body states are in turn used to interpret our visual world containing objects and people.

Future work should investigate the interactions between action-encoding regions such as the STS and brain areas involved in emotional encoding, such as the amygdala and the orbitofrontal cortex. It is possible that emotional responses evoked during visuomotor processes are key to whether body states influence perception. That is, it is not just more fluent visuomotor processing that makes us like an object more, trust a person more, or believe a person has particular traits. Rather, I hypothesise that only if a positive emotion is evoked by fluent visuomotor processes are such effects obtained. Techniques such as EMG to directly monitor emotion online will confirm that emotion mediates many of the effects described in this paper.

Acknowledgments

The work described in this article was supported by the Economic and Social Research Council (ESRC; RES–000–23–0429) and Wellcome Trust (071924/z/03/z). I am indebted to the recent contributions of the following students and research collaborators: Patric Bach, Andrew Bayliss, Peter Cannon, Alexandra Frischen, Debbie Griffiths, Amy Hayes, Matthew Paul, and Stefanie Schuch.

REFERENCES

- Bach P., Peatfield N., Tipper S. P. Focusing on body sites: The role of spatial attention in action perception. Experimental Brain Research. 2007;178:509–517. doi: 10.1007/s00221-006-0756-4.

- Bach P., Tipper S. P. Bend it like Beckham: Embodying the motor skills of famous athletes. Quarterly Journal of Experimental Psychology. 2006;59:2033–2039. doi: 10.1080/17470210600917801.

- Bach P., Tipper S. P. Implicit action encoding influences personal-trait judgments. Cognition. 2007;102:151–178. doi: 10.1016/j.cognition.2005.11.003.

- Barsalou L. W. Situated simulation in the human conceptual system. Amsterdam: Elsevier; 2003.

- Bayliss A. P., di Pellegrino G., Tipper S. P. Orienting of attention via observed eye-gaze is head-centred. Cognition. 2004;94:B1–B10. doi: 10.1016/j.cognition.2004.05.002.

- Bayliss A. P., Frischen A., Fenske M. J., Tipper S. P. Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition. 2007;104:644–653. doi: 10.1016/j.cognition.2006.07.012.

- Bayliss A. P., Griffiths D., Tipper S. P. Predictive gaze cues affect face evaluation: The effects of facial emotion. The European Journal of Cognitive Psychology. 2009;21:1072–1084. doi: 10.1080/09541440802553490.

- Bayliss A. P., Paul M. A., Cannon P. R., Tipper S. P. Gaze cueing and affective judgments: I like what you look at. Psychonomic Bulletin & Review. 2006;13:1061–1066. doi: 10.3758/bf03213926.

- Bayliss A. P., Tipper S. P. Gaze cues evoke both spatial and object-centred shifts of attention. Perception & Psychophysics. 2006a;68:310–318. doi: 10.3758/bf03193678.

- Bayliss A. P., Tipper S. P. Predictive gaze cues and personality judgements: Should eye trust you? Psychological Science. 2006b;17:514–520. doi: 10.1111/j.1467-9280.2006.01737.x.

- Blakemore S. J., Decety J. From the perception of action to the understanding of intention. Nature Reviews Neuroscience. 2001;2:561–567. doi: 10.1038/35086023.

- Brass M., Bekkering H., Wohlschlager A., Prinz W. Compatibility between observed and executed finger movements: Comparing symbolic spatial and imitative cues. Brain & Cognition. 2000;44:124–143. doi: 10.1006/brcg.2000.1225.

- Bub D. N., Masson M. E. J. (in press). Grasping beer mugs: On the dynamics of alignment effects induced by handled objects. Journal of Experimental Psychology: Human Perception & Performance. doi: 10.1037/a0017606.

- Cannon P. R., Hayes A. E., Tipper S. P. An electromyographic investigation of the impact of task relevance on facial mimicry. Cognition & Emotion. 2009;23:918–929.

- Cannon P. R., Hayes A. E., Tipper S. P. (in press). Sensorimotor fluency influences affect: Evidence from electromyography. Cognition & Emotion. Advance online publication. doi: 10.1080/02699930902927698.

- Charman T., Baron-Cohen S., Swettenham J., Baird G., Cox A., Drew A. Testing joint attention, imitation and play as infancy precursors to language and theory of mind. Cognitive Development. 2001;15:481–498.

- David N., Bewernick B. H., Cohen M. X., Newen A., Lux S., Fink G. R. Neural representations of self versus other: Visual-spatial perspective taking and agency in a virtual ball tossing game. Journal of Cognitive Neuroscience. 2006;18:898–910. doi: 10.1162/jocn.2006.18.6.898.

- Dimberg U., Thunberg M. Rapid facial reactions to emotional facial expressions. Scandinavian Journal of Psychology. 1998;39(1):39–45. doi: 10.1111/1467-9450.00054.

- Dimberg U., Thunberg M., Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychological Science. 2000;11(1):86–89. doi: 10.1111/1467-9280.00221.

- di Pellegrino G., Fadiga L., Fogassi L., Gallese V., Rizzolatti G. Understanding motor events: A neurophysiological study. Experimental Brain Research. 1992;91:176–180. doi: 10.1007/BF00230027.