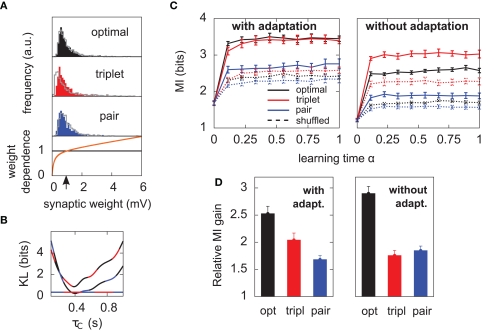

Figure 5.

Results hold for “soft-bounded” STDP. The experiments of Figure 4 are repeated with soft bounds on the synaptic weights (see Materials and Methods). (A) Bottom: LTP is weight-independent (black line), whereas the amount of LTD prescribed by each learning rule (Δw < 0) is modulated by an increasing function of the momentary weight value (orange curve). The LTP and LTD curves cross at $w_0 = 1$ mV, which is also the initial value of the weights in our simulations. Top: this form of weight dependence produces unimodal but skewed distributions of synaptic weights after learning, for all three learning rules. The learning paradigm is the same as in Figure 4. Gray lines denote the weight distributions when adaptation is switched off. Histograms are computed by pooling the weight values from all learning experiments, but the distributions look similar in individual experiments. In these simulations, λ = 0, a = 9, and $\tau_C = 0.4$ s. (B) The parameter $\tau_C$ of the optimal learning rule was chosen such that the weight distribution after learning stays as close as possible to those of the pair and triplet models: $\tau_C = 0.4$ s minimizes the KL divergences between the distribution obtained from the optimal model and those obtained from the pair (black-blue) and triplet (black-red) learning rules. These divergences are then nearly as small as the triplet-pair divergence (red-blue). (C) MI over the course of learning in this weight-dependent STDP scenario (cf. Figures 4B,C). (D) Normalized information gain (see text for definition).
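The soft-bound scheme of panel A can be illustrated with a minimal sketch. It assumes a hypothetical linear weight dependence $f(w) = w / w_0$ for LTD, chosen only because it is increasing and equals 1 at $w_0$, so that LTP and LTD magnitudes cross there. The actual function is given in Materials and Methods and may differ. The names `ltd_scale`, `soft_bounded_update`, and `simulate`, the step size `eta`, and the random ±`eta` updates are illustrative assumptions, not the learning rules of the paper.

```python
import random
import statistics


def ltd_scale(w: float, w0: float = 1.0) -> float:
    # Hypothetical increasing weight dependence for LTD (assumption):
    # equals 1 at w == w0, so LTP and LTD magnitudes cross at w0.
    return w / w0


def soft_bounded_update(w: float, dw_rule: float, w0: float = 1.0) -> float:
    """Apply a raw update dw_rule from some learning rule with soft bounds."""
    if dw_rule >= 0.0:
        return w + dw_rule  # LTP: weight-independent
    return w + dw_rule * ltd_scale(w, w0)  # LTD: scaled by the momentary weight


def simulate(n_syn: int = 500, n_steps: int = 2000, eta: float = 0.01,
             w0: float = 1.0, seed: int = 0) -> list[float]:
    """Toy drive (assumption): each synapse receives +eta or -eta with equal probability."""
    rng = random.Random(seed)
    weights = [w0] * n_syn  # all weights start at w0, as in the caption
    for _ in range(n_steps):
        for i in range(n_syn):
            dw = eta if rng.random() < 0.5 else -eta
            weights[i] = soft_bounded_update(weights[i], dw, w0)
    return weights
```

Under this toy drive the expected change per step is $\tfrac{\eta}{2}(1 - w/w_0)$, which is zero at $w_0$. Weights therefore fluctuate around $w_0$ rather than drifting to hard bounds, and multiplicative LTD keeps them positive. For example, `ws = simulate()` followed by `statistics.mean(ws)` gives a value close to 1.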
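The selection of $\tau_C$ in panel B amounts to a grid search that minimizes histogram-based KL divergences. The sketch below is one possible version and rests on several assumptions. It uses shared bins and additive smoothing `eps` to avoid $\log 0$. It takes the direction $D_{\mathrm{KL}}(\text{optimal} \,\|\, \text{reference})$, since the caption does not state which direction is used. It sums the divergences to the pair and triplet distributions. The helper names `histogram`, `kl_divergence`, and `best_tau` are hypothetical.

```python
import math


def histogram(samples, lo, hi, n_bins):
    """Count samples in n_bins equal bins on [lo, hi]; out-of-range values are clamped to the edge bins."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for x in samples:
        k = int((x - lo) / width)
        counts[min(max(k, 0), n_bins - 1)] += 1
    return counts


def kl_divergence(p_counts, q_counts, eps=1e-10):
    """D_KL(p || q) between two histograms, with additive smoothing eps (assumption)."""
    n = len(p_counts)
    p_tot = sum(p_counts) + eps * n
    q_tot = sum(q_counts) + eps * n
    kl = 0.0
    for pc, qc in zip(p_counts, q_counts):
        p = (pc + eps) / p_tot
        q = (qc + eps) / q_tot
        kl += p * math.log(p / q)
    return kl


def best_tau(candidates, reference_samples, lo, hi, n_bins=50):
    """candidates: dict mapping tau_C -> final weights of the optimal rule.
    reference_samples: final weights of the reference rules (e.g. pair, triplet)."""
    refs = [histogram(s, lo, hi, n_bins) for s in reference_samples]

    def total_divergence(tau):
        h = histogram(candidates[tau], lo, hi, n_bins)
        return sum(kl_divergence(h, r) for r in refs)

    return min(candidates, key=total_divergence)
```

Summing the two divergences is one simple way to trade off closeness to both reference rules. A weighted sum or the maximum of the two would be equally valid choices under this sketch.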