Abstract

Sensory information in the retinal image is typically too ambiguous to support visual object recognition by itself. Theories of visual disambiguation posit that to disambiguate, and thus interpret, the incoming images, the visual system must integrate the sensory information with previous knowledge of the visual world. However, the underlying neural mechanisms remain unclear. Using functional magnetic resonance imaging (fMRI) of human subjects, we have found evidence for functional specialization for storing disambiguating information in memory versus interpreting incoming ambiguous images. Subjects viewed two-tone, “Mooney” images, which are typically ambiguous when seen for the first time but are quickly disambiguated after viewing the corresponding unambiguous color images. Activity in one set of regions, including a region in the medial parietal cortex previously reported to play a key role in Mooney image disambiguation, closely reflected memory for previously seen color images but not the subsequent disambiguation of Mooney images. A second set of regions, including the superior temporal sulcus, showed the opposite pattern, in that their responses closely reflected the subjects' percepts of the disambiguated Mooney images on a stimulus-to-stimulus basis but not the memory of the corresponding color images. Functional connectivity between the two sets of regions was stronger during those trials in which the disambiguated percept was stronger. This functional interaction between brain regions that specialize in storing disambiguating information in memory versus interpreting incoming ambiguous images may represent a general mechanism by which previous knowledge disambiguates visual sensory information.

Introduction

The perception of two-tone or “Mooney” images provides a striking demonstration of visual disambiguation through the application of previous knowledge. When viewed for the first time, a Mooney image appears to be an unrecognizable pattern of black and white regions. However, after viewing a full color or gray-level version of the Mooney image, it becomes easy to interpret. The disambiguation of the pattern of black and white regions is rapid and can be extremely compelling (Gregory, 1973; Dolan et al., 1997; Moore and Cavanagh, 1998; Andrews and Schluppeck, 2004; McKeeff and Tong, 2007; Hsieh et al., 2010) (see Fig. 1A).

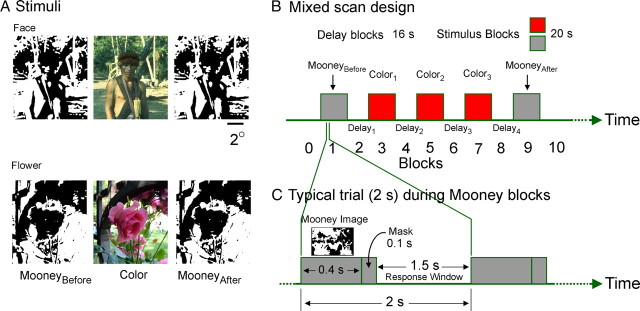

Figure 1.

Stimuli and scan paradigm. A, Stimuli. In the main experiment (shown in this panel), subjects viewed two-tone (Mooney) versions of natural images of faces or flowers before and after viewing the full color counterparts of the same images. In the control experiment (data not shown), the color images were unrelated to the Mooney images. B, The events-within-block (i.e., mixed) scan design. Each scan featured 10 unfamiliar images of either faces or flowers presented in blocks (rectangles), with three color image blocks interspersed between the two Mooney image blocks. During each 20 s stimulus block, stimuli were presented every 2 s. Each stimulus block was preceded and followed by a 16 s delay block. C, Composition of the Mooney blocks. Each Mooney block consisted of 10 consecutive 2 s trials during which the subjects rated each Mooney image on a scale of 1 (most ambiguous) to 4 (least ambiguous). During the color blocks, the subjects viewed the color counterparts of the same set of images presented for 2 s each in a reshuffled order (data not shown) but did not rate the stimuli. For details, see Materials and Methods.

Learning to interpret Mooney images can be understood as an extreme case of knowledge-mediated disambiguation that is part and parcel of normal visual perception (Gregory, 1973; Cavanagh, 1991; Dolan et al., 1997; Moore and Cavanagh, 1998; Epshtein et al., 2008). Natural images have inherent objective ambiguities regarding the scene causes of their features, yet our subjective interpretation of an image is rarely ambiguous (Kersten and Yuille, 2003). Furthermore, not all causes are equally informative. For example, an image edge can be caused by a change in illumination or a change in depth, but depth change (or orientation or material) is a more useful source of information for recognition (Biederman, 1985; Naor-Raz et al., 2003). Computational vision studies have shown that unambiguous image interpretation (or “parsing”) can be achieved through scene knowledge-mediated selection and integration of multiple weak cues (Tu et al., 2005; Epshtein et al., 2008). Thus, disambiguation likely occurs at multiple levels, in particular at the levels of both feature selection and decision stages of recognition. By introducing subjective ambiguity through drastic bit reduction, not dissimilar to night vision, Mooney images provide a useful tool for investigating how the human visual system uses previous knowledge to turn an ambiguous image into an interpretable scene. Changes in illumination or depth, for example, are now highly confusable, both being represented by sharp changes from white to black. Mooney quantization reduces the amount and quality of local features, necessitating greater reliance on previous knowledge for accurate interpretation (Cavanagh, 1991).

Several previous studies have identified multiple brain regions, including regions in the temporal lobe and the medial parietal cortex, that are preferentially responsive to Mooney images before versus after disambiguation (Dolan et al., 1997; Moore and Engel, 2001; Andrews and Schluppeck, 2004; McKeeff and Tong, 2007; Hsieh et al., 2010), indicating that these regions play a role in Mooney image disambiguation. However, the precise roles these regions play in this process remain unclear. For instance, do these regions play mutually equivalent, redundant roles, or are they specialized for various aspects of the disambiguation task? Does the same set of regions both store the disambiguating information in memory and subsequently apply it to disambiguating Mooney images? Conversely, if the regions are functionally specialized, how do they interact during disambiguation, and how are the neural activity of the various regions and the interactions among them related to disambiguation at the perceptual level?

Using functional magnetic resonance imaging (fMRI) of human subjects during Mooney image disambiguation, we report here evidence not only for a specialization between the memory and disambiguation functions but also for a disambiguation-specific link between them.

Materials and Methods

Subjects and fMRI

Ten adult human subjects (five females) with normal or corrected-to-normal vision participated in this study. All subjects gave prior informed consent. All protocols used in the study were approved in advance by the Institutional Review Board of the University of Minnesota.

Scans were performed as described previously (Hegdé et al., 2008). Briefly, blood oxygenation level-dependent (BOLD) signals were measured with an echo-planar imaging sequence (echo time, 30 ms; repetition time, 2000 ms; field of view, 220 × 220 mm²; matrix, 64 × 64; flip angle, 75°; slice thickness, 3 mm; interslice gap, 0 mm; number of slices, 28; slice orientation, axial, with the lowest slice below the bottom of the temporal lobes).

Stimuli and task

Stimuli (8° × 10° or 10° × 8° for pictures in landscape or portrait format, respectively) were unfamiliar natural images of faces or flowers (Corel Photo CD Library; Corel Corp.) or custom-generated two-tone versions thereof (Fig. 1A). Images were chosen so that a given image contained a face or a flower in the foreground and covered ∼15–60% of the area of the image. This meant that the images typically also contained other body/floral parts, given that the images were actual photographs.

Face images were chosen because the cortical responses associated with face perception in Mooney images have been well studied (Tovee et al., 1996; Dolan et al., 1997; Moore and Cavanagh, 1998; Andrews and Schluppeck, 2004; Jemel et al., 2005; McKeeff and Tong, 2007; Hsieh et al., 2010). Flower stimuli, rather than a broader set of objects (Dolan et al., 1997), were chosen to help control within-category perceptual variance, because some previous studies have reported that within-category perceptual variance has important effects on category-specific brain responses, although this has been debated (Rossion and Jacques, 2008 and references therein). Indeed, post hoc analyses of behavioral data (Table 1) indicated that face and flower stimuli were indistinguishable in terms of the variance in ratings and reaction times (F tests, p > 0.05). Flowers, as opposed to another category of natural objects, were used because flowers are a familiar category of natural objects and tend to have stereotypical shapes in a manner broadly analogous to faces.

Table 1.

Behavioral results

| Response measure | MooneyBefore: Faces | MooneyBefore: Flowers | MooneyAfter: Faces | MooneyAfter: Flowers |
|---|---|---|---|---|
| Main experiment | | | | |
| Mean ± SEM rating (1–4 scale) | 0.82 ± 0.05 | 0.73 ± 0.04 | 3.05 ± 0.07 | 2.80 ± 0.08 |
| Reaction time ± SEM (ms) | 1158 ± 13 | 1166 ± 13 | 1227 ± 13 | 1201 ± 12 |
| Control experiment | | | | |
| Mean ± SEM rating (1–4 scale) | 0.81 ± 0.6 | 0.79 ± 0.7 | 1.15 ± 0.12 | 1.07 ± 0.16 |
| Reaction time ± SEM (ms) | 1142 ± 18 | 1148 ± 17 | 1161 ± 16 | 1171 ± 17 |

Each scan consisted of a series of five stimulus blocks alternating with six delay blocks (Fig. 1B). The stimulus blocks lasted 20 s each, and the delay blocks lasted 16 s each. Each scan followed an “events-within-block,” or mixed, design, in which each stimulus block consisted of 10 consecutive 2 s trials (Donaldson et al., 2001; Dosenbach et al., 2006) (Fig. 1C). The stimulus blocks consisted of a MooneyBefore block followed by three color blocks and by a MooneyAfter block. We used three color blocks because our pilot studies showed that this many blocks were needed to produce a reasonably consistent asymptotic Mooney learning, i.e., a mean disambiguation rating of ≥3.0 in ≥75% of MooneyAfter blocks for each subject (see below). The two Mooney blocks in a given scan consisted of an identical set of Mooney images but in randomized order.
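The block structure described above can be laid out as a simple timeline. The following is a minimal sketch that uses only the durations stated in the text; the function and variable names are illustrative and are not taken from the study's software.

```python
# Timing values come from the text; all names here are illustrative.
STIM_S = 20   # duration of each stimulus block (s)
DELAY_S = 16  # duration of each delay block (s)
TRIAL_S = 2   # duration of each trial within a stimulus block (s)
STIM_LABELS = ["MooneyBefore", "Color1", "Color2", "Color3", "MooneyAfter"]


def build_scan_timeline():
    """Return (label, onset_s, duration_s) tuples for one scan."""
    timeline = []
    t = 0
    for label in STIM_LABELS:
        # Every stimulus block is preceded by a delay block.
        timeline.append(("Delay", t, DELAY_S))
        t += DELAY_S
        timeline.append((label, t, STIM_S))
        t += STIM_S
    # The last stimulus block is also followed by a delay block.
    timeline.append(("Delay", t, DELAY_S))
    return timeline


def trial_onsets(block_onset_s):
    """Onsets of the 10 consecutive 2 s trials inside a stimulus block."""
    return [block_onset_s + i * TRIAL_S for i in range(STIM_S // TRIAL_S)]


timeline = build_scan_timeline()
total_s = timeline[-1][1] + timeline[-1][2]
print(len(timeline), "blocks,", total_s, "s per scan")
```

Five 20 s stimulus blocks and six 16 s delay blocks give 11 blocks and 196 s of block time per scan.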

We performed two experiments. In the “main” experiment, the color blocks consisted of full color versions of the corresponding Mooney images, also in a randomized order. In the “control” experiment, the color images were unrelated to Mooney images.

During each given scan of either experiment, 10 novel images of either faces or flowers (but never both) were presented. During Mooney blocks, stimuli were presented for 0.4 s each within 2 s trials (Fig. 1C), and the subjects rated the Mooney images on an ordinal scale, ranging from 1 (most ambiguous) to 4 (least ambiguous). During the color blocks, subjects did not rate the images but instead had to view carefully the color images that were presented for 2 s each. Each subject underwent four scans each for faces and flowers in the main experiment and two scans each in the control experiment.

Data analyses

Data were analyzed as described previously (Hegdé et al., 2008) except as noted otherwise. Briefly, data analyses were performed using SPM5 (www.fil.ion.ucl.ac.uk/spm), BrainVoyager (www.brainvoyager.com), or custom-written software. For response comparisons involving MooneyBefore or MooneyAfter, selection bias is a potential concern because these conditions were involved in defining the regions of interest (ROIs) in the first place. To ensure that our results were not attributable to such selection bias, we used an independent split-data analysis (Kriegeskorte et al., 2009) (also see Baker et al., 2007a,b; Hegdé et al., 2008), in which we defined ROIs using one-half of the data from each subject and performed all the selective data analyses using the other half of the data.
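The logic of the split-data analysis can be illustrated with a toy example. This is a sketch under stated assumptions, not the authors' pipeline: the even/odd split of runs, the threshold rule, and the voxel labels are all hypothetical, because the text does not specify how the two halves were formed.

```python
from statistics import mean


def split_half_roi_estimate(runs, threshold):
    """Select voxels on one half of the runs, measure the effect on the other.

    runs: list of dicts mapping voxel id -> (MooneyAfter - MooneyBefore) effect.
    ASSUMPTION: even-indexed runs define the ROI and odd-indexed runs test it.
    """
    define_half = runs[0::2]
    test_half = runs[1::2]
    voxels = list(define_half[0].keys())
    # ROI definition uses the first half only.
    roi = [v for v in voxels
           if mean(run[v] for run in define_half) > threshold]
    if not roi:
        return roi, None
    # The effect size is estimated from data that played no part in selection.
    estimate = mean(mean(run[v] for v in roi) for run in test_half)
    return roi, estimate


# Toy data (hypothetical): 4 runs, 3 voxels.
runs = [
    {"v1": 1.0, "v2": 0.1, "v3": -0.5},
    {"v1": 0.8, "v2": 0.3, "v3": -0.4},
    {"v1": 1.2, "v2": -0.1, "v3": -0.3},
    {"v1": 0.6, "v2": 0.2, "v3": -0.6},
]
roi, estimate = split_half_roi_estimate(runs, threshold=0.5)
print(roi, estimate)
```

Because the voxels are chosen and evaluated on disjoint data, noise that helped a voxel pass the threshold cannot also inflate the reported effect.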

Within-subject statistical maps were generated using a general linear model of either individual blocks (block model) or trials (event model). The block model consisted of individual regressors for each block, along with a common task-set regressor at the start of each stimulus block (Wagner et al., 2005). Event models were identical to block models, except that each stimulus-block regressor was replaced with the regressors corresponding to the individual trials within that block.

Group statistical maps were constructed using a random-effects analysis of the individual within-subject block design maps. Regions of significant activation were identified using one-tailed t contrasts of MooneyAfter > MooneyBefore or MooneyAfter < MooneyBefore at p < 0.05 after correcting for multiple comparisons (Toothaker, 1993). Clusters with >50 contiguous voxels (with each voxel at p < 0.05 after correcting for multiple comparisons) were further analyzed. Corresponding statistical maps generated using event models yielded similar results (data not shown), although they were not used in defining the regions.

The responses of individual regions were estimated using the MarsBaR utility (www.marsbar.sourceforge.net) and custom-written software. For blocked models, regression coefficient βi represented the estimate of the response of the given region to the given block i. Similarly for event models, the β values of individual events were taken as the response estimates of the corresponding events.

Tests of significance.

For hypothesis-driven comparisons, we used the nonparametric Mann–Whitney test. For data-driven pairwise comparisons, we used Tukey's honestly significant difference (HSD) test rather than more stringent corrections (e.g., Bonferroni's correction), because the former is more appropriate for multiple pairwise comparisons (Toothaker, 1993).
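For readers unfamiliar with the Mann–Whitney test, the statistic itself is simple to compute from ranks. The sketch below is a generic textbook implementation with midranks for ties, written for illustration only; it computes the U statistic and does not reproduce whatever software the study used to obtain p values.

```python
def mann_whitney_u(x, y):
    """Return the Mann-Whitney U statistic for sample x (ties get midranks)."""
    pooled = sorted(
        (value, group)
        for group, sample in ((0, x), (1, y))
        for value in sample
    )
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        # Find the run of tied values starting at position i.
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        midrank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[k] = midrank
        i = j + 1
    rank_sum_x = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    n_x = len(x)
    return rank_sum_x - n_x * (n_x + 1) / 2


print(mann_whitney_u([1, 2, 3], [4, 5, 6]))
```

U for the first sample ranges from 0 (every value in x lies below every value in y) to len(x) * len(y) (the reverse).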

Response change index.

To calculate this index for Mooney stimuli, the response change in the main experiment was adjusted by the corresponding response change in the control experiment, using

|

where MA and MB were the responses during the MooneyAfter and MooneyBefore blocks estimated using the block model. We calculated the response change across color blocks (block 7 vs block 3) (for block numbering, see Fig. 1B) or delay blocks (block 8 vs block 2) in a similar manner. The p values were determined using randomization.

Similarly, the change in response between the last and the first color blocks was calculated as

|

where Color3 main and Color1 main were, respectively, the estimated responses during the last and the first color blocks during the main experiment, and Color3 control and Color1 control were the corresponding responses from the control experiment. The Response Change IndexDelay was similarly calculated by comparing the responses from Delay4 and Delay1 blocks of the main and control experiments.
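The idea of adjusting a response change by its control counterpart, and of assessing it by randomization, can be sketched as follows. The exact formula of the index is not reproduced in this text, so the plain difference of differences used here is an assumption made for illustration, as are the label-swapping scheme and all function names.

```python
import random
from statistics import mean


def response_change(after, before):
    """Mean response change across scans (after minus before)."""
    return mean(a - b for a, b in zip(after, before))


def adjusted_index(main_after, main_before, ctrl_after, ctrl_before):
    # ASSUMPTION: plain difference of differences; the published index may
    # use a different normalization.
    return (response_change(main_after, main_before)
            - response_change(ctrl_after, ctrl_before))


def randomization_p(main_after, main_before, ctrl_after, ctrl_before,
                    n_iter=2000, seed=0):
    """Two-sided p value from randomly swapping After/Before labels per scan."""
    rng = random.Random(seed)
    observed = adjusted_index(main_after, main_before, ctrl_after, ctrl_before)

    def swapped(after, before):
        pairs = [(a, b) if rng.random() < 0.5 else (b, a)
                 for a, b in zip(after, before)]
        return [p[0] for p in pairs], [p[1] for p in pairs]

    hits = 0
    for _ in range(n_iter):
        ma, mb = swapped(main_after, main_before)
        ca, cb = swapped(ctrl_after, ctrl_before)
        if abs(adjusted_index(ma, mb, ca, cb)) >= abs(observed):
            hits += 1
    # Add-one correction keeps the estimated p value strictly above zero.
    return (hits + 1) / (n_iter + 1)


# Hypothetical block responses from two scans per experiment.
index = adjusted_index([2.0, 3.0], [1.0, 1.0], [1.0, 1.0], [1.0, 1.0])
p = randomization_p([2.0, 3.0], [1.0, 1.0], [1.0, 1.0], [1.0, 1.0])
print(index, p)
```

Subtracting the control change removes the part of the response change that would occur with any intervening color images, which is the purpose the text gives for the adjustment.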

Comparing BOLD versus behavioral responses: logistic regression

Modeling was performed separately for face versus flower data. In either case, we pooled the data across subjects, given the lack of statistical power for within-subject analyses. We regressed the behavioral rating mj elicited by a given stimulus j during the MooneyAfter block (dependent variable) on the BOLD response of a given region to the stimulus (independent variable) using the proportional odds model, which specifies the probability of observing a rating mj ≥ g given the BOLD response Xi from the brain region i as

$$\Pr(m_j \geq g \mid X_i) = \frac{1}{1 + \exp\left[-(\alpha_g + \beta_1 X_i)\right]}$$

where g = {1, 2, 3}, αg is the offset, and β1 is the common regression coefficient (Harrell, 2001). The modeling was implemented using the Design library (Harrell, 2001) in the R software package (www.r-project.org) and custom-written R software.
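To make the proportional odds model concrete, the sketch below evaluates it for given parameters. It does not fit the model and is not the R code used in the study; the parameter values are hypothetical, and the function names are illustrative. Three offsets are treated as the three thresholds that separate four rating levels.

```python
import math


def prob_rating_at_least(x, alphas, beta):
    """Pr(rating >= g | x) for each threshold g under the proportional odds model."""
    return [1.0 / (1.0 + math.exp(-(a + beta * x))) for a in alphas]


def rating_probabilities(x, alphas, beta):
    """Probability of each ordinal rating level, lowest level first.

    Offsets must be in decreasing order so that the cumulative
    probabilities are non-increasing across thresholds.
    """
    cumulative = [1.0] + prob_rating_at_least(x, alphas, beta) + [0.0]
    return [cumulative[k] - cumulative[k + 1]
            for k in range(len(cumulative) - 1)]


# Hypothetical parameters: three offsets and one common slope.
alphas = [1.0, 0.0, -1.0]
beta = 0.5
low = rating_probabilities(-2.0, alphas, beta)
high = rating_probabilities(2.0, alphas, beta)
print(low, high)
```

A single slope shared by all thresholds is what makes the odds "proportional": with a positive slope, a larger BOLD response shifts probability toward higher ratings at every threshold by the same factor in the odds.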

Connectivity analyses.

Connectivity between a given pair of regions was assessed using the β series correlation analysis technique (Rissman et al., 2004; Gazzaley et al., 2007). Briefly, this technique measures the strength of functional connectivity between a pair of regions during a given condition as the correlation (i.e., coactivation) between the responses of the two regions during the condition, as measured by the corresponding regression coefficients. We measured the pairwise connectivity between the regions that provided significant fit in the aforementioned regression analysis. We used the regression coefficients determined by the event model as estimates of the BOLD response of a given region to a given condition. The statistical significance of the correlation coefficient was determined using randomization (with corrections for multiple comparisons).
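The core of the β series correlation approach is a correlation between two regions' trial-wise regression coefficients, tested against a shuffled null. The following is a minimal sketch under that reading; the shuffling scheme, the iteration count, and the example values are assumptions, and the correction for multiple comparisons mentioned in the text is not included.

```python
import random
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def beta_series_connectivity(betas_a, betas_b, n_iter=2000, seed=0):
    """Correlate two regions' trial-wise betas and estimate a randomization p value."""
    rng = random.Random(seed)
    observed = pearson_r(betas_a, betas_b)
    shuffled = list(betas_b)
    hits = 0
    for _ in range(n_iter):
        # Shuffling breaks the trial-by-trial pairing between the regions.
        rng.shuffle(shuffled)
        if abs(pearson_r(betas_a, shuffled)) >= abs(observed):
            hits += 1
    return observed, (hits + 1) / (n_iter + 1)


# Hypothetical trial-wise betas for two regions in one condition.
region_a = [0.2, 0.5, 0.1, 0.9, 0.7, 0.4, 0.8, 0.3]
region_b = [0.3, 0.6, 0.2, 1.0, 0.6, 0.5, 0.9, 0.2]
r, p = beta_series_connectivity(region_a, region_b)
print(r, p)
```

Computing this correlation separately for subsets of trials (for example, by rating) is one way to compare connectivity across conditions.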

Results

To study the neural basis of Mooney image disambiguation, we used the standard sequential stimulus presentation design where subjects viewed, in order, a set of previously unseen Mooney images, a set of unambiguous (i.e., color) images, and the same set of Mooney images (Tovee et al., 1996; Moore and Cavanagh, 1998; Moore and Engel, 2001; Andrews and Schluppeck, 2004; Jemel et al., 2005; McKeeff and Tong, 2007; Hsieh et al., 2010) (for details, see Fig. 1 and Materials and Methods). This design allows the viewer to store the information from the color image in some type of memory and subsequently apply it to disambiguating the Mooney image, thus temporally separating the acquisition of the disambiguating information from the disambiguation process itself. Whether these two processes are mediated by different neural substrates is unknown. One possibility is that the same set of brain regions mediates both processes. Alternatively, the substrates may be specialized, with the two processes being mediated by two different sets of brain regions. To distinguish between these scenarios, we identified brain regions that are differentially responsive to Mooney images before versus after the exposure to color images and studied the extent to which the responses of these regions also reflect memory of the intervening color images.

We performed two experiments (main and control), in each of which subjects were scanned while learning to disambiguate Mooney images. In both experiments, the subjects rated the interpretability of a set of Mooney images of faces or flowers before and after viewing a set of color images (Fig. 1) (see Materials and Methods). In the main experiment, the Mooney and the color images were exact counterparts of each other, so that the subjects could use the information from the color images to disambiguate the corresponding Mooney images.

Although the exposure to the specific color images clearly helps disambiguate their Mooney counterparts, two additional, nonspecific exposure effects may also contribute to Mooney disambiguation: (1) Mooney learning attributable to repeated exposure to Mooney images (i.e., in which the initial viewing of the Mooney images helps prime the subsequent interpretability of the same images) (Moore and Cavanagh, 1998; Andrews and Schluppeck, 2004; Jemel et al., 2005) and (2) Mooney learning attributable to the exposure to any intervening color images (Tovee et al., 1996; Dolan et al., 1997; Moore and Cavanagh, 1998; Moore and Engel, 2001; Andrews and Schluppeck, 2004; Jemel et al., 2005; McKeeff and Tong, 2007; Hsieh et al., 2010). The control experiment, in which the intervening color images were unrelated to the Mooney images and thus provided no information for disambiguating specific Mooney images, helped us estimate the magnitude of these nonspecific learning effects. To help isolate the magnitude of Mooney learning resulting from memory of specific color images, we factored out the contribution of these nonspecific effects (see Materials and Methods).

Behavioral results showed that, as expected, Mooney images were better interpreted after exposure to the corresponding color stimuli (main experiment) (Table 1, top). The subjects' disambiguation rating of Mooney stimuli significantly improved for both faces and flowers after exposure to the corresponding color images (two-way ANOVA, exposure × object category; p < 0.05 for exposure factor). The disambiguation also significantly increased in the control experiment, although significantly less than the increase in the main experiment (ANCOVA, p < 0.05 for experiment factor). Thus, much of the learning was attributable to the exposure to the corresponding images and not to exposure effects per se, in accordance with previous studies (Moore and Cavanagh, 1998; Andrews and Schluppeck, 2004). Moreover, disambiguation ratings during the MooneyBefore blocks showed no significant trend across successive scans (Kruskal–Wallis test, p > 0.05 for scans). That is, for each new set of images, the subjects' performance started out at approximately the same level. In addition, Mooney disambiguation was much less pronounced in the control experiment as expected, indicating that the disambiguation in the main experiment was not attributable to exposure effects per se (Table 1, bottom). Together, these results indicate that Mooney learning was not solely attributable to a nonspecific improvement in the subjects' ability to interpret Mooney images in general during the course of the experiment.

Differential BOLD responses to MooneyAfter versus MooneyBefore conditions

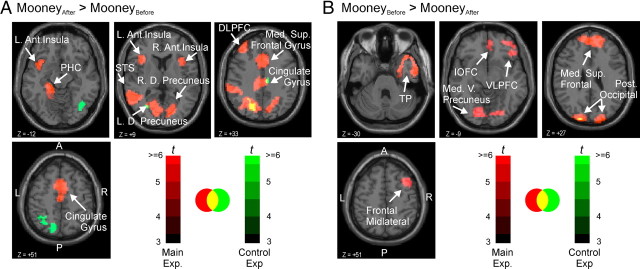

We analyzed the BOLD responses using separate linear models for stimulus blocks and for individual stimuli within blocks (“block model” and “event model”, respectively; see Materials and Methods). We used the block model to identify ROIs that may play a role in Mooney image disambiguation and the event model to test specific hypotheses about a given ROI (functional ROI approach) (Saxe et al., 2006). The block analysis revealed 16 different brain regions in which the responses significantly differed between MooneyAfter and MooneyBefore blocks after the aforementioned exposure effects were accounted for, indicating that these regions play a role in Mooney image disambiguation (regions denoted by red hues in Fig. 2). Of these 16 regions of interest, nine showed increased responses to Mooney stimuli after the exposure to their color counterparts (MooneyAfter > MooneyBefore, p < 0.05, corrected), and the remaining seven regions showed a response decrease (Table 2).

Figure 2.

Brain regions activated in the main experiment, control experiment, or both. Separate statistical maps were generated using the group data for each experiment and transparently overlaid on transverse sections of a standard single-subject brain. Yellow regions denote overlap between the activation in the main experiment (red hues) and the control experiment (green hues), according to the color scales at bottom right. A, Brain regions with significantly larger responses during the MooneyAfter than during the MooneyBefore block. Arrows denote regions listed in Table 2 (top). Activations from the control experiment are not labeled, because they were not of direct interest. B, Brain regions with significantly larger responses during the MooneyBefore than during the MooneyAfter block. Arrows denote regions listed in Table 2 (bottom). Ant., Anterior; D., dorsal; DLPFC, dorsolateral prefrontal cortex; IOFC, inferior orbitofrontal cortex; L., left; Med., medial; Post., posterior; R., right; Sup., superior; V., ventral; VLPFC, ventrolateral prefrontal cortex.

Table 2.

Brain regions involved in learning Mooney images

| Region | Hemisphere | Brodmann area | x<sup>a</sup> | y<sup>a</sup> | z<sup>a</sup> | t | Response change index<sub>Mooney</sub> (MooneyAfter vs MooneyBefore)<sup>b</sup>: Faces | Response change index<sub>Mooney</sub> (MooneyAfter vs MooneyBefore)<sup>b</sup>: Flowers | Correlation between BOLD responses and ratings (MooneyAfter stimuli)<sup>c</sup>: Faces | Correlation between BOLD responses and ratings (MooneyAfter stimuli)<sup>c</sup>: Flowers |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
| MooneyAfter > MooneyBefore (p < 0.05, corrected) | | | | | | | | | | |
| Dorsolateral prefrontal cortex | L | 46 | −33 | 36 | 27 | 8.48 | 0.44* | 0.34 | −0.10 | −0.01 |
| Parahippocampal cortex | L | 30 | −18 | −27 | −12 | 6.66 | 0.53* | 0.41* | 0.15* | 0.05 |
| Anterior insula | L | (not assigned) | −30 | 18 | 9 | 7.03 | 0.44* | 0.48* | −0.04 | 0.05 |
| Dorsal precuneus | L | 17 | −15 | −53 | 9 | 5.47 | 0.38* | 0.52* | 0.04 | 0.05 |
| Superior temporal sulcus | L | 22 | −63 | −45 | 9 | 11.83 | 0.98** | 0.93** | 0.14* | 0.04 |
| Cingulate gyrus | L, R<sup>d</sup> | 24 | 3 | 15 | 36 | 5.30 | 0.56* | 0.50* | 0.09 | −0.07 |
| Anterior insula | R | 48 | 39 | 0 | 0 | 5.96 | 0.31 | 0.21 | −0.03 | −0.01 |
| Dorsal precuneus | R | 19 | 15 | −48 | 9 | 6.47 | 0.62* | 0.69* | 0.06 | 0.04 |
| Medial superior frontal gyrus | L, R<sup>d</sup> | 8 | 1 | 15 | 51 | 5.80 | 0.37* | 0.53* | 0.05 | −0.08 |
| MooneyBefore > MooneyAfter (p < 0.05, corrected) | | | | | | | | | | |
| Medial ventral precuneus | L, R | 23 | 3 | −54 | 27 | 5.29 | −1.55** | −1.16** | 0.01 | 0.05 |
| Ventrolateral prefrontal cortex | R | 11 | 27 | 51 | −9 | 5.45 | −0.85** | −1.11** | 0.01 | −0.05 |
| Inferior orbitofrontal cortex | R | 47 | 45 | 27 | −12 | 5.93 | −1.80** | −0.72* | 0.04 | 0.07 |
| Posterior occipital | L, R<sup>d</sup> | 18 | 18 | −90 | 27 | 11.32 | −1.51** | −0.70* | −0.16** | 0.06 |
| Frontal midlateral | R | 44 | 30 | 15 | 36 | 5.56 | −0.58* | −0.86** | −0.05 | −0.01 |
| Temporal pole | R | 38 | 33 | 18 | −33 | 4.49 | −1.34** | −1.22** | −0.04 | −0.02 |
| Medial superior frontal | L, R<sup>d</sup> | 9 | −6 | 48 | 33 | 8.18 | −1.61** | −1.17** | −0.15** | −0.09 |

*p < 0.05, **p < 0.005. L, Left; R, Right.

<sup>a</sup>Montreal Neurological Institute coordinate of the peak voxel.

<sup>b</sup>This index took into account the corresponding response changes during the control experiment. For details, see Materials and Methods.

<sup>c</sup>Spearman's rank-order correlation of the average reported rating versus the β value of the BOLD response during the MooneyAfter block. Values −0.01 < r < 0.01 were rounded to the closest corresponding value. The p values were corrected for multiple comparisons using Tukey's HSD test (Toothaker, 1993).

<sup>d</sup>Regions that were coextensive in the statistical map because they were on the medial wall of the hemispheres were treated as a single region, i.e., the voxels were not partitioned into two hemispheres.

Several lines of evidence indicate that the learning-dependent changes in the BOLD responses, especially the tonic changes in responses, seen in some brain regions (Fig. 3) are not attributable to drift, i.e., an artifactual trend in the overall BOLD response attributable to factors other than changes in the underlying brain responses. First, all the data were preprocessed using standard methods to remove the effects of drift (Friston et al., 2007; Hegdé et al., 2008). Second, both tonic response increases as well as response decreases were observed in different brain regions (Fig. 3, compare A, C), which is inconsistent with a generic trend in the data. Third, BOLD response in many brain regions, including visual regions, either did not vary significantly at all or varied in a manner inconsistent with drift (data not shown).

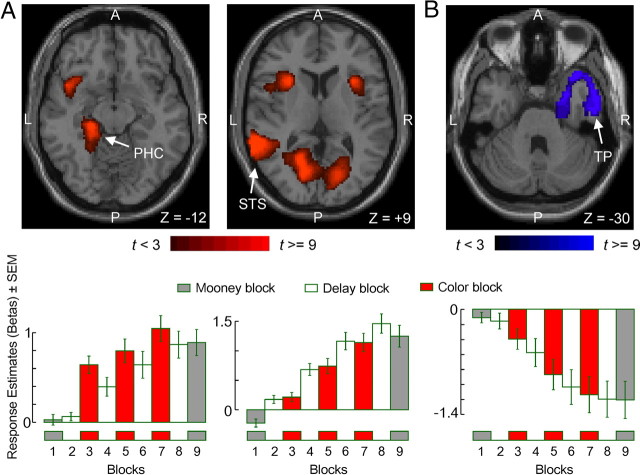

Figure 3.

Three key brain regions with significant differential responses to MooneyAfter versus MooneyBefore conditions. A, B, MooneyAfter > MooneyBefore (p < 0.05, corrected). C, MooneyAfter < MooneyBefore (p < 0.05, corrected). The three regions, the left parahippocampal cortex (PHC), the left superior temporal sulcus (STS), and the right temporal pole (TP), are shown in this figure superimposed on axial slices of a standard individual brain template (top row). The horseshoe appearance of the TP activation (C) results from the fact that the image plane slices through an activation that is roughly conical (data not shown). The time course of the responses to the face stimuli (β values in the corresponding block design models) in each region is shown in the bottom row. A β value of 0 denotes the baseline response across the whole brain (i.e., regression constant of the block model). The blocks are numbered as in Figure 1B. A, Anterior; L, lateral; P, posterior; R, right.

To quantify the response change between MooneyAfter and MooneyBefore blocks, we used the Response Change IndexMooney for each given ROI as described in Materials and Methods. The magnitude of the response change between MooneyAfter and MooneyBefore blocks, as measured by this index, was often different for faces versus flowers (Table 2, column 8 vs 9), consistent with many previous reports of category specificity in Mooney learning (Dolan et al., 1997; Andrews and Schluppeck, 2004).

A previous positron emission tomography (PET) study reported a single large activation encompassing the precuneus that showed response changes during Mooney learning (Dolan et al., 1997). Our results confirm and extend this result, in that they indicate that the activation in the precuneus region is functionally heterogeneous, with at least three distinct subregions: left and right dorsal precuneus and ventral medial precuneus (Table 2) (Cavanna and Trimble, 2006; Hassabis et al., 2007; Spreng et al., 2009).

Many regions also showed significant response changes, as measured by the corresponding response change indices, during delay blocks (Table 3, columns 3, 4), and/or during color blocks (columns 5 and 6). The sign of this response change was generally (although not always) consistent with the response changes during the Mooney blocks (Table 2, columns 8 and 9), indicating that these regions showed tonic response increases or decreases between the two Mooney blocks.

Table 3.

Response changes across color stimulus blocks and across delay blocks

| Region | Hemisphere | Response change index<sub>Delay</sub> (Delay4 vs Delay1)<sup>a</sup>: Faces | Response change index<sub>Delay</sub> (Delay4 vs Delay1)<sup>a</sup>: Flowers | Response change index<sub>Color</sub> (Color3 vs Color1)<sup>a</sup>: Faces | Response change index<sub>Color</sub> (Color3 vs Color1)<sup>a</sup>: Flowers | Correlation with behavior<sup>b</sup>, Delay4 vs Delay1: Faces | Correlation with behavior<sup>b</sup>, Delay4 vs Delay1: Flowers | Correlation with behavior<sup>b</sup>, Color3 vs Color1: Faces | Correlation with behavior<sup>b</sup>, Color3 vs Color1: Flowers |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
| Brain regions with MooneyAfter > MooneyBefore | | | | | | | | | |
| Dorsolateral prefrontal cortex | L | 48.2* | 36.4 | 26.9* | 36.2* | −0.01 | −0.15 | −0.12 | −0.13 |
| Parahippocampal cortex | L | 39.6 | 34.8 | 38.5 | 25.7 | −0.01 | 0.02 | 0.10 | 0.01 |
| Anterior insula | L | 40.6* | 38.7* | 32.7* | 32.3* | 0.10 | −0.16 | 0.07 | −0.14 |
| Dorsal precuneus | L | 44.9* | 46.5* | 9.2 | 28.9 | 0.28* | 0.07 | 0.27* | −0.01 |
| Superior temporal sulcus | L | 82.0** | 58.8* | 69.4* | 53.6* | 0.04 | −0.27* | 0.01 | −0.26* |
| Cingulate gyrus | L, R | 35.3 | 41.5* | 36.0 | 25.8 | −0.20 | −0.33* | −0.19 | −0.40** |
| Anterior insula | R | −18.5 | 11.6 | −9.6 | 16.7 | −0.04 | −0.16 | −0.02 | −0.16 |
| Dorsal precuneus | R | 39.0* | 64.7* | 24.7 | 42.0 | 0.20 | 0.04 | 0.21 | −0.01 |
| Medial superior frontal gyrus | L, R | 31.4* | 34.7* | 26.0* | 44.7* | −0.15 | −0.14 | −0.08 | −0.11 |
| Brain regions with MooneyBefore > MooneyAfter | | | | | | | | | |
| Medial ventral precuneus | R | −121.0** | −93.0** | −110.3** | −85.5** | 0.04 | −0.27* | 0.09 | −0.30 |
| Ventrolateral prefrontal cortex | R | −83.6** | −100.9** | −40.9* | −59.2** | −0.04 | −0.08 | 0.02 | −0.07 |
| Inferior orbitofrontal cortex | R | −148.1** | −63.6* | −100.6** | −31.7 | −0.15 | −0.16 | −0.17 | −0.11 |
| Posterior occipital | L, R | −113.6** | −52.7* | −107.5** | −54.1** | −0.14 | 0.38* | −0.09 | 0.47** |
| Frontal midlateral | R | −44.9* | −61.6** | −35.7* | −50.6** | −0.18 | −0.10 | −0.19 | −0.09 |
| Temporal pole | R | −141.9** | −89.8* | −74.5* | −72.6* | 0.02 | −0.10 | 0.06 | −0.04 |
| Medial superior frontal | L, R | −137.5** | −105.1** | −91.4** | −53.7* | −0.25* | −0.22 | −0.21 | −0.18 |

*p < 0.05, **p < 0.005. L, Left; R, right.

<sup>a</sup>This index took into account the corresponding response changes during the control experiment. For details, see Materials and Methods.

<sup>b</sup>Spearman's rank-order correlation of the average reported rating versus the β value of the BOLD response during the Color3 block (i.e., block 7). Values −0.01 < r < 0.01 were rounded to the closest corresponding value. The p values were corrected for multiple comparisons using Tukey's HSD test (Toothaker, 1993).

Parallels between the BOLD and behavioral responses to MooneyAfter stimuli: evidence for disambiguation-related activity

To measure the extent to which the BOLD response of each region reflected the subject's perceptual response after Mooney learning, we used two different methods. The first, the coarse-grained method, compared the behavioral and neural responses on a scan-by-scan basis. To do this, we calculated the correlation coefficient between the subject's average response during a given MooneyAfter block and the overall BOLD response during that block, estimated using the block model. Many regions showed a significant positive correlation, so that when the BOLD response was larger, the image was likely to be perceptually more interpretable and vice versa (Table 2, top, column 10, asterisks). Conversely, many occipital and temporal regions showed significant anticorrelation, so that smaller BOLD responses were associated with greater disambiguation and vice versa (Table 2, bottom). In each of these cases, the correlation was significant for faces but not for flowers, suggesting that the neural activity underlying Mooney disambiguation may depend on the object category. Notably, none of the 16 regions showed a significant correlation between the BOLD responses and the stimulus rating during the MooneyBefore block (data not shown), indicating that these regions played a role in the disambiguation after Mooney learning, not before. The corresponding comparisons for the Response Change IndexDelay and Response Change IndexColor are shown in Table 3 (columns 7–10).
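As a rough illustration of the coarse-grained comparison, the following Python sketch computes a rank-order correlation between per-block mean ratings and per-block β values. It assumes a Spearman coefficient (the type named in the Table 3 footnote); the function names and all numbers are hypothetical and are not data from this study.

```python
from statistics import mean


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of 1-based positions start..end
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical values: one mean rating and one block-model beta per MooneyAfter block.
mean_rating = [2.1, 2.8, 3.4, 3.0, 3.6, 2.5]
block_beta = [0.10, 0.22, 0.35, 0.31, 0.30, 0.18]
rho = spearman(mean_rating, block_beta)
print(f"Spearman rho = {rho:.3f}")
```

A positive coefficient here corresponds to the case described above, in which larger BOLD responses accompany higher interpretability ratings; a negative coefficient corresponds to the anticorrelated regions.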

The second method compared the neural versus behavioral responses during the MooneyAfter block on a finer timescale, i.e., on a stimulus-by-stimulus basis. To do this, we used an ordinal logistic model to regress the subject's rating of each individual MooneyAfter stimulus on the BOLD response of a given region to the given stimulus, estimated using the event-related model (Materials and Methods). For face stimuli, this procedure revealed three regions in which the BOLD responses significantly paralleled the behavioral responses: left parahippocampal cortex (PHC), left superior temporal sulcus (STS), and right temporal pole (TP) (Fig. 3). As noted earlier, face stimuli elicited an average behavioral rating of 3.05 ± 0.07 (SEM) during the MooneyAfter block (Table 1, top). The fit of the BOLD responses from PHC, STS, and TP to the behavioral ratings of individual stimuli was significant at p values of 0.0085, 0.0164, and 0.0064, respectively (Table 4). Altogether, this regression analysis showed that the BOLD responses of these three regions, individually or collectively, to a given MooneyAfter stimulus reliably reflected the subject's behavioral response to the stimulus (Table 4). When the behavioral responses were reshuffled with respect to the BOLD responses, the model no longer provided a significant fit (p > 0.05; data not shown), suggesting that the original fit obtained using the nonrandomized data was not attributable to chance.
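The logic of the reshuffling control can be sketched as a permutation test. The Python example below is only an illustration under stated assumptions: it substitutes a simple absolute correlation for the ordinal logistic fit used in the study, repeats the shuffle many times rather than once, and uses hypothetical single-trial ratings and β values.

```python
import random
from statistics import mean


def abs_correlation(x, y):
    """Stand-in association statistic (NOT the ordinal logistic fit of the study)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return abs(sxy) / (sxx * syy) ** 0.5


def permutation_p_value(ratings, betas, n_shuffles=2000, seed=0):
    """Fraction of shuffles whose statistic is at least as large as the observed one."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    observed = abs_correlation(ratings, betas)
    shuffled = list(ratings)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # break the pairing between ratings and responses
        if abs_correlation(shuffled, betas) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_shuffles + 1)


# Hypothetical single-trial data: one rating (1-4) and one beta per stimulus.
ratings = [1, 1, 2, 2, 2, 3, 3, 3, 4, 4, 4, 4]
betas = [0.05, 0.20, 0.15, 0.30, 0.22, 0.35, 0.28, 0.50, 0.45, 0.60, 0.52, 0.70]
p = permutation_p_value(ratings, betas)
print(f"permutation p = {p:.4f}")
```

If the pairing between ratings and responses carries no information, shuffled data should match or exceed the observed statistic often, giving a large p value; a small p value indicates that the observed association is unlikely to arise by chance.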

Table 4.

Logistic regression of disambiguation ratings on BOLD responses during MooneyAfter block: model fit parameters

| Model (regressors) | Dataset | p | c indexᵃ | Somers' Dxyᵇ | Gammaᶜ | Tau-aᵈ | R² (Nagelkerke)ᵉ |

|---|---|---|---|---|---|---|---|

| Left PHC | Faces | 0.0085 | 0.577 | 0.154 | 0.156 | 0.115 | 0.02 |

| Left STS | Faces | 0.0164 | 0.548 | 0.095 | 0.096 | 0.071 | 0.017 |

| Right TP | Faces | 0.0064 | 0.561 | 0.122 | 0.123 | 0.091 | 0.022 |

| PHC, STS, and TP | Faces | <10⁻¹² | 0.612 | 0.223 | 0.224 | 0.166 | 0.065 |

| All 16 ROIs | Faces | <10⁻⁴ | 0.651 | 0.32 | 0.303 | 0.225 | 0.131 |

| PHC, STS, and TP | Flowers | 0.0146 | 0.567 | 0.134 | 0.135 | 0.1 | 0.031 |

| All 16 ROIs | Flowers | 0.0188 | 0.621 | 0.243 | 0.244 | 0.181 | 0.085 |

ᵃThe value of the c index is identical to the area under the receiver operating characteristic curve (Bamber, 1975; Hanley and McNeil, 1982).

ᵇMeasure of concordance between the observed disambiguation rating and the rating estimated by the corresponding model (Somers, 1962).

ᶜGoodman–Kruskal gamma statistic of association between predicted probabilities and observed responses (Goodman and Kruskal, 1954).

ᵈKendall's tau-a rank correlation between predicted probabilities and observed responses (Kendall, 1938).

ᵉGeneralized R² index of Nagelkerke (1991).
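The four rank-based indices in Table 4 can all be derived from counts of concordant and discordant pairs. The Python sketch below assumes the pair-counting definitions commonly used for ordinal logistic models (only pairs with different observed ratings are counted for c, Dxy, and gamma); the function name and the example values are hypothetical. Under these definitions Dxy = 2(c − 0.5), which agrees with the tabulated values, e.g., for left PHC, 2 × (0.577 − 0.5) = 0.154.

```python
from itertools import combinations


def rank_association(predicted, observed):
    """Compute c index, Somers' Dxy, gamma, and tau-a from pairwise comparisons."""
    concordant = discordant = tied_pred = 0
    for (p1, y1), (p2, y2) in combinations(zip(predicted, observed), 2):
        if y1 == y2:
            continue  # pairs with equal observed ratings carry no ordering information
        direction = (p1 - p2) * (y1 - y2)
        if direction > 0:
            concordant += 1  # prediction orders the pair the same way as the rating
        elif direction < 0:
            discordant += 1  # prediction orders the pair the opposite way
        else:
            tied_pred += 1  # predictions are tied although the ratings differ
    n = len(observed)
    usable = concordant + discordant + tied_pred
    return {
        "c": (concordant + 0.5 * tied_pred) / usable,
        "dxy": (concordant - discordant) / usable,
        "gamma": (concordant - discordant) / (concordant + discordant),
        "tau_a": (concordant - discordant) / (n * (n - 1) / 2),
    }


# Hypothetical model predictions and observed ratings for five stimuli.
predicted = [0.2, 0.4, 0.4, 0.7, 0.6]
observed = [1, 2, 1, 3, 4]
metrics = rank_association(predicted, observed)
print(metrics)
```

Because tau-a divides by all possible pairs, including those with tied observed ratings, it is always the smallest of the three signed indices in absolute value, as it is in every row of Table 4.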

In addition, we used the logistic model to assess how well activity in these regions predicted the behavioral responses to “new” stimuli. To do this, we recalculated the models using the BOLD response of each region to one-half of the MooneyAfter stimuli and used this model to predict the subject's behavioral responses to the remaining half of the stimuli. We found that the BOLD responses in the three regions were able to successfully predict the responses (data not shown). Activity in none of the 16 regions, including PHC, STS, TP, and the precuneus complex (Dolan et al., 1997), significantly paralleled the behavioral responses to flower stimuli during the MooneyAfter block. During the MooneyBefore block, the BOLD responses failed to show significant correlation with the behavioral responses for either faces or flowers in any brain region (data not shown). Together, these results indicate that image disambiguation depends on object category and that the aforementioned three regions, PHC, STS, and TP, may play a direct role in the disambiguation of Mooney face images. This is consistent with the fact that lesions that affect these regions severely impair perception of Mooney faces (Crane and Milner, 2002; Steeves et al., 2006).

Evidence for BOLD activity related to the memory of color images

How do the aforementioned three “disambiguation-related” regions access information from the previously viewed color images? As noted earlier, one possibility is that one or more of these regions are also involved in storing the disambiguating information in some type of memory. This is consistent with the fact that both PHC and STS have been reported previously to play a role in visual memory (Courtney et al., 1996; Ranganath et al., 2004; Tanabe et al., 2005). Both these regions show tonic increases during Mooney learning, including delay blocks (Fig. 3B, bottom). Such a response increase is a characteristic of the readiness or intention to respond, an aspect of working memory called preparatory set (Connolly et al., 2002). Thus, the activity in these regions is consistent with the functional definition of this type of working memory (Goldman-Rakic, 1995; Fuster, 2001).

Conversely, it is possible that other mechanisms of short-term memory, updating mechanisms of long-term memory, or processes related to perceptual memory, including priming or adaptation, contribute to the sustained responses during the delay period (Andrews et al., 2002; Leopold et al., 2002; Parker and Krug, 2003; Chen and He, 2004; Pearson and Clifford, 2004; Roth and Courtney, 2007; Jonides et al., 2008; Pearson and Brascamp, 2008; Brascamp et al., 2010). Regardless of the mechanism(s), one or more of these regions may serve to store the information from color images for use during the ensuing MooneyAfter block. If this were the case, one would expect that, when these regions respond preferentially to a given color image, the subsequent disambiguation of its Mooney counterpart is greater. In other words, in this scenario, the activity of these regions would reflect how much information they store about a given color image.

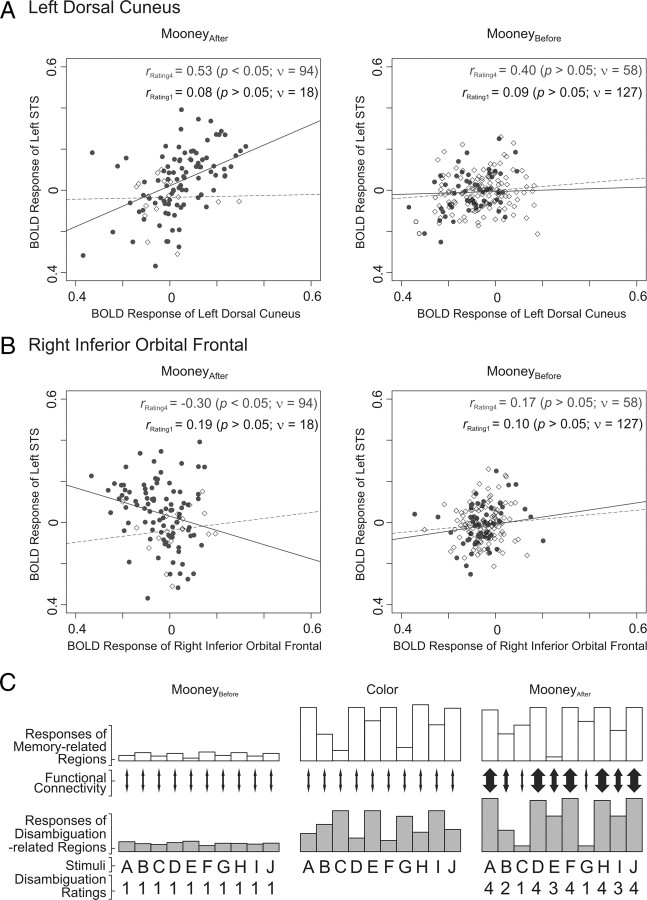

Disambiguation-related changes in functional connectivity

To test the hypothesis that PHC, STS, or TP indeed play a role in storing the information from the color images in memory, we regressed the BOLD responses in each region to the corresponding color stimuli during the last color block (block 7) on the individual ratings during a given MooneyAfter block (block 9). We found that the model fits were not significant for the three regions individually or together (p > 0.05; data not shown), indicating that the response of these regions to color stimuli did not directly reflect the behavioral responses during the MooneyAfter block. Thus, it is unlikely that these three regions play a role in storing information about specific images in memory. Other regions may instead play a more direct role in storing the specific disambiguation information and conveying it to the aforementioned three regions that play a direct role in Mooney disambiguation.

To identify such regions, we performed a connectivity analysis using the β series correlation technique, which uses correlated activity (i.e., coactivation) between a pair of regions as a measure of the strength of functional connectivity between them (Rissman et al., 2004; Gazzaley et al., 2007) (see Materials and Methods). To perform this analysis, we searched for regions that had (1) significant correlated activity with one of the aforementioned three regions during the MooneyAfter block and (2) stronger correlation for Mooney stimuli that elicited higher ratings than for those that elicited lower ratings.
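The two criteria can be illustrated with a short Python sketch that correlates single-trial β values between two regions separately for high-rated and low-rated stimuli. The sketch assumes that the per-trial β values have already been estimated; the Fisher r-to-z comparison of the two correlations is an assumption made for illustration, not necessarily the test used in this study, and all names and numbers are hypothetical.

```python
import math
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def coactivation(betas_a, betas_b, ratings, level):
    """Correlate the two beta series over trials that received the given rating."""
    idx = [i for i, r in enumerate(ratings) if r == level]
    r = pearson([betas_a[i] for i in idx], [betas_b[i] for i in idx])
    return r, len(idx)


def fisher_z_difference(r_high, n_high, r_low, n_low):
    """One-sided test that r_high exceeds r_low (Fisher r-to-z; an assumed choice)."""
    se = math.sqrt(1 / (n_high - 3) + 1 / (n_low - 3))
    z = (math.atanh(r_high) - math.atanh(r_low)) / se
    p_one_sided = 0.5 * math.erfc(z / math.sqrt(2))
    return z, p_one_sided


# Hypothetical single-trial betas for two regions, with the rating each stimulus received.
ratings = [4, 4, 4, 4, 4, 4, 1, 1, 1, 1, 1, 1, 2, 3]
sts = [0.1, 0.3, 0.5, 0.7, 0.9, 1.1, 0.2, 0.4, 0.6, 0.8, 1.0, 1.2, 0.5, 0.6]
precuneus = [0.2, 0.35, 0.45, 0.8, 0.85, 1.2, 0.5, 0.1, 0.6, 0.2, 0.55, 0.3, 0.4, 0.7]

r_high, n_high = coactivation(sts, precuneus, ratings, level=4)
r_low, n_low = coactivation(sts, precuneus, ratings, level=1)
z, p = fisher_z_difference(r_high, n_high, r_low, n_low)
print(f"r(rating 4) = {r_high:.2f}, r(rating 1) = {r_low:.2f}, z = {z:.2f}, p = {p:.4f}")
```

In this toy example the coactivation is strong for stimuli rated 4 and near zero for stimuli rated 1, which is the pattern that criterion (2) is designed to detect.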

Figure 4 shows two regions, left dorsal precuneus and right inferior orbital sulcus, that met both these criteria. Both regions have been shown previously to play key roles in various types of memory (Courtney et al., 1996; Crane and Milner, 2002; Tanabe et al., 2005; Wagner et al., 2005; Cavanna and Trimble, 2006; Hassabis et al., 2007; Roth and Courtney, 2007; Jonides et al., 2008; Spreng et al., 2009). We found that functional connectivity, as measured by coactivation with STS, was stronger for stimuli that elicited higher ratings than for stimuli that elicited lower ratings (Fig. 4A,B, left columns). The coactivation was weaker regardless of the disambiguation rating during the MooneyBefore block, suggesting that Mooney learning selectively strengthened the functional connectivity, in terms of increased correlated activity, in an image-specific manner (Fig. 4A,B, right columns). Together, these results indicate that these two regions play an important role in storing the information and selectively interact with the aforementioned three disambiguation-related regions during the disambiguation. This functional connectivity is consistent with the previous anatomical findings that both precuneus and inferior orbital cortex have strong reciprocal connections with STS (Petrides and Pandya, 2002; Cavanna and Trimble, 2006; Takahashi et al., 2007; Hagmann et al., 2008).

Figure 4.

Variations in the strength of functional connectivity of STS with other brain regions as a function of disambiguation rating. Functional connectivity was measured as pairwise correlated activation (or coactivation) of STS and selected other brain regions (Rissman et al., 2004; Gazzaley et al., 2007). A, Responses of left dorsal precuneus (x-axis) to individual Mooney stimuli are plotted against the responses of left STS (y-axis) as a function of the disambiguation rating elicited by the stimulus (filled circles, rating of 4; open diamonds, rating of 1) for MooneyAfter (left scatter plot) or MooneyBefore (right scatter plot) condition. The straight lines denote the best-fitting regression lines; the corresponding correlation coefficients r are shown at top right. Note that the statistical significance of an r value depends not only on its magnitude but also on its degrees of freedom ν. B, Connectivity between right inferior orbital frontal region and the STS. C, A schematic summary of the results. During the MooneyBefore block (left column), both memory-related regions (white bars) and disambiguation-related regions (gray bars) responded poorly to the stimuli, and the magnitude of the functional connectivity (denoted by the thickness of the double arrows) was low. During the color blocks (only one of which is shown) and during delay periods (data not shown), the responses of the memory-related regions increased much more than the activity of the disambiguation-related regions did. Note that the stimuli that elicited the larger responses from memory-related regions during the color blocks were generally also those that elicited the higher disambiguation ratings during the MooneyAfter block (e.g., stimuli A, C, F, H, J). Also during the MooneyAfter block, the functional connectivity between the two sets of regions was stronger for those stimuli that elicited higher disambiguation ratings. Moreover, the disambiguation ratings during this block were correlated with the responses of the disambiguation-related regions but not of the memory-related regions. Note that the actual experiment differed from this idealized account in many respects, including the fact that there were three color blocks (instead of one block as shown) and that the order of the stimuli was randomized from one block to the next.

Discussion

Together, our results suggest that Mooney “online” learning involves at least two overlapping sets of regions with distinct functions. One set of brain regions, including those in the precuneus region identified by a previous PET study (Dolan et al., 1997), plays a primary role in storing the visual information from color images in memory. Many of these regions, including the precuneus, are known to be part of a generic, core memory network for objects and are known to be recruited into various object recognition tasks in a task-specific manner (Cavanna and Trimble, 2006; Hassabis et al., 2007; Roth and Courtney, 2007; Spreng et al., 2009). Visual information from these regions is conveyed to the regions that play a more direct role in disambiguation (Fig. 4C). The latter set of regions presumably disambiguates the Mooney images by integrating the ambiguous sensory information about the images with the disambiguating information about the corresponding color images from one or more memory systems. The disambiguation-related regions, PHC and STS, have been shown previously to play comparable integrative roles, including in the integration of complex visual features into abstract representations and the integration of sensory cues across multiple modalities (Campanella and Belin, 2007; Noppeney et al., 2008; Barense et al., 2010). It is plausible that perceptual learning evident in the third region, TP, helps better “filter” the incoming ambiguous sensory information (Tovee et al., 1996; Andrews and Schluppeck, 2004).

Learning accurate interpretations of Mooney images involves an early stage in which images have low global interpretability (semantic) as well as low local interpretability (e.g., edges do not have distinguishable causes) but, after exposure to the color “explanations,” can be accurately interpreted at global and local scales. The complexity of this process suggests roles for working memory, updating mechanisms of long-term memory, priming, and/or adaptation (Courtney et al., 1996; Andrews et al., 2002; Connolly et al., 2002; Leopold et al., 2002; Parker and Krug, 2003; Chen and He, 2004; Pearson and Clifford, 2004; Ranganath et al., 2004; Tanabe et al., 2005; Roth and Courtney, 2007; Jonides et al., 2008). We consider several possibilities in turn.

First, long-term memory of familiar categories acquired before the experiment (faces, flowers, but also natural background “noise” objects that are not part of the target class) could help the subjects learn to resolve ambiguity. For example, given time, the Dalmatian dog can be interpreted by most observers without any perceptual “clues”; however, novel (Moore and Engel, 2001) or familiar objects in high clutter (as in our study) may require previous exposure to an interpretable version for rapid disambiguation. Second, for Mooney images with substantial clutter (as in our study), working memory is likely important for integrating recently acquired information (e.g., from the training exposures) with long-term memory and applying it to group local image features robustly tied to the target object and reject those that are not. False features occur both on the target (shadows in the study by Cavanagh, 1991) and off the object [e.g., background clutter, which poses a problem similar to camouflage (Brady and Kersten, 2003)].

Third, a number of recent studies using bistable images (Pearson and Brascamp, 2008; Brascamp et al., 2010) have provided evidence for a memory trace that codes low-level features of stored stimuli and that accumulates over stimulus presentations. For example, Brascamp et al. (2010) showed that transcranial magnetic stimulation applied to human motion complex hMT+ biased perceptual reports toward the more common perceptual interpretation, suggesting an impairment of an accumulation of perceptual memory traces that act against the common interpretation. Pearson and Brascamp (2008) have argued that the idea of perceptual memory traces suggested by bistable perception studies shares important characteristics with priming by nonambiguous stimuli. Although the perceptions of our images were not phenomenally bistable, learning accurate interpretations of the Mooney images required experience with easily interpretable color image versions, perhaps involving a kind of priming similar to that in these other studies. If so, the visual system would need to accumulate and hold information from multiple traces for the 10 distinct scenes, randomly interspersed during training.

Fourth, the learning process may involve mechanisms that test (e.g., through memory-based predictions of low-level features) whether the perceptual interpretation is consistent with previously acquired target knowledge. Our finding of a change in posterior occipital activity for interpretable Mooney images is consistent with this idea and with another study reporting that areas as early as primary visual cortex V1 are involved in Mooney disambiguation (Hsieh et al., 2010). This idea also provides a potential tie-in to the perceptual memory trace discussed above. High-level explanations (involving long-term and working memory) may induce visual traces to resolve ambiguity during learning and later recognition. It is conceivable that the same neural substrate is also involved in the effects of conscious imagery on short-term sensory traces (Pearson et al., 2008) and the patterns of V1 activity reflecting working memory (Harrison and Tong, 2009) that occur in the absence of visual stimuli.

Our finding of a decrease in posterior occipital activity is consistent with the proposal that early disambiguation involves the suppression of “noisy” neural activity rather than an increase in neural activity consistent with the perceptual interpretation (Murray et al., 2004). Two previous studies using Mooney images found that activity in the fusiform face area was correlated with the perceptual decision and awareness of a face (Andrews and Schluppeck, 2004; McKeeff and Tong, 2007), suggesting that this region is also involved in integrating sensory information with stored knowledge. The fact that we did not find a similar correlate in our study may, in part, be because our design required observers to rank interpretability rather than recognize a particular object class. Another difference was that our face images had the considerable clutter typical of natural scenes and also included more of the body, so that the face constituted a comparatively smaller proportion of the image.

The functional interaction between the memory mechanisms and the brain regions more directly involved in image disambiguation may represent an integrated system of knowledge-mediated disambiguation that operates in everyday vision, wherein perceptual knowledge is used to disambiguate incoming sensory information. Obviously, the precise disambiguation-related regions and the types of memory mechanisms likely vary depending on the task and memory needs. Indeed, the mechanisms elucidated in this study may not underlie long-term Mooney learning. For instance, Mooney images, once disambiguated, can stay unambiguous for years, especially after repeated exposure, as in the case of the classic Dalmatian dog image (Gregory, 1973). In such cases, longer-term memory mechanisms are likely to be involved. Thus, what may be generalizable from our study is not specific brain networks per se but functional specialization of memory-related versus disambiguation-related functions and disambiguation mediated by enhanced functional connectivity with the appropriate memory mechanisms. Although the role of the various memory mechanisms in sensory disambiguation remains to be characterized, our study suggests that examining selective functional connectivity between the relevant memory mechanisms on the one hand and disambiguation-related regions on the other is a promising avenue for elucidating such processes.

Footnotes

This work was supported by Office of Naval Research Grant N00014-05-1-0124 and by National Institutes of Health Grant R01 EY015261 (D.K.). The 3 T scanner at the University of Minnesota Center for Magnetic Resonance Research is supported by Biotechnology Research Resource Grant P41 008079 and by the MIND Institute. We thank many of our colleagues, especially Drs. Clare Bergson, David Blake, Christos Constantinidis, Stephen Engel, Wilma Koutstaal, and Jennifer McDowell, for helpful discussions and comments on this manuscript.

References

- Andrews TJ, Schluppeck D. Neural responses to Mooney images reveal a modular representation of faces in human visual cortex. Neuroimage. 2004;21:91–98. doi: 10.1016/j.neuroimage.2003.08.023. [DOI] [PubMed] [Google Scholar]

- Andrews TJ, Schluppeck D, Homfray D, Matthews P, Blakemore C. Activity in the fusiform gyrus predicts conscious perception of Rubin's vase-face illusion. Neuroimage. 2002;17:890–901. [PubMed] [Google Scholar]

- Baker CI, Hutchison TL, Kanwisher N. Does the fusiform face area contain subregions highly selective for nonfaces? Nat Neurosci. 2007a;10:3–4. doi: 10.1038/nn0107-3. [DOI] [PubMed] [Google Scholar]

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc Natl Acad Sci U S A. 2007b;104:9087–9092. doi: 10.1073/pnas.0703300104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bamber D. The area above the ordinal dominance graph and the area below the receiver operating characteristic graph. J Math Psychol. 1975;12:387–415. [Google Scholar]

- Barense MD, Henson RN, Lee AC, Graham KS. Medial temporal lobe activity during complex discrimination of faces, objects, and scenes: effects of viewpoint. Hippocampus. 2010;20:389–401. doi: 10.1002/hipo.20641. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Biederman I. Human image understanding: recent research and a theory. Comput Vis Graphics Image Process. 1985;32:29–73. [Google Scholar]

- Brady MJ, Kersten D. Bootstrapped learning of novel objects. J Vis. 2003;3:413–422. doi: 10.1167/3.6.2. [DOI] [PubMed] [Google Scholar]

- Brascamp JW, Kanai R, Walsh V, van Ee R. Human middle temporal cortex, perceptual bias, and perceptual memory for ambiguous three-dimensional motion. J Neurosci. 2010;30:760–766. doi: 10.1523/JNEUROSCI.4171-09.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Campanella S, Belin P. Integrating face and voice in person perception. Trends Cogn Sci. 2007;11:535–543. doi: 10.1016/j.tics.2007.10.001. [DOI] [PubMed] [Google Scholar]

- Cavanagh P. What's up in top-down processing? In: Gorea A, editor. Representations of vision: trends and tacit assumptions in vision research. New York: Cambridge UP; 1991. pp. 295–304. [Google Scholar]

- Cavanna AE, Trimble MR. The precuneus: a review of its functional anatomy and behavioural correlates. Brain. 2006;129:564–583. doi: 10.1093/brain/awl004. [DOI] [PubMed] [Google Scholar]

- Chen X, He S. Local factors determine the stabilization of monocular ambiguous and binocular rivalry stimuli. Curr Biol. 2004;14:1013–1017. doi: 10.1016/j.cub.2004.05.042. [DOI] [PubMed] [Google Scholar]

- Connolly JD, Goodale MA, Menon RS, Munoz DP. Human fMRI evidence for the neural correlates of preparatory set. Nat Neurosci. 2002;5:1345–1352. doi: 10.1038/nn969. [DOI] [PubMed] [Google Scholar]

- Courtney SM, Ungerleider LG, Keil K, Haxby JV. Object and spatial visual working memory activate separate neural systems in human cortex. Cereb Cortex. 1996;6:39–49. doi: 10.1093/cercor/6.1.39. [DOI] [PubMed] [Google Scholar]

- Crane J, Milner B. Do I know you? Face perception and memory in patients with selective amygdalo-hippocampectomy. Neuropsychologia. 2002;40:530–538. doi: 10.1016/s0028-3932(01)00131-2. [DOI] [PubMed] [Google Scholar]

- Dolan RJ, Fink GR, Rolls E, Booth M, Holmes A, Frackowiak RS, Friston KJ. How the brain learns to see objects and faces in an impoverished context. Nature. 1997;389:596–599. doi: 10.1038/39309. [DOI] [PubMed] [Google Scholar]

- Donaldson DI, Petersen SE, Ollinger JM, Buckner RL. Dissociating state and item components of recognition memory using fMRI. Neuroimage. 2001;13:129–142. doi: 10.1006/nimg.2000.0664. [DOI] [PubMed] [Google Scholar]

- Dosenbach NU, Visscher KM, Palmer ED, Miezin FM, Wenger KK, Kang HC, Burgund ED, Grimes AL, Schlaggar BL, Petersen SE. A core system for the implementation of task sets. Neuron. 2006;50:799–812. doi: 10.1016/j.neuron.2006.04.031. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Epshtein B, Lifshitz I, Ullman S. Image interpretation by a single bottom-up top-down cycle. Proc Natl Acad Sci U S A. 2008;105:14298–14303. doi: 10.1073/pnas.0800968105. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fuster JM. The prefrontal cortex—an update: time is of the essence. Neuron. 2001;30:319–333. doi: 10.1016/s0896-6273(01)00285-9. [DOI] [PubMed] [Google Scholar]

- Gazzaley A, Rissman J, Cooney J, Rutman A, Seibert T, Clapp W, D'Esposito M. Functional interactions between prefrontal and visual association cortex contribute to top-down modulation of visual processing. Cereb Cortex. 2007;17(Suppl 1):i125–i135. doi: 10.1093/cercor/bhm113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Goldman-Rakic PS. Cellular basis of working memory. Neuron. 1995;14:477–485. doi: 10.1016/0896-6273(95)90304-6. [DOI] [PubMed] [Google Scholar]

- Goodman LA, Kruskal WH. Measures of association for cross classifications. J Am Stat Assoc. 1954;49:732–764. [Google Scholar]

- Gregory RL. New York: McGraw-Hill; 1973. The intelligent eye. [Google Scholar]

- Hagmann P, Cammoun L, Gigandet X, Meuli R, Honey CJ, Wedeen VJ, Sporns O. Mapping the structural core of human cerebral cortex. PLoS Biol. 2008;6:e159. doi: 10.1371/journal.pbio.0060159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hanley JA, McNeil BJ. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747. [DOI] [PubMed] [Google Scholar]

- Harrell FE. New York: Springer; 2001. Regression modeling strategies. [Google Scholar]

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hassabis D, Kumaran D, Maguire EA. Using imagination to understand the neural basis of episodic memory. J Neurosci. 2007;27:14365–14374. doi: 10.1523/JNEUROSCI.4549-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hegdé J, Fang F, Murray SO, Kersten D. Preferential responses to occluded objects in the human visual cortex. J Vis. 2008;8:16, 11–16. doi: 10.1167/8.4.16. [DOI] [PubMed] [Google Scholar]

- Hsieh PJ, Vul E, Kanwisher N. Recognition alters the spatial pattern of FMRI activation in early retinotopic cortex. J Neurophysiol. 2010;103:1501–1507. doi: 10.1152/jn.00812.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jemel B, Pisani M, Rousselle L, Crommelinck M, Bruyer R. Exploring the functional architecture of person recognition system with event-related potentials in a within- and cross-domain self-priming of faces. Neuropsychologia. 2005;43:2024–2040. doi: 10.1016/j.neuropsychologia.2005.03.016. [DOI] [PubMed] [Google Scholar]

- Jonides J, Lewis RL, Nee DE, Lustig CA, Berman MG, Moore KS. The mind and brain of short-term memory. Annu Rev Psychol. 2008;59:193–224. doi: 10.1146/annurev.psych.59.103006.093615. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kendall M. A new measure of rank correlation. Biometrika. 1938;30:81–89. [Google Scholar]

- Kersten D, Yuille A. Bayesian models of object perception. Curr Opin Neurobiol. 2003;13:150–158. doi: 10.1016/s0959-4388(03)00042-4. [DOI] [PubMed] [Google Scholar]

- Kriegeskorte N, Simmons WK, Bellgowan PS, Baker CI. Circular analysis in systems neuroscience: the dangers of double dipping. Nat Neurosci. 2009;12:535–540. doi: 10.1038/nn.2303. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leopold DA, Wilke M, Maier A, Logothetis NK. Stable perception of visually ambiguous patterns. Nat Neurosci. 2002;5:605–609. doi: 10.1038/nn0602-851. [DOI] [PubMed] [Google Scholar]

- McKeeff TJ, Tong F. The timing of perceptual decisions for ambiguous face stimuli in the human ventral visual cortex. Cereb Cortex. 2007;17:669–678. doi: 10.1093/cercor/bhk015. [DOI] [PubMed] [Google Scholar]

- Moore C, Cavanagh P. Recovery of 3D volume from 2-tone images of novel objects. Cognition. 1998;67:45–71. doi: 10.1016/s0010-0277(98)00014-6. [DOI] [PubMed] [Google Scholar]

- Moore C, Engel SA. Neural response to perception of volume in the lateral occipital complex. Neuron. 2001;29:277–286. doi: 10.1016/s0896-6273(01)00197-0. [DOI] [PubMed] [Google Scholar]

- Murray SO, Schrater P, Kersten D. Perceptual grouping and the interactions between visual cortical areas. Neural Netw. 2004;17:695–705. doi: 10.1016/j.neunet.2004.03.010. [DOI] [PubMed] [Google Scholar]

- Nagelkerke NJD. A note on a general definition of the coefficient of determination. Biometrika. 1991;78:691–692. [Google Scholar]

- Naor-Raz G, Tarr MJ, Kersten D. Is color an intrinsic property of object representation? Perception. 2003;32:667–680. doi: 10.1068/p5050. [DOI] [PubMed] [Google Scholar]

- Noppeney U, Josephs O, Hocking J, Price CJ, Friston KJ. The effect of prior visual information on recognition of speech and sounds. Cereb Cortex. 2008;18:598–609. doi: 10.1093/cercor/bhm091. [DOI] [PubMed] [Google Scholar]

- Parker AJ, Krug K. Neuronal mechanisms for the perception of ambiguous stimuli. Curr Opin Neurobiol. 2003;13:433–439. doi: 10.1016/s0959-4388(03)00099-0. [DOI] [PubMed] [Google Scholar]

- Pearson J, Brascamp J. Sensory memory for ambiguous vision. Trends Cogn Sci. 2008;12:334–341. doi: 10.1016/j.tics.2008.05.006. [DOI] [PubMed] [Google Scholar]

- Pearson J, Clifford CG. Determinants of visual awareness following interruptions during rivalry. J Vis. 2004;4:196–202. doi: 10.1167/4.3.6. [DOI] [PubMed] [Google Scholar]

- Pearson J, Clifford CW, Tong F. The functional impact of mental imagery on conscious perception. Curr Biol. 2008;18:982–986. doi: 10.1016/j.cub.2008.05.048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x. [DOI] [PubMed] [Google Scholar]

- Ranganath C, DeGutis J, D'Esposito M. Category-specific modulation of inferior temporal activity during working memory encoding and maintenance. Brain Res Cogn Brain Res. 2004;20:37–45. doi: 10.1016/j.cogbrainres.2003.11.017. [DOI] [PubMed] [Google Scholar]

- Rissman J, Gazzaley A, D'Esposito M. Measuring functional connectivity during distinct stages of a cognitive task. Neuroimage. 2004;23:752–763. doi: 10.1016/j.neuroimage.2004.06.035. [DOI] [PubMed] [Google Scholar]

- Rossion B, Jacques C. Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. Neuroimage. 2008;39:1959–1979. doi: 10.1016/j.neuroimage.2007.10.011. [DOI] [PubMed] [Google Scholar]

- Roth JK, Courtney SM. Neural system for updating object working memory from different sources: sensory stimuli or long-term memory. Neuroimage. 2007;38:617–630. doi: 10.1016/j.neuroimage.2007.06.037.

- Saxe R, Brett M, Kanwisher N. Divide and conquer: a defense of functional localizers. Neuroimage. 2006;30:1088–1096; discussion 1097–1099. doi: 10.1016/j.neuroimage.2005.12.062.

- Somers RH. A new asymmetric measure of association for ordinal variables. Am Sociol Rev. 1962;27:799–811.

- Spreng RN, Mar RA, Kim AS. The common neural basis of autobiographical memory, prospection, navigation, theory of mind and the default mode: a quantitative meta-analysis. J Cogn Neurosci. 2009;21:489–510. doi: 10.1162/jocn.2008.21029.

- Steeves JK, Culham JC, Duchaine BC, Pratesi CC, Valyear KF, Schindler I, Humphrey GK, Milner AD, Goodale MA. The fusiform face area is not sufficient for face recognition: evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia. 2006;44:594–609. doi: 10.1016/j.neuropsychologia.2005.06.013.

- Takahashi E, Ohki K, Kim DS. Diffusion tensor studies dissociated two fronto-temporal pathways in the human memory system. Neuroimage. 2007;34:827–838. doi: 10.1016/j.neuroimage.2006.10.009.

- Tanabe HC, Honda M, Sadato N. Functionally segregated neural substrates for arbitrary audiovisual paired-association learning. J Neurosci. 2005;25:6409–6418. doi: 10.1523/JNEUROSCI.0636-05.2005.

- Toothaker LE. Multiple comparison procedures. Newbury Park, CA: Sage UP; 1993.

- Tovee MJ, Rolls ET, Ramachandran VS. Rapid visual learning in neurones of the primate temporal visual cortex. Neuroreport. 1996;7:2757–2760. doi: 10.1097/00001756-199611040-00070.

- Tu Z, Chen X, Yuille A, Zhu S. Image parsing: unifying segmentation, detection and recognition. Int J Comput Vis. 2005;63:113–140.

- Wagner AD, Shannon BJ, Kahn I, Buckner RL. Parietal lobe contributions to episodic memory retrieval. Trends Cogn Sci. 2005;9:445–453. doi: 10.1016/j.tics.2005.07.001.