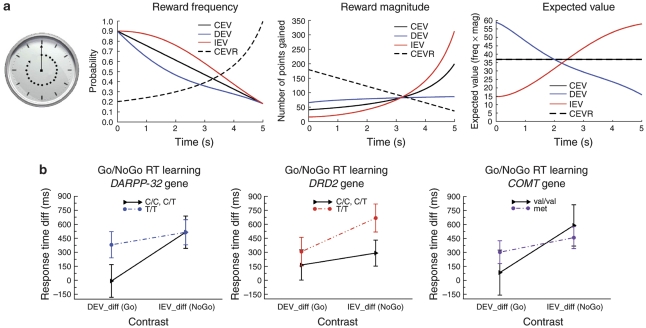

Figure 1.

Representation of the action selection task used by Frank et al. (2009). (a) Participants observed a clock arm that completed a revolution over 5 s and could stop the clock with a key press in an attempt to obtain rewards. Rewards were delivered with a probability and magnitude that varied as a function of response time (RT), so that expected value changed with RT. There were four conditions (one not shown here). In the decreasing expected value (DEV) condition, expected values declined with slower RTs, such that performance benefited from Go learning to produce quicker responses. In the increasing expected value (IEV) condition, expected values increased with slower RTs, such that performance benefited from No-Go learning to produce adaptively slower responses. Finally, in the control (CEV) condition, expected values remained constant as a function of RT. (b) Mean RTs (with standard error bars) in the IEV relative to the CEV condition for carriers of the T/T genotype compared with the C/C and C/T genotypes of the DARPP-32 and DRD2 genes, and for carriers of the met allele compared with the met/val and val/val genotypes of the COMT gene.