Abstract

Individuals make choices and prioritize goals using complex processes that assign value to rewards and associated stimuli. During Pavlovian learning, previously neutral stimuli that predict rewards can acquire motivational properties, whereby they themselves become attractive and desirable incentive stimuli. But individuals differ in whether a cue acts solely as a predictor that evokes a conditional response, or also serves as an incentive stimulus, and this determines the degree to which a cue might bias choice or even promote maladaptive behavior. Here we use rats that differ in the incentive motivational properties they attribute to food cues to probe the role of the neurotransmitter dopamine in stimulus-reward learning. We show that intact dopamine transmission is not required for all forms of learning in which reward cues become effective predictors. Rather, dopamine acts selectively in a form of reward learning in which “incentive salience” is assigned to reward cues. In individuals with a propensity for this form of learning, reward cues come to powerfully motivate and control behavior. This work provides insight into the neurobiology of a form of reward learning that confers increased susceptibility to disorders of impulse control.

Dopamine is central for reward-related processes1,2, but the exact nature of its role remains controversial. Phasic neurotransmission in the mesolimbic dopamine system is initially triggered by the receipt of reward (unconditional stimulus; US), but shifts to a cue predictive of reward (conditional stimulus; CS) after associative learning3,4. Dopamine responsiveness appears to encode discrepancies between rewards received and those predicted, consistent with a “prediction error” teaching signal used in formal models of reinforcement learning5,6. Therefore, a popular hypothesis is that dopamine is used to update the predictive value of stimuli during associative learning7. In contrast, others have argued that the role of dopamine in reward is in attributing Pavlovian incentive value to cues that signal reward, rendering them desirable in their own right8–10, and thereby increasing the pool of positive stimuli that have motivational control over behavior. To date, it has been difficult to determine whether dopamine mediates the predictive or the motivational properties of reward-associated cues, because these two features are often acquired together. However, the extent to which a predictor of reward acquires incentive value differs between individuals, providing the opportunity to parse the role of dopamine in stimulus-reward learning (for simplicity, referred to as ‘reward learning’ throughout the remainder of the manuscript).
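The "prediction error" account can be made concrete with a minimal sketch of the textbook Rescorla-Wagner update, in which the value predicted by the CS moves toward the reward received on each trial. This is an illustration of the class of formal models referred to above, not an analysis used in this study; the learning rate `alpha`, the reward magnitude and the function name are assumptions chosen for illustration.

```python
# Textbook Rescorla-Wagner update (illustrative sketch).
# alpha and reward are assumed values, not parameters from this study.

def rescorla_wagner(n_trials, alpha=0.2, reward=1.0):
    """Return a list of (cs_value, prediction_error) pairs, one per trial.

    cs_value is the reward predicted by the CS before the outcome of the trial;
    prediction_error is the reward received minus the reward predicted.
    """
    value = 0.0  # before training the CS predicts nothing
    history = []
    for _ in range(n_trials):
        delta = reward - value   # prediction error at US delivery
        history.append((value, delta))
        value += alpha * delta   # move the prediction toward the outcome
    return history


if __name__ == "__main__":
    for trial, (v, d) in enumerate(rescorla_wagner(10), start=1):
        print(f"trial {trial:2d}  predicted={v:.3f}  error={d:.3f}")
```

Under these assumptions the error at US delivery shrinks on every trial while the value carried by the CS grows, which is the pattern a prediction-error signal is expected to show as a reward becomes better predicted.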

Individual variation in behavioral responses to reward-associated stimuli can be seen using one of the simplest reward paradigms, Pavlovian conditioning. If a CS is presented immediately prior to US delivery at a separate location, some animals approach and engage the CS itself and only go to the location of food delivery upon CS termination. This conditioned response (CR), which is maintained by Pavlovian contingency11, is called “sign-tracking” because animals are attracted to the cue or “sign” that indicates impending reward delivery. However, other individuals do not approach the CS, but during its presentation engage the location of US delivery, even though the US is not available until CS termination. This CR is called “goal-tracking”12. The CS is an effective predictor in animals that learn either a sign-tracking or a goal-tracking response; it acts as an “excitor”, evoking a CR in both. However, only in sign-trackers is the CS an attractive incentive stimulus, and only in sign-trackers is it strongly desired (i.e. “wanted”), in the sense that animals will work avidly to get it13. In rats selectively-bred for differences in locomotor responses to a novel environment14, high responders to novelty (bHR) consistently learn a sign-tracking CR but low responders to novelty (bLR) consistently learn a goal-tracking CR15. Here, we exploit these predictable phenotypes in the selectively-bred rats, as well as normal variation in outbred rats, to probe the role of dopamine transmission in reward learning in individuals that vary in the incentive value they assign to reward cues.

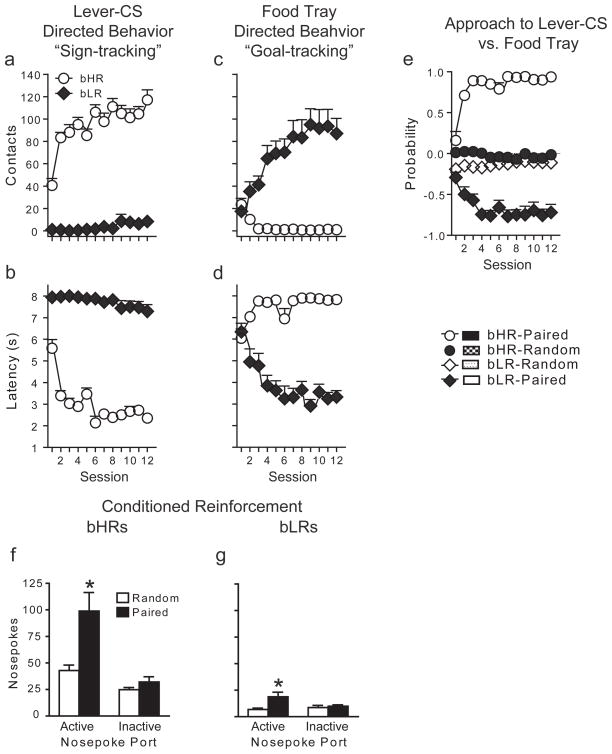

Rats from the S20 generation were used for behavioral analysis of Pavlovian conditioned approach behavior15 (Fig. 1a-e). When presentation of a lever-CS was paired with food delivery both bHRs and bLRs developed a Pavlovian CR, but as we have described previously15, the topography of the CR was different in the two groups. With training, bHRs came to rapidly approach and engage the lever-CS (Fig. 1a-b), whereas upon CS presentation bLRs came to rapidly approach and engage the location where food would be delivered (Fig. 1c-d; see detailed statistics in Supplementary Information). Both bHRs and bLRs acquired their respective CRs as a function of training, as there was a significant effect of session for all measures of sign-tracking behavior for bHRs (a-b; P≤0.0001), and of goal-tracking behavior for bLRs (c-d; P≤0.0001). Furthermore, bHRs and bLRs learned their respective CRs at the same rate, as indicated by analyses of variance in which session was treated as a continuous variable and the phenotypes were directly compared. There were non-significant phenotype x session interactions for: 1) the number of contacts with the lever-CS for bHRs vs. the food-tray for bLRs (F(1,236)=3.02, P=0.08) and 2) the latency to approach the lever-CS for bHRs vs. the food-tray for bLRs (F(1,236)=0.93, P=0.34). Importantly, rats that received non-contiguous (pseudorandom) presentations of the CS and the US did not learn either a sign-tracking or a goal-tracking CR (Fig. 1e).

Figure 1. Development of sign-tracking vs. goal-tracking CRs in bHR and bLR animals, respectively.

Behavior directed towards the lever-CS (sign-tracking) is shown in panels a-b and that directed towards the food-tray (goal-tracking behavior) in panels c-d (n=10/group). Mean + SEM (a) number of lever-CS contacts made during the 8-s CS period, (b) latency to the first lever-CS contact, (c) number of food-tray beam breaks during lever-CS presentation, (d) latency to the first beam break in the food-tray during lever-CS presentation. For all of these measures (a-d) there was a significant effect of phenotype, session, and a phenotype x session interaction (P≤0.0001). (e) Mean + SEM probability of approach to the lever minus the probability of approach to the food-tray. A score of zero indicates that neither approach to the lever-CS nor approach to the food-tray was dominant. (f, g) Test for conditioned reinforcement illustrated as the mean + SEM number of active and inactive nose pokes in bred rats that received either paired (bHR, n=10; bLR, n=9) or pseudorandom (bHR, n=9; bLR, n=9) CS-US presentations. Rats in the paired groups poked more in the active port relative to random groups of the same phenotype (*P<0.02), but the magnitude of this effect was greater for bHRs (phenotype x group interaction, P=0.04).
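The difference score in panel e can be sketched as follows. The function name, the argument names and the trial counts in the usage note are hypothetical; the number of trials per session is not stated in this section, so it is passed in as a parameter.

```python
def approach_bias(lever_trials, tray_trials, total_trials):
    """P(approach to lever-CS) minus P(approach to food-tray) for one session.

    lever_trials: trials with at least one lever-CS contact
    tray_trials:  trials with at least one food-tray entry during the CS
    total_trials: trials in the session (assumed input, not given in the text)

    Returns a score in [-1, 1]; 0 means neither response dominates.
    """
    if total_trials <= 0:
        raise ValueError("total_trials must be positive")
    for count in (lever_trials, tray_trials):
        if not 0 <= count <= total_trials:
            raise ValueError("trial counts must lie between 0 and total_trials")
    return lever_trials / total_trials - tray_trials / total_trials
```

With hypothetical counts, `approach_bias(20, 5, 25)` gives a positive score (lever approach dominant), `approach_bias(10, 10, 25)` gives zero, and `approach_bias(0, 25, 25)` gives -1 (food-tray approach on every trial).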

These data indicate that the CS acquired one defining property of an incentive stimulus in bHRs but not bLRs, the ability to attract. Another feature of an incentive stimulus is to be “wanted” and as such animals should work to obtain it10,16. Therefore, we quantified the ability of the lever-CS to serve as a conditioned reinforcer in the two groups (Figure 1f-g) in the absence of the food-US. Following Pavlovian training rats were given the opportunity to perform an instrumental response (nosepoke) for presentation of the lever-CS. Responses into a port designated “active” resulted in the brief presentation of the lever-CS and responses into an “inactive” port were without consequence. Both conditioned bHRs and bLRs made more active than inactive nose pokes, and more active nose pokes than control groups that received pseudorandom presentations of the CS and the US (Fig. 1f-g; detailed statistics in Supplementary Information). However, the lever-CS was a more effective conditioned reinforcer in bHR than bLR animals, as indicated by a significant phenotype x group interaction for active nose pokes (F(1,33)=4.82, P=0.04), which controls for “basal” differences in nosepoke responding. Moreover, in outbred rats where this baseline difference in responding does not exist, we have found similar results, indicating that the lever-CS is a more effective conditioned reinforcer for sign-trackers than goal-trackers13. In summary, the lever-CS was equally predictive, evoking a CR in both groups, but it acquired two properties of an incentive stimulus to a greater degree in bHRs than bLRs: it was more attractive, as indicated by approach behavior (Fig. 1a) and more desirable, as indicated by the ability to serve as a conditioned reinforcer (Fig. 1f).

The core of the nucleus accumbens is an important anatomical substrate for motivated behavior17,18 and has been specifically implicated as a site where dopamine acts to mediate the acquisition and/or performance of Pavlovian conditioned approach behavior19–22. Therefore, we used fast-scan cyclic voltammetry (FSCV) at carbon-fiber microelectrodes23 to characterize the pattern of phasic dopamine signaling in this region during Pavlovian conditioning (see Fig. S1 for recording locations). Similar to surgically naïve animals, bHRs learned a sign-tracking CR (session effect on lever contacts: F(5,20) = 5.76, P = 0.002) and bLRs a goal-tracking CR (session effect on food-receptacle contacts: F(5,20) = 5.18, P = 0.003) during neurochemical data collection (Fig. S2). Changes in latency during learning were very similar in each group for their respective CRs (main effect of session: F(5,40) = 10.5, P < 0.0001; main effect of phenotype: F(1,8) = 0.13, P = 0.73; session by phenotype interaction: F(5,40) = 1.16, P = 0.35), indicating that the CS acts as an equivalent predictor of reward in both groups. Therefore, if CS-evoked dopamine release encodes the strength of the reward prediction, as previously postulated 5–7, it should increase to a similar degree in both groups during learning; however, if it encodes the attribution of incentive value to the CS, then it should increase to a greater degree in sign-trackers than in goal-trackers.

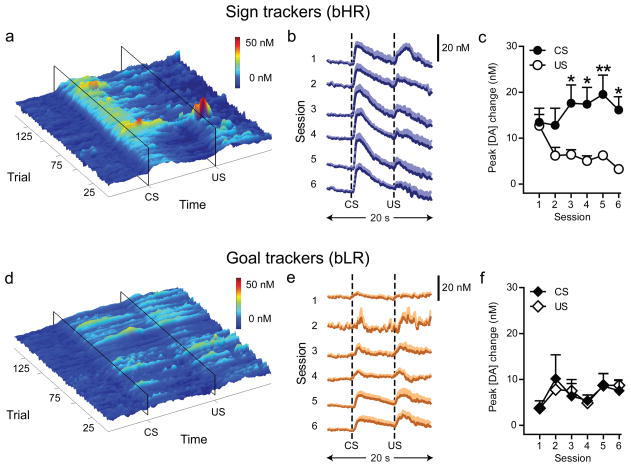

During the acquisition of conditioned approach, CS-evoked dopamine release (Fig. 2, Fig. S3) increased in bHR rats relative to unpaired controls (pairing x session interaction: F(5,35) = 4.58, P = 0.003), but there was no such effect in bLRs (Fig. 2, Fig. S3; pairing x session interaction: F(5,35) = 0.94, P = 0.46). Indeed, the trial-by-trial correlation between CS-evoked dopamine release and trial number was significant for bHR (r² = 0.14, P < 0.0001) but not bLR (r² = 0.003, P = 0.54) rats, producing significantly different slopes (P = 0.005) and higher CS-evoked dopamine release in bHR rats after acquisition (Fig. S4, session six; P = 0.04). US-evoked dopamine release also differed between bHR and bLR rats during training (session x phenotype interaction: F(5,40) = 6.09, P = 0.0003), but for this stimulus dopamine release was lower after acquisition in bHRs (session six; P = 0.002; Fig. S4). Collectively these data highlight that bHR and bLR rats produce fundamentally different patterns of dopamine release to reward-related stimuli during learning (see Videos S1-2 in Supplementary Information). The CS and US signals diverge in bHR rats (stimulus x session interaction: F(5,40) = 5.47, P = 0.0006; Fig. 2c) but not bLR rats (stimulus x session interaction: F(5,40) = 0.28, P = 0.92; Fig. 2f).
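The trial-by-trial relationship reported here is a linear regression of CS-evoked dopamine release on trial number. A minimal sketch of how a slope and coefficient of determination can be obtained is given below; it is an ordinary least-squares illustration and not the authors' analysis code, it does not test whether two slopes differ, and the function name and example values are hypothetical.

```python
def slope_and_r2(xs, ys):
    """Ordinary least-squares slope and r-squared for paired observations.

    xs: predictor values (e.g. trial numbers)
    ys: responses (e.g. peak CS-evoked signal on each trial)
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("predictor values must not all be identical")
    slope = sxy / sxx
    # With no variance in the response, nothing can be explained: report 0.
    r2 = 0.0 if syy == 0 else (sxy * sxy) / (sxx * syy)
    return slope, r2
```

For a response that rises linearly with trial number the function returns an r² of 1, and for a response that does not change across trials it returns a slope and an r² of 0; real recordings would fall between these extremes.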

Figure 2. Phasic dopamine signaling in response to CS and US presentation during the acquisition of Pavlovian conditioned approach behavior in bHR and bLR rats.

Phasic dopamine release was recorded in the core of the nucleus accumbens using FSCV across six days of training. (a, d) Representative surface plots depict trial-by-trial fluctuations in dopamine concentration during the twenty-second period around CS and US presentation in individual animals throughout training. (b, e) Mean + SEM change in dopamine concentration in response to CS and US presentation for each session of conditioning. (c, f) Mean + SEM change in peak amplitude of the dopamine signal observed in response to CS and US presentation for each session of conditioning (n=5/group; Bonferroni post-hoc comparison between CS- and US-evoked dopamine release: *P<0.05; **P<0.01). Panels a-c demonstrate that bHR animals, which developed a sign-tracking CR, show increasing phasic dopamine responses to CS presentation and decreasing responses to US presentation across the six sessions of training. In contrast, panels d-f demonstrate that bLR rats, which developed a goal-tracking CR, maintain phasic responses to US presentation throughout training.

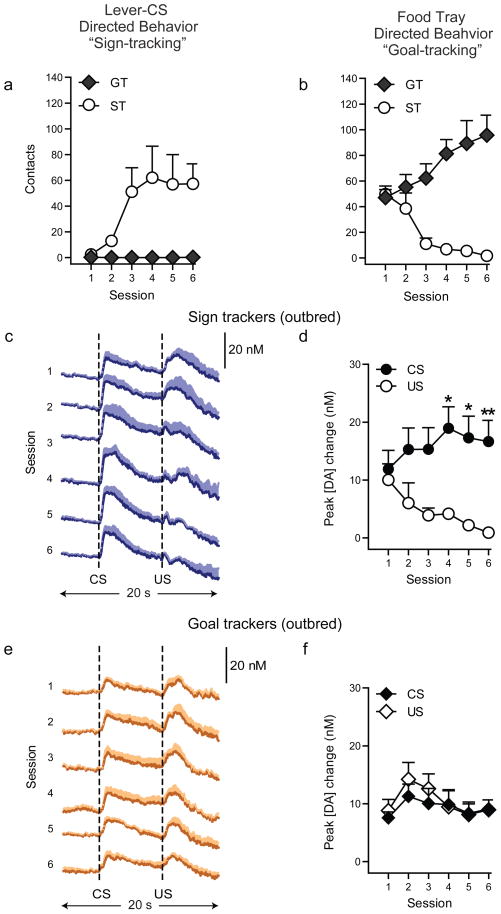

Importantly, experiments conducted in commercially-obtained outbred rats reproduced the pattern of dopamine release observed in the selectively-bred rats (Fig. 3, Fig. S4). Specifically, there was an increase in CS-evoked and a decrease in US-evoked dopamine release during learning in outbred rats that learned a sign-tracking CR (stimulus x session interaction: F(5,50) = 4.43, P = 0.002; Fig. 3d), but not in those that learned a goal-tracking CR (stimulus x session interaction: F(5,40) = 0.48, P = 0.72; Fig. 3f). To test the robustness of these patterns of dopamine release, a subset of outbred rats received extended training. During four additional sessions, the profound differences in dopamine release between sign- and goal-trackers were stable (Fig. S5), demonstrating that these differences are not limited to the initial stages of learning. The consistency of these dopamine patterns in selectively-bred and outbred rats indicates that they are neurochemical signatures for sign- and goal-trackers rather than an artifact of selective breeding.

Figure 3. Conditional responses and phasic dopamine signaling in response to CS and US presentation in outbred rats.

Phasic dopamine release was recorded in the core of the nucleus accumbens using FSCV across six days of training. (a) Behavior directed towards the lever-CS (sign-tracking) and (b) that directed towards the food-tray (goal-tracking behavior) during conditioning. Learning was evident in both groups as there was a significant effect of session for rats that learned a sign-tracking response (n=6; session effect on lever contacts: F(5,25) = 11.85, P = 0.0001) and for those that learned a goal-tracking response (n=5; session effect on food-receptacle contacts: F(5,20) = 3.09, P = 0.03). (c, e) Mean + SEM change in dopamine concentration in response to CS and US presentation for each session of conditioning. (d, f) Mean + SEM change in peak amplitude of the dopamine signal observed in response to CS and US presentation for each session of conditioning (Bonferroni post-hoc comparison between CS- and US-evoked dopamine release: *P<0.05; **P<0.01). Panels c-d demonstrate that animals developing a sign-tracking CR (n=6) show increasing phasic dopamine responses to CS presentation and decreasing responses to US presentation, consistent with bHR animals. Panels e-f demonstrate that animals developing a goal-tracking CR (n=5) maintain phasic responses to US presentation, consistent with bLR animals.

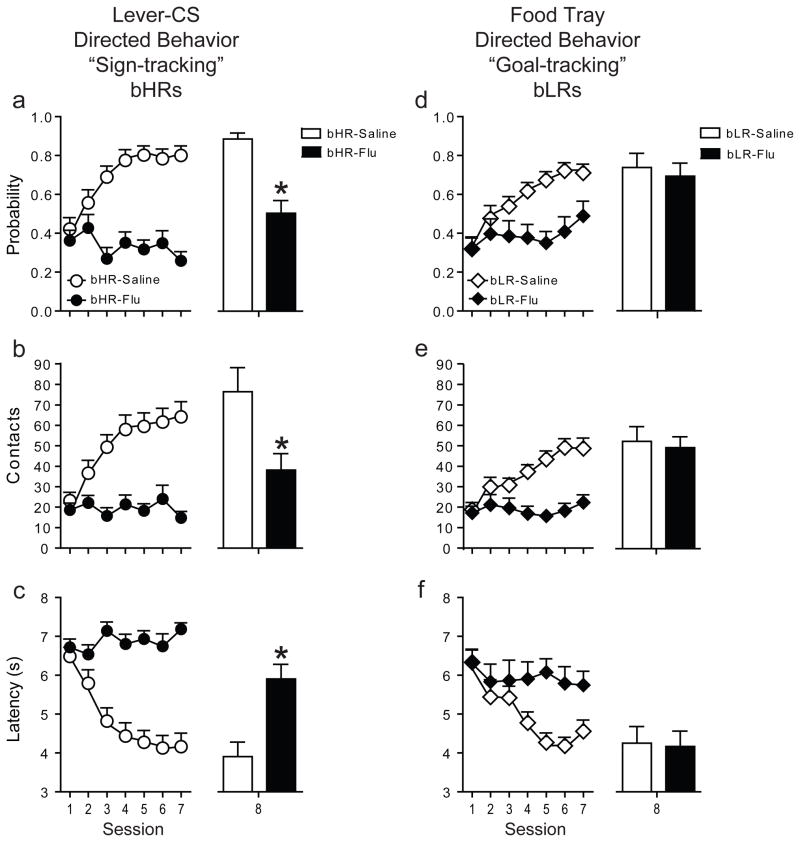

Given the disparate patterns of dopamine signaling observed during learning a sign- vs. goal-tracking CR, we tested whether the acquisition and performance of these CRs were differentially dependent on dopamine transmission. Systemic administration of flupenthixol, a nonspecific dopamine receptor antagonist, attenuated performance of the CR for both bHRs and bLRs. This effect was clearly evident when the antagonist was administered during training (Fig. 4, sessions 1–7). It was also observed after the rats had already acquired their respective CR (Fig. S6), but this latter finding needs to be interpreted cautiously because of a non-specific effect on activity (Fig. S6e). More importantly, when examined off flupenthixol during the 8th test session, bHRs still failed to demonstrate a sign-tracking CR (P≤0.01 vs. saline, session 8; Fig. 4a-c), indicating that dopamine is necessary for both the performance and the learning of a sign-tracking CR, consistent with previous findings20. In contrast, flupenthixol had no effect on learning the CS-US association that leads to a goal-tracking CR (P≥0.6 vs. saline, session 8; Fig. 4d-f), because on the drug-free session bLRs showed a fully developed goal-tracking CR: their session 8 performance differed significantly from their session 1 performance (P≤0.0002). Further, they differed from the bLR saline group on session 1 (P≤0.0001), but did not differ from the bLR saline group on session 8. Thus, while dopamine may be necessary for the performance of both sign-tracking and goal-tracking CRs, it is only necessary for acquisition of a sign-tracking CR, indicating that these forms of learning are mediated by distinct neural systems.

Figure 4. Dopamine is necessary for learning CS-US associations that lead to sign-tracking, but not goal-tracking.

The effects of flupenthixol are shown for: 1. Measures of sign-tracking: (a) probability to approach the lever-CS, (b) number of contacts with the lever-CS, (c) latency to contact the lever-CS. 2. Measures of goal-tracking: (d) probability to approach the food-tray during lever-CS presentation, (e) number of contacts with the food-tray during lever-CS presentation, (f) latency to contact the food-tray during lever-CS presentation. Data are expressed as mean + SEM. Flupenthixol (sessions 1–7) blocked the performance of both sign-tracking and goal-tracking CRs. To determine whether flupenthixol influenced performance or learning of a CR, behavior was examined following a saline injection on session 8 for all rats. bLR rats that were treated with flupenthixol prior to sessions 1–7 (bLR-Flu, n=16) responded similarly to the bLR saline (bLR-Saline, n=10) group on all measures of goal-tracking behavior on session 8, whereas bHRs treated with flupenthixol (bHR-Flu, n=22) differed significantly from the bHR saline (bHR-Saline, n=10) group on session 8 (*P<0.01, saline vs. flupenthixol). Thus, bLRs learned the CS-US association that produced a goal-tracking CR even though the drug prevented the expression of this behavior during training. Parenthetically, bHRs treated with flupenthixol did not develop a goal-tracking CR.

Collectively, these data provide several lines of evidence demonstrating that dopamine does not act as a universal teaching signal in reward learning, but selectively participates in a form of stimulus-reward learning whereby Pavlovian incentive value is attributed to a CS. First, US-evoked dopamine release in the nucleus accumbens decreased during training in sign-trackers, but not in goal-trackers. Thus, during the acquisition of a goal-tracking CR, there is not a dopamine-mediated “prediction-error” teaching signal since, by definition, prediction errors become smaller as delivered rewards become better predicted. Second, the CS evoked dopamine release in both sign- and goal-tracking rats, but this signal increased to a greater degree in sign-trackers, which attributed incentive salience to the CS. These data indicate that the strength of the CS-US association is reflected by dopamine release to the CS only in some forms of reward learning. Third, bHR rats that underwent Pavlovian training in the presence of a dopamine receptor antagonist did not acquire a sign-tracking CR, consistent with previous reports24; however, dopamine antagonism had no effect on learning a goal-tracking CR in bLR rats. Thus, learning a goal-tracking CR does not require intact dopamine transmission, whereas learning a sign-tracking CR does.

The attribution of incentive salience is the product of previous experience (i.e., learned associations) interacting with an individual’s genetic propensity and neurobiological state16,24–27. The selectively-bred rats used in this study have distinctive behavioral phenotypes, including greater behavioral disinhibition and reduced impulse control in bHRs15. Moreover, in these lines, unlike the case in outbred rats13,28, there is a strong correlation between locomotor response to novelty and the tendency to sign-track15. These behavioral phenotypes are accompanied by baseline differences in dopamine transmission, with bHRs showing elevated sensitivity to dopamine agonists, increased proportion of striatal D2 receptors in a high-affinity state, greater frequency of spontaneous dopamine transients15, and higher reward-related dopamine release prior to conditioning, all of which could enhance their attribution of incentive salience to reward cues29,30. However, basal differences in dopaminergic tone do not provide the full explanation for differences in learning styles and associated dopamine responsiveness. Outbred rats with similar baseline locomotor activity13 and similar baseline levels of reward-related dopamine release in the nucleus accumbens (see Fig. 3) differ in whether they are prone to learn a sign-tracking or goal-tracking CR, but they still develop patterns of dopamine release specific to that CR. Therefore, it appears that different mechanisms control basal dopamine neurotransmission versus the unique pattern of dopamine responsiveness to a reward cue.

The neural mechanisms underlying sign-tracking and goal-tracking behavior remain to be elucidated. Here we show that stimulus-reward associations that produce different CRs are mediated by different neural circuitry. Previous research using site-specific dopamine antagonism20 and dopamine-specific lesions21 indicated that dopamine acts in the nucleus accumbens core to support the learning and performance of sign-tracking behavior. The current work demonstrates that dopamine-encoded prediction-error signals are indeed present in the nucleus accumbens of sign-trackers, but not in the accumbens of goal-trackers. Although these neurochemical data alone do not rule out the possibility that prediction-error signals are present in other dopamine terminal regions, the results from systemic dopamine antagonism demonstrate that intact dopamine transmission is generally not required for learning of a goal-tracking CR.

In sum, we show that dopamine is an integral part of reward learning that is specifically associated with the attribution of incentive salience to reward cues. Individuals who attribute reward cues with incentive salience find it more difficult to resist such cues, a feature associated with reduced impulse control15,31. Human motivated behavior is subject to a wide span of individual differences ranging from highly deliberative to highly impulsive actions directed towards the acquisition of rewards32. The current work provides insight into the biological basis of these individual differences, and may provide an important step for understanding and treating impulse-control problems that are prevalent across several psychiatric disorders.

METHODS SUMMARY

The majority of these studies were conducted with adult male Sprague-Dawley rats from a selective-breeding colony which has been previously described14. The data presented here were obtained from bHR and bLR rats from generations S18–S22. Equipment and procedures for Pavlovian conditioning have been described in detail elsewhere 13,15. Selectively-bred rats from generations S18, S20 and S21 were transported from the University of Michigan (Ann Arbor, MI) to the University of Washington (Seattle, WA) for the FSCV experiments. During each behavior session, chronically implanted microsensors, placed in the core of the nucleus accumbens, were connected to a head-mounted voltammetric amplifier for detection of dopamine by FSCV23. Voltammetric scans were repeated every 100 ms to obtain a sampling rate of 10 Hz. Voltammetric analysis was carried out using software written in LabVIEW (National Instruments, Austin, TX). On completion of the FSCV experiments, recording sites were verified using standard histological procedures. To examine the effects of flupenthixol (Sigma, St. Louis, Missouri; dissolved in 0.9% NaCl) on the performance of sign-tracking and goal-tracking behavior, rats received an injection (i.p.) of 150, 300 or 600 μg/kg of the drug one hour prior to Pavlovian conditioning sessions 9, 11 and 13. Doses of the drug were counterbalanced between groups and interspersed with saline injections (i.p., 0.9% NaCl; prior to sessions 8, 10, 12 and 14) to prevent any cumulative drug effects. To examine the effects of flupenthixol on the acquisition of sign-tracking and goal-tracking behavior, rats received an injection of either saline (i.p.) or 225 μg/kg of the drug one hour prior to Pavlovian conditioning sessions 1–7.
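The relationship between scan interval and sampling rate stated above is simple arithmetic, sketched here together with the number of scans that fall in a given time window. The 100-ms interval comes from the Methods, and the 20-s and 8-s windows come from the legends of Fig. 2 and Fig. 1; the function and constant names are hypothetical.

```python
SCAN_INTERVAL_MS = 100  # one voltammetric scan every 100 ms (from the Methods)


def sampling_rate_hz(interval_ms):
    """Sampling rate in Hz for scans repeated every interval_ms milliseconds."""
    return 1000 / interval_ms


def scans_in_window(window_s, interval_ms):
    """Number of scans that fit in a window of window_s seconds."""
    return round(window_s * 1000 / interval_ms)


if __name__ == "__main__":
    print(sampling_rate_hz(SCAN_INTERVAL_MS))     # sampling rate in Hz
    print(scans_in_window(20, SCAN_INTERVAL_MS))  # scans in the 20-s window of Fig. 2
    print(scans_in_window(8, SCAN_INTERVAL_MS))   # scans in the 8-s CS period
```

At this rate each 20-s window around CS and US presentation corresponds to 200 scans, and the 8-s CS period to 80 scans.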

Detailed methods and any associated references are available in the online version of the paper at www.nature.com/nature.

Acknowledgments

This work was supported by National Institutes of Health Grants: R01-MH079292 (PEMP), T32-DA07278 (JJC), F32-DA24540 (JJC), R37-DA04294 (TER), and 5P01-DA021633-02 (TER and HA). The selective breeding colony was supported by a grant from the Office of Naval Research (ONR) to HA (N00014-02-1-0879). We would like to thank Drs. Kent Berridge and Jonathan Morrow for comments on earlier versions of the manuscript, and Dr. Scott Ng-Evans for technical support.

Footnotes

Supplementary Information contains detailed results, additional descriptive statistics, Figures S1-S6 and two supplemental videos. This material is linked to the online version of the paper.

Author contributions

S.B.F., J.J.C., T.E.R., P.E.M.P. and H.A. designed the experiments and wrote the manuscript. S.B.F., J.J.C., L.M., A.C., I.W., C.A.A. and S.M.C. conducted the experiments, and S.B.F. and J.J.C. analyzed the data.

Author Information

Reprints and permissions information is available at www.nature.com/reprints.

The authors declare no competing financial interests.

References

- 1. Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- 2. Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5(6):483–494. doi: 10.1038/nrn1406.

- 3. Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10(8):1020–1028. doi: 10.1038/nn1923.

- 4. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275(5306):1593–1599. doi: 10.1126/science.275.5306.1593.

- 5. Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16(5):1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- 6. Waelti P, Dickinson A, Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature. 2001;412(6842):43–48. doi: 10.1038/35083500.

- 7. Balleine BW, Daw ND, O’Doherty JP. Multiple forms of value learning and the function of dopamine. In: Glimcher PW, Camerer CF, Fehr E, Poldrack RA, editors. Neuroeconomics: Decision Making and the Brain. Academic Press; New York: 2008. pp. 367–389.

- 8. Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Brain Res Rev. 1998;28(3):309–369. doi: 10.1016/s0165-0173(98)00019-8.

- 9. Berridge KC, Robinson TE. Parsing reward. Trends Neurosci. 2003;26(9):507–513. doi: 10.1016/S0166-2236(03)00233-9.

- 10. Berridge KC, Robinson TE, Aldridge JW. Dissecting components of reward: ‘liking’, ‘wanting’, and learning. Curr Opin Pharmacol. 2009;9(1):65–73. doi: 10.1016/j.coph.2008.12.014.

- 11. Hearst E, Jenkins H. Sign-tracking: the stimulus-reinforcer relation and directed action. Monograph of the Psychonomic Society. Austin: 1974.

- 12. Boakes R. Performance on learning to associate a stimulus with positive reinforcement. In: Davis H, Hurwitz HMB, editors. Operant-Pavlovian Interactions. Erlbaum; Hillsdale, NJ: 1977. pp. 67–97.

- 13. Robinson TE, Flagel SB. Dissociating the predictive and incentive motivational properties of reward-related cues through the study of individual differences. Biol Psychiatry. 2009;65(10):869–873. doi: 10.1016/j.biopsych.2008.09.006.

- 14. Stead JD, et al. Selective breeding for divergence in novelty-seeking traits: heritability and enrichment in spontaneous anxiety-related behaviors. Behav Genet. 2006;36(5):697–712. doi: 10.1007/s10519-006-9058-7.

- 15. Flagel SB, et al. An animal model of genetic vulnerability to behavioral disinhibition and responsiveness to reward-related cues: implications for addiction. Neuropsychopharmacology. 2010;35(2):388–400. doi: 10.1038/npp.2009.142.

- 16. Berridge KC. Reward learning: reinforcement, incentives and expectations. In: Medin DL, editor. Psychology of learning and motivation. Academic Press; 2001. pp. 223–278.

- 17. Kelley AE. Functional specificity of ventral striatal compartments in appetitive behaviors. Ann N Y Acad Sci. 1999;877:71–90. doi: 10.1111/j.1749-6632.1999.tb09262.x.

- 18. Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26(3):321–352. doi: 10.1016/s0149-7634(02)00007-6.

- 19. Dalley JW, et al. Time-limited modulation of appetitive Pavlovian memory by D1 and NMDA receptors in the nucleus accumbens. Proc Natl Acad Sci U S A. 2005;102(17):6189–6194. doi: 10.1073/pnas.0502080102.

- 20. Di Ciano P, Cardinal RN, Cowell RA, Little SJ, Everitt BJ. Differential involvement of NMDA, AMPA/kainate, and dopamine receptors in the nucleus accumbens core in the acquisition and performance of pavlovian approach behavior. J Neurosci. 2001;21(23):9471–9477. doi: 10.1523/JNEUROSCI.21-23-09471.2001.

- 21. Parkinson JA, et al. Nucleus accumbens dopamine depletion impairs both acquisition and performance of appetitive Pavlovian approach behaviour: implications for mesoaccumbens dopamine function. Behav Brain Res. 2002;137(1–2):149–163. doi: 10.1016/s0166-4328(02)00291-7.

- 22. Parkinson JA, Olmstead MC, Burns LH, Robbins TW, Everitt BJ. Dissociation in effects of lesions of the nucleus accumbens core and shell on appetitive pavlovian approach behavior and the potentiation of conditioned reinforcement and locomotor activity by D-amphetamine. J Neurosci. 1999;19(6):2401–2411. doi: 10.1523/JNEUROSCI.19-06-02401.1999.

- 23. Clark JJ, et al. Chronic microsensors for longitudinal, subsecond dopamine detection in behaving animals. Nat Methods. 2010;7(2):126–129. doi: 10.1038/nmeth.1412.

- 24. Berridge KC. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology (Berl). 2007;191(3):391–431. doi: 10.1007/s00213-006-0578-x.

- 25. Robinson TE, Berridge KC. The neural basis of drug craving: an incentive-sensitization theory of addiction. Brain Res Rev. 1993;18(3):247–291. doi: 10.1016/0165-0173(93)90013-p.

- 26. Tindell AJ, Smith KS, Berridge KC, Aldridge JW. Dynamic computation of incentive salience: “wanting” what was never “liked”. J Neurosci. 2009;29(39):12220–12228. doi: 10.1523/JNEUROSCI.2499-09.2009.

- 27. Zhang J, Berridge KC, Tindell AJ, Smith KS, Aldridge JW. A neural computational model of incentive salience. PLoS Comput Biol. 2009;5(7):e1000437. doi: 10.1371/journal.pcbi.1000437.

- 28. Beckmann JS, Marusich JA, Gipson CD, Bardo MT. Novelty seeking, incentive salience and acquisition of cocaine self-administration in the rat. Behav Brain Res. 2010. doi: 10.1016/j.bbr.2010.07.022. In press.

- 29. Wyvell CL, Berridge KC. Intra-accumbens amphetamine increases the conditioned incentive salience of sucrose reward: enhancement of reward “wanting” without enhanced “liking” or response reinforcement. J Neurosci. 2000;20(21):8122–8130. doi: 10.1523/JNEUROSCI.20-21-08122.2000.

- 30. Wyvell CL, Berridge KC. Incentive sensitization by previous amphetamine exposure: increased cue-triggered “wanting” for sucrose reward. J Neurosci. 2001;21(19):7831–7840. doi: 10.1523/JNEUROSCI.21-19-07831.2001.

- 31. Tomie A, Aguado AS, Pohorecky LA, Benjamin D. Ethanol induces impulsive-like responding in a delay-of-reward operant choice procedure: impulsivity predicts autoshaping. Psychopharmacology (Berl). 1998;139(4):376–382. doi: 10.1007/s002130050728.

- 32. Kuo WJ, Sjostrom T, Chen YP, Wang YH, Huang CY. Intuition and deliberation: two systems for strategizing in the brain. Science. 2009;324(5926):519–522. doi: 10.1126/science.1165598.

- 33. Clinton SM, et al. Individual differences in novelty-seeking and emotional reactivity correlate with variation in maternal behavior. Horm Behav. 2007;51(5):655–664. doi: 10.1016/j.yhbeh.2007.03.009.

- 34. Verbeke G, Molenberghs G. Linear Mixed Models for Longitudinal Data. Springer; New York: 2000.

- 35. Heien ML, Johnson MA, Wightman RM. Resolving neurotransmitters detected by fast-scan cyclic voltammetry. Anal Chem. 2004;76(19):5697–5704. doi: 10.1021/ac0491509.
