Abstract

Background

Quality endoscopy reporting is essential when community endoscopists perform colonoscopies for veterans who cannot be scheduled at a Veterans Health Administration (VA) facility.

Objective

To examine the quality of colonoscopy reports received from community practices and to determine factors associated with more complete reporting, using national documentation guidelines.

Design

Cross-sectional analysis

Setting

Reports submitted to the Durham VA Medical Center, Durham, North Carolina, from 2007 to 2008.

Patients

Subjects who underwent fee-basis colonoscopy.

Main Outcome Measurements

Scores created by comparing community reports to published documentation guidelines. Three scores, one for each category of information: Universal Elements (found on all endoscopy reports), Indication Elements (specific to the procedure indication), and Finding Elements (specific to exam findings).

Results

For the 135 included reports, the summary scores were Universal Elements 57.6% [95% Confidence Interval (C.I.) 55%-60%], Indication Elements 73.7% (95% C.I. 69%-78%), and Finding Elements 75.8% (95% C.I. 73%-79%). Examples of poor reporting included patient history (20.7%), last colonoscopy date (18.0%), average versus high risk screening (32.0%), withdrawal time (5.9%), and cecal landmark photographs (45.2%). Only the use of automated reporting software was associated with more thorough reporting.

Limitations

Modest sample size, mostly male participants, frequent pathological findings, limited geography, and lack of complete reporting by a minority of providers.

Discussion

The overall completeness of colonoscopy reports was low, possibly reflecting a lack of knowledge of reporting guidelines or a lack of agreement regarding important colonoscopy reporting elements. Automated endoscopy software may improve reporting compliance but may not completely standardize reporting quality.

Keywords: endoscopy reporting, practice guidelines, quality

Increasingly, complete and transparent procedure documentation is considered a marker of a high-quality exam.1 The endoscopy report may be the only record of a colonoscopy. Assessment of report quality has been explored in research and clinical practice as a measurement of endoscopic performance.2, 3 Complete documentation is necessary for ongoing patient care by the referring physicians and for appropriate surveillance of neoplasia.

In 2002, Robertson and colleagues4 described endoscopy reporting quality compared to 1999 American Society for Gastrointestinal Endoscopy (ASGE) guidelines,5 and Lieberman et al3 recently published a similar analysis of automated colonoscopy reports submitted to the Clinical Outcomes Research Initiative (CORI) database. Robertson et al reported an overall completeness of 64% compared to ASGE standards, and Lieberman et al found deficits up to 66% in specific quality indicators. These studies may not be generalizable to the community setting because Robertson et al analyzed clinical trial data and Lieberman et al examined a system with mandatory reporting requirements.

Colonoscopy demands have increased in the VA, partly from mandates to provide screening colonoscopy and to complete a colonoscopy for a positive fecal occult blood test within 60 days. To provide timely colonoscopies, while increasing local capacity, some VA medical centers pay for colonoscopies to be performed outside the VA as part of the fee-basis program. After the colonoscopy, VA physicians assume responsibility for the patient’s care. Therefore, the VA physicians must be informed of colonoscopy findings and pathology results, and a thorough endoscopy report is essential.

Our primary goals were to measure the quality of fee-basis colonoscopy reports submitted to the VA, to provide benchmarking data on the current level of endoscopy reporting quality, to expose poorly reported elements that may indicate disagreement between community providers and the creators of the national reporting standard, and to examine predictors of more complete reporting.4

Methods

Subjects/Data Source

Patients at the Durham VA Medical Center who were contacted by the VA between May 21, 2007 and May 19, 2008 and underwent a fee-basis colonoscopy were included. Patients were mailed a fee-basis packet with instructions on the fee-basis process and information to give the non-VA endoscopist. Physicians were instructed to fax the completed endoscopy report and any pathology reports to the VA, thus confirming the procedure had been completed and providing VA physicians with the procedure results. The community endoscopists performed colonoscopies in accordance with their site-specific policies and documentation practices. As the colonoscopy report may be the only document forwarded to referring physicians, information from clinic consultations or nursing documentation was not included. Reimbursement from the VA was not linked to the extent or quality of this documentation. We analyzed reports received through February 20, 2009.

Fee-basis colonoscopy reports were scanned into the VA Computerized Patient Record System (CPRS) as they were received, and the scan date was documented. CPRS is the VA medical record and is accessible by all VA providers. Colonoscopy reports and general internet searches were used to obtain information regarding individual practices and practitioners.

Colonoscopy reporting standard

We defined, a priori, a colonoscopy documentation standard to be the comparison for analysis. This standard was based on the 2006 Quality Assurance Task Group of the National Colorectal Cancer Roundtable (NCCRT) standardized colonoscopy reporting and data system, CO-RADS, which contains over 190 informational elements.6 As the community endoscopists were not responsible for monitoring pathology results, data regarding pathology reporting and interpretation were not included in the documentation standard. The fee-based endoscopy reports were then compared to the documentation standard. A numerical score was created by adding the number of elements included in each community report and dividing this number by the total number of elements in the documentation standard report.
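The score described above is a simple proportion: elements present in a report divided by elements in the documentation standard. A minimal Python sketch follows; the function name and the four-element standard are hypothetical and serve only to illustrate the arithmetic, not to reproduce the study's abstraction tool.

```python
def completeness_score(reported: set[str], standard: set[str]) -> float:
    """Return the fraction of the standard's elements found in a report.

    Elements in the report that are not part of the standard are ignored.
    """
    if not standard:
        raise ValueError("the documentation standard must list at least one element")
    return len(reported & standard) / len(standard)


# Hypothetical four-element standard, for illustration only.
standard = {"endoscopist", "indication", "withdrawal_time", "bowel_prep_quality"}
# A hypothetical report documenting three of the four elements plus an extra note.
reported = {"endoscopist", "indication", "bowel_prep_quality", "free_text_note"}

score = completeness_score(reported, standard)
print(f"{score:.0%}")
```

Using set intersection means that extra, non-standard content in a report neither raises nor lowers its score.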

Data elements were categorized as: 1) Universal Elements, 2) Indication Elements, or 3) Finding Elements. Universal Elements are items to be included in all endoscopy reports, such as patient demographics. Indication Elements are specific to the reason that the colonoscopy was performed. Some elements are not relevant to all indications, and the physician performing the procedure may be required to provide more or less information depending upon the specific indication. Finding Elements are similar to Indication Elements in that the number of elements needed to adequately describe a certain finding is specific to that finding [Appendix Outline A].

Summary Score Algorithms

Each report was assigned three summary scores, one score for each data element category. Each summary score was based on the proportion of elements included in the report as compared to the documentation standard. Thirty-eight data elements were included in the Universal Elements summary score. To assign summary scores for Finding Elements and Indication Elements, a scoring algorithm was created for each condition to define the total number of necessary data elements. [Delineated in Appendix Outline A.]
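Because the denominator for a Finding Elements score depends on the finding, the scoring can be pictured as a lookup of required elements followed by a proportion. The sketch below takes the element lists for five findings from Appendix Outline A; the identifiers and the example report are hypothetical, and scoring one finding at a time is an assumption, since the text does not say how reports with several findings were pooled.

```python
# Required data elements per finding, transcribed from Appendix Outline A.
FINDING_ELEMENTS = {
    "mass": ["location", "size", "morphology", "biopsy_taken",
             "biopsy_method", "retrieved", "sent_to_pathology"],
    "polyp": ["location", "size", "morphology", "attempted_removal",
              "removal_method", "complete_removal", "complete_retrieval",
              "sent_to_pathology"],
    "polyp_cluster": ["number_in_cluster", "location", "size_range", "morphology",
                      "attempted_removal", "removal_method", "complete_removal",
                      "complete_retrieval", "sent_to_pathology"],
    "submucosal_lesion": ["location", "size", "biopsy_taken",
                          "complete_retrieval_of_biopsy", "complete_removal",
                          "sent_to_pathology"],
    "mucosal_abnormality": ["suspected_diagnosis", "biopsy_taken",
                            "sent_to_pathology"],
}


def finding_score(finding: str, documented: set[str]) -> float:
    """Proportion of the required elements for one finding that were documented."""
    required = FINDING_ELEMENTS[finding]
    present = sum(1 for element in required if element in documented)
    return present / len(required)


# Hypothetical report: a polyp described by four of its eight required elements.
example = finding_score(
    "polyp", {"location", "size", "removal_method", "sent_to_pathology"}
)
print(example)
```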

Data collection and statistical analysis

All data were abstracted by L.P. A 10% random sample was also coded by N.H. to verify accuracy. Agreement between the original and verification abstractions was greater than 99%. We calculated the means, medians, ranges, percentages, and confidence intervals for the study sample. Six characteristics were pre-determined for regression model inclusion: use of automated software, ambulatory surgery center setting, years since medical school graduation (endoscopist), number of physicians in endoscopy practice, presence of potentially neoplastic findings [findings other than diverticula, hemorrhoids, or arteriovenous malformations], and indication other than screening. Automated reporting software included software packages specifically designed to generate endoscopy reports and excluded type-written reports of dictated exams. The largest practice had 29 partners (an academic medical center with many affiliates at local hospitals), but the next-largest practice had 9 partners. Therefore, we categorized practice size as: small (1-2 physicians), mid-sized (3-6 physicians), and large (≥7 physicians). Bivariate comparisons between these six variables and the outcome of interest, Universal Elements score, were made using simple linear regression for continuous variables and Student’s t-test for categorical variables.
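The practice-size categorization stated above can be written as a small function. This is only a sketch of the stated cut points (1-2, 3-6, and 7 or more physicians); the function name is hypothetical and it is not the authors' SAS or Stata code.

```python
def practice_size_category(n_physicians: int) -> str:
    """Map a physician count to the study's three practice-size categories."""
    if n_physicians < 1:
        raise ValueError("a practice must have at least one physician")
    if n_physicians <= 2:
        return "small"
    if n_physicians <= 6:
        return "mid-sized"
    return "large"


# The practice sizes observed in the study, per the Results section.
observed_sizes = [1, 2, 3, 5, 7, 9, 29]
categories = {n: practice_size_category(n) for n in observed_sizes}
print(categories)
```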

Multivariable linear regression was used to examine associations between the Universal Elements score and the six a-priori defined variables, and included calculating the model R-squared, residual-by-predicted plots, residual quantile-quantile (Q-Q) plots, and standardized normal probability (P-P) plots to test the aptness of model fit. Microsoft© Excel was used for data abstraction, SAS statistical software (SAS Institute, Cary, North Carolina) was used to calculate summary scores, and Stata statistical software (Stata SE Ver 9.0, College Station, Texas) was used for all statistical analysis and modeling.
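To make the modeling step concrete, the sketch below fits an ordinary least squares model and computes R-squared in plain Python. It is an illustration only: the study used Stata, the data here are synthetic and noise-free (generated from coefficients chosen for the example), and only two of the six predictors are included.

```python
def ols(X, y):
    """Solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination.

    X is a list of rows; each row must already include a leading 1.0
    for the intercept.
    """
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Partial pivoting for numerical stability.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("design matrix is rank deficient")
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def r_squared(X, y, beta):
    """Coefficient of determination for fitted coefficients beta."""
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot


# Synthetic data: (automated software 0/1, years since graduation in 5-year units).
rows = [(0, 2), (1, 2), (0, 4), (1, 5), (0, 6), (1, 3)]
X = [[1.0, float(a), float(g)] for a, g in rows]
# Scores generated exactly from made-up coefficients, so the fit recovers them.
y = [50.0 + 12.0 * a - 0.5 * g for a, g in rows]

beta = ols(X, y)
fit = r_squared(X, y, beta)
print([round(b, 3) for b in beta], round(fit, 3))
```

With real, noisy data the coefficients would carry standard errors and the residual plots named above would be needed to judge model fit; a statistical package is the appropriate tool for that.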

Research approval and support

The Durham VA Medical Center Institutional Review Board approved this study.

Results

One hundred thirty-five colonoscopy reports were included. The patients were 93.3% male and 60.7% Caucasian [Table 1]. Fifty-five percent of exams were for screening. Forty-nine physicians in 35 separate practices performed the procedures. Only 3 physicians representing 4 reports (3% of the total sample) were associated with an academic medical center. Fifty-nine percent of the colonoscopies were performed by physicians in solo or two-person practices. Table 2 lists characteristics of the physicians and practices.

Table 1.

Patient Characteristics

| | n* (total = 135) | Percent (%) |
|---|---|---|
| Age | 62† (S.D.# 8) | (30-86)§ |
| Male Sex | 126 | (93.3) |
| Race/Ethnicity | | |
| African American | 44 | (32.6) |
| Caucasian | 82 | (60.7) |
| Other | 4 | (3.0) |
| Unknown/Not listed | 5 | (3.7) |
| Indication for Exam | | |
| Screening | 75 | (55.1) |
| Surveillance | 29 | (21.3) |
| Abnormal Test Result | 9 | (6.6) |
| Symptoms | 19 | (14.0) |
| Unknown | 4 | (3.0) |

*n = number; †Age in years; #S.D. = standard deviation; §range in years

Table 2.

Provider Characteristics

| | n* | Percent (%) |
|---|---|---|
| Practitioners | 49 | − |
| Practices | 35 | − |
| Practice Size | 3.4† (S.D. 5) | (1-29)§ |
| Years from Medical School Graduation | 22‡ (S.D. 7.8) | (7-48)§ |
| Board Certified in Gastroenterology | 46 | (93.9) |
| Use of Automated Software | 90** | (66.4) |

*n = number; †mean number of physicians; S.D. = standard deviation; §range; ‡mean time in years; **based on the total number of endoscopy reports (n=135)

The summary scores were Universal Elements 57.6% [95% Confidence Interval (C.I.) 55%-60%], Indication Elements 73.7% (95% C.I. 69%-78%), and Finding Elements 75.8% (95% C.I. 73%-79%). Table 3 summarizes each element in the Universal Elements score and the Robertson et al results for comparison.4 Though initially included, information regarding patient anticoagulation, prophylactic antibiotics, and intra-cardiac devices was so inconsistently recorded that these items were deleted from the Universal Elements score. Including only equivalent data elements results in a score of 60.4% for the Robertson et al reports compared to 64.3% for this study. (“Comparison Scores”, Table 3)
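The restriction to equivalent data elements can be sketched as follows. The example assumes that a comparison score is the unweighted mean of per-element reporting percentages over the elements measured in both studies; when every report is scored against the same element list, this equals the mean of the per-report scores. Only four rows of Table 3 are used, so the output does not reproduce the published Comparison Scores, and the function name is hypothetical.

```python
from typing import Optional

# (element, 2008 %, 2002 %) for a small subset of Table 3 rows.
# None marks an element that was not measured in 2002.
SUBSET = [
    ("Endoscopist", 100.0, 98.4),
    ("Informed consent", 85.2, 32.0),
    ("Level of sedation", 40.0, None),
    ("Age", 88.2, 48.4),
]


def comparison_scores(
    rows: list[tuple[str, Optional[float], Optional[float]]]
) -> tuple[float, float]:
    """Mean reporting percentage per study over elements present in both."""
    paired = [(a, b) for _, a, b in rows if a is not None and b is not None]
    if not paired:
        raise ValueError("no elements were measured in both studies")
    mean_2008 = sum(a for a, _ in paired) / len(paired)
    mean_2002 = sum(b for _, b in paired) / len(paired)
    return mean_2008, mean_2002


score_2008, score_2002 = comparison_scores(SUBSET)
print(round(score_2008, 1), round(score_2002, 1))
```

Dropping unpaired elements before averaging keeps the two studies on the same denominator, which is the point of the comparison.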

Table 3.

Universal Elements with comparison to 2002 benchmarks*

| Data Element | 2008 n=135 % | 2002 n=122 % | Data Element | 2008 n=135 % | 2002 n=122 % |
|---|---|---|---|---|---|
| Endoscopist | 100 | 98.4 | Level of sedation | 40.0 | − |
| Assistant | 14.8 | 8.2 | Extent of exam | 98.5 | 91.0 |
| Informed consent | 85.2 | 32.0 | Cecum Reached | 98.5 | − |
| Facility | 93.3 | -† | Cecal landmarks documented | 78.5 | − |
| Name | 100 | 97.5 | Method of cecal documentation (images) | 45.2 | − |
| Age | 88.2 | 48.4 | Total duration of exam | 8.9 | − |
| Sex | 38.5 | 95.1 | Withdrawal time | 5.9 | − |
| Race | 10.4 | 85.2 | Retroflexion in rectum | 65.2 | − |
| Patient History# | 20.7 | 50.0 | Type of bowel prep | 3.7 | 6.6 |
| Physical Exam# | 38.5 | 21.3 | Quality of bowel prep§ | 75.6 | 29.5 |
| Digital Rectal Exam | 65.9 | − | Prep was adequate to detect polyps <10 mm§ | 14.8 | 17.2 |
| ASA classification‡ | 26.7 | − | Difficult exam | 60.7 | − |
| Indication for procedure | 97.8 | 89.3 | Patient discomfort | 64.4 | − |
| Date of last colonoscopy** | 5.19 | − | Type of instrument used | 62.2 | 52.4 |
| Technical description of procedure†† | 97.8 | − | Assessment | 97.0 | 82.0 |
| Procedure date | 99.3 | 98.3 | Complications | 68.2 | 58.2 |
| Procedure time of day | 34.8 | − | Follow-up & discharge plan | 87.7 | 68.9 |
| Sedation | 94.8 | − | Documentation of communication with patient | 15.6 | − |
| Medications (with dosages) given | 85.2 | 72.9 | | | |
| Sedation provider | 5.9 | − | | | |
| Comparison Score ## | 64.3 | 60.4 | | | |
| Universal Elements Score | 62.3 | | | | |

*Robertson et al, citation 4;

†not calculated in 2002;

#Includes all reports that indicated review of the patient’s history and performance of a physical exam prior to the procedure. Does not include information from nursing notes or consultation visits.

§Prep quality and adequacy to detect polyps <10 mm are separate elements in the NCCRT report.

‡Any accepted risk classification strategy or documentation of risk was included.

**Also includes documentation that this is the patient’s first colonoscopy, if applicable.

††Distinguishes summary letters from complete endoscopy reports.

##Calculated using only those elements measured in both the 2002 and 2008 reports.

Compared to 2002, a higher proportion of physicians commented on informed consent (85.2% versus 32.0%), patient age (88.2% versus 48.4%), and quality of the bowel preparation (75.6% versus 29.5%). In contrast, the reporting of some elements declined, including patient gender (38.5% from 95.1%), race (10.4% from 85.2%), and history (20.7% from 50.0%). Persistently lacking documentation was noted for the pre-procedure physical exam, type of bowel preparation, and adequacy of preparation to detect polyps <10 millimeters.

Additional elements, not recorded in the Robertson study, were infrequently documented, including American Society of Anesthesiologists (ASA) classification (or equivalent risk stratification, 26.7%) and sedation level (40.0%). Exam timing was uncommonly reported: 34.8% recorded the time of day, 8.9% listed the total exam duration, and 5.9% reported withdrawal time. Only 15.6% of practitioners documented communicating the procedure findings to the patient.

Analysis of the Indication Elements score also revealed deficits in reporting. Of the 79 screening exams, only 32% reported whether the exams were average or high risk. Only 18% of exams performed for surveillance included the date of the last colonoscopy. Though 94% of surveillance exams included the most significant lesion found during the prior exam, 84% of these reports listed the lesion as “polyps” without specifying type, size, or location.

In general, reporting rates were higher for describing findings than for Universal Elements. Seventy-five percent reported polyp morphology, 99% reported polyp location and removal technique, and 87% reported sending pathology specimens. However, only 40% and 48% reported complete removal and retrieval of polyps, respectively.

In the multivariable linear regression model, only the use of automated software was associated with more complete documentation, increasing the Universal Elements score by 12% over reports not generated by automated software (95% C.I. 8%-15%). That is, the predicted Universal Elements score was 63% (95% C.I. 61%-65%) for reports using automated software and 51% (95% C.I. 48%-54%) for reports not using automated software. The model R-squared was 0.319. Residual-by-predicted plots, residual quantile-quantile (Q-Q) plots and standardized normal probability (P-P) plots showed adequate approximation to normality.

We performed a similar analysis exploring the predictors of more complete documentation for the Finding Elements. Only the subgroup of reports with polyp or polyp cluster findings (n=83) was large enough to support this analysis. Automated software was associated with more complete documentation, increasing the Finding Elements score by 8% (95% C.I. 1%-15%). Reports from medium-sized practices (3-5 persons) were also more complete than those from 1-2 person practices, by 8% (95% C.I. 1%-15%). There was no difference between reporting in large practices compared to small practices (1% increase in completeness, 95% C.I. −13% to +14%). Secondary regression analyses using the practice sizes (1, 2, 3, 5, 7, 9 and 29 persons) as individual indicator variables (as opposed to the small, medium, large categorization) showed significant results for only the 3-person practice size.

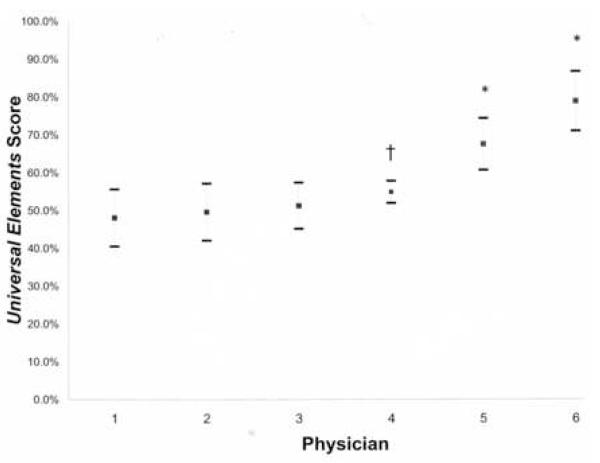

In an exploratory analysis, we investigated the variability of reporting for the subset of physicians using automated software. We graphed the mean Universal Elements score ± 2 standard deviations (S.D.) for the 6 physicians who performed 5 or more colonoscopy exams (41% of the reports), denoting whether they used automated software [Figure 1]. The mean scores were better in the group using automated software (indicated by asterisks). The variability (S.D.) was similar in both groups, save physician 4 (S.D. 2.9%). Physician 4 submitted the fewest reports (5) in this group with a mix of automated-software-generated and dictated reports.

Figure 1.

Graph of Universal Elements score ± 2 standard deviations (whiskers) for physicians submitting 5 or more reports. Asterisk indicates physicians using automated reporting. Dagger indicates physician using non-automated and automated reporting.

Discussion

Despite published recommendations, there has been minimal change in colonoscopy documentation over the last six years, and the observed differences in individual reporting items may reflect changing documentation styles, not changes in the quality of documentation. Either effective strategies have not been widely implemented, or providers may not agree upon what constitutes a complete endoscopy report. Physician preference and disagreement regarding best practices have been raised as one explanation for variability in colorectal cancer surveillance rates,7, 8 and they may play a role in endoscopy reporting as well.

Both the American College of Gastroenterology-American Society for Gastrointestinal Endoscopy (ACG-ASGE) joint taskforce9, 10 and the Quality Assurance Task Group of the NCCRT6 have proposed quality indicators for endoscopy that can usually be extracted directly from endoscopy reports or procedural notes. The variability seen in this and other analyses3, 4 suggests that physicians should change their documentation practices to ensure that their procedure reports consistently provide all data needed for ongoing patient care. Routinely absent elements in our study, such as prior colonoscopy details, bowel preparation quality, exam timing, and documentation of cecal landmarks, have been associated with neoplasia risk and detection.2, 11-13 Also, few providers documented that they communicated colonoscopy findings to patients, which could impact patient satisfaction and overall quality of care. While it is possible that these processes were performed, inconsistent or absent documentation presents a problem for quality monitoring.

Several recommended indicators rely on documenting details of patient history and prior endoscopies that may be difficult to obtain, especially with open-access endoscopy. The dates and findings from prior procedures may only be available from the patient. While patients with a personal or family history of colorectal cancer are usually able to recall the dates of prior procedures,14-16 other patients may have less accurate recall.15, 17, 18 Similarly, patients may report a history of “polyps”;19, 20 however, they rarely differentiate between polyp types.19 Our finding that 84% of exams performed for surveillance listed only “polyps” as the indication also suggests that prior histology was unknown. In addition, the appropriateness of the indication and colonoscopy interval are recommended quality indicators, but in routine practice these are often determined by the referring physician. These issues suggest that guidelines must address whether the colonoscopy report is the best data source for measuring quality indicators that rely upon accurate input from individuals other than the endoscopist. Nonetheless, we chose to use the published guidelines as our comparison because they were developed through a consensus and peer review process and have been endorsed by gastroenterology professional societies. Alternatively, we could have developed our own list of quality markers; however, these could have reflected our biases and would have limited comparison to other studies using the published national standard.

An intriguing issue is the role that automated software plays in endoscopy reporting. Automated software is a documentation tool with face validity, but whether it can standardize reporting behavior and improve the amount and type of information delivered to referring physicians is unknown. We found that use of automated software was associated with a significant increase in the completeness of endoscopic reporting but potentially not with reduced variability of reporting. This is similar to the findings of Lieberman et al,3 who examined reporting quality in the automated CORI reporting system, where quality indicators are built into the standardized report. The quality of the CORI automated endoscopy reports was better for all items than was seen in this analysis; however, they also demonstrated imperfect compliance with quality indicators. This may indicate that automated software has the potential to improve quality, but this intervention alone will not completely standardize endoscopy reporting.

In the analysis of predictors of Finding Elements, we found that 3-person practices produced more complete reports than 1-person practices, but no significant results were seen in the other practice sizes. Though there was a trend to more complete reporting in medium-sized practices (compared to both small and large practices), the small number of observations in each category leads us to question whether this is a true association.

Limitations of our study include the modest sample size, predominance of male participants, frequent pathological findings, limited geographical region, and receipt of summary letters rather than full endoscopy reports for some subjects. A larger sample would facilitate generalizability and enhance our ability to identify characteristics associated with reporting quality. We cannot generalize our findings to women, though there is no evidence of gender-based differences in reporting quality. Geographic variation in healthcare utilization and quality is common.21-23 Therefore, other regions might have different documentation patterns from those we noted.

These limitations should not diminish our contribution to identifying and understanding documentation issues in current community gastroenterology practice. Our data represented the routine practices of 49 endoscopists in 35 groups and the patients were community-dwelling adults. Quality assessment will affect all practice settings; therefore, knowledge of current practices is important in setting realistic benchmarks. The NCCRT’s specific goals in creating a documentation standard were “to produce a tool that will provide endoscopists with a quality improvement instrument and to provide referring physicians a colonoscopy report that will use standard terms and provide follow-up recommendations.”6 Though the NCCRT included “experts in gastroenterology, diagnostic radiology, primary care, and health care delivery”, our findings suggest a lack of agreement between what is routinely recorded at colonoscopy and what leaders recommend recording. Consensus regarding what the appropriate quality indicators should be will ensure a fair accounting of the procedure and may encourage broader adoption of quality improvement efforts.

Table 4.

Colonoscopy Findings

| Findings | n | (%)* |
|---|---|---|
| Mass | 1 | (0.7) |
| Polyp | 79 | (58.5) |
| Polyp Cluster | 4 | (3.0) |
| Mucosal Abnormality | 6 | (4.4) |
| Diverticula | 58 | (43.0) |
| Hemorrhoids | 67 | (49.6) |
| AVM’s | 0 | (0.0) |

*more than 1 finding per colonoscopy was possible

Table 5.

Factors associated with more complete reporting

| | Change in Universal Elements Score (%)* | 95% Confidence Interval |
|---|---|---|
| Use of Automated Software | 11.7 | 8.0, 15.5 |
| Years from Graduation† | −0.4 | −1.4, 0.6 |
| Practice size | | |
| Small Practices (1-2 physicians)# | − | − |
| Mid-Sized Practices (3-6 physicians) | 0.01 | −3.8, 3.9 |
| Large Practices (7 or more physicians) | −2.0 | −8.9, 4.7 |
| Procedure performed in ASC | 0.4 | −2.9, 3.8 |
| Potentially Neoplastic findings | 2.7 | −0.7, 6.1 |
| Indication other than Screening | 0.4 | −2.9, 3.6 |

*beta coefficients from linear regression expressed as percents; †In five-year increments; #Small practices (1-2 physicians) were considered the referent group

Acknowledgement

Dr. Palmer was supported in part by a T32 National Institutes of Health (NIH) Training Grant 5-T32 DK007634-19. Dr. Fisher was supported in part by a VA Health Services Research and Development Career Development Transition Award (CDA 03-174). Dr. Provenzale was supported in part by a NIH K24 grant 5 K24 DK002926. The views expressed in this manuscript are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs.

Appendix A Outline: Indication Elements and Finding Elements scoring algorithm

- Indication Elements
  - Screening
    - Average Risk (2 data elements needed for completeness)
      - Screening
      - Average Risk
    - High Risk (4 data elements needed)
      - Family history of neoplasia
      - Degree of relative
      - Age of relative at diagnosis
      - Number of affected family members
  - Abnormal Test Result (2 data elements)
    - Abnormal Test Result
    - Type of test
  - Symptoms (2 data elements)
    - Symptoms
    - Type of symptoms
  - Surveillance (3-4 data elements)
    - Surveillance
    - Date of Last colonoscopy
    - Most significant prior lesion
      - Type of lesion
      - Location if prior carcinoma
- Finding Elements
  - Mass (7 data elements)
    - Location
    - Size
    - Morphology
    - Biopsy taken
    - Biopsy method
    - Retrieved
    - Sent to pathology
  - Polyp (8 data elements)
    - Location
    - Size
    - Morphology
    - Attempted removal
    - Removal method
    - Complete removal
    - Complete retrieval
    - Sent to pathology
  - Polyp Cluster (9 data elements)
    - Number in cluster
    - Location
    - Size range in cluster
    - Morphology
    - Attempted removal
    - Removal method
    - Complete removal
    - Complete retrieval
    - Sent to pathology
  - Submucosal Lesion (6 data elements)
    - Location
    - Size
    - Biopsy taken
    - Complete retrieval of biopsy
    - Complete removal
    - Sent to pathology
  - Mucosal Abnormality (3 data elements)
    - Suspected diagnosis
    - Biopsy taken
    - Sent to pathology
  - Normal mucosa in patient with diarrhea (1 data element)
    - Pathology specimen obtained


References

- 1. Lieberman D. A Call to Action -- Measuring the Quality of Colonoscopy. N Engl J Med. 2006 December 14;355(24):2588–2589. doi: 10.1056/NEJMe068254.

- 2. Barclay RL, Vicari JJ, Doughty AS, Johanson JF, Greenlaw RL. Colonoscopic Withdrawal Times and Adenoma Detection during Screening Colonoscopy. N Engl J Med. 2006 December 14;355(24):2533–2541. doi: 10.1056/NEJMoa055498.

- 3. Lieberman DA, Faigel DO, Logan JR, et al. Assessment of the quality of colonoscopy reports: results from a multicenter consortium. Gastrointestinal Endoscopy. 2009;69(3, Part 2):645–653. doi: 10.1016/j.gie.2008.08.034.

- 4. Robertson DJ, Lawrence LB, Shaheen NJ, et al. Quality of colonoscopy reporting: a process of care study. Am J Gastroenterol. 2002;97(10):2651–2656. doi: 10.1111/j.1572-0241.2002.06044.x.

- 5. Quality Improvement of Gastrointestinal Endoscopy: GUIDELINES for Clinical Application. Gastrointestinal Endoscopy. 1999;49(6):842–844.

- 6. Lieberman D, Nadel M, Smith RA, et al. Standardized colonoscopy reporting and data system: report of the Quality Assurance Task Group of the National Colorectal Cancer Roundtable. Gastrointestinal Endoscopy. 2007;65(6):757–766. doi: 10.1016/j.gie.2006.12.055.

- 7. Rossi F, Sosa JA, Aslanian HR. Screening colonoscopy and fecal occult blood testing practice patterns: a population-based survey of gastroenterologists. J Clin Gastroenterol. 2008 Nov-Dec;42(10):1089–1094. doi: 10.1097/MCG.0b013e3181599bfc.

- 8. Mysliwiec PA, Brown ML, Klabunde CN, Ransohoff DF. Are Physicians Doing Too Much Colonoscopy? A National Survey of Colorectal Surveillance after Polypectomy. Ann Intern Med. 2004 August 17;141(4):264–271. doi: 10.7326/0003-4819-141-4-200408170-00006.

- 9. Rex DK, Petrini JL, Baron TH, et al. Quality Indicators for Colonoscopy. Am J Gastroenterol. 2006;101(4):873–885. doi: 10.1111/j.1572-0241.2006.00673.x.

- 10. Faigel DO, Pike IM, Baron TH, et al. Quality Indicators for Gastrointestinal Endoscopic Procedures: An Introduction. Am J Gastroenterol. 2006;101(4):866–872. doi: 10.1111/j.1572-0241.2006.00677.x.

- 11. Radaelli F, Meucci G, Sgroi G, Minoli G. Technical Performance of Colonoscopy: The Key Role of Sedation/Analgesia and Other Quality Indicators. Am J Gastroenterol. 2008;103(5):1122–1130. doi: 10.1111/j.1572-0241.2007.01778.x.

- 12. Froehlich F, Wietlisbach V, Gonvers JJ, Burnand B, Vader JP. Impact of colonic cleansing on quality and diagnostic yield of colonoscopy: the European Panel of Appropriateness of Gastrointestinal Endoscopy European multicenter study. Gastrointestinal Endoscopy. 2005;61(3):378–384. doi: 10.1016/s0016-5107(04)02776-2.

- 13. Rex DK. Quality in Colonoscopy: Cecal Intubation First, Then What? Am J Gastroenterol. 2006;101(4):732–734. doi: 10.1111/j.1572-0241.2006.00483.x.

- 14. Baier M, Calonge N, Cutter G, et al. Validity of Self-Reported Colorectal Cancer Screening Behavior. Cancer Epidemiol Biomarkers Prev. 2000 February 1;9(2):229–232.

- 15. Madlensky L, McLaughlin J, Goel V. A Comparison of Self-reported Colorectal Cancer Screening with Medical Records. Cancer Epidemiol Biomarkers Prev. 2003 July 1;12(7):656–659.

- 16. Partin MR, Grill J, Noorbaloochi S, et al. Validation of Self-Reported Colorectal Cancer Screening Behavior from a Mixed-Mode Survey of Veterans. Cancer Epidemiol Biomarkers Prev. 2008 April 1;17(4):768–776. doi: 10.1158/1055-9965.EPI-07-0759.

- 17. Fisher DA, Voils CI, Coffman CJ, et al. Validation of a questionnaire to assess self-reported colorectal cancer screening status using face-to-face administration. Dig Dis Sci. 2009 Jun;54(6):1297–1306. doi: 10.1007/s10620-008-0471-z.

- 18. Bradbury BD, Brooks DR, Brawarsky P, Mucci LA. Test-retest reliability of colorectal testing questions on the Massachusetts Behavioral Risk Factor Surveillance System (BRFSS). Preventive Medicine. 2005;41(1):303–311. doi: 10.1016/j.ypmed.2004.11.015.

- 19. Madlensky L, Daftary D, Burnett T, et al. Accuracy of Colorectal Polyp Self-Reports: Findings from the Colon Cancer Family Registry. Cancer Epidemiol Biomarkers Prev. 2007 September 1;16(9):1898–1901. doi: 10.1158/1055-9965.EPI-07-0151.

- 20. Hoffmeister M, Chang-Claude J, Brenner H. Validity of Self-Reported Endoscopies of the Large Bowel and Implications for Estimates of Colorectal Cancer Risk. Am J Epidemiol. 2007 July 15;166(2):130–136. doi: 10.1093/aje/kwm062.

- 21. Wennberg J, Gittelsohn A. Small area variations in health care delivery. Science. 1973;182:1102–1108. doi: 10.1126/science.182.4117.1102.

- 22. Perrin JM, Homer CJ, Berwick DM, Woolf AD, Freeman JL, Wennberg JE. Variations in rates of hospitalization of children in three urban communities. N Engl J Med. 1989 May 4;320(18):1183–1187. doi: 10.1056/NEJM198905043201805.

- 23. Wennberg JE. Unwarranted variations in healthcare delivery: implications for academic medical centres. BMJ. 2002 October 26;325(7370):961–964. doi: 10.1136/bmj.325.7370.961.