Abstract

Much of the work on face-selective neural activity has focused on posterior, ventral areas of the human and non-human primate brain. However, electrophysiological and fMRI studies have identified face responses in the prefrontal cortex. Here we used fMRI to characterize these responses in the human prefrontal cortex and to compare them with face selectivity in a posterior ventral region. We examined a region at the junction of the right inferior frontal sulcus and the precentral sulcus (right inferior frontal junction or rIFJ) that responds more to faces than to several other object categories. We find that the rIFJ and the right fusiform face area (rFFA) are broadly similar in their responses to whole faces, headless bodies, tools, and scenes. Strikingly, however, while the rFFA preferentially responds to the whole face, the rIFJ response to faces appears to be driven primarily by the eyes. This dissociation provides clues to the functional role of the rIFJ face response. We speculate on this role with reference to emotion perception, gaze perception, and to behavioral relevance more generally.

Keywords: faces, eyes, prefrontal cortex, FFA, fMRI

Introduction

It is well established that for humans and other primates, the visual appearance of the face provides rich, socially relevant cues. Accordingly, the neural representation of faces in the visual cortex (occipital and ventrolateral temporal lobes) has been well explored using multiple techniques. In macaques, single-unit (Gross et al., 1972; Desimone et al., 1984; Perrett et al., 1985; Logothetis et al., 1999; Tsao et al., 2006; Freiwald et al., 2009), fMRI (Tsao et al., 2003, 2008a,b; Pinsk et al., 2005a, 2009; Bell et al., 2009; Rajimehr et al., 2009), and genetic and protein expression (Zangenehpour and Chaudhuri, 2005) studies have demonstrated regional specialization for faces in the temporal lobe. In humans, studies have used intracranial recordings (Allison et al., 1999; McCarthy et al., 1999; Puce et al., 1999; Quiroga et al., 2005), ERP (Rossion et al., 2003; Itier and Taylor, 2004; Bentin et al., 2006; Thierry et al., 2007), MEG (Liu et al., 2002; Xu et al., 2005), PET (Haxby et al., 1994), and fMRI (Puce et al., 1996, 1997, 1998; Kanwisher et al., 1997; Haxby, 2006) in order to reveal face-selective responses in the occipital and temporal lobes.

Additionally, some evidence has suggested that face-selective responses exist in the prefrontal cortex (Thorpe et al., 1983). In particular, Scalaidhe et al. (1997, 1999) identified a small number of highly face-selective cells in the prefrontal region in monkeys. They showed that face-selective responses were present both in monkeys that had previously been trained to perform a working memory (WM) task and in monkeys that had not learned WM tasks. This distinctive population of face neurons responded strongly to faces but weakly or not at all to non-face items such as common objects, scrambled faces, and simple colored shapes, supporting the claim that these neurons are category-selective. Intriguingly, these face-selective neurons received more than 95% of input from the temporal visual cortex.

More recently, Tsao et al. (2008b) used fMRI to identify three regions of macaque prefrontal cortex that respond highly selectively to images of faces. Intriguingly, a follow-up study (Tsao et al., 2008a) revealed a face-selective patch near the right inferior frontal sulcus in three out of nine human participants. Further evidence using fMRI in humans and macaque monkeys (Rajimehr et al., 2009) has also revealed a frontal face patch in the inferior frontal sulcus near the frontal eye field (FEF) across both species.

Recently, researchers have proposed a “core” network for face processing that consists of mainly occipitotemporal structures, and an “extended” network including limbic and frontal structures (see Haxby et al., 2000; Avidan and Behrmann, 2009). For example, Ishai et al. (2005) assessed the response to line drawings and photographs of faces, compared to scrambled controls, and found responses in many areas including the orbitofrontal cortex and inferior frontal gyrus. While studies like these reveal face-driven responses outside the ventral stream, they do not establish the selectivity of these regions by comparison to other kinds of visual stimuli (Wiggett and Downing, 2008). Using a free-viewing task, we have previously reported a region in the human right lateral prefrontal region that elicited face-selective activation relative to 19 other object categories (Downing et al., 2006). The activation is found at the junction of the inferior frontal sulcus and the precentral sulcus [45 9 36].

Here, we further investigate the properties of this region. Because it can be identified in most individual participants, it is amenable to a functional localizer approach as used in previous studies of extrastriate cortex (Tootell et al., 1995; Kanwisher et al., 1997; Downing et al., 2001). Throughout the rest of this paper, we refer to this region as the right inferior frontal junction (rIFJ).

Previous work in monkey physiology (Scalaidhe et al., 1997, 1999; Rajimehr et al., 2009) has attempted to establish the functional role of the prefrontal face-selective region while suggesting that its functional properties are similar to those of the face-selective cluster in the temporal visual cortex. The present study aims not only to provide further clues to the functional role of the rIFJ in face representation in humans, but also to investigate whether the face-selective rIFJ can be functionally dissociated from the face-selective fusiform face area (FFA; Kanwisher et al., 1997) in the visual cortex. More broadly, the current studies are intended to bridge findings in the monkey and human literatures.

To achieve this, similar to previous studies, we manipulated WM demands (Scalaidhe et al., 1997, 1999; Kanwisher et al., 1998) and stimulus categories (Tong et al., 2000) to explore the nature of activation in rIFJ and right FFA (rFFA). In the first experiment, in order to compare the response profiles of rIFJ and the rFFA and to examine their selectivity profiles in the presence and absence of WM demand, we compared the responses of these regions in a free-viewing task and a 1-back WM task. In the second experiment, we examined the response of the IFJ and FFA specifically to the eyes, relative to several control conditions. This was motivated by the clear significance of the eyes in communicating social information, as reflected in previous studies that have examined the neural responses in the ventral stream to the eyes (Tong et al., 2000; Bentin et al., 2006; Itier et al., 2006; Harel et al., 2007; Lerner et al., 2008). Finally, given recent evidence (Rajimehr et al., 2009) that shows that some frontal face responses are found near the FEF, we tested whether activation in the rIFJ overlaps with cortical regions involved in the execution of eye movements.

Materials and Methods

Experiment 1

Participants

All participants were recruited from the University of Wales, Bangor community. Participants satisfied all requirements in volunteer safety screening, and gave written informed consent. Procedures were approved by the Psychology Ethics Committee at Bangor University. Twenty healthy adult volunteers were recruited.

Stimuli

Images of unfamiliar faces, bodies without heads, tools, and scenes were presented. Each image was 400 × 400 pixels. A small fixation cross overlaid the center of each image. Forty full-color images were used for each category and were divided into two stimulus sets. One set was presented in half of the scans, and the other set in the remaining half.

Experimental design and tasks

The experiment consisted of four runs per participant. Within each run there were twenty-one 15-s blocks, resulting in a run duration of 5 min 15 s. Blocks 1, 6, 11, 16, and 21 were a fixation baseline condition. Each of the remaining blocks comprised presentation of 20 exemplars from a single category. The order of blocks was symmetrically counterbalanced within each version, so that the second half of each version was the mirror image of the first half, resulting in an equivalent mean serial position for each condition. The order of blocks was also counterbalanced across runs, using two versions: the first and second halves of one version were swapped to create the second version. Within a block, each image was presented for 300 ms, with an ISI of 450 ms between images. Ten participants were instructed to perform a free-viewing task (viewing the stimuli passively) during the scan session. The other 10 performed a 1-back task, in which they were asked to press a button whenever an image occurred twice in immediate succession. Two image-repetition trials occurred at randomly selected time points in each block of the 1-back task. Both groups were instructed to maintain central fixation throughout the scan.
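The timing and counterbalancing described above can be checked with a short sketch. The timing constants come from the text; the particular first-half category order is hypothetical (the actual order is not reported), and the function names are illustrative only.

```python
# Timing constants taken from the design description.
IMAGE_MS = 300
ISI_MS = 450
IMAGES_PER_BLOCK = 20
N_BLOCKS = 21
FIXATION_BLOCKS = {1, 6, 11, 16, 21}  # 1-indexed block positions

CATEGORIES = ["faces", "bodies", "tools", "scenes"]


def block_duration_s():
    """Duration of one stimulus block in seconds."""
    return IMAGES_PER_BLOCK * (IMAGE_MS + ISI_MS) / 1000


def mirrored_order(first_half):
    """Append the reversed first half so the stimulus sequence is mirror-symmetric."""
    return list(first_half) + list(reversed(first_half))


def build_run(first_half):
    """Interleave the 16 stimulus blocks with fixation blocks at the stated positions."""
    stimulus_blocks = iter(mirrored_order(first_half))
    return ["fixation" if pos in FIXATION_BLOCKS else next(stimulus_blocks)
            for pos in range(1, N_BLOCKS + 1)]


def mean_serial_position(run, category):
    """Mean 1-indexed block position of a category within a run."""
    positions = [i for i, block in enumerate(run, start=1) if block == category]
    return sum(positions) / len(positions)


# Hypothetical first-half order (8 stimulus blocks, each category twice).
first_half = ["faces", "bodies", "tools", "scenes",
              "bodies", "faces", "scenes", "tools"]
version_1 = build_run(first_half)
# Swapping the two halves of version 1 is the same as mirroring the reversed first half.
version_2 = build_run(list(reversed(first_half)))

run_duration_s = N_BLOCKS * block_duration_s()
```

Under these assumptions, 20 images at 750 ms each fill a 15-s block, 21 blocks give the stated 5 min 15 s, and mirroring places every category at the same mean serial position regardless of the first-half order chosen.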

fMRI data acquisition and preprocessing

Brain imaging was performed on a Philips Gyroscan Intera 1.5 T scanner equipped with a SENSE head coil (Pruessmann et al., 1999). An EPI sequence was used to image functional activation. Thirty slices were collected per image covering the whole brain. Scanning parameters were: repetition time/echo time (TR/TE) = 3000/50 ms, flip angle (FA) = 90°, slice thickness = 5 mm (no gap), acquisition matrix = 64 × 64, in-plane resolution = 3.75 mm × 3.75 mm. For anatomical localization, a structural scan was made for each participant using a T1-weighted sequence. Scanning parameters were: TR/TE = 12/2.9 ms, FA = 8°, coronal slice thickness = 1.3 mm (no gap), acquisition matrix = 256 × 256, in-plane resolution = 1 mm × 1 mm. Three dummy volumes were acquired before each scan in order to reduce the effect of T1 saturation. Preprocessing of data and statistical analyses were performed using Brain Voyager 4.9 (Brain Innovation, Maastricht, The Netherlands). Preprocessing of functional images included: 3D-motion correction of functional data using trilinear interpolation and temporal high pass filtering (0.006 Hz cutoff). Five millimeters of spatial smoothing was applied. Functional data were manually co-registered with the anatomical scans. The anatomical scans were transformed into Talairach and Tournoux space (Talairach and Tournoux, 1993), and the parameters for this transformation were then applied to the co-registered functional data.

fMRI data analysis

For multiple-regression analyses, predictors were generated for each category. The event time series for each condition was convolved with a model of the hemodynamic response. Voxel time series were z-normalized for each run, and additional predictors accounting for baseline differences between runs were included in the design matrix. For functional region of interest (ROI) analyses, general linear models were fit to the aggregate time course of the voxels included in the ROI, and the resulting beta parameters were used as estimates of the magnitude of the ROI's response to a given stimulus condition. A split-half analysis method was employed in order to avoid circularity (Kriegeskorte et al., 2009). The data from each participant were divided into two sets (one set for each block order design). Runs with order version 1 were used to define the ROIs, and runs with order version 2 to estimate the responses of the ROIs across conditions, and vice versa. The results of these two calculations were combined before further analysis with ANOVA. This procedure ensured that all data contributed to the analysis, and that the data used to estimate responses were independent from those used for ROI definition. Where a robust ROI could not be identified with both halves of the data, the results from the ROIs defined by one half were analyzed.
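The logic of the split-half procedure can be sketched as follows. This is a simplified illustration, not the Brain Voyager pipeline: it assumes per-voxel statistics and betas have already been computed for each half, it averages voxel betas rather than fitting a model to the aggregate time course, and all names and numbers are made up for the example.

```python
from statistics import mean


def define_roi(localizer_stat, threshold):
    """Indices of voxels whose faces-minus-tools statistic exceeds the threshold."""
    return [i for i, value in enumerate(localizer_stat) if value > threshold]


def roi_response(betas, roi):
    """Average the per-voxel betas inside the ROI, separately for each condition."""
    return {cond: mean([voxels[i] for i in roi]) for cond, voxels in betas.items()}


def split_half_estimate(half_a, half_b, threshold):
    """Define the ROI in one half, measure it in the other, and combine both directions.

    Each half is a dict with 'stat' (per-voxel localizer statistic) and
    'betas' (condition name -> list of per-voxel betas).
    """
    estimates = []
    for define, measure in ((half_a, half_b), (half_b, half_a)):
        roi = define_roi(define["stat"], threshold)
        if roi:  # if only one half yields an ROI, use that direction alone
            estimates.append(roi_response(measure["betas"], roi))
    if not estimates:
        return None
    return {cond: mean([e[cond] for e in estimates]) for cond in estimates[0]}


# Made-up toy data: four voxels, two conditions.
half_a = {"stat": [3.0, 0.5, 2.5, 0.1],
          "betas": {"faces": [1.0, 0.2, 0.8, 0.1], "tools": [0.4, 0.3, 0.2, 0.1]}}
half_b = {"stat": [2.8, 0.2, 0.3, 0.4],
          "betas": {"faces": [1.2, 0.1, 0.6, 0.0], "tools": [0.2, 0.1, 0.4, 0.3]}}

result = split_half_estimate(half_a, half_b, threshold=2.0)
```

The key property is that the voxels selected in one half are never evaluated on the same data that selected them, which is what protects the response estimates from selection bias.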

The rIFJ and the rFFA ROIs were defined by the contrast of faces minus tools in each participant. For rFFA, the most activated voxel was identified in close proximity to previously reported anatomical locations (Puce et al., 1996, 1997, 1998; Kanwisher et al., 1997; Haxby, 2006). For rIFJ, the region was selected within each participant's anatomy, and the most activated voxel was identified within the junction where the inferior frontal sulcus met the precentral sulcus. The rIFJ and rFFA clusters were defined as the set of contiguous, significantly activated voxels lying within 9 mm of the most activated voxel in the anterior/posterior, superior/inferior, and medial/lateral directions. Voxels were included at a threshold of p < 0.05 uncorrected; the lenient threshold was adopted because the split-half procedure reduced the amount of data available for a given localizer by half. This procedure ensured that the ROIs were segregated from nearby areas, and ensured that each ROI contained a similar number of voxels.
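A minimal sketch of this ROI definition is given below. It grows a cluster outward from the peak voxel, keeping only supra-threshold voxels that lie within 9 mm of the peak along each axis. The face-adjacent (6-neighbor) definition of contiguity, the statistic threshold, and the 3-mm voxel size in the example are assumptions made for illustration; the source does not specify them.

```python
from collections import deque


def define_roi(stat_map, peak, voxel_mm, threshold, radius_mm=9.0):
    """Grow a contiguous supra-threshold cluster from `peak`, bounded by a box.

    stat_map : dict mapping (i, j, k) voxel indices to a statistic
    peak     : (i, j, k) index of the most activated voxel
    voxel_mm : (dx, dy, dz) voxel size in millimetres
    """
    def eligible(voxel):
        in_box = all(abs(voxel[a] - peak[a]) * voxel_mm[a] <= radius_mm
                     for a in range(3))
        return in_box and stat_map.get(voxel, float("-inf")) > threshold

    if not eligible(peak):
        return set()

    # Assumed connectivity: voxels sharing a face count as contiguous.
    steps = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    roi, queue = {peak}, deque([peak])
    while queue:
        i, j, k = queue.popleft()
        for di, dj, dk in steps:
            neighbor = (i + di, j + dj, k + dk)
            if neighbor not in roi and eligible(neighbor):
                roi.add(neighbor)
                queue.append(neighbor)
    return roi


# Toy map: a supra-threshold row along x, plus an isolated voxel at (0, 2, 0).
toy_map = {(x, 0, 0): 4.0 for x in range(6)}
toy_map[(0, 1, 0)] = 0.5   # sub-threshold gap
toy_map[(0, 2, 0)] = 4.0   # supra-threshold but not contiguous with the peak

roi = define_roi(toy_map, peak=(0, 0, 0), voxel_mm=(3.0, 3.0, 3.0), threshold=2.0)
```

In the toy map the row is cut off at 9 mm from the peak and the isolated voxel is excluded, which illustrates how the bounding box keeps ROI sizes comparable while the contiguity requirement separates the ROI from nearby activations.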

Experiment 2

Participants

Another nine participants were recruited to participate in Experiment 2. Recruitment and ethics procedures were as in Experiment 1.

Stimuli

Images of whole faces, faces with a gray rectangle covering the eyes (where the brightness of the gray rectangle was matched with the surrounding face in Adobe Photoshop 8.0), images of pairs of eyes (in a rectangular cut-out), and flowers were presented in gray-scale. In total, there were 20 images (400 × 400 pixels) in each condition. Half of the stimuli for each of the three face conditions were female and half were male. Different face images were used in the whole faces condition and the eyes masked condition. Stimuli in the eyes alone condition were cut-outs from the faces used in the eyes masked condition. For the localizer scans, we used the same stimuli as in Experiment 1, except that a different set of face images was used.

Experimental design and task

Four localizer scans (1-back task) were run for each participant, interleaved with runs for the main experiment. Stimuli in the localizer scans were identical to those in Experiment 1 except with regard to the face stimuli, as noted above. In the main experiment (free-viewing), block designs and presentation rates were identical to those in Experiment 1, except with regard to the specific stimuli used.

fMRI data acquisition, preprocessing, and analysis

Experiment 2 fMRI data were acquired and pre-processed exactly as in Experiment 1. The rIFJ and rFFA were defined in a similar way as in Experiment 1, except that the ROIs were defined independently from the four runs of localizer scans. The rIFJ and rFFA were defined in each participant by the contrast of faces vs tools at a threshold of p < 0.0001 (uncorrected).

Eye movement localizer

To identify the location of eye movement related regions, four participants (who had also participated in Experiment 1) were asked to perform alternating blocks of repeated eye movements and fixation. In the eye movement blocks, participants were required to look first at the central fixation cross on the screen, then at a small dot on the left, back to the center, then at a small dot on the right, back to the center, and so on for 30 s. Eye movements were self-paced but participants were instructed to re-fixate approximately once per second. After each eye movement block, the fixation condition started with a fixation cross at the center, and the dots on both sides were no longer presented. Participants were required to fixate the cross for 30 s. There were 10 cycles of eye movement and fixation blocks. fMRI data acquisition and preprocessing were the same as for Experiments 1 and 2.
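As a rough illustration, the block structure of this localizer can be written as an unconvolved boxcar regressor. The sketch assumes that the run begins with an eye movement block, that the 3-s TR from Experiment 1 applies, and that dummy volumes are excluded; the function name is illustrative only.

```python
TR_S = 3.0       # repetition time, from the acquisition parameters of Experiment 1
BLOCK_S = 30.0   # duration of each eye movement or fixation block
N_CYCLES = 10    # number of eye movement + fixation cycles


def boxcar(tr_s=TR_S, block_s=BLOCK_S, n_cycles=N_CYCLES):
    """Return one value per volume: 1 during eye movement blocks, 0 during fixation."""
    volumes_per_block = int(block_s / tr_s)
    one_cycle = [1] * volumes_per_block + [0] * volumes_per_block
    return one_cycle * n_cycles


regressor = boxcar()
run_duration_s = len(regressor) * TR_S
```

Under these assumptions the run contains 200 volumes (10 min), half of them in eye movement blocks. In an actual analysis this regressor would be convolved with a hemodynamic response model before being entered into the general linear model.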

Results

Experiment 1

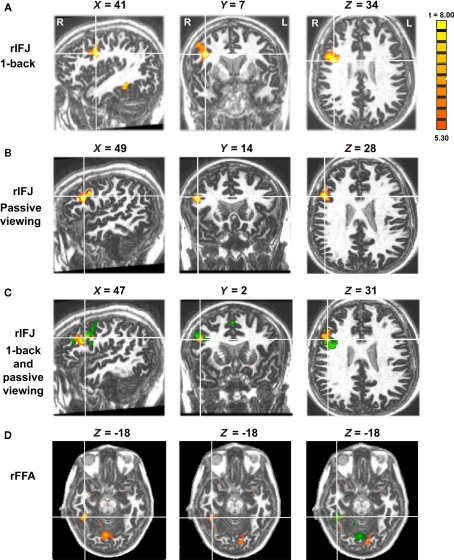

In the free-viewing task group, 10 out of 10 participants showed significant activation in the rIFJ, and 8 out of 10 showed significant activation in the rFFA. In the 1-back task group, all participants showed significant activation in both the rIFJ and rFFA. To facilitate comparison between the two groups, analyses were performed on those eight participants in the free-viewing task group who showed significant activation in both regions, and on 8 out of 10 participants who were selected randomly from the 1-back task group. The mean Talairach coordinates (with standard deviation, SD) of the peak location of the ROIs across participants were: 1-back group: rIFJ [48(6), 8(7), 33(5)]; rFFA [38(3), −45(7), −17(4)]; free-viewing group: rIFJ [43(7), 11(9), 35(6)]; rFFA [38(3), −48(8), −14(4)] (see Figure 1).

Figure 1.

Panel (A) shows activations in the rIFJ and the rFFA during a 1-back task. Panel (B) shows activations in the rIFJ during passive viewing. Panel (C) shows the activation overlap in the rIFJ for both tasks (green for 1-back, yellow for passive viewing). All regions are defined by the contrast of faces–tools (p < 0.0001, t > 5.30). For comparison, panel (D) shows the FFA in the 1-back task (left), in the passive task (center), and the overlap between these activations (right). All regions are defined by the contrast of faces–tools (p < 0.0005, t > 3.50). Axial slices at Z = −18.

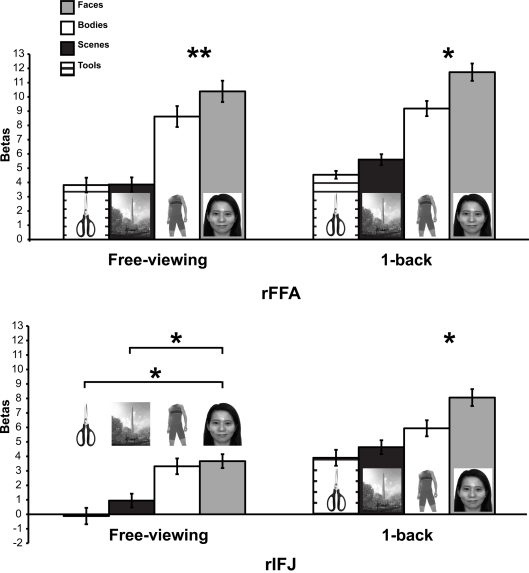

A mixed-design ANOVA was conducted on the beta values from each participant, with ROI (within-participants), stimulus category (within-participants), and task (between-participants) as factors (Figure 2). A significant main effect of category was found, F(3,21) = 25.4, p < 0.005, indicating that faces elicited the strongest activation compared to other categories. A significant ROI × category interaction, F(1,7) = 12.7, p < 0.001 was observed. There was also a significant task × category interaction F(1,7) = 6.4, p < 0.05, in the absence of a significant three-way interaction of task × category × ROI, F(3,21) = 0.34, p = 0.79. To examine the effect of categories in each ROI regardless of task, an ANOVA within each ROI was performed. We found that both rFFA [F(3,45) = 35.05, p < 0.0001] and rIFJ [F(3,45) = 18.68, p < 0.0001] showed a significant main effect of category, suggesting that both regions are selective for faces. We also examined the effect of category in each task, and found a robust main effect of category in both the free-viewing task [F(3,45) = 16.87, p < 0.0001] and the 1-back task [F(3,45) = 36.75, p < 0.0001]. We then conducted planned comparisons within each region and in each task. In the 1-back task, we found that the response to faces was greater than to the next-most-effective category (bodies) in each ROI [rFFA: t(7) = 2.7, p < 0.05; rIFJ: t(7) = 3.4, p < 0.05]. In the free-viewing task this difference was significant for the rFFA [t(7) = 3.6, p < 0.01], but not for rIFJ [t(7) = 0.79, p = 0.45]. However, rIFJ responded more strongly to faces than to the other two categories [scenes: t(7) = 3.5, p < 0.05; tools: t(7) = 2.8, p < 0.05].

Figure 2.

Results of Experiment 1. Responses of rFFA and rIFJ, based on independent functional localizers, to faces, headless bodies, tools, and outdoor scenes, in both free-viewing and 1-back tasks. Response magnitudes indicate beta weights from general linear models fit to the aggregate data from each region of interest. Error bars indicate standard error of the mean. Asterisks indicate significant differences between conditions: *p < 0.05; **p < 0.01.

The above analyses indicate that to a large extent the rFFA and rIFJ showed a similar pattern of activation across categories. Both regions showed robust activation to faces across both tasks, which accounts for the lack of a significant three-way interaction. However, a difference between the two regions was apparent in the free-viewing task, where the rIFJ showed robust responses not only to faces but also to bodies, relative to scenes and tools.

Behavioral performance in the 1-back task for six participants (data were not collected for two participants due to technical error) was generally successful (mean hit rate = 77%) and did not differ significantly by category, F(1,5) = 2.84, p = 0.21.

We identify three main findings from this experiment. First, we were able to demonstrate a strong response in most participants to visually presented faces, not only in rFFA, as expected, but also in the right inferior prefrontal cortex (rIFJ). Second, the response profiles of these two regions were generally comparable, both in the ordering of response magnitude to the categories tested and in the overall increase in response in the 1-back task relative to the passive viewing task. Finally, responses were larger in rFFA than in rIFJ, but in ratio terms the degree of selectivity was comparable, at least in the 1-back task. In contrast, in the passive viewing task, the responses to bodies and faces in the rIFJ were approximately equal.

Experiment 2

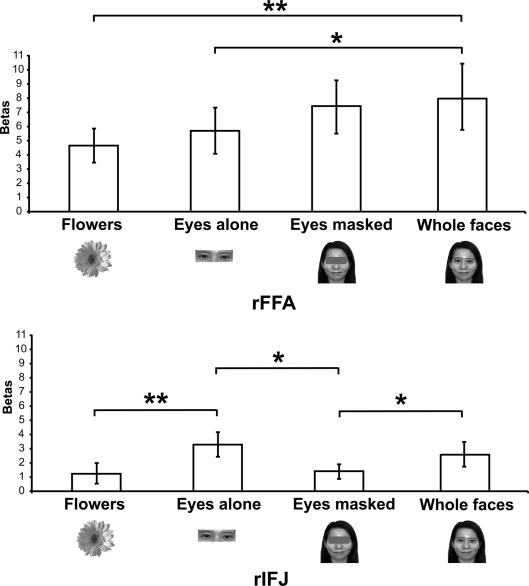

A significant rFFA activation was found in seven out of nine participants, and robust rIFJ activation was found in eight out of nine participants. ROI and statistical analyses were conducted on the seven participants with robust activation in both rFFA and rIFJ. The mean Talairach coordinates (with SD) of the peak location of the ROIs across participants were: rIFJ [43(7), 1(7), 41(12)]; rFFA [40(3), −47(8), −20(9)]. Data from these ROIs were assessed with a within-subjects repeated-measures ANOVA, with ROI and stimulus type as factors (Figure 3; also see the mean time courses in Figure 4).

Figure 3.

Results of Experiment 2. Responses of rFFA and rIFJ, based on independent functional localizers, to flowers, whole faces, eyes, and faces with eyes masked. Conventions as in Figure 2. Asterisks indicate significant differences between conditions: *p < 0.05; **p < 0.01.

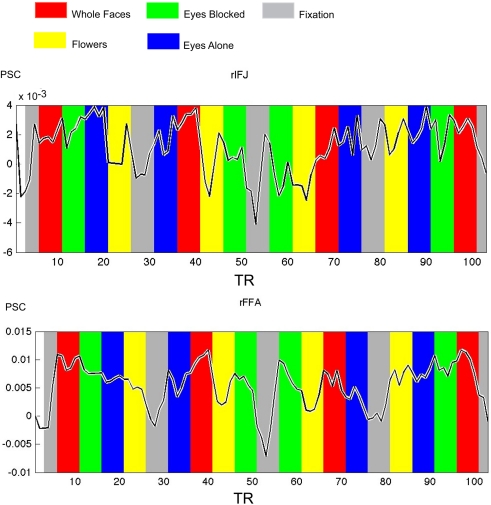

Figure 4.

Time courses extracted from ROIs within each participant in Experiment 2. Both IFJ and FFA were identified by the localizer scans (faces vs tools, p < 0.0001; N = 6). Mean percent signal change (PSC) is plotted on the y-axis, and time in units of repetition time (TR) is plotted on the x-axis. The colored bars in the figure are shifted by two timepoints to accommodate the HRF.

There was a significant interaction of stimulus type and ROI [F(3,18) = 6.5, p < 0.001], indicating a different pattern of responses to these stimuli in the two ROIs. A series of paired-samples t-tests was performed to examine the effect of category in each ROI. In the rFFA, whole faces elicited a stronger response than eyes [t(6) = 3.6, p < 0.05] and flowers [t(6) = 3.9, p < 0.01]. The responses to eyes alone and faces with the eyes masked did not differ significantly, t(6) = 1.5, p = 0.19. In contrast, in the rIFJ the greatest response was elicited by the eyes alone condition. This response was greater than that to faces with the eyes masked, t(6) = 2.9, p < 0.05, and to flowers, t(6) = 3.4, p < 0.01. The response to the eyes alone did not differ from that to whole faces, t(6) = 1.4, p = 0.2. Importantly, because the response to whole faces was also significantly greater than that to faces with the eyes masked, t(6) = 2.1, p < 0.05, we argue that the region's preference for eyes cannot be due to any low-level effect of the eyes being cropped out. In contrast, the response to faces with the eyes masked did not differ from that to flowers, t(6) = 0.53, p = 0.61.

Our results show a pattern of responses in rFFA that is consistent with the results of previous imaging studies (Tong et al., 2000), which found a gradation of responses from whole faces (the most effective category), faces with eyes masked, and eyes alone, compared to houses, which elicited weak responses. In contrast, the face response in rIFJ is driven strongly, perhaps entirely, by a response to the eyes. The response to a pair of eyes alone was no different from that to the entire face, showing that the eyes are sufficient to generate a strong rIFJ response. On the other hand, when the eyes are removed from an otherwise whole face, the response drops to a level no greater than that to flowers, showing that the presence of the eyes is necessary to generate a strong rIFJ response. Future investigation to examine the IFJ response to other face parts would also provide a useful comparison against the eye conditions tested here.

Eye movement localizer

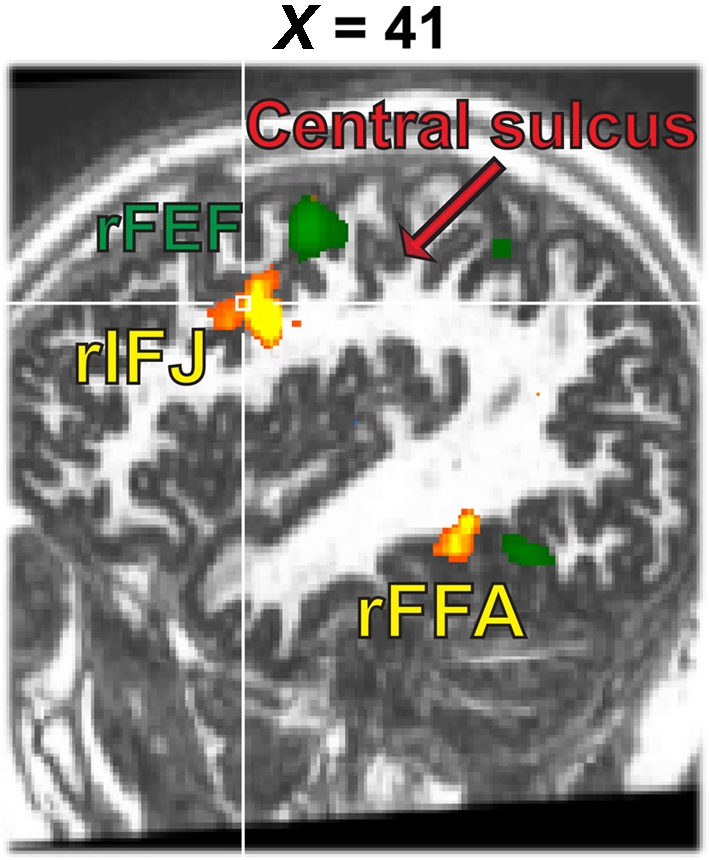

A whole brain fixed-effects analysis contrasted eye movement blocks vs fixation blocks at an uncorrected threshold of p < 0.00001. The group-defined FEFs (right peak: 50, −1, 42; left peak: −48, −4, 45) and supplementary eye fields (peak: 0, −10, 66) did not overlap with rIFJ, as defined in a comparable fixed-effects group analysis (see Figure 5).

Figure 5.

Activation map from the 1-back task (yellow, faces–tools, p < 0.0001, t > 5.30) overlaid onto the activation map from the eye movement localizer (green, eye movements–fixation, p < 0.0001, t > 12.0).

General Discussion

In this paper, we report that a region of the right prefrontal cortex at the junction of the inferior frontal sulcus and the precentral sulcus responds strongly to faces compared to scenes and tools (and to a lesser extent, to human bodies). In contrast to the rFFA, the response to faces in the rIFJ appears to be driven primarily by the presence of the eyes. While this is a preliminary finding, it raises a number of questions about the functional role of this region, which we address below.

In the macaque, a strong connection between the PFC and the inferior temporal cortex (ITC) has been well documented (Kuypers et al., 1965; Jones and Powell, 1970; Ungerleider et al., 1989; Bullier et al., 1996; Scalaidhe et al., 1997, 1999; Levy and Goldman-Rakic, 2000; Miller and Cohen, 2001). It has been proposed that the ventral PFC is functionally associated with and is an extension of the ventral temporal cortex, and that there is selective connectivity between the two cortical regions (Goldman-Rakic, 1996; Levy and Goldman-Rakic, 2000). Connectivity between the two regions has further been illustrated by Scalaidhe et al. (1997), who used injections of wheat germ agglutinin-horseradish peroxidase or fluorescent dyes to show that the face-selective neurons that were located in the inferior frontal convexity received more than 95% of input from the temporal visual cortex. Specifically, these neurons received inputs from the ventral bank of the superior temporal sulcus (STS), as well as the neighboring inferior temporal gyrus, which have frequently been reported to contain face-selective neurons (Perrett et al., 1982, 1985; Desimone et al., 1984; Pinsk et al., 2005b). These projections, if also present in humans, could provide the visual analysis that contributes to the stimulus selectivity seen in the present findings.

The literature on lateral prefrontal cortex implicates this broad region in a very diverse set of cognitive functions, spanning domains such as object recognition, WM, and task switching, as demonstrated in animal, patient, and neuroimaging studies (Kuypers et al., 1965; Jones and Powell, 1970; Ungerleider et al., 1989; Bullier et al., 1996; Scalaidhe et al., 1997, 1999; Levy and Goldman-Rakic, 2000; Miller and Cohen, 2001; Brass et al., 2005). In a recent review, for example, Duncan and Owen (2000) identified three relatively focal regions of prefrontal cortex – mid dorsolateral, mid ventrolateral, and dorsal anterior cingulate – that are recruited across many cognitive tasks requiring WM, inhibition, response conflict, and the like. They suggest that these regions contribute in a flexible way to meet current task demands. From the perspective of selective attention, activation of this “multiple demands” network is associated with a selective focus on task-relevant information (Riesenhuber and Poggio, 2000; Everling et al., 2006).

At first glance this perspective appears at odds with the present results. Although the rIFJ region studied here probably overlaps anatomically with the multiple demands network, participants in the free-viewing group of Experiment 1, and in the main study of Experiment 2, did not have an explicit task to perform. Therefore none of the stimuli in any condition was more or less behaviorally relevant to the current task than any other. However, it is reasonable to assume that there are steady-state biases in favor of certain kinds of stimuli, due to their generally high behavioral relevance (Treisman, 1960). For example, in divided attention tasks, attention will tend to be drawn to stimuli such as: one's own name (Mack et al., 1998); images of the body (Downing et al., 2004) or body parts (Ro et al., 2007); and the face (Vuilleumier, 2000; Ro et al., 2001).

The eyes may enjoy a similar status of sustained attentional salience, for several reasons. The eyes carry information about the identity and gender of other individuals. For example, Schyns et al. (2002) used the “Bubbles” stimulus sampling method to identify those areas of the face that contribute to perceptual performance on a series of tasks requiring identification of specific face attributes. In a gender task, and especially in an identity task, the area around the eyes was found to contribute substantially to making a successful judgment. Furthermore, the eyes carry information relevant to perceiving emotion, and particularly fear. As in the previous example, the “Bubbles” technique reveals that information about the eyes is particularly relevant for discriminating fear from other emotions (Schyns et al., 2007; Smith et al., 2007). However, while human patient (Adolphs et al., 1995) and neuroimaging (Breiter et al., 1996; Morris et al., 1996; Fusar-Poli et al., 2009) studies have associated the perception of fearful faces with the amygdala – and even more specifically, with fixation of the fearfully widened eyes (Gamer and Buchel, 2009) – a link with fearful faces and the lateral prefrontal cortex has not been established.

The eyes also provide information about others’ direction of gaze. Gaze information, and the interpretation of gaze with respect to the head, has been associated with the STS in both monkeys and humans (Puce and Perrett, 2003; Materna et al., 2008). A recent functional connectivity study (Nummenmaa et al., 2009) reported that during a gaze perception task, the middle frontal gyrus (MFG) showed positive changes in coupling with the fusiform gyrus (FG), whereas activity in the FEF was positively correlated with that in the posterior STS. The authors concluded that regions in the frontal cortex communicate with the visual face regions to form an interactive system for gaze processing. In a recent review of the literature (Van Overwalle and Baetens, 2009) covering 14 neuroimaging studies that examined some aspects of viewing gaze and executing eye movements, nearly all of the studies identified right STS activity in gaze conditions compared to a variety of controls. Most of these studies found no activity in the premotor cortex. However, the three that did all identified clusters of activity near the frontal region identified here [Experiment 1, 1-back group: (48, 8, 33); free-viewing group: (43, 11, 35). Experiment 2: (43, 1, 41)]. These include studies by Pierno et al. (2006): (48, 0, 46); Williams et al. (2005): (44, 2, 42); and Hooker et al. (2003): (49, 3, 44).

To elucidate whether the rIFJ overlapped with regions related to executing eye movements, we localized both in a subset of participants. We found that the FEF regions engaged by voluntary eye movements, compared to fixation, were dorsal and posterior to the region we identify here as rIFJ. However, these activations lie close together, and fMRI studies place the peak coordinates of the lateral FEF [e.g., Petit et al., 2009: (52, 8, 40)] very close to those seen here and to those seen in the gaze studies of Pierno et al. (2006), Williams et al. (2005), and Hooker et al. (2003). Further experiments will therefore be needed to clarify the functional distinction between the lateral FEF and the eye-selective rIFJ response tested here.
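To make the notion of proximity concrete, the following sketch computes Euclidean distances between the peak coordinates quoted in this paper. It assumes that all coordinates are expressed in the same stereotactic space and in millimetres, which may not hold exactly across studies; the distances are therefore only a rough guide.

```python
from math import dist

# Mean rIFJ peak coordinates reported in this paper.
RIFJ_PEAKS = {
    "Exp 1, 1-back": (48, 8, 33),
    "Exp 1, free-viewing": (43, 11, 35),
    "Exp 2": (43, 1, 41),
}

# Comparison peaks quoted in the text.
COMPARISON_PEAKS = {
    "right FEF (this study)": (50, -1, 42),
    "Pierno et al. (2006)": (48, 0, 46),
    "Williams et al. (2005)": (44, 2, 42),
    "Hooker et al. (2003)": (49, 3, 44),
    "Petit et al. (2009)": (52, 8, 40),
}


def distance_table(targets, references):
    """Euclidean distance (mm) from every target peak to every reference peak."""
    return {(t_name, r_name): dist(t_xyz, r_xyz)
            for t_name, t_xyz in targets.items()
            for r_name, r_xyz in references.items()}


table = distance_table(RIFJ_PEAKS, COMPARISON_PEAKS)
for (t_name, r_name), d in sorted(table.items(), key=lambda item: item[1]):
    print(f"{t_name:20s} -> {r_name:24s} {d:5.1f} mm")
```

Distances of this kind should be read against the between-participant standard deviations of the peak locations and the spatial smoothing applied to the data, both of which are several millimetres.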

Taking all of the above considerations into account, we speculate that the strong response to faces in the rIFJ region can be explained by a combination of: (1) the high general behavioral relevance of faces (and particularly the eyes); (2) a possible role in the processing of the eyes for understanding gaze; and (3) a possible role in the generation of eye movements, or an overlap with the lateral focus of the FEFs. This combination of factors would explain the presence of this activation focus in the lateral prefrontal cortex – consistent with the predictable activation of this region by the selection of behaviorally relevant stimuli – and more specifically in a region near the motor regions engaged in eye movements. We might further speculatively account for the strong right lateralization of the face response in IFJ as being part of a long-known general association of the right hemisphere with visual processing of faces (Rhodes, 1985).

It is important to acknowledge that our studies have explored only a fraction of the whole picture of the visual perception of eyes and gaze in the frontal cortex. Further investigations are needed to explore the functions of the IFJ. Specifically, studying its interactions with the MFG, FEF, and STS during gaze processing seems to be the next critical step toward understanding the role of the IFJ in gaze perception. To bridge the human and monkey literatures, future investigations are also needed to establish the link between the face-selective cells identified with single-unit recordings in macaques, the face “patches” seen in the same species with fMRI, and the small body of evidence for stimulus-driven face responses in human prefrontal cortex. Such studies would do well to consider the possible role of gaze, and of eye movements, in activating this region.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was supported by an Economic and Social Research Council (UK) PhD studentship to Annie W.-Y. Chan. The authors would like to thank Dr. Chris Baker, Assaf Harel, and Cibu Thomas for their helpful comments and discussions on the manuscript.

References

- Adolphs R., Tranel D., Damasio H., Damasio A. R. (1995). Fear and the human amygdala. J. Neurosci. 15, 5879–5891

- Allison T., Puce A., Spencer D. D., McCarthy G. (1999). Electrophysiological studies of human face perception. I: Potentials generated in occipitotemporal cortex by face and non-face stimuli. Cereb. Cortex 9, 415–430. doi: 10.1093/cercor/9.5.415

- Avidan G., Behrmann M. (2009). Functional MRI reveals compromised neural integrity of the face processing network in congenital prosopagnosia. Curr. Biol. 19, 1146–1150

- Bell A. H., Hadj-Bouziane F., Frihauf J. B., Tootell R. B., Ungerleider L. G. (2009). Object representations in the temporal cortex of monkeys and humans as revealed by functional magnetic resonance imaging. J. Neurophysiol. 101, 688–700. doi: 10.1152/jn.90657.2008

- Bentin S., Golland Y., Flevaris A., Robertson L. C., Moscovitch M. (2006). Processing the trees and the forest during initial stages of face perception: electrophysiological evidence. J. Cogn. Neurosci. 18, 1406–1421

- Brass M., Derrfuss J., Forstmann B., von Cramon D. Y. (2005). The role of the inferior frontal junction area in cognitive control. Trends Cogn. Sci. 9, 314–316

- Breiter H. C., Etcoff N. L., Whalen P. J., Kennedy W. A., Rauch S. L., Buckner R. L., Strauss M. M., Hyman S. E., Rosen B. R. (1996). Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17, 875–887. doi: 10.1016/S0896-6273(00)80219-6

- Bullier J., Schall J. D., Morel A. (1996). Functional streams in occipito-frontal connections in the monkey. Behav. Brain Res. 76, 89–97

- Desimone R., Albright T. D., Gross C. G., Bruce C. (1984). Stimulus-selective properties of inferior temporal neurons in the macaque. J. Neurosci. 4, 2051–2062

- Downing P. E., Bray D., Rogers J., Childs C. (2004). Bodies capture attention when nothing is expected. Cognition 93, B27–B38

- Downing P. E., Chan A. W., Peelen M. V., Dodds C. M., Kanwisher N. (2006). Domain specificity in visual cortex. Cereb. Cortex 16, 1453–1461

- Downing P. E., Jiang Y., Shuman M., Kanwisher N. (2001). A cortical area selective for visual processing of the human body. Science 293, 2470–2473. doi: 10.1126/science.1063414

- Duncan J., Owen A. M. (2000). Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 23, 475–483. doi: 10.1016/S0166-2236(00)01633-7

- Everling S., Tinsley C. J., Gaffan D., Duncan J. (2006). Selective representation of task-relevant objects and locations in the monkey prefrontal cortex. Eur. J. Neurosci. 23, 2197–2214

- Freiwald W. A., Tsao D. Y., Livingstone M. S. (2009). A face feature space in the macaque temporal lobe. Nat. Neurosci. 12, 1187–1196

- Fusar-Poli P., Placentino A., Carletti F., Allen P., Landi P., Abbamonte M., Barale F., Perez J., McGuire P., Politi P. L. (2009). Laterality effect on emotional faces processing: ALE meta-analysis of evidence. Neurosci. Lett. 452, 262–267

- Gamer M., Buchel C. (2009). Amygdala activation predicts gaze toward fearful eyes. J. Neurosci. 29, 9123–9126. doi: 10.1523/JNEUROSCI.1883-09.2009

- Goldman-Rakic P. S. (1996). The prefrontal landscape: implications of functional architecture for understanding human mentation and the central executive. Philos. Trans. R. Soc. Lond. B Biol. Sci. 351, 1445–1453

- Gross C. G., Rocha-Miranda C. E., Bender D. B. (1972). Visual properties of neurons in inferotemporal cortex of the Macaque. J. Neurophysiol. 35, 96–111

- Harel A., Ullman S., Epshtein B., Bentin S. (2007). Mutual information of image fragments predicts categorization in humans: electrophysiological and behavioral evidence. Vision Res. 47, 2010–2020. doi: 10.1016/j.visres.2007.04.004

- Haxby J. V. (2006). Fine structure in representations of faces and objects. Nat. Neurosci. 9, 1084–1086

- Haxby J. V., Hoffman E. A., Gobbini M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233

- Haxby J. V., Horwitz B., Ungerleider L. G., Maisog J. M., Pietrini P., Grady C. L. (1994). The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J. Neurosci. 14(Pt 1), 6336–6353

- Hooker C. I., Paller K. A., Gitelman D. R., Parrish T. B., Mesulam M. M., Reber P. J. (2003). Brain networks for analyzing eye gaze. Brain Res. Cogn. Brain Res. 17, 406–418

- Ishai A., Schmidt C. F., Boesiger P. (2005). Face perception is mediated by a distributed cortical network. Brain Res. Bull. 67, 87–93

- Itier R. J., Latinus M., Taylor M. J. (2006). Face, eye and object early processing: what is the face specificity? Neuroimage 29, 667–676

- Itier R. J., Taylor M. J. (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cereb. Cortex 14, 132–142

- Jones E. G., Powell T. P. (1970). An anatomical study of converging sensory pathways within the cerebral cortex of the monkey. Brain 93, 793–820

- Kanwisher N., McDermott J., Chun M. M. (1997). The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17, 4302–4311

- Kanwisher N., Tong F., Nakayama K. (1998). The effect of face inversion on the human fusiform face area. Cognition 68, B1–B11

- Kriegeskorte N., Simmons W. K., Bellgowan P. S., Baker C. I. (2009). Circular analysis in systems neuroscience: the dangers of double dipping. Nat. Neurosci. 12, 535–540

- Kuypers H. G., Szwarcbart M. K., Mishkin M., Rosvold H. E. (1965). Occipitotemporal corticocortical connections in the rhesus monkey. Exp. Neurol. 11, 245–262

- Lerner Y., Epshtein B., Ullman S., Malach R. (2008). Class information predicts activation by object fragments in human object areas. J. Cogn. Neurosci. 20, 1189–1206

- Levy R., Goldman-Rakic P. S. (2000). Segregation of working memory functions within the dorsolateral prefrontal cortex. Exp. Brain Res. 133, 23–32

- Liu J., Harris A., Kanwisher N. (2002). Stages of processing in face perception: an MEG study. Nat. Neurosci. 5, 910–916

- Logothetis N. K., Guggenberger H., Peled S., Pauls J. (1999). Functional imaging of the monkey brain. Nat. Neurosci. 2, 555–562

- Mack A., Rock I. (1998). Inattentional Blindness. London: MIT Press

- Materna S., Dicke P. W., Thier P. (2008). The posterior superior temporal sulcus is involved in social communication not specific for the eyes. Neuropsychologia 46, 2759–2765

- McCarthy G., Puce A., Belger A., Allison T. (1999). Electrophysiological studies of human face perception. II: Response properties of face-specific potentials generated in occipitotemporal cortex. Cereb. Cortex 9, 431–444

- Miller E. K., Cohen J. D. (2001). An integrative theory of prefrontal cortex function. Annu. Rev. Neurosci. 24, 167–202

- Morris J. S., Frith C. D., Perrett D. I., Rowland D., Young A. W., Calder A. J., Dolan R. J. (1996). A differential neural response in the human amygdala to fearful and happy facial expressions. Nature 383, 812–815. doi: 10.1038/383812a0

- Nummenmaa L., Passamonti L., Rowe J., Engell A. D., Calder A. J. (2009). Connectivity analysis reveals a cortical network for eye gaze perception. Cereb. Cortex 20, 1780–1787

- Perrett D. I., Rolls E. T., Caan W. (1982). Visual neurones responsive to faces in the monkey temporal cortex. Exp. Brain Res. 47, 329–342

- Perrett D. I., Smith P. A., Potter D. D., Mistlin A. J., Head A. S., Milner A. D., Jeeves M. A. (1985). Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc. R. Soc. Lond. B Biol. Sci. 223, 293–317

- Petit L., Zago L., Vigneau M., Andersson F., Crivello F., Mazoyer B., Mellet E., Tzourio-Mazoyer N. (2009). Functional asymmetries revealed in visually guided saccades: an FMRI study. J. Neurophysiol. 102, 2994–3003. doi: 10.1152/jn.00280.2009

- Pierno A. C., Becchio C., Wall M. B., Smith A. T., Turella L., Castiello U. (2006). When gaze turns into grasp. J. Cogn. Neurosci. 18, 2130–2137

- Pinsk M. A., Arcaro M., Weiner K. S., Kalkus J. F., Inati S. J., Gross C. G., Kastner S. (2009). Neural representations of faces and body parts in macaque and human cortex: a comparative FMRI study. J. Neurophysiol. 101, 2581–2600. doi: 10.1152/jn.91198.2008

- Pinsk M. A., DeSimone K., Moore T., Gross C. G., Kastner S. (2005a). Representations of faces and body parts in macaque temporal cortex: a functional MRI study. Proc. Natl. Acad. Sci. U.S.A. 102, 6996–7001

- Pinsk M. A., Moore T., Richter M. C., Gross C. G., Kastner S. (2005b). Methods for functional magnetic resonance imaging in normal and lesioned behaving monkeys. J. Neurosci. Methods 143, 179–195

- Pruessmann K. P., Weiger M., Scheidegger M. B., Boesiger P. (1999). SENSE: sensitivity encoding for fast MRI. Magn. Reson. Med. 42, 952–962

- Puce A., Allison T., Asgari M., Gore J. C., McCarthy G. (1996). Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J. Neurosci. 16, 5205–5215

- Puce A., Allison T., Bentin S., Gore J. C., McCarthy G. (1998). Temporal cortex activation in humans viewing eye and mouth movements. J. Neurosci. 18, 2188–2199

- Puce A., Allison T., McCarthy G. (1999). Electrophysiological studies of human face perception. III: Effects of top-down processing on face-specific potentials. Cereb. Cortex 9, 445–458

- Puce A., Perrett D. (2003). Electrophysiology and brain imaging of biological motion. Philos. Trans. R. Soc. Lond. B Biol. Sci. 358, 435–445

- Puce A., Allison T., Spencer S. S., Spencer D. D., McCarthy G. (1997). Comparison of cortical activation evoked by faces measured by intracranial field potentials and functional MRI: two case studies. Hum. Brain Mapp. 5, 298–305

- Quiroga R. Q., Reddy L., Kreiman G., Koch C., Fried I. (2005). Invariant visual representation by single neurons in the human brain. Nature 435, 1102–1107

- Rajimehr R., Young J. C., Tootell R. B. (2009). An anterior temporal face patch in human cortex, predicted by macaque maps. Proc. Natl. Acad. Sci. U.S.A. 106, 1995–2000

- Rhodes G. (1985). Lateralized processes in face recognition. Br. J. Psychol. 76 (Pt 2), 249–271

- Riesenhuber M., Poggio T. (2000). Models of object recognition. Nat. Neurosci. 3(Suppl), 1199–1204

- Ro T., Friggel A., Lavie N. (2007). Attentional biases for faces and body parts. Vis. Cogn. 15, 322–348

- Ro T., Russell C., Lavie N. (2001). Changing faces: a detection advantage in the flicker paradigm. Psychol. Sci. 12, 94–99

- Rossion B., Joyce C. A., Cottrell G. W., Tarr M. J. (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. Neuroimage 20, 1609–1624. doi: 10.1016/j.neuroimage.2003.07.010

- Scalaidhe S. P., Wilson F. A., Goldman-Rakic P. S. (1997). Areal segregation of face-processing neurons in prefrontal cortex. Science 278, 1135–1138. doi: 10.1126/science.278.5340.1135

- Scalaidhe S. P., Wilson F. A., Goldman-Rakic P. S. (1999). Face-selective neurons during passive viewing and working memory performance of rhesus monkeys: evidence for intrinsic specialization of neuronal coding. Cereb. Cortex 9, 459–475. doi: 10.1093/cercor/9.5.459

- Schyns P. G., Bonnar L., Gosselin F. (2002). Show me the features! Understanding recognition from the use of visual information. Psychol. Sci. 13, 402–409

- Schyns P. G., Petro L. S., Smith M. L. (2007). Dynamics of visual information integration in the brain for categorizing facial expressions. Curr. Biol. 17, 1580–1585

- Smith M. L., Gosselin F., Schyns P. G. (2007). From a face to its category via a few information processing states in the brain. Neuroimage 37, 974–984. doi: 10.1016/j.neuroimage.2007.05.030

- Talairach J., Tournoux P. (1993). Referentially Oriented Cerebral MRI Anatomy: An Atlas of Stereotaxic Anatomical Correlations for Gray, and White Matter. New York: Thieme Medical Publishers

- Thierry G., Martin C. D., Downing P., Pegna A. J. (2007). Controlling for interstimulus perceptual variance abolishes N170 face selectivity. Nat. Neurosci. 10, 505–511

- Thorpe S. J., Rolls E. T., Maddison S. (1983). The orbitofrontal cortex: neuronal activity in the behaving monkey. Exp. Brain Res. 49, 93–115

- Tong F., Nakayama K., Moscovitch M., Weinrib O., Kanwisher N. (2000). Response properties of the human fusiform face area. Cogn. Neuropsychol. 17, 257–279

- Tootell R. B., Reppas J. B., Dale A. M., Look R. B., Sereno M. I., Malach R., Brady T. J., Rosen B. R. (1995). Visual motion aftereffect in human cortical area MT revealed by functional magnetic resonance imaging. Nature 375, 139–141

- Treisman A. M. (1960). Contextual cues in selective listening. Q. J. Exp. Psychol. 12, 242–248

- Tsao D. Y., Freiwald W. A., Knutsen T. A., Mandeville J. B., Tootell R. B. (2003). Faces and objects in macaque cerebral cortex. Nat. Neurosci. 6, 989–995

- Tsao D. Y., Freiwald W. A., Tootell R. B., Livingstone M. S. (2006). A cortical region consisting entirely of face-selective cells. Science 311, 670–674

- Tsao D. Y., Moeller S., Freiwald W. A. (2008a). Comparing face patch systems in macaques and humans. Proc. Natl. Acad. Sci. U.S.A. 105, 19514–19519

- Tsao D. Y., Schweers N., Moeller S., Freiwald W. A. (2008b). Patches of face-selective cortex in the macaque frontal lobe. Nat. Neurosci. 11, 877–879

- Ungerleider L. G., Gaffan D., Pelak V. S. (1989). Projections from inferior temporal cortex to prefrontal cortex via the uncinate fascicle in rhesus monkeys. Exp. Brain Res. 76, 473–484

- Van Overwalle F., Baetens K. (2009). Understanding others’ actions and goals by mirror and mentalizing systems: a meta-analysis. Neuroimage 48, 564–584

- Vuilleumier P. (2000). Faces call for attention: evidence from patients with visual extinction. Neuropsychologia 38, 693–700

- Wiggett A. J., Downing P. E. (2008). The face network: overextended? (Comment on: “Let's face it: It's a cortical network” by Alumit Ishai). Neuroimage 40, 420–422

- Williams J. H., Waiter G. D., Perra O., Perrett D. I., Whiten A. (2005). An fMRI study of joint attention experience. Neuroimage 25, 133–140. doi: 10.1016/j.neuroimage.2004.10.047

- Xu Y., Liu J., Kanwisher N. (2005). The M170 is selective for faces, not for expertise. Neuropsychologia 43, 588–597. doi: 10.1016/j.neuropsychologia.2004.07.016

- Zangenehpour S., Chaudhuri A. (2005). Patchy organization and asymmetric distribution of the neural correlates of face processing in monkey inferotemporal cortex. Curr. Biol. 15, 993–1005