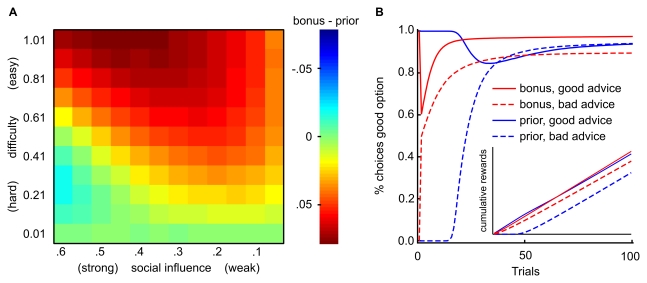

Figure 3. Adaptive value of social learning models.

We used computer simulations to compare the average rewards gained by the prior model and the outcome-bonus model when performing a 4-armed bandit task with 100 trials after receiving advice about which bandit has the highest payoffs. To examine the models' performance over a range of learning settings, simulations were repeated for different task difficulties, levels of social influence, probabilities of correct advice, and learning parameters (see Text S1). (A) Difference in average payoffs across learning environments for 50% good advice. Each cell depicts the difference in average payoffs of the two models for a particular combination of task difficulty and social influence. The difference was computed from 1,000 simulated learning tasks for each model, in which each model received good advice in half of the tasks. Difficulty, defined as the difference in mean payoffs divided by the payoff variance, is varied on the y-axis. Social influence, defined as either the magnitude of the outcome bonus (βb) or the increase in initial reward expectation for the recommended option (βp), is varied on the x-axis. The outcome-bonus model is more likely to choose a highly rewarded option (and thus accumulates more reward) for most levels of task difficulty and social influence, with the exception of the combination of bad advice in a difficult task with strong social influence. (B) Learning curves show that the outcome-bonus model performs better because it profits from good advice in the long run, even if individual learning already leads to a clear preference for a good bandit. The inset depicts cumulative rewards for both models: after bad advice, cumulative payoffs are reduced less for the outcome-bonus model than for the prior model. In contrast, the prior model does not profit from good advice in the long run, and its cumulative payoffs are greatly reduced after bad advice (see also Figures S3–S5).
The inset also highlights that bad advice harms the prior model because it prevents choices of the better option until the advice-induced prior expectation has been unlearned.
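The contrast between the two models can be illustrated with a small simulation. The sketch below is hypothetical and is not the authors' code: it assumes delta-rule (Rescorla-Wagner) value updating, a softmax choice rule, and Gaussian payoffs, and the function names, learning rate `alpha`, inverse temperature `beta`, and payoff means are illustrative choices (the actual model equations and parameter ranges are given in Text S1). The prior model adds `social` (βp) to the initial expectation of the advised bandit only, whereas the outcome-bonus model adds `social` (βb) to every outcome experienced from the advised bandit, so its influence never fades.

```python
import numpy as np


def simulate(model, advised, means, sd=1.0, n_trials=100, alpha=0.2,
             beta=3.0, social=1.0, rng=None):
    """Simulate one advised 4-armed bandit task; return (choices, payoffs).

    Hypothetical sketch: delta-rule learning with softmax choice is assumed.
    model  : "prior" (advice raises the advised arm's initial value by
             `social`, i.e. beta_p) or "bonus" (each outcome of the advised
             arm is inflated by `social`, i.e. beta_b).
    advised: index of the recommended bandit.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_arms = len(means)
    q = np.zeros(n_arms)                      # reward expectations
    if model == "prior":
        q[advised] += social                  # advice only shifts the starting point
    choices = np.empty(n_trials, dtype=int)
    payoffs = np.empty(n_trials)
    for t in range(n_trials):
        p = np.exp(beta * (q - q.max()))      # numerically stable softmax
        p /= p.sum()
        c = rng.choice(n_arms, p=p)
        r = rng.normal(means[c], sd)
        # Outcome-bonus model: the advised arm's outcomes feel better on every trial
        bonus = social if (model == "bonus" and c == advised) else 0.0
        q[c] += alpha * (r + bonus - q[c])    # delta-rule update
        choices[t], payoffs[t] = c, r         # record the actual payoff only
    return choices, payoffs


def compare(n_tasks=1000, gap=0.5, seed=0, **kw):
    """Mean payoff per trial for both models, with good advice in half the tasks.

    Arm 0 is best; with sd=1, `gap` equals the (mean difference) / (payoff
    variance) measure used in the caption. Values are hypothetical.
    """
    means = np.array([0.5 + gap, 0.5, 0.5, 0.5])
    result = {}
    for model in ("prior", "bonus"):
        rng = np.random.default_rng(seed)
        total = 0.0
        for task in range(n_tasks):
            # Even tasks: good advice (arm 0); odd tasks: advice names a worse arm
            advised = 0 if task % 2 == 0 else 1 + (task // 2) % 3
            total += simulate(model, advised, means, rng=rng, **kw)[1].mean()
        result[model] = total / n_tasks
    return result
```

For example, `compare(n_tasks=200, social=2.0)` returns the average payoff of each model for one cell of a grid like panel A; sweeping `gap` and `social` fills in the rest. Setting `social=0.0` makes the two models identical, which is a quick sanity check that any difference comes from how advice enters learning.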