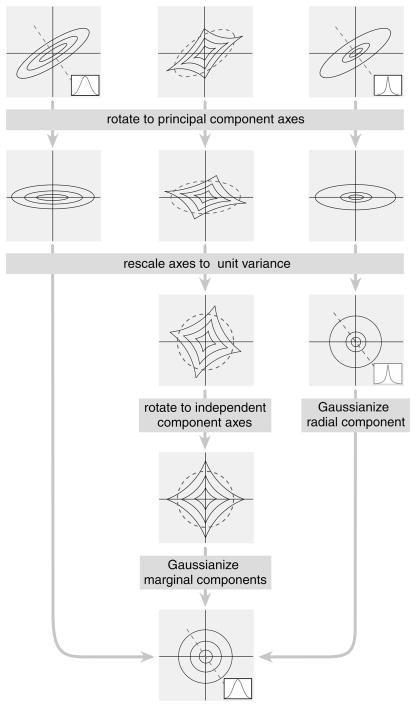

Figure 1. Three methods of dependency elimination and their associated source models, illustrated in two dimensions. Dashed ellipses indicate covariance structure. Inset graphs are slices through the density along the indicated (dashed) line. (Left) PCA whitening of a gaussian source. The first transformation rotates the coordinates onto the principal axes of the covariance ellipse, and the second rescales each axis by its standard deviation. The output density is a spherical, unit-variance gaussian. (Middle) Independent component analysis (ICA), applied to a linearly transformed factorial density. After whitening, an additional rotation aligns the source components with the Cartesian axes of the space. Finally, an optional nonlinear marginal gaussianization can be applied to each component, resulting in a spherical gaussian. (Right) Radial gaussianization, applied to an elliptically symmetric nongaussian density, maps the whitened variable to a spherical gaussian.
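The left and right panels can be illustrated numerically with a short sketch. This is an illustrative reconstruction, not the procedure used to produce the figure. The function names `whiten`, `radial_gaussianize_2d` and `marginal_kurtosis` are hypothetical. The test density (a gaussian scale mixture with exponentially distributed variance, which is elliptically symmetric and heavy-tailed) is an assumed example. The radial transform is estimated from the empirical CDF of the whitened radii, also an assumption. It maps each radius through the inverse CDF of the chi distribution with 2 degrees of freedom, $F(r) = 1 - e^{-r^2/2}$, which is the radial distribution of a 2-D spherical unit-variance gaussian. The ICA panel is not implemented here.

```python
import numpy as np

def whiten(x):
    """PCA whitening: rotate onto the principal axes, then rescale each axis by its std."""
    xc = x - x.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(xc, rowvar=False))
    rotated = xc @ evecs              # step 1: coordinates along the principal axes
    return rotated / np.sqrt(evals)   # step 2: unit variance along each axis

def radial_gaussianize_2d(y):
    """Hypothetical sketch: remap radii of whitened 2-D data to a chi(2) distribution.

    Assumes the radial CDF is estimated empirically from ranks; direction is unchanged.
    """
    r = np.linalg.norm(y, axis=1)
    n = len(r)
    ranks = np.argsort(np.argsort(r))
    u = (ranks + 0.5) / n                   # empirical radial CDF, strictly inside (0, 1)
    r_new = np.sqrt(-2.0 * np.log1p(-u))    # inverse of F(r) = 1 - exp(-r^2 / 2)
    scale = r_new / np.maximum(r, 1e-12)    # guard against a zero radius
    return y * scale[:, None]

def marginal_kurtosis(z):
    """Fourth moment over squared second moment, per component (3 for a gaussian)."""
    zc = z - z.mean(axis=0)
    return (zc ** 4).mean(axis=0) / (zc ** 2).mean(axis=0) ** 2

# Illustrative elliptically symmetric, nongaussian source (assumed, not from the figure).
rng = np.random.default_rng(0)
n = 20000
A = np.array([[2.0, 0.0], [1.2, 0.5]])      # arbitrary linear transform
v = rng.exponential(1.0, size=n)            # shared random variance per sample
x = np.sqrt(v)[:, None] * (rng.standard_normal((n, 2)) @ A.T)

y = whiten(x)                   # spherical covariance, but still heavy-tailed
g = radial_gaussianize_2d(y)    # approximately a spherical unit-variance gaussian
```

Whitening alone makes the covariance the identity, but the marginals of `y` remain heavy-tailed (kurtosis near 6 for this source). Only the radial remapping brings them close to the gaussian value of 3. This is the distinction between the left and right panels for an elliptically symmetric nongaussian density.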