Abstract

Purpose

To provide quantitative information on the image registration differences from multiple systems for IGRT credentialing and margin reduction in clinical trials.

Methods and Materials

Images and IGRT shift results from three different treatment systems (TomoTherapy Hi-Art, Elekta Synergy, Varian Trilogy) were sent from various institutions to the Image-Guided Therapy QA Center (ITC) for evaluation for the Radiation Therapy Oncology Group (RTOG) trials. Nine patient datasets (five head&neck and four prostate) were included in the comparison, with each patient having 1-4 daily IGRT studies. In all cases, daily shifts were re-calculated by re-registering the planning CT with the daily IGRT data using three independent software systems (MIMvista, FocalSim, VelocityAI). Automatic fusion was used in all calculations. The results were compared with those submitted by the institutions. Similar regions of interest (ROIs) and the same initial positions were used in registrations for inter-system comparison. Different slice spacings for CBCT sampling and different ROIs for registration were used in some cases to observe the variation of registration due to these factors.

Results

For the 54 comparisons with head&neck datasets, the absolute values of differences of the registration results between different systems were 2.6±2.1mm (mean±SD; range 0.1-8.6mm, left-right (LR)), 1.7±1.3mm (0.0-4.9mm, superior-inferior (SI)), and 1.8±1.1mm (0.1-4.0mm, anterior-posterior (AP)). For the 66 comparisons in prostate cases, the differences were 1.1±1.0mm (0.0-4.6mm, LR), 2.1±1.7mm (0.0-6.6mm, SI), and 2.0±1.8mm (0.1-6.9mm, AP). The differences caused by the slice spacing variation were relatively small and the different ROI selections in FocalSim and MIMvista also had limited impact.

Conclusion

The extent of differences was reported when different systems were used for image registration. Careful examination and quality assurance of the image registration process are crucial before considering margin reduction using IGRT in clinical trials.

Keywords: IGRT, clinical trial, registration, multi-system verification

Introduction

Image-guided radiotherapy (IGRT) is being used in more clinical trials because of its ability to detect, evaluate, and reduce interfraction setup errors and to monitor intrafraction target motion for various disease sites (1-6). This raises concerns about quality assurance (QA) and credentialing of IGRT in clinical trials (7). IGRT verification must be given the same level of importance as the other parts of clinical trials to improve treatment consistency and data quality (8, 9).

IGRT covers many aspects such as immobilization, in-room image acquisition, and registration. The outcome may vary considerably depending on the implementation, since uncertainties accumulate over the various steps of the process. Image registration, which is mostly carried out automatically by the treatment software system, is one of the main sources of this uncertainty. Because of the limitations of rigid registration for deformable objects and the variation in how registration techniques are implemented, uncertainty exists in the image registration between planning and daily on-line IGRT datasets (10-13). For IGRT credentialing purposes, the shift information provided together with other patient data for a particular clinical trial needs to be evaluated using an independent software system. Quantitative information on the differences in image registration results from multiple systems is needed as guidance for establishing an IGRT credentialing procedure (14).

Based on the improved alignment accuracy, margin reduction for planning target volume (PTV) is considered a possibility in clinical trials which involve IGRT (15-18). Nevertheless, the marginal benefit derived from IGRT is still debatable because of the uncertainties of this procedure (19-21). Studies are needed to provide information that can be used to reduce margins in future clinical trials, compared to previous trials, when IGRT is used for patient positioning correction. Image registration with multiple systems provides a good estimation of the degree of system-dependent variation. Since there is no absolute ground truth in evaluation of registration accuracy, the deviation of registration results between different IGRT systems and software systems may provide a useful estimate of that uncertainty (10). This may be particularly useful to the radiation therapy community in the studies that allow margin reduction based on IGRT.

The purpose of this study is to provide quantitative information on the image registration differences when several different IGRT systems and software systems are used for comparison. The research reported here repeated the image registration using cases submitted for clinical trials in which the IGRT process is performed. Multiple commercially available software packages designed for radiation therapy were used for the comparison. In all software systems we tried to mimic the registration settings and procedures used in the clinic to minimize operator-dependent variation. Image registration using independent software systems can be incorporated into the IGRT verification procedure in clinical trials. The quantitative comparison between registration results from different systems provides useful information for IGRT credentialing and margin reduction in clinical trials.

Methods

Data

Clinical IGRT data and image registration results used for patient setup have been sent from various institutions to the Image-Guided Therapy QA Center (ITC) for evaluation in the Radiation Therapy Oncology Group (RTOG) trials. IGRT data included planning CT, radiotherapy structure set (contours drawn on planning CT images), radiotherapy plan from a treatment planning system (TPS), radiotherapy dose (a calculated dose from TPS), and the daily in-room CT images such as kilovoltage cone-beam CT (kV CBCT) and megavoltage (MV) CT. All data were in DICOM or DICOM-RT format, so they were easily imported to other software systems. The adjustments of patient position derived from image registration between planning CT and daily on-line CT images were also provided. Since 3D-3D rigid registration was used, the results were given as the isocenter shifts (left-right (LR), superior-inferior (SI), and anterior-posterior (AP)) and couch rotations (pitch, roll, and yaw) of daily CT.

Images and IGRT registration results from three different treatment systems, TomoTherapy Hi-Art (TomoTherapy Inc., Madison, WI), Elekta Synergy (Elekta Oncology Systems Ltd., Crawley, UK), and Varian Trilogy (Varian Medical Systems Inc., Palo Alto, CA), were used for evaluation. TomoTherapy Hi-Art has an on-board MV CT system (CTrue) built into the treatment unit. Both Elekta Synergy and Varian Trilogy have gantry-mounted kV CBCT imaging systems (referred to as the X-ray Volumetric Imager (XVI) and On-Board Imager (OBI), respectively). Daily on-line images for patient setup were acquired using these imaging systems and registered to planning kV CT images using the software provided by the treatment unit manufacturer. Datasets from five head&neck and four prostate patients from these three systems were used for the study. For each patient 1-4 daily image datasets from different treatment dates were included. The modalities, numbers, and sites of the daily images used in our study are listed in Table 1.

Table 1.

Summary of image data used in this study

| Treatment system | Treatment site | No. of planning CT datasets | No. of daily image datasets | Modality of daily on-line image | Slice spacing of daily image | kVp of daily image | Pixel spacing of daily image |
|---|---|---|---|---|---|---|---|
| TomoTherapy | Head&neck | 2 | 3 | MV CT | 6 mm | 6000 | 0.75 mm |
| | Prostate | 1 | 4 | MV CT | 6 mm | 6000 | 0.75 mm |
| Elekta | Head&neck | 2 | 3 | kV CBCT | 1 mm | 100 | 1.00 mm |
| | Prostate | 2 | 4 | kV CBCT | 1 mm | 120 | 1.00 mm |
| Varian | Head&neck | 1 | 3 | kV CBCT | 2.5 mm | 100 | 0.49 mm |
| | Prostate | 1 | 3 | kV CBCT | 2.5 mm | 125 | 0.78 mm |

Software systems

Three commercially available software systems were used in this study to repeat the image registration for all cases: FocalSim (Version 4.4; CMS Inc., St. Louis, MO), MIMvista (Version 4.1.2; MIMvista Corp., Cleveland, OH), and VelocityAI (Velocity Medical Solutions, Atlanta, GA). Each system provides 3D-3D rigid image registration, and there are several options available when using this function. As mentioned above, the registration result depends on the registration algorithm itself and the initial settings used for its implementation. While each software system uses its own algorithm, some initial settings can be adjusted by the users. These initial settings mainly include ROI selection, initial position of the moving image relative to the reference image, and choice of registration metric. Different ROIs can be defined by users for the image registration in these software systems with varying levels of flexibility. An arbitrary initial position of the moving image is achievable in all three software systems by dragging the image using the computer mouse or by inputting a set of parameters which defines the relative position of the moving image. For the registration metric, a geometry-based or an intensity-based method can be chosen (22). The geometry-based metric uses anatomic or artificial landmarks (e.g. externally-placed fiducial markers) or organ boundaries to measure the degree of match of two images. The intensity-based metric uses the intensities of two images to measure how well they are registered. This metric is based on the differences or products of intensities (e.g. cross correlation or sum of the squares of the differences of intensities) when the intensities of similar anatomy are similar in two images, or it is based on intensity statistics (e.g. mutual information) when the intensities of similar anatomy from two images are inherently different. All three software systems used in this study provide the mutual information based registration metric.
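The distinction between difference-based and statistics-based intensity metrics can be illustrated with a minimal sketch. It is not the algorithm of any of the software systems named above; the function names, the 8-bin joint histogram, and the toy intensity values are assumptions chosen only for illustration.

```python
import math
from collections import Counter


def mutual_information(fixed, moving, bins=8, lo=0.0, hi=256.0):
    """Estimate mutual information (in nats) between two equally sized
    lists of voxel intensities, using a joint histogram."""
    if len(fixed) != len(moving) or not fixed:
        raise ValueError("images must be non-empty and of equal size")
    width = (hi - lo) / bins

    def bin_index(value):
        # Clamp so that out-of-range values land in the first or last bin.
        return min(bins - 1, max(0, int((value - lo) / width)))

    n = len(fixed)
    joint = Counter((bin_index(a), bin_index(b)) for a, b in zip(fixed, moving))

    # Marginal probabilities are obtained by summing the joint histogram.
    p_fixed, p_moving = Counter(), Counter()
    for (i, j), count in joint.items():
        p_fixed[i] += count / n
        p_moving[j] += count / n

    mi = 0.0
    for (i, j), count in joint.items():
        p_ij = count / n
        mi += p_ij * math.log(p_ij / (p_fixed[i] * p_moving[j]))
    return mi


def sum_squared_differences(fixed, moving):
    """Difference-based metric: only meaningful when similar anatomy
    has similar intensities in both images."""
    return sum((a - b) ** 2 for a, b in zip(fixed, moving))


# Toy example (hypothetical values): the second image shows the same
# "anatomy" with a different, inverted intensity mapping.
planning = [10, 10, 200, 200, 90, 90, 10, 200]
daily_aligned = [180, 180, 30, 30, 120, 120, 180, 30]
daily_misaligned = [180, 30, 180, 120, 30, 120, 30, 180]

mi_aligned = mutual_information(planning, daily_aligned)
mi_misaligned = mutual_information(planning, daily_misaligned)
```

In this toy case the sum of squared differences is large even for the aligned pair, because the intensity scales differ, while mutual information is higher for the aligned pair than for the misaligned one.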

Study design

In this study the initial settings for registration in the three software systems were adjusted to be the same as, or as similar as possible to, the settings used in the clinical treatment systems for the same case. This allowed us to minimize the contribution of operator-dependent initial settings to the uncertainty of the registration results, and thus to concentrate on the registration variation caused by the different systems used. The first step was to identify the ROIs used in each IGRT case for image registration, and then to reproduce the same or similar ROIs in the three software systems for the re-implementation of image registration. The next step was to reproduce the same initial position of the daily image relative to the reference image (derived from the planning CT). The DICOM coordinates of the treatment isocenter in the planning CT, the volume center of the planning CT, and the volume center of the daily image were derived from the radiotherapy plan, planning CT, and daily on-line CT data, respectively. In the treatment systems, the planning CT image and on-line CT image were put together by aligning the treatment isocenter of the planning CT with the volume center of the on-line CT, and image registration was initiated from this position. By using the DICOM coordinates derived above, the same initial position of the daily image can be reproduced in the three software systems. Finally, mutual information based methods were used in all three software systems. This is because the intensities of similar anatomy from the two images were different in our cases, and the treatment systems involved in this study also used some form of mutual information or a similar intensity statistics based measurement as the metric for image registration (23-26). However, it is worth noting that the detailed usage of the registration metric differs between all the systems.
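The initial-position step described above amounts to a simple vector subtraction once the relevant coordinates are known. The following sketch assumes axis-aligned image volumes whose origin is the center of the first voxel; the function names and all coordinate values are hypothetical and are not taken from the study data.

```python
def volume_center(origin_mm, spacing_mm, shape_voxels):
    """Geometric center of an image volume in patient coordinates (mm).

    Assumes an axis-aligned volume whose origin is the center of the
    first voxel; oblique orientations are not handled in this sketch."""
    return tuple(
        o + s * (n - 1) / 2.0
        for o, s, n in zip(origin_mm, spacing_mm, shape_voxels)
    )


def initial_translation(plan_isocenter_mm, daily_center_mm):
    """Translation that places the volume center of the daily image
    on the treatment isocenter of the planning CT."""
    return tuple(p - d for p, d in zip(plan_isocenter_mm, daily_center_mm))


# Hypothetical daily image geometry: 401 x 401 x 41 voxels.
daily_center = volume_center(
    origin_mm=(-190.0, -210.0, -40.0),
    spacing_mm=(1.0, 1.0, 2.5),
    shape_voxels=(401, 401, 41),
)
# Hypothetical isocenter read from the radiotherapy plan.
start_offset = initial_translation((12.0, -15.0, 30.0), daily_center)
```

Applying `start_offset` to the daily image before automatic registration gives every software system the same starting point, so that differences in the result are not caused by different starting positions.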

With the same or similar initial settings, all daily images listed in Table 1 were registered again to the corresponding planning CT by automatic registration using each of the three software systems, and the results were compared with those used for treatment in the clinic of origin. The results from the three software systems were also compared with each other. Statistical analysis (Student’s t-test) was performed for pairs of the software systems in all three dimensions to investigate any potential systematic differences in the registration between the systems.
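As an illustration of such a test, the sketch below computes a paired t statistic and a two-sided p-value with the Python standard library only. The text does not state whether a paired or unpaired test was used; the paired form is an assumption made here because both systems register the same image datasets. The shift values are invented for the example.

```python
import math
import statistics


def paired_t_statistic(shifts_a, shifts_b):
    """Return (t, degrees of freedom) for paired samples."""
    diffs = [a - b for a, b in zip(shifts_a, shifts_b)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


def t_pdf(t, df):
    """Probability density of Student's t distribution."""
    coeff = math.gamma((df + 1) / 2) / (
        math.sqrt(df * math.pi) * math.gamma(df / 2)
    )
    return coeff * (1 + t * t / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """Two-sided p-value, integrating the density over [0, |t|]
    with Simpson's rule (steps must be even)."""
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for k in range(1, steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, df)
    return max(0.0, 1.0 - 2.0 * total * h / 3.0)


# Hypothetical AP shifts (mm) for five datasets from two software systems.
system_a = [1.2, -0.5, 2.0, 0.3, -1.1]
system_b = [0.9, -0.1, 1.4, 0.8, -1.6]
t_value, dof = paired_t_statistic(system_a, system_b)
p_value = two_sided_p(t_value, dof)
```

A p-value above the chosen significance level gives no evidence of a systematic offset between the two systems, although with few datasets the test has limited power.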

Besides inter-system comparisons, registration results with different ROIs and slice spacings were also compared to study their impact on the registration outcome in our cases. For the cases from Elekta Synergy, 1mm slice spacing was used for reconstruction of the daily kV CBCT image in XVI for IGRT. In this study, the CBCT data from Elekta were also reconstructed with a slice spacing of 3mm, and the resulting images were registered to the planning CT using the three software systems. The registration results using the 1mm and 3mm spacing images were then compared. To examine the variation of registration results with ROI selection, two different ROIs (whole volume and a cubic volume including the whole PTV) were used in FocalSim for the registrations in ten image datasets, and five different ROIs (anterior, center, posterior, PTV+2cm, skin) were used in MIMvista for one head&neck case and one prostate case.

Results
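A box-shaped ROI that encloses the PTV can be derived from the PTV contour points, as in the sketch below. This is only a box approximation with an optional margin; how each software system actually constructs its ROIs (including the "PTV+2cm" ROI) is not described here, and the function names and contour coordinates are hypothetical.

```python
def bounding_box(points_mm, margin_mm=0.0):
    """Axis-aligned box (lower corner, upper corner) enclosing all
    contour points, expanded by margin_mm on every side."""
    xs, ys, zs = zip(*points_mm)
    lower = (min(xs) - margin_mm, min(ys) - margin_mm, min(zs) - margin_mm)
    upper = (max(xs) + margin_mm, max(ys) + margin_mm, max(zs) + margin_mm)
    return lower, upper


def contains(box, point_mm):
    """True if the point lies inside or on the surface of the box."""
    lower, upper = box
    return all(lo <= p <= hi for lo, p, hi in zip(lower, point_mm, upper))


# Hypothetical PTV contour points in patient coordinates (mm).
ptv_points = [(0.0, 0.0, 0.0), (10.0, 20.0, 30.0), (5.0, -5.0, 15.0)]
roi_tight = bounding_box(ptv_points)
roi_with_margin = bounding_box(ptv_points, margin_mm=20.0)
```

Only voxels for which `contains` returns true would then contribute to the registration metric.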

This work concentrated on translational shift information in three dimensions to compare the registration results from different systems. This approach was taken because only translational table adjustments were made in the clinical process for these submitted cases. However, the rotations about the three axes are also important for IGRT and should be addressed in future studies.

For each of the 20 daily image datasets used in this study, at least four registrations were done by different systems. However, not all of the automatic registration results with the given initial settings were visually acceptable. A few registrations resulted in apparent mismatches, which are described further in the Discussion section. For those unsuccessful registrations, the initial settings were changed manually and the automatic registration was repeated until the result was reasonable by visual inspection. The registration results with modified initial settings were then used in place of the unsuccessful registrations.

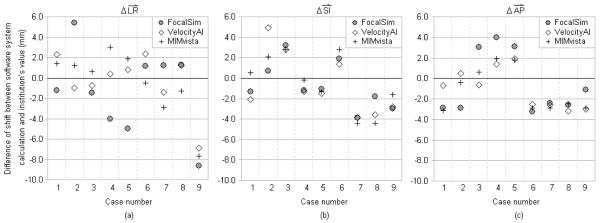

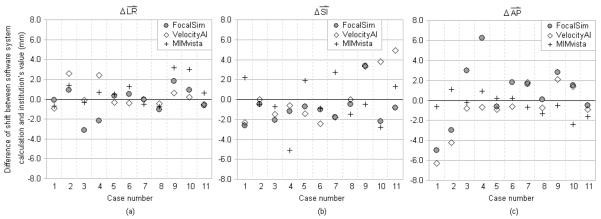

Registration results from the three software systems were compared with the corresponding values submitted by the institutions, and the differences of the registration shifts in the three dimensions are shown in Fig. 1 (for head&neck cases) and Fig. 2 (for prostate cases). The actual values of the shifts used in the clinic are also shown in the same figures. The summary of registration differences is given in Table 2. Registration differences are expressed as the absolute values of the differences of registration shifts in each of the three dimensions, together with the combined three-dimensional magnitude of the difference. The mean value, standard deviation (SD), and range (min-max) are given for several different subsets of the comparisons. For the 27 comparisons between treatment systems and the three software systems for head&neck cases, the absolute values of differences of the registration shifts were 2.5±2.3mm (mean±SD; range 0.4-8.6mm, LR), 2.3±1.3mm (0.2-4.9mm, SI), and 2.3±1.0mm (0.4-4.0mm, AP). For the 33 comparisons in prostate cases, the differences were 1.0±0.9mm (0.0-3.2mm, LR), 1.8±1.3mm (0.0-5.1mm, SI), and 1.7±1.6mm (0.1-6.3mm, AP). In the more complete comparisons, where the registration results from all different systems were compared with each other, the differences were 2.6±2.1mm (0.1-8.6mm, LR), 1.7±1.3mm (0.0-4.9mm, SI), and 1.8±1.1mm (0.1-4.0mm, AP), from 54 comparisons with head&neck cases. For the 66 complete comparisons for prostate cases, the differences were 1.1±1.0mm (0.0-4.6mm, LR), 2.1±1.7mm (0.0-6.6mm, SI), and 2.0±1.8mm (0.1-6.9mm, AP).
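The quantities summarized in Table 2 can be computed as in the following sketch. The function names are hypothetical, the shift values are invented, and the use of the sample standard deviation is an assumption, since the text does not state which form of SD was used.

```python
import math
import statistics


def shift_difference(shift_a_mm, shift_b_mm):
    """Per-axis absolute differences (LR, SI, AP) between two
    registration shifts, and their combined 3D magnitude."""
    per_axis = tuple(abs(a - b) for a, b in zip(shift_a_mm, shift_b_mm))
    magnitude = math.sqrt(sum(d * d for d in per_axis))
    return per_axis, magnitude


def summarize(values):
    """Mean, sample SD, minimum, and maximum of a list of differences."""
    return (
        statistics.mean(values),
        statistics.stdev(values),
        min(values),
        max(values),
    )


# Hypothetical (LR, SI, AP) shifts in mm for one daily image dataset.
clinical_shift = (1.0, 2.0, 3.0)
software_shift = (4.0, 6.0, 3.0)
per_axis_diff, magnitude_3d = shift_difference(clinical_shift, software_shift)

# Hypothetical LR differences collected over several comparisons.
lr_mean, lr_sd, lr_min, lr_max = summarize([1.0, 2.0, 3.0])
```

Note that the mean three-dimensional magnitude is computed from the magnitude of each comparison, so it cannot be derived from the per-axis means alone.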

Fig. 1.

Difference of registration shifts between the software system calculations and the submitted clinical values in head&neck cases. X-axis represents different cases. The symbols with same X coordinate are for the same image dataset (case) calculated by different software systems. Cases 1-3 are from TomoTherapy CTrue. Cases 4-6 are from Elekta XVI. Cases 7-9 are from Varian OBI. Y-axis represents the magnitude of the differences. (a) Comparison in left-right dimension. To the patient’s left is positive direction. (b) Comparison in superior-inferior dimension. To the patient’s superior is positive direction. (c) Comparison in anterior-posterior dimension. To the patient’s anterior is positive direction.

Fig. 2.

Difference of registration shifts between the software system calculations and the submitted clinical values for prostate cases. X-axis represents different cases. The symbols with same X coordinate are for the same image dataset (case) calculated by different software systems. Cases 1-4 are from TomoTherapy CTrue. Cases 5-8 are from Elekta XVI. Cases 9-11 are from Varian OBI. Y-axis represents the magnitude of the differences. (a) Comparison in left-right dimension. To the patient’s left is positive direction. (b) Comparison in superior-inferior dimension. To the patient’s superior is positive direction. (c) Comparison in anterior-posterior dimension. To the patient’s anterior is positive direction.

Table 2.

Summary of registration differences between different systems (n: number of comparisons)

Values are the absolute value of the difference of registration shifts (mm), given as mean±SD (range).

| Subsets of the comparisons of registration results | Treatment site | LR dimension | SI dimension | AP dimension | Three-dimension |
|---|---|---|---|---|---|
| TomoTherapy vs. the three software systems | Head&neck (n=9) | 1.7±1.5 (0.6-5.4) | 2.3±1.4 (0.5-4.9) | 1.6±1.3 (0.4-3.1) | 3.8±1.2 (2.5-6.2) |
| | Prostate (n=12) | 1.3±1.0 (0.1-3.1) | 1.6±1.4 (0.0-5.1) | 2.7±2.3 (0.2-6.3) | 3.9±2.0 (0.8-6.8) |
| Elekta vs. the three software systems | Head&neck (n=9) | 2.1±1.6 (0.4-5.0) | 1.4±0.7 (0.2-2.8) | 2.5±0.9 (1.4-4.0) | 3.8±1.4 (2.0-6.0) |
| | Prostate (n=12) | 0.5±0.4 (0.0-1.3) | 1.4±0.8 (0.0-2.7) | 0.9±0.6 (0.1-1.8) | 1.9±0.6 (0.9-2.8) |
| Varian vs. the three software systems | Head&neck (n=9) | 3.6±3.2 (1.2-8.6) | 3.3±1.0 (1.6-4.4) | 2.6±0.6 (1.1-3.2) | 6.1±2.0 (3.4-9.2) |
| | Prostate (n=9) | 1.3±1.1 (0.2-3.2) | 2.6±1.5 (0.5-4.9) | 1.5±0.8 (0.5-2.8) | 3.5±1.3 (1.1-5.0) |
| All clinical results vs. the three software systems* | Head&neck (n=27) | 2.5±2.3 (0.4-8.6) | 2.3±1.3 (0.2-4.9) | 2.3±1.0 (0.4-4.0) | 4.6±1.8 (2.0-9.2) |
| | Prostate (n=33) | 1.0±0.9 (0.0-3.2) | 1.8±1.3 (0.0-5.1) | 1.7±1.6 (0.1-6.3) | 3.1±1.7 (0.8-6.8) |
| Complete comparison between each other† | Head&neck (n=54) | 2.6±2.1 (0.1-8.6) | 1.7±1.3 (0.0-4.9) | 1.8±1.1 (0.1-4.0) | 4.1±1.9 (1.1-9.2) |
| | Prostate (n=66) | 1.1±1.0 (0.0-4.6) | 2.1±1.7 (0.0-6.6) | 2.0±1.8 (0.1-6.9) | 3.5±2.0 (0.2-8.3) |

*Registration results from the treatment systems are compared with the results from the three software systems for all the cases. This is the union of the three subsets of the comparisons (i.e., TomoTherapy vs. software systems, Elekta vs. software systems, and Varian vs. software systems).

†All the results from the different systems are compared with each other. This also includes the comparisons between different software systems.
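The numbers of comparisons in Table 2 follow from simple counting, which the sketch below reproduces. It assumes exactly four results per daily image dataset (the clinical result plus one from each of the three software systems); the variable and function names are hypothetical.

```python
from itertools import combinations

# One clinical result plus three software results per daily image dataset.
systems = ["clinical", "FocalSim", "MIMvista", "VelocityAI"]
software = [name for name in systems if name != "clinical"]


def comparison_counts(n_daily_datasets):
    """Return (clinical vs. software, complete pairwise, software only)."""
    clinical_vs_software = n_daily_datasets * len(software)
    complete = n_daily_datasets * len(list(combinations(systems, 2)))
    software_only = n_daily_datasets * len(list(combinations(software, 2)))
    return clinical_vs_software, complete, software_only


# Table 1 lists 9 head&neck and 11 prostate daily image datasets.
head_neck_counts = comparison_counts(9)
prostate_counts = comparison_counts(11)
```

With 9 head&neck and 11 prostate daily image datasets this gives 27 and 33 clinical-versus-software comparisons and 54 and 66 complete pairwise comparisons, matching the n values in the table.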

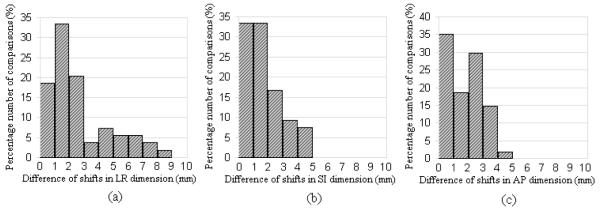

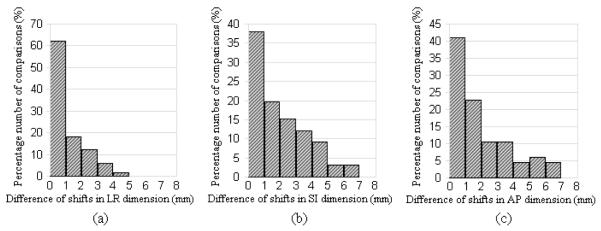

Out of 27 comparisons between treatment system and software system in head&neck cases, five (19%) had differences larger than or equal to 5mm in any of three dimensions, and fourteen (52%) had differences larger than or equal to 3mm but less than 5mm. And out of 33 comparisons in prostate cases, none had differences larger than or equal to 7mm, and six (18%) had differences larger than or equal to 4mm in any of three dimensions. Out of 54 complete comparisons in head&neck cases, nine (17%) had differences larger than or equal to 5mm in any of three dimensions, and twenty (37%) had differences larger than or equal to 3mm but less than 5mm. Out of 66 complete comparisons in prostate cases, none had differences larger than or equal to 7mm, and eighteen (27%) had differences larger than or equal to 4mm in any of three dimensions. The distributions of registration differences from the complete comparisons are shown as histograms in Fig. 3 (for head&neck cases) and Fig. 4 (for prostate cases).
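The grouping used in this paragraph can be expressed as a classification on the largest per-axis difference of each comparison. The sketch below uses the head&neck thresholds (3mm and 5mm) as defaults; the function name, category labels, and example values are hypothetical.

```python
def classify(per_axis_diff_mm, high_mm=5.0, low_mm=3.0):
    """Classify one comparison by its largest difference in any of the
    three dimensions (LR, SI, AP)."""
    worst = max(per_axis_diff_mm)
    if worst >= high_mm:
        return "at_or_above_high"
    if worst >= low_mm:
        return "between_low_and_high"
    return "below_low"


def percentage(count, total):
    """Share of comparisons in a category, rounded to a whole percent."""
    return round(100.0 * count / total)


# Hypothetical per-axis absolute differences (mm) for three comparisons.
examples = [(5.4, 1.0, 0.2), (0.8, 3.0, 4.9), (0.1, 2.9, 1.5)]
labels = [classify(diff) for diff in examples]
```

For the prostate thresholds quoted in the text, the same function could be called with `high_mm=7.0` and `low_mm=4.0`.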

Fig. 3.

The histogram of the distributions of registration differences from the 54 complete comparisons for head&neck cases (bin size=1mm). X-axis represents the absolute value of difference of registration shifts in (a) LR dimension, (b) SI dimension, and (c) AP dimension. Y-axis represents the normalized percentage number of comparisons.

Fig. 4.

The histogram of the distributions of registration differences from the 66 complete comparisons for prostate cases (bin size=1mm). X-axis represents the absolute value of difference of registration shifts in (a) LR dimension, (b) SI dimension, and (c) AP dimension. Y-axis represents the normalized percentage number of comparisons.
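A histogram of this kind, with 1mm bins and counts normalized to percentages, can be built as in the sketch below. The function name and the input values are hypothetical; the data behind Figs. 3 and 4 are not reproduced here.

```python
import math


def histogram_percent(abs_diffs_mm, bin_size_mm=1.0):
    """Map each bin index k, covering [k*bin, (k+1)*bin), to the
    percentage of comparisons whose absolute difference falls in it."""
    counts = {}
    for value in abs_diffs_mm:
        k = int(math.floor(value / bin_size_mm))
        counts[k] = counts.get(k, 0) + 1
    total = len(abs_diffs_mm)
    return {k: 100.0 * c / total for k, c in sorted(counts.items())}


# Hypothetical absolute LR differences (mm) from four comparisons.
lr_histogram = histogram_percent([0.2, 0.9, 1.0, 2.5])
```

A value that lies exactly on a bin edge, such as 1.0mm, is assigned to the higher bin under this convention.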

No statistically significant difference was found between the registration results from any two of the systems (p > 0.05), except for the comparison between FocalSim and VelocityAI in the AP direction (p = 0.04). This suggests that the registration differences between systems are random rather than systematic; however, this needs to be verified in future studies with more cases.

Although we tried to simulate the same initial settings of image registration that were used clinically, inter-observer variation could be a contributing factor to the registration differences. Since the registrations with the three software systems were performed by the same operator, the results from this operator alone were compared with each other to minimize inter-operator variation. From the 27 such comparisons in head&neck cases, the registration differences were 2.7±2.0mm (0.1-7.0mm, LR), 1.1±1.0mm (0.0-4.2mm, SI), and 1.3±1.1mm (0.1-3.7mm, AP). In the 33 comparisons for prostate cases, the registration differences were 1.2±1.1mm (0.0-4.6mm, LR), 2.3±2.0mm (0.0-6.6mm, SI), and 2.3±1.8mm (0.1-6.9mm, AP). These results are similar to those presented in Table 2.

The differences caused by the slice spacing variation (1mm and 3mm) were 0.4±0.4mm (0.0-1.4mm, LR), 1.5±1.8mm (0.0-7.2mm, SI), and 0.4±0.4mm (0.0-1.4mm, AP), from 24 comparisons in total. The registration differences between the results from two different ROIs in FocalSim were 0.7±0.7mm (0.0-2.0mm, LR), 1.5±1.5mm (0.1-3.9mm, SI), and 0.7±0.6mm (0.0-1.7mm, AP), from 10 comparisons in total. The differences in results with five different ROIs using MIMvista were 0.7±0.5mm (0.0-1.9mm, LR), 1.4±1.4mm (0.0-4.4mm, SI), and 0.7±0.5mm (0.0-2.0mm, AP), from 20 comparisons in total.

Discussion

IGRT is used to minimize patient setup errors through on-line evaluation. At present, rigid-body registration is commonly used for IGRT (27). One of the main uncertainties of the IGRT procedure is the accuracy of image registration (10, 11). Image registration mainly consists of three basic components (22): a geometric transformation T that relates the two images, a metric (or degree of similarity, or merit function) that defines how well the two images are aligned, and an iterative search method that finds the parameters of T that optimize the metric. In current practice, the rigid transformation, which consists of 6 degrees of freedom (3 translation parameters and 3 rotation parameters), is used for determining the patient shifts in space (rotations may not be provided by some IGRT systems, depending on the manufacturer). A rigid transformation is a global transformation in which a single mathematical expression applies to the entire image. However, the motion of human organs and tissues is essentially a deformable transformation, which introduces an intrinsic uncertainty into rigid registration (28-30). Because of local differences in the volumetric images, the region of interest (ROI) used for registration also affects the result. For the registration metric, different methods exist with different performance (22, 31). Finally, imperfect implementation of the search method (e.g. convergence to a local rather than the global optimum) and other factors (e.g. image quality) also contribute to the uncertainty of the image registration outcome.
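The six-parameter rigid transformation T can be sketched as three rotations followed by a translation. The rotation order used below (about x, then y, then z) and the function name are assumptions made for illustration; IGRT systems differ in their axis and sign conventions, and none of them is reproduced here.

```python
import math


def rigid_transform(point_mm, translation_mm, angles_deg):
    """Apply a 6-degree-of-freedom rigid transformation to one point:
    rotate about the x, y, and z axes (in that order, an assumed
    convention), then translate."""
    rx, ry, rz = (math.radians(a) for a in angles_deg)
    x, y, z = point_mm

    # Rotation about the x axis.
    y, z = y * math.cos(rx) - z * math.sin(rx), y * math.sin(rx) + z * math.cos(rx)
    # Rotation about the y axis.
    x, z = x * math.cos(ry) + z * math.sin(ry), -x * math.sin(ry) + z * math.cos(ry)
    # Rotation about the z axis.
    x, y = x * math.cos(rz) - y * math.sin(rz), x * math.sin(rz) + y * math.cos(rz)

    tx, ty, tz = translation_mm
    return (x + tx, y + ty, z + tz)


# Hypothetical example: 90 degree rotation about z plus a translation.
moved = rigid_transform((1.0, 0.0, 0.0), (1.0, 2.0, 3.0), (0.0, 0.0, 90.0))
# Translation-only case, as used when only couch shifts are applied.
shifted = rigid_transform((1.0, 0.0, 0.0), (1.0, 2.0, 3.0), (0.0, 0.0, 0.0))
```

Because the same six parameters apply to every point in the image, distances between points are preserved, which is why such a transformation cannot describe organ deformation.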

Multi-system verification of the registrations used in IGRT processes is useful not only for evaluating the IGRT shifts used in treatment systems for patient treatments, but also for providing quantitative information on the registration variations caused by different systems. In this study, we included three widely used treatment systems that include an image registration capability, and three independent commercial software systems, and compared the image registration results from these systems. The mean difference of registration shifts in a single dimension could exceed 2mm, and the maximum difference could exceed 8mm. Although derived from a limited number of cases and comparisons, this result suggests that there are considerable differences in the registration results derived from different systems. Accuracy, robustness, and efficiency should be carefully considered when using image registration for IGRT, and the uncertainty of image registration should be accounted for when managing the PTV margins (27, 30).

Independent software systems are often used in IGRT credentialing to repeat image registrations and compare them with the institution’s result. The fusion uncertainty caused by the different systems should be accounted for if only automatic registrations are used for the process. If a large discrepancy between the institution’s result and the software calculation is observed, alternative methods can be used to confirm the institution’s IGRT result. For example, the daily on-line image and planning image can be aligned in the software system by applying the institution’s shift, and the target coverage or the normal tissue sparing can then be assessed by overlaying the contours and dose distribution on the daily image. For institutions wishing to participate in clinical trials with reduced-margin treatment, a careful evaluation of the image registration needs to be carried out for IGRT credentialing. The results from this study are being used as guidance for establishing IGRT credentialing criteria.

The registration rotations were calculated by some of the individual software systems, but they were not included in the comparison because few delivery systems currently include this capability. This may limit the comparison of the registration shifts, in that the image registration results will be different if rotations are not allowed. More recently, with more treatment delivery systems capable of adjusting the patient’s position in all six degrees of freedom, the software incorporated into the IGRT system will also give the rotations as part of the image registration. In future studies, a comparison that includes registration rotations may provide a more accurate and complete evaluation of the differences in multi-system image registration results.

The registration variations for the different slice spacings and ROIs using the same software system were also evaluated in this study with a reduced number of tests. The differences caused by the slice spacing variation were relatively small and the different ROIs in FocalSim and MIMvista also had limited impact on the registration results for the cases used. This could be due to the limited variations in our ROI selections in size, shape, and position. The impact of varying image contents and qualities on registration is not evaluated quantitatively in this study. However, we did observe that they can sometimes affect the success of the automatic registration as seen in this study. For two daily image datasets of a prostate case from TomoTherapy, VelocityAI could not perform successful auto registration with given initial positions. After manually adjusting the initial position of moving image, VelocityAI could generate a visually reasonable registration result for these two image datasets. In three daily image datasets of a prostate case from Varian, MIMvista failed to register two images even with attempts to manually adjust the initial positions. For these three image sets, a different ROI (PTV+2cm) was used for registration and visually reasonable results were achieved with this ROI. For three daily image datasets for a prostate case from Elekta, VelocityAI gave an obviously incorrect registration result even though several different initial positions were used in an attempt to improve the result. We then used different ROIs (small cubic volume including PTV) for these three image datasets and obtained visually reasonable registration results.

Conclusion

Image registration in IGRT was repeated with multiple independent software systems for evaluation purposes, an approach that can be incorporated into the IGRT credentialing process for clinical trials. Quantitative information on the variation of image registration results from different systems was provided. This study does not attempt to claim that any of these registration results represents “truth”, but only shows the extent of registration differences that might be observed when different systems are used in IGRT. Careful examination and quality assurance of the image registration process are needed before considering margin reduction using IGRT in clinical trials.

Footnotes

Conflicts of Interest Notification: No actual or potential conflicts of interest exist.

References

- 1. Letourneau D, Martinez AA, Lockman D, et al. Assessment of residual error for online cone-beam CT-guided treatment of prostate cancer patients. International journal of radiation oncology, biology, physics. 2005;62:1239–1246. doi: 10.1016/j.ijrobp.2005.03.035.

- 2. Gerben RB, Jan-Jakob S, Anja B, et al. Kilo-Voltage Cone-Beam Computed Tomography Setup Measurements for Lung Cancer Patients; First Clinical Results and Comparison With Electronic Portal-Imaging Device. International journal of radiation oncology, biology, physics. 2007;68:555–561. doi: 10.1016/j.ijrobp.2007.01.014.

- 3. Li H, Zhu XR, Zhang L, et al. Comparison of 2D Radiographic Images and 3D Cone Beam Computed Tomography for Positioning Head-and-Neck Radiotherapy Patients. International journal of radiation oncology, biology, physics. 2008;71:916–925. doi: 10.1016/j.ijrobp.2008.01.008.

- 4. Guckenberger M, Meyer J, Vordermark D, et al. Magnitude and clinical relevance of translational and rotational patient setup errors: A cone-beam CT study. International journal of radiation oncology, biology, physics. 2006;65:934–942. doi: 10.1016/j.ijrobp.2006.02.019.

- 5. Ghilezan M, Yan D, Liang J, et al. Online image-guided intensity-modulated radiotherapy for prostate cancer: How much improvement can we expect? A theoretical assessment of clinical benefits and potential dose escalation by improving precision and accuracy of radiation delivery. International journal of radiation oncology, biology, physics. 2004;60:1602–1610. doi: 10.1016/j.ijrobp.2004.07.709.

- 6. Kan MWK, Leung LHT, Wong W, et al. Radiation Dose From Cone Beam Computed Tomography for Image-Guided Radiation Therapy. International journal of radiation oncology, biology, physics. 2008;70:272–279. doi: 10.1016/j.ijrobp.2007.08.062.

- 7. Ishikura S. Quality Assurance of Radiotherapy in Cancer Treatment: Toward Improvement of Patient Safety and Quality of Care. Japanese Journal of Clinical Oncology. 2008;38:723–729. doi: 10.1093/jjco/hyn112.

- 8. Galvin J. TH-A-M100E-05: Credentialing IGRT Verification Techniques for Clinical Trials. Medical Physics. 2007;34:2616–2616.

- 9. Ibbott G. MO-A-BRC-01: Credentialing for Clinical Trials: The Role of the RPC. Medical Physics. 2009;36:2687–2687.

- 10. Wu J, Murphy M. TU-C-351-06: Comparison of Multiple 3D-3D Anatomy-Based Rigid Image Registration Methods for Prostate Patient Setup Before External-Beam Radiotherapy. Medical Physics. 2008;35:2892–2892.

- 11. Lindsay P, Choi B, Bucci M, et al. SU-FF-J-31: Comparison of Image Registration Tools Used for Daily KV Imaging-Based Patient Set-Up. Medical Physics. 2007;34:2375–2375.

- 12. Court LE, Dong L. Automatic registration of the prostate for computed-tomography-guided radiotherapy. Medical Physics. 2003;30:2750–2757. doi: 10.1118/1.1608497.

- 13. O’Daniel JC, Dong L, Zhang L, et al. Dosimetric comparison of four target alignment methods for prostate cancer radiotherapy. International journal of radiation oncology, biology, physics. 2006;66:883–891. doi: 10.1016/j.ijrobp.2006.06.044.

- 14.Ibbott G, Followill D, Molineu H, et al. Challenges in Credentialing Institutions and Participants in Advanced Technology Multi-institutional Clinical Trials. International journal of radiation oncology, biology, physics. 2008;71:S71–S75. doi: 10.1016/j.ijrobp.2007.08.083. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Grills IS, Hugo G, Kestin LL, et al. Image-Guided Radiotherapy via Daily Online Cone-Beam CT Substantially Reduces Margin Requirements for Stereotactic Lung Radiotherapy. International journal of radiation oncology, biology, physics. 2008;70:1045–1056. doi: 10.1016/j.ijrobp.2007.07.2352. [DOI] [PubMed] [Google Scholar]

- 16.Mardirossian G, Toonkel LM, Samuels MA, et al. Treatment Margin Following Anatomical Registration with CBCT in Patients with Prostate Cancer. International journal of radiation oncology, biology, physics. 2008;72:S561–S562. [Google Scholar]

- 17.Hammoud R, Patel SH, Pradhan D, et al. Examining Margin Reduction and Its Impact on Dose Distribution for Prostate Cancer Patients Undergoing Daily Cone-Beam Computed Tomography. International journal of radiation oncology, biology, physics. 2008;71:265–273. doi: 10.1016/j.ijrobp.2008.01.015. [DOI] [PubMed] [Google Scholar]

- 18.van Herk M, Remeijer P, Lebesque JV. Inclusion of geometric uncertainties in treatment plan evaluation. International journal of radiation oncology, biology, physics. 2002;52:1407–1422. doi: 10.1016/s0360-3016(01)02805-x. [DOI] [PubMed] [Google Scholar]

- 19.Amols HI, Jaffray DA, Orton CG. Image-guided radiotherapy is being overvalued as a clinical tool in radiation oncology. Medical Physics. 2006;33:3583–3586. doi: 10.1118/1.2211707. [DOI] [PubMed] [Google Scholar]

- 20.Kessler M. WE-E-351-04: Image Registration Solves Everything? Medical Physics. 2008;35:2956–2957. [Google Scholar]

- 21.Min C, Song D, Teslow T, et al. Determination of Inter-user Image Registration Variability for PTV Margin Determination in MVCT Image Guided Prostate Radiotherapy. International journal of radiation oncology, biology, physics. 2008;72:S572. [Google Scholar]

- 22.Balter JM, Kessler ML. Imaging and Alignment for Image-Guided Radiation Therapy. J Clin Oncol. 2007;25:931–937. doi: 10.1200/JCO.2006.09.7998. [DOI] [PubMed] [Google Scholar]

- 23.Boswell S, Tome W, Jeraj R, et al. Automatic registration of megavoltage to kilovoltage CT images in helical tomotherapy: An evaluation of the setup verification process for the special case of a rigid head phantom. Medical Physics. 2006;33:4395–4404. doi: 10.1118/1.2349698. [DOI] [PubMed] [Google Scholar]

- 24.Roche A, Malandain G, Pennec X, et al. Medical Image Computing and Computer-Assisted Interventation — MICCAI’98. 1998. The Correlation Ratio as a New Similarity Measure for Multimodal Image Registration; p. 1115. [Google Scholar]

- 25.Ruchala KJ, Olivera GH, Kapatoes JM. Limited-data image registration for radiotherapy positioning and verification. International journal of radiation oncology, biology, physics. 2002;54:592–605. doi: 10.1016/s0360-3016(02)02895-x. [DOI] [PubMed] [Google Scholar]

- 26.Yan H, Zhang L, Yin F-F. A Phantom Study on Target Localization Accuracy Using Cone-Beam Computed Tomography. Clinical Medicine: Oncology. 2008;2008:501. doi: 10.4137/cmo.s808. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Xing L, Thorndyke B, Schreibmann E, et al. Overview of image-guided radiation therapy. Medical dosimetry : official journal of the American Association of Medical Dosimetrists. 2006;31:91–112. doi: 10.1016/j.meddos.2005.12.004. [DOI] [PubMed] [Google Scholar]

- 28.Kessler ML. Image registration and data fusion in radiation therapy. Br J Radiol. 2006;79:S99–108. doi: 10.1259/bjr/70617164. [DOI] [PubMed] [Google Scholar]

- 29.Hawkins MA, Brock KK, Eccles C, et al. Assessment of residual error in liver position using kV cone-beam computed tomography for liver cancer high-precision radiation therapy. International journal of radiation oncology, biology, physics. 2006;66:610–619. doi: 10.1016/j.ijrobp.2006.03.026. [DOI] [PubMed] [Google Scholar]

- 30.Sharpe M, Brock KK. Quality Assurance of Serial 3D Image Registration, Fusion, and Segmentation. International journal of radiation oncology, biology, physics. 2008;71:S33–S37. doi: 10.1016/j.ijrobp.2007.06.087. [DOI] [PubMed] [Google Scholar]

- 31.Birkfellner W, Stock M, Figl M, et al. Stochastic rank correlation: A robust merit function for 2D/3D registration of image data obtained at different energies. Medical Physics. 2009;36:3420–3428. doi: 10.1118/1.3157111. [DOI] [PMC free article] [PubMed] [Google Scholar]