Abstract

Introduction

Population-based surveys are used to assess colorectal cancer (CRC) screening rates, but may be subject to self-report biases. Clinical data from electronic health records (EHR) are another data source for assessing screening rates and self-report bias; however, use of EHR data for population research is relatively new. We sought to compare CRC screening rates from a self-report survey, the 2007 California Health Interview Survey (CHIS), to EHR data from Palo Alto Medical Foundation (PAMF), a multi-specialty healthcare organization serving three counties in California.

Methods

Ever- and up-to-date CRC screening rates were compared between CHIS respondents (N=18,748) and PAMF patients (N=26,283). Both samples were limited to English proficient subjects aged 51–75 with health insurance and a physician visit in the past two years. PAMF rates were age-sex standardized to the CHIS population. Analyses were stratified by racial/ethnic group.

Results

EHR data included PAMF internally completed tests (84%), and patient-reported externally completed tests which were either confirmed (7%) or unconfirmed (9%) by a physician. When excluding unconfirmed tests, PAMF screening rates were 6–14 percentage points lower than CHIS rates, for both ever- and up-to-date CRC screening among Non-Hispanic White, Black, Hispanic/Latino, Chinese, Filipino and Japanese subjects. When including unconfirmed tests, differences in screening rates between the two data sets were minimal.

Conclusion

Comparability of CRC screening rates from survey data and clinic-based EHR data depends on whether or not unconfirmed patient-reported tests in EHR are included. This indicates a need for validated methods of calculating CRC screening rates in EHR data.

Keywords: colorectal cancer screening, self-report bias, validity, California Health Interview Survey, prevalence

Introduction

Colorectal cancer (CRC) is the second leading cause of cancer deaths in the United States.1 Routine screening of all men and women aged 50 years or older could reduce CRC deaths by up to 60%,2 but screening is underutilized and disparities between some racial/ethnic groups have been increasing rather than decreasing.3–6 Large-scale surveys such as the National Health Interview Survey and the California Health Interview Survey (CHIS) are periodically used to assess self-reported CRC screening to monitor trends in screening utilization and draw attention to population groups with low screening rates. However, surveillance of CRC screening through self-report surveys may be inaccurate due to recall bias, social desirability bias and respondents’ lack of knowledge regarding screening tests.7–8 Due to these factors, survey data are generally thought to overestimate cancer screening utilization.9 Some studies with limited samples have included validation of self-report through provider records to estimate the accuracy of self-reports.7, 10–12 However, it is extremely challenging to verify self-reported CRC screening in a large population-based sample with interviewees receiving medical care in many clinical settings10 and in many cases from more than one provider.13 Due to Health Insurance Portability and Accountability Act (HIPAA) regulations, each interviewee would have to provide a written release allowing their provider to report their CRC screening history to a research group. Thus, verification of self-reported CRC screening in a large population-based sample would require multiple contacts by mail and phone with thousands of patients and physicians. In addition, studies suggest that only a small proportion of survey participants agree to release their medical information, which would introduce selection bias.10

Given these difficulties, there is a need for alternative approaches to assessing population CRC screening rates and the accuracy of self-reported screening. One possible option is to use clinical data in electronic health records (EHR). The use of EHR data for population research is relatively new, and it is important to assess comparability of EHR data with other more commonly used data sources for CRC screening rates. EHR data may be seen as comparable to manual chart review, which is considered the gold standard for assessing CRC screening. However, clinical data from EHR have their own inherent limitations, including a highly selected, usually insured population. EHR data may also come from a particular geographic region and may not fully represent all areas of a broader region, such as a state. One could not expect to use EHR to validate self-report at the individual respondent level; in most settings, it is not possible to match individual survey respondents with their EHR. However, the survey and clinical samples could be made highly comparable on an aggregate level using standardization techniques and restrictions on geographic location, race/ethnicity, health insurance status and other characteristics. Comparison of screening rates in the two similarly defined and circumscribed samples could provide insight as to the magnitude of self-report bias in survey data.

To assess the feasibility of this approach, we compared rates of CRC screening reported by CHIS participants to rates of screening based on the EHR of patients from the Palo Alto Medical Foundation (PAMF), a multi-specialty healthcare organization serving three counties in California. To promote comparability, both samples were restricted to adults aged 51–75 years who had health insurance, were English proficient, and had visited a physician in the past two years, and comparisons were made within racial/ethnic groups. Both samples were racially/ethnically diverse, reflecting the population demographics of the state of California.

Methods

CHIS 2007 (most recent year available) public use data were used to obtain population-based estimates for the state of California. The CHIS is the largest state health survey in the U.S. and is designed to provide population-based statewide estimates of health indicators for all major racial/ethnic groups and several Asian racial/ethnic subgroups.14 The CHIS, conducted biennially since 2001, employs a multi-stage sampling design in which households are selected by random-digit dialing to obtain a representative sample of the California population. Interviews are conducted in English, Spanish, Cantonese, Mandarin, Korean and Vietnamese. The CHIS 2007 surveyed over 53,000 households and over 51,000 adults.

To increase comparability between the CHIS and PAMF samples, analyses were confined to respondents aged 51–75 years who were currently insured, Non-Hispanic White (NHW), Black/African American, Asian or Hispanic/Latino, English proficient, and who reported seeing a physician in the past two years about their health. Of 22,833 CHIS respondents aged 51–75 years, 18,748 (82%) met these criteria. We used CHIS data from the state of California rather than just the three counties of PAMF’s service area to obtain more statistically stable estimates for smaller racial/ethnic groups. We used Office of Management and Budget racial/ethnic categories for major racial/ethnic groups15 to match PAMF categories. Asian racial/ethnic subgroups with sufficient sample size, Chinese, Filipino, and Japanese, were also included in stratified analyses. Estimates for other Asian subgroups could not be obtained from CHIS due to small sample sizes. Respondents were classified as English proficient if they spoke English at home or, if another language was spoken at home, reported that they spoke English well or very well.

CHIS respondents were asked several items regarding history of CRC screening tests. We defined ever-screened for CRC as ever had a colonoscopy, sigmoidoscopy, or fecal occult blood test (FOBT), and up-to-date with CRC screening as a colonoscopy within 10 years, sigmoidoscopy within 5 years or FOBT in the past year.
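The two outcome definitions above can be sketched in a few lines of Python. This is an illustration only, not the code used in the study: the function names, the dictionary layout, and the choice to treat a test exactly at the window boundary as up-to-date are all assumptions.

```python
# Look-back windows in years, taken from the definition in the text.
# Treating "within N years" as <= N is an assumption made for this sketch.
WINDOWS = {"colonoscopy": 10, "sigmoidoscopy": 5, "fobt": 1}


def ever_screened(years_since):
    """True if the respondent reported ever having any of the three tests.

    `years_since` maps a test name to the years elapsed since the most
    recent test, or omits the test (or maps it to None) if never done.
    """
    return any(years_since.get(test) is not None for test in WINDOWS)


def up_to_date(years_since):
    """True if at least one test falls inside its look-back window."""
    return any(
        years_since.get(test) is not None and years_since[test] <= limit
        for test, limit in WINDOWS.items()
    )


# Hypothetical respondents, for illustration only.
recent_colonoscopy = {"colonoscopy": 8}
old_sigmoidoscopy = {"sigmoidoscopy": 7}
never_tested = {}
```

Under these assumptions, a respondent whose only test was a sigmoidoscopy 7 years ago counts as ever-screened but not up-to-date.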

Data from five PAMF clinics with established primary care services were used. Compared to the underlying service area population (Alameda, Santa Clara, and San Mateo counties), PAMF patients aged 51–75 years have a similar sex distribution but include slightly more NHWs, and have higher education and income levels than the CHIS sample.16–17 Roughly 13% of residents in counties with PAMF clinics are PAMF patients, and 10% of California’s population lives in these three counties. The Epic EHR system18 has been in use at all PAMF clinics since 2000. Self-identified race/ethnicity and language information has been collected at clinic visits and entered into the Epic EHR system at PAMF since May 2008.19

At PAMF, a HIPAA-limited dataset20 drawn from the EHR of 26,283 patients aged 51–75 years who had a primary care physician, at least one primary care visit in the past two years, self-reported race/ethnicity, and English proficiency was used to determine rates of CRC screening. Institutional Review Board approval was obtained to extract information from patients’ EHR for this study. A patient was determined to have ever received screening if there was any record of billing, completed procedure, or confirmed external completion for a colonoscopy, sigmoidoscopy, or FOBT/fecal immunochemical test (FIT) in the PAMF EHR. PAMF switched from FOBT to FIT in July 2007. Similarly, up-to-date screening was defined as record of billing, completed test, or confirmed external completion of a colonoscopy in the past 10 years, sigmoidoscopy in the past 5 years, or FOBT/FIT in the past year. Year 2010 was used to calculate rates, to allow for 10 years (2000–2010) for accurate data capture. In some analyses, unconfirmed patient-reported externally completed tests were included.
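To make the role of unconfirmed tests concrete, the following minimal Python sketch applies the EHR-based definition with a switch for including or excluding unconfirmed patient-reported tests. It is not the study's extraction code: the record layout, the source labels, and the year-level date granularity are assumptions introduced for illustration.

```python
from dataclasses import dataclass

# Look-back windows in years; "stool_test" stands for FOBT or FIT.
WINDOWS = {"colonoscopy": 10, "sigmoidoscopy": 5, "stool_test": 1}


@dataclass
class ScreeningRecord:
    test: str    # "colonoscopy", "sigmoidoscopy", or "stool_test"
    year: int    # calendar year of the test (year-level dates are an assumption)
    source: str  # "internal", "external_confirmed", or "external_unconfirmed"


def screening_status(records, index_year=2010, include_unconfirmed=False):
    """Return (ever_screened, up_to_date) for one patient's records."""
    usable = [
        r for r in records
        if include_unconfirmed or r.source != "external_unconfirmed"
    ]
    ever = len(usable) > 0
    current = any(
        0 <= index_year - r.year <= WINDOWS[r.test] for r in usable
    )
    return ever, current


# Hypothetical patient: an old internal stool test and a more recent
# colonoscopy that the patient reported but no physician confirmed.
patient = [
    ScreeningRecord("stool_test", 2005, "internal"),
    ScreeningRecord("colonoscopy", 2006, "external_unconfirmed"),
]
```

For this hypothetical patient, excluding the unconfirmed colonoscopy leaves the patient ever-screened but not up-to-date, while including it makes the patient up-to-date. Patients of this kind are the ones whose classification changes between the two sets of analyses.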

Estimates of the proportion of California residents who were ever-screened and up-to-date with CRC screening were obtained using CHIS replicate weights and the survey proportion command in Stata 11.21 Analyses were stratified by race/ethnicity and standardized to the CHIS 2007 age and sex distribution. PAMF proportions were age-sex standardized to the same distribution. All proportions are reported with 95% confidence intervals, and non-overlapping confidence intervals were used to determine statistically significant differences for CHIS-PAMF comparisons. Type of CRC screening test obtained by up-to-date subjects was also tabulated for both the CHIS and PAMF samples.
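The standardization and comparison steps can be illustrated with a short Python sketch. It assumes direct standardization (a weighted average of stratum-specific proportions, with weights taken from the standard population) and it omits the survey replicate weights and variance estimation that the Stata analysis handled. The stratum labels and numbers are hypothetical.

```python
def direct_standardize(stratum_rates, standard_counts):
    """Weighted average of stratum-specific rates.

    `stratum_rates` maps each age-sex stratum to the observed proportion
    in the sample being standardized; `standard_counts` maps the same
    strata to their sizes in the standard population.
    """
    total = sum(standard_counts.values())
    return sum(stratum_rates[s] * n for s, n in standard_counts.items()) / total


def intervals_overlap(ci_a, ci_b):
    """True if two (lower, upper) confidence intervals share any value."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]


# Hypothetical age-sex strata and values, for illustration only.
clinic_rates = {"F 51-64": 0.60, "F 65-75": 0.70, "M 51-64": 0.55, "M 65-75": 0.68}
survey_counts = {"F 51-64": 300, "F 65-75": 200, "M 51-64": 300, "M 65-75": 200}
standardized = direct_standardize(clinic_rates, survey_counts)
```

A difference would then be flagged as statistically significant when `intervals_overlap` returns `False` for the two intervals. Note that this non-overlap rule is generally more conservative than a formal test of the difference between two proportions.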

Results

Sample sizes are presented in Table 1. While the largest racial/ethnic group was NHWs, there was sufficient representation of Black/African Americans, Asians and Hispanics/Latinos to support comparisons. Among Asian racial/ethnic subgroups, there were sufficient individuals in the Chinese, Filipino and Japanese subgroups to obtain stable estimates.

Table 1.

California Health Interview Survey (CHIS) and Palo Alto Medical Foundation (PAMF) samples by racial/ethnic group.

| Racial/ethnic group | CHIS (N = 18,748), n (%) | PAMF (N = 26,283), n (%) |
|---|---|---|
| Non-Hispanic White | 15,179 (81.0%) | 19,676 (74.9%) |
| Black/African American | 992 (5.3%) | 635 (2.4%) |
| Hispanic/Latino | 1,604 (8.6%) | 1,489 (5.7%) |
| Asian | 973 (5.2%) | 4,483 (17.0%) |
| Asian subgroups | | |
| Chinese | 307 (1.6%) | 2,110 (8.0%) |
| Filipino | 235 (1.3%) | 713 (2.7%) |
| Japanese | 191 (1.0%) | 458 (1.7%) |

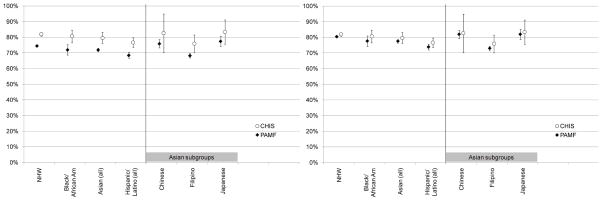

Age-sex standardized proportions of individuals ever-screened for CRC for CHIS and PAMF by race/ethnicity are presented in Figure 1. CHIS proportions were 6–9 percentage points higher than PAMF proportions for each racial/ethnic group, and statistically significantly higher for the categories NHW, Black/African American, Asian (all), and Hispanic/Latino (all). The relative differences between racial/ethnic groups were similar for CHIS and for PAMF, with Japanese having the highest rates and Filipino having the lowest rates. When unconfirmed screening procedures were included, the PAMF proportions increased an additional 4–6 percentage points, and there were no statistically significant differences between PAMF and CHIS estimates.

Figure 1.

Proportions ever-screened for colorectal cancer (95% CI) based on California Health Interview Survey (CHIS) and Palo Alto Medical Foundation (PAMF) data. Left: PAMF unconfirmed patient-reported tests not included. Right: PAMF unconfirmed patient-reported tests included.

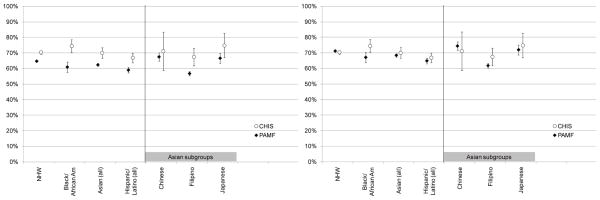

Age-sex standardized proportions of individuals up-to-date with CRC screening for CHIS and PAMF by race/ethnicity are presented in Figure 2. When unconfirmed tests were excluded, CHIS proportions were 4–14 percentage points higher than PAMF proportions across racial/ethnic groups, and statistically significantly higher in NHW, Black/African American, Asian (all), Hispanic/Latino (all), and Filipino groups. PAMF NHWs had a slightly lower CRC up-to-date screening rate (65%; CI: 64–65%) compared to CHIS NHWs (70%; CI: 69–72%). Most of the relative differences between racial/ethnic groups were similar between CHIS and PAMF (see Figure 2). When unconfirmed tests were included, the PAMF proportions increased an additional 5–7 percentage points, and only the estimates for Black/African Americans were statistically significantly different between CHIS and PAMF (75%; CI: 71–78% and 67%; CI: 63–71%, respectively).

Figure 2.

Proportions up-to-date with colorectal cancer screening (95% CI) based on California Health Interview Survey (CHIS) and Palo Alto Medical Foundation (PAMF) data. Left: PAMF unconfirmed patient-reported tests not included. Right: PAMF unconfirmed patient-reported tests included.

Among CHIS respondents who were up-to-date with CRC screening, 45% met screening criteria via a colonoscopy, 11% via a sigmoidoscopy, 13% via an FOBT, 28% by a combination of two tests, and 4% by a combination of all three tests. Among PAMF patients up-to-date with screening, 84% met screening criteria via a colonoscopy, 2% via sigmoidoscopy, 5% via FIT, 9% by two tests, and <1% by all three tests.

Conclusion

We compared CRC screening rates in two different samples, a statewide telephone health survey and clinical data from EHR, with sample restrictions and standardization to promote comparability. A striking finding was that these two different samples, with dissimilar limitations, showed similar patterns across racial/ethnic groups and yielded similar estimates of CRC screening rates when unconfirmed patient-reported tests were included in the EHR data source. Possible explanations are that the biases in self-report data and clinical data are minimal, or that they occur in a similar direction. However, complexities remain. While manual chart review may be regarded as the “gold standard” in terms of validation of procedures received, our results highlight the fact that EHR data can contain some data uncertainties, such as unconfirmed patient reports of externally completed tests. In PAMF EHR, among patients who were considered ever-screened, 5.8% were considered ever-screened exclusively by unconfirmed tests. Among patients who were considered up-to-date, 6.3% were considered up-to-date with CRC screening exclusively by unconfirmed tests. When unconfirmed tests were excluded, our analysis suggests that screening prevalence based on EHR is lower than self-reported CRC screening. Self-reported CRC screening corresponded well with screening prevalence based on EHR when unconfirmed tests were included. It is unclear whether one should include or exclude patient-reported screening tests that are unconfirmed by physicians for accurate calculation of screening rates in EHR. One of the reasons for this uncertainty is that validated definitions for calculating CRC screening rates using EHR data have not been developed. This is an important area for future research.

In interpreting our findings, it is important to note that several factors may have enhanced concordance. Our comparisons of ever-screened and up-to-date with CRC screening included receipt of a CRC screening test for any reason (screening or diagnostic). Reason for test was not asked in the 2007 CHIS and thus was unavailable. Previous studies have shown discrepancies in self-report and medical records regarding the reason for receipt of a CRC screening test.12 In addition, we compared CRC screening during a rather long time period: ever or, in the case of up-to-date CRC screening, the past 10 years, since most respondents were up-to-date due to a colonoscopy rather than a stool blood test. Previous studies have shown that receipt of a screening test by itself is recalled more accurately than the exact time at which the test was received12 and the long time interval for up-to-date colonoscopy may have allowed more leeway in terms of accuracy of recall. We compared any type of CRC screening, without distinguishing colonoscopy, sigmoidoscopy or stool blood test. This eliminates incorrect recall of type of test (e.g., sigmoidoscopy versus colonoscopy), which in some studies lowers accuracy of self-report.12, 22 There were differences in the type of screening test reported; however, the majority of subjects in both samples reported a colonoscopy rather than a stool blood test. This is consistent with the observed trend that colonoscopy is currently the most widely used screening test.23 Several studies have shown that colonoscopy is recalled more accurately and may be more likely to be documented in a patient’s chart than a stool blood test.10, 22, 24 All of these factors may have contributed to similar estimates of CRC screening in the two samples when including unconfirmed tests in the EHR.

One of the strengths of this study is the large sample sizes for less well-studied racial/ethnic subgroups. Few studies have compared self-reported CRC screening and provider records among non-NHW subjects, and those that have are often limited by small sample sizes.10, 12, 22 Our comparisons show similar estimates of CRC screening based on self-report and EHRs in all racial/ethnic groups that were compared, except for a lower rate for up-to-date screening in Black/African Americans at PAMF (Figure 2). An important weakness of this study is that it excludes respondents without health insurance and those who are not fluent in English. These are typically groups with low levels of CRC screening6, 25 and they may be more likely to self-report CRC screening incorrectly due to social desirability bias or lack of knowledge of screening tests. The two samples were also from different years (CHIS 2007, PAMF 2010) due to data availability (CHIS) and completeness (PAMF) issues. We might have expected the PAMF rates to be slightly higher due to anticipated temporal trends in CHIS, though this is impossible to predict. In addition, PAMF EHR data on CRC screening may not be completely accurate. We used PAMF billing data to capture CRC screening tests completed in the PAMF clinics, an approach that may miss tests performed at outside clinics. These screening tests in outside clinics may be captured in the patient-reported externally completed category, but with less accuracy than the billing data.

In summary, comparison of self-reported CRC screening rates in samples of survey respondents with screening rates in clinical samples based on EHR, with sample restriction, stratification and standardization to promote comparability, can provide insights on the accuracy of self-report data. Strengths of this approach include low cost, large sample sizes, and avoidance of selection bias that can occur when respondents must consent to medical records release, while limitations include difficulties in aligning clinical and survey samples, the presence of unconfirmed patient-reported tests in EHR, and inability to include individuals without health insurance. There is a need for further research on the use of EHR data to estimate CRC screening rates, including validated definitions of screening in the presence of unconfirmed patient-reported external completion of tests.

Acknowledgments

Financial Support: Crespi was supported by NIH/NCI grant P30 CA 16042.

The authors gratefully acknowledge Kristen M. J. Azar, RN, MSN/MPH, for her editorial assistance in preparation of the manuscript.

Footnotes

Competing Interests

The authors declare that they have no competing interests.

References

- 1.American Cancer Society. [Accessed March 8, 2010];Cancer Facts & Figures. 2009 http://www.cancer.org/downloads/STT/500809web.pdf.

- 2.U.S. Preventive Services Task Force (USPSTF) [Accessed August 1, 2009];Screening for colorectal cancer. 2008 http://www.ahrq.gov/clinic/uspstf/uspscolo.htm.

- 3.Meissner HI, Breen N, Klabunde CN, Vernon SW. Patterns of colorectal cancer screening uptake among men and women in the United States. Cancer Epidemiol Biomarkers Prev. 2006 Feb;15(2):389–394. doi: 10.1158/1055-9965.EPI-05-0678.

- 4.Wong ST, Gildengorin G, Nguyen T, Mock J. Disparities in colorectal cancer screening rates among Asian Americans and non-Latino whites. Cancer. 2005 Dec 15;104(12 Suppl):2940–2947. doi: 10.1002/cncr.21521.

- 5.Pollack LA, Blackman DK, Wilson KM, Seeff LC, Nadel MR. Colorectal cancer test use among Hispanic and non-Hispanic U.S. populations. Prev Chronic Dis. 2006 Apr;3(2):A50.

- 6.Maxwell AE, Crespi CM. Trends in colorectal cancer screening utilization among ethnic groups in California: are we closing the gap? Cancer Epidemiol Biomarkers Prev. 2009 Mar;18(3):752–759. doi: 10.1158/1055-9965.EPI-08-0608.

- 7.Ferrante JM, Ohman-Strickland P, Hahn KA, et al. Self-report versus medical records for assessing cancer-preventive services delivery. Cancer Epidemiol Biomarkers Prev. 2008 Nov;17(11):2987–2994. doi: 10.1158/1055-9965.EPI-08-0177.

- 8.Newell SA, Girgis A, Sanson-Fisher RW, Savolainen NJ. The accuracy of self-reported health behaviors and risk factors relating to cancer and cardiovascular disease in the general population: a critical review. Am J Prev Med. 1999 Oct;17(3):211–229. doi: 10.1016/s0749-3797(99)00069-0.

- 9.Rauscher GH, Johnson TP, Cho YI, Walk JA. Accuracy of self-reported cancer-screening histories: a meta-analysis. Cancer Epidemiol Biomarkers Prev. 2008 Apr;17(4):748–757. doi: 10.1158/1055-9965.EPI-07-2629.

- 10.Bastani R, Glenn BA, Maxwell AE, Ganz PA, Mojica CM, Chang LC. Validation of self-reported colorectal cancer (CRC) screening in a study of ethnically diverse first-degree relatives of CRC cases. Cancer Epidemiol Biomarkers Prev. 2008 Apr;17(4):791–798. doi: 10.1158/1055-9965.EPI-07-2625.

- 11.Griffin JM, Burgess D, Vernon SW, et al. Are gender differences in colorectal cancer screening rates due to differences in self-reporting? Prev Med. 2009;49(5):436–441. doi: 10.1016/j.ypmed.2009.09.013.

- 12.Hall HI, Van Den Eeden SK, Tolsma DD, et al. Testing for prostate and colorectal cancer: comparison of self-report and medical record audit. Prev Med. 2004 Jul;39(1):27–35. doi: 10.1016/j.ypmed.2004.02.024.

- 13.Schenck AP, Klabunde CN, Warren JL, et al. Evaluation of claims, medical records, and self-report for measuring fecal occult blood testing among Medicare enrollees in fee for service. Cancer Epidemiol Biomarkers Prev. 2008;17(4):799–804. doi: 10.1158/1055-9965.EPI-07-2620.

- 14.California Health Interview Survey. [Accessed May 12, 2010];About CHIS. http://www.chis.ucla.edu/about.html.

- 15.Office of Management and Budget. [Accessed May 10, 2010];Revisions to the Standard for the Classification of Federal Data on Race and Ethnicity. 1997 http://www.whitehouse.gov/omb/fedreg_1997standards/

- 16.U.S. Census Bureau. American Community Survey. [Accessed May 10, 2010];Table C02003. 2006–2008 Available at: http://factfinder.census.gov/servlet/DTTable?_bm=y&-context=dt&-ds_name=ACS_2008_3YR_G00_&-CONTEXT=dt&-mt_name=ACS_2008_3YR_G2000_B02003&-mt_name=ACS_2008_3YR_G2000_C02003&-tree_id=3308&-redoLog=false&-geo_id=05000US06001&-geo_id=05000US06081&-geo_id=05000US06085&-geo_id=NBSP&-search_results=01000US&-format=&-_lang=en.

- 17.U.S. Census Bureau. American Community Survey. [Accessed May 10, 2010];Table B1001. 2006–2008 Available at: http://factfinder.census.gov/servlet/DTTable?_bm=y&-context=dt&-ds_name=ACS_2008_3YR_G00_&-CONTEXT=dt&-mt_name=ACS_2008_3YR_G2000_B01001&-tree_id=3308&-redoLog=false&-geo_id=05000US06001&-geo_id=05000US06081&-geo_id=05000US06085&-geo_id=NBSP&-search_results=01000US&-format=&-_lang=en.

- 18. [Accessed September 10, 2009];Epic Electronic Health Record Software. Epic Web Site. http://www.epic.com.

- 19.Palaniappan LP, Wong EC, Shin JJ, Moreno MR, Otero-Sabogal R. Collecting patient race/ethnicity and primary language data in ambulatory care settings: a case study in methodology. Health Serv Res. 2009 Oct;44(5 Pt 1):1750–1761. doi: 10.1111/j.1475-6773.2009.00992.x.

- 20.National Institutes of Health (NIH) Research Repositories, Databases, and the HIPAA Privacy Rule. 2004.

- 21.Stata Corporation. Statistical Software: Release 10.1. Stata Corporation; College Station, TX: 2009.

- 22.Baier M, Calonge N, Cutter G, et al. Validity of self-reported colorectal cancer screening behavior. Cancer Epidemiol Biomarkers Prev. 2000 Feb;9(2):229–232.

- 23.NIH State-of-the-Science Conference. [Accessed March 8, 2010];Enhancing Use and Quality of Colorectal Cancer Screening: Draft Statement. 2010 http://consensus.nih.gov/2010/images/colorectal/colorectal_panel_stmt.pdf.

- 24.Madlensky L, McLaughlin J, Goel V. A comparison of self-reported colorectal cancer screening with medical records. Cancer Epidemiol Biomarkers Prev. 2003 Jul;12(7):656–659.

- 25.Maxwell AE, Crespi CM, Antonio C, Lu P. Explaining disparities in colorectal cancer screening among five Asian ethnic groups: A population-based study in California. BMC Cancer. 2010;10(214) doi: 10.1186/1471-2407-10-214.