Abstract

We introduce variational Bayes methods for fast approximate inference in functional regression analysis. Both the standard cross-sectional and the increasingly common longitudinal settings are treated. The methodology allows Bayesian functional regression analyses to be conducted without the computational overhead of Monte Carlo methods. Confidence intervals of the model parameters are obtained both using the approximate variational approach and nonparametric resampling of clusters. The latter approach is possible because our variational Bayes functional regression approach is computationally efficient. A simulation study indicates that variational Bayes is highly accurate in estimating the parameters of interest and in approximating the Markov chain Monte Carlo-sampled joint posterior distribution of the model parameters. The methods apply generally, but are motivated by a longitudinal neuroimaging study of multiple sclerosis patients. Code used in simulations is made available as a web-supplement.

Keywords and phrases: Approximate Bayesian inference, Markov chain Monte Carlo, penalized splines

1. Introduction

Due to ever-expanding methods for the acquisition and storage of information, functional data is often encountered in scientific applications. A common problem in the field of functional data analysis is determining the relationship between a scalar outcome Y and a densely observed functional predictor X(t) [18, 22, 9]. Increasingly, this problem is longitudinal in that both the functional predictors and scalar outcomes are observed at several visits for each subject. Bayesian approaches to cross-sectional and longitudinal functional regression possess a number of advantages, including the ability to jointly model the observed functions and scalar outcomes and easily constructed credible intervals [5, 7]. However, these approaches require computationally expensive Markov chain Monte Carlo (MCMC) simulations of joint posterior distributions. The goal of this paper is to introduce a fast and scalable alternative to accommodate new types of data sets.

Variational approximations, now regularly used in computer science, are a collection of techniques for deriving approximate solutions to inference problems [10, 11, 25]. They have a growing visibility in the statistics literature [16, 19, 24]. In the Bayesian context, these methods are useful in approximating intractable posterior density functions. While this approximation sacrifices some of MCMC’s accuracy, it provides large gains in terms of computational feasibility, especially in large-data settings.

In this article, we derive an iterative algorithm for approximate Bayesian inference in functional regression. Using this algorithm, inference on model parameters can be obtained several orders of magnitude faster than MCMC sampling methods. Importantly, the construction of credible intervals for the functional coefficient is straightforward. Moreover, this procedure retains the ability to jointly model the predictor process and the scalar outcome. The computational advantage conveyed by the variational methods also allows resampling techniques, such as the nonparametric bootstrap of subjects, to be used. Unlike MCMC sampling, the variational approach cannot be made arbitrarily accurate. However, simulations indicate that the quality of the approximation is high in our setting. Our variational method is not designed to replace MCMC sampling, but it is a useful additional inferential tool in that it provides near-instant and highly accurate approximate posterior distributions. This will become increasingly relevant as functional datasets become larger and more complex.

In particular, we develop variational Bayes methods for two functional regression models: the classic cross-sectional case, in which a single scalar outcome and functional predictor are observed for each subject; and the more recent longitudinal case, in which scalar outcomes and functional predictors are observed repeatedly for each subject. This methodology is based on a penalized approach to functional regression that is flexible and widely applicable [6]. Although variational techniques typically incur initial algebraic and implementation costs, the present article alleviates these considerations.

We apply the methods developed to a longitudinal neuroimaging study, in which multiple sclerosis patients undergo both tests of cognitive ability and a diffusion tensor imaging scan at each of several visits. From the diffusion tensor imaging scans, we construct functional predictors that provide detailed quantitative information about major white matter fiber bundles (see Figure 1). Because multiple sclerosis results in the degradation of cerebral white matter, researchers hope to use the functional predictors and cognitive disability measures to understand the progression of the disease. This study was previously analyzed in [7].

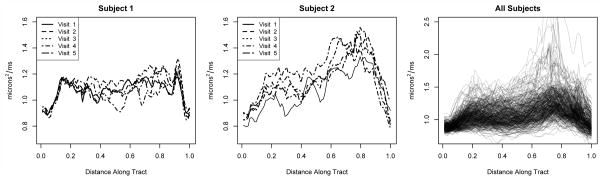

Fig 1.

The functional predictor used in our diffusion tensor imaging application. The left and middle panels show the functional predictors observed for individual subjects; the right panel shows the collection of all observed functions.

In Section 2 we introduce variational Bayes and a penalized approach to functional regression. Section 3 combines these ideas and develops a scalable iterative algorithm for approximate Bayesian inference in functional regression. The results of a simulation study are described in Section 4 and a real-data analysis is performed in Section 5. We conclude the main text with a discussion in Section 6. Appendices A and B contain algebraic derivations and expressions used in the construction of the iterative algorithm. All code used in the simulation study is available as a web-supplement to this article.

2. Background

In the following subsections we introduce variational approximations for Bayesian inference and an approach to functional regression which uses penalized B-splines to estimate the coefficient function.

2.1. Variational Bayes

Here we give an overview of variational Bayes; for a more complete treatment see [19] and [3], Chapter 10.

Bayesian inference is based on the posterior density function

$$p(\theta \mid y) = \frac{p(y, \theta)}{p(y)},$$

where θ ∈ Θ is the parameter vector, y is the observed data, p(y) is the marginal likelihood of the observed data, and p(y, θ) is the joint likelihood of the data and model parameters. The goal of the density transform approach is to approximate the posterior density p(θ|y) by a function q(θ) for which the q-specific lower bound on the marginal likelihood (defined below) is more tractable than the marginal likelihood itself. The first step is to restrict q to a more manageable class of densities and choose the element of that class with minimum Kullback-Leibler distance from p(θ|y).

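More concretely, let q be an arbitrary density function over Θ. Then

$$\log p(y) = \int q(\theta) \log\left\{\frac{p(y, \theta)}{q(\theta)}\right\} d\theta + \int q(\theta) \log\left\{\frac{q(\theta)}{p(\theta \mid y)}\right\} d\theta \;\ge\; \int q(\theta) \log\left\{\frac{p(y, \theta)}{q(\theta)}\right\} d\theta \tag{2.1}$$

with equality if and only if q(θ) = p(θ|y) almost everywhere [12]. It follows that $p(y) \ge \exp\left[\int q(\theta) \log\{p(y, \theta)/q(\theta)\}\, d\theta\right]$; we define the q-specific lower bound on the marginal likelihood as

$$p(y; q) \equiv \exp\left[\int q(\theta) \log\left\{\frac{p(y, \theta)}{q(\theta)}\right\} d\theta\right]. \tag{2.2}$$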

It can be shown that minimizing the Kullback-Leibler distance between q(θ) and p(θ|y) is equivalent to maximizing p(y; q). Stated generally, the following result holds.

Result 2.1

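Let u and v be continuous random vectors with joint density p(u, v). Then

$$\sup_{q} \int q(u) \log\left\{\frac{p(u, v)}{q(u)}\right\} du,$$

where the supremum is taken over density functions q, is achieved by q*(u) = p(u|v).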
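As a small numerical illustration of the lower bound and of Result 2.1, the following sketch uses a discrete parameter, so that the integrals become sums. The three joint probabilities are hypothetical values chosen only for illustration. The gap between log p(y) and the log lower bound is the Kullback-Leibler distance between q and the posterior; it is positive for a uniform q and vanishes when q equals the posterior.

```python
import math

# Hypothetical discrete model: theta takes three values and y is held fixed
# at its observed value, so joint[k] plays the role of p(y, theta_k).
joint = [0.10, 0.25, 0.05]
marginal = sum(joint)                      # p(y)
posterior = [p / marginal for p in joint]  # p(theta_k | y)


def log_lower_bound(q):
    """Discrete analogue of log p(y; q) = sum_k q_k log{p(y, theta_k) / q_k}."""
    return sum(qk * math.log(pk / qk) for qk, pk in zip(q, joint) if qk > 0)


uniform = [1.0 / 3.0] * 3

# log p(y) minus the log lower bound equals KL(q || posterior) >= 0.
gap_uniform = math.log(marginal) - log_lower_bound(uniform)
gap_posterior = math.log(marginal) - log_lower_bound(posterior)

print(f"gap for uniform q:   {gap_uniform:.6f}")
print(f"gap for posterior q: {gap_posterior:.2e}")
```
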

Next, we restrict q to a class of functions for which p(y; q) is more tractable than p(y). While several restrictions are possible, here we focus on the product density transform: we assume that for some partition {θ1, …, θL} of θ it is possible to write $q(\theta) = \prod_{l=1}^{L} q_l(\theta_l)$. In the functional regression setting, the posterior dependence among some subsets of the model parameters is weak, and the assumption that q factorizes provides accurate approximate inference. In other settings where the posterior dependence of parameters is stronger, this assumption may lead to poor approximations and inference because it fails to account for correlations between model parameters. There are three simple strategies to gain insight into which sets of parameters are weakly correlated a posteriori: 1) theoretical work on asymptotic posterior correlations; 2) Bayesian inference on smaller or simpler data sets; and 3) prior experience. If posterior correlation is potentially problematic, a more flexible component density ql that allows for this correlation could be used; however, this must be balanced against the simplification desired in the approximating class of functions. While none of the approaches above is infallible, when combined with powerful variational approximations they can provide a valuable alternative to MCMC-based Bayesian inference. The methods provided in this paper are intended as a reasonable and tractable complement to, and not a replacement for, MCMC-based Bayesian computation.

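Combining the assumption that q factorizes over a partition of θ with Result 2.1, we can derive explicit solutions for each factor ql(θl), 1 ≤ l ≤ L, in terms of the remaining factors. Solving for each factor in terms of the others leads to an iterative algorithm for obtaining a solution for q. The explicit solution for each ql(θl) is derived as follows. Assuming that q is subject to the factorization restriction, it follows that

$$\begin{aligned}
\log p(y; q) &= \int \prod_{l=1}^{L} q_l(\theta_l) \left\{ \log p(y, \theta) - \sum_{l=1}^{L} \log q_l(\theta_l) \right\} d\theta_1 \cdots d\theta_L \\
&= \int q_1(\theta_1) \left\{ \int \log p(y, \theta)\, q_2(\theta_2) \cdots q_L(\theta_L)\, d\theta_2 \cdots d\theta_L - \log q_1(\theta_1) \right\} d\theta_1 + \text{terms not involving } q_1.
\end{aligned}$$

Define the joint density function p̃ (y, θ1) to be

$$\tilde{p}(y, \theta_1) \equiv \exp\left\{ \int \log p(y, \theta)\, q_2(\theta_2) \cdots q_L(\theta_L)\, d\theta_2 \cdots d\theta_L \right\}$$

so that

$$\log p(y; q) = \int q_1(\theta_1) \log\left\{ \frac{\tilde{p}(y, \theta_1)}{q_1(\theta_1)} \right\} d\theta_1 + \text{terms not involving } q_1.$$

Then, using Result 2.1, the optimal q1 is

$$q_1^*(\theta_1) = \tilde{p}(\theta_1 \mid y) \equiv \frac{\tilde{p}(y, \theta_1)}{\int \tilde{p}(y, \theta_1)\, d\theta_1} \propto \exp\left\{ E_{\theta_{-1}} \log p(y, \theta) \right\},$$

where Eθ−1 log p(y, θ) is the expectation with respect to q2(θ2) … qL(θL). The same argument for l in 1, …, L yields optimal densities satisfying

$$q_l^*(\theta_l) \propto \exp\left\{ E_{\theta_{-l}} \log p(\theta_l \mid \text{rest}) \right\}, \quad 1 \le l \le L, \tag{2.3}$$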

where rest ≡ {y, θ1, …, θl−1, θl+1, …, θL} is the collection of all remaining parameters and the observed data. We update each factor in turn until the change in p(y; q) is negligible.
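To make the iterative scheme concrete, the following minimal sketch applies the product density transform to a much simpler model than the functional regression models considered in this article: a random sample x_i ~ N(μ, σ²) with priors μ ~ N(μ0, s2_0) and σ² ~ Inverse-Gamma(A, B), approximated by q(μ, σ²) = q(μ) q(σ²). The model, the function name `vb_normal`, and the hyperparameter defaults are assumptions made for illustration only. Each factor is updated in terms of the other, and the log lower bound is monitored for convergence.

```python
import math
import random


def vb_normal(x, mu0=0.0, s2_0=1e8, A=0.01, B=0.01, tol=1e-9, max_iter=100):
    """Mean-field VB for x_i ~ N(mu, sigma2), mu ~ N(mu0, s2_0), sigma2 ~ IG(A, B)."""
    n = len(x)
    xbar = sum(x) / n
    A_q = A + n / 2.0   # shape of q(sigma2); does not change across iterations
    e_inv_s2 = 1.0      # starting value for E_q[1 / sigma2]
    bounds = []
    for _ in range(max_iter):
        # Update q(mu) = N(m, v) given the current q(sigma2).
        v = 1.0 / (n * e_inv_s2 + 1.0 / s2_0)
        m = (n * xbar * e_inv_s2 + mu0 / s2_0) * v
        # Update q(sigma2) = IG(A_q, B_q) given the current q(mu).
        B_q = B + 0.5 * (sum((xi - m) ** 2 for xi in x) + n * v)
        e_inv_s2 = A_q / B_q
        # Simplified log lower bound; valid only immediately after the B_q update.
        lb = (0.5 - 0.5 * n * math.log(2 * math.pi)
              + 0.5 * math.log(v / s2_0)
              - ((m - mu0) ** 2 + v) / (2 * s2_0)
              + A * math.log(B) - A_q * math.log(B_q)
              + math.lgamma(A_q) - math.lgamma(A))
        bounds.append(lb)
        if len(bounds) > 1 and abs(bounds[-1] - bounds[-2]) < tol:
            break
    return m, v, A_q, B_q, bounds


random.seed(1)
x = [random.gauss(2.0, 1.5) for _ in range(200)]
m, v, A_q, B_q, bounds = vb_normal(x)
print(f"q(mu) mean {m:.3f}, E_q[sigma2] {B_q / (A_q - 1):.3f}, "
      f"{len(bounds)} iterations")
```

Because the simplified expression for the lower bound relies on cancellations that hold only right after the update of B_q, it is evaluated at the end of each cycle, in the same spirit as the remark on update order made for Algorithm 1 in Section 3.
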

2.2. Penalized functional regression

Next we introduce penalized approaches to cross-sectional and longitudinal functional regression [5, 6, 7].

In the cross-sectional case, we observe data of the form [Yi, Xi(t), zi] for subjects 1 ≤ i ≤ I, where Yi is a continuous outcome, Xi(t) is a functional covariate, and zi is a 1 × p vector of non-functional covariates. The linear functional regression model is given by [4, 21]

| (2.4) |

We call the parameter γ(t) the coefficient function. In practice, the predictor functions Xi(t) are observed over a discrete grid, and often with error. That is, we observe {Wi(tij): tij ∈ [0, 1]} for 1 ≤ i ≤ I and 1 ≤ j ≤ Ji, where and . The sampling scheme on which the functional predictors are observed may take a variety of forms: points may be equally or unequally spaced, sparse or dense at the subject level, identical or different across subjects. For simplicity, we will assume that all subjects are observed over the same grid {t1, …, tN } and are observed at an equal number of visits J. Extensions to different grids and different numbers of visits are straightforward, but considerably increase the notational complexity.
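Because the predictors are available only on a grid, the functional term in model (2.4), the integral of Xi(t)γ(t) over [0, 1], must be evaluated numerically. The following sketch, which is not part of the estimation procedure developed below, approximates this integral by the trapezoidal rule on a grid of length 93, as in our application. The predictor curve `x_i` is a hypothetical example; the coefficient function is the one used in the simulations of Section 4.

```python
import math


def trapezoid(values, grid):
    """Trapezoidal rule for a function sampled at the points in `grid`."""
    return sum(0.5 * (values[j] + values[j + 1]) * (grid[j + 1] - grid[j])
               for j in range(len(grid) - 1))


N = 93                                  # grid length used in the application
grid = [j / (N - 1) for j in range(N)]  # equally spaced points on [0, 1]

gamma = [math.cos(2 * math.pi * t) for t in grid]      # coefficient function
x_i = [1.0 + math.cos(2 * math.pi * t) for t in grid]  # hypothetical predictor

# Approximates the integral of x_i(t) * gamma(t) over [0, 1]; the exact value
# for these two curves is 1/2.
functional_term = trapezoid([x * g for x, g in zip(x_i, gamma)], grid)
print(functional_term)
```
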

To estimate the parameters in model (2.4), we use the following two-stage procedure. First, the predictor functions Xi(t) are expressed using a principal components (PC) decomposition. Second, the coefficient function γ(t) is estimated using penalized B-splines. Smoothness of γ̂(t) is explicitly induced via a mixed effects model. Specifically, let Σ̂X(s, t) be an estimator of the covariance operator Cov (Xi(s), Xi(t)) based on the available functional observations. Further, let $\hat{\Sigma}_X(s, t) = \sum_{k=1}^{\infty} \lambda_k \psi_k(s) \psi_k(t)$ be the spectral decomposition of Σ̂X(s, t), where λ1 ≥ λ2 ≥ · · · are the non-increasing eigenvalues and ψ(t) = {ψk(t): k ∈ Z+} are the corresponding orthonormal eigenfunctions. An approximation for Xi(t), based on a truncated Karhunen-Loève expansion, is given by $X_i(t) \approx \mu(t) + \sum_{k=1}^{K_x} c_{ik} \psi_k(t)$, where Kx is the truncation lag, the PC loadings $c_{ik} = \int_0^1 \{X_i(t) - \mu(t)\} \psi_k(t)\, dt$ are uncorrelated random variables with variance λk, and μ(t) is the mean function over all subjects and visits.
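The PC decomposition can be sketched on a grid as follows. This is an illustrative stand-in rather than the implementation used in this article: it ignores measurement error, works with grid vectors instead of functions, and extracts the leading eigenvectors of the sample covariance matrix by power iteration with deflation so that only the Python standard library is needed. The simulated curves and all function names are hypothetical. With grid spacing h, multiplying an eigenvalue by h and dividing an eigenvector by the square root of h gives approximations to λk and to the L2-normalized ψk(t).

```python
import math
import random


def mean_and_covariance(curves):
    """Pointwise mean, centered curves, and sample covariance matrix on the grid."""
    n, m = len(curves), len(curves[0])
    mean = [sum(c[j] for c in curves) / n for j in range(m)]
    centered = [[c[j] - mean[j] for j in range(m)] for c in curves]
    cov = [[sum(r[a] * r[b] for r in centered) / (n - 1) for b in range(m)]
           for a in range(m)]
    return mean, centered, cov


def top_eigenpairs(cov, k, rng, iters=300):
    """Leading k eigenpairs of a symmetric PSD matrix (power iteration, deflation)."""
    m = len(cov)
    work = [row[:] for row in cov]
    pairs = []
    for _ in range(k):
        v = [rng.gauss(0, 1) for _ in range(m)]
        for _ in range(iters):
            w = [sum(work[a][b] * v[b] for b in range(m)) for a in range(m)]
            norm = math.sqrt(sum(wi * wi for wi in w))
            v = [wi / norm for wi in w]
        lam = sum(v[a] * sum(work[a][b] * v[b] for b in range(m))
                  for a in range(m))
        pairs.append((lam, v))
        for a in range(m):          # deflate: remove the component just found
            for b in range(m):
                work[a][b] -= lam * v[a] * v[b]
    return pairs


rng = random.Random(0)
m = 25
grid = [j / (m - 1) for j in range(m)]
curves = []
for _ in range(100):                # hypothetical curves with two modes of variation
    c1, c2 = rng.gauss(0, 2.0), rng.gauss(0, 1.0)
    curves.append([3.0 + c1 * math.sin(2 * math.pi * t)
                   + c2 * math.cos(2 * math.pi * t) for t in grid])

mean, centered, cov = mean_and_covariance(curves)
pairs = top_eigenpairs(cov, k=2, rng=rng)

# PC loadings (scores) and the truncated expansion with K_x = 2 components.
scores = [[sum(r[j] * v[j] for j in range(m)) for _, v in pairs] for r in centered]
approx = [[mean[j] + sum(s * v[j] for s, (_, v) in zip(row, pairs))
           for j in range(m)] for row in scores]
max_err = max(abs(a - c) for ar, cr in zip(approx, curves) for a, c in zip(ar, cr))
print(f"eigenvalues: {pairs[0][0]:.2f}, {pairs[1][0]:.2f}; max error {max_err:.1e}")
```

Since the simulated curves vary in only two directions, two components reproduce them up to rounding error; with real data the truncation lag Kx controls the quality of the approximation.
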

Next, we use a large cubic B-spline basis to smoothly estimate the coefficient function γ(t) using a mixed effects model. Let φ(t) = {φ1(t), …, φKg(t)} be a cubic B-spline basis of dimension Kg. Then the integral in model (2.4) can be written as where is the row vector of subject i’s PC loadings, and M is a Kx × Kg matrix with (k, l)th entry . Smoothness of γ̂(t) is enforced by assuming a modified first order random walk prior on the vector g [13]. That is, we assume for 2 ≤ l ≤ Kg and let . These are standard assumptions in Bayesian P-splines modeling [23, 13]. Taken together, we jointly model the scalar outcome Yi and the functional exposure Xi(t) using the following model:

| (2.5) |

where β are treated as fixed parameters with diffuse priors, D is the covariance matrix induced by the first order random walk prior, and Λ = diag[λ1, …, λKx]. Inference for the functional regression model is based on the posterior density

| (2.6) |

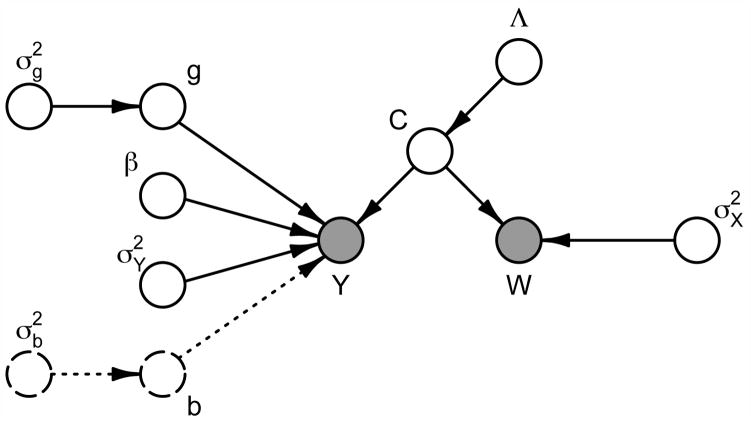

where C is the matrix of PC loadings constructed by row-stacking the ci, , and W is the matrix of observed predictor functions constructed by row-stacking the Wi(t). Because the functional predictors Xi(t) are observed with error, this model extends Bayesian inference for measurement error regression problems to the functional setting. A directed acyclic graph depicting model (2.5) is presented in Figure 2.

Fig 2.

Directed acyclic graph corresponding to the functional regression model (2.5). Shaded nodes correspond to observed data, and unshaded nodes to model parameters. Arrows indicate conditional dependence. The nodes for and b, shown as dashed lines, appear in the longitudinal functional regression model (2.8) but not in the cross-sectional model (2.5).
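The smoothness prior on the spline coefficients g can be illustrated by constructing the precision matrix it implies. The sketch below assumes, for illustration only, that the first coefficient has its own normal prior with variance `tau2_first` and that subsequent coefficients follow a first order random walk with variance `sigma2_g`; the variable names and numerical values are hypothetical and are not the specification used in model (2.5). The resulting precision matrix is tridiagonal, and the quadratic form it defines equals the sum of squared first differences, which is what penalizes rough coefficient functions.

```python
def random_walk_precision(K, sigma2_g, tau2_first):
    """Precision matrix of g when g_1 ~ N(0, tau2_first) and
    g_l | g_{l-1} ~ N(g_{l-1}, sigma2_g) for l = 2, ..., K."""
    Q = [[0.0] * K for _ in range(K)]
    Q[0][0] += 1.0 / tau2_first
    for l in range(1, K):
        # The term (g_l - g_{l-1})^2 / sigma2_g contributes to a 2 x 2 block.
        Q[l][l] += 1.0 / sigma2_g
        Q[l - 1][l - 1] += 1.0 / sigma2_g
        Q[l][l - 1] -= 1.0 / sigma2_g
        Q[l - 1][l] -= 1.0 / sigma2_g
    return Q


def quadratic_form(Q, g):
    """Compute g' Q g."""
    K = len(g)
    return sum(g[a] * Q[a][b] * g[b] for a in range(K) for b in range(K))


K, sigma2_g, tau2_first = 6, 0.5, 100.0   # hypothetical values
Q = random_walk_precision(K, sigma2_g, tau2_first)
g = [0.0, 0.4, 0.9, 1.1, 0.8, 0.2]

penalty = quadratic_form(Q, g)
direct = (g[0] ** 2 / tau2_first
          + sum((g[l] - g[l - 1]) ** 2 for l in range(1, K)) / sigma2_g)
print(penalty, direct)
```
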

In the longitudinal case, we observe data of the form [Yij, Xij(t), zij] for 1 ≤ i ≤ I and 1 ≤ j ≤ Ji. Thus we observe a distinct functional predictor and scalar outcome for each subject over several visits, and again note that in place of the true functional predictors Xij(t) we often observe a measured-with-error function Wij(t). The longitudinal functional regression model is given by [7]

| (2.7) |

this differs from model (2.4) in the use of subject-specific random effects Zi to account for correlation in the repeated outcomes at the subject level. Moreover, longitudinal data sets tend to be much larger than cross-sectional data sets because of the number of visits.

Given the advent of multiple observational studies collecting dense functional data at multiple visits, the importance of longitudinal functional regression cannot be overstated. Unfortunately, with the exception of the work in [7], no other approach can currently deal with the combination of subject-specific random effects and functional predictors necessary to capture the structure of the data. While a wide array of functional regression methods exist, we contend that the specific modeling choices described here made the extension not only possible, but seamless. Estimation of the parameters in the longitudinal setting extends naturally from the procedure outlined for the cross-sectional setting. Again, we express the functional predictors using a PC basis and use a penalized B-spline expansion for the coefficient function. The joint model for the outcome, Yij, and exposure, Xij(t), becomes

| (2.8) |

Again, inference is based on the posterior density

| (2.9) |

A directed acyclic graph of the longitudinal functional regression model appears in Figure 2.

3. Variational approximations for penalized functional regression

We now combine the ideas introduced above to develop a scalable iterative algorithm for approximate Bayesian inference in functional regression. We will focus on the longitudinal functional regression model (2.8); the cross-sectional case can be obtained as a special case by omitting the vector of subject-specific random effects b. We pause briefly to introduce the following useful notation: for a scalar random variable θ, let

$$\mu_{q(\theta)} \equiv \int \theta\, q(\theta)\, d\theta \quad \text{and} \quad \sigma^2_{q(\theta)} \equiv \int \left(\theta - \mu_{q(\theta)}\right)^2 q(\theta)\, d\theta$$

be the mean and variance with respect to the q distribution. For a vector parameter θ, we use the analogously defined μq(θ) and Σq(θ).

As noted, inference in the longitudinal functional regression model is based on the posterior density (2.9). Using variational Bayes, we approximate this posterior density using

| (3.1) |

and by solving for each factor q(·) in terms of the remaining factors. The additional factorization

follows as a consequence of (2.3) and the structure of the current model as shown in Figure 2 [3, Sec. 10.2.5]. We take advantage of this induced factorization in deriving optimal densities for the variance components in the penalized functional regression model.

To provide an example of how optimal densities are constructed, we derive the optimal densities q*(g) and ; derivations of these and for the other parameters are provided in Appendix A. Recall that and . According to (2.3), the optimal densities are given by

where rest includes both the observed data and all parameters not currently under consideration.

Using the full conditional distribution , the optimal density q*(g) is

where

| (3.2) |

Thus the optimal density q*(g) is N(μq(g), Σq(g)). Similarly, the optimal density is

Thus is where

| (3.3) |

Note that, when , the term appearing in (3.2) is equal to .

Thus, the optimal densities q*(g) and belong to parametric families with the parameters explicitly determined by the distributions of the remaining model parameters and the observed data. Similar derivations for the parameters of the remaining optimal densities are provided in Appendix A. Taken together, these solutions lead to Algorithm 1 for approximate Bayesian inference in the functional linear regression setting.

Algorithm 1.

Iterative scheme for obtaining the parameters in the optimal densities in the longitudinal functional regression model (2.8).

| Initialize: , , μq(C) = 0, μq(g) = 0, μq(β) = 0, Σq(g) = I, Λq = I. | |

| Cycle: | |

|

| |

| until the increase in p(Y, W; q) is negligible. |

Further, as shown in Appendix B, the q-specific lower bound on the marginal log-likelihood has the form

where const. is an additive constant that remains unchanged in the iterations of Algorithm 1. All parameters denoted A and B and indexed by a subscript are hyperparameters of the inverse gamma prior distributions of the variance components. The quantity log p(Y, W; q) is typically monitored for convergence in place of p(Y, W; q). Note that, because several substitutions are made to simplify the expression, this form for log p(Y, W; q) is only valid at the end of each iteration of Algorithm 1, and only if the parameters are updated in the order given.

Finally, posterior credible intervals are readily obtained for all model parameters. However, variational approximations in effect fit a parametric distribution to a mode of the posterior density, which may have consequences when the posterior is multi-modal or more diffuse than the approximating parametric distribution; in such cases one could expect that credible intervals from variational Bayes and MCMC sampling may not agree. This was not a problem in our simulations, where the agreement between the approximate and MCMC-sampled posterior distribution is high, although in our application the variational credible intervals are slightly narrower than those from MCMC sampling.

4. Simulations

In this section we undertake simulation exercises with two goals. First, we evaluate our approach’s overall ability to accurately estimate all coefficients in a functional regression model. Second, we compare the individual approximate posterior distributions q*(θl) ≈ p(θl | rest) to those given by Markov chain Monte Carlo (MCMC) sampling in order to examine the quality of the variational approximation in the functional regression setting. We conduct separate simulations for the cross-sectional and longitudinal situations. The MCMC sampling was executed in WinBUGS and the variational Bayes approach was implemented in R.

4.1. Cross-sectional functional regression

We generate samples from the model

| (4.1) |

Here we assume I = {100, 500} subjects and generate zi ~ Unif [−5, 5]. We take , β2 = 3, and γ(t) = cos(2πt).

To generate our simulated functional predictors , we use the functional predictors from our scientific application in the following way. First, we compute a functional principal components decomposition of the with eigenfunctions ψ1(t), ψ2(t), … and corresponding eigenvalues λ1, λ2, …. Recall that the application predictors can be approximated using where μ(t) is a population mean function, Kx is the truncation lag and the cik are uncorrelated random variables with variance λk. Using this, we construct simulated regressors

This parametric construction of the simulated functional predictors is related to the application predictors through the mean function μ(t), the eigenfunctions ψk(t), and the variance components λk. As in our application, the simulated predictors are observed on a grid of length 93.

We generate 100 such datasets for each of I = 100 and I = 500 and fit model (4.1) using both MCMC simulation and the variational approximation approach. For the MCMC simulation, we use chains of length 2500 with the first 1000 iterations discarded as burn-in. Representative examples of the MCMC model fits were inspected using trace and autocorrelation plots to ensure that the posterior samples were reasonable and that the comparison with variational Bayes was fair. To evaluate the ability of the proposed approach to estimate the functional coefficient γ(t) we use the integrated mean squared error (MSE) $\int_0^1 \{\hat{\gamma}(t) - \gamma(t)\}^2\, dt$. A comparison of MSEs for the variational approach with the more computationally intensive MCMC sampling is given in Table 1. To provide context for this table, in the top-left panel of Figure 3 we plot the estimated coefficient function resulting in the median MSE for I = 100.

Table 1.

Average integrated MSE for γ(t) and average MSE for the non-functional covariates β1, β2, estimated using the variational approximation (VB) and MCMC sampling, taken over 100 simulated datasets. For I = 500, large outliers for the MCMC MSE were removed in the calculation of the average

| | | γ(t) | β1 | β2 |
|---|---|---|---|---|
| I = 100 | VB | .050 | .071 | .051 |
| | MCMC | .054 | .071 | .051 |
| I = 500 | VB | .046 | .008 | .001 |
| | MCMC | .120 | .008 | .001 |

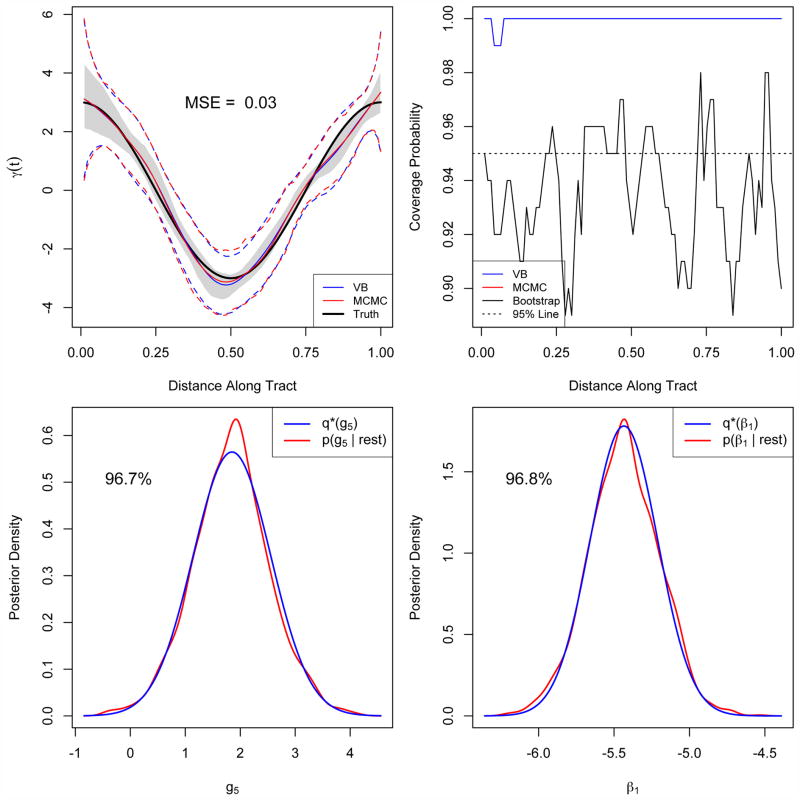

Fig 3.

The top left panel shows the estimated coefficient function corresponding to the median MSE = 0.049, as well as variational and MCMC 95% credible intervals (dashed lines) and the 95% bootstrap interval (shaded region). The top-right panel displays the coverage probabilities of the credible intervals over the domain of the predictor (note there is perfect overlap of the VB and MCMC coverage probabilities). The bottom panels show posterior densities estimated by variational approximations and by MCMC sampling from the same simulated dataset (shown in dashed and solid lines, respectively), and provide the accuracy of the approximation expressed as a percent.

Interestingly, when I = 500 the MSEs for MCMC sampling contain several large outliers, which raises the average MSE for the coefficient function in Table 1. Upon inspection, it was found that these large values corresponded to model fits in which the chains for g were bimodal. A large primary mode surrounded the true parameter value but a smaller, more diffuse mode corresponded to a model overfit. We refit these models using as initial parameter values the estimates provided by the variational approach, which caused the bimodal behavior to disappear and brought the MSEs (and average MSE) down to levels similar to the remaining model fits.

We also quantify the quality of the variational approximation to the MCMC-sampled posterior by computing the accuracy for each parameter in the model using $1 - \frac{1}{2} \int |q^*(\theta_l) - p(\theta_l \mid y)|\, d\theta_l$; scores near 1 indicate a high level of agreement between the two densities. Due to the large number of parameters in the model, we present only a subset of the average accuracies in Table 2. As with the MSE, context for this table is given in Figure 3. The accuracy of g is affected by the presence of outliers, attributable to the same bimodal MCMC samples that caused the very large MSEs appearing in Table 1.
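An accuracy score of this kind can be computed on a grid as in the following sketch. It assumes that the score is the L1-based measure, one minus half the integrated absolute difference between the two densities, and it uses two normal densities as hypothetical stand-ins for a variational density and an MCMC-based density; in practice the latter would be a kernel density estimate formed from the posterior samples. For two normal densities with a common standard deviation the score has a closed form, which provides a check on the numerical integration.

```python
import math


def normal_pdf(x, mean, sd):
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))


def accuracy(q_vals, p_vals, grid):
    """1 - 0.5 * integral of |q - p|, evaluated by the trapezoidal rule."""
    diffs = [abs(q - p) for q, p in zip(q_vals, p_vals)]
    l1 = sum(0.5 * (diffs[j] + diffs[j + 1]) * (grid[j + 1] - grid[j])
             for j in range(len(grid) - 1))
    return 1.0 - 0.5 * l1


grid = [-8.0 + 16.0 * j / 4000 for j in range(4001)]
q_vals = [normal_pdf(x, 0.0, 1.0) for x in grid]  # stand-in variational density
p_vals = [normal_pdf(x, 0.1, 1.0) for x in grid]  # stand-in MCMC-based density

score = accuracy(q_vals, p_vals, grid)
# Closed form for two unit-variance normals whose means differ by delta = 0.1.
closed_form = math.erfc(0.1 / (2 * math.sqrt(2)))
print(f"accuracy: {100 * score:.1f}%")
```
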

Table 2.

The accuracy of the variational approximation to the MCMC-sampled posterior, expressed as a percentage, for a subset of parameters in the cross-sectional functional regression model (2.5)

| Accuracy | g5 | g20 | c1,1 | c1,10 | λ1,1 | λ1,10 | |
|---|---|---|---|---|---|---|---|
| I = 100 | 96.3 | 95.1 | 98.3 | 98.0 | 96.9 | 97.2 | 95.0 |
| I = 500 | 86.7 | 82.6 | 97.6 | 97.8 | 97.6 | 88.3 | 96.3 |

Due to the substantial decrease in computation time using variational Bayes over MCMC methods, we are able to construct 95% bootstrap confidence intervals by sampling subjects with replacement and refitting model (4.1) using the variational approach. While credible intervals provided by MCMC or by a single variational fit are overly conservative, the bootstrap intervals are on average .46 times narrower and, averaged over the domain, have coverage probability 93.4% for I = 100 and 93.6% for I = 500. The far right panel of Figure 3 displays the coverage probabilities of the various credible and confidence intervals for I = 500.
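The nonparametric bootstrap of subjects can be sketched as follows. Whole subjects are resampled with replacement, so that all visits of a selected subject are kept together, and the model is refit to each resampled dataset. In this illustration the function `fit` is a deliberately simple placeholder (the mean outcome) standing in for a variational Bayes fit, and the simulated data, the names, and the percentile interval construction are assumptions made for the example.

```python
import random


def fit(data):
    """Placeholder estimator: mean outcome over all visits of all subjects.
    In the setting of this article this would be a variational Bayes fit."""
    values = [y for visits in data.values() for y in visits]
    return sum(values) / len(values)


def cluster_bootstrap(data, n_boot, rng, level=0.95):
    """Percentile bootstrap interval obtained by resampling subjects."""
    subjects = list(data)
    estimates = []
    for _ in range(n_boot):
        # Draw subjects with replacement; a subject drawn twice enters the
        # resampled dataset as two distinct clusters.
        draw = [rng.choice(subjects) for _ in subjects]
        resampled = {k: data[s] for k, s in enumerate(draw)}
        estimates.append(fit(resampled))
    estimates.sort()
    lo = estimates[int((1 - level) / 2 * n_boot)]
    hi = estimates[int((1 + level) / 2 * n_boot) - 1]
    return lo, hi


rng = random.Random(42)
data = {}
for i in range(100):                 # 100 subjects with 3 visits each
    b_i = rng.gauss(0, 1)            # subject-specific random intercept
    data[i] = [5.0 + b_i + rng.gauss(0, 0.5) for _ in range(3)]

point = fit(data)
lower, upper = cluster_bootstrap(data, n_boot=400, rng=rng)
print(f"estimate {point:.3f}, 95% bootstrap interval ({lower:.3f}, {upper:.3f})")
```

Resampling at the subject level rather than the visit level preserves the within-subject correlation, which is what makes the interval appropriate for clustered data. The approach is practical only when each refit is fast.
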

As demonstrated in Table 2 and Figure 3, the variational approximation performs well in this functional regression setting, both in terms of low MSEs and of agreement with the MCMC-sampled posterior density. This stems from the low posterior dependence between the parameters, which is assumed in the use of the density transform approach. Additionally, the use of the bootstrap allows the construction of confidence intervals that are not overly conservative.

Importantly, even in this simulation the computational burden is greatly reduced through the use of variational approximations. For I = 100, the MCMC sampling took on average 315 seconds, while the approximation was computed in on average 0.04 seconds (Dual Core 3.06GHz Processor; 4 GB RAM; OS X 10.6.4). For I = 500, the respective times were 1614 and 0.2 seconds. Constructing the bootstrap confidence intervals, based on 400 bootstrap samples, took on average an additional 20 and 76 seconds for I = 100 and I = 500, respectively.

4.2. Longitudinal functional regression

Next, we generate samples from the model

| (4.2) |

We take I = 100 subjects with J = 3 visits per subject; random effects b are with . Again, we generate zi ~ Unif [−5, 5], take , β2 = 3, and select γ(t) = cos(2πt). The functional predictors are constructed as above; we take cij ~ N (0, diag(λ1, …, λKx)) so that the PC loadings are not correlated within subjects.

We fit model (2.8) for 100 simulated datasets. As in the cross-sectional case, we use chain lengths of 2500, with 1000 as burn-in, for the MCMC sampling. In Table 3 we display the average MSE of the estimated functional and scalar parameters and the subject-specific random effects. Again, the variational approximation performs as well as the MCMC sampling with a substantial difference in computation time: the MCMC sampling took on average 973 seconds, while the approximation was calculated in on average .2 seconds.

Table 3.

Average integrated MSE for γ(t) and average MSE for the non-functional covariates β1, β2, estimated using the variational approximation (VB) and MCMC sampling, taken over 100 simulated datasets

| | | γ(t) | β1 | β2 |
|---|---|---|---|---|
| I = 100, J = 3 | VB | .026 | .0003 | .0002 |
| | MCMC | .030 | .0002 | .0002 |

5. Application

In our scientific application, we analyze the association between measures of intracranial white matter and cognitive decline in multiple sclerosis patients. White matter is made up of myelinated axons, the long fibers used to transmit electrical signals in the brain, and is organized into bundles, or tracts. Major examples of white matter tracts are the corpus callosum, the corticospinal tracts, and the optic radiations. Here we focus on the corpus callosum, a collection of white-matter fibers which connects the two hemispheres of the brain.

Myelin, the fatty insulation surrounding white matter fibers, allows electrical signals to be propagated at high speeds along white matter tracts. Multiple sclerosis is a demyelinating autoimmune disease that causes lesions in the white matter. These lesions disrupt electrical signals and, over time, result in severe disability in affected patients. To measure cognitive disability, we use the Paced Auditory Serial Addition Test (PASAT), which assesses auditory processing speed and calculation ability. In this test, a proctor reads aloud a sequence of 60 numbers at three-second intervals, while the subject provides the sum of the previous two numbers spoken. This test has scores between 0 and 60 indicating the number of correct sums provided by the subject; the score 60 indicates the highest level of cognitive ability [8].

To quantify white matter, we use diffusion tensor imaging, a magnetic resonance imaging technique that measures the diffusivity of water in the brain. Because white matter is organized in bundles, water tends to diffuse anisotropically along the tracts, which makes their reconstruction from MRI possible. By measuring diffusivity along several gradients, diffusion tensor imaging is able to produce detailed images of intracranial white matter [1, 2, 14, 17]. Moreover, continuous summaries of individual white matter tracts, parameterized by distance along the tract and called tract profiles, can be constructed from diffusion tensor images. Here we study the mean diffusivity tract profile of the corpus callosum; this gives a measure of the overall magnitude of water diffusion along the tract.

Our study consists of 100 multiple sclerosis patients with between two and eight visits each; a total of 334 visits were observed. Study participants had ages between 21 and 71 years, and 63% were women. We fit model (2.8), using age and gender as non-functional covariates and the mean diffusivity tract profile of the corpus callosum as a functional predictor. We include subject-specific random intercepts to account for the repeated observations at the subject level. The model was fit using both the variational approximation and MCMC sampling; the results are shown in Figure 4.

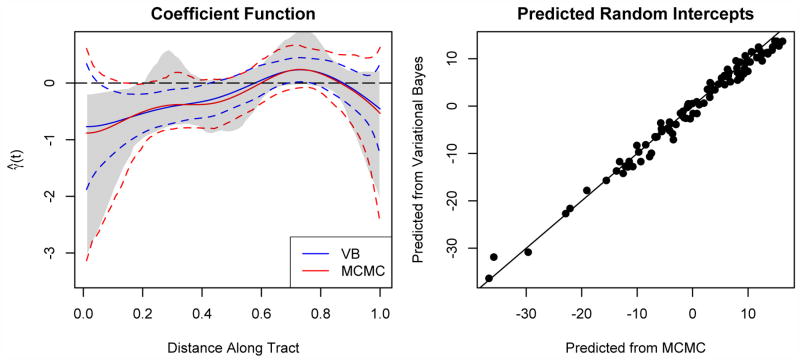

Fig 4.

Results of fitting model (2.8) to the diffusion tensor imaging dataset. The left panel shows the estimated coefficient function; credible intervals for both methods are shown in dashed lines, and the nonparametrically bootstrapped interval shown in grey; the right panel shows the random intercepts predicted by both variational Bayes and MCMC sampling.

Previous studies have linked damage in the corpus callosum to cognitive decline as measured by PASAT and other tests [15, 20]. However, these studies lacked the spatial information present in the functional treatment here, which proves to be important. From the estimated coefficient function and bootstrapped confidence intervals, we see that the region from roughly 0 to .2 is negatively associated with the PASAT outcome – that is, subjects with above-average mean diffusivity in these regions tend to have lower PASAT scores. A second region, from .65 to .8, is positively associated with the outcome. We base inference on the bootstrapped intervals due to the overly conservative coverage of the MCMC and variational Bayes credible intervals; however, there is broad agreement between all intervals regarding the location of regions of interest. Note the interpretation of the coefficient function is marginal, rather than conditional on a subject’s random intercept. The random intercepts are an important component of the model: a model including only random intercepts explains roughly 80% of the outcome variability, while adding functional and nonfunctional covariates raises this to 89%. Finally, age and gender were not found to be statistically significant, but were retained as scientifically important covariates. Their inclusion did not meaningfully affect the shape or significance of the functional predictor.

There is broad agreement between the variational Bayes and MCMC model fits: the point estimates of the coefficient and the random intercepts are very similar, and the credible intervals indicate the same regions of significance. On the other hand, the credible interval using MCMC is wider than that using variational Bayes. As noted above, the variational method can result in narrower credible intervals if the approximating density is less diffuse than the MCMC-sampled posterior, which appears to be the case here. In this application, we posit that the lesser importance of the functional predictors in comparison to the random intercepts leads to increased posterior variability in the estimated functional coefficient. Indeed, when we fit a model without the subject-specific random intercepts, the intervals from the MCMC and variational Bayes fits became indistinguishable.

Also shown in Figure 4 as a grey band is the 95% bootstrap confidence interval, constructed by nonparametrically resampling subjects and fitting the longitudinal functional regression model using variational Bayes. Inference for the coefficient function is largely unchanged based on the bootstrap interval except in the region from .2 to .4, which does not appear to be significantly associated with the outcome. Although the credible intervals using variational Bayes are likely too narrow, the computational gain and accurate point estimates provided by this method allow for the construction of bootstrap confidence intervals, which performed much better in our simulations.
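The subject-level resampling described above can be sketched in a few lines. This is a minimal illustration rather than the code used for the analysis: the function names `cluster_bootstrap_ci` and `ols_fit` are hypothetical, ordinary least squares stands in for the variational Bayes fit, and the data are simulated. The key point is that whole subjects are drawn with replacement, so all visits of a subject stay together.

```python
import numpy as np

def cluster_bootstrap_ci(subject_ids, fit_fn, data, n_boot=200, level=0.95, seed=0):
    """Percentile bootstrap interval obtained by resampling whole subjects.

    fit_fn is any function mapping the resampled arrays to a vector of
    estimates (hypothetical stand-in for the variational Bayes fit).
    """
    rng = np.random.default_rng(seed)
    subjects = np.unique(subject_ids)
    # Row indices of every visit belonging to each subject.
    rows_by_subject = {s: np.flatnonzero(subject_ids == s) for s in subjects}
    estimates = []
    for _ in range(n_boot):
        # Draw subjects (clusters) with replacement, keeping all their visits.
        drawn = rng.choice(subjects, size=subjects.size, replace=True)
        rows = np.concatenate([rows_by_subject[s] for s in drawn])
        estimates.append(fit_fn(*(a[rows] for a in data)))
    estimates = np.asarray(estimates)
    alpha = 1.0 - level
    lower = np.quantile(estimates, alpha / 2, axis=0)
    upper = np.quantile(estimates, 1 - alpha / 2, axis=0)
    return lower, upper

def ols_fit(X, y):
    # Stand-in estimator (assumption): least squares instead of the VB fit.
    return np.linalg.lstsq(X, y, rcond=None)[0]

# Simulated longitudinal data: 40 subjects, 3 visits each, random intercepts.
rng = np.random.default_rng(1)
n_subj, n_visits = 40, 3
ids = np.repeat(np.arange(n_subj), n_visits)
X = np.column_stack([np.ones(ids.size), rng.normal(size=ids.size)])
b = rng.normal(scale=1.0, size=n_subj)[ids]
y = X @ np.array([1.0, 2.0]) + b + rng.normal(scale=0.5, size=ids.size)

lower, upper = cluster_bootstrap_ci(ids, ols_fit, (X, y))
```

When the fitted model contains subject-specific random effects, a subject drawn more than once would typically be relabelled as a distinct cluster in each bootstrap sample; the least squares stand-in here does not need that step.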

6. Discussion

The variational Bayes approach to functional regression was motivated by a pressing need for computationally feasible Bayesian inference in a large-data setting. We have developed iterative algorithms for approximate inference in both the cross-sectional and longitudinal regression settings, and analyzed a longitudinal neuroimaging study. The methods developed: 1) flexibly estimate all parameters in the cross-sectional and longitudinal functional regression models; 2) accurately approximate the posterior distributions of all model parameters; 3) retain the advantages of Bayesian inference, including the ability to jointly model the functional predictors and scalar outcomes and easily constructed credible bands; 4) require orders of magnitude less computational effort than MCMC techniques; and 5) allow the construction of nonparametric bootstrap confidence intervals, which seem to have good coverage probabilities.

A few limitations of the variational Bayes method are apparent. While our simulations indicate that variational techniques can be used with confidence in the functional regression setting, the approximation cannot be made more accurate by increasing computation time. Additionally, the iterative algorithms are based on involved algebraic derivations; those needed for functional regression have been carried out here, but additional work may be needed to adapt these algorithms to specific scientific settings. Lastly, the performance of credible intervals approximated using variational Bayes may not be satisfactory if the posterior distribution is multimodal or more diffuse than the approximating distribution, although the use of the nonparametric bootstrap can alleviate this issue.

Future work may proceed in several directions. The adaptation of the approach to non-Gaussian outcomes will expand the class of applications in which variational Bayes may be used for functional regression. Very large gains in computation time may be found in functional magnetic resonance imaging or other studies where the predictors are sampled at thousands or tens of thousands of points. More generally, variational Bayes has potential applications in several functional data analysis topics, including function-on-function regression and the decomposition of populations of functions.

Appendix A: Derivations

In this appendix we derive the optimal densities q* for approximate Bayesian inference in the longitudinal functional regression model. For the cross-sectional case, one may omit the random effects b. We recall that, given a partition {θ1, …, θL} of the parameter space θ, the explicit solution for q(θl), 1 ≤ l ≤ L, has the form

| (A.1) |

where rest ≡ {y, θ1, …, θl−1, θl+1, …, θL}
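For reference, the standard mean-field (product density) result that (A.1) refers to, as presented in [19], can be written as below. The notation is an assumption chosen to match the surrounding text: the expectation is taken with respect to the q-densities of all parameter blocks other than θl.

```latex
q^{*}(\theta_l) \;\propto\; \exp\Big\{ E_{-\theta_l}\big[ \log p(\theta_l \mid \mathrm{rest}) \big] \Big\},
\qquad 1 \le l \le L,
\quad\text{where } E_{-\theta_l} \text{ denotes expectation with respect to } \prod_{k \ne l} q(\theta_k).
```

Each subsection below applies this form to one block: when the full conditional is Normal or Inverse-Gamma, taking the expectation of its log density and exponentiating yields an optimal density in the same family.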

A.1. Optimal densities for g and

Recall that and . According to (A.1), the optimal densities are given by

The full conditional distribution p(g|rest) appearing above is given by

Therefore the optimal density q*(g) is

where

| (A.2) |

Thus the optimal density q*(g) is N(μq(g), Σq(g)).

Further, the full conditional is given by

so that the optimal density is

Thus is where

| (A.3) |

Note that, when , the term appearing in (A.2) is equal to .

A.2. Optimal densities for b and

Recall that and .

The full conditional distribution p(b|rest) is given by

Therefore, by (A.1), the optimal density q*(b) is

After taking the expectation above, the optimal density q*(b) is N(μq(b), Σq(b)) where

| (A.4) |

Further, the full conditional is given by

so that the optimal density is

Thus is where

| (A.5) |

Note that, when , the term appearing in (A.4) is equal to .

A.3. Optimal densities for C and λj

Recall that cij ~ N (0, Λ), where Λ = diag(λ1, …, λKx) and λk ~ IG (Aλ, Bλ) for 1 ≤ k ≤ Kx. In the following, we continue to use cij as the PC loadings for subject i at visit j and C as the matrix constructed by row-stacking the cij. We additionally use μq(c),ij as the expected value of cij with respect to the q(C) distribution and μq(C) as the matrix constructed by row-stacking the μq(c),ij. Finally, let

The full conditional distribution p(C|rest) is given by

Therefore, by (A.1), the optimal density q*(C) is

where

| (A.6) |

Thus the optimal density q*(C) is a product of Normally distributed random vectors sharing a common covariance matrix and with means the rows of μq(C).

In the derivation of the optimal density q*(λk), 1 ≤ k ≤ Kx, we let Ck denote the kth column of C and denote the kth column of μq(C). Further, we let (Σq(C))kk denote the (k, k)th element of Σq(C). The full conditional p(λk|rest) is given by

so that the optimal density q*(λk) is

Thus q*(λk) is IG(Aλ + (nJ)/2, Bq(λk)) where

| (A.7) |

Note that, when q(λk) = q*(λk), the term (k, k)th entry of appearing in (A.6) is equal to .

A.4. Optimal density for β

Recall that .

The full conditional distribution p(β|rest) is given by

Therefore, by (A.1), the optimal density q*(β) is

After taking the expectation above, the optimal density q*(β) is N(μq(β), Σq(β)) where

| (A.8) |

A.5. Optimal density for

Recall that the functional predictors are observed over a grid of length N. The full conditional is given by

so that the optimal density is

Thus is where

| (A.9) |

Note that, when , the term appearing in (A.6) is equal to .

A.6. Optimal density for

Finally, the full conditional is given by

so that the optimal density is

Next, we see that

| (A.10) |

Thus is where

| (A.11) |

Note that, when , the term appearing regularly above is equal to .

Appendix B: Expression for p(Y, W; q)

In this appendix we derive an expression for the lower bound of the log likelihood. This quantity is used to monitor convergence in Algorithm 1, and its derivation takes advantage of the order of updates in the algorithm to simplify the expression.
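To illustrate how a lower bound is used to monitor convergence, and how the order of updates simplifies it, here is a minimal sketch for a toy model that is not the functional regression model of this paper: y_i ~ N(μ, σ²) with priors μ ~ N(μ0, σ0²) and σ² ~ IG(A, B), following the example in [19]. The function name `vb_normal` and all default hyperparameter values are assumptions. The simplified bound is valid only when evaluated immediately after the update of B_q, because a term proportional to E_q[1/σ²] cancels at that point.

```python
import numpy as np
from math import lgamma, log, pi

def vb_normal(y, mu0=0.0, sigsq0=1e8, A=0.01, B=0.01, tol=1e-10, max_iter=100):
    """Mean-field VB for a Normal sample with q(mu, sigma^2) = q(mu) q(sigma^2)."""
    y = np.asarray(y, dtype=float)
    n = y.size
    e_inv_sigsq = 1.0  # starting value for E_q[1 / sigma^2]
    bounds = []
    for _ in range(max_iter):
        # Update q*(mu) = N(mu_q, sigsq_q).
        sigsq_q = 1.0 / (n * e_inv_sigsq + 1.0 / sigsq0)
        mu_q = sigsq_q * (e_inv_sigsq * y.sum() + mu0 / sigsq0)
        # Update q*(sigma^2) = IG(A + n/2, B_q).
        B_q = B + 0.5 * (np.sum((y - mu_q) ** 2) + n * sigsq_q)
        e_inv_sigsq = (A + n / 2) / B_q
        # Lower bound on log p(y), simplified using the B_q update above.
        bound = (0.5 - 0.5 * n * log(2 * pi)
                 + 0.5 * log(sigsq_q / sigsq0)
                 - ((mu_q - mu0) ** 2 + sigsq_q) / (2 * sigsq0)
                 + A * log(B) - (A + n / 2) * log(B_q)
                 + lgamma(A + n / 2) - lgamma(A))
        bounds.append(bound)
        # Stop when the relative change in the bound is negligible.
        if len(bounds) > 1 and abs(bounds[-1] - bounds[-2]) < tol * abs(bounds[-2]):
            break
    return mu_q, sigsq_q, B_q, bounds

# Simulated data for illustration only.
rng = np.random.default_rng(0)
y = rng.normal(loc=3.0, scale=2.0, size=200)
mu_q, sigsq_q, B_q, bounds = vb_normal(y)
```

Because each pass performs one coordinate-ascent update per density, the recorded bound should never decrease between iterations, which makes it a convenient stopping criterion and a useful check on the derivations.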

We have that . Now,

| (B.1) |

The first term appearing in (B.1) is

The second term is

Next, we have

The fourth term is given by

Further, we have

The sixth term in (B.1) is

Next,

Additionally, the eighth term in (B.1) is

Next, we have

The tenth term is

Finally, for 1 ≤ k ≤ Kx

We combine the above factors noting that many terms cancel. For example, the terms and appear in and respectively. Moreover, we can make substitutions for terms appearing in the updates given in Algorithm 1 and again simplify the expression. An example is to combine and and substitute for ; this term cancels with another appearing in . Thus we have

Using const. to represent an additive constant that is not affected by updates in Algorithm 1, we have

Contributor Information

Jeff Goldsmith, Email: jgoldsmi@jhsph.edu, Johns Hopkins Bloomberg School of Public Health Department of Biostatistics 615 North Wolfe Street Baltimore, Maryland 21205, USA.

Matt P. Wand, Email: Matt.Wand@uts.edu.au, School of Mathematical Sciences University of Technology, Sydney P.O. Box 123 Broadway, 2007, Australia.

Ciprian Crainiceanu, Email: ccrainic@jhsph.edu, Johns Hopkins Bloomberg School of Public Health Department of Biostatistics 615 North Wolfe Street Baltimore, Maryland 21205, USA.

References

- 1. Basser P, Mattiello J, LeBihan D. MR Diffusion Tensor Spectroscopy and Imaging. Biophysical Journal. 1994;66:259–267. doi: 10.1016/S0006-3495(94)80775-1.

- 2. Basser P, Pajevic S, Pierpaoli C, Duda J. In vivo fiber tractography using DT-MRI data. Magnetic Resonance in Medicine. 2000;44:625–632. doi: 10.1002/1522-2594(200010)44:4<625::aid-mrm17>3.0.co;2-o.

- 3. Bishop CM. Pattern Recognition and Machine Learning. New York: Springer; 2006.

- 4. Cardot H, Ferraty F, Sarda P. Functional Linear Model. Statistics and Probability Letters. 1999;45:11–22.

- 5. Crainiceanu CM, Goldsmith J. Bayesian Functional Data Analysis using WinBUGS. Journal of Statistical Software. 2010;32:1–33.

- 6. Goldsmith J, Bobb J, Crainiceanu CM, Caffo B, Reich D. Penalized Functional Regression. Journal of Computational and Graphical Statistics. doi: 10.1198/jcgs.2010.10007. To Appear.

- 7. Goldsmith J, Crainiceanu CM, Caffo B, Reich D. A Case Study of Longitudinal Association Between Disability and Neuronal Tract Measurements. Under Review 2011.

- 8. Gronwall DMA. Paced auditory serial-addition task: A measure of recovery from concussion. Perceptual and Motor Skills. 1977;44:367–373. doi: 10.2466/pms.1977.44.2.367.

- 9. James GM, Wang J, Zhu J. Functional Linear Regression That’s Interpretable. Annals of Statistics. 2009;37:2083–2108.

- 10. Jordan MI. Graphical models. Statistical Science. 2004;19:140–155.

- 11. Jordan MI, Ghahramani Z, Jaakkola TS, Saul LK. An Introduction to Variational Methods for Graphical Models. Machine Learning. 1999;37:183–233.

- 12. Kullback S, Leibler D. On Information and Sufficiency. The Annals of Mathematical Statistics. 1951;22:79–86.

- 13. Lang S, Brezger A. Bayesian P-splines. Journal of Computational and Graphical Statistics. 2004;13:183–212.

- 14. LeBihan D, Mangin J, Poupon C, Clark C. Diffusion Tensor Imaging: Concepts and Applications. Journal of Magnetic Resonance Imaging. 2001;13:534–546. doi: 10.1002/jmri.1076.

- 15. Lin X, Tench CR, Morgan PS, Constantinescu CS. Use of combined conventional and quantitative MRI to quantify pathology related to cognitive impairment in multiple sclerosis. Journal of Neurology, Neurosurgery, and Psychiatry. 2008;237:437–441. doi: 10.1136/jnnp.2006.112177.

- 16. McGrory CA, Titterington DM, Reeves R, Pettitt AN. Variational Bayes for Estimating the Parameters of a Hidden Potts Model. Statistics and Computing. 2009;19:329–340.

- 17. Mori S, Barker P. Diffusion magnetic resonance imaging: its principle and applications. The Anatomical Record. 1999;257:102–109. doi: 10.1002/(SICI)1097-0185(19990615)257:3<102::AID-AR7>3.0.CO;2-6.

- 18. Müller H-G, Stadtmüller U. Generalized functional linear models. Annals of Statistics. 2005;33:774–805.

- 19. Ormerod J, Wand MP. Explaining Variational Approximations. The American Statistician. 2010;64:140–153.

- 20. Ozturk A, Smith S, Gordon-Lipkin E, Harrison D, Shiee N, Pham D, Caffo B, Calabresi P, Reich D. MRI of the corpus callosum in multiple sclerosis: association with disability. Multiple Sclerosis. 2010;16:166–177. doi: 10.1177/1352458509353649.

- 21. Ramsay JO, Silverman BW. Functional Data Analysis. New York: Springer; 2005.

- 22. Reiss P, Ogden R. Functional Principal Component Regression and Functional Partial Least Squares. Journal of the American Statistical Association. 2007;102:984–996.

- 23. Ruppert D, Wand MP, Carroll RJ. Semiparametric Regression. Cambridge: Cambridge University Press; 2003.

- 24. Teschendorff AE, Wang Y, Barbosa-Morais NL, Brenton JD, Caldas C. A Variational Bayesian Mixture Modeling Framework for Cluster Analysis of Gene-Expression Data. Bioinformatics. 2005;21:3025–3033. doi: 10.1093/bioinformatics/bti466.

- 25. Titterington DM. Bayesian Methods for Neural Networks and Related Models. Statistical Science. 2004;19:128–139.