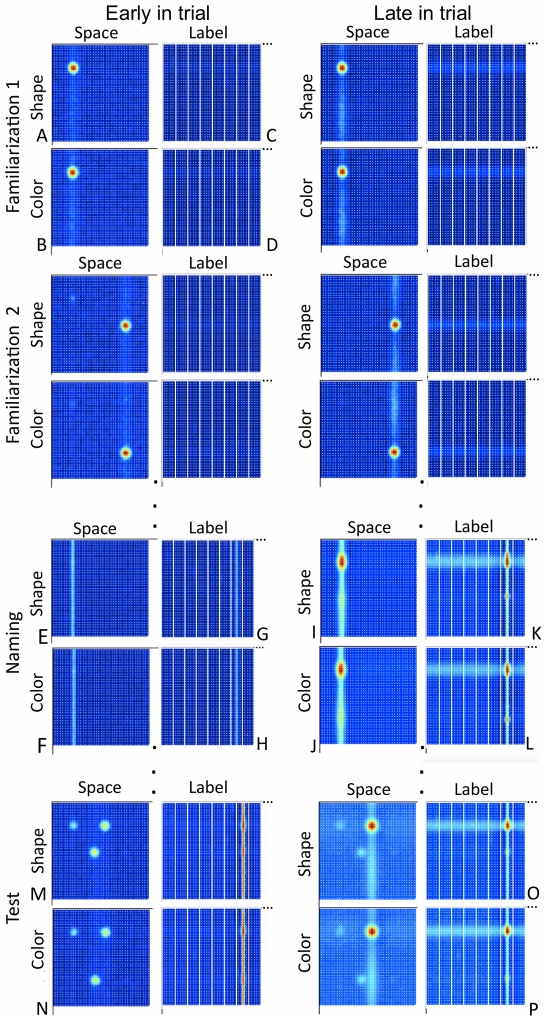

Figure 3. Dynamic Neural Field (DNF) model that captured Experiments 1–4 performance and predicted Experiment 5 behavior.

Panels A and B show a variant of Johnson et al.'s [10] model of visual feature binding; panels C and D show a variant of Faubel and Schöner's [17] model of fast object recognition. Our integration brings these prior models together to encode and bind visual features in real time as “peaks” of neural activation built in the shape-space (red hot spots in A) and color-space fields (red hot spots in B) via local excitation and surround inhibition (see [38]). Binding is achieved through the shared spatial coupling between these fields. Labels (words) are fed into the label-feature fields shown in C and D. These fields can bind labels to the visual features encoded by the visuo-spatial system via in-the-moment coupling across the shared feature dimensions (shape to shape; color to color). A Hebbian process lets the model learn, from trial to trial, which features appeared at which locations, and also learn label-feature associations quickly enough to influence performance on subsequent test trials. The figure also shows snapshots of a model simulation at key points in time during the events of our experimental task.
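The two mechanisms named in the caption, peak formation through local excitation and surround inhibition and Hebbian association, can be illustrated with a minimal one-dimensional sketch. It assumes the standard Amari-style field equation, τ du/dt = −u + h + s(x) + Σ w(x − x′) f(u(x′)), with a Gaussian excitatory kernel and *global* (uniform) inhibition standing in for surround inhibition, plus a plain outer-product Hebbian rule. All parameter values, the two-node "label" layer, and the helper names (`make_kernel`, `step`, `hebbian_update`) are hypothetical and are not taken from the authors' model.

```python
import math

# Hypothetical parameters, chosen only so that one stable, localized peak forms;
# they are NOT the values used in the paper's model.
N = 61         # sites along one feature dimension (e.g., a hue axis)
TAU = 10.0     # time constant of the field dynamics
H = -5.0       # resting level: below the output threshold at u = 0
BETA = 4.0     # steepness of the sigmoid output function
DT = 1.0       # Euler integration step


def sigmoid(u, beta=BETA):
    """Numerically stable logistic output function f(u)."""
    x = beta * u
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gaussian(d, amp, width):
    return amp * math.exp(-d * d / (2.0 * width * width))


def make_kernel(c_exc=6.0, w_exc=3.0, c_inh=2.0):
    """Interaction kernel w(d): local Gaussian excitation minus global inhibition.

    Entry k of the returned list holds w(d) for distance d = k - (N - 1).
    """
    return [gaussian(d, c_exc, w_exc) - c_inh for d in range(-(N - 1), N)]


def step(u, stim, kernel):
    """One Euler step of tau*du/dt = -u + h + s(x) + sum_x' w(x - x') f(u(x'))."""
    f = [sigmoid(x) for x in u]
    new_u = []
    for i in range(N):
        interaction = sum(kernel[i - j + N - 1] * f[j] for j in range(N))
        du = -u[i] + H + stim[i] + interaction
        new_u.append(u[i] + DT / TAU * du)
    return new_u


def hebbian_update(weights, pre, post, eta=0.1):
    """Hebbian rule: w_ij += eta * pre_i * post_j (co-active units strengthen)."""
    for i, p in enumerate(pre):
        for j, q in enumerate(post):
            weights[i][j] += eta * p * q


# A localized input (e.g., "red") at site 30 of a hypothetical color field.
center = 30
stim = [gaussian(i - center, 7.0, 3.0) for i in range(N)]
kernel = make_kernel()
u = [H] * N
for _ in range(200):
    u = step(u, stim, kernel)

peak = max(range(N), key=lambda i: u[i])
print("peak at", peak, "u_peak =", round(u[peak], 2), "u_far =", round(u[0], 2))

# Hypothetical label layer: label 0 is heard, label 1 is silent. Hebbian
# learning ties label 0 to the feature values where the field has a peak.
label_activity = [1.0, 0.0]
weights = [[0.0] * N for _ in label_activity]
hebbian_update(weights, label_activity, [sigmoid(x) for x in u])
```

With these settings the input drives the center sites past threshold, local excitation then stabilizes a peak roughly a dozen sites wide, and global inhibition keeps the rest of the field well below zero. After one Hebbian update only the active label's weights are nonzero, and they are concentrated on the peak's feature values. A Gaussian surround, a slowly decaying memory trace, or a second field coupled along a shared dimension (as in panels A to D) would be natural extensions; this sketch deliberately omits them.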