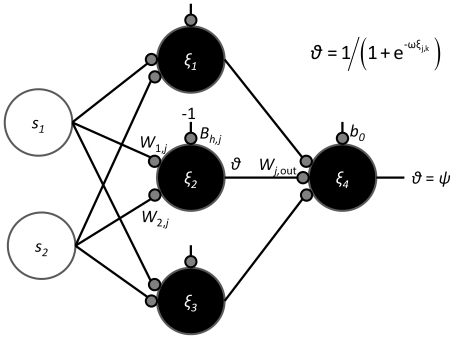

Figure 2. The architecture of the ANNs used in the simulations.

Sensory nodes (open circles) simply propagate the signals elicited by the resource. Each hidden node $j$ ($j = 1, 2, 3$) in the second layer has three ‘synaptic’ weights: $w_{1j}$ and $w_{2j}$ weight the inputs from sensory nodes 1 and 2, respectively, and $w_{j,\mathrm{out}}$ weights the node's output as it enters the output node in the third layer (shown for the middle node only). In addition, each hidden node has a bias weight $b_{hj}$ and the output node has a bias weight $b_o$; each bias weight connects a constant input of −1. Each perceptron is thus represented as $[\mathbf{w}_1, \mathbf{w}_2, \mathbf{w}_3, \mathbf{w}_o]$, where $\mathbf{w}_j = (w_{1j}, w_{2j}, b_{hj}, w_{j,\mathrm{out}})$ for $j = 1, 2, 3$ holds the parameters of hidden node $j$ and $\mathbf{w}_o = b_o$ holds that of the output node (shown as colors in Fig. 3). The response of each node is its output $\theta$, given by the sigmoidal threshold function (upper right corner): for a sufficiently large steepness $\omega$ (here $\omega = 4$), an internal activity $\xi_{jk} < 0$ yields an output close to 0, and otherwise a value close to 1. When the signal $(s^k_1, s^k_2)$ of niche (host) $k$ is applied, the activity of hidden node $j = 1, 2, 3$ is

$$\xi_{jk} = s^k_1 w_{1j} + s^k_2 w_{2j} - b_{hj},$$

and, writing $\theta_{jk} = \theta(\xi_{jk})$ for the hidden-node outputs, the activity of the output node is

$$\xi_{4k} = \theta_{1k} w_{1,\mathrm{out}} + \theta_{2k} w_{2,\mathrm{out}} + \theta_{3k} w_{3,\mathrm{out}} - b_o.$$

The perceptron output $\psi$ therefore lies between 0 and 1 and is interpreted as ‘approximately 0 ⇒ avoid niche’ and ‘approximately 1 ⇒ exploit niche’.
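The forward pass described above can be sketched in a few lines. This is a minimal illustration, not the authors' simulation code. The caption shows the threshold function only in the figure, so the sketch *assumes* the logistic form $\theta(\xi) = 1/(1 + e^{-\omega\xi})$, which has the stated behavior for large $\omega$. The names `theta`, `perceptron_output`, `decision`, the example weights, and the 0.5 cut-off separating ‘avoid’ from ‘exploit’ are all hypothetical.

```python
import math
from typing import List, Sequence, Tuple

# Steepness of the threshold function (omega = 4 in the caption).
OMEGA = 4.0

# Hypothetical layout of one hidden node j: (w_1j, w_2j, b_hj, w_j_out),
# mirroring the caption's representation w_j = (w_1j, w_2j, b_hj, w_j,out).
HiddenNode = Tuple[float, float, float, float]


def theta(xi: float, omega: float = OMEGA) -> float:
    """Assumed logistic threshold 1 / (1 + exp(-omega * xi)), written to avoid overflow."""
    z = omega * xi
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so exp(z) < 1 and cannot overflow
    return e / (1.0 + e)


def perceptron_output(signal: Tuple[float, float],
                      hidden: Sequence[HiddenNode],
                      b_o: float,
                      omega: float = OMEGA) -> float:
    """Forward pass for niche k's signal (s_1^k, s_2^k); returns psi in [0, 1]."""
    s1, s2 = signal
    xi_out = -b_o  # output bias b_o times the constant input of -1
    for w1j, w2j, bhj, wj_out in hidden:
        xi_jk = s1 * w1j + s2 * w2j - bhj       # hidden activity xi_jk
        xi_out += theta(xi_jk, omega) * wj_out  # add theta_jk * w_j,out
    return theta(xi_out, omega)  # psi: response of the output node


def decision(psi: float) -> str:
    """Illustrative reading of psi; the 0.5 cut-off is an assumption."""
    return "exploit" if psi >= 0.5 else "avoid"


# Hypothetical weights: only hidden node 1 contributes; it is excited by
# sensory node 1 and inhibited by sensory node 2.
EXAMPLE_HIDDEN: List[HiddenNode] = [
    (2.0, -2.0, 1.0, 2.0),
    (0.0, 0.0, 1.0, 0.0),
    (0.0, 0.0, 1.0, 0.0),
]
EXAMPLE_B_O = 1.0

for name, signal in [("niche A", (1.0, 0.0)), ("niche B", (0.0, 1.0))]:
    psi = perceptron_output(signal, EXAMPLE_HIDDEN, EXAMPLE_B_O)
    print(name, round(psi, 3), decision(psi))
# niche A 0.979 exploit
# niche B 0.018 avoid
```

Because the bias enters with a constant input of −1, it is subtracted from the activity exactly as in the equations for $\xi_{jk}$ and $\xi_{4k}$. The piecewise form of `theta` is only a numerical safeguard and gives the same values as the plain logistic.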