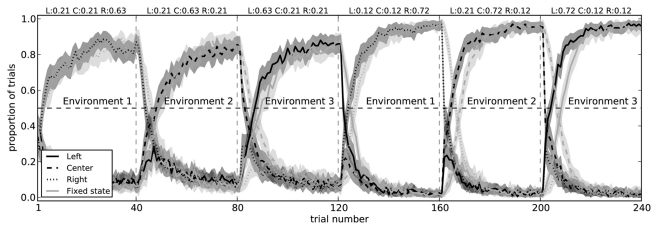

Figure 10.

Model performance on a three-arm bandit task with fixed state information (light gray) and with state information that changes for each block (dark gray). In both conditions, the model successfully learns to choose the action that most often yields a reward. When the state information is fixed (i.e., when the pattern of spikes in the cortex does not change to indicate which of the three environments the model is in), the model takes longer to switch actions. Shaded areas are 95% bootstrap confidence intervals over 200 runs.
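The caption says only that the shaded areas are 95% bootstrap confidence intervals over 200 runs. The sketch below shows one common way to compute such a band. It assumes a percentile bootstrap of the per-trial mean across runs and a hypothetical per-trial metric (for example, 1 if the model chose the most-rewarded arm on that trial, else 0). The function names `bootstrap_ci` and `ci_band` and the resample count are illustrative choices, not taken from the original work.

```python
import random
import statistics


def bootstrap_ci(values, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample the runs with replacement and record each resample's mean.
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_boot)
    )
    # Read off the alpha/2 and 1 - alpha/2 percentiles of the bootstrap means.
    lo_idx = round((alpha / 2) * n_boot)
    hi_idx = round((1 - alpha / 2) * n_boot) - 1
    return means[lo_idx], means[hi_idx]


def ci_band(runs, **kwargs):
    """Per-trial (mean, lower, upper) across runs.

    `runs` is a list of runs, each a list of per-trial values of a
    hypothetical metric (e.g. 1 if the best arm was chosen, else 0).
    """
    n_trials = len(runs[0])
    band = []
    for t in range(n_trials):
        column = [run[t] for run in runs]  # one value per run at trial t
        lo, hi = bootstrap_ci(column, **kwargs)
        band.append((statistics.fmean(column), lo, hi))
    return band
```

With 200 runs, `ci_band(runs)` returns one `(mean, lower, upper)` tuple per trial. These tuples could be plotted as a line with a shaded region. The percentile bootstrap is used here because it is simple and makes no normality assumption. Other bootstrap variants (e.g. BCa) would give slightly different bands.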