Abstract

Background

Incident reporting systems (IRS) are used to identify medical errors in order to learn from mistakes and improve patient safety in hospitals. However, IRS capture only a small fraction of the incidents that occur. A more comprehensive overview of medical error in hospitals may be obtained by combining information from multiple sources. The WHO has developed the International Classification for Patient Safety (ICPS) in order to enable comparison of incident reports from different sources and institutions.

Methods

The aim of this paper was to provide a more comprehensive overview of medical error in hospitals using a combination of different information sources. Incident reports collected from IRS, patient complaints and retrospective chart review in an academic acute care hospital were classified using the ICPS. The main outcome measures were distribution of incidents over the thirteen categories of the ICPS classifier “Incident type”, described as odds ratios (OR) and proportional similarity indices (PSI).

Results

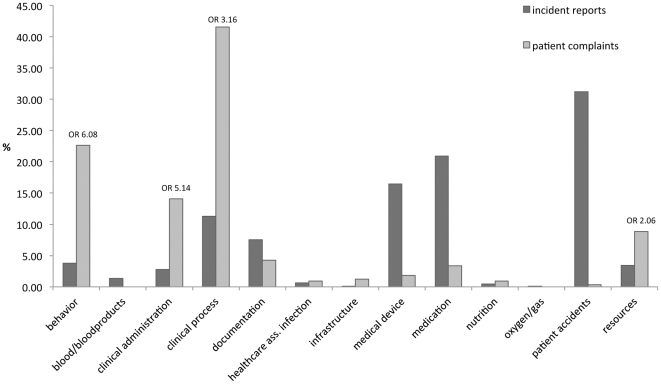

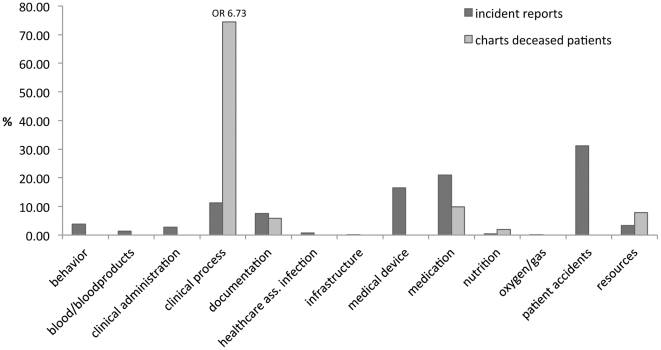

A total of 1015 incidents resulted in 1282 classified items. Large differences between the distributions of IRS data and patient complaints (PSI = 0.32) and between IRS data and retrospective chart review (PSI = 0.31) were mainly attributable to behaviour (OR = 6.08), clinical administration (OR = 5.14), clinical process (OR = 3.16 for patient complaints; OR = 6.73 for retrospective chart review) and resources (OR = 2.06).

Conclusions

IRS do not capture all incidents in hospitals and should be complemented with information on diagnostic error and delayed treatment from patient complaints and retrospective chart review. Because incidents that are not recorded in IRS cannot prompt the remedial and preventive action that IRS reports trigger, healthcare centres that have access to different incident detection methods should harness information from all sources to improve patient safety.

Introduction

It has been increasingly recognised that hospitals can be dangerous places for patients, since medical errors have been shown to cause harm to patients [1], [2]. In order to identify medical errors, to learn from mistakes and to improve patient safety, the healthcare community has introduced incident reporting systems (IRS) [2]. Since 2008, Dutch hospitals have been required by law to have a safety management system in place, including an IRS. Studies have shown that IRS can have a positive effect on the safety climate of a hospital [3] and afford a global overview of incidents. However, as IRS are based on voluntary reporting, a non-punitive environment is required to generate high reporting rates [2]. That such an environment is difficult to achieve may be reflected in the finding that IRS reveal only the tip of the iceberg of incidents [2], [4], [5], estimated at 10% at most [6]. It is not known whether the reported 10% is representative of all errors. However, if IRS do not capture all types of error and action to address errors is based on IRS reports alone, patient safety may not be served optimally.

IRS are not the only source of information for studies of incidents in hospitals. Thomas et al. described eight methods of detecting errors and adverse events, including chart review, malpractice claims analysis, observation of patient care, and IRS [7]. Although retrospective chart review is considered the gold standard [2], [8], and has been used in many studies [1], [9], [10], all methods have strengths and weaknesses, with some focusing specifically on latent (system) errors and others on active errors [7], [11]. Most studies of patient safety and adverse events rely on healthcare workers for information, but patients have also been shown to be a useful source of information that should not be ignored [12]–[17]. A combination of information about adverse events from patients and healthcare workers may thus offer a more comprehensive representation of hospital incidents. Other studies that used such a combination obtained a more comprehensive picture of adverse events [14], [18]–[21]. However, the optimal combination of detection methods, in which the weaknesses of one method are overcome by another, remains to be ascertained.

In the absence of a universally accepted incident classification system, studies focusing on IRS have used different classification systems [9], [10], [22]–[25], which hampers comparison across studies and the drawing of unambiguous conclusions. In an attempt to define a standardised set of patient safety concepts, the World Health Organization (WHO) recently developed the International Classification for Patient Safety (ICPS) [26]–[28]. Although the ICPS is still being tested and some criticism has been voiced [29], it appears to be an important step towards a comprehensive overview of concepts related to patient safety which, if proven successful, can facilitate comparison of results from different information sources both within and between institutions, at local, national and international levels.

For the present study we retrieved information from different sources (incident reports, patient complaints and retrospective chart review of deceased patients) to identify incidents and adverse events. We classified this combined information using the ICPS in order to create a comprehensive picture of incidents occurring in hospitals. We specifically addressed the following research question: Are the different information sources complementary with regard to the types of incidents they report?

Methods

Ethical considerations

In accordance with Dutch Law on Medical Scientific Research, retrospective research using patient charts was automatically granted ethics approval in the participating institutions and there was no requirement for individual patient consent, provided confidentiality was maintained.

Setting

We collected data from a medium-sized (700-bed) academic acute care hospital in the Netherlands serving both adult and paediatric patients. Three information sources were used: 1) all incident reports for 2007; 2) patient complaints filed in 2007; 3) retrospective chart reviews of all inpatients who died in 2008. Each source draws on a different group able to provide information about adverse events in hospitals: hospital staff, patients, and the chart review committee. We used data for 2007 to ensure that incidents could not be traced back to staff or patients and that they referred to events before the introduction of statutory safety management systems in 2008.

Information Sources

Because patient and staff information was anonymised, overlap between incidents from different sources could not be detected. The results are therefore presented not as absolute differences between information sources but as distributions of incidents over categories.

Incident reports

Incidents were reported on paper. All hospital personnel are authorised to report incidents, and the IRS contains information about nature, severity and place of incidents and about action taken to prevent recurrence. We transformed the available data for 2007 into a digital data file.

Patient complaints

Any patient can file a complaint against the hospital or an individual healthcare provider. We collected all written patient complaints handled in 2007 by a complaints mediator or the complaints committee. Complaints not directly related to patient care (such as complaints about billing) were excluded from the study (N = 59).

Retrospective chart review of all deceased patients

The hospital has a committee, consisting of six medical doctors and seven nurses, which retrospectively inspects the files of all deceased patients in order to identify any adverse events. The review method and definitions are based on similar national research [30], in which an adverse event is defined as “an unintended (physical and/or mental) injury which resulted in temporary or permanent disability, death or prolongation of hospital stay, and was caused by healthcare management rather than the patient's disease” [9]. All files are screened by trained nurses for triggers suggesting the occurrence of an adverse event. If an event is suspected, a medical doctor scrutinises the file to determine whether an event actually occurred and whether it was avoidable [30]. If an error is identified, the file is submitted to the full committee of medical doctors, who discuss the case to reach consensus on whether the event could have been avoided. The attending physician of the patient in question is always involved in this process: he or she comments on the committee's preliminary judgements and is notified of the final outcome of the procedure. For 119 of the 744 files of deceased patients, the committee requested additional information from the attending physician. Avoidable adverse events were identified in the files of 44 patients (5.9%). Similar percentages have been reported in other national research [9].

We use the term ‘reports’ to refer to incidents from the IRS, from patient complaints and from retrospective chart review.

International Classification for Patient Safety (ICPS)

We classified all reports using the ICPS classifier ‘Incident Type’. This classifier, which contains thirteen categories (table 1), each with subcategories [26], [31], was deemed most suitable for our data.

Table 1. Overview of all categories in the classifier “Incident Type” (adapted from ICPS), with examples to clarify each category.

| No. | Category | Example |
|---|---|---|
| 1 | behaviour | treatment of patient by staff was inconsiderate or rude |
| 2 | blood/blood products | request for a blood product was for the wrong patient; or blood of the wrong blood type was administered to a patient |
| 3 | clinical administration | wrong documents were filled out for admission; or a patient was treated by a different doctor than previously discussed |
| 4 | clinical process/procedure | a delay in treatment due to postponement of surgery; or a diagnosis was missed |
| 5 | documentation | patient chart was missing; or information in the patient chart was incorrect or missing |
| 6 | healthcare-associated infection | patient develops an infection near the surgical site due to gauze left behind in the wound |
| 7 | infrastructure | trolley does not fit into the lift; or nurse slips on wet floor |
| 8 | medical device/equipment | computer malfunction; or surgical tools that break or are unsterile |
| 9 | medication/IV fluids | wrong drug is administered to the patient; or patient has not received medication |
| 10 | nutrition | wrong quantity or wrong type of drip-feed is administered |
| 11 | oxygen/gas/vapour | patient returns from procedure and a nurse forgets to connect the oxygen |
| 12 | patient accidents | patient has fallen out of bed; or patient has fallen in the bathroom |
| 13 | resources/organizational management | understaffing or no available beds |

A report can fall into several categories [26]. The maximum in this study was three (box S1). As we aimed to identify different types of incidents, all categories deemed pertinent to a report were included in the analysis, which resulted in a total of 1282 classified items.
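The counting rule above (one classified item for every pertinent category, with at most three categories per report) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the procedure the authors ran in SPSS: the example reports, the category labels chosen for them and the function name `expand_to_items` are hypothetical, and the source codes IRS, PC and CDP simply reuse the abbreviations from the Results.

```python
from collections import Counter

# Hypothetical reports: (information source, ICPS "Incident type" categories).
# IRS = incident reports, PC = patient complaints, CDP = charts of deceased patients.
reports = [
    ("IRS", ["medication/iv fluids"]),
    ("IRS", ["documentation", "medication/iv fluids"]),
    ("PC", ["behaviour", "clinical process/procedure",
            "resources/organizational management"]),
    ("CDP", ["clinical process/procedure"]),
]


def expand_to_items(reports, max_categories=3):
    """Return one (source, category) classified item per category assigned to a report."""
    items = []
    for source, categories in reports:
        if not 1 <= len(categories) <= max_categories:
            raise ValueError(f"expected 1-{max_categories} categories, got {len(categories)}")
        items.extend((source, category) for category in categories)
    return items


items = expand_to_items(reports)
reports_per_source = Counter(source for source, _ in reports)
items_per_source = Counter(source for source, _ in items)
print(len(reports), len(items))  # 4 7
print(dict(items_per_source))    # {'IRS': 3, 'PC': 3, 'CDP': 1}
```

Applied to the real data, the same expansion is what turns the per-source report counts into the larger classified-item counts on which all later distributions are based.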

Procedure

JMF classified a sample of 300 reports (drawn from all three sources) and discussed the results with a second researcher (RPK) until consensus was reached. JMF then classified the remaining reports, while RPK also classified a random 10% sample in order to determine interrater reliability using Cohen's kappa. Because Cohen's kappa assumes that each item is assigned to exactly one category, only the first classification of each report, representing its main category, was used to calculate kappa. Kappa was 0.73, indicating substantial interrater agreement [32].
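A minimal sketch of the kappa computation described above, assuming each rater's classifications are stored as lists of categories with the main category first. The function name `cohens_kappa` and the example data are hypothetical; only the first category of each report enters the calculation, mirroring the restriction to main categories.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who assign exactly one category per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must classify the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items placed in the same category by both raters
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement expected from each rater's marginal category frequencies
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use one identical category")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical double-classified reports; the first entry is the main category
rater_1_reports = [["behaviour"], ["medication/iv fluids", "documentation"],
                   ["medication/iv fluids"], ["documentation"]]
rater_2_reports = [["behaviour"], ["medication/iv fluids"],
                   ["documentation"], ["documentation", "clinical administration"]]

main_1 = [categories[0] for categories in rater_1_reports]
main_2 = [categories[0] for categories in rater_2_reports]
kappa = cohens_kappa(main_1, main_2)
print(round(kappa, 2))  # 0.64
```

Here the raters agree on three of four main categories (observed agreement 0.75), but chance agreement from the marginals is 0.3125, so kappa drops to about 0.64.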

Suitability of the ICPS

There are several reasons why we deemed the ICPS suitable for our study: 1) It was developed using a Delphi procedure [28] among stakeholders from different fields, which ensures a broad view of patient safety; 2) A standardised classification such as the ICPS enables comparison and replication of results within and between institutions and studies; 3) The classifier ‘Incident Type’ enabled us to start with a global classification into categories, followed by a more specific classification into subcategories, thereby yielding a detailed classification of each report; 4) The ICPS discriminated clearly between the thirteen categories, which made it possible to use all of them; 5) All incidents fell into one of the thirteen categories, so no categories were missing from the ICPS.

Statistical Analysis

We calculated the proportional similarity index (PSI) for the distributions of relative frequencies of reports from two information sources over the ICPS categories in order to determine whether the sources were complementary [33]. The PSI ranges from 1 (highest possible similarity between two distributions) to 0 (completely different patterns) [34]. First, we determined the distribution over all categories for each of the three databases. Using the IRS reports as the reference, we compared the distributions of reports from the other two databases with the distribution of IRS reports.
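The text does not spell out the PSI formula. A common definition, assumed here, is PSI = 1 − 0.5 × Σ|p_i − q_i|, where p_i and q_i are the proportions of classified items falling in ICPS category i for the two sources; it is equivalent to Σ min(p_i, q_i) and runs from 1 (identical distributions) to 0 (no overlap). The function name `proportional_similarity` and the counts below are hypothetical.

```python
def proportional_similarity(counts_a, counts_b):
    """Proportional similarity index: 1 - 0.5 * sum(|p_i - q_i|) over all categories."""
    total_a = sum(counts_a.values())
    total_b = sum(counts_b.values())
    if total_a == 0 or total_b == 0:
        raise ValueError("each source needs at least one classified item")
    # Categories missing from one source count as a proportion of zero there
    categories = set(counts_a) | set(counts_b)
    distance = sum(abs(counts_a.get(c, 0) / total_a - counts_b.get(c, 0) / total_b)
                   for c in categories)
    return 1 - 0.5 * distance


# Hypothetical item counts per ICPS category for two sources
irs_counts = {"medication/iv fluids": 6, "behaviour": 2, "documentation": 2}
complaint_counts = {"medication/iv fluids": 2, "behaviour": 6, "documentation": 2}
psi = proportional_similarity(irs_counts, complaint_counts)
print(round(psi, 2))  # 0.6
```

Because the index works on proportions rather than raw counts, sources of very different size (such as 904 IRS items versus 51 chart review items) can be compared directly.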

We calculated odds ratios to determine whether a specific ICPS category was more likely to be present in the IRS or in one of the two other information sources. A high odds ratio (OR ≥ 2) indicates that incidents of this category are more frequently represented in the other information source (patient complaints or retrospective chart review) than in the IRS. A low odds ratio (OR < 1) indicates that incidents of this category are more strongly represented in the IRS. SPSS 15.0 was used for all calculations.
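For a single ICPS category, the odds ratio compares the odds that a classified item falls in that category in the other source with the corresponding odds in the IRS. The 2×2 layout below is an assumption about how the counts were arranged (the text reports only the resulting ORs), and the function name `category_odds_ratio` and the counts are hypothetical.

```python
def category_odds_ratio(in_other, total_other, in_irs, total_irs):
    """Odds ratio of a category occurring among another source's items vs. IRS items.

    OR > 1: the category is relatively more frequent in the other source;
    OR < 1: it is relatively more frequent in the IRS.
    """
    a = in_other                # other source, item in this category
    b = total_other - in_other  # other source, item in another category
    c = in_irs                  # IRS, item in this category
    d = total_irs - in_irs      # IRS, item in another category
    if min(a, b, c, d) <= 0:
        # A zero cell makes the OR 0 or infinite; a continuity correction would be needed
        raise ValueError("all four cells of the 2x2 table must be positive")
    return (a * d) / (b * c)


# Hypothetical counts: 30 of 100 complaint items vs. 10 of 100 IRS items in one category
odds_ratio = category_odds_ratio(30, 100, 10, 100)
print(round(odds_ratio, 2))  # 3.86
```

Computing `(a * d) / (b * c)` is algebraically the same as `(a / b) / (c / d)` but avoids an intermediate division.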

Results

The number of reports from each information source and the total number of classified items (including 2nd and 3rd categories for some incidents) are displayed in table 2. All calculations were made for the total number of classified items (N = 1282).

Table 2. Overview of collected data.

| Information source | Number of incidents (N) | Total number of classified items, incl. 2nd and 3rd categories (N) |
|---|---|---|
| Incident reports | 736 | 904 |
| Patient complaints | 235 | 327 |
| Retrospective chart review | 44 | 51 |
| Total | 1015 | 1282 |

Incident reports vs. patient complaints

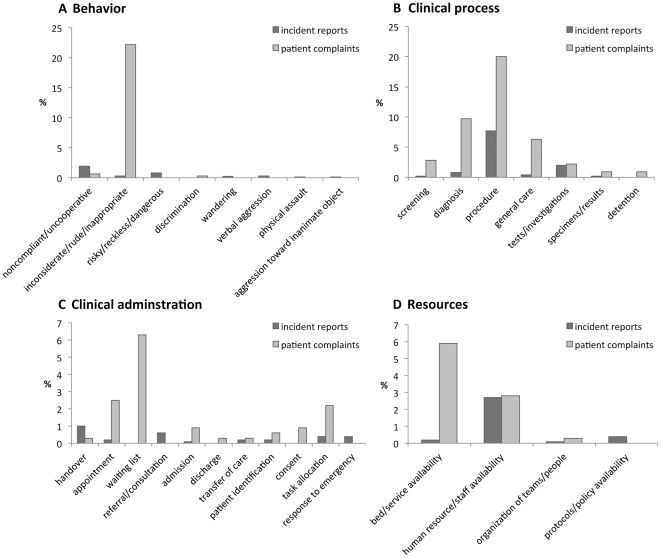

Figure 1 shows a substantial difference between the distributions of incident reports (IR) and patient complaints (PC), with a low PSI of 0.32. Some categories are strongly represented in PC but not in IR, and vice versa. The odds ratios show that incidents in four categories are more likely to be detected by patient complaints than by incident reports: behaviour (OR = 6.08), clinical administration (OR = 5.14), clinical process (OR = 3.16) and resources (OR = 2.06). The subcategories give more detailed information about these differences. Figure 2a shows that the difference between the sources in the category behaviour relates primarily to inconsiderate/rude/inappropriate behaviour (0.3% (IR) vs. 22.2% (PC)). Differences in clinical administration (figure 2b) relate to appointments (0.2% (IR) vs. 2.5% (PC)), waiting list (0% (IR) vs. 6.3% (PC)) and task allocation (0% (IR) vs. 2.2% (PC)). The difference in clinical process (figure 2c) relates to procedure (7.7% (IR) vs. 20.0% (PC)), diagnosis/assessment (0.8% (IR) vs. 9.7% (PC)) and general care (0.4% (IR) vs. 6.3% (PC)). Differences in resources (figure 2d) relate to bed/service availability (0.2% (IR) vs. 5.9% (PC)).

Figure 1. Distributions of incident reports and patient complaints over categories of the classifier ‘incident type’ (in %).

Figure 2. Distributions of incident reports and patient complaints over subcategories of Incident Types.

A: subcategories of “Behaviour”. B: subcategories of “Clinical administration”. C: subcategories of “Clinical process”. D: subcategories of “Resources/organizational management”.

Incident reports vs. charts of deceased patients

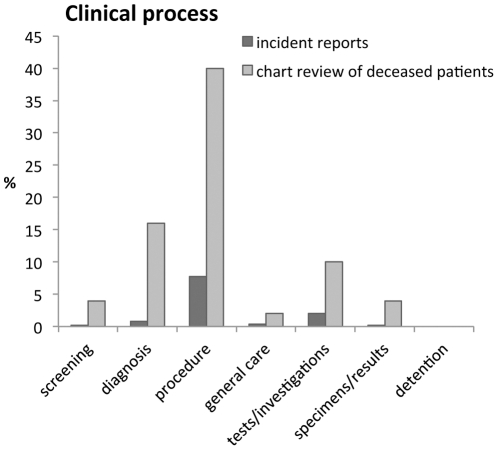

Figure 3 shows differences between incident reports (IR) and retrospective review of charts of deceased patients (CDP) (PSI = 0.31), primarily related to clinical process (OR = 6.73). Figure 4 shows that this difference is mainly due to more reports from retrospective chart review relating to diagnosis/assessment (0.8% (IR) vs. 16% (CDP)) and procedure/treatment (7.7% (IR) vs. 40% (CDP)).

Figure 3. Distributions of incident reports and the chart reviews of deceased patients over categories of the classifier ‘incident type’ (in %).

Figure 4. Distribution of incident reports and chart reviews of deceased patients over subcategories of “Clinical process”.

Discussion

The primary aim of this study was to investigate whether information about reported incidents differed between information sources. The distribution of reports over categories and subcategories of the ICPS classifier ‘Incident Type’ showed remarkable differences between incident reports, patient complaints and retrospective chart review of deceased patients. This suggests that a combination of detection methods, using information from patients [14], [15], healthcare workers [3] and the gold standard of retrospective chart review [2], [8], may be preferable for studies of medical errors and patient safety in hospitals. Incident reports alone did not capture the full picture of medical errors, while other data sources, such as patient complaints and retrospective chart review, enhanced the comprehensiveness of information. The ICPS subcategories were particularly useful in specifying differences between information sources.

Patient complaints differed from IRS in several ways. First, patient complaints revealed more incidents in the category clinical process, particularly in relation to diagnosis, general care and procedure/treatment. Particularly striking is the difference between patient complaints and IRS in diagnosis-related incidents, which mostly concerned delayed, wrong or missed diagnoses. This is surprising, as one would expect healthcare workers to be aware of, and therefore to report, missed diagnoses. The literature reports extensively on the prevalence of diagnostic errors and their impact on patient safety [35]–[38]. It is therefore notable that these errors tend to surface in data sources other than IRS [35], [36], [38], [39], as was the case in our study. A possible explanation is that doctors may be aware of a wrong diagnosis but decide that, because they already know about it, there is no point in reporting it. Alternatively, doctors may not be aware of a wrong diagnosis, because it may take a long time before it is detected [35]. Whichever explanation applies, our study indicates that IRS do not suffice to reveal all diagnostic errors.

Secondly, patient complaints identified more incidents in the category behaviour, inconsiderate behaviour in particular. Previous research has shown that inconsiderate behaviour or unprofessional conduct is one of the main reasons for patient complaints or lawsuits [40]–[43]. It seems logical that this information is found in patient complaints rather than in incident reports by hospital personnel, since staff are unlikely to report on their own behaviour.

Thirdly, patient complaints revealed more incidents in the category clinical administration in relation to waiting lists, management of appointments and task allocation, such as complaints about being seen or operated on by a different doctor than expected or agreed upon. Complaints about waiting lists and appointment management have also been reported elsewhere [40], [42]. They are closely related to patient complaints in the category resources, as patients tend to see insufficient resources as a cause of waiting lists, whereas doctors, who are familiar with the hospital organisation, know that delayed appointments or waiting lists cannot always be prevented because of staffing and organisational issues. It should be noted, however, that delays and problems with task allocation can cause significant harm to patients. A delay in treatment, for example, may lead to complications, while the involvement of different doctors in a patient's treatment may cause handover problems, which are potentially harmful to patients [44].

In addition to patient complaints, we gathered reports from retrospective chart review, which is generally considered the gold standard for measuring incidents occurring in hospitals [2], [8]. But even gold standards have limitations. For example, not everything is written down in charts, which may lead to underestimation of the occurrence of incidents [7]. Our results show that retrospective chart review of inpatient deaths mostly yields incidents concerning delayed diagnosis and inadequate performance of procedures. With regard to diagnostic errors, the same applies to retrospective chart review as to patient complaints: these errors are rarely captured by the IRS, yet they must be addressed in order to learn from them. Incidents involving inadequate performance of medical procedures have also been identified in other studies using retrospective chart review [9], [10].

Limitations of this research

This study has several limitations. Firstly, the data were collected in a single academic medical centre. Consequently, the results may not be generalisable to other hospitals or other countries. Secondly, because patient and staff information was anonymised, overlap between incidents from different sources could not be detected. This might result in a slight overestimation of some incident types. Thirdly, we used the ICPS to classify incidents in order to improve the comparability of findings. However, the ICPS is still under development and needs to be tested with more, and more diverse, databases from other healthcare centres in order to optimise its (sub)categories.

Practical implications and conclusions

There are also several practical implications of this study. First, the results suggest that IRS alone do not provide a comprehensive picture of what goes wrong in a hospital. Moreover, because diagnostic errors and delays in treatment are rarely reported in IRS, they are less likely to prompt action to remedy and prevent such incidents. Healthcare centres using more than one method of incident detection (e.g. methods relying on patients and healthcare workers as sources of information) should combine these data, preferably using the same classification for each source, in order to enhance comparability. This will give better insight into the most prevalent latent and active errors, and can help to prioritise which of these problems need immediate attention and which are less urgent.

The second practical implication concerns medical education. The incidents identified can be used to educate medical students, residents and faculty about patient safety issues. Incidents can enhance awareness of the vulnerabilities of hospital organisations and identify which situations are more conducive to error. Increased attention through education could increase doctors' awareness of these situations and, consequently, reduce the number of errors, such as diagnostic errors. We therefore recommend that medical schools incorporate this information into their courses on patient safety.

Supporting Information

Example of an incident classified in more than one category.

(DOC)

Acknowledgments

The authors would like to thank Mereke Gorsira for editing the final version of the manuscript.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: The authors have no support or funding to report.

References

- 1. Brennan TA, Leape LL, Laird NM, Hebert L, Localio AR, et al. Incidence of adverse events and negligence in hospitalized patients. Results of the Harvard Medical Practice Study I. New England Journal of Medicine. 1991;324:370–376. doi: 10.1056/NEJM199102073240604.

- 2. Kohn LT, Corrigan JM, Donaldson MS. To err is human. Building a safer health system. Washington, DC: National Academy Press; 1999.

- 3. Hutchinson A, Young TA, Cooper KL, McIntosh A, Karnon JD, et al. Trends in healthcare incident reporting and relationship to safety and quality data in acute hospitals: results from the National Reporting and Learning System. Qual Saf Health Care. 2009;18:5–10. doi: 10.1136/qshc.2007.022400.

- 4. Barach P, Small SD. Reporting and preventing medical mishaps: lessons from non-medical near miss reporting systems. BMJ. 2000;320:759–763. doi: 10.1136/bmj.320.7237.759.

- 5. Jones KJ, Cochran G, Hicks RW, Mueller KJ. Translating Research Into Practice: Voluntary Reporting of Medication Errors in Critical Access Hospitals. The Journal of Rural Health. 2004;20:335–343. doi: 10.1111/j.1748-0361.2004.tb00047.x.

- 6. Sari AB-A, Sheldon TA, Cracknell A, Turnbull A. Sensitivity of routine system for reporting patient safety incidents in an NHS hospital: retrospective patient case note review. BMJ. 2007;334:79. doi: 10.1136/bmj.39031.507153.AE.

- 7. Thomas E, Petersen L. Measuring errors and adverse events in health care. Journal of General Internal Medicine. 2003;18:61–67. doi: 10.1046/j.1525-1497.2003.20147.x.

- 8. Blais R, Bruno D, Bartlett G, Tamblyn R. Can we use incident reports to detect hospital adverse events? Journal of Patient Safety. 2008;4:9–12.

- 9. Zegers M, de Bruijne MC, Wagner C, Hoonhout LH, Waaijman R, et al. Adverse events and potentially preventable deaths in Dutch hospitals: results of a retrospective patient record review study. Qual Saf Health Care. 2009;18:297–302. doi: 10.1136/qshc.2007.025924.

- 10. Thomas EJ, Studdert DM, Burstin HR, Orav EJ, Zeena T, et al. Incidence and Types of Adverse Events and Negligent Care in Utah and Colorado. Medical Care. 2000;38:261–271. doi: 10.1097/00005650-200003000-00003.

- 11. Reason J. Understanding adverse events: human factors. Qual Health Care. 1995;4:80–89. doi: 10.1136/qshc.4.2.80.

- 12. Solberg LI, Asche SE, Averbeck BM, Hayek AM, Schmitt KG, et al. Can patient safety be measured by surveys of patient experiences? Jt Comm J Qual Patient Saf. 2008;34:266–274. doi: 10.1016/s1553-7250(08)34033-1.

- 13. King A, Daniels J, Lim J, Cochrane DD, Taylor A, et al. Time to listen: a review of methods to solicit patient reports of adverse events. Qual Saf Health Care. 2010;19:148–157. doi: 10.1136/qshc.2008.030114.

- 14. Weissman JS, Schneider EC, Weingart SN, Epstein AM, David-Kasdan J, et al. Comparing Patient-Reported Hospital Adverse Events with Medical Record Review: Do Patients Know Something That Hospitals Do Not? Annals of Internal Medicine. 2008;149:100–108. doi: 10.7326/0003-4819-149-2-200807150-00006.

- 15. Weingart S, Pagovich O, Sands D, Li J, Aronson M, et al. What can hospitalized patients tell us about adverse events? Learning from patient-reported incidents. Journal of General Internal Medicine. 2005;20:830–836. doi: 10.1111/j.1525-1497.2005.0180.x.

- 16. Agoritsas T, Bovier P, Perneger T. Patient reports of undesirable events during hospitalization. Journal of General Internal Medicine. 2005;20:922–928. doi: 10.1111/j.1525-1497.2005.0225.x.

- 17. Evans S, Berry J, Smith B, Esterman A. Consumer perceptions of safety in hospitals. BMC Public Health. 2006;6:41. doi: 10.1186/1471-2458-6-41.

- 18. Olsen S, Neale G, Schwab K, Psaila B, Patel T, et al. Hospital staff should use more than one method to detect adverse events and potential adverse events: incident reporting, pharmacist surveillance and local real-time record review may all have a place. Qual Saf Health Care. 2007;16:40–44. doi: 10.1136/qshc.2005.017616.

- 19. Michel P, Quenon JL, de Sarasqueta AM, Scemama O. Comparison of three methods for estimating rates of adverse events and rates of preventable adverse events in acute care hospitals. BMJ. 2004;328:199. doi: 10.1136/bmj.328.7433.199.

- 20. Levtzion-Korach O, Frankel A, Alcalai H, Keohane C, Orav J, et al. Integrating incident data from five reporting systems to assess patient safety: making sense of the elephant. Jt Comm J Qual Patient Saf. 2010;36:402–410. doi: 10.1016/s1553-7250(10)36059-4.

- 21. Meyer-Massetti C, Cheng CM, Schwappach DLB, Paulsen L, Ide B, et al. Systematic review of medication safety assessment methods. American Journal of Health-System Pharmacy. 2011;68:227–240. doi: 10.2146/ajhp100019.

- 22. Lessing C, Schmitz A, Albers B, Schrappe M. Impact of sample size on variation of adverse events and preventable adverse events: systematic review on epidemiology and contributing factors. Qual Saf Health Care. 2010;19:e24. doi: 10.1136/qshc.2008.031435.

- 23. Milch CE, Salem DN, Pauker SG, Lundquist TG, Kumar S, et al. Voluntary electronic reporting of medical errors and adverse events. An analysis of 92,547 reports from 26 acute care hospitals. J Gen Intern Med. 2006;21:165–170. doi: 10.1111/j.1525-1497.2006.00322.x.

- 24. Silas R, Tibballs J. Adverse events and comparison of systematic and voluntary reporting from a paediatric intensive care unit. Qual Saf Health Care. 2010;19:568–571. doi: 10.1136/qshc.2009.032979.

- 25. Tuttle D, Holloway R, Baird T, Sheehan B, Skelton WK. Electronic reporting to improve patient safety. Qual Saf Health Care. 2004;13:281–286. doi: 10.1136/qshc.2003.009100.

- 26. Runciman W, Hibbert P, Thomson R, Van Der Schaaf T, Sherman H, et al. Towards an International Classification for Patient Safety: key concepts and terms. Int J Qual Health Care. 2009;21:18–26. doi: 10.1093/intqhc/mzn057.

- 27. Sherman H, Castro G, Fletcher M, Hatlie M, Hibbert P, et al. Towards an International Classification for Patient Safety: the conceptual framework. Int J Qual Health Care. 2009;21:2–8. doi: 10.1093/intqhc/mzn054.

- 28. Thomson R, Lewalle P, Sherman H, Hibbert P, Runciman W, et al. Towards an International Classification for Patient Safety: a Delphi survey. Int J Qual Health Care. 2009;21:9–17. doi: 10.1093/intqhc/mzn055.

- 29. Schulz S, Karlsson D, Daniel C, Cools H, Lovis C. Is the “International Classification for Patient Safety” a classification? Stud Health Technol Inform. 2009;150:502–506.

- 30. Zegers M, de Bruijne MC, Wagner C, Groenewegen PP, Waaijman R, et al. Design of a retrospective patient record study on the occurrence of adverse events among patients in Dutch hospitals. BMC Health Serv Res. 2007;7:27. doi: 10.1186/1472-6963-7-27.

- 31. World Health Organization website. Available: http://www.who.int/patientsafety/implementation/taxonomy/icps_technical_report_en.pdf Accessed 2012 Jan 16.

- 32. Cohen J. A coefficient of agreement for nominal scales. Educational and Psychological Measurement. 1960;20:37–46.

- 33. Vegelius J, Janson S, Johansson F. Measures of similarity between distributions. Quality and Quantity. 1986;20:437–441.

- 34. International Working Group for Molecular Epidemiology website. Available: http://www.epi-interactive.com/mepiworks/content/proportional-similarity-index. Accessed 2012 Jan 16.

- 35. Berner ES, Graber ML. Overconfidence as a cause of diagnostic error in medicine. Am J Med. 2008;121:S2–23. doi: 10.1016/j.amjmed.2008.01.001.

- 36. Graber ML, Franklin N, Gordon R. Diagnostic error in internal medicine. Arch Intern Med. 2005;165:1493–1499. doi: 10.1001/archinte.165.13.1493.

- 37. Kachalia A, Gandhi TK, Puopolo AL, Yoon C, Thomas EJ, et al. Missed and delayed diagnoses in the emergency department: a study of closed malpractice claims from 4 liability insurers. Ann Emerg Med. 2007;49:196–205. doi: 10.1016/j.annemergmed.2006.06.035.

- 38. Newman-Toker DE, Pronovost PJ. Diagnostic errors–the next frontier for patient safety. JAMA. 2009;301:1060–1062. doi: 10.1001/jama.2009.249.

- 39. Singh H, Thomas EJ, Petersen LA, Studdert DM. Medical errors involving trainees: a study of closed malpractice claims from 5 insurers. Arch Intern Med. 2007;167:2030–2036. doi: 10.1001/archinte.167.19.2030.

- 40. Moghadam JM, Ibrahimipour H, Sari Akbari A, Farahbakhsh M, Khoshgoftar Z. Study of patient complaints reported over 30 months at a large heart centre in Tehran. Qual Saf Health Care. 2010;19:1–5. doi: 10.1136/qshc.2009.033654.

- 41. Hickson GB, Federspiel CF, Pichert JW, Miller CS, Gauld-Jaeger J, et al. Patient Complaints and Malpractice Risk. JAMA: The Journal of the American Medical Association. 2002;287:2951–2957. doi: 10.1001/jama.287.22.2951.

- 42. Montini T, Noble AA, Stelfox HT. Content analysis of patient complaints. International Journal for Quality in Health Care. 2008;20:412–420. doi: 10.1093/intqhc/mzn041.

- 43. Kuosmanen L, Kaltiala-Heino R, Suominen S, Kärkkäinen J, Hätönen H, et al. Patient complaints in Finland 2000–2004: a retrospective register study. Journal of Medical Ethics. 2008;34:788–792. doi: 10.1136/jme.2008.024463.

- 44. Horwitz LI, Moin T, Krumholz HM, Wang L, Bradley EH. Consequences of inadequate sign-out for patient care. Arch Intern Med. 2008;168:1755–1760. doi: 10.1001/archinte.168.16.1755.
