Abstract

The verbal transformation effect (VTE) refers to perceptual switches while listening to a speech sound repeated rapidly and continuously. It is a specific case of perceptual multistability providing a rich paradigm for studying the processes underlying the perceptual organization of speech. While the VTE has mainly been considered a purely auditory effect, this paper reviews recent behavioural and neuroimaging studies investigating the role of perceptuo-motor interactions in the effect. Behavioural data show that articulatory constraints and visual information from the speaker's articulatory gestures can influence verbal transformations. In line with these data, functional magnetic resonance imaging and intracranial electroencephalography studies demonstrate that articulatory-based representations play a key role in the emergence and the stabilization of speech percepts during a verbal transformation task. Overall, these results suggest that perceptuo-motor (more precisely, multisensory-motor) processes are involved in the perceptual organization of speech and the formation of speech perceptual objects.

Keywords: multistable perception of speech, verbal transformation effect, speech scene analysis, speech perception, audiovisual speech, multisensory

1. Introduction

The verbal transformation effect (VTE) is experienced during rapid and continuous repetition of a speech sound. For example, while listening to the repetition of the word ripe, listeners initially perceive the veridical percept (i.e. ‘ripe’), but after a few seconds, they may report hearing utterances such as ‘right’, ‘rife’ and ‘bright’ [1]. This transformation process persists throughout the repetition procedure, leading to perceptual switches from one percept to another. The VTE was first reported by Warren & Gregory [2] and was described as an auditory illusion which may reveal the processes responsible for enhancement of the accuracy of perception in the noisy environments encountered in everyday life [3]. It is a speech-specific case of perceptual multistability, which is well known in vision (for a review, see [4]) and in audition (e.g. [5]), and provides a rich paradigm for studying the perceptual organization of sensory scenes.

Perceptual organization refers to the structuring of a sensory scene into meaningful perceptual objects. Drawing an analogy to the visual domain, Bregman [6] describes two types of mechanisms, respectively based on primitives and schemas, guiding the perceptual organization of auditory scenes (for a review of auditory multistability, see [7]). Primitives are basic, probably largely innate, auditory processes driven by the incoming acoustic data while schema-based mechanisms involve the activation of learned familiar perceptual patterns. Bregman [6] suggests that the same types of mechanisms, i.e. auditory primitives and phonetic schemas, are used for speech scene analysis. A few years later, Remez et al. [8] argued that general auditory scene analysis mechanisms fail to explain the perceptual coherence of speech. The authors suggested instead that ‘the perceptual organization of speech depends on sensitivity to time-varying acoustic patterns specific to phonologically governed vocal sources of sound ([8], p. 130)’. In addition, they proposed that ‘ … visible structures of articulation and the acoustic signal of speech combine perceptually as if organized in common to promote multimodal perceptual analysis ([8], p. 152)’.

The VTE provides an efficient tool for studying speech scene analysis. It enables researchers to address questions such as: (i) is it possible to decompose the speech stream into ‘natural’ units of information that play a pivotal role in the transformation process? (ii) If there are indeed elementary units of information, are they purely auditory or are they multimodal (audiovisual at least)? More generally, can auditory and visual streams be bound together in the multistability process? (iii) What is the role of motor processes in the processing of speech representations and in the switches from one state to another? (iv) Ultimately, what is the nature of the speech perceptual object (auditory, motor or perceptuo-motor)? In this paper, we review a number of recent results on the VTE, related to these questions about the perceptual organization of speech.

2. Auditory bases of the verbal transformation effect

(a). Primitive- and schema-based verbal transformations

Different types of verbal transformations have been reported in the literature (e.g. [9–13]). They can be tentatively separated into primitive- and schema-based transformations based on Bregman's classification scheme for auditory scene analysis.

The primitive-based VTE should involve only general principles, not based on learned speech forms. This is typically the case for transformations based on auditory streaming. For example, the repetition of /skin/ may lead to the segregation of the /s/ sound from the main stream, so that /kin/ or /gin/ is perceived in the foreground and /s/ in the background [12]. These transformations can be considered as phonetic equivalents of auditory switches between fused and segregated percepts as described earlier [5] (see also [7]). Another very common kind of transformation can be described as the online re-segmentation of the speech stream. For example, listening to the rapid repetition of the word life may lead to the perception of the word fly (by cutting the acoustic stream before ‘f’ rather than after), which may transform back, after a few seconds, to life and so on. This segmentation process could be conceived as a ‘speech primitive’ grouping mechanism in which perception alternates between two possible organizations of the phonetic stream.
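The re-segmentation account can be made concrete with a minimal sketch. It assumes that a repeated word is coded as a list of phonetic segments. Under that assumption, each alternative parse of the endless stream is a cyclic rotation of the list. The transcription, the toy lexicon and the function name `segmentations` are illustrative assumptions and do not come from [12] or from any published model. The lexicon lookup only hints at how schema-based knowledge could favour parses that happen to be words.

```python
def segmentations(unit):
    """Return every parse of an endless repetition of `unit` into equal-length chunks.

    Each parse is a cyclic rotation of the segment list: cutting the stream
    at a different segment boundary yields a different repeated form.
    """
    n = len(unit)
    return [tuple(unit[i:] + unit[:i]) for i in range(n)]


# Hypothetical broad transcription of 'life' (the diphthong is kept as one segment).
life = ["l", "aɪ", "f"]
# Toy lexicon standing in for learned word forms (schema-based knowledge).
lexicon = {"laɪf": "life", "flaɪ": "fly"}

for parse in segmentations(life):
    form = "".join(parse)
    print(form, "->", lexicon.get(form, "(non-word)"))
# laɪf -> life
# aɪfl -> (non-word)
# flaɪ -> fly
```

Under this coding, the purely primitive part of the mechanism generates every candidate parse. Whether a parse is heard as a word is then a separate, schema-based question.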

The schema-based VTE involves learned patterns such as in phonetic, lexical or semantic modifications of the repeated sequence. Phonetic modifications consist of the substitution of the repeated phoneme by a phonetically similar one (e.g. /ɛ/ → /æ/ [9]) and/or phoneme insertions (e.g. / / → /pold/ [12]). Lexical modifications can be small phonetic deviations of the repeated sequence resulting in words (e.g. ‘ripe’ → ‘bright’ [1]). Finally, verbal transformations may involve semantic modifications with large phonetic deviations from the repeated sequence (e.g. ‘trice’ → ‘florist’ [1]). Note that schema-based mechanisms may also contribute to what we have called primitive-based VTE (at least in verbal transformations that involve re-segmentation, such as ‘fly’ → ‘life’) in the sense that possible alternating forms can be meaningful words. However, this does not exclude the contribution of ‘speech primitives’ in the primitive-based VTE.


(b). Explanations and models for the verbal transformation effect

Adaptation of speech representations and perceptual regrouping of acoustic elements have been suggested to be the cause of the VTE [12]. The most elaborate framework for understanding the VTE is the node structure theory (NST) [14]. The NST is a localist model (i.e. a single node represents one processing or memory unit) with three levels of representation: muscle movement, phonological and sentential. The nodes at the muscle movement level are dedicated to speech production and represent patterns of movement for the speech muscles. The phonological level contains sublexical nodes, such as syllables and phonetic features. The nodes at the sentential level represent words and phrases. During speech perception, the input primes nodes at the phonological level. Priming increases the likelihood of a node being activated, but it cannot by itself activate a node. The strength of priming is related to how well a node matches the input of the model. The priming spreads from the phonological level to the lexical nodes (at the sentential level). Several nodes may be primed simultaneously. The activating mechanism in the NST ensures that only one node is activated at once: only the most-primed node that reaches the threshold becomes activated. According to the NST, the repeated activation of a node owing to the repetition of an utterance causes adaptation of the node. This results in a fall in the node's priming, eventually to below the priming of a competitor node. The competitor node therefore becomes dominant, and a verbal transformation occurs [15].
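The adaptation-and-competition account can be illustrated with a toy loop. This is a minimal sketch under several assumptions. It uses a single layer of competing word nodes. The values for match, adaptation rate, recovery and threshold are hypothetical. Adaptation is modelled as a simple multiplicative reduction of priming. The sketch is not MacKay's NST implementation, which has three levels of representation and is described only qualitatively above. The function name `simulate_nst` is also hypothetical.

```python
def simulate_nst(match, steps, rate=0.1, recovery=0.05, threshold=0.3):
    """Toy adaptation/competition loop loosely inspired by the NST.

    match: how well each lexical node matches the repeated input (0..1).
    Each repetition primes every node in proportion to its match, reduced by
    its current adaptation; only the most-primed node above threshold is
    activated, and activation increases that node's adaptation while the
    other nodes recover.
    """
    adaptation = {node: 0.0 for node in match}
    percepts = []
    for _ in range(steps):
        priming = {n: match[n] * (1.0 - adaptation[n]) for n in match}
        winner = max(priming, key=priming.get)
        active = winner if priming[winner] >= threshold else None
        for n in match:
            if n == active:
                adaptation[n] += rate * (1.0 - adaptation[n])  # repeated activation adapts the node
            else:
                adaptation[n] *= 1.0 - recovery  # non-activated nodes recover
        percepts.append(active)
    return percepts


# Hypothetical match values for the repeated word 'ripe' and two competitors.
percepts = simulate_nst({"ripe": 1.0, "right": 0.8, "bright": 0.7}, steps=40)
switches = sum(1 for a, b in zip(percepts, percepts[1:]) if a != b)
print(percepts[:4], switches)  # first four percepts: ripe, ripe, ripe, right
```

With these made-up parameters, the veridical node wins for three repetitions. Its adaptation then drops its priming below that of 'right', which produces the first transformation.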

Another class of models is based on the properties of dynamic systems (see also [16]). Ditzinger et al. [17] developed a computational model for the VTE based on a model of perceptual switches of visual reversible figures [18]. In this model, percepts are represented as system attractors, i.e. local minima of the system energy. A verbal transformation occurs when the energy associated with a percept increases, so that it no longer represents a local minimum. This leads the system to switch to a more stable state. The energy increase in this model is due to the saturation of attention to the current percept. Note that, while this model provides a possible explanation for multistability based on multiple equilibrium states within limit cycles of a dynamic process, it does not clarify the nature of speech representations and the underlying analysis processes.
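The attractor-plus-saturation idea can be sketched with a generic two-well system. This is a minimal sketch and a swapped-in model: a van der Pol/FitzHugh–Nagumo-type relaxation oscillator, not the equations of Ditzinger et al. [17]. The fast variable `x` stands for the perceptual state, with its sign coding the percept. The slow variable `y` is an assumed stand-in for attention saturation that raises the well of the current percept. All parameter values are hypothetical.

```python
def simulate_switching(steps=20000, dt=0.01, eps=0.05, x0=1.5, y0=0.0):
    """Euler integration of a two-attractor system with slow 'saturation'.

    x is the perceptual state (x > 0: percept A, x < 0: percept B). It descends
    the energy V(x; y) = -x**2/2 + x**4/12 + y*x, which has two minima while
    |y| < 2/3. y slowly grows in the direction of the current percept, raising
    its well until that minimum disappears and x jumps to the other one.
    """
    x, y = x0, y0
    states = []
    for _ in range(steps):
        dx = x - x**3 / 3.0 - y  # -dV/dx: fast relaxation into the nearest minimum
        dy = eps * x             # slow saturation tied to the current percept
        x += dt * dx
        y += dt * dy
        states.append("A" if x > 0 else "B")
    return states


states = simulate_switching()
switch_count = sum(1 for a, b in zip(states, states[1:]) if a != b)
print(states[0], switch_count)
```

The separation of time scales is the key design choice here. Because `eps` is small, each percept stays stable for a long time, and each switch happens abruptly once its local minimum vanishes.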

3. Articulatory processes underlying some verbal transformation effect properties

The potential role of the perceptuo-motor link in the VTE has been explored in various kinds of paradigms, and this has led to the emergence of two possible roles for motor processes: increasing the probability of transformations, and selecting some transformations rather than others.

(a). Motor involvement favours transformations

In a series of experiments, Reisberg et al. [19] demonstrated the role of articulatory processes in the VTE. The authors asked participants to mentally repeat a word and report any verbal transformations they perceived. Other participants were asked to repeat the same word aloud and report any transformations from the repeated word. Different degrees of subvocal involvement were tested (whispering, silent mouthing and mental repetition without mouthing). The authors also examined some conditions where subvocal involvement was impeded, by having participants chew gum or do a concurrent articulatory task, or by clamping their articulators. The results showed that the probability of a verbal transformation increased with increasing degree of both auditory feedback and articulatory involvement: verbal transformations were more frequent with overt repetition than with whispering or silent mouthing, which in turn yielded more transformations than purely mental repetition. Moreover, when subvocal involvement was impeded, the VTE disappeared. These results suggest that articulatory processes play a role in the VTE.

(b). Articulatory processes influence the selection of preferred perceptual states

In two series of studies, Sato et al. [20,21] explored whether articulatory mechanisms could influence the preference for some percepts over others in the VTE. Their hypothesis was that some sequences of articulatory gestures, which are considered as more stable than others in speech production, should also be more often produced in verbal transformations. A typical example is the syllabification process, according to which some sequences like CV (a consonant C followed by a vowel V) are more frequent in human languages than others like VC (see e.g. [22,23]). Various perceptual or motor explanations of this preference have been proposed and tested (see e.g. [24]). In the ‘articulatory phonology’ framework for analysing speech production in terms of articulatory gestures coordinated in time into temporally overlapping structures [25,26], it is proposed that the CV sequence is favoured because the consonantal and vocalic gestures can be coupled in-phase, i.e. triggered synchronously. In contrast, a VC sequence would be triggered out-of-phase, with the vocalic gesture produced first, and the consonantal gesture produced only when the V is completed. The conclusion from articulatory phonology is that the CV sequence is more stable in production thanks to the in-phase coupling of C and V. Indeed, various experiments have shown that, when speakers are asked to produce rapid sequences of CV or VC syllables, VC sequences tend to be produced as CV sequences, whereas the reverse does not occur (e.g. [27]). Interestingly, in the VTE, transformations occur much more often toward syllables beginning with a C, as in CV, than toward those beginning with a V, as in VC (e.g. [12]). A possible interpretation is that the preferred transformation involves more stable articulatory sequences, stability being defined with reference to in-phase coupling of the articulators.
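The stability contrast between in-phase and out-of-phase coupling is often illustrated with the Haken–Kelso–Bunz (HKB) relative-phase equation. That equation is not cited in this section. It is used here only as a hedged, generic illustration, with relative phase 0 as in-phase (CV-like) and π as anti-phase (VC-like) coordination. In HKB, the anti-phase pattern is stable only while b/a > 0.25. Mapping b/a onto speaking rate, with faster speech giving a lower ratio, is an assumption of the illustration, not a claim from [25–27].

```python
import math


def relative_phase_drift(phi, a, b):
    """HKB relative-phase equation: dphi/dt = -a*sin(phi) - 2*b*sin(2*phi)."""
    return -a * math.sin(phi) - 2.0 * b * math.sin(2.0 * phi)


def is_stable(phi_star, a, b):
    """A fixed point is stable when the slope of dphi/dt there is negative."""
    slope = -a * math.cos(phi_star) - 4.0 * b * math.cos(2.0 * phi_star)
    return slope < 0


def settle(phi0, a, b, dt=0.01, steps=5000):
    """Integrate the relative phase forward (Euler) and return where it ends up."""
    phi = phi0
    for _ in range(steps):
        phi += dt * relative_phase_drift(phi, a, b)
    return phi


for b in (1.0, 0.5, 0.25, 0.1):
    print(f"b/a = {b:.2f}: anti-phase stable = {is_stable(math.pi, 1.0, b)}")
# Starting near anti-phase with a low b/a, the phase slides to in-phase (about 0).
print(round(settle(math.pi - 0.1, 1.0, 0.1), 3))
```

The in-phase point remains stable for every positive a and b. This one-way asymmetry parallels the VC-to-CV drift described above.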

Sato et al. [20] tested whether this tendency could be observed, in a more general way, for other speech sequences than CV and VC. For example, in the classic ‘life life life’ sequence, the two Cs, ‘f’ and ‘l’ can be produced almost in synchrony in ‘fly’ [25], while the two consonantal gestures are desynchronized in ‘life’ (being separated by the V in the middle). The same reasoning as previously leads to the prediction that the sequence with more in-phase coupling between the two consonantal gestures, i.e. ‘fly’, should be more stable than the less synchronized ‘life’. Thus, the authors hypothesized that sequences displaying larger inter-articulatory synchrony in speech production should be preferred states in the VTE. To test the hypothesis, they asked French participants to repeat monosyllabic sequences, such as repeated /ps / or /s

/ or /s p/, in an overt (i.e. saying aloud) or covert (i.e. saying mentally) mode and to report verbal transformations (non-word /ps

p/, in an overt (i.e. saying aloud) or covert (i.e. saying mentally) mode and to report verbal transformations (non-word /ps / and /s

/ and /s p/ were used rather than ‘life’ and ‘fly’ to avoid any lexical effect). The repetition of /ps

p/ were used rather than ‘life’ and ‘fly’ to avoid any lexical effect). The repetition of /ps / can lead to /s

/ can lead to /s p/ and vice versa (other transformations are also possible, see [20]). Previously, the authors had shown that a speeded production of /ps

p/ and vice versa (other transformations are also possible, see [20]). Previously, the authors had shown that a speeded production of /ps / or /s

/ or /s p/ sequences both displayed a significant trend towards /ps

p/ sequences both displayed a significant trend towards /ps /. The results showed a significantly larger number of transformations from /s

/. The results showed a significantly larger number of transformations from /s p/ to /ps

p/ to /ps / than from /ps

/ than from /ps / to /s

/ to /s p/, both in the overt and covert modes. This was explained by the fact that /ps

p/, both in the overt and covert modes. This was explained by the fact that /ps /, for which the consonantal gestures of /p/ and /s/ are produced almost in synchrony, would involve more articulatory stability than /s

/, for which the consonantal gestures of /p/ and /s/ are produced almost in synchrony, would involve more articulatory stability than /s p/. More specifically, a verbal transformation from /s

p/. More specifically, a verbal transformation from /s ps

ps ps

ps p … / to /ps

p … / to /ps ps

ps ps

ps … / involves a resynchronization of /p/ and /s/ gestures, which leads to a more stable speech sequence. The articulatory synchrony assumption could, therefore, explain why /ps

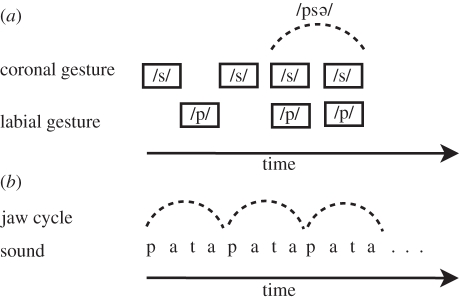

… / involves a resynchronization of /p/ and /s/ gestures, which leads to a more stable speech sequence. The articulatory synchrony assumption could, therefore, explain why /ps / was a more stable form in this experiment (figure 1a).

/ was a more stable form in this experiment (figure 1a).

Figure 1.

Speech segmentation based on articulatory constraints. (a) The /p/ and /s/ gestures are asynchronous in /səp/, while they are almost synchronous in /psə/. This may explain why, in a VTE production task (i.e. while overtly or mentally repeating a verbal sequence), a verbal transformation from /səp/ to /psə/ is more probable than a transformation from /psə/ to /səp/ [20]. (b) / … (pa)tapatapatapa … / is perceived as /pata/ rather than /tapa/ [21] since /pata/ can be produced in a single jaw cycle [28]. This may explain why /pata/ is more stable than /tapa/ in an auditory verbal transformation task (i.e. while listening to the repetition of a verbal sequence).

The next step involved gathering evidence for the role of articulatory stability in the VTE in a purely auditory mode (with no explicit speech production involvement). This was the focus of the study by Sato et al. [21], using C1VC2V-type stimuli where C1 and C2 were /p/ and /t/ and V was /a/, /i/ or /o/ (e.g. /pata/, /topo/ and /piti/). In this study, participants were asked to listen to repetition of a C1VC2V sequence and to report any verbal transformations they perceived. Most of the reported transformations included the veridical sequence C1VC2V and its ‘reversible’ form C2VC1V (for example, transformation from /pata/ to /tapa/ and vice versa). However, there is a trend in human languages that labial–coronal C1VC2V sequences such as ‘pata’ and ‘bada’ (/p/ and /b/ being labial Cs and /t/ and /d/ coronal ones) are more frequent than coronal–labial sequences such as ‘tapa’ and ‘daba’ [23,29]. This is known as the labial–coronal effect. The same kind of preference exists in infants' first words [29]. This tendency might have an articulatory origin. It has been suggested that the /pata/ sequence is more stable than the /tapa/ sequence since it can be produced on a single jaw cycle by anticipating the coronal gesture /t/ while producing the labial one /p/, but the reverse pattern is not possible [28] (figure 1b). As a matter of fact, since the lips are in front of the tongue tip in the vocal tract, anticipating the lip closure in /tVpV/ sequences would result in closing the vocal tract in front of the tongue tip and hence hiding the coronal C /t/ at the beginning of the /tVpV/ sequence, making it inaudible. Once again, a speeded production task confirmed that ‘pata’ sequences are more stable than ‘tapa’ sequences [28]. The assumption of Sato et al. [21] was that the underlying jaw cycles would lead listeners to segment / … (pa)tapatapatapa … / sequences into ‘jaw-compatible’ /pata/ chunks rather than into the reverse /tapa/ utterances. 
Hence, the /pata/ state would be preferred in the VTE. This was actually the case: /pata/ was heard significantly longer than /tapa/ and globally /pVtV/ percepts were more stable than /tVpV/ percepts (figure 1b; notice that other interpretations of the preference for /pata/, based on psycholinguistic arguments such as lexical status and word frequency of repeated sequences and phonotactic probability (i.e. the probability of occurrence of a particular phonetic segment in a given word position) were discounted on the basis of controls carried out in the original study).
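The jaw-cycle chunking hypothesis can be written as a simple rule over the two possible parses of a repeated disyllable. This is a minimal sketch that encodes the hypothesis only and does not model perception. It assumes that each syllable is written as one consonant letter followed by one vowel letter. The place-of-articulation table and the function names are hypothetical.

```python
# Hypothetical place-of-articulation table (only the consonants needed here).
PLACE = {"p": "labial", "b": "labial", "m": "labial",
         "t": "coronal", "d": "coronal", "n": "coronal"}


def parses(cycle):
    """Both chunkings of an endless ...CV CV... stream into disyllables."""
    return ["".join(cycle[i:] + cycle[:i]) for i in range(len(cycle))]


def is_labial_coronal(chunk):
    """True for a C1VC2V chunk (one letter per segment) with labial C1 and coronal C2."""
    return PLACE.get(chunk[0]) == "labial" and PLACE.get(chunk[2]) == "coronal"


def jaw_compatible_parse(cycle):
    """Return the parse predicted to be preferred, or None if neither qualifies."""
    for chunk in parses(cycle):
        if is_labial_coronal(chunk):
            return chunk
    return None


print(jaw_compatible_parse(["ta", "pa"]))  # pata
print(jaw_compatible_parse(["to", "po"]))  # poto
```

The rule predicts the /pVtV/ parse whichever syllable the stream starts with. This matches the direction of the preference reported above.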

(c). Conclusions

In summary, the results reviewed in this section suggest that articulatory processes play a significant role in the VTE, both by controlling the emergence of new states, and by orienting perception towards some states rather than others, preferred states being associated with more ‘stable’ articulatory sequences, either because of increased inter-articulator synchrony, or because of the role of underlying jaw cycles chunking sequences into coherent pieces of information.

4. Multimodal nature of verbal transformations

Speech perception is not merely auditory but multimodal. Several studies have shown that visual information from the speaker's face can increase the intelligibility of speech sounds (e.g. [30]). The McGurk effect demonstrates the role of audiovisual interactions in speech perception (for example, auditory /ba/ dubbed on visual /ga/ can lead to the perception of /da/) [31]. Models of audiovisual speech perception generally consider that there is a preliminary stage of independent processing of the auditory and visual inputs, before fusion takes place at some level (e.g. [32,33]). However, recent data suggest that interaction between the auditory and visual flows could happen at a very early stage of auditory processing (e.g. [34]) and that the visual speech flow could modulate ongoing auditory feature processing at various levels [35]. This leads to the idea that the perceptual organization of speech should be conceived as audiovisual rather than purely auditory, consistent with the suggestion of Remez et al. [8] (see §1). From this point of view, the VTE could be a useful paradigm for assessing how audition and vision are bound together in the perceptual organization of audiovisual speech.

(a). Visual speech influences the verbal transformation effect

In a series of studies, Sato et al. [36] examined whether visual information from the speaker's face influences the VTE. The participants were asked to listen to and/or watch the stimuli and report any changes in the repeated utterance they perceived. In a first experiment, the stimuli were repetitions of /psə/ and /səp/ sequences. They were presented in four conditions: audio (A) only, video (V) only, congruent audiovisual (AV) and incongruent audiovisual (AVi). In the AVi condition, the /psə/ audio track was dubbed onto the /səp/ video track or vice versa. All transformations were classified as /psə/, /səp/ and ‘other’. The global stability duration of each reported form was calculated by summing the durations spent perceiving that form. The results showed that the global stability duration of the percept that was consistent with the auditory track was lower for the AVi condition than for the AV condition. For example, the global stability duration of /psə/ was larger when the audio and the video tracks were /psə/ (AV condition) than when the audio track was /psə/ and the video track was /səp/ (AVi condition). In a second experiment, the authors used video tracks changing over time from AV to AVi, and from AVi to AV, while keeping the audio track constant. The results showed a larger effect of the visual input than in the first experiment. Moreover, there was a high temporal synchrony between reported transformations and changes in the video track. The visual changes, from /psə/ to /səp/ and vice versa, appeared to precisely control the verbal transformations. Since the visual material was essentially characterized by a salient visual lip-opening gesture for the /p/, the authors suggested that the visual driving of the VTE was controlled by this visual onset event.
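The global stability duration described above is a per-form sum of dwell times. The minimal sketch below computes it from a log of reported percepts, under assumptions that are not taken from [36]. It assumes that participants report every change of percept, that the first report falls at trial onset, and that each percept lasts until the next report or until the end of the trial. The log and the function name `global_stability` are hypothetical.

```python
def global_stability(reports, trial_duration):
    """Sum the time spent in each reported percept.

    reports: (onset_s, percept) pairs sorted by onset; each percept is assumed
    to last until the next report, or until the end of the trial.
    """
    totals = {}
    for i, (onset, percept) in enumerate(reports):
        offset = reports[i + 1][0] if i + 1 < len(reports) else trial_duration
        totals[percept] = totals.get(percept, 0.0) + (offset - onset)
    return totals


# Hypothetical report log for one 60 s trial (not data from [36]).
log = [(0.0, "psə"), (12.0, "səp"), (20.5, "psə"), (41.0, "other")]
print(global_stability(log, 60.0))
# {'psə': 32.5, 'səp': 8.5, 'other': 19.0}
```

Summing dwell times per form, rather than counting switches, means that a single long episode and several short ones contribute in proportion to how long each form was heard.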

(b). Vision may influence chunking of auditory stimuli

The potential role of visual onset on the VTE was tested in a third experiment using /pata/ and /tapa/ sequences which contain two salient visual onsets, one for /pa/ and one for /ta/. These stimuli may switch from the perception of ‘pata’ to the perception of ‘tapa’, though with a bias towards ‘pata’ as discussed in §3b. They were presented in four conditions. In an audio only condition (A), just the repeated acoustic sequences /pata/ or /tapa/ were presented. In the AV condition, both the audio and the video input were presented. The other two conditions, called AV-pa and AV-ta, were prepared from the audiovisual coherent material. In these conditions, the video component was edited to remove all the information about either the /ta/ or the /pa/ syllables. These syllables were replaced by /a/ images in order to provide subjects with only visible /pa/ gestures in the AV-pa condition, and visible /ta/ gestures in the AV-ta condition, in contrast to the AV condition, where both /pa/ and /ta/ gestures were provided. In other words, in the AV-pa condition, the acoustic stimulus /pata/ was dubbed on a video stimulus /paaa/, or the acoustic stimulus /tapa/ was dubbed on a video stimulus /aapa/. In the AV-ta condition, the acoustic stimulus /pata/ was dubbed on a video stimulus /aata/, or the acoustic stimulus /tapa/ was dubbed on a video stimulus /taaa/. Participants were asked to report any transformations they perceived. The prediction was that the visible-opening gesture (/pa/ for AV-pa or /ta/ for AV-ta) would stabilize the percept beginning with it, leading to a preference for the /pata/ percept in the AV-pa condition, and for the /tapa/ percept in the AV-ta condition. As predicted, seeing the lip-opening gestures significantly drove the perception towards the sequences characterized by the visual onset: in the AV-pa condition, listeners perceived /pata/ more often than /tapa/, regardless of the repeated auditory sequence. 
An inverse pattern was observed for the AV-ta condition. This result suggests that the lip-opening gestures provide listeners with onset cues that are used in audiovisual speech segmentation.
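The construction of the AV-pa and AV-ta video tracks, together with the predicted percept, can be summarised with string labels standing in for video frames. This is a minimal sketch: the real stimuli were edited videos, and the helper names are hypothetical. The masking rule reproduces the four dubbing combinations given above: /pata/ on /paaa/ or /aata/, and /tapa/ on /aapa/ or /taaa/. The prediction rule encodes the visual onset hypothesis, that the percept begins with the visible opening gesture.

```python
def mask_visual(audio_syllables, visible_consonant):
    """Label sequence for an AV-pa or AV-ta video track.

    Syllables starting with `visible_consonant` keep their visible CV gesture;
    in every other syllable the consonant frames are replaced by /a/ frames.
    """
    return "".join(s if s[0] == visible_consonant else "a" + s[1:]
                   for s in audio_syllables)


def predicted_percept(audio_syllables, visible_consonant):
    """Parse of the repeated stream that begins with the visible onset."""
    n = len(audio_syllables)
    for i in range(n):
        chunk = audio_syllables[i:] + audio_syllables[:i]
        if chunk[0][0] == visible_consonant:
            return "".join(chunk)
    return None


for audio in (["pa", "ta"], ["ta", "pa"]):
    for consonant in ("p", "t"):
        print("".join(audio), mask_visual(audio, consonant),
              predicted_percept(audio, consonant))
```

The predicted percept depends only on the visible consonant and not on the order of the audio syllables. This mirrors the finding that the AV-pa condition favoured /pata/ regardless of the repeated auditory sequence.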

The next question addressed was whether the visual onset cue could be provided by any visible information, even non-speech, or if it was specific to seeing the articulatory gestures through lip movements. Some hints that the effect might be speech-specific are available from previous studies showing that the audibility of speech sounds embedded in noise is improved by seeing coherent lip movements, but that the enhancement is decreased or eliminated if lip movements are replaced by bars going up and down in synchrony with the original lip movements [37,38]. Therefore, in an original experiment reported next, we tested whether the visual onset effect observed by Sato et al. [36] would occur when the lip movements of /paaa/ and /taaa/ were replaced by a vertical bar varying in height. Two a priori hypotheses were tested. If the visual onset effect is not speech specific, then it should occur for bars as well as for lips, and may even be stronger with bars than with a moving face, in which the information is much less focused on the relevant visual cue. In contrast, if the visual onset effect is speech-specific, it should not occur with bars, or at least it should decrease, if the bars can be interpreted as suggesting lip movement associated with the speech stream. Testing these hypotheses thus required assessment of whether the effect was greater with lips or with bars.

Two sets of conditions were contrasted: visual onset provided by lip opening or by bar movement. Audio stimuli /pata/ and /tapa/ were dubbed onto four different videos. Two of them contained real lip movements of /paaa/ and /taaa/. For the two other videos, the lip movements of /paaa/ and /taaa/ were replaced by a vertical red bar moving in synchrony with the audio sequences. The time course of lip movements was simulated by four images: the minimum lip opening (/p/ and /t/ closure) was replaced by minimum bar height (identical for /p/ and /t/). Bar height increased linearly to its maximum, replacing maximum lip opening for /a/, remained stable for 280 ms, and decreased linearly before the next repetition of the audio sequence. In this way, the temporal audiovisual coherence of the stimuli was preserved while changing the nature of the visual information. Notice that while the minimum lip opening is typically different for /p/ and /t/, the bar dynamics were kept exactly the same for /p/ and /t/. Hence, the bar should provide only timing information, and lead to symmetric effects for AV-pa and AV-ta conditions. Five conditions were presented for each audio sequence: audio-only (A), AV-pa with lip movement (lip-pa), AV-ta with lip movement (lip-ta), AV-pa with bar (bar-pa) and AV-ta with bar (bar-ta). Seventeen native French speakers with no reported hearing problems and with normal or corrected-to-normal vision participated. They were asked to listen to and watch the stimuli and report any changes in the repeated utterance they perceived.
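The bar's time course can be expressed as a piecewise-linear function of time within one repetition. This is a minimal sketch. Only the 280 ms plateau comes from the text. The rise and fall durations, the normalised heights and the 20 ms sampling step are hypothetical. The sketch samples a continuous profile rather than reproducing the exact four-image animation. The same function serves /p/ and /t/, which is the property that should make bars carry timing information only.

```python
def bar_height(t_ms, rise_ms, fall_ms, plateau_ms=280.0, h_min=0.0, h_max=1.0):
    """Piecewise-linear bar height within one repetition, t_ms from the closure."""
    if t_ms <= 0.0:
        return h_min
    if t_ms < rise_ms:  # linear rise replacing the lip opening
        return h_min + (h_max - h_min) * t_ms / rise_ms
    t_ms -= rise_ms
    if t_ms <= plateau_ms:  # stable maximum replacing the open /a/
        return h_max
    t_ms -= plateau_ms
    if t_ms < fall_ms:  # linear fall back towards closure
        return h_max - (h_max - h_min) * t_ms / fall_ms
    return h_min  # closure until the next repetition


# Hypothetical 100 ms rise and fall, sampled every 20 ms.
frames = [round(bar_height(k * 20.0, rise_ms=100.0, fall_ms=100.0), 2) for k in range(30)]
print(frames)
```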

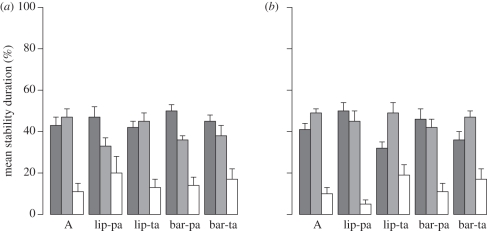

All transformations were classified as /pata/, /tapa/ and ‘other’. The mean global stability durations (see §4a) of /pata/, /tapa/ and ‘other’ reported percepts for the five conditions and for the two audio sequences are shown in figure 2. As expected, the majority of transformations were /pata/ and /tapa/. The analysis was performed on the difference between the global stability durations of /pata/ and /tapa/, expressed as the percentage of the total stimulus duration. We call this difference ‘delta score’. This measure reflects the relative global stability duration of /pata/ and /tapa/ percepts. Using this measure enabled us to test the effect of the presentation conditions on the stability of these two percepts. An ANOVA on delta scores showed a significant effect of condition (F(4,64) = 3.86, p < 0.01) and of audio sequence (F(1,16) = 6.20, p < 0.05), but no interaction (F(4,64) = 0.54). Post hoc Newman–Keuls analysis showed a significantly larger delta score for lip-pa and bar-pa than for A, lip-ta and bar-ta conditions. The fact that the lip-pa condition led to significantly increased stability of the /pata/ percept (larger delta score for lip-pa than for A), while the lip-ta effect was not significant, is not surprising and is consistent with our previous results [36], since the visual movement for /pa/ is much larger (therefore, more visible) than for /ta/. It is more surprising that the same difference occurred with bars, since in this case, the movement amplitude was kept exactly the same for bar-pa and bar-ta stimuli. This suggests that the ‘bar’ effect may be partly driven by an underlying interpretation of bars as lips, with implicit knowledge of visual differences between /pa/ and /ta/. In a further analysis, we assessed whether there was any difference in the capacity of lip movements and bars to drive percepts towards /pata/ (while seeing /paaa/) or /tapa/ (while seeing /taaa/).
To do this, we calculated the difference between delta scores¹ for lip-pa and lip-ta (lip-index) and bar-pa and bar-ta (bar-index) separately for each participant. A paired t-test showed a significantly lower value for bar-index than lip-index (t(16) = 2.25, p < 0.05). Bar-index values were correlated with lip-index values (r = 0.55, p < 0.01).

Figure 2.

Mean global stability durations of /pata/ (dark grey), /tapa/ (light grey) and ‘other’ (white) percepts for two audio sequences ((a) /pata/, (b) /tapa/) for the five presentation conditions. Error bars represent +1 s.d.

In summary, the movement of a vertical bar simulating lip-opening gestures can provide visual onset cues, but it is somewhat less effective than lip movements in driving percepts. This agrees with the hypothesis that the effect is driven by the perception of the visible onset cue as a speech gesture, though further experiments are needed to confirm this conclusion. The asymmetry between the bar-pa and bar-ta conditions, similar to the asymmetry between lip-pa and lip-ta, is consistent with this assumption. The correlation between the lip and bar effects suggests that there is probably a single effect, weaker with bars than with lips, rather than two different effects, one speech-specific and the other psychophysical.

(c). Conclusions

The experiments reviewed in this section clearly show that multistable speech perception is audiovisual. The visual onset effect provides an additional input to the ‘articulatory chunking’ mechanism reviewed in §3. Possible chunks are determined by articulatory principles including inter-articulator synchrony and jaw opening–closing cycles. The visual modality may participate in this ‘chunking’ process through a visual onset mechanism driving the percept towards the item beginning with the visible onset.

5. Involvement of the ‘dorsal stream’ in the verbal transformation effect

Recent brain imaging and transcranial magnetic stimulation studies suggest a functional link between speech perception and production. Indeed, brain areas involved in the planning and execution of speech gestures (i.e. the left inferior frontal gyrus, the ventral premotor and primary motor cortices) have been repeatedly found to be activated during auditory, visual and/or auditory–visual speech perception [39–50]. In addition, perceptual performance on auditory syllable decision tasks is affected by temporarily disrupting the activity of the speech motor centres, thus suggesting a mediating role of the motor system in speech perception, especially under noisy conditions [51–54].

In recent neurobiological models of speech perception, this motor activity has been attributed to a temporo-parieto-frontal ‘dorsal stream’, which is thought to provide a mechanism for the development and maintenance of correspondences between sound-based representations in the superior temporal gyrus and articulatory-based representations in the inferior frontal gyrus/premotor cortex, via sensorimotor recoding in the posterior part of the superior temporal gyrus and/or the inferior parietal lobule [55–57]. A few studies have investigated the neural correlates of the VTE in relation to this dorsal stream, on the grounds that such studies could provide information about the potential role of articulatory processes in the VTE.

(a). Neuroanatomy of the verbal transformation effect

In a functional MRI (fMRI) study using a block design, Sato et al. [58] compared two conditions: a verbal transformation condition involving the mental repetition of speech sequences (e.g. /psə/-/əps/ and /spə/-/pəs/) while actively searching for verbal transformations, and a baseline condition involving simple repetition of the same items. In the verbal transformation condition, subjects were instructed to change what they mentally repeated when they perceived a verbal transformation. For example, for the /spə/ sequence in the verbal transformation condition, subjects mentally repeated /spə/ until the perceptual emergence of /pəs/, then they continued mentally repeating /pəs/ while waiting for the emergence of the percept /spə/, and so on. In contrast, for the /spə/ sequence in the baseline condition, they simply continued mentally repeating /spə/ throughout the epoch. There was left-hemisphere activity related to the verbal transformation task (VTE condition–baseline) within the inferior frontal, supramarginal and superior temporal gyri and the anterior part of the insular cortex. Activity was also observed within the right anterior cingulate cortex and the cerebellum bilaterally. The authors suggested that this temporo-parieto-frontal network performs online analysis of the repeated speech sequence and temporary storage of the resulting representations. In addition, they proposed that the emergence of new representations may involve syllable parsing in the left inferior frontal gyrus and competition between representations in the anterior cingulate cortex.

Using purely auditory tasks and event-related fMRI, Kondo & Kashino [59] contrasted a verbal transformation condition and a tone detection condition. In the verbal transformation condition, participants listened to the repeated speech sequence /banana/ and reported their perceptual changes by pressing a button. The same stimulus was used in the tone detection condition, except that a tone pip was presented in the background, and participants were asked to press a button when the tone pip was presented. The tone detection condition was an ‘emulation’ of the verbal transformation condition: for each participant, the tone pips were timed to follow the time course of the switches reported in the preceding verbal transformation condition. Thus, the number of responses was matched as closely as possible across the two conditions, and the motor responses were nearly identical (for the same kind of procedure, see e.g. [60]). For both conditions, bilateral activation was observed within the primary auditory cortex and the posterior parts of the superior temporal and supramarginal gyri, with additional activity in the left insular cortex. However, the anterior cingulate cortex, the prefrontal cortex and the left inferior frontal gyrus were activated only in the verbal transformation task. The authors found a positive correlation between the number of transformations and the intensity of the signal in the left inferior frontal gyrus. According to the authors, this finding may reflect the contribution to verbal transformations of predictive processes based on articulatory gestures and updated in the left inferior frontal cortex (an area involved in speech production). Conversely, a negative correlation was observed between the activity of the anterior part of the left cingulate cortex and the number of transformations. The authors noted the role of the dorsal part of the cingulate cortex in the stabilization of percepts and in error detection, and proposed that this area might be involved in matching possible verbal forms to the auditory input in the VTE.

Altogether, a common network emerges from these two studies, basically corresponding to the organization of the dorsal stream. However, these fMRI studies provide little information about when the brain areas involved in the VTE are activated. The experiment described in the next section aimed to provide such information.

(b). Neurophysiological correlates of the decision process in the verbal transformation effect

Basirat et al. [61] investigated the temporal dynamics of verbal transformations using intracranial EEG (iEEG), recorded from electrodes implanted in the brains of two epileptic patients as part of their presurgical evaluation. This method provides high temporal and spatial resolution. Two experimental conditions were used: the verbal transformation condition (ENDO, for endogenously driven perceptual switch) and an auditory change condition (EXO, for exogenously driven perceptual switch). In the ENDO condition, the participants were asked to listen to the auditory stimulus /patapata … / or /tapatapa … / and to press a button whenever they perceived any change in the stimulus. In the EXO condition, real random changes between /pa/ and /ta/ were presented (i.e. / … papa … tata … papa … /), and participants were asked to report any change in percept by pressing a button. A time–frequency analysis of the iEEG responses showed an increase of gamma band activity (above 40 Hz) in the left inferior frontal and supramarginal gyri 300–800 ms before the button press in the ENDO condition. In the EXO condition, gamma band activity was found 200 ms before the button press, mainly in the left superior temporal and supramarginal gyri. The authors reasoned that the gamma band increases in the ENDO condition could not be due to preparation of the motor response per se, since they occurred much earlier than in the EXO condition. This result supports a specific role of the observed parieto-frontal network in perceptual switches and decision-making.

(c). Conclusions

Despite the different imaging methods, materials and tasks used in the three studies reviewed above, similar areas were found to be associated with verbal transformations. These areas, including the left inferior frontal, left supramarginal and left superior temporal gyri, are consistent with the dorsal stream of speech processing. Altogether, the neuroimaging results on the VTE reviewed above support the idea that the speech motor system is activated in speech perception, in agreement with a possible role of articulatory-based representations in the emergence and the stabilization of speech percepts.

6. Discussion

We now return to the questions raised at the beginning of this paper, to summarize what has been learned about the perceptual organization of speech through the use of the VTE.

(a). Perceptuo-motor speech ‘chunks’

The question of possible ‘natural’ units in speech perception is old and complex. Syllables have repeatedly been considered as possible candidates, although their role remains controversial [62–64]. Auditory processing provides some bases for enhancing elements of syllable structure, through the processing of modulations (see e.g. [65,66]) or the detection of auditory events (e.g. [67]). The data presented in this paper suggest that ‘chunks’ could emerge from a variety of cues, including articulatory and visual ones. Section 3 showed how ‘articulatory chunking’ principles related to inter-articulator synchrony or underlying jaw cycles could provide a ‘glue’ for sticking together pieces of acoustic information. Section 4 pointed towards the potential role of visual information, and particularly of visual speech onsets. Overall, we suggest that the perceptual organization of the speech stream could involve perceptuo-motor chunks formed on the basis of articulatory, auditory and visual information. These chunks would serve as a basis for further processing by higher-level mechanisms involved in comprehension. This would extend classical findings about speech perception, namely the pivotal role of syllables in the auditory processing of speech (e.g. [64]), the role of syllable onsets in speech segmentation [68] and the importance of word onsets in lexical access and speech comprehension (e.g. [69]). It could also provide a basis for future experiments on the role of visual speech in lexical access (see [70]), particularly concerning the audiovisual detection of word onsets.

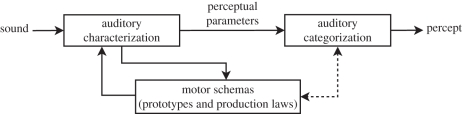

Overall, the behavioural and neurophysiological data presented in this paper suggest that the perceptual organization of speech is based on perceptuo-motor and multimodal processes. This fits well with the perception-for-action-control theory (PACT) developed by Schwartz et al. [71], which is based on the assumption of two roles for perceptuo-motor links (possibly implemented in the human brain within the ‘dorsal stream’) during speech perception. The first involves co-structuring of perceptual and motor representations, enabling auditory categorization mechanisms to take motor information into account (dotted line in figure 3). The second (solid lines in figure 3) is related to speech analysis per se: it represents the contribution provided by motor knowledge to the speech scene analysis process, as demonstrated, among other things, by the present data on the VTE. The ‘articulatory chunking’ phenomenon is related to this second role of perceptuo-motor processes during speech perception.

Figure 3.

A general architecture for PACT [71]. Perceptuo-motor links contribute to co-structuring of perceptual and motor representations (dotted line) and to perceptual organization of speech (solid lines).

Coming back to the issues raised by Bregman [6] and Remez et al. [8] about the principles of speech scene analysis, should these perceptuo-motor chunks be considered as primitives or as schemas? If one main difference between primitive and schema-based mechanisms is that the latter require learning, articulatory chunking (which is based on motor knowledge) should be considered a schema-based process. However, the mechanism based on articulatory stability could be quite general: it might be learned very early, or might even be part of a genetically specified speech communication module available in pre-linguistic infants. In their review of speech perception, Pardo & Remez [72] claim that the perceptual organization of speech does not require learning, citing evidence from studies of pre-linguistic (14-week-old) infants who are able to integrate acoustic elements of speech even when they are spectrally and spatially disparate. Further experiments on the potential existence of articulatory stability and articulatory chunking in infants should shed some light on this question (see e.g. [73]).

(b). The verbal transformation effect in relation to a general framework for multistability

We now attempt to connect the tentative portrait of the VTE that we propose in this paper with a global framework for multistability, as it emerges from the literature and particularly from the present special issue. The framework we consider is based on the ‘predictive coding’ approach, according to which perception involves active prediction (or synthesis) of sensory input. Prediction is compared against the input signal; an error signal is then fed back to the perceptual system, which enables the re-evaluation of the sensory input. Predictive coding has become an influential hypothesis about how the brain deals with sensory information coming from the external world, which is usually noisy and ambiguous (for a review, see [74]).

Predictive coding has a special appeal in speech perception, since it provides general principles supporting a model introduced long ago, the so-called ‘analysis-by-synthesis’ model of speech perception [75]. Analysis-by-synthesis assumes that speech perception involves analysing the sound by assessing the possible articulatory commands that generated it. Predictive coding has been considered as a possible model for explaining neurophysiological data on the influence of vision on auditory processing in speech perception [76]: the natural dynamics of audiovisual speech, as well as phonological knowledge, would allow the speech-processing system to build an online prediction of auditory signals [77]. This prediction system would involve a motor-based ‘analysis-by-synthesis’ mechanism associated with a temporo-parieto-frontal network in the brain [50]. More generally, PACT extends this concept to speech scene analysis (see solid lines in figure 3) [78].

The neuroimaging findings on the VTE reviewed in §5 seem to fit well with this framework. The enhanced parietal and frontal activity before verbal transformations observed by Basirat et al. [61] may contribute to hypothesis generation and error minimization: a verbal transformation happens when the prediction error becomes large, so that a new hypothesis (with a smaller prediction error) is considered a better choice. The positive correlation reported by Kondo & Kashino [59] between the number of transformations and the intensity of the signal in the left inferior frontal gyrus is also consistent with this interpretation. We cannot determine at this point whether the enhanced frontal and parietal activity reflects a prediction or a prediction-error signal. An interpretation of these data in terms of predictive coding does not exclude a contribution of stimulus-driven mechanisms such as adaptation (see also [79]). One possibility is that the prediction-error signal in the VTE is modulated by neural fatigue in low-level brain areas and then sent to high-level areas, which generate a better prediction based on articulatory representations. This suggestion remains speculative, given the small amount of neuroimaging data on the VTE. Further studies of the interaction between general ‘low-level’ mechanisms in speech perception (including e.g. onset detection, extraction of spectro-temporal primitives and sensory adaptation) and perceptuo-motor links in the VTE would help to test it.
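The speculative scenario of fatigue-modulated prediction error can be made concrete with a deliberately simple toy simulation. It is our own illustration, not a model proposed in the reviewed studies: two competing hypotheses (say /pata/ and /tapa/) each have a fixed baseline prediction error against the repeated input; the currently dominant hypothesis accumulates ‘fatigue’ that inflates its effective error, while suppressed hypotheses recover; a switch occurs when another hypothesis offers a sufficiently smaller effective error. The function name, parameters and values are all hypothetical.

```python
def simulate_switches(base_error, steps=200, adapt_rate=0.02,
                      recovery_rate=0.01, margin=0.05, start=0):
    """Toy fatigue-plus-prediction-error switching model (hypothetical).

    base_error: baseline prediction error of each hypothesis (lower = better fit).
    Returns a list of (step, new_hypothesis) switch events.
    """
    n = len(base_error)
    fatigue = [0.0] * n
    current = start
    switches = []
    for step in range(steps):
        for h in range(n):
            if h == current:
                fatigue[h] += adapt_rate          # dominant percept adapts
            else:
                fatigue[h] = max(0.0, fatigue[h] - recovery_rate)  # others recover
        # Effective prediction error = fit to the input + accumulated fatigue.
        effective = [e + f for e, f in zip(base_error, fatigue)]
        best = min(range(n), key=effective.__getitem__)
        # Switch only if the alternative is better by more than `margin`.
        if best != current and effective[current] - effective[best] > margin:
            current = best
            switches.append((step, current))
    return switches
```

With a lower baseline error for the veridical form, for example `simulate_switches([0.1, 0.2])`, the model starts on hypothesis 0, switches to hypothesis 1 at step 7 and back at step 11, then keeps alternating. The model is deterministic; reproducing the irregular dominance durations typical of multistability would require adding a noise term.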

It has been suggested that similar principles govern auditory and visual multistability [5]. Indeed, several authors in this issue invoke predictive coding to explain how various perceptual configurations could compete in the human brain and how the dynamics of perceptual transitions could be predicted by this kind of model [80–83]. The VTE could then be conceived as a ‘speech-specific’ form of this general scenario. Although the idea that the same kind of mechanism may be involved in the VTE and in perceptual multistability in other modalities may seem speculative, neuroimaging findings provide some hints. Several recent studies observed the activation of parietal and frontal areas by visual multistable stimuli (for a review, see [79]). In an influential paper on perceptual multistability, Leopold & Logothetis [4] suggested that these sensorimotor areas are involved in re-evaluating the current perceptual interpretation, in both normal and multistable vision. Their intervention becomes noticeable when the visual input is ambiguous, that is, when several relevant interpretations are possible. The authors suggested that this re-evaluation mechanism might be based on an iterative and random system of ‘checks and balances’: the role of parieto-frontal areas would be to periodically force perception to reorganize, which may lead to perceptual switches during multistability tasks. Although the data considered by the authors all concerned visual multistability, the data reviewed in §5 on the role of the dorsal stream in the VTE are consistent with this mechanism. By this reasoning, the perceptuo-motor links in speech analysis and perception would form a speech-specific component of a more general mechanism, located in parieto-frontal regions, that controls the meaningful perception of sensory input. This general mechanism, in which the re-evaluation of a scene by parieto-frontal areas is initiated by a signal received from sensory areas, is compatible with ‘analysis-by-synthesis’ for speech perception (and with predictive coding ideas in general).

(c). Conclusions

We return to the last question raised at the beginning of this paper: what is the nature of speech perceptual objects? This question is a kind of ‘Holy Grail’ for speech communication researchers. Kubovy & Van Valkenburg [84] suggested that ‘a perceptual object is that which is susceptible to figure-ground segregation’: principles of perceptual grouping produce possible perceptual objects, and attention selects one or a set of these objects to become figure and assigns all other information from the sensory scene to ground. It is these figures that represent perceptual objects. In their view (see also [85]), visual and auditory objects are formed in a space–time and a pitch–time space, respectively. What, then, are the ‘metrics’ for defining speech objects? Although the answer to this question is far beyond the scope of this paper, we suggest that motor principles might contribute to speech object formation. Whatever one's view on this, the VTE provides an interesting tool for studying the nature of speech objects.

Acknowledgements

We thank Brian Moore and Mark Pitt for their comments, suggestions and corrections of previous versions of this manuscript. This work was supported by the Centre National de la Recherche Scientifique (CNRS) and the Agence Nationale de la Recherche (ANR-08-BLAN-0167-01, project Multistap).

Endnote

In this second analysis, we focused on the duration of /pata/ and /tapa/, without including the duration of ‘other’ transformations. The delta score was, therefore, calculated as the difference between the global stability durations of /pata/ and /tapa/ normalized by the sum of the global stability durations of /pata/ and /tapa/ (instead of normalizing by the total duration of all transformations as in the first analysis).
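For reference, the two normalizations and the indices can be written compactly as below. The symbols are our own notation: D denotes a global stability duration, D_total is the normalizing total of the first analysis, the factor of 100 reflects the ‘percentage’ wording used for the first analysis, and no such scaling is assumed for the second.

```latex
\Delta_{1} = 100 \times \frac{D_{\mathrm{pata}} - D_{\mathrm{tapa}}}{D_{\mathrm{total}}},
\qquad
\Delta_{2} = \frac{D_{\mathrm{pata}} - D_{\mathrm{tapa}}}{D_{\mathrm{pata}} + D_{\mathrm{tapa}}},
\qquad
I_{\mathrm{lip}} = \Delta_{2}^{\text{lip-pa}} - \Delta_{2}^{\text{lip-ta}},
\quad
I_{\mathrm{bar}} = \Delta_{2}^{\text{bar-pa}} - \Delta_{2}^{\text{bar-ta}}
```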

References

- 1. Warren R. M. 1961. Illusory changes of distinct speech upon repetition: the verbal transformation effect. Br. J. Psychol. 52, 249–258 (doi:10.1111/j.2044-8295.1961.tb00787.x)

- 2. Warren R. M., Gregory R. L. 1958. An auditory analogue of the visual reversible figure. Am. J. Psychol. 71, 612–613 (doi:10.2307/1420267)

- 3. Warren R. M. 1983. Auditory illusions and their relation to mechanisms normally enhancing accuracy of perception. J. Audio Eng. Soc. 31, 623–629

- 4. Leopold D. A., Logothetis N. K. 1999. Multistable phenomena: changing views in perception. Trends Cogn. Sci. 3, 254–264 (doi:10.1016/S1364-6613(99)01332-7)

- 5. Pressnitzer D., Hupé J. M. 2006. Temporal dynamics of auditory and visual bistability reveal common principles of perceptual organization. Curr. Biol. 16, 1351–1357 (doi:10.1016/j.cub.2006.05.054)

- 6. Bregman A. S. 1990. Auditory scene analysis: the perceptual organization of sound. Cambridge, MA: MIT Press

- 7. Moore B. C. J., Gockel H. E. 2012. Properties of auditory stream formation. Phil. Trans. R. Soc. B 367, 919–931 (doi:10.1098/rstb.2011.0355)

- 8. Remez R. E., Rubin P. E., Berns S. M., Pardo J. S., Lang J. M. 1994. On the perceptual organization of speech. Psychol. Rev. 101, 129–156 (doi:10.1037/0033-295X.101.1.129)

- 9. Goldstein L. M., Lackner J. R. 1972. Alterations of the phonetic coding of speech sounds during repetition. Cognition 2, 279–297 (doi:10.1016/0010-0277(72)90036-4)

- 10. Pitt M. A., Shoaf L. 2001. The source of a lexical bias in the verbal transformation effect. Lang. Cogn. Proc. 16, 715–721 (doi:10.1080/01690960143000056)

- 11. Kaminska Z., Pool M., Mayer P. 2000. Verbal transformation: habituation or spreading activation? Brain Lang. 71, 285–298 (doi:10.1006/brln.1999.2181)

- 12. Pitt M. A., Shoaf L. 2002. Linking verbal transformations to their causes. J. Exp. Psychol. Hum. Percept. Perf. 28, 150–162 (doi:10.1037/0096-1523.28.1.150)

- 13. Shoaf L. C., Pitt M. A. 2002. Does node stability underlie the verbal transformation effect? A test of node structure theory. Percept. Psychophys. 64, 795–803 (doi:10.3758/BF03194746)

- 14. MacKay D. G. 1987. The organization of perception and action: a theory for language and other cognitive skills. New York, NY: Springer

- 15. MacKay D. G., Wulf G., Yin C., Abrams L. 1993. Relations between word perception and production: new theory and data on the verbal transformation effect. J. Mem. Lang. 32, 624–646 (doi:10.1006/jmla.1993.1032)

- 16. Kelso J. A. S. 2012. Multistability and metastability: understanding dynamic coordination in the brain. Phil. Trans. R. Soc. B 367, 906–918 (doi:10.1098/rstb.2011.0351)

- 17. Ditzinger T., Tuller B., Haken H., Kelso J. A. S. 1997. A synergetic model for the verbal transformation effect. Biol. Cybern. 77, 31–40 (doi:10.1007/s004220050364)

- 18. Ditzinger T., Haken H. 1995. A synergetic model of multistability in perception. In Ambiguity in mind and nature: multistable cognitive phenomena (eds Kruse P., Stadler M.), pp. 255–274. Berlin, Germany: Springer

- 19. Reisberg D., Smith J. D., Baxter D. A., Sonenshine M. 1989. ‘Enacted’ auditory images are ambiguous; ‘pure’ auditory images are not. Q. J. Exp. Psychol. A 41, 619–641 (doi:10.1080/14640748908402385)

- 20. Sato M., Schwartz J.-L., Abry C., Cathiard M. A., Lœvenbruck H. 2006. Multistable syllables as enacted percepts: a source of an asymmetric bias in the verbal transformation effect. Percept. Psychophys. 68, 458–474 (doi:10.3758/BF03193690)

- 21. Sato M., Vallée N., Schwartz J.-L., Rousset I. 2007. A perceptual correlate of the labial–coronal effect. J. Speech Lang. Hear. R. 50, 1466–1480 (doi:10.1044/1092-4388(2007/101))

- 22. Maddieson I. 1984. Patterns of sounds. Cambridge, UK: Cambridge University Press

- 23. Vallée N., Rossato S., Rousset I. 2009. Favoured syllabic patterns in the world's languages and sensorimotor constraints. In Approaches to phonological complexity (eds Pellegrino F., Marsico E., Chitoran I., Coupe C.), pp. 111–139. Berlin, Germany: Mouton de Gruyter

- 24. Redford M. A., Diehl R. L. 1999. The relative perceptual distinctiveness of initial and final consonants in CVC syllables. J. Acoust. Soc. Am. 106, 1555–1565 (doi:10.1121/1.427152)

- 25. Browman C. P., Goldstein L. 1989. Articulatory gestures as phonological units. Phonology 6, 201–251 (doi:10.1017/S0952675700001019)

- 26. Goldstein L., Byrd D., Saltzman E. 2006. The role of vocal tract gestural action units in understanding the evolution of phonology. In Action to language via the mirror neuron system (ed. Arbib M.), pp. 215–249. Cambridge, UK: Cambridge University Press

- 27. Tuller B., Kelso J. A. S. 1991. The production and perception of syllable structure. J. Speech Hear. Res. 34, 501–508

- 28. Rochet-Capellan A., Schwartz J.-L. 2007. An articulatory basis for the labial-to-coronal effect: /pata/ seems a more stable articulatory pattern than /tapa/. J. Acoust. Soc. Am. 121, 3740–3754 (doi:10.1121/1.2734497)

- 29. MacNeilage P. F., Davis B. L. 2000. On the origin of internal structure of word forms. Science 288, 527–531 (doi:10.1126/science.288.5465.527)

- 30. Sumby W. H., Pollack I. 1954. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26, 212–215 (doi:10.1121/1.1907309)

- 31. McGurk H., MacDonald J. 1976. Hearing lips and seeing voices. Nature 264, 746–748 (doi:10.1038/264746a0)

- 32. Summerfield Q. 1987. Some preliminaries to a comprehensive account of audio-visual speech perception. In Hearing by eye: the psychology of lipreading (eds Dodd B., Campbell R.), pp. 3–51. Hillsdale, NJ: Lawrence Erlbaum Associates

- 33. Schwartz J.-L., Robert-Ribes J., Escudier P. 1998. Ten years after Summerfield: a taxonomy of models for audio-visual fusion in speech perception. In Hearing by eye II: advances in the psychology of speechreading and auditory-visual speech (eds Campbell R., Dodd B., Burnham D.), pp. 85–108. Hove, UK: Psychology Press

- 34. Grant K. W., Seitz P. F. 2000. The use of visible speech cues for improving auditory detection of spoken sentences. J. Acoust. Soc. Am. 108, 1197–1208 (doi:10.1121/1.1288668)

- 35. Bernstein L. E., Auer E. T., Moore J. K. 2004. Audiovisual speech binding: convergence or association? In The handbook of multisensory processes (eds Calvert G. A., Spence C., Stein B. E.), pp. 203–204. Cambridge, MA: MIT Press

- 36.Sato M., Basirat A., Schwartz J.-L. 2007. Visual contribution to the multistable perception of speech. Percept. Psychophys. 69, 1360–1372 10.3758/BF03192952 (doi:10.3758/BF03192952) [DOI] [PubMed] [Google Scholar]

- 37.Bernstein L. E., Auer E. T., Takayanagi S. 2004. Auditory speech detection in noise enhanced by lipreading. Speech Commun 44, 5–18 10.1016/j.specom.2004.10.011 (doi:10.1016/j.specom.2004.10.011) [DOI] [Google Scholar]

- 38.Schwartz J.-L., Berthommier F., Savariaux C. 2004. Seeing to hear better: evidence for early audio-visual interactions in speech identification. Cognition 93, 69–78 10.1016/j.cognition.2004.01.006 (doi:10.1016/j.cognition.2004.01.006) [DOI] [PubMed] [Google Scholar]

- 39.Sundara M., Namasivayam A. K., Chen R. 2001. Observation-execution matching system for speech: a magnetic stimulation study. Neuroreport 12, 1341–1344 10.1097/00001756-200105250-00010 (doi:10.1097/00001756-200105250-00010) [DOI] [PubMed] [Google Scholar]

- 40.Fadiga L., Craighero L., Buccino G., Rizzolatti G. 2002. Speech listening specifically modulates the excitability of tongue muscles: a TMS study. Eur. J. Neurosci. 15, 399–402 10.1046/j.0953-816x.2001.01874.x (doi:10.1046/j.0953-816x.2001.01874.x) [DOI] [PubMed] [Google Scholar]

- 41.Möttönen R., Schurmann M., Sams M. 2004. Time course of multisensory interactions during audiovisual speech perception in humans: a magnetoencephalographic study. Neurosci. Lett. 363, 112–115 10.1016/j.neulet.2004.03.076 (doi:10.1016/j.neulet.2004.03.076) [DOI] [PubMed] [Google Scholar]

- 42. Watkins K. E., Strafella A. P., Paus T. 2003. Seeing and hearing speech excites the motor system involved in speech production. Neuropsychologia 41, 989–994 (doi:10.1016/S0028-3932(02)00316-0)

- 43. Watkins K., Paus T. 2004. Modulation of motor excitability during speech perception: the role of Broca's area. J. Cogn. Neurosci. 16, 978–987 (doi:10.1162/0898929041502616)

- 44. Wilson S. M., Saygin A. P., Sereno M. I., Iacoboni M. 2004. Listening to speech activates motor areas involved in speech production. Nat. Neurosci. 7, 701–702 (doi:10.1038/nn1263)

- 45. Ojanen V., Möttönen R., Pekkola J., Jääskeläinen I. P., Joensuu R., Autti T., Sams M. 2005. Processing of audiovisual speech in Broca's area. NeuroImage 25, 333–338 (doi:10.1016/j.neuroimage.2004.12.001)

- 46. Pekkola J., Ojanen V., Autti T., Jääskeläinen I. P., Möttönen R., Tarkiainen A., Sams M. 2005. Primary auditory cortex activation by visual speech: an fMRI study at 3T. Neuroreport 16, 125–128 (doi:10.1097/00001756-200502080-00010)

- 47. Skipper J. I., Nusbaum H. C., Small S. L. 2005. Listening to talking faces: motor cortical activation during speech perception. NeuroImage 25, 76–89 (doi:10.1016/j.neuroimage.2004.11.006)

- 48. Pulvermüller F., Huss M., Kherif F., del Prado Martin F. M., Hauk O., Shtyrov Y. 2006. Motor cortex maps articulatory features of speech sounds. Proc. Natl Acad. Sci. USA 103, 7865–7870 (doi:10.1073/pnas.0509989103)

- 49. Wilson S. M., Iacoboni M. 2006. Neural responses to non-native phonemes varying in producibility: evidence for the sensorimotor nature of speech perception. NeuroImage 33, 316–325 (doi:10.1016/j.neuroimage.2006.05.032)

- 50. Skipper J. I., Van Wassenhove V., Nusbaum H. C., Small S. L. 2007. Hearing lips and seeing voices: how cortical areas supporting speech production mediate audiovisual speech perception. Cereb. Cortex 17, 2387–2399 (doi:10.1093/cercor/bhl147)

- 51. Meister I. G., Wilson S. M., Deblieck C., Wu A. D., Iacoboni M. 2007. The essential role of premotor cortex in speech perception. Curr. Biol. 17, 1692–1696 (doi:10.1016/j.cub.2007.08.064)

- 52. D'Ausilio A., Pulvermüller F., Salmas P., Bufalari I., Begliomini C., Fadiga L. 2009. The motor somatotopy of speech perception. Curr. Biol. 19, 381–385 (doi:10.1016/j.cub.2009.01.017)

- 53. Möttönen R., Watkins K. E. 2009. Motor representations of articulators contribute to categorical perception of speech sounds. J. Neurosci. 29, 9819–9825 (doi:10.1523/JNEUROSCI.6018-08.2009)

- 54. Sato M., Tremblay P., Gracco V. L. 2009. A mediating role of the premotor cortex in phoneme segmentation. Brain Lang. 111, 1–7 (doi:10.1016/j.bandl.2009.03.002)

- 55. Hickok G., Poeppel D. 2004. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition 92, 67–99 (doi:10.1016/j.cognition.2003.10.011)

- 56. Hickok G., Poeppel D. 2007. The cortical organization of speech processing. Nat. Rev. Neurosci. 8, 393–402 (doi:10.1038/nrn2113)

- 57. Scott S. K., Johnsrude I. S. 2003. The neuroanatomical and functional organization of speech perception. Trends Neurosci. 26, 100–107 (doi:10.1016/S0166-2236(02)00037-1)

- 58. Sato M., Baciu M., Lœvenbruck H., Schwartz J.-L., Cathiard M. A., Segebarth C., Abry C. 2004. Multistable representation of speech forms: a functional MRI study of verbal transformations. NeuroImage 23, 1143–1151 (doi:10.1016/j.neuroimage.2004.07.055)

- 59. Kondo H. M., Kashino M. 2007. Neural mechanisms of auditory awareness underlying verbal transformations. NeuroImage 36, 123–130 (doi:10.1016/j.neuroimage.2007.02.024)

- 60. Lumer E. D., Friston K. J., Rees G. 1998. Neural correlates of perceptual rivalry in the human brain. Science 280, 1930–1934 (doi:10.1126/science.280.5371.1930)

- 61. Basirat A., Sato M., Schwartz J.-L., Kahane P., Lachaux J. P. 2008. Parieto-frontal gamma band activity during the perceptual emergence of speech forms. NeuroImage 42, 404–413 (doi:10.1016/j.neuroimage.2008.03.063)

- 62. Healy A. F., Cutting J. E. 1976. Units of speech perception: phoneme and syllable. J. Verb. Learn. Verb. Behav. 15, 73–83 (doi:10.1016/S0022-5371(76)90008-6)

- 63. Greenberg S. 1996. Understanding speech understanding: towards a unified theory of speech perception. In Proc. of the ESCA Tutorial and Advanced Research Workshop on the Auditory Basis of Speech Perception (eds Ainsworth W. A., Greenberg S.), pp. 1–8. Keele, UK: Keele University

- 64. Greenberg S., Carvey H., Hitchcock L., Chang S. 2003. Temporal properties of spontaneous speech: a syllable-centric perspective. J. Phon. 31, 465–485 (doi:10.1016/j.wocn.2003.09.005)

- 65. Drullman R., Festen J. M., Plomp R. 1994. Effect of temporal envelope smearing on speech reception. J. Acoust. Soc. Am. 95, 1053–1064 (doi:10.1121/1.408467)

- 66. Giraud A. L., Lorenzi C., Ashburner J., Wable J., Johnsrude I., Frackowiak R., Kleinschmidt A. 2000. Representation of the temporal envelope of sounds in the human brain. J. Neurophysiol. 84, 1588–1598

- 67. Delgutte B. 1997. Auditory neural processing of speech. In The handbook of phonetic sciences (eds Hardcastle W. J., Laver J.), pp. 507–538. Oxford, UK: Blackwell

- 68. Dumay N., Frauenfelder U. H., Content A. 2002. The role of the syllable in lexical segmentation in French: word-spotting data. Brain Lang. 81, 144–161 (doi:10.1006/brln.2001.2513)

- 69. Marslen-Wilson W., Zwitserlood P. 1989. Accessing spoken words: the importance of word onsets. J. Exp. Psychol. Human Percept. Perf. 15, 576–585 (doi:10.1037/0096-1523.15.3.576)

- 70. Fort M., Spinelli E., Savariaux C., Kandel S. 2010. The word superiority effect in audiovisual speech perception. Speech Commun. 52, 525–532 (doi:10.1016/j.specom.2010.02.005)

- 71. Schwartz J.-L., Abry C., Boë L.-J., Cathiard M. 2002. Phonology in a theory of perception-for-action-control. In Phonetics, phonology and cognition (eds Durand J., Laks B.), pp. 244–280. New York, NY: Oxford University Press

- 72. Pardo J. S., Remez R. E. 2006. The perception of speech. In Handbook of psycholinguistics (eds Traxler M., Gernsbacher M. A.), pp. 201–248. New York, NY: Academic Press

- 73. Nazzi T., Bertoncini J., Bijeljac-Babic R. 2009. A perceptual equivalent of the labial-coronal effect in the first year of life. J. Acoust. Soc. Am. 126, 1440–1446 (doi:10.1121/1.3158931)

- 74. Friston K. 2002. Functional integration and inference in the brain. Prog. Neurobiol. 68, 113–143 (doi:10.1016/S0301-0082(02)00076-X)

- 75. Stevens K. N. 2002. Toward a model for lexical access based on acoustic landmarks and distinctive features. J. Acoust. Soc. Am. 111, 1872–1891 (doi:10.1121/1.1458026)

- 76. Van Wassenhove V., Grant K. W., Poeppel D. 2005. Visual speech speeds up the neural processing of auditory speech. Proc. Natl Acad. Sci. USA 102, 1181–1186 (doi:10.1073/pnas.0408949102)

- 77. Poeppel D., Idsardi W. J., Van Wassenhove V. 2008. Speech perception at the interface of neurobiology and linguistics. Phil. Trans. R. Soc. B 363, 1071–1086 (doi:10.1098/rstb.2007.2160)

- 78. Schwartz J.-L., Basirat A., Ménard L., Sato M. In press. The perception-for-action-control theory (PACT): a perceptuo-motor theory of speech perception. J. Neurolinguistics (doi:10.1016/j.jneuroling.2009.12.004)

- 79. Sterzer P., Kleinschmidt A., Rees G. 2009. The neural bases of multistable perception. Trends Cogn. Sci. 13, 310–318 (doi:10.1016/j.tics.2009.04.006)

- 80. Kashino M., Kondo H. M. 2012. Functional brain networks underlying perceptual switching: auditory streaming and verbal transformations. Phil. Trans. R. Soc. B 367, 977–987 (doi:10.1098/rstb.2011.0370)

- 81. Kleinschmidt A., Sterzer P., Rees G. 2012. Variability of perceptual multistability: from brain state to individual trait. Phil. Trans. R. Soc. B 367, 988–1000 (doi:10.1098/rstb.2011.0367)

- 82. Klink P. C., van Wezel R. J. A., van Ee R. 2012. United we sense, divided we fail: context-driven perception of ambiguous visual stimuli. Phil. Trans. R. Soc. B 367, 932–941 (doi:10.1098/rstb.2011.0358)

- 83. Winkler I., Denham S., Mill R., Bőhm T. M., Bendixen A. 2012. Multistability in auditory stream segregation: a predictive coding view. Phil. Trans. R. Soc. B 367, 1001–1012 (doi:10.1098/rstb.2011.0359)

- 84. Kubovy M., Van Valkenburg D. 2001. Auditory and visual objects. Cognition 80, 97–126 (doi:10.1016/S0010-0277(00)00155-4)

- 85. Kubovy M., Yu M. 2012. Multistability, cross-modal binding and the additivity of conjoined grouping principles. Phil. Trans. R. Soc. B 367, 954–964 (doi:10.1098/rstb.2011.0365)