Abstract

Diversity of expertise at an individual level can increase intelligence at a collective level, a type of swarm intelligence (SI) popularly known as the ‘wisdom of the crowd’. However, this requires independent estimates (rare in the real world owing to the availability of public information) and contradicts people's bias for copying successful individuals. To explore these inconsistencies, 429 people took part in a ‘guess the number of sweets’ exercise. Guesses made with no public information were diverse, resulting in highly accurate SI. Individuals with access to the previous guess, mean guess or a randomly chosen guess tended to over-estimate the number of sweets, and this undermined SI. However, when people were provided with the current best guess, very large (inaccurate) guesses were prevented, resulting in convergence of guesses towards the true value and accurate SI across a range of group sizes. Thus, contrary to previous work, we show that social influence need not undermine SI, especially where individual decisions are made sequentially and then aggregated. Furthermore, we offer an explanation for why people have a bias to recruit and follow experts in team settings: copying successful individuals can enable accuracy at both the individual and group level, even at small group sizes.

Keywords: swarm intelligence, averaging, collective cognition, copying behaviour, cognitive problem, leadership

1. Introduction

There has been much recent interest in how groups of individuals solve cognitive problems, including the relationship between individual performance and collective performance [1,2]. Such studies have shown that when groups use a processing rule such as averaging to reach a decision [3], the result is more accurate than the estimate of a single individual or a small group of people, even if these individuals are experts [1,4]. This phenomenon represents a type of ‘swarm intelligence’ (SI) [1] that has popularly come to be known as the ‘wisdom of the crowd’ [5]. Moreover, adding diversity to a group (that is, individuals with different estimating abilities) can be more beneficial to SI than adding expertise [6].

A criticism aimed at SI calculations is that they are unlikely to hold up in the real world, since as soon as people have access to public information conveying knowledge about the quality of a feature [7], they tend to use it [2]. Individual estimates are then no longer independent, and SI may break down. Indeed, Lorenz et al. [8] studied the influence of others on the decision-making process and observed that, when people had access to aggregated (‘average’) or multiple non-aggregated sources of public information, their estimates converged and SI was reduced. Studies have thus concluded that people's innate bias for copying others may reduce diversity within teams and, with it, the ‘wisdom of the crowd’ effect [1,6].

To further test this idea, we ran a number of variations of a sweet-guessing experiment, following Krause et al. [6], at our university open day. In our first trial (A), attendees made an independent guess about the number of sweets in a jar. This was to demonstrate that SI worked for our sweet-guessing task, and that collective accuracy improved in larger groups [1,3,6]. We ran three further trials, in which people had the same jar of sweets to judge but were provided with a source of public information: the guess of the previous person (B), a random guess of a previous person (C) or the mean guess of previous people (D). We predicted that such information would result in a convergence in estimates, to the detriment of SI [8].

However, no study has yet explored whether copying successful individuals reduces SI. People in corporate, military and sports teams all strive to recruit successful individuals [9], large animal swarms and flocks often rely on a minority of informed individuals when making collective decisions [9], and animals frequently copy successful individuals when selecting breeding sites and choosing mates [10]. Thus, unlike other forms of public information, we expected that access to the current best guess should result in a convergence in estimates towards the true number of sweets in the jar, resulting in accurate guesses at both the individual and the collective level. We tested this in our final trial (E), in which we presented people with the best guess that had been made before them.

2. Material and methods

(a). Experimental procedure

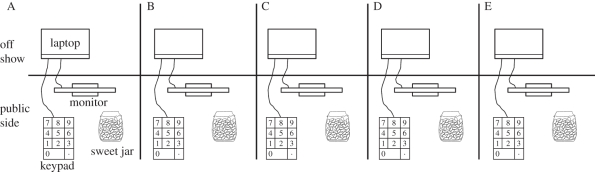

The experiment was carried out at the Royal Veterinary College Hawkshead Campus (Hertfordshire, UK) on 7 May 2011. Members of the public (students typically aged 16–18 and their families) were invited to guess the number of sweets in a jar at one of our five voting booths (figure 1). Each voting booth was screened off, so that those waiting to take part could not view the guesses being made. Guesses were requested by a custom-designed Windows-based program (see electronic supplementary material). The computer in booth A asked ‘How many sweets are in the jar on the table? Enter your guess using the keypad’. The other voting booths provided the following additional information: ‘The last persons' guess was N’ (booth B), ‘A random previous guess is N’ (booth C), ‘The average of previous guesses is N’ (booth D) or ‘The best guess so far is N’ (booth E). The program accepted only positive integers, and we placed no limit on guess size. After each guess, the public information provided was updated if required, and the guess was recorded by the program. A total of 429 people took part, including 82 people in voting booth A, 103 in B, 80 in C, 92 in D and 71 in E. Where a party arrived to take part in the experiment together, party members were assigned to different voting booths, and we operated a one-way system to ensure people voted only once. All participants in the study were anonymous, and thus no information on age or gender is available.
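The information rules used by booths B–E can be summarised in a few lines of code. The sketch below is a hypothetical reconstruction, not the custom program used in the study. It assumes that the mean shown in booth D was rounded to the nearest integer, that the ‘best’ guess in booth E was the previous guess closest to the true count of 751, and that nothing was shown before the first guess at a booth. The function name `public_information` is illustrative only.

```python
from __future__ import annotations

import random
import statistics

TRUE_COUNT = 751  # sweets in each jar (figure 1)


def public_information(booth: str, previous: list[int], rng: random.Random) -> int | None:
    """Return the number N shown to the next participant at a booth.

    Hypothetical reconstruction of the booth rules, not the study's program.
    `previous` holds earlier guesses at this booth in arrival order.
    Returns None when nothing is shown (booth A, or no guesses yet).
    """
    if booth == "A" or not previous:
        return None
    if booth == "B":  # previous person's guess
        return previous[-1]
    if booth == "C":  # a random previous guess
        return rng.choice(previous)
    if booth == "D":  # average of previous guesses
        # Assumption: the mean was rounded (round() rounds halves to even).
        return round(statistics.mean(previous))
    if booth == "E":  # best guess so far
        # Assumption: "best" means the previous guess closest to TRUE_COUNT;
        # on a tie, min() keeps the earlier guess.
        return min(previous, key=lambda g: abs(g - TRUE_COUNT))
    raise ValueError(f"unknown booth: {booth!r}")


rng = random.Random(0)
guesses = [900, 600, 760]
print(public_information("D", guesses, rng))  # 753
print(public_information("E", guesses, rng))  # 760
```

Only booth E's rule uses the true count, which is why it can steer later guesses towards it, whereas booths B–D can only echo earlier guesses.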

Figure 1.

Participants were invited to attend one of the five ‘voting booths’ (A–E). Each booth contained a monitor, a keypad and a jar containing 751 sweets, secured to the table. Visitors entered their estimate of the number of sweets using the keypad, and the estimate was displayed on the screen. Once a guess was made, the program was reset and, where necessary, the public information was updated.

(b). Analyses

Basic descriptive statistics, including the median, mean and standard deviation of all guesses, were calculated for each trial. As group sizes differed between trials, we repeated these analyses with equal-sized groups (n = 71); the results were qualitatively equivalent (electronic supplementary material, figure S1), so we used the full sample available for each trial. For each trial, we regressed guess accuracy ($G_a$, how far each guess was from the true value) against guess number ($G_n$) as $E(G_a \mid G_n) = i + jG_n$ to estimate the coefficients $i$ and $j$. This indicated whether individual estimates of sweet number increased or decreased over successive guesses, and we used Levene's tests for equal variances to compare the variance of guesses between trials. We then compared the median guess from each voting booth for our full sample and for samples (group sizes) of 10, 50 and 70. These group sizes span the range of group sizes observed in human teams [11] and provided insight into how group size influenced SI. Finally, we explored the effect of excluding especially large guesses upon SI, following Krause et al. [6].
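The trend regression and the variance comparison can be sketched with the Python standard library alone. This is an illustrative sketch, not the authors' analysis code. It assumes that $G_a$ is the signed error (guess minus 751) and that $G_n$ counts guesses from 1. It returns Levene's W statistic without a p-value: W is compared with an F distribution with k - 1 and N - k degrees of freedom (`scipy.stats.levene` returns both, and centres on the median by default). The function names `accuracy_trend` and `levene_w` are hypothetical.

```python
from __future__ import annotations

import statistics

TRUE_COUNT = 751


def accuracy_trend(guesses: list[int]) -> tuple[float, float]:
    """Ordinary least-squares fit of E(Ga | Gn) = i + j*Gn for one trial.

    Assumes Ga = guess - TRUE_COUNT (signed error) and Gn = 1, 2, 3, ...
    Returns (i, j); j > 0 means guesses drift upwards over the session.
    """
    gn = range(1, len(guesses) + 1)
    ga = [g - TRUE_COUNT for g in guesses]
    mean_n, mean_a = statistics.mean(gn), statistics.mean(ga)
    sxy = sum((x - mean_n) * (y - mean_a) for x, y in zip(gn, ga))
    sxx = sum((x - mean_n) ** 2 for x in gn)
    j = sxy / sxx
    i = mean_a - j * mean_n
    return i, j


def levene_w(*groups: list[float], center: str = "mean") -> float:
    """Levene's W statistic for equality of variances across k groups.

    center="mean" is Levene's original test; center="median" is the
    Brown-Forsythe variant. Compare W with F(k - 1, N - k) for a p-value.
    """
    loc = statistics.mean if center == "mean" else statistics.median
    z = []
    for g in groups:
        c = loc(g)
        z.append([abs(y - c) for y in g])  # absolute deviations from the centre
    k = len(z)
    n_total = sum(len(zi) for zi in z)
    zbar_i = [statistics.mean(zi) for zi in z]  # group means of z
    zbar = sum(sum(zi) for zi in z) / n_total  # grand mean of z
    between = sum(len(zi) * (m - zbar) ** 2 for zi, m in zip(z, zbar_i))
    within = sum((v - m) ** 2 for zi, m in zip(z, zbar_i) for v in zi)
    return (n_total - k) / (k - 1) * between / within


print(accuracy_trend([752, 754, 756]))  # (-1.0, 2.0)
```

The toy guesses in the usage line are invented; a positive slope $j$ is the pattern one would expect if public information reinforced over-estimation as a session went on.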

3. Results

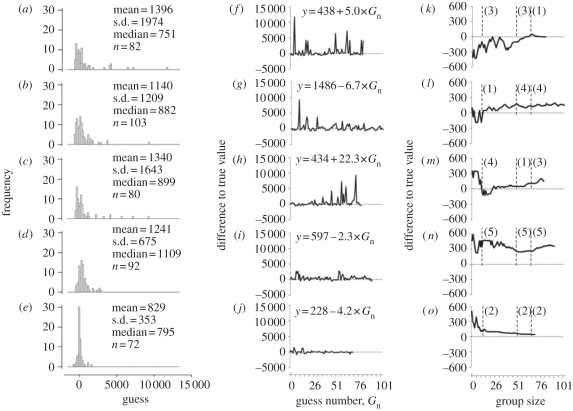

The distributions of guesses were non-Gaussian, and in all trials the median of guesses was more accurate than the mean (figure 2a–e). Guesses made with no public information (trial A) were collectively very accurate, and this accuracy improved with larger group sizes (figure 2k). This SI depended on the very large (inaccurate) guesses, which counterbalanced under-estimates: when we discounted guesses greater than 1200, collective accuracy plummeted (electronic supplementary material, figure S1).
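Why discarding large guesses can hurt the median is easiest to see with a small worked example. The guesses below are invented for illustration (they are not the study's data) and form a right-skewed sample of the kind shown in figure 2a–e. The median lands next to the true count while the mean is pulled upwards, and removing guesses above 1200 drags the median down. The helper `collective_estimates` is hypothetical.

```python
import statistics

TRUE_COUNT = 751
# Hypothetical right-skewed guesses, NOT data from the study.
toy_guesses = [400, 500, 600, 700, 750, 800, 1000, 1500, 3000]


def collective_estimates(guesses, cap=None):
    """Return (median, mean) of guesses, optionally dropping guesses above cap."""
    kept = [g for g in guesses if cap is None or g <= cap]
    return statistics.median(kept), statistics.mean(kept)


for cap in (None, 1200):
    med, avg = collective_estimates(toy_guesses, cap)
    print(f"cap={cap}: median={med} (error {med - TRUE_COUNT}), mean={avg:.1f}")
# cap=None: median=750 (error -1), mean=1027.8
# cap=1200: median=700 (error -51), mean=678.6
```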

Figure 2.

(a,f,k) Data for sweet-guessing experiments where individuals had no public information, or had public information in the form of (b,g,l) the previous guess, (c,h,m) a random previous guess, (d,i,n) the mean of previous guesses or (e,j,o) the best estimate from previous guesses. (a–e) Individual guesses represented as frequency histograms with relevant descriptive statistics. (f–j) How far guesses are from the true value as a function of guess number. Regression equations for guess accuracy ($G_a$) against guess number ($G_n$) are also shown (see §2 for more detail). (k–o) SI accuracy (median of n guesses) as a function of group size. The ranks of SI accuracy across trials at group sizes 10, 50 and 70 are also shown for comparison (see also electronic supplementary material, figure S1).

Where people had access to the guess of the previous person, a random previous guess or the mean guess (trials B, C and D, respectively), this reduced the number of very large (inaccurate) guesses made (figure 2a–j). However, the variability in guesses observed in these three trials was not statistically different from that in trial A (Levene's tests, p > 0.12), and people's tendency to over-estimate the number of sweets appeared to be reinforced by the public information they received, thus reducing SI (figure 2a–j). Copying others was therefore to the detriment of diversity, reducing the ‘wisdom of the crowd’ effect [1,6]. Intuitively, because the distributions were right-skewed, so that the mean lay above the median, this effect was strongest where the mean guess was provided (figure 2n), and discounting especially large guesses above 1200 was therefore required to improve SI (electronic supplementary material, figure S1).

Access to the current best guess (trial E) significantly reduced the diversity of individual guesses compared with all other trial conditions (Levene's tests, p < 0.002 in all cases; figure 2e). However, this reduced diversity improved SI compared with the other public information trials, with a median of 795 (true value 751) for our largest sample of n = 71 (figure 2o). This accuracy was also robust to the exclusion of extremely large guesses, as these were so rare (electronic supplementary material, figure S1).

Finally, as different numbers of people took part in each trial, and there is no single ‘natural’ group size to explore, we considered how SI performed at set group sizes of 10, 50 and 70 across trials (figure 2k–o). SI was consistently accurate in trial E across these group sizes, and aggregating guesses from trial A was superior only at larger group sizes (n ∼ 70; figure 2k–o).
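The group-size comparison can be sketched as follows. The paper does not state how groups of 10, 50 and 70 were drawn from each trial; this sketch assumes the first n guesses in arrival order, and the helper names `si_error_by_group_size` and `rank_trials` are hypothetical. The usage lines use invented toy data, not the study's guesses.

```python
import statistics

TRUE_COUNT = 751


def si_error_by_group_size(guesses, sizes=(10, 50, 70)):
    """Absolute error of the median of the first n guesses, for each n in sizes.

    Using the first n guesses in arrival order is an assumption; sizes larger
    than the trial are skipped.
    """
    return {
        n: abs(statistics.median(guesses[:n]) - TRUE_COUNT)
        for n in sizes
        if n <= len(guesses)
    }


def rank_trials(trials, n):
    """Rank booths by SI error at group size n (1 = most accurate)."""
    errors = {
        booth: si_error_by_group_size(g, (n,))[n]
        for booth, g in trials.items()
        if len(g) >= n
    }
    ordered = sorted(errors, key=errors.get)  # smallest error first
    return {booth: rank for rank, booth in enumerate(ordered, start=1)}


# Invented toy trials, NOT the study's data.
toy_trials = {
    "A": [700] * 5 + [800] * 5,
    "B": [900] * 10,
    "E": [740] * 10,
}
print(rank_trials(toy_trials, 10))  # {'A': 1, 'E': 2, 'B': 3}
```

Taking the first n guesses keeps the sequential structure of the experiment intact; averaging over random subsamples of size n would be a reasonable alternative if order effects were not of interest.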

4. Discussion

Our results show a strong wisdom of the crowd effect when people had no public information, with the median guess of 82 people coming within just one sweet of the true quantity. We expected, however, that such calculations would be unlikely to hold up in the real world, where people tend to have access to public information. Our results support this notion: where people had access to the previous person's guess, the mean guess or a random previous guess, SI was reduced (if we take SI to be the median of all guesses [6]). Specifically, people with access to public information over-estimated the number of sweets in the jar, resembling information cascades [7] that result in ‘economic bubbles’, where people drive the prices of items (e.g. stocks) above their value [8]. Thus, exclusion of extremely large values was required to increase collective accuracy when using an aggregation rule [5].

Where people had access to the current best guess, the variability of guesses decreased, but the guesses were highly accurate (figure 2o). Our finding that people with access to the current best guess performed better, individually and collectively at smaller group sizes, than those in the other conditions studied may therefore explain why people have a bias to recruit and follow successful individuals in team settings: doing so can enable accuracy at both the individual and group level and reduce the likelihood of extreme predictions. This also makes sense when considering typical human group sizes: while people are capable of maintaining social contacts with over 100 individuals [11], collective decisions are more frequently made in smaller groups that tend to contain around 40 individuals [12].

The SI ‘decision’ in this study was calculated statistically, and we assumed no differential time costs associated with the decisions that we calculated. This may be unrealistic, but our simple approach has parallels in real-life empirical examples. Where populations of individuals make decisions about the quality of a resource sequentially (or, at least, not all at the same time), copying successful individuals can ensure accuracy in foraging decisions, mate choice and habitat selection [7,10]. Future studies of how people, and other animals, integrate the information presented by successful individuals when making collective decisions may therefore enhance our understanding of the evolution of signals and hence communication, and offer ways to improve individual decision-making accuracy without undermining ‘wisdom of the crowd’ effects at the collective level.

Acknowledgements

A.J.K. and J.P.M. designed the study. L.C. and S.D.S. collected the data, and A.J.K. and S.D.S. performed analyses. A.J.K. and J.P.M. wrote the paper. This work was supported by an RVC ‘Public Engagement Grant’ and NERC Fellowship (NE/H016600/2) awarded to A.J.K. J.P.M. and L.C. were supported by an EPSRC grant (EP/H013016/1). Thanks to Chris Hobson, Ida Bacon, Penelope Hudson and Zoe Self for help.

References

1. Krause J., Ruxton G. D., Krause S. 2010. Swarm intelligence in animals and humans. Trends Ecol. Evol. 25, 28–34. (doi:10.1016/j.tree.2009.06.016)

2. King A. J., Cowlishaw G. 2007. When to use social information: the advantage of large group size in individual decision making. Biol. Lett. 3, 137–139. (doi:10.1098/rsbl.2007.0017)

3. Simons A. M. 2004. Many wrongs: the advantage of group navigation. Trends Ecol. Evol. 19, 453–455. (doi:10.1016/j.tree.2004.07.001)

4. Hong L., Page S. E. 2004. Groups of diverse problem solvers can outperform groups of high-ability problem solvers. Proc. Natl Acad. Sci. USA 101, 16 385–16 389. (doi:10.1073/pnas.0403723101)

5. Surowiecki J. 2004. The wisdom of crowds: why the many are smarter than the few and how collective wisdom shapes business, economies, societies and nations. London, UK: Little Brown.

6. Krause S., James R., Faria J. J., Ruxton G. D., Krause J. 2011. Swarm intelligence in humans: diversity trumps ability. Anim. Behav. 81, 941–948. (doi:10.1016/j.anbehav.2010.12.018)

7. Dall S. R. X., Giraldeau L. A., Olsson O., McNamara J. M., Stephens D. W. 2005. Information and its use by animals in evolutionary ecology. Trends Ecol. Evol. 20, 187–193. (doi:10.1016/j.tree.2005.01.010)

8. Lorenz J., Rauhut H., Schweitzer F., Helbing D. 2011. How social influence can undermine the wisdom of crowd effect. Proc. Natl Acad. Sci. USA 108, 9020–9025. (doi:10.1073/pnas.1008636108)

9. King A. J., Johnson D. D. P., Van Vugt M. 2009. The origins and evolution of leadership. Curr. Biol. 19, R911–R916. (doi:10.1016/j.cub.2009.07.027)

10. Danchin E., Giraldeau L. A., Valone T. J., Wagner R. H. 2004. Public information: from nosy neighbors to cultural evolution. Science 305, 487–491. (doi:10.1126/science.1098254)

11. Zhou W.-X., Sornette D., Hill R. A., Dunbar R. I. M. 2005. Discrete hierarchical organization of social group sizes. Proc. R. Soc. B 272, 439–444. (doi:10.1098/rspb.2004.2970)

12. Couzin I. D. 2009. Collective cognition in animal groups. Trends Cogn. Sci. 13, 36–43. (doi:10.1016/j.tics.2008.10.002)