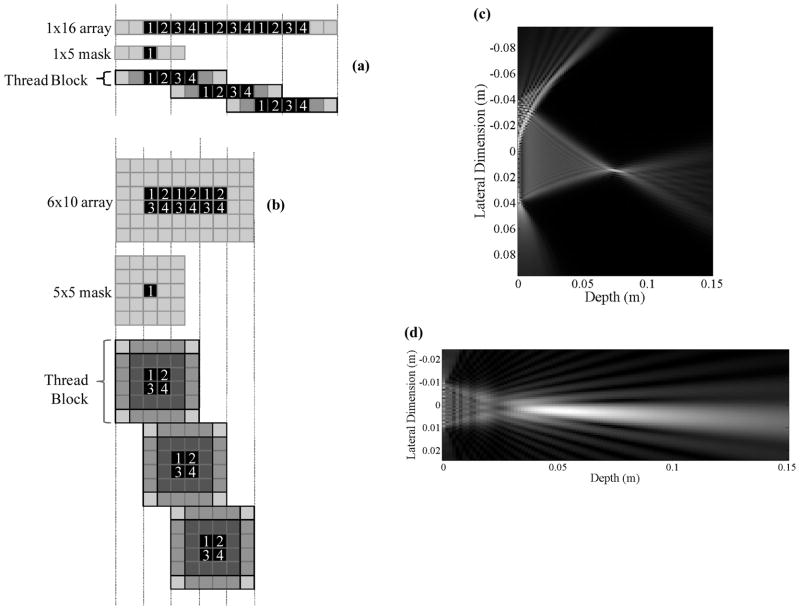

Fig. 3. GPU shared memory usage and thread organization for (a) 1-D and (b) 2-D convolution, and corresponding example steered beams in (c) and (d) for 1-D and 2-D convolution, respectively. (a) In the 1-D example, a 1 × 5 piston mask is convolved with a 1 × 16 array of field points (aligned in azimuth along the array of pistons) stored in shared memory. The computation is divided into 3 blocks of 4 threads per block (arranged in a 1 × 4 grid), resulting in 20 shared memory accesses per block. Each thread block operates on a 1 × 8 array. (b) In the 2-D example, a 5 × 5 piston mask is convolved with a 6 × 10 array of field points from the azimuth-elevation plane stored in shared memory. The computation is divided into 3 blocks of 4 threads per block (arranged in a 2 × 2 grid), resulting in 100 shared memory accesses per block. In (c), the beam is generated for a 1-D array of 64 pistons with λ/2 spacing in the lateral dimension and 3λ height in elevation. In (d), the beam is generated for a 2-D array of 16 × 16 pistons, with λ/2 spacing in each dimension.
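The block counts, tile sizes, and access counts quoted in (a) and (b) can be checked with a short calculation. The sketch below is illustrative only and rests on assumptions inferred from the numbers in the caption rather than stated in it: outputs are computed only where the mask fits entirely inside the field array, each thread produces one output point, and each thread reads one shared-memory element per mask coefficient. The function name `tile_plan` and its arguments are hypothetical.

```python
def tile_plan(field_shape, mask_shape, block_shape):
    """Tiling arithmetic for a shared-memory convolution (illustrative sketch).

    Assumptions (not stated in the caption): valid-mode output, one output
    point per thread, one shared-memory read per mask coefficient per thread.
    """
    field_rows, field_cols = field_shape
    mask_rows, mask_cols = mask_shape
    block_rows, block_cols = block_shape

    # Output points exist only where the mask lies fully inside the field.
    out_rows = field_rows - mask_rows + 1
    out_cols = field_cols - mask_cols + 1
    if out_rows % block_rows or out_cols % block_cols:
        raise ValueError("output does not divide evenly into thread blocks")

    return {
        "blocks": (out_rows // block_rows) * (out_cols // block_cols),
        "threads_per_block": block_rows * block_cols,
        # Each block needs its own outputs plus a halo of (mask size - 1).
        "tile_shape": (block_rows + mask_rows - 1, block_cols + mask_cols - 1),
        "reads_per_block": block_rows * block_cols * mask_rows * mask_cols,
    }


# (a) 1 x 5 mask, 1 x 16 field points, 1 x 4 threads per block
plan_1d = tile_plan((1, 16), (1, 5), (1, 4))
# (b) 5 x 5 mask, 6 x 10 field points, 2 x 2 threads per block
plan_2d = tile_plan((6, 10), (5, 5), (2, 2))
print(plan_1d)
print(plan_2d)
```

Under these assumptions the 1-D case gives 3 blocks, a 1 × 8 tile per block, and 20 reads per block, and the 2-D case gives 3 blocks and 100 reads per block, matching the caption. The 6 × 6 tile returned for the 2-D case is an inference from the same arithmetic and is not stated in the caption.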