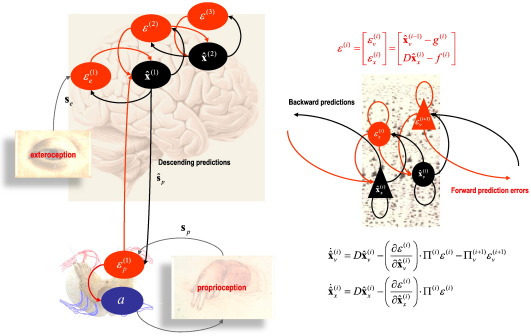

Fig. 1.

This figure illustrates neuronal architectures that might implement predictive coding and active inference. The left panel shows a schematic of predictive coding schemes in which Bayesian filtering is implemented by neuronal message passing between superficial (red) and deep (black) pyramidal cells. These cells encode prediction errors and conditional predictions (estimates), respectively (Mumford, 1992). In these schemes, top-down predictions conveyed by backward connections are compared with conditional expectations at the lower level to form a prediction error. This prediction error is then passed forward to update the expectations in a Bayes-optimal fashion. Active inference extends this scheme to include classical reflex arcs. Here, proprioceptive prediction errors drive action $a$, via alpha motor neurons in the ventral horn of the spinal cord, to elicit extrafusal muscle contractions and changes in primary sensory afferents from muscle spindles. These changes suppress the prediction errors encoded by Renshaw cells. The right panel presents a schematic of units encoding conditional expectations and prediction errors at an arbitrary level $i$ of a cortical hierarchy. In this example, hidden states $x_x$ that model dynamics are distinguished from hidden causes $x_v$ that mediate the influence of one level on the level below. The equations correspond to generalized Bayesian filtering, or predictive coding in generalized coordinates of motion, as described in Friston (2010). In this hierarchical form, $f^{(i)} := f(x_x^{(i)}, x_v^{(i)})$ corresponds to the equations of motion at the $i$-th level, while $g^{(i)} := g(x_x^{(i)}, x_v^{(i)})$ links adjacent levels. Together, these equations constitute the agent's prior beliefs. $D$ is a derivative operator in generalized coordinates and $\Pi^{(i)}$ is the precision (inverse variance) at level $i$. These equations were used in the simulations presented in the next figure.
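The following is a minimal sketch of the three ingredients described in the caption. It is not the generalized filter of Friston (2010) and makes several simplifying assumptions. First, it uses a single static cause with a hypothetical linear mapping $g(v) = \theta v$ and a Gaussian prior with mean $\eta$. Second, the expectation $\mu$ descends the precision-weighted prediction errors, with a fixed learning rate and step count that are illustrative choices. Third, the reflex arc assumes that proprioceptive input simply equals action, $s(a) = a$. The derivative operator $D$ is shown as a shift matrix acting on the vector $(x, x', x'', \dots)$. All function and parameter names are hypothetical.

```python
import numpy as np


def shift_operator(n_orders: int) -> np.ndarray:
    """Derivative operator D in generalized coordinates: ones on the first
    superdiagonal, so D @ (x, x', x'') = (x', x'', 0)."""
    return np.eye(n_orders, k=1)


def predictive_coding(y, theta, eta, pi_y, pi_v, lr=0.05, steps=2000):
    """Update a conditional expectation mu with precision-weighted
    prediction errors for the toy model y = theta * v + noise,
    with prior v ~ N(eta, 1 / pi_v)."""
    mu = float(eta)  # start from the top-down (prior) prediction
    for _ in range(steps):
        xi_y = pi_y * (y - theta * mu)    # forward (sensory) prediction error
        xi_v = pi_v * (mu - eta)          # prediction error on the cause itself
        mu += lr * (theta * xi_y - xi_v)  # gradient step on free energy
    return mu


def reflex_arc(mu_s, pi_s, lr=0.1, steps=500):
    """Toy reflex arc: action a changes proprioceptive input s(a) = a
    (an assumption) and descends the proprioceptive prediction error."""
    a = 0.0
    for _ in range(steps):
        s = a                     # sensed proprioceptive state
        xi_s = pi_s * (s - mu_s)  # precision-weighted prediction error
        a -= lr * xi_s            # ds/da = 1, so action moves against xi_s
    return a


if __name__ == "__main__":
    D = shift_operator(3)
    print(D @ np.array([1.0, 2.0, 3.0]))  # motion of each order: [2. 3. 0.]
    mu = predictive_coding(y=3.0, theta=2.0, eta=0.5, pi_y=1.0, pi_v=1.0)
    # For this linear Gaussian model the exact posterior mean is
    # (theta*pi_y*y + pi_v*eta) / (theta**2*pi_y + pi_v) = 1.3
    print(round(mu, 6))
    print(round(reflex_arc(mu_s=0.7, pi_s=4.0), 6))  # action fulfils the prediction
```

Because the toy model is linear and Gaussian, the fixed point of the update coincides with the exact posterior mean. This is the sense in which the forward-propagated prediction error updates the expectations "in a Bayes-optimal fashion". In the reflex-arc sketch, action does not change the prediction. Instead, it changes the sensory input until that input matches the prediction.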